Basic Structure of Neural Networks

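Contents

1. The basic skeleton of a neural network

2. Convolutional layers

3. Max pooling layers (downsampling)

4. Non-linear activations

5. Normalization layers

6. Recurrent layers

7. Linear layers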

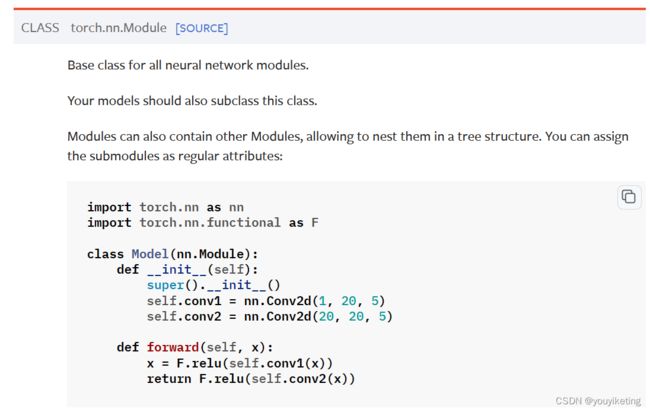

1. The basic skeleton of a neural network

- Containers

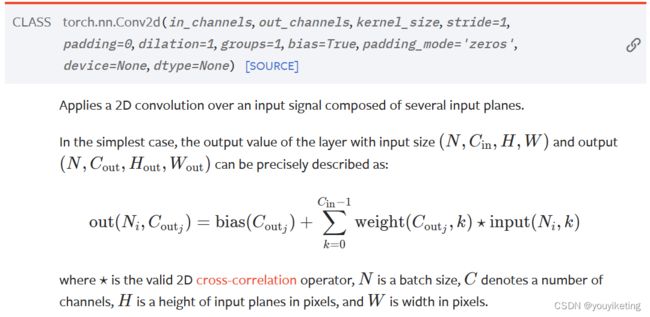

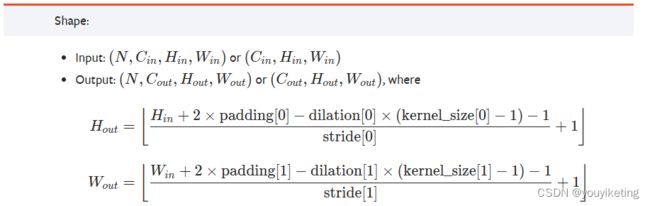

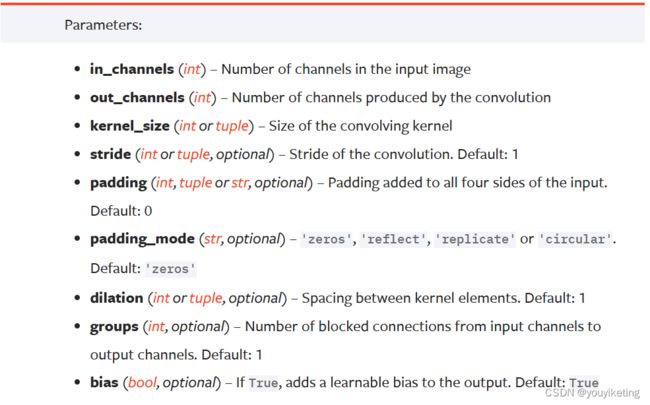

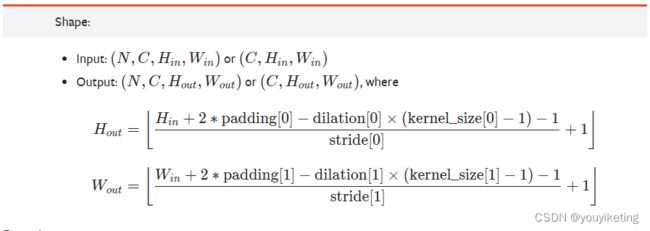
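As a rough sketch of the skeleton that the Containers section describes, a model subclasses `nn.Module`, calls the parent constructor, and defines `forward`. The class name `TinyModel` and the "add 1" computation are placeholders chosen here for illustration; they are not taken from the notes.

```python
import torch
from torch import nn


class TinyModel(nn.Module):  # hypothetical name, for illustration only
    def __init__(self):
        super().__init__()  # must run before any submodule is registered

    def forward(self, x):
        # Placeholder computation: add 1 to every element
        return x + 1


model = TinyModel()
x = torch.tensor(1.0)
y = model(x)  # calling the module dispatches to forward()
print(y)
```

Calling `model(x)` rather than `model.forward(x)` is the usual convention, since the call path also runs any registered hooks.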

2. 卷积层

-

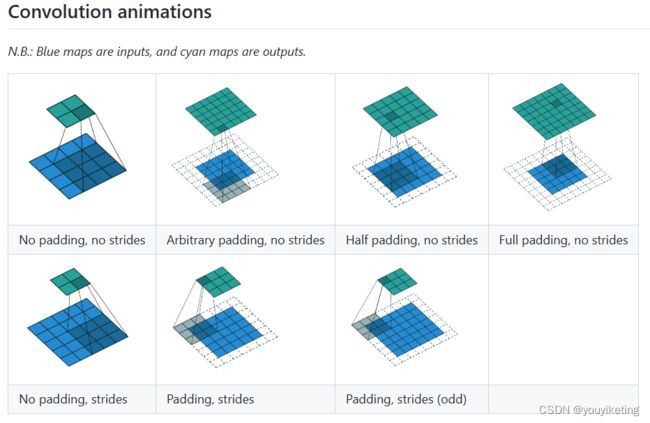

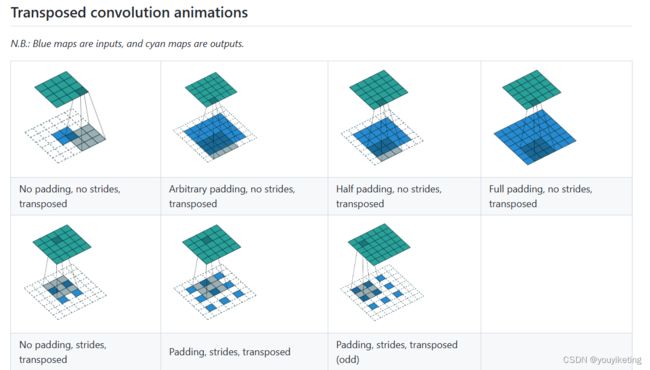

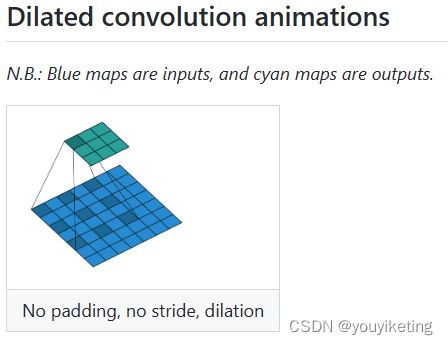

Convolution Layers

Animated illustrations of the parameters: conv_arithmetic/README.md at master · vdumoulin/conv_arithmetic (github.com)
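To make the effect of `kernel_size`, `stride`, `padding` and `dilation` concrete, the output size along one spatial dimension can be computed by hand. The helper below is a minimal sketch of the shape formula given in the `nn.Conv2d` documentation; the function name `conv2d_output_size` is made up for this example.

```python
def conv2d_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension of a 2D convolution."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# A 32x32 CIFAR10 image, 3x3 kernel, stride 1, no padding
print(conv2d_output_size(32, kernel_size=3, stride=1, padding=0))  # 30
# padding=1 keeps the spatial size unchanged for a 3x3 kernel
print(conv2d_output_size(32, kernel_size=3, stride=1, padding=1))  # 32
# stride=2 roughly halves the size
print(conv2d_output_size(32, kernel_size=3, stride=2, padding=1))  # 16
```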

实战:

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

# 数据集CIFAR10

dataset = torchvision.datasets.CIFAR10("../dataset", train=False, transform=torchvision.transforms.ToTensor(),

download=True)

dataloader = DataLoader(dataset, batch_size=64)

# 搭建神经网络(只有一个卷积层)

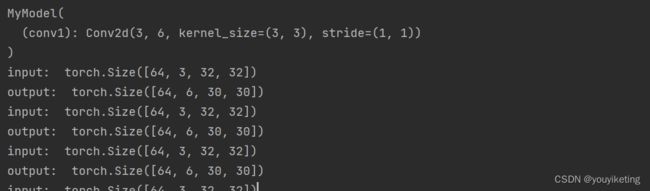

class MyModel(nn.Module):

def __init__(self):

super(MyModel, self).__init__()

self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0) # 卷积层

def forward(self,x):

x = self.conv1(x)

return x

myModel = MyModel()

print(myModel)

# test

writer = SummaryWriter("logs")

step=0

for data in dataloader:

imgs, targets = data

output = myModel(imgs)

print("input: ", imgs.shape)

print("output: ", output.shape)

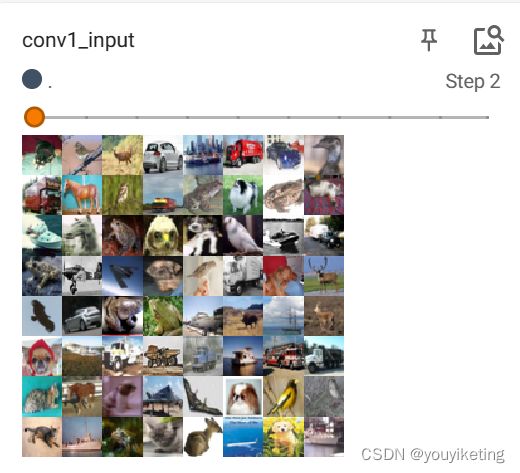

writer.add_images("conv1_input", imgs, step) # torch.size([64,3,32,32])

output = torch.reshape(output, (-1, 3, 30, 30)) # torch.size([64,6,30,30]) -> [xxx,3,30,30]

writer.add_images("conv1_output", output, step)

step = step + 1

结果:

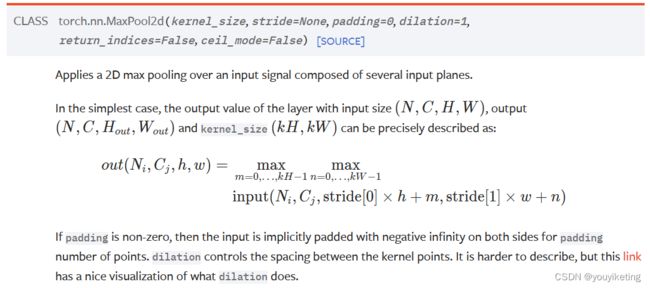

3. 最大池化层(下采样)

-

Pooling layers

目的:保留输入数据特征,同时减少数据量.

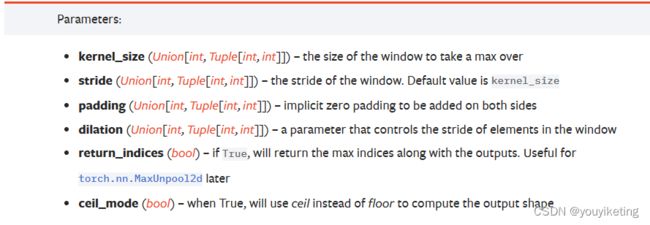

Ceil_model参数理解:(图片from B站up主:我是土堆)

Ceil_model参数理解:(图片from B站up主:我是土堆)

实战:

import torch
from torch import nn
from torch.nn import MaxPool2d

input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)

# 5x5 2D matrix -> reshape -> 4D tensor (N=1, C=1, H=5, W=5),
# the batched layout expected by nn.MaxPool2d
input = torch.reshape(input, (-1, 1, 5, 5))
print("input: ", input.shape)

class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        # stride defaults to kernel_size; ceil_mode=True keeps partial windows at the border
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output

myModel = MyModel()
output = myModel(input)
print("output: ", output)

Result:

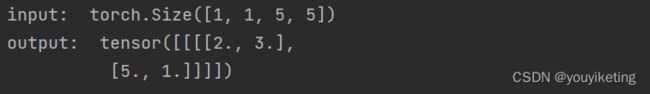

ceil_mode=True:

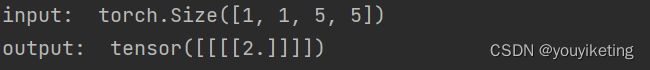

ceil_mode=False:

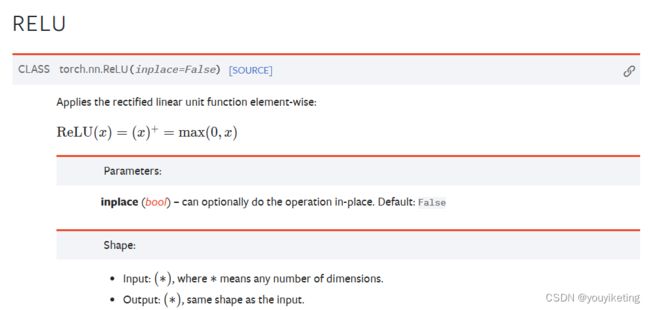

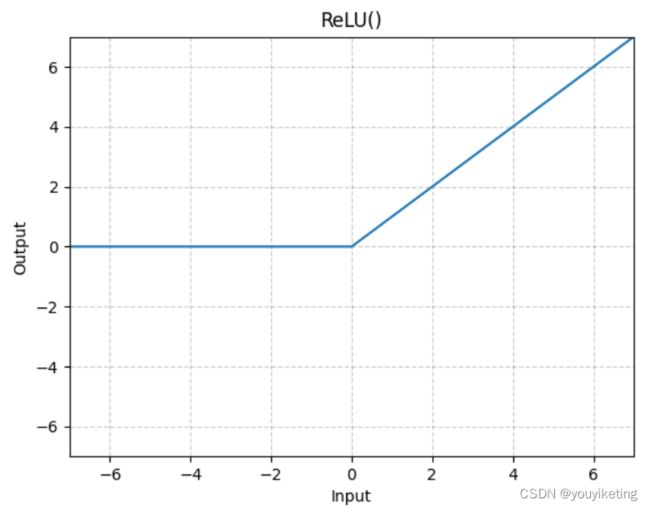
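The difference between the two `ceil_mode` settings can also be checked by hand. The following is a simplified pure-Python sketch, not PyTorch's implementation: it assumes the stride equals the kernel size and that there is no padding, and the function name `max_pool2d` is chosen here only for illustration. It uses the same 5x5 matrix as the example above.

```python
import math


def max_pool2d(matrix, kernel_size, ceil_mode=False):
    """Max pooling with stride == kernel_size and no padding (a simplification)."""
    height, width = len(matrix), len(matrix[0])
    rounding = math.ceil if ceil_mode else math.floor

    def out_len(n):
        length = rounding((n - kernel_size) / kernel_size) + 1
        # In ceil mode, drop a last window that would start outside the input
        if ceil_mode and (length - 1) * kernel_size >= n:
            length -= 1
        return length

    pooled = []
    for i in range(out_len(height)):
        row = []
        for j in range(out_len(width)):
            top, left = i * kernel_size, j * kernel_size
            # Windows hanging over the edge are clipped to the valid region
            window = [
                matrix[r][c]
                for r in range(top, min(top + kernel_size, height))
                for c in range(left, min(left + kernel_size, width))
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


grid = [
    [1, 2, 0, 3, 1],
    [0, 1, 2, 3, 1],
    [1, 2, 1, 0, 0],
    [5, 2, 3, 1, 1],
    [2, 1, 0, 1, 1],
]

print(max_pool2d(grid, kernel_size=3, ceil_mode=True))   # [[2, 3], [5, 1]]
print(max_pool2d(grid, kernel_size=3, ceil_mode=False))  # [[2]]
```

With `ceil_mode=True` the partial windows at the right and bottom borders still produce outputs, giving a 2x2 result; with `ceil_mode=False` only the single full 3x3 window is kept.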

4. Non-linear activations

- Non-linear Activations (weighted sum, nonlinearity)

Purpose: introduce non-linearity into the neural network.
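As a small illustration of what "non-linear" means here, ReLU computes max(0, x) element by element, which breaks the additivity that any linear map satisfies. The pure-Python sketch below uses a hand-written `relu` helper (not the PyTorch module) and sample values picked for this example.

```python
def relu(x):
    """ReLU(x) = max(0, x)."""
    return max(0.0, x)


values = [[1.0, -0.5], [-1.0, 3.0]]
activated = [[relu(v) for v in row] for row in values]
print(activated)  # [[1.0, 0.0], [0.0, 3.0]]

# A linear function f satisfies f(a + b) == f(a) + f(b); ReLU does not
a, b = 1.0, -1.0
print(relu(a + b))        # 0.0
print(relu(a) + relu(b))  # 1.0
```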

实战:

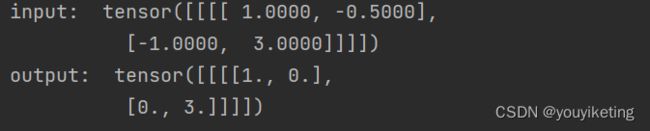

import torch

from torch import nn

from torch.nn import ReLU

input = torch.tensor([[1, -0.5],

[-1, 3]])

# 2*2的2维矩阵 -> reshape -> 4维张量(N=1,C=1,H=2,W=2)

# 以满足nn.Relu输入要求

input = torch.reshape(input, (-1, 1, 2, 2))

print("input: ", input)

class MyModel(nn.Module):

def __init__(self):

super(MyModel, self).__init__()

self.relu1 = ReLU()

def forward(self, input):

output = self.relu1(input)

return output

myModel = MyModel()

output = myModel(input)

print("output: ", output)

结果:

5. Normalization layers

- Normalization Layers

Purpose: help mitigate exploding and vanishing gradients, and can speed up convergence.
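A minimal sketch of what a normalization layer does, using `nn.BatchNorm2d` as one example of this family. The input shape, the shift and scale applied to the random data, and the seed are assumptions made for the demonstration. In training mode the layer standardizes each channel with the statistics of the current batch.

```python
import torch
from torch import nn

torch.manual_seed(0)

# A batch of 8 feature maps with 3 channels, deliberately shifted and scaled
x = torch.randn(8, 3, 4, 4) * 5.0 + 10.0

bn = nn.BatchNorm2d(num_features=3)  # one mean/variance pair per channel
bn.train()  # training mode normalizes with the statistics of the current batch
y = bn(x)

# Per-channel statistics over the N, H and W dimensions
print(x.mean(dim=(0, 2, 3)))                 # far from 0
print(y.mean(dim=(0, 2, 3)))                 # close to 0
print(y.var(dim=(0, 2, 3), unbiased=False))  # close to 1
```

Keeping activations in a comparable range from layer to layer is the intuition behind the purpose stated above.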

6. Recurrent layers

- Recurrent Layers

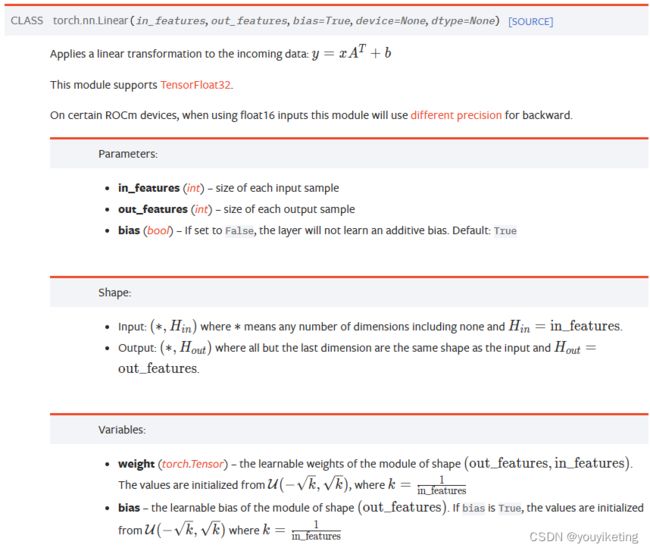

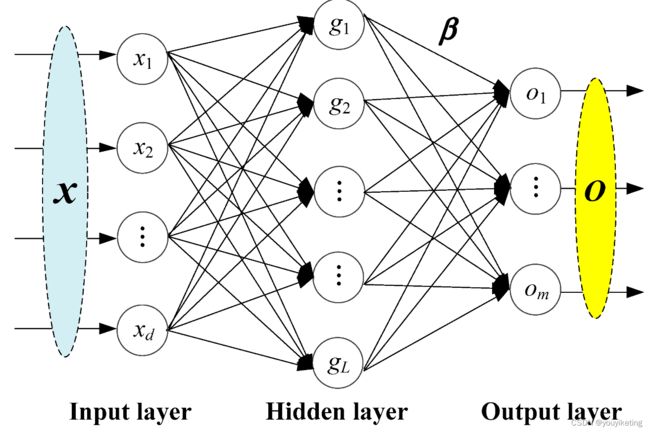
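The notes give no code for recurrent layers, so the following is only a hedged shape check using `nn.RNN`. The sizes (sequence length 5, batch 3, 10 input features, 20 hidden units) are arbitrary values picked for illustration.

```python
import torch
from torch import nn

rnn = nn.RNN(input_size=10, hidden_size=20, num_layers=1)

x = torch.randn(5, 3, 10)   # (seq_len, batch, input_size), the default layout
h0 = torch.zeros(1, 3, 20)  # (num_layers, batch, hidden_size)

output, hn = rnn(x, h0)
print(output.shape)  # torch.Size([5, 3, 20]): hidden state at every time step
print(hn.shape)      # torch.Size([1, 3, 20]): hidden state after the last step
```

For a single-layer, unidirectional RNN, the last time step of `output` equals `hn[0]`.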

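7. Linear layers

- Linear Layers

A fully connected layer is a linear layer, usually followed by a non-linear activation.

A network of linear (fully connected) layers: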

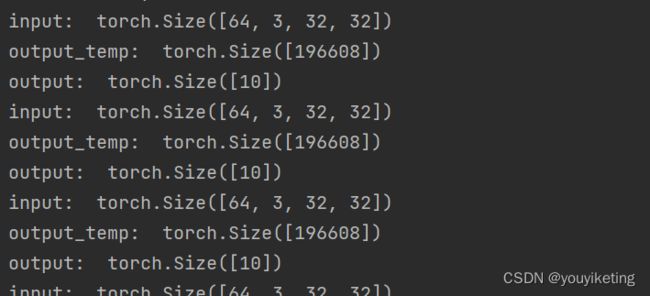
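`nn.Linear` applies y = x A^T + b to the last dimension of its input, so a batch of images is commonly flattened per sample before being passed in. The sketch below assumes a random tensor as a stand-in for one CIFAR10 batch and keeps the batch dimension by flattening from `start_dim=1`.

```python
import torch
from torch import nn

imgs = torch.randn(64, 3, 32, 32)  # stand-in for one batch of CIFAR10 images

flat = torch.flatten(imgs, start_dim=1)  # keep the batch dimension: [64, 3072]
linear = nn.Linear(in_features=3 * 32 * 32, out_features=10)
logits = linear(flat)

print(flat.shape)    # torch.Size([64, 3072])
print(logits.shape)  # torch.Size([64, 10]): one 10-value output per image
```

Flattening per sample yields one output vector per image, whereas flattening the whole batch into a single vector yields one output for the entire batch.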

Hands-on:

import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

# CIFAR10 dataset (test split), converted to tensors
dataset = torchvision.datasets.CIFAR10("../dataset", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True discards the final partial batch, so every batch flattens to exactly 196608 values
dataloader = DataLoader(dataset, batch_size=64, shuffle=True, num_workers=0, drop_last=True)

# Build the model
class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        # 196608 = 64 * 3 * 32 * 32: the whole batch is flattened into one vector
        self.linear1 = Linear(in_features=196608, out_features=10)

    def forward(self, input):
        output = self.linear1(input)
        return output

myModel = MyModel()

# test
for data in dataloader:
    imgs, targets = data
    print("input: ", imgs.shape)
    output_temp = torch.flatten(imgs)  # 4D tensor [64, 3, 32, 32] -> flatten -> 1D tensor [196608]
    print("output_temp: ", output_temp.shape)
    output = myModel(output_temp)  # 1D tensor [196608] -> linear layer -> 1D tensor [10]
    print("output: ", output.shape)

Result: