PyTorch Study Notes (9): Basic Layers

Common layers in convolutional neural networks

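| Type | Name | Purpose |
|---|---|---|
| Conv | Convolutional layer | Feature extraction |
| ReLU | Activation layer | Nonlinear activation |
| Pool | Pooling layer | Downsampling (reduces spatial size) |
| BatchNorm | Batch normalization | Normalizes activations over a batch |
| Linear (Fully Connected) | Fully connected layer | Maps features to outputs |
| Dropout | Dropout layer | Regularization (randomly zeroes elements during training) |
| ConvTranspose | Transposed convolution ("deconvolution") | Upsampling |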

Using the various layers in PyTorch

Convolution

| Convolution type | Purpose |
|---|---|
| torch.nn.Conv1d | 1D convolution |
| torch.nn.Conv2d | 2D convolution |
| torch.nn.Conv3d | 3D convolution |
| torch.nn.ConvTranspose1d | 1D transposed convolution |
| torch.nn.ConvTranspose2d | 2D transposed convolution |
| torch.nn.ConvTranspose3d | 3D transposed convolution |

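```python
import torch
import torch.nn as nn

# nn.Conv1d(): input shape (N, C_in, L)
m = nn.Conv1d(in_channels=16,
              out_channels=33,
              kernel_size=3,
              padding=1,
              stride=1)
input = torch.randn(1, 16, 50)
output = m(input)
print(output.size())  # (1, 33, 50): padding=1 with kernel_size=3 keeps the length
```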

```python
# nn.Conv2d(): input shape (N, C_in, H, W)
m = nn.Conv2d(in_channels=16,
              out_channels=33,
              kernel_size=3,
              stride=2)
input = torch.randn(20, 16, 50, 100)
output = m(input)
print(output.size())  # (20, 33, 24, 49)

# Non-square kernel, with stride, padding and dilation set per dimension
m = nn.Conv2d(in_channels=16,
              out_channels=33,
              kernel_size=(3, 5),
              stride=(2, 1),
              padding=(4, 2),
              dilation=(3, 1))
output = m(input)
print(output.size())  # (20, 33, 26, 100)
```
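The shapes in the comments above follow the output-size formula from the PyTorch `Conv2d` documentation. For each spatial dimension, `H_out = floor((H_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1`. The sketch below applies the formula and checks it against `nn.Conv2d` for both configurations. The helper name `conv_out` is hypothetical.

```python
import torch
import torch.nn as nn


def conv_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of one spatial dimension (formula from the Conv2d docs)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


x = torch.randn(20, 16, 50, 100)

# Configuration 1: kernel_size=3, stride=2
m1 = nn.Conv2d(16, 33, kernel_size=3, stride=2)
expected1 = (20, 33, conv_out(50, 3, 2), conv_out(100, 3, 2))

# Configuration 2: kernel, stride, padding and dilation differ per dimension
m2 = nn.Conv2d(16, 33, kernel_size=(3, 5), stride=(2, 1),
               padding=(4, 2), dilation=(3, 1))
expected2 = (20, 33,
             conv_out(50, 3, stride=2, padding=4, dilation=3),
             conv_out(100, 5, stride=1, padding=2, dilation=1))

print(expected1, tuple(m1(x).shape))  # (20, 33, 24, 49) both times
print(expected2, tuple(m2(x).shape))  # (20, 33, 26, 100) both times
```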

```python
# nn.ConvTranspose2d(): 2D transposed convolution ("deconvolution")
m = nn.ConvTranspose2d(in_channels=16,
                       out_channels=33,
                       kernel_size=3,
                       stride=2)
input = torch.randn(20, 16, 50, 100)
output = m(input)
print(output.size())  # (20, 33, 101, 201)
```

```python
# With stride > 1, several input sizes map to the same downsampled size;
# output_padding=1 makes the transposed convolution restore 12x12 instead of 11x11
input = torch.randn(1, 16, 12, 12)
downsample = nn.Conv2d(in_channels=16,
                       out_channels=16,
                       kernel_size=3,
                       stride=2,
                       padding=1)
upsample = nn.ConvTranspose2d(in_channels=16,
                              out_channels=16,
                              kernel_size=3,
                              stride=2,
                              padding=1,
                              output_padding=1)
output = downsample(input)  # downsampling: (1, 16, 6, 6)
print(output.size())
output = upsample(output)   # upsampling: (1, 16, 12, 12)
print(output.size())
```
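You can also leave `output_padding` out of the constructor. `ConvTranspose2d` accepts an `output_size` argument in its forward call, which is the approach used in the example in the PyTorch `ConvTranspose2d` documentation. The sketch below assumes the same 16-channel, 12×12 input as above.

```python
import torch
import torch.nn as nn

input = torch.randn(1, 16, 12, 12)
downsample = nn.Conv2d(16, 16, kernel_size=3, stride=2, padding=1)
# No output_padding: by default this transposed conv would produce 11x11
upsample = nn.ConvTranspose2d(16, 16, kernel_size=3, stride=2, padding=1)

h = downsample(input)
print(h.size())             # torch.Size([1, 16, 6, 6])
print(upsample(h).size())   # torch.Size([1, 16, 11, 11])

# Ask for the original spatial size explicitly
output = upsample(h, output_size=input.size())
print(output.size())        # torch.Size([1, 16, 12, 12])
```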

Convolving an image

- Image loading: read the image with PIL.Image
- Tensor conversion: convert the image to a Tensor with torch.from_numpy()
- Dimension changes: remove dimensions with tensor.squeeze() and add them with tensor.unsqueeze()
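`F.conv2d` expects a 4D input of shape (N, C, H, W), but a grayscale image is a 2D (H, W) array. In the sketch below, a zero tensor stands in for the image; the 512×512 size is an arbitrary assumption. It shows how `unsqueeze` and `squeeze` move between the two shapes.

```python
import torch

x = torch.zeros(512, 512)           # stand-in for an H x W grayscale image
x4 = x.unsqueeze(0).unsqueeze(0)    # add batch and channel dims -> (1, 1, 512, 512)
print(x4.size())

y = x4.squeeze(0).squeeze(0)        # remove them again -> (512, 512)
print(y.size())

print(x4.squeeze().size())          # squeeze() with no argument drops every size-1 dim
```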

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image

# Load the image; convert('L') makes sure it is single-channel grayscale
image = Image.open('./data/lena_gray.jpg').convert('L')

# Convert the image to a Tensor
torch.set_default_dtype(torch.float)
x = torch.from_numpy(np.array(image, dtype=np.float32))
print(x.size())  # (H, W)
```

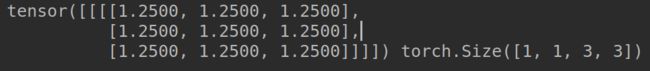

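```python
# conv2d expects a 4D Tensor (N, C, H, W): add batch and channel dimensions
x = x.unsqueeze(0)
x = x.unsqueeze(0)
print(x.size())  # (1, 1, H, W)

# 3x3 Sobel kernel (horizontal gradient, highlights vertical edges),
# reshaped to (out_channels, in_channels, kH, kW) = (1, 1, 3, 3)
filter1 = torch.tensor([[-1, 0, 1],
                        [-2, 0, 2],
                        [-1, 0, 1]], dtype=torch.float32)
filter1 = filter1.unsqueeze(0)
filter1 = filter1.unsqueeze(0)

out = F.conv2d(x, filter1)
print(out.size())  # (1, 1, H-2, W-2): no padding

# Back to 2D for display
out = out.squeeze(0)
out = out.squeeze(0)

# The response can be negative or exceed 255, and casting straight to uint8
# would wrap those values around, so take the magnitude and clip it first
edges = out.abs().clamp(0, 255).numpy().astype(np.uint8)
plt.imshow(edges, cmap='gray')
plt.axis('off')
plt.show()
```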
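You can inspect the kernel's behavior without an image file. The sketch below uses a hypothetical 5×6 synthetic image with a dark left half and a bright right half. Note that `F.conv2d` computes a cross-correlation, so the kernel is not flipped.

```python
import torch
import torch.nn.functional as F

# Hypothetical 5x6 test image: left half 0, right half 255 (one vertical edge)
img = torch.zeros(5, 6)
img[:, 3:] = 255.0
x = img.unsqueeze(0).unsqueeze(0)                 # (1, 1, 5, 6)

sobel_x = torch.tensor([[-1., 0., 1.],
                        [-2., 0., 2.],
                        [-1., 0., 1.]]).unsqueeze(0).unsqueeze(0)  # (1, 1, 3, 3)

out = F.conv2d(x, sobel_x)                        # no padding -> (1, 1, 3, 4)
print(out.squeeze())
# Every row is [0, 1020, 1020, 0]: the response is nonzero only where the
# 3x3 window straddles the edge, and there it equals (1 + 2 + 1) * 255 = 1020
```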

Pooling layers

Pooling usually takes one of two forms: max pooling and average pooling.

Pooling APIs provided by PyTorch:

```python
# https://pytorch.org/docs/stable/nn.html#pooling-layers
# torch.nn.MaxPool1d/2d/3d
# torch.nn.MaxUnpool1d/2d/3d
# torch.nn.AvgPool1d/2d/3d
# torch.nn.FractionalMaxPool2d
# torch.nn.AdaptiveMaxPool1d/2d/3d
# torch.nn.AdaptiveAvgPool1d/2d/3d

# nn.MaxPool2d()
m = nn.MaxPool2d(kernel_size=3, stride=2)
input = torch.randn(20, 16, 50, 32)
output = m(input)
print(output.size())  # (20, 16, 24, 15)

# Non-square window with a per-dimension stride
m = nn.MaxPool2d(kernel_size=(3, 2), stride=(2, 1))
output = m(input)
print(output.size())  # (20, 16, 24, 31)
```
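To see the difference between max and average pooling, apply both to a small input with known values. The sketch below uses a 4×4 `arange` tensor and 2×2 windows; it also includes `AdaptiveAvgPool2d(1)` (global average pooling), which reduces each channel to its mean.

```python
import torch
import torch.nn as nn

x = torch.arange(16.0).reshape(1, 1, 4, 4)
# [[ 0,  1,  2,  3],
#  [ 4,  5,  6,  7],
#  [ 8,  9, 10, 11],
#  [12, 13, 14, 15]]

max_out = nn.MaxPool2d(kernel_size=2)(x)   # stride defaults to kernel_size
avg_out = nn.AvgPool2d(kernel_size=2)(x)
gap = nn.AdaptiveAvgPool2d(1)(x)           # output is 1x1 whatever the input size

print(max_out.squeeze())  # values [[5, 7], [13, 15]]
print(avg_out.squeeze())  # values [[2.5, 4.5], [10.5, 12.5]]
print(gap.item())         # 7.5, the mean of 0..15
```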

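Dropout layers

Characteristics of a Dropout layer: the output Tensor has the same shape as the input Tensor (Output is of the same shape as input).

Computation (training mode): each input element is zeroed with probability p, and the surviving elements are scaled by 1/(1-p). That is, tensor_out = mask * tensor_input / (1 - p), where each entry of mask is 1 with probability 1 - p and 0 otherwise. In evaluation mode (model.eval()), Dropout passes the input through unchanged.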

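```python
# torch.nn.Dropout
# torch.nn.Dropout2d
# torch.nn.Dropout3d
# torch.nn.AlphaDropout

# Element-wise dropout (a newly created module is in training mode)
m = nn.Dropout(p=0.2, inplace=False)
input = torch.randn(1, 5)
output = m(input)
print('input:', input, '\n',
      'output:', output, output.size())

# Dropout2d zeroes entire channels (feature maps) of an (N, C, H, W) input
m = nn.Dropout2d(p=0.2)
input = torch.randn(1, 1, 5, 5)
output = m(input)
print(output, output.size())
```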
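You can check the scaling rule directly. The sketch below assumes p=0.5 and an all-ones input, so every kept element becomes exactly 1/(1-0.5) = 2. `train()` and `eval()` switch between the two behaviors.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
m = nn.Dropout(p=0.5)
x = torch.ones(1, 10)

m.train()                       # training mode: random zeroing plus rescaling
y_train = m(x)
print(y_train)                  # every entry is 0 (dropped) or 2 (kept and scaled)

m.eval()                        # evaluation mode: dropout is the identity
y_eval = m(x)
print(torch.equal(y_eval, x))   # True
```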

Fully connected / linear layers (Linear)

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# https://pytorch.org/docs/stable/nn.html#linear-layers
# torch.nn.Linear()
# torch.nn.Bilinear()  # bilinear transformation

# torch.nn.Linear(): y = x A^T + b
m = nn.Linear(in_features=20, out_features=30)
input = torch.randn(128, 20)
output = m(input)
print(output.size())  # (128, 30)

# torch.nn.Bilinear(): applies a bilinear transformation to the incoming data,
# y = x1^T A x2 + b
m = nn.Bilinear(in1_features=20, in2_features=30, out_features=40)
input1 = torch.randn(128, 20)
input2 = torch.randn(128, 30)
output = m(input1, input2)
print(output.size())  # (128, 40)
```
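Both layers can be reproduced by hand from their parameters:

- `Linear` computes `y = x W^T + b`, where `weight` has shape (out_features, in_features).
- `Bilinear` computes, for each output k, `y_k = x1^T A_k x2 + b_k`, where `weight` has shape (out_features, in1_features, in2_features).

The `einsum` formulation in the sketch below is one way to write the bilinear form; it is not taken from PyTorch's source.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Linear: y = x W^T + b
lin = nn.Linear(in_features=20, out_features=30)
x = torch.randn(128, 20)
manual = x @ lin.weight.T + lin.bias
print(torch.allclose(lin(x), manual, atol=1e-5))          # True

# Bilinear: y[b, k] = sum_ij x1[b, i] * W[k, i, j] * x2[b, j] + bias[k]
bil = nn.Bilinear(in1_features=20, in2_features=30, out_features=40)
x1 = torch.randn(128, 20)
x2 = torch.randn(128, 30)
manual_bil = torch.einsum('bi,kij,bj->bk', x1, bil.weight, x2) + bil.bias
print(torch.allclose(bil(x1, x2), manual_bil, atol=1e-4))  # True
```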

Normalization layers

```python
import torch
import torch.nn as nn

# https://blog.csdn.net/wzy_zju/article/details/81262453
# https://pytorch.org/docs/stable/nn.html#normalization-layers
# torch.nn.BatchNorm1d/2d/3d
# torch.nn.GroupNorm
# torch.nn.InstanceNorm1d/2d/3d
# torch.nn.LayerNorm
# torch.nn.LocalResponseNorm (LRN)

# nn.BatchNorm1d: input (N, C) or (N, C, L); output has the same shape as input
# affine=False: no learnable parameters
m = nn.BatchNorm1d(num_features=10, affine=False)
input = torch.randn(20, 10)
output = m(input)
print(output, output.size())

# nn.BatchNorm2d: input (N, C, H, W); output has the same shape as input
# affine=True: learnable per-channel scale (weight) and shift (bias)
m = nn.BatchNorm2d(num_features=100, affine=True)
input = torch.randn(20, 100, 35, 45)
output = m(input)
print(output.size())
```
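In training mode, `BatchNorm1d` normalizes each feature with the batch mean and the biased batch variance: `y = (x - mean) / sqrt(var + eps)`. With `affine=True`, the result is then multiplied by `weight` and shifted by `bias`. The sketch below reproduces the `affine=False` case by hand.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
m = nn.BatchNorm1d(num_features=10, affine=False)  # new modules are in training mode
x = torch.randn(20, 10)
y = m(x)

# Per-feature statistics over the batch dimension (biased variance)
mean = x.mean(dim=0, keepdim=True)
var = x.var(dim=0, unbiased=False, keepdim=True)
manual = (x - mean) / torch.sqrt(var + m.eps)

print(torch.allclose(y, manual, atol=1e-4))  # True
print(y.mean(dim=0))                         # close to 0 for every feature
```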

Link: https://www.jianshu.com/p/343e1d994c39

Understanding inplace in PyTorch

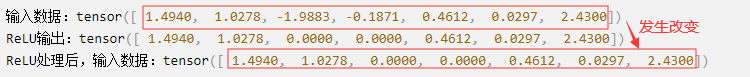

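inplace=True means the operation is performed in place: the result overwrites the original value. For example, x += 1 operates on the original x and writes the result straight back into it, whereas y = x + 5; x = y is not an in-place operation on x. The benefit of inplace=True is lower memory use, since no extra intermediate tensor has to be stored.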
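The difference between `x += 1` and `y = x + 5` can be checked by comparing storage addresses with `Tensor.data_ptr()`. A minimal sketch:

```python
import torch

x = torch.zeros(3)
ptr = x.data_ptr()                   # address of x's underlying storage

x += 1                               # in-place: writes into the existing storage
inplace_same = x.data_ptr() == ptr
print(inplace_same)                  # True

y = x + 5                            # out-of-place: allocates a new tensor
outplace_same = y.data_ptr() == ptr
print(outplace_same)                 # False
x = y                                # rebinds the name x; the old tensor is untouched
```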

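Note: with inplace=True, the tensor passed down from the previous layer is modified directly, so the input data itself changes. The ReLU example below illustrates this.

An inplace=True operation modifies its input data: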

```python
import torch
import torch.nn as nn

relu = nn.ReLU(inplace=True)
input = torch.randn(7)
print("Input:", input)
output = relu(input)
print("ReLU output:", output)
print("Input after ReLU:")
print(input)  # now identical to output: the negative entries were set to 0
```
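For comparison, the sketch below runs `inplace=True` and `inplace=False` side by side. It uses a fixed input (an assumption, chosen so that negative values are guaranteed) instead of `torch.randn`.

```python
import torch
import torch.nn as nn

a = torch.tensor([-2.0, -0.5, 0.0, 1.0, 3.0])
b = a.clone()

out_inplace = nn.ReLU(inplace=True)(a)
out_copy = nn.ReLU(inplace=False)(b)

# inplace=True: the output shares storage with the input, so a was overwritten
print(out_inplace.data_ptr() == a.data_ptr(), a)  # True, a is now [0, 0, 0, 1, 3]
# inplace=False: a new tensor is returned and b keeps its negative values
print(out_copy.data_ptr() == b.data_ptr(), b)     # False, b is unchanged
```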

Original article: https://blog.csdn.net/qq_40520596/article/details/106958760