NTHU Competition 4 (StackGAN)

concept

CGAN

conditional GAN

element-wise multiplication

i.e., the element-wise (Hadamard) product; both operands must have the same shape.

[1,2,3] .* [2,2,2]

ans = [2,4,6]
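The same operation in NumPy, as a small sketch. `*` on arrays is element-wise, which is different from `np.dot` (the inner product).

```python
import numpy as np

a = np.array([1, 2, 3])
b = np.array([2, 2, 2])

# `*` on NumPy arrays is the element-wise (Hadamard) product, like MATLAB's `.*`
print(a * b)         # [2 4 6]

# np.dot computes the inner product instead: 1*2 + 2*2 + 3*2
print(np.dot(a, b))  # 12
```

NumPy also broadcasts compatible shapes (for example multiplying by a scalar), so identical shapes are only strictly required when no broadcasting applies.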

StackGAN architecture

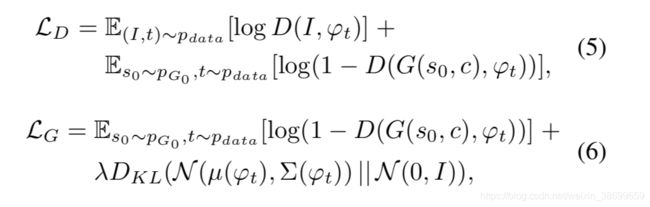

φt: the text embedding (produced by a pre-trained model)

μ(φt): the mean

Σ(φt): diagonal covariance matrix

I0 : real image

t : the text description

pdata : true data distribution.

z is a noise vector randomly sampled from a given distribution pz (e.g., the Gaussian distribution used in this paper).

Stage-I

- G0

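φt is fed into a fully connected layer that produces μ0 and σ0 (σ0 are the values on the diagonal of Σ0). The conditioning vector is then sampled as c0 = μ0 + σ0 ⊙ ε, where ⊙ is element-wise multiplication and ε ∼ N(0, I).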

c0 is concatenated with an Nz-dimensional noise vector z, and the result is up-sampled into the 64 × 64 Stage-I image.
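A minimal NumPy sketch of the conditioning augmentation and the concatenation with z. The sizes (1024-d text embedding, 128-d c0, 100-d z) are illustrative, the fully connected layer is randomly initialised rather than trained, and predicting log σ instead of σ (so σ stays positive) is an assumption of this sketch, not necessarily what the reference code does.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes: text embedding phi_t, conditioning vector c0, noise z
EMB_DIM, C_DIM, Z_DIM = 1024, 128, 100

# Randomly initialised fully connected layer: phi_t -> [mu0, log_sigma0]
W = rng.normal(scale=0.01, size=(EMB_DIM, 2 * C_DIM))
bias = np.zeros(2 * C_DIM)

def conditioning_augmentation(phi_t):
    """Return (c0, mu0, log_sigma0) for a batch of text embeddings."""
    h = phi_t @ W + bias
    mu0, log_sigma0 = h[:, :C_DIM], h[:, C_DIM:]
    sigma0 = np.exp(log_sigma0)            # assumed parameterisation
    eps = rng.standard_normal(mu0.shape)   # eps ~ N(0, I)
    c0 = mu0 + sigma0 * eps                # element-wise product (⊙)
    return c0, mu0, log_sigma0

batch = 4
phi_t = rng.standard_normal((batch, EMB_DIM))
c0, mu0, log_sigma0 = conditioning_augmentation(phi_t)

z = rng.standard_normal((batch, Z_DIM))       # z ~ N(0, I)
g0_input = np.concatenate([c0, z], axis=1)    # what G0's up-sampling blocks receive
print(g0_input.shape)  # (4, 228)
```

Sampling c0 instead of using μ0 directly gives the generator slightly different conditions for the same caption, which is the point of the augmentation.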

- D0

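Both real and fake images are passed through down-sampling blocks until they are Md × Md spatially. Meanwhile, the text embedding is compressed by a fully connected layer to Nd dimensions and spatially replicated into an Md × Md × Nd tensor (4 × 4 × 128).

The image feature map and the text tensor are concatenated along the channel dimension, then passed through a 1×1 convolution and a fully connected layer with a single node, which outputs the real/fake decision score (1 = real, 0 = fake).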
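The D0 text/image fusion can be sketched in NumPy as below. Weights are random, the 512 image-feature channels are hypothetical, and the LeakyReLU slope of 0.2 is an assumption; the sketch only shows the shapes and the order of operations, not a trained discriminator.

```python
import numpy as np

rng = np.random.default_rng(0)

batch, img_channels = 2, 512        # hypothetical channel count after down-sampling
M_d, N_d, EMB_DIM = 4, 128, 1024

img_feat = rng.standard_normal((batch, img_channels, M_d, M_d))  # NCHW image features
phi_t = rng.standard_normal((batch, EMB_DIM))

# 1) compress the text embedding to N_d dims with a fully connected layer
W_fc = rng.normal(scale=0.01, size=(EMB_DIM, N_d))
text = phi_t @ W_fc                                            # (batch, N_d)

# 2) spatially replicate it into an M_d x M_d x N_d tensor
text_map = np.tile(text[:, :, None, None], (1, 1, M_d, M_d))  # (batch, N_d, M_d, M_d)

# 3) concatenate along the channel axis
joint = np.concatenate([img_feat, text_map], axis=1)          # (batch, 640, 4, 4)

# 4) a 1x1 convolution is a per-pixel linear map over channels
W_1x1 = rng.normal(scale=0.01, size=(img_channels, joint.shape[1]))
conv = np.einsum("oc,bchw->bohw", W_1x1, joint)
conv = np.where(conv > 0, conv, 0.2 * conv)                   # LeakyReLU (slope assumed)

# 5) fully connected layer with one node + sigmoid -> probability of "real"
W_out = rng.normal(scale=0.01, size=(conv[0].size,))
logits = conv.reshape(batch, -1) @ W_out
prob_real = 1.0 / (1.0 + np.exp(-logits))
print(joint.shape, prob_real.shape)  # (2, 640, 4, 4) (2,)
```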

Stage-II

- G1

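As shown in the figure, G1 applies conditioning augmentation to the text embedding again to obtain c. The Stage-I result is down-sampled and concatenated with c, passed through residual blocks, and then up-sampled to output a 256 × 256 image.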
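A shape-level NumPy sketch of the G1 data flow. Everything learned is replaced by stand-ins: average pooling for the strided-conv down-sampling, random 1×1 channel mixes for convolutions, a single residual block, and bare nearest-neighbour up-sampling (the real model puts convolutions after each up-sampling step). The channel sizes are hypothetical and kept small so it runs quickly.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical, deliberately small sizes
batch, C_DIM, CH = 1, 8, 8

stage1_img = rng.standard_normal((batch, 3, 64, 64))  # Stage-I output, NCHW
c = rng.standard_normal((batch, C_DIM))               # output of the second CA module

def downsample(x, factor):
    """Stand-in for strided-conv down-sampling blocks: average pooling."""
    b, ch, h, w = x.shape
    return x.reshape(b, ch, h // factor, factor, w // factor, factor).mean(axis=(3, 5))

def conv1x1(x, out_ch):
    """Stand-in for a learned convolution: a random per-pixel channel mix."""
    weight = rng.normal(scale=0.1, size=(out_ch, x.shape[1]))
    return np.einsum("oc,bchw->bohw", weight, x)

# 1) down-sample the 64x64 Stage-I image to 16x16 feature maps
feat = conv1x1(downsample(stage1_img, 4), CH)      # (1, CH, 16, 16)

# 2) spatially replicate c and concatenate along the channel axis
c_map = np.tile(c[:, :, None, None], (1, 1, 16, 16))
x = np.concatenate([feat, c_map], axis=1)          # (1, CH + C_DIM, 16, 16)

# 3) one residual block: x + F(x), where F preserves the shape
x = x + np.maximum(conv1x1(x, x.shape[1]), 0.0)

# 4) four nearest-neighbour x2 up-samplings: 16 -> 32 -> 64 -> 128 -> 256
for _ in range(4):
    x = x.repeat(2, axis=2).repeat(2, axis=3)

img = np.tanh(conv1x1(x, 3))                       # map to 3 RGB channels
print(img.shape)  # (1, 3, 256, 256)
```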

- D1

Input:

1. a 256 × 256 real image
2. a 256 × 256 image generated by G1

Output:

a real/fake decision score (1 = real, 0 = fake)

loss
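A hedged sketch of the two ingredients a StackGAN-style loss combines: binary cross-entropy on the discriminator's real/fake scores, and a KL regularizer that keeps the conditioning distribution N(μ(φt), Σ(φt)) close to N(0, I). The discriminator outputs below are made-up numbers, and the KL weight λ = 1 is an assumption here; the reference implementation may add further terms.

```python
import numpy as np

def kl_to_standard_normal(mu, log_sigma):
    """KL(N(mu, diag(sigma^2)) || N(0, I)), summed over dims, averaged over batch."""
    sigma_sq = np.exp(2.0 * log_sigma)
    kl = 0.5 * np.sum(mu**2 + sigma_sq - 2.0 * log_sigma - 1.0, axis=1)
    return kl.mean()

def bce(prob, target, eps=1e-12):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))

# Made-up discriminator outputs for a batch of 3
d_real = np.array([0.9, 0.8, 0.95])   # real image + its text
d_fake = np.array([0.1, 0.3, 0.2])    # generated image + its text

d_loss = bce(d_real, np.ones(3)) + bce(d_fake, np.zeros(3))

lam = 1.0                             # KL weight (assumed)
kl = kl_to_standard_normal(np.zeros((3, 4)), np.zeros((3, 4)))
g_loss = bce(d_fake, np.ones(3)) + lam * kl
print(kl)  # 0.0, since mu = 0 and sigma = 1 already match N(0, I)
```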

Upsample
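Nearest-neighbour ×2 up-sampling simply repeats every pixel along height and width. In PyTorch this corresponds to `nn.Upsample(scale_factor=2, mode='nearest')`, which StackGAN-style up-sampling blocks commonly follow with a 3×3 convolution. A NumPy sketch on an (N, C, H, W) array:

```python
import numpy as np

def upsample_nearest_2x(x):
    """Nearest-neighbour x2 up-sampling of an (N, C, H, W) array."""
    return x.repeat(2, axis=2).repeat(2, axis=3)

x = np.array([[1, 2],
              [3, 4]]).reshape(1, 1, 2, 2)
y = upsample_nearest_2x(x)
print(y[0, 0])
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]
```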

implementation

Reading a Python 3 pickle in Python 2

https://stackoverflow.com/questions/29587179/load-pickle-filecomes-from-python3-in-python2

Python 3:

```python
import pickle

import pandas as pd

df = pd.read_pickle('text2ImgData.pkl')

# Protocol 2 is the highest pickle protocol that Python 2 can read
with open("pkle.txt", "wb") as f:
    f.write(pickle.dumps(df, 2))
```

Python 2:

```python
import cPickle

with open("../dataset/pkle.txt", "rb") as f:
    a = cPickle.loads(f.read())

print(a)
```
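A quick Python 3 check that a file really was written with protocol 2: every pickle of protocol 2 or higher starts with the PROTO opcode `0x80` followed by the protocol number. The dictionary below is a hypothetical stand-in for the TA's data. Note that unpickling a DataFrame on the Python 2 side may additionally require a compatible pandas version there.

```python
import pickle

# Hypothetical stand-in for the TA's data (any picklable object works)
data = {"ID": [3296, 3323], "Captions": [["9", "2"], ["4", "1"]]}

payload = pickle.dumps(data, protocol=2)

# Protocol >= 2 pickles begin with PROTO (0x80) and then the version byte
print(payload[:2])                   # b'\x80\x02'
print(pickle.loads(payload) == data) # True
```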

pandas DataFrame to np.array

```python
np_array = df.values  # an attribute, not a method; newer pandas also offers df.to_numpy()
```

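Python: convert every string in a nested list to int

```python
l = [['1', ' 1', ' 3'], ['2', ' 3', ' 5'], ['3'], ['4', ' 5'], ['5', ' 1'], ['6', ' 6'], ['7']]

# list(...) is needed in Python 3, where map() returns an iterator
result = [list(map(int, i)) for i in l]
# [[1, 1, 3], [2, 3, 5], [3], [4, 5], [5, 1], [6, 6], [7]]
```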

======================================================

NumPy (one inner list at a time, since the rows have different lengths):

```python
np.array(i).astype(int)
```
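The list comprehension above only handles two levels of nesting. For lists nested to arbitrary depth, a small recursive helper works; the name `to_int_nested` is hypothetical.

```python
def to_int_nested(x):
    """Recursively convert every string in an arbitrarily nested list to int.

    int() already ignores surrounding whitespace, so ' 3' -> 3.
    """
    if isinstance(x, list):
        return [to_int_nested(item) for item in x]
    return int(x)

print(to_int_nested([['1', ' 1', ['3', ' 4']], '5']))  # [[1, 1, [3, 4]], 5]
```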

Using TensorBoard with PyTorch

```bash
pip install tensorboard-pytorch tensorboardX
```

PyTorch expected Double but got Float

```
RuntimeError: expected Double tensor (got Float tensor)
```

A fix would be to call .double() on your model (or .float() on the input).

The folks online really are awesome.
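One common way to end up with this mismatch, assuming the input came from NumPy: NumPy creates float64 arrays by default, `torch.from_numpy` keeps that dtype (giving a Double tensor), while `nn.Module` parameters default to float32. Casting on the NumPy side is an alternative to calling `.float()` afterwards:

```python
import numpy as np

x = np.random.rand(4, 3)
print(x.dtype)    # float64  -> torch.from_numpy(x) would be a Double tensor

# Cast before handing the array to PyTorch
x32 = x.astype(np.float32)
print(x32.dtype)  # float32  -> torch.from_numpy(x32) matches float32 weights
```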

Clearing Jupyter output

```bash
jupyter nbconvert --ClearOutputPreprocessor.enabled=True --inplace name.ipynb
```

```
UnicodeDecodeError: 'ascii' codec can't decode byte 0xe2 in position 4: ordinal not in range(128)
```

Fix:

```bash
pip install --upgrade pip
```

report

I chose StackGAN as my text-to-image model this time because its results are better than those of most other models.

This TensorFlow implementation was my first reference architecture, but I gave up on it because the code was hard to understand and the PrettyTensor module annoyed me a lot. So I took this PyTorch implementation as my final reference.

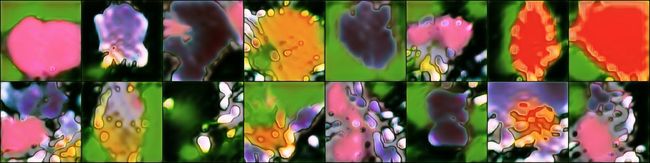

Since StackGAN is split into two stages, the first stage outputs a 64 × 64 image and the second a 256 × 256 image. Below are the results after 100 epochs of training.

64 × 64 (batch_size = 40)

I used the same noise, so the results look like this:

```
ID = 3296 [list(['9', '2', '17', '9', '521', '1', '6', '11', '13', '18', '3', '626', '89', '8', '21', '101', '5427', '5427', '5427', '5427'])]

ID = 3323 [list(['4', '1', '15', '22', '3', '44', '13', '18', '7', '2', '10', '6', '141', '3', '113', '5427', '5427', '5427', '5427', '5427'])]

ID = 5187 [list(['9', '1', '5', '45', '11', '2', '119', '9', '20', '19', '5427', '5427', '5427', '5427', '5427', '5427', '5427', '5427', '5427', '5427'])]

ID = 8101 [list(['4', '1', '15', '12', '3', '11', '13', '18', '7', '2', '10', '6', '40', '3', '26', '5427', '5427', '5427', '5427', '5427'])]

ID = 5682 [list(['9', '1', '15', '20', '13', '18', '7', '8', '25', '33', '7', '53', '27', '61', '5427', '5427', '5427', '5427', '5427', '5427'])]
```
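Each caption above is a fixed-length list of word IDs. Assuming `'5427'` is the padding ID (it only ever appears as a trailing run), the real tokens can be recovered as below; `strip_padding` and `PAD_ID` are hypothetical names, and mapping IDs back to words would still need the TA's vocabulary.

```python
# Assumption: '5427' is the padding token ID
PAD_ID = 5427

def strip_padding(token_ids, pad_id=PAD_ID):
    """Convert string IDs to int and drop trailing padding tokens."""
    ids = [int(t) for t in token_ids]
    while ids and ids[-1] == pad_id:
        ids.pop()
    return ids

caption_5187 = ['9', '1', '5', '45', '11', '2', '119', '9', '20', '19'] + ['5427'] * 10
print(strip_padding(caption_5187))  # [9, 1, 5, 45, 11, 2, 119, 9, 20, 19]
```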

This PyTorch code was written for the COCO dataset, not the Oxford flowers dataset, so I changed the input shape to [data_length, sentences_num, word_embedding] and set both the z dimension and the condition dimension to 156. The word embeddings came from the TA.
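With input shaped [data_length, sentences_num, word_embedding], each image has several caption embeddings, while the conditioning FC layer expects one vector per image. One option, assumed here and not necessarily what the reference code does, is to sample one caption per image each time a batch is built. The sizes below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 6 images, 5 captions each, 32-d caption vectors
data_length, sentences_num, emb_dim = 6, 5, 32
embeddings = rng.standard_normal((data_length, sentences_num, emb_dim))

def pick_caption_embedding(emb, rng):
    """Pick one random caption embedding per image: (N, S, D) -> (N, D)."""
    idx = rng.integers(0, emb.shape[1], size=emb.shape[0])
    return emb[np.arange(emb.shape[0]), idx]

batch_emb = pick_caption_embedding(embeddings, rng)
print(batch_emb.shape)  # (6, 32)
```

Averaging over the caption axis (`embeddings.mean(axis=1)`) is a deterministic alternative, at the cost of losing per-caption variety.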

I set both D_LearningRate and G_LearningRate to 0.0002, as the authors did.

I didn't use a pre-trained model, because the available one was trained on COCO. Instead, I trained the 256 × 256 model after the 64 × 64 model.

- Conclusions

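Overall, the results are pretty good, although some flowers are still a bit deformed. Funnily enough, the 64 × 64 evaluation turned out better than the 256 × 256 one. I think the likely reason is this: after fully training the 64 × 64 model, I started training the 256 × 256 model, and at epoch 20 my network connection dropped. I continued training from the epoch-20 checkpoint. At epoch 1 the outputs still looked somewhat like flowers, but from epoch 2 onward the model seemed to learn in the wrong direction, as if it had drifted off track.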

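Training took about 8 hours. After the 256 × 256 run finished I wanted to train again from start to finish, but I had run out of time. Lesson learned: next time, train from the beginning.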

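Also, since pickle and cPickle are supported by different Python versions, I used Python 3's pickle to write the TA's word embeddings to a txt file and then read that txt back in Python 2.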