C++ (ALL) STL ATL OpenCV: Vision and Image Processing Practice Log

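Contents

Enumerating image names in a folder (for batch-reading images)

C++: creating a folder and counting files of a given type

C++: counting files of a given type

C++: multithreading

C++: fixing common errors

Fix for "Error: argument of type "const char *" is incompatible with parameter of type "LPCWSTR""

operator()

Finding the maximum and minimum of an array

Image enhancement: splitting the RGB channels and equalizing each

Image enhancement: splitting the RGB channels and applying a log transform

Image enhancement: filters

Image enhancement: high-pass (high-contrast retention)

Image enhancement: edge enhancement

Morphology: dilation and erosion

Image segmentation

Image segmentation: Otsu threshold

Image segmentation: K-means

Image filtering

Multiple filters

Contours

findContours

drawContours

boundingRect

minAreaRect

Contour subroutine

Ideas for image feature extraction

Reading an image with ATL::CImage

Converting ATL::CImage to Mat

C++ image processing: filtering

C++ OpenCV HSV

C++ OpenCV bilateral filter

C++ OpenCV image features (OpenCV 3)

C++ OpenCV image features: BRISK

C++ OpenCV HOG + SVM

C++ OpenCV color, texture, shape + SVM

Main function

Subroutines

Helper functions

C++ OpenCV Hu moments, color moments (MOM), GLCM

C++ OpenCV HOG + SVM (OpenCV 3)

C++ OpenCV AKAZE features (OpenCV 3.3.0)

C++ OpenCV rectangle segmentation

C++: printing a vector container

C++: FlyCapture camera

C++: Modbus communication

C++ OpenCV: loading XML files

C++ OpenCV: recording video with Xvid encoding

C++ OpenCV GLCM

C++ OpenCV homomorphic filtering

C++ OpenCV: H histogram of HSV

C++ OpenCV: H, S, V histograms of HSV

C++: converting between ATL::CImage and Mat

C++ OpenCV: image object segmentation

C++ OpenCV: Feature.h {color, shape, texture}

C++: vector operations

C++: getting the file names in a folder and the file count with the io.h header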

Enumerating image names in a folder (for batch-reading images)

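// Required headers:

#include <cstdlib>

#include <iostream>

#include <sstream>

#include <string>

using namespace std;

int main()

{

std::string img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\目标分割结果\\";

// Build the names test0.bmp ... test5.bmp inside img_dir

for (int i = 0; i < 6; i++){

string pos;

stringstream ss;

ss << i; // int -> string (std::to_string(i) does the same since C++11)

ss >> pos;

string img_name = img_dir + "test" + pos + ".bmp";

cout << img_name << endl;

}

system("pause"); // Windows only: keep the console window open

return 0;

}

C++: creating a folder and counting files of a given type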
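As a portable counterpart to the Windows/OpenCV snippet below (which uses CreateDirectory and cv::glob), here is a minimal sketch using C++17 std::filesystem. The helper names count_files_with_extension and next_save_path are hypothetical, and the sketch assumes the same "save<N>.bmp" naming scheme that the snippet builds with sprintf_s.

```cpp
#include <cassert>
#include <filesystem>
#include <string>

namespace fs = std::filesystem;

// Count the regular files in `dir` whose extension equals `ext` (e.g. ".bmp").
std::size_t count_files_with_extension(const fs::path& dir, const std::string& ext)
{
    if (!fs::exists(dir)) return 0;  // a missing folder simply holds no files
    std::size_t n = 0;
    for (const auto& entry : fs::directory_iterator(dir))
        if (entry.is_regular_file() && entry.path().extension().string() == ext) ++n;
    return n;
}

// Create `dir` if needed and return the next sequential file name:
// with N .bmp files already present, the result is dir/save<N+1>.bmp.
fs::path next_save_path(const fs::path& dir)
{
    fs::create_directories(dir);  // does nothing if the folder already exists
    const std::size_t n = count_files_with_extension(dir, ".bmp");
    return dir / ("save" + std::to_string(n + 1) + ".bmp");
}
```

Counting by extension mirrors the "./save/*.bmp" pattern; note that deleting a file in the middle of the sequence would make the next name collide with an existing one, exactly as in the counter-based original.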

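// Create a folder (CreateDirectoryA is the ANSI version, so it accepts a char string whatever the project's character set; requires <windows.h>)

string dirName = "save";

bool flag = CreateDirectoryA(dirName.c_str(), NULL) != 0; // false if the folder already exists

// Count the files already in the folder (read_images_in_folder is defined below, so declare it before this point)

cv::String pattern = "./save/*.bmp";

size_t img_count; // do not call this variable "cout": it would hide std::cout

img_count = read_images_in_folder(pattern);

// Save the image

char filesave[100];

img_count++; // increment the (global) counter so each saved image gets the next sequential name

sprintf_s(filesave, "./save/save%d.bmp", static_cast<int>(img_count));

imwrite(filesave, my_camera.src); // save into the project's working directory

// Subroutine: count the images in the given folder

size_t read_images_in_folder(cv::String pattern) // returns the number of files matching pattern

{

vector<cv::String> fn;

glob(pattern, fn, false); // OpenCV's glob() enumerates the files that match the pattern

size_t count = fn.size(); // number of matching files

return count;

}

C++: counting files of a given type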

// Subroutine: count the images in the given folder

/*

cv::String pattern = "./save/*.bmp";

size_t img_count = read_images_in_folder(pattern);

*/

size_t read_images_in_folder(cv::String pattern) // returns the number of files matching pattern

{

vector<cv::String> fn;

glob(pattern, fn, false); // OpenCV's glob() enumerates the files that match the pattern

size_t count = fn.size(); // number of matching files

return count;

}

C++: multithreading
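The counter example below can also be written so that the shared variable is only ever read while the mutex is held. A minimal sketch, assuming a hypothetical run_shared_counter helper that reports how many decrements each thread performed:

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <thread>

struct CounterResult { int by_t1; int by_t2; int remaining; };

// Two threads decrement one shared counter. Both the check and the decrement
// happen under the same lock, so there is no unsynchronized read of `cnt`.
CounterResult run_shared_counter(int start)
{
    int cnt = start;
    std::mutex m;
    int by_t1 = 0, by_t2 = 0;

    auto worker = [&cnt, &m](int& done) {
        while (true) {
            std::lock_guard<std::mutex> guard(m);  // released automatically at end of scope (also on break)
            if (cnt <= 0) break;
            --cnt;
            ++done;  // each thread writes only its own counter
        }
    };

    std::thread th1(worker, std::ref(by_t1));
    std::thread th2(worker, std::ref(by_t2));
    th1.join();  // wait for both threads before reading their results
    th2.join();
    return {by_t1, by_t2, cnt};
}
```

How the work is split between the two threads varies from run to run; only the total is fixed.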

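#include <cstdlib>

#include <iostream>

#include <mutex> // std::mutex provides mutual exclusion, so threads do not modify shared data at the same time. Calling m.lock()/m.unlock() by hand is not exception-safe: if a thread exits (e.g. through an exception) before unlocking, the other blocked threads can never continue.

#include <thread> // multithreading

using namespace std;

int cnt = 20;

mutex m;

void t1()

{

while (true)

{

lock_guard<mutex> lockGuard(m); // lock_guard is safer because it is scope-based and unlocks itself: constructing it locks the mutex like m.lock(), and its destructor unlocks when it goes out of scope, so an exception in one thread cannot leave the others blocked.

if (cnt <= 0) break; // read cnt only while holding the lock (testing it in the while condition without the lock is a data race)

--cnt;

cout << cnt << endl;

}

}

void t2()

{

while (true)

{

lock_guard<mutex> lockGuard(m);

if (cnt <= 0) break;

--cnt;

cout << "t2";

cout << cnt << endl;

}

}

int main()

{

thread th1(t1);

thread th2(t2);

th1.join(); // both threads run concurrently; join() blocks until th1 has finished

th2.join(); // ... and then until th2 has finished

system("pause");

return 0;

}

C++: fixing common errors

Fix for "Error: argument of type "const char *" is incompatible with parameter of type "LPCWSTR"":

Project menu > Project Properties (the last item) > Configuration Properties > General > Project Defaults > Character Set: change "Use Unicode Character Set" to "Not Set". Alternatively, keep Unicode and call the ANSI variant of the API explicitly (for example CreateDirectoryA), or pass a wide string (L"...").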

operator()

IntelliSense: call of an object of a class type without appropriate operator() or conversion functions to pointer-to-function type
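This message appears when code calls an object of class type with function-call syntax but the class defines no operator() (and no conversion to a function pointer). A common cause is a local variable that hides a function of the same name. The sketch below, with hypothetical types Scale and NoCall, shows what makes an object callable:

```cpp
#include <cassert>

// A function object ("functor"): a class that defines operator().
struct Scale
{
    int factor;
    int operator()(int x) const { return x * factor; }  // this is what makes Scale callable
};

// No operator(): writing NoCall{2}(3) would trigger the error quoted above.
struct NoCall { int factor; };

int apply_twice(const Scale& f, int x)
{
    return f(f(x));  // OK: Scale provides operator()
}
```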

Finding the maximum and minimum of an array
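The snippet below scans the array by hand; the standard library can do the same in one pass. A sketch assuming a hypothetical find_max_min helper with the same outputs as normalize_nolinear:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Maximum and minimum of an array with std::minmax_element.
// Starting from the data itself (not from 0) stays correct when all values are
// negative or all are positive.
void find_max_min(const float* p, std::size_t length, float& max_v, float& min_v)
{
    assert(length > 0);
    auto mm = std::minmax_element(p, p + length);  // pair of iterators: (min, max)
    min_v = *mm.first;
    max_v = *mm.second;
}
```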

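// Compute the maximum and minimum values

/* Usage:

float p[10] = { 1, 20, 2.3, 3.3, 5, -0.22, 5, 40, 5, 4 };

int length = 10;

float max = 0;

float min = 0;

feature_class.normalize_nolinear(p,length,max,min);

cout << "max:" << max << " min:" << min << endl;

*/

void Feature::normalize_nolinear(float* p, int length, float& max, float& min)

{

max = p[0]; // start from the first element, not from 0

min = p[0];

for (int i = 1; i < length; i++)

{

if (max < p[i])

{

max = p[i];

}

if (min > p[i])

{

min = p[i];

}

}

}

Image enhancement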

Image enhancement: splitting the RGB channels and equalizing each
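equalizeHist (used per channel in the snippet below) maps each gray level through the normalized cumulative histogram. A sketch of that per-channel step on a plain byte vector, using the textbook formula; equalize_channel is a hypothetical name, and OpenCV's own rounding details may differ slightly:

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Histogram equalization of one 8-bit channel:
//   out(v) = round(255 * (cdf(v) - cdf_min) / (N - cdf_min))
std::vector<std::uint8_t> equalize_channel(const std::vector<std::uint8_t>& in)
{
    std::array<long, 256> hist{};  // zero-initialized
    for (std::uint8_t v : in) ++hist[v];

    const long n = static_cast<long>(in.size());
    long cdf_min = 0;
    for (int v = 0; v < 256; ++v)              // first non-zero bin = smallest CDF value
        if (hist[v] > 0) { cdf_min = hist[v]; break; }
    if (n == cdf_min) return in;               // empty or constant channel: nothing to stretch

    std::array<std::uint8_t, 256> lut{};
    long cdf = 0;
    for (int v = 0; v < 256; ++v) {
        cdf += hist[v];
        double mapped = 255.0 * (cdf - cdf_min) / (n - cdf_min);
        lut[v] = static_cast<std::uint8_t>(std::lround(std::max(0.0, mapped)));
    }
    std::vector<std::uint8_t> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) out[i] = lut[in[i]];
    return out;
}
```

Running this separately on B, G and R is what split + equalizeHist + merge does, which is why the colors can shift.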

Mat src = imread(argv[i]);

Mat imageRGB[3];

split(src, imageRGB);

for (int i = 0; i < 3; i++)

{

equalizeHist(imageRGB[i], imageRGB[i]);

}

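merge(imageRGB, 3, src); // note: equalizing B, G and R independently can shift colors; equalizing only a brightness channel (e.g. V of HSV) avoids that

Image enhancement: splitting the RGB channels and applying a log transform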

Mat image = imread(argv[i]);

Mat imageLog(image.size(), CV_32FC3);

for (int i = 0; i < image.rows; i++)

{

for (int j = 0; j < image.cols; j++)

{

imageLog.at<Vec3f>(i, j)[0] = log(1 + image.at<Vec3b>(i, j)[0]);

imageLog.at<Vec3f>(i, j)[1] = log(1 + image.at<Vec3b>(i, j)[1]);

imageLog.at<Vec3f>(i, j)[2] = log(1 + image.at<Vec3b>(i, j)[2]);

}

}

// Normalize to 0-255

normalize(imageLog, imageLog, 0, 255, NORM_MINMAX); // CV_MINMAX in the old C API

// Convert to an 8-bit image for display

convertScaleAbs(imageLog, imageLog);
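Because an 8-bit channel has only 256 possible values, the log transform can be precomputed once as a lookup table instead of calling log() per pixel. A sketch, assuming normalization over the full 0..255 input range (the loop above normalizes over the image's actual min and max instead, so the two agree only when the image spans 0..255); make_log_lut is hypothetical:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// s = log(1 + r), scaled so that r = 0 -> 0 and r = 255 -> 255.
std::array<std::uint8_t, 256> make_log_lut()
{
    std::array<std::uint8_t, 256> lut{};
    const double max_log = std::log(256.0);  // log(1 + 255)
    for (int r = 0; r < 256; ++r) {
        double s = std::log(1.0 + r) / max_log * 255.0;
        lut[r] = static_cast<std::uint8_t>(std::lround(s));
    }
    return lut;
}
```

The table could then be applied per pixel, or to an 8-bit Mat with cv::LUT.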

Image enhancement: filters
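To make the kernel's effect concrete, here is a sketch of the same 3x3 sharpening kernel applied by hand to a row-major grayscale buffer. sharpen3x3 is hypothetical, and border pixels are simply copied rather than extrapolated as filter2D does:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Kernel [0 -1 0; -1 5 -1; 0 -1 0]: each interior pixel becomes
// 5*center - (up + down + left + right), saturated to 0..255.
std::vector<std::uint8_t> sharpen3x3(const std::vector<std::uint8_t>& img, int width, int height)
{
    assert(static_cast<int>(img.size()) == width * height);
    std::vector<std::uint8_t> out(img);  // borders keep their original values
    auto at = [&](int y, int x) { return static_cast<int>(img[y * width + x]); };
    for (int y = 1; y + 1 < height; ++y)
        for (int x = 1; x + 1 < width; ++x) {
            int v = 5 * at(y, x) - at(y - 1, x) - at(y + 1, x) - at(y, x - 1) - at(y, x + 1);
            out[y * width + x] = static_cast<std::uint8_t>(std::clamp(v, 0, 255));
        }
    return out;
}
```

On a flat region the kernel sums to 1 and leaves the value unchanged; only local differences are amplified.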

// Filter: sharpening with a custom kernel

void sharpenImage(const cv::Mat &image, cv::Mat &result)

{

// Create and initialize the filter kernel

/* 3x3 sharpening kernel (identity minus the Laplacian):

0 -1 0

-1 5 -1

0 -1 0

*/

cv::Mat kernel(3, 3, CV_32F, cv::Scalar(0));

kernel.at<float>(0, 1) = -1.0;

kernel.at<float>(1, 0) = -1.0;

kernel.at<float>(1, 1) = 5.0;

kernel.at<float>(1, 2) = -1.0;

kernel.at<float>(2, 1) = -1.0;

result.create(image.size(), image.type());

// Filter the image

cv::filter2D(image, result, image.depth(), kernel);

}

Image enhancement: high-pass (high-contrast retention)
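The HighPass function below computes result = img + r*(img - blurred). A sketch of that formula on plain bytes, computing in int and saturating once at the end; high_pass_boost is hypothetical and `blurred` stands for the GaussianBlur output:

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// out = img + r * (img - blurred), with the difference kept signed until the end.
std::vector<std::uint8_t> high_pass_boost(const std::vector<std::uint8_t>& img,
                                          const std::vector<std::uint8_t>& blurred, int r)
{
    assert(img.size() == blurred.size());
    std::vector<std::uint8_t> out(img.size());
    for (std::size_t i = 0; i < img.size(); ++i) {
        int v = img[i] + r * (static_cast<int>(img[i]) - blurred[i]);  // may be negative
        out[i] = static_cast<std::uint8_t>(std::clamp(v, 0, 255));
    }
    return out;
}
```

With 8-bit Mats, the expression img - temp saturates negative differences to 0, so only the brightening half of the effect survives; computing with a signed intermediate (or addWeighted(img, 1 + r, temp, -r, 0, out)) keeps both halves.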

Mat HighPass(Mat img)

{

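Mat temp;

GaussianBlur(img, temp, Size(7, 7), 1.6, 1.6);

int r = 3;

Mat diff;

addWeighted(img, 1 + r, temp, -r, 0, diff); // high-contrast retention: img + r*(img - temp); writing img + r*(img - temp) directly on 8-bit Mats would clip the negative part of (img - temp) to 0

return diff;

}

Image enhancement: edge enhancement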

void edgeEnhance(cv::Mat& srcImg, cv::Mat& dstImg)

{

if (!dstImg.empty())

{

dstImg.release();

}

std::vector<cv::Mat> rgb;

if (srcImg.channels() == 3) // rgb image

{

cv::split(srcImg, rgb);

}

else if (srcImg.channels() == 1) // gray image

{

rgb.push_back(srcImg);

}

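// Enhance the edges of each channel (B, G, R) separately

for (size_t i = 0; i < rgb.size(); i++)

{

cv::Mat sharpMat;

cv::Mat blurMat;

cv::Mat src16S;

// Gaussian smoothing

cv::GaussianBlur(rgb[i], blurMat, cv::Size(3, 3), 0, 0);

// Compute the Laplacian (signed, so keep it in 16 bits)

cv::Laplacian(blurMat, sharpMat, CV_16S);

// Sharpen as f - Laplacian(f), computed in 16 bits: converting the Laplacian to CV_8U first would clip its negative half, and adding it instead of subtracting reduces edge contrast

rgb[i].convertTo(src16S, CV_16S);

cv::Mat enhanced16S = src16S - sharpMat;

enhanced16S.convertTo(rgb[i], CV_8U); // saturate back to 0..255

}

cv::merge(rgb, dstImg);

}

Morphology: dilation and erosion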
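erode and dilate with a 3x3 MORPH_RECT element take the minimum and the maximum over each 3x3 neighborhood. A sketch on a 0/1 grid (morph3x3 is hypothetical); out-of-image neighbors are ignored, so the image border neither grows nor shrinks objects:

```cpp
#include <cassert>
#include <vector>

using Grid = std::vector<std::vector<int>>;

// Binary erosion (dilate == false) or dilation (dilate == true), 3x3 square element.
Grid morph3x3(const Grid& in, bool dilate)
{
    const int h = static_cast<int>(in.size());
    const int w = h ? static_cast<int>(in[0].size()) : 0;
    Grid out(h, std::vector<int>(w, 0));
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            bool any = false, all = true;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    int yy = y + dy, xx = x + dx;
                    if (yy < 0 || yy >= h || xx < 0 || xx >= w) continue;  // skip outside pixels
                    any = any || in[yy][xx] != 0;
                    all = all && in[yy][xx] != 0;
                }
            out[y][x] = dilate ? any : all;  // dilation = max, erosion = min
        }
    return out;
}
```

Eroding and then dilating (as the two lines below do) is an opening: an isolated one-pixel speck disappears and does not come back.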

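// Erosion followed by dilation with the same 3x3 element is a morphological opening: it removes small specks while keeping larger shapes roughly unchanged

erode(hu_dst, hu_dst, getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1)));

dilate(hu_dst, hu_dst, getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1)));

Image segmentation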

Image segmentation: Otsu threshold
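Otsu's method picks the threshold that maximizes the between-class variance q1*q2*(mu1 - mu2)^2. The function below evaluates this with incremental updates; here is a plainer sketch of the same criterion on a 256-bin histogram (otsu_threshold is hypothetical; pixels with values greater than the returned t form the second class):

```cpp
#include <array>
#include <cassert>

int otsu_threshold(const std::array<long, 256>& hist)
{
    long total = 0;
    double sum_all = 0;
    for (int i = 0; i < 256; ++i) { total += hist[i]; sum_all += static_cast<double>(i) * hist[i]; }
    if (total == 0) return 0;

    long w1 = 0;      // pixel count of class 1 = [0, t]
    double sum1 = 0;  // intensity sum of class 1
    double best = -1.0;
    int best_t = 0;
    for (int t = 0; t < 256; ++t) {
        w1 += hist[t];
        sum1 += static_cast<double>(t) * hist[t];
        const long w2 = total - w1;
        if (w1 == 0 || w2 == 0) continue;  // one class empty: skip
        const double mu1 = sum1 / w1, mu2 = (sum_all - sum1) / w2;
        const double q1 = static_cast<double>(w1) / total, q2 = static_cast<double>(w2) / total;
        const double sigma = q1 * q2 * (mu1 - mu2) * (mu1 - mu2);  // between-class variance
        if (sigma > best) { best = sigma; best_t = t; }
    }
    return best_t;
}
```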

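// Compute the Otsu threshold automatically (uses std::min/std::max from <algorithm> and FLT_EPSILON from <cfloat>)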

double getThreshVal_Otsu(const cv::Mat& _src)

{

cv::Size size = _src.size();

if (_src.isContinuous())

{

size.width *= size.height;

size.height = 1;

}

const int N = 256;

int i, j, h[N] = { 0 };

for (i = 0; i < size.height; i++)

{

const uchar* src = _src.data + _src.step*i;

for (j = 0; j <= size.width - 4; j += 4)

{

int v0 = src[j], v1 = src[j + 1];

h[v0]++; h[v1]++;

v0 = src[j + 2]; v1 = src[j + 3];

h[v0]++; h[v1]++;

}

for (; j < size.width; j++)

h[src[j]]++;

}

double mu = 0, scale = 1. / (size.width*size.height);

for (i = 0; i < N; i++)

mu += i*h[i];

mu *= scale;

double mu1 = 0, q1 = 0;

double max_sigma = 0, max_val = 0;

for (i = 0; i < N; i++)

{

double p_i, q2, mu2, sigma;

p_i = h[i] * scale;

mu1 *= q1;

q1 += p_i;

q2 = 1. - q1;

if (std::min(q1, q2) < FLT_EPSILON || std::max(q1, q2) > 1. - FLT_EPSILON)

continue;

mu1 = (mu1 + i*p_i) / q1;

mu2 = (mu - q1*mu1) / q2;

sigma = q1*q2*(mu1 - mu2)*(mu1 - mu2);

if (sigma > max_sigma)

{

max_sigma = sigma;

max_val = i;

}

}

return max_val;

}

Image segmentation: K-means
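cv::kmeans alternates between assigning each sample to its nearest center and moving each center to the mean of its samples. A one-dimensional sketch of that loop on intensities; kmeans_1d is hypothetical, and it seeds the centers evenly over [min, max] instead of using k-means++ seeding like KMEANS_PP_CENTERS in the function below:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

std::vector<int> kmeans_1d(const std::vector<double>& x, int k, int iterations,
                           std::vector<double>& centers)
{
    assert(k >= 1 && !x.empty());
    double lo = x[0], hi = x[0];
    for (double v : x) { lo = std::min(lo, v); hi = std::max(hi, v); }
    centers.assign(k, lo);
    for (int c = 0; c < k; ++c)  // evenly spaced initial centers
        centers[c] = (k == 1) ? (lo + hi) / 2 : lo + (hi - lo) * c / (k - 1);

    std::vector<int> labels(x.size(), 0);
    for (int it = 0; it < iterations; ++it) {
        for (std::size_t i = 0; i < x.size(); ++i) {  // assignment step
            int best = 0;
            for (int c = 1; c < k; ++c)
                if (std::fabs(x[i] - centers[c]) < std::fabs(x[i] - centers[best])) best = c;
            labels[i] = best;
        }
        std::vector<double> sum(k, 0.0);
        std::vector<int> cnt(k, 0);
        for (std::size_t i = 0; i < x.size(); ++i) { sum[labels[i]] += x[i]; ++cnt[labels[i]]; }
        for (int c = 0; c < k; ++c)  // update step; an empty cluster keeps its center
            if (cnt[c] > 0) centers[c] = sum[c] / cnt[c];
    }
    return labels;
}
```

The image version does the same with 3-D (B, G, R) points and Euclidean distance.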

Mat Image_Kmeans(Mat src, int n)

{

int width = src.cols;

int height = src.rows;

int dims = src.channels();

// Initialization

int sampleCount = width*height;

int clusterCount = n; // number of clusters

Mat points(sampleCount, dims, CV_32F, Scalar(10)); // one row per pixel; the loop below assumes an 8-bit 3-channel image

Mat labels;

Mat centers(clusterCount, 1, points.type());

// Convert the image pixels (B, G, R) into the sample matrix

int index = 0;

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

index = row*width + col;

Vec3b rgb = src.at<Vec3b>(row, col);

points.at<float>(index, 0) = static_cast<float>(rgb[0]);

points.at<float>(index, 1) = static_cast<float>(rgb[1]);

points.at<float>(index, 2) = static_cast<float>(rgb[2]);

}

}

// Run K-means (stop after 10 iterations or when the centers move by less than 1.0)

TermCriteria criteria = TermCriteria(TermCriteria::EPS + TermCriteria::COUNT, 10, 1.0);

kmeans(points, clusterCount, labels, criteria, 3, KMEANS_PP_CENTERS, centers); // 3 attempts, k-means++ seeding

// Build the color-coded segmentation result (colors are defined for labels 0-3, i.e. at most 4 clusters)

Mat result = Mat::zeros(src.size(), CV_8UC3);

for (int row = 0; row < height; row++) {

for (int col = 0; col < width; col++) {

index = row*width + col;

int label = labels.at<int>(index, 0);

if (label == 1) {

result.at<Vec3b>(row, col)[0] = 255;

result.at<Vec3b>(row, col)[1] = 0;

result.at<Vec3b>(row, col)[2] = 0;

}

else if (label == 2){

result.at<Vec3b>(row, col)[0] = 0;

result.at<Vec3b>(row, col)[1] = 255;

result.at<Vec3b>(row, col)[2] = 0;

}

else if (label == 3) {

result.at<Vec3b>(row, col)[0] = 0;

result.at<Vec3b>(row, col)[1] = 0;

result.at<Vec3b>(row, col)[2] = 255;

}

else if (label == 0) {

result.at<Vec3b>(row, col)[0] = 0;

result.at<Vec3b>(row, col)[1] = 255;

result.at<Vec3b>(row, col)[2] = 255;

}

}

}

return result;

}

Image filtering

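Multiple filters

https://blog.csdn.net/zoucharming/article/details/70197863

Image processing tries to remove as much noise from an image as possible, and that is done by filtering. In this round of OpenCV study I learned four filtering methods.

(1) Mean filtering: sum the pixel values in a region, take their average, and replace the region's center pixel with it.

blur(src Mat, dst Mat, Size ksize, Point anchor) // ksize sets the region size; an anchor of (-1, -1) means the kernel center.

(2) Gaussian filtering also replaces the center pixel with an average over the region, but a weighted one, with weights following a 2D normal distribution.

GaussianBlur(src Mat, dst Mat, Size ksize, double sigmaX, double sigmaY) // sigmaX, sigmaY: standard deviation of the Gaussian in x and y

(3) Median filtering: both filters above are sensitive to extreme values in the region, which can spoil the result. The median filter replaces the center pixel with the median of the region, which works well against salt-and-pepper noise.

medianBlur(src Mat, dst Mat, int ksize) // ksize is the side length of the square aperture (odd and greater than 1)

(4) Bilateral filtering: the filters above also blur contours. Pixels whose values differ sharply from their neighbors usually mark a contour, so the bilateral filter gives very little weight to neighbors whose values differ strongly from the center pixel; they barely contribute, and the contours are preserved.

bilateralFilter(src Mat, dst Mat, int d, double sigmaColor, double sigmaSpace) // d is the neighborhood diameter; sigmaColor controls how large a value difference still gets smoothed; sigmaSpace is the standard deviation of the spatial Gaussian, and when d <= 0 the neighborhood size is computed from it.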
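The difference between mean filtering (1) and median filtering (3) shows up on a single 3x3 window that contains one salt pixel. A sketch with hypothetical helpers window_mean and window_median; the mean is truncated to an integer here, whereas blur rounds:

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <numeric>

// Mean of a 3x3 window: pulled toward any outlier.
int window_mean(const std::array<int, 9>& w)
{
    return std::accumulate(w.begin(), w.end(), 0) / 9;  // integer (truncated) mean
}

// Median of a 3x3 window: the 5th smallest of 9 values, unaffected by one outlier.
int window_median(std::array<int, 9> w)  // taken by value so it can be reordered locally
{
    std::nth_element(w.begin(), w.begin() + 4, w.end());
    return w[4];
}
```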

#include <cstdio>

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int main()

{

Mat src;

src = imread("1.jpg", 1);

if (src.empty())

{

printf("cannot load!!\n");

return -1;

}

namedWindow("原图");

imshow("原图", src);

Mat dst,dst1;

blur(src, dst, Size(3, 3), Point(-1, -1));

namedWindow("均值滤波");

imshow("均值滤波", dst);

GaussianBlur(src, dst, Size(5, 5), 5, 5);

namedWindow("高斯滤波");

imshow("高斯滤波", dst);

medianBlur(src, dst, 5);

namedWindow("中值滤波");

imshow("中值滤波", dst);

bilateralFilter(src, dst, 5, 100, 3);

namedWindow("双边滤波");

imshow("双边滤波", dst);

waitKey(0);

return 0;

}

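Contours

findContours

Mat grayImage; // input: the preprocessed binary image

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

Mat showImage = Mat::zeros(grayImage.size(), CV_32SC1); // 32-bit marker image (e.g. for watershed)

Mat showedge = Mat::zeros(grayImage.size(), CV_8UC1);

findContours(grayImage, contours, hierarchy, RETR_TREE, CHAIN_APPROX_SIMPLE, Point()); // the last argument is an offset added to every contour point; Point(-1, -1) would shift all contours by one pixel

drawContours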

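drawContours(image to draw on, vector of all contours, index of the contour to draw, color/fill value, line thickness);

for (size_t i = 0; i < contours.size(); i++)

{

// static_cast<int>(i + 1) gives each region its own marker value (1, 2, 3, ...), which watershed needs to tell the regions apart

drawContours(showImage, contours, static_cast<int>(i), Scalar::all(static_cast<int>(i + 1)), 2); // draw contour number i

}

boundingRect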
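boundingRect returns the smallest upright rectangle that contains all of a contour's points. A sketch with hypothetical Pt and Box types, using the pixel-inclusive convention (width = max - min + 1) that, as far as we know, cv::boundingRect uses for integer points:

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Pt { int x, y; };
struct Box { int x, y, width, height; };

Box bounding_box(const std::vector<Pt>& pts)
{
    assert(!pts.empty());
    int minx = pts[0].x, maxx = pts[0].x, miny = pts[0].y, maxy = pts[0].y;
    for (const Pt& p : pts) {
        minx = std::min(minx, p.x); maxx = std::max(maxx, p.x);
        miny = std::min(miny, p.y); maxy = std::max(maxy, p.y);
    }
    return {minx, miny, maxx - minx + 1, maxy - miny + 1};  // a single point gives a 1x1 box
}
```

minAreaRect, by contrast, may rotate the rectangle to reduce its area, which is why it returns a RotatedRect with an angle.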

// Upright bounding rectangle: boundingRect

Rect rect = boundingRect(Mat(contours[i]));

rectangle(edge_dst_, rect, Scalar(theRNG().uniform(0, 255), theRNG().uniform(0, 255), theRNG().uniform(0, 255)), 3);

minAreaRect

// Minimum-area (rotated) bounding rectangle

RotatedRect box = minAreaRect(Mat(contours[i])); // minimum-area bounding rectangle of each contour

Point2f rect[4];

box.points(rect); // copy the four corner points of the rectangle into rect

float angle = box.angle;

for (int j = 0; j<4; j++)

{

line(edge_dst_, rect[j], rect[(j + 1) % 4], Scalar(1), 2, 8); // draw each side of the rectangle

}

Contour subroutine

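#include <cstdlib>

#include <iostream>

#include <vector>

#include <opencv2/opencv.hpp>

using namespace cv;

using namespace std;

void sear_contours(Mat src, Mat& dst) // dst by reference so the caller receives the drawing

{

// Convert to grayscale (src is expected to be a 3-channel image that is already binarized; findContours needs a binary input)

cvtColor(src, src, COLOR_BGR2GRAY);

// Find: findContours

vector<vector<Point>> contours;

vector<Vec4i> hierarchy;

findContours(src, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE); // RETR_CCOMP == 2, CHAIN_APPROX_SIMPLE == 2

// Draw: drawContours

dst = Mat::zeros(src.size(), CV_8UC3);

for (size_t i = 0; i < contours.size(); i++)

{

drawContours(dst, contours, (int)i, Scalar(255, 255, 255), FILLED, LINE_8, hierarchy);

drawContours(dst, contours, (int)i, Scalar(0, 0, 0), 10, LINE_8, hierarchy); // a thick black outline shrinks each region slightly, so the boundary does not affect detection

}

// Rectangles: minimum-area bounding rectangle, minAreaRect

for (size_t i = 0; i < contours.size(); i++)

{

RotatedRect box = minAreaRect(Mat(contours[i])); // minimum-area bounding rectangle of each contour

Point2f rect[4];

box.points(rect); // copy the four corner points into rect

float angle = box.angle;

for (int j = 0; j<4; j++)

{

line(dst, rect[j], rect[(j + 1) % 4], Scalar(rand() % 255, rand() % 255, rand() % 255), 2, 8); // draw each side of the rectangle

}

}

// Rectangles: upright bounding rectangle, boundingRect

for (size_t i = 0; i < contours.size(); i++)

{

Rect rect = boundingRect(Mat(contours[i]));

rectangle(dst, rect, Scalar(theRNG().uniform(0, 255), theRNG().uniform(0, 255), theRNG().uniform(0, 255)), 3);

}

}

// Image enhancement: Gaussian smoothing + gamma transform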

// Gaussian smoothing

cv::GaussianBlur(dst, dst, cv::Size(3, 3), 0, 0);

// Image enhancement: gamma transform (each channel value is cubed, i.e. gamma = 3, then normalized back to 0-255)

Mat imageGamma(dst.size(), CV_32FC3);

for (int i = 0; i < dst.rows; i++)

{

for (int j = 0; j < dst.cols; j++)

{

imageGamma.at<Vec3f>(i, j)[0] = (dst.at<Vec3b>(i, j)[0])*(dst.at<Vec3b>(i, j)[0])*(dst.at<Vec3b>(i, j)[0]);

imageGamma.at<Vec3f>(i, j)[1] = (dst.at<Vec3b>(i, j)[1])*(dst.at<Vec3b>(i, j)[1])*(dst.at<Vec3b>(i, j)[1]);

imageGamma.at<Vec3f>(i, j)[2] = (dst.at<Vec3b>(i, j)[2])*(dst.at<Vec3b>(i, j)[2])*(dst.at<Vec3b>(i, j)[2]);

}

}

// Normalize to 0-255

normalize(imageGamma, imageGamma, 0, 255, NORM_MINMAX);

// Convert to an 8-bit image for display

convertScaleAbs(imageGamma, imageGamma);

//imshow("原图", image);

//imshow("伽马变换图像增强效果", imageGamma);

dst = imageGamma;

// Image enhancement: high-pass + gamma transform

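// Gaussian smoothing

//cv::GaussianBlur(dst, dst, cv::Size(3, 3), 0, 0);

Mat temp;

GaussianBlur(dst, temp, Size(3, 3), 1.6, 1.6);

int r = 3;

Mat diff;

addWeighted(dst, 1 + r, temp, -r, 0, diff); // high-contrast retention: dst + r*(dst - temp), without clipping the negative part of (dst - temp)

dst = diff; // pass the high-pass result on to the gamma step (otherwise diff is never used)

// Image enhancement: gamma transform (gamma = 3)

Mat imageGamma(dst.size(), CV_32FC3);

for (int i = 0; i < dst.rows; i++)

{

for (int j = 0; j < dst.cols; j++)

{

imageGamma.at<Vec3f>(i, j)[0] = (dst.at<Vec3b>(i, j)[0])*(dst.at<Vec3b>(i, j)[0])*(dst.at<Vec3b>(i, j)[0]);

imageGamma.at<Vec3f>(i, j)[1] = (dst.at<Vec3b>(i, j)[1])*(dst.at<Vec3b>(i, j)[1])*(dst.at<Vec3b>(i, j)[1]);

imageGamma.at<Vec3f>(i, j)[2] = (dst.at<Vec3b>(i, j)[2])*(dst.at<Vec3b>(i, j)[2])*(dst.at<Vec3b>(i, j)[2]);

}

}

// Normalize to 0-255

normalize(imageGamma, imageGamma, 0, 255, NORM_MINMAX);

// Convert to an 8-bit image for display

convertScaleAbs(imageGamma, imageGamma);

//imshow("原图", image);

//imshow("伽马变换图像增强效果", imageGamma);

dst = imageGamma;

Ideas for image feature extraction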

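Color: white balance → homomorphic filtering → brightness normalization

Texture: differential high-pass filter → histogram equalization → texture enhancement

Moments: based on affine invariance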

Reading an image with ATL::CImage

#include <atlimage.h> // ATL::CImage

#include <iostream>

#include <tchar.h> // _T()

using namespace std;

// Read / save an image

void test()

{

ATL::CImage Image;

Image.Load(_T("条纹1.bmp"));

if (Image.IsNull())

{

cout << "Failed to load" << endl;

}

else

{

cout << "Loaded successfully" << endl;

Image.Save(_T("image1.bmp"));

cout << "Channels (int): " << Image.GetBPP() / 8 << endl;

cout << "Width (int): " << Image.GetWidth() << endl;

cout << "Height (int): " << Image.GetHeight() << endl;

cout << "Bytes per row (int): " << Image.GetPitch() << endl; // negative for bottom-up bitmaps

}

}

Converting ATL::CImage to Mat
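The conversion below walks the CImage rows with GetPitch(), which is negative for bottom-up bitmaps. The same row-copy idea on a raw buffer, assuming (as the loop below does) that the start pointer refers to the top image row, so row r starts at first_row + r * pitch; copy_rows is hypothetical:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy `height` rows of `width * bytes_per_pixel` bytes into a tightly packed
// buffer (like a continuous cv::Mat). `pitch` may be negative; row padding is dropped.
std::vector<std::uint8_t> copy_rows(const std::uint8_t* first_row, int pitch,
                                    int width, int height, int bytes_per_pixel)
{
    const int row_bytes = width * bytes_per_pixel;
    assert(row_bytes <= (pitch < 0 ? -pitch : pitch));
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(row_bytes) * height);
    for (int r = 0; r < height; ++r)
        std::memcpy(dst.data() + static_cast<std::size_t>(r) * row_bytes,
                    first_row + static_cast<std::ptrdiff_t>(r) * pitch, row_bytes);
    return dst;
}
```

Copying a whole row at once replaces the per-pixel inner loop; the channel order is kept as stored (B, G, R for 24-bit bitmaps), which matches OpenCV's default.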

// CImage to Mat

void CImage2Mat(CImage& Image, Mat& src)

{

// CImage to Mat: supports 8-bit images (copied as grayscale; the palette is ignored) and 24-bit images

if (Image.IsNull())

{

cout << "Failed to load" << endl;

//MessageBox(_T("Failed to load"));

return; // nothing to convert

}

if (1 == Image.GetBPP() / 8)

{

src.create(Image.GetHeight(), Image.GetWidth(), CV_8UC1);

}

else if (3 == Image.GetBPP() / 8)

{

src.create(Image.GetHeight(), Image.GetWidth(), CV_8UC3);

}

else

{

cout << "Unsupported bit depth: " << Image.GetBPP() << endl; // e.g. 32-bit images

return;

}

// Copy the pixel data

uchar* pucRow; // row pointer into the Mat data

uchar* pucImage = (uchar*)Image.GetBits(); // pointer into the CImage data

int nStep = Image.GetPitch(); // bytes per row; note that this value can be positive or negative

for (int nRow = 0; nRow < Image.GetHeight(); nRow++)

{

pucRow = src.ptr<uchar>(nRow);

for (int nCol = 0; nCol < Image.GetWidth(); nCol++)

{

if (1 == Image.GetBPP() / 8)

{

pucRow[nCol] = *(pucImage + nRow * nStep + nCol);

}

else if (3 == Image.GetBPP() / 8)

{

for (int nCha = 0; nCha < 3; nCha++)

{

pucRow[nCol * 3 + nCha] = *(pucImage + nRow * nStep + nCol * 3 + nCha);

}

}

}

}

}

C++ image processing: filtering

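#include <cmath>

#include <cstdlib>

#include <iostream>

#include <vector>

#include <opencv2/opencv.hpp>

using namespace cv;

using namespace std;

/*

Emphasizing edges

use a high-pass filter: Laplacian

(sharpening)

Removing noise points

use a low-pass filter: mean, median

Fixing color casts

use white balance

[The spatial and frequency domains are related: the mean filter is a low-pass filter; Laplacian filtering (edge detection) is a high-pass filter.]

*/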

class Blur_Class

{

public:

/*

Bilateral filter: smoothing that preserves texture and edges

3-channel input, 3-channel output

Shuangbian_Bialteral_Filter(src, src);

*/

void Shuangbian_Bialteral_Filter(const Mat& src, Mat& dst, int R = 10, double sigmaC = 20, double sigmaS = 20);

/*

High-contrast (high-pass) filter: similar to sharpening, also amplifies noise

3-channel input, 3-channel output

HighPass(src, dst);

*/

void HighPass(const Mat& src, Mat& dst, int r = 3, int k = 3);

/*

LOG transform: evens out the overall image brightness (strong effect)

3-channel input, 3-channel output

log_Filter(src, src);

*/

void log_Filter(const Mat& src, Mat& dst);

/*

Histogram equalization: evens out brightness to some extent and strengthens edges

1-channel or 3-channel input

junheng_equalizeHist(src, src);

*/

void junheng_equalizeHist(const Mat& src, Mat& dst);

/*

Median filter: removes many scattered, densely packed noise points

1-channel or 3-channel input

zhongzhi_Median_Filter(src, src);

*/

void zhongzhi_Median_Filter(const Mat& src, Mat& dst, int k = 10);

/*

Dilation / erosion: dilation by default

1-channel or 3-channel input

PF_Filter(src, dst);

*/

void PF_Filter(const Mat& src, Mat& dst, bool flag = true, int k = 1);

/*

Custom convolution kernel

0 -1 0

-1 5 -1

0 -1 0

filter(dst2, dst2)

*/

void filter(const Mat& src, Mat& dst, Mat kerne = (Mat_<float>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0));

public:

/* Split into R, G, B (results are returned through the reference parameters) */

void RGB_Split(const Mat& src, Mat& r, Mat& g, Mat& b, Mat& R, Mat& G, Mat& B);

/* White balance */

void baipingheng(const Mat& src, Mat& dst);

public:

/*

Lab

*/

void RGB2LAB(Mat& rgb, Mat& Lab);

/*

Color cast check

*/

float colorCheck(const Mat& imgLab);

};

/*--------------------------------------------------------------*/

/*

Bilateral filter: smoothing that preserves texture and edges

3-channel input, 3-channel output

Shuangbian_Bialteral_Filter(src, src);

*/

void Blur_Class::Shuangbian_Bialteral_Filter(const Mat& src, Mat& dst, int R, double sigmaC, double sigmaS)

{

Mat dst_; // bilateralFilter cannot run in place, so filter into a temporary first

bilateralFilter(src, dst_, R, sigmaC, sigmaS);

dst_.copyTo(dst);

}

/*

High-contrast (high-pass) filter: similar to sharpening, also amplifies noise. Result with enhanced edges = original + r*(original - blurred)

3-channel input, 3-channel output

HighPass(src, dst);

*/

void Blur_Class::HighPass(const Mat& src, Mat& dst, int r, int k)

{

Mat temp;

int n = 2 * k + 1; // odd kernel size

Size ksize = Size(n, n);

GaussianBlur(src, temp, ksize, 1.6, 1.6);

Mat diff;

addWeighted(src, 1 + r, temp, -r, 0, diff); // src + r*(src - temp), saturated to 8 bits only once at the end

diff.copyTo(dst);

}

/*

LOG transform: evens out the overall image brightness (strong effect)

3-channel input, 3-channel output

log_Filter(src, src);

*/

void Blur_Class::log_Filter(const Mat& src, Mat& dst)

{

Mat imageLog(src.size(), CV_32FC3);

for (int i = 0; i < src.rows; i++)

{

for (int j = 0; j < src.cols; j++)

{

imageLog.at<Vec3f>(i, j)[0] = log(1 + src.at<Vec3b>(i, j)[0]);

imageLog.at<Vec3f>(i, j)[1] = log(1 + src.at<Vec3b>(i, j)[1]);

imageLog.at<Vec3f>(i, j)[2] = log(1 + src.at<Vec3b>(i, j)[2]);

}

}

// Normalize to 0-255

normalize(imageLog, imageLog, 0, 255, NORM_MINMAX);

// Convert to an 8-bit image for display

convertScaleAbs(imageLog, imageLog);

imageLog.copyTo(dst);

}

/*

Histogram equalization: evens out brightness to some extent and strengthens edges

1-channel or 3-channel input

junheng_equalizeHist(src, src);

*/

void Blur_Class::junheng_equalizeHist(const Mat& src, Mat& dst)

{

if (src.channels() == 3)

{

dst.create(src.size(), src.type());

Mat imageRGB[3];

split(src, imageRGB);

for (int i = 0; i < 3; i++)

{

equalizeHist(imageRGB[i], imageRGB[i]);

}

merge(imageRGB, 3, dst);

}

else if (src.channels() == 1)

{

dst.create(src.size(), src.type());

equalizeHist(src, dst);

}

}

/*

Median filter: removes many scattered, densely packed noise points

1-channel or 3-channel input

zhongzhi_Median_Filter(src, src);

*/

void Blur_Class::zhongzhi_Median_Filter(const Mat& src, Mat& dst, int k)

{

int n = 2 * k + 1; // odd aperture size; apertures larger than 5 require an 8-bit image

dst.create(src.size(), src.type());

medianBlur(src, dst, n);

}

/*

Dilation / erosion: dilation by default

1-channel or 3-channel input

PF_Filter(src, dst);

*/

void Blur_Class::PF_Filter(const Mat& src, Mat& dst, bool flag, int k)

{

int n = 2 * k + 1;

Size Ksize = Size(n, n); // (2k+1) x (2k+1) element; Size(k, k) would give a 1x1 element (no effect) for the default k = 1

Mat element = getStructuringElement(MORPH_RECT, Ksize);

if (flag == true)

{

// Dilate

dilate(src, dst, element);

}

else

{

// Erode

erode(src, dst, element);

}

}

/*

Custom convolution kernel

0 -1 0

-1 5 -1

0 -1 0

filter(dst2, dst2)

*/

void Blur_Class::filter(const Mat& src, Mat& dst, Mat kerne)

{

//Mat kerne = (Mat_<float>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0); // build a 3x3 mask: the values are streamed into a Mat_ with << and then implicitly converted to Mat

dst.create(src.size(), src.type());

filter2D(src, dst, -1, kerne); // ddepth = -1: same depth as src (CV_8UC3 is a type, not a depth)

}

/*

RGB

split into r, g, b (single-channel images)

and build R, G, B (3-channel images that show only that channel)

*/

void Blur_Class::RGB_Split(const Mat& src, Mat& r, Mat& g, Mat& b, Mat& R, Mat& G, Mat& B)

{

Mat imageRGB[3];

split(src, imageRGB); // OpenCV stores the channels in B, G, R order

b = imageRGB[0];

g = imageRGB[1];

r = imageRGB[2];

Mat black = Mat::zeros(Size(src.cols, src.rows), CV_8UC1);

vector<Mat> channels_r;

channels_r.push_back(black);

channels_r.push_back(black);

channels_r.push_back(r);

merge(channels_r, R);

vector<Mat> channels_g;

channels_g.push_back(black);

channels_g.push_back(g);

channels_g.push_back(black);

merge(channels_g, G);

vector<Mat> channels_b;

channels_b.push_back(b);

channels_b.push_back(black);

channels_b.push_back(black);

merge(channels_b, B);

}

/*

White balance (gray-world assumption: the three channel means should be equal)

*/

void Blur_Class::baipingheng(const Mat& src, Mat& dst)

{

vector<Mat> imageRGB;

split(src, imageRGB);

// Mean of each of the original image's B, G, R channels

double R, G, B;

B = mean(imageRGB[0])[0];

G = mean(imageRGB[1])[0];

R = mean(imageRGB[2])[0];

// Gain needed for each channel

double KR, KG, KB;

KB = (R + G + B) / (3 * B);

KG = (R + G + B) / (3 * G);

KR = (R + G + B) / (3 * R);

// Scale each channel by its gain

imageRGB[0] = imageRGB[0] * KB;

imageRGB[1] = imageRGB[1] * KG;

imageRGB[2] = imageRGB[2] * KR;

// Merge the three channels

dst.create(src.size(), src.type());

merge(imageRGB, dst);

namedWindow("白平衡调整后", 0); // window title: "after white balance"

imshow("白平衡调整后", dst);

waitKey();

}

/* Convert to Lab (fixed-point approximation) */

void Blur_Class::RGB2LAB(Mat& rgb, Mat& Lab)

{

Mat XYZ(rgb.size(), rgb.type());

Mat_<Vec3b>::iterator begainRGB = rgb.begin<Vec3b>();

Mat_<Vec3b>::iterator endRGB = rgb.end<Vec3b>();

Mat_<Vec3b>::iterator begainXYZ = XYZ.begin<Vec3b>();

int shift = 22;

for (; begainRGB != endRGB; begainRGB++, begainXYZ++)

{

(*begainXYZ)[0] = ((*begainRGB)[0] * 199049 + (*begainRGB)[1] * 394494 + (*begainRGB)[2] * 455033 + 524288) >> (shift - 2);

(*begainXYZ)[1] = ((*begainRGB)[0] * 75675 + (*begainRGB)[1] * 749900 + (*begainRGB)[2] * 223002 + 524288) >> (shift - 2);

(*begainXYZ)[2] = ((*begainRGB)[0] * 915161 + (*begainRGB)[1] * 114795 + (*begainRGB)[2] * 18621 + 524288) >> (shift - 2);

}

int LabTab[1024];

for (int i = 0; i < 1024; i++)

{

if (i>9)

LabTab[i] = (int)(pow((float)i / 1020, 1.0F / 3) * (1 << shift) + 0.5);

else

LabTab[i] = (int)((29 * 29.0 * i / (6 * 6 * 3 * 1020) + 4.0 / 29) * (1 << shift) + 0.5);

}

const int ScaleLC = (int)(16 * 2.55 * (1 << shift) + 0.5);

const int ScaleLT = (int)(116 * 2.55 + 0.5);

const int HalfShiftValue = 524288;

begainXYZ = XYZ.begin<Vec3b>();

Mat_<Vec3b>::iterator endXYZ = XYZ.end<Vec3b>();

Lab.create(rgb.size(), rgb.type());

Mat_<Vec3b>::iterator begainLab = Lab.begin<Vec3b>();

for (; begainXYZ != endXYZ; begainXYZ++, begainLab++)

{

int X = LabTab[(*begainXYZ)[0]];

int Y = LabTab[(*begainXYZ)[1]];

int Z = LabTab[(*begainXYZ)[2]];

int L = ((ScaleLT * Y - ScaleLC + HalfShiftValue) >> shift);

int A = ((500 * (X - Y) + HalfShiftValue) >> shift) + 128;

int B = ((200 * (Y - Z) + HalfShiftValue) >> shift) + 128;

(*begainLab)[0] = L;

(*begainLab)[1] = A;

(*begainLab)[2] = B;

}

}

/* Color cast detection: K = D / M, the average chroma divided by the chroma spread */

float Blur_Class::colorCheck(const Mat& imgLab)

{

Mat_<Vec3b>::const_iterator begainIt = imgLab.begin<Vec3b>();

Mat_<Vec3b>::const_iterator endIt = imgLab.end<Vec3b>();

float aSum = 0;

float bSum = 0;

for (; begainIt != endIt; begainIt++)

{

aSum += (*begainIt)[1];

bSum += (*begainIt)[2];

}

int MN = imgLab.cols*imgLab.rows;

double Da = aSum / MN - 128; // must be shifted into the range [-128, 127]

double Db = bSum / MN - 128;

// Average chroma

double D = sqrt(Da*Da + Db*Db);

begainIt = imgLab.begin<Vec3b>();

double Ma = 0;

double Mb = 0;

for (; begainIt != endIt; begainIt++)

{

Ma += abs((*begainIt)[1] - 128 - Da);

Mb += abs((*begainIt)[2] - 128 - Db);

}

Ma = Ma / MN;

Mb = Mb / MN;

// Chroma center distance (spread around the mean chroma)

double M = sqrt(Ma*Ma + Mb*Mb);

// Color cast factor

float K = (float)(D / M);

cout << "K=" << K << endl;

return K;

}
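baipingheng above is a gray-world white balance: each channel is scaled so that its mean matches the average of the three channel means. A sketch of the gain computation on interleaved BGR bytes; gray_world_gains is hypothetical, and unlike the original it gives a zero-mean channel a gain of 1 to avoid dividing by zero:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// K_c = (mean_B + mean_G + mean_R) / (3 * mean_c), returned in B, G, R order.
std::array<double, 3> gray_world_gains(const std::vector<std::uint8_t>& bgr)
{
    assert(!bgr.empty() && bgr.size() % 3 == 0);
    std::array<double, 3> mean{0.0, 0.0, 0.0};
    for (std::size_t i = 0; i < bgr.size(); ++i) mean[i % 3] += bgr[i];  // channel = index mod 3
    const double n = static_cast<double>(bgr.size() / 3);
    for (double& m : mean) m /= n;
    const double avg = (mean[0] + mean[1] + mean[2]) / 3.0;
    std::array<double, 3> gain{};
    for (int c = 0; c < 3; ++c) gain[c] = mean[c] > 0 ? avg / mean[c] : 1.0;
    return gain;
}
```

Multiplying each channel by its gain (with saturation to 0..255) then gives the balanced image, as the imageRGB[c] * K lines do.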

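C++ OpenCV HSV

OpenCV's HSV ranges: for 8-bit images, H is stored in [0, 180) (the hue angle in degrees divided by 2 so it fits in one byte), while S and V are in [0, 255].

[Figure: OpenCV HSV ranges]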
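A sketch of how an RGB pixel maps onto these 8-bit ranges, computed in floating point; rgb_to_hsv8 and Hsv8 are hypothetical, and cvtColor's fixed-point implementation may differ by 1 in individual values:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Hsv8 { int h, s, v; };

// V = max(R, G, B); S = 255 * (V - min) / V; H in degrees, then halved for 8-bit storage.
Hsv8 rgb_to_hsv8(int r, int g, int b)
{
    const int v = std::max({r, g, b});
    const int mn = std::min({r, g, b});
    const double delta = v - mn;
    const double s = v == 0 ? 0.0 : 255.0 * delta / v;
    double h = 0.0;  // hue is undefined for gray pixels; use 0
    if (delta > 0) {
        if (v == r)      h = 60.0 * (g - b) / delta;
        else if (v == g) h = 120.0 + 60.0 * (b - r) / delta;
        else             h = 240.0 + 60.0 * (r - g) / delta;
        if (h < 0) h += 360.0;
    }
    int h8 = static_cast<int>(std::lround(h / 2.0));
    if (h8 >= 180) h8 -= 180;  // 360 degrees wraps around to 0
    return {h8, static_cast<int>(std::lround(s)), v};
}
```

So red sits at H = 0 (and near 180), green at 60 and blue at 120, which is why red color ranges in inRange often need two intervals.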

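C++ OpenCV bilateral filter

Reposted:

The bilateral filter is a nonlinear filter that smooths away noise while preserving edges. Like other filters it uses a weighted average: each pixel's intensity is replaced by a weighted average of the surrounding pixels' intensities, with Gaussian-based weights. The key difference is that the bilateral weights consider not only the Euclidean distance between pixels (an ordinary Gaussian low-pass filter looks only at how position affects the center pixel) but also the difference in the range domain (for example how similar a kernel pixel is to the center pixel in color, intensity or depth). Both weights are used when computing the center pixel.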

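Gaussian filter:

$$g(i,j)=\frac{\sum_{(k,l)\in S(i,j)} f(k,l)\,w_s(i,j,k,l)}{\sum_{(k,l)\in S(i,j)} w_s(i,j,k,l)}$$

Bilateral filter:

$$g(i,j)=\frac{\sum_{(k,l)\in S(i,j)} f(k,l)\,w(i,j,k,l)}{\sum_{(k,l)\in S(i,j)} w(i,j,k,l)}$$

g(i, j) is the output pixel;

S(i, j) is the (2N+1)×(2N+1) neighborhood centered at (i, j);

f(k, l) are the input pixels in that neighborhood;

w(i, j, k, l) is the value computed from the two Gaussian functions (not yet a normalized weight).

Rewriting the formula with m_1, …, m_n for the values of w(i, j, k, l) and f_1, …, f_n for the corresponding inputs f(k, l) gives

$$g(i,j)=\frac{m_1 f_1+m_2 f_2+\cdots+m_n f_n}{m_1+m_2+\cdots+m_n}$$

Let m_1 + m_2 + … + m_n = M; then

$$g(i,j)=\frac{m_1}{M}f_1+\frac{m_2}{M}f_2+\cdots+\frac{m_n}{M}f_n$$

so the normalized weights m_i / M sum to 1.

w(i, j, k, l) combines w_s, the spatial-proximity Gaussian, and w_r, the pixel-value-similarity Gaussian:

$$w_s(i,j,k,l)=\exp\left(-\frac{(i-k)^2+(j-l)^2}{2\sigma_s^2}\right)$$

$$w_r(i,j,k,l)=\exp\left(-\frac{\lVert f(i,j)-f(k,l)\rVert^2}{2\sigma_r^2}\right)$$

$$w(i,j,k,l)=w_s(i,j,k,l)\,w_r(i,j,k,l)$$

The bilateral kernel combines the spatial-domain kernel (spatial domain S) with the range-domain kernel (range domain R). In flat regions the pixel values change very little, so the range weight is close to 1 and the spatial weight dominates, which amounts to a Gaussian blur. At edges the pixel values change sharply, so the range weight becomes small for pixels on the other side of the edge; they contribute little, and the edge is preserved.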
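A one-dimensional sketch of these weights (w = w_s · w_r, normalized by their sum M), showing that a step edge survives while the same window with a huge sigma_r behaves like a Gaussian blur; bilateral_1d is hypothetical and simply skips neighbors outside the signal:

```cpp
#include <cassert>
#include <cmath>
#include <vector>

std::vector<double> bilateral_1d(const std::vector<double>& f, int radius,
                                 double sigma_s, double sigma_r)
{
    const int n = static_cast<int>(f.size());
    std::vector<double> g(f.size());
    for (int i = 0; i < n; ++i) {
        double num = 0.0, den = 0.0;
        for (int k = i - radius; k <= i + radius; ++k) {
            if (k < 0 || k >= n) continue;
            const double ws = std::exp(-double((k - i) * (k - i)) / (2 * sigma_s * sigma_s));  // closeness
            const double d = f[k] - f[i];
            const double wr = std::exp(-(d * d) / (2 * sigma_r * sigma_r));                     // similarity
            num += ws * wr * f[k];
            den += ws * wr;  // M, the sum of the weights
        }
        g[i] = num / den;  // den >= 1 because k == i contributes weight 1
    }
    return g;
}
```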


算法实现:

void bilateralFilter(src, dst, d, sigmaColor, sigmaSpace, BORDER_DEFAULT)

/*

. InputArray src: 输入图像,可以是Mat类型,图像必须是8位或浮点型单通道、三通道的图像。

. OutputArray dst: 输出图像,和原图像有相同的尺寸和类型。

. int d: (直径范围)表示在过滤过程中每个像素邻域的直径范围。如果这个值是非正数,则函数会从第五个参数sigmaSpace计算该值。

. double sigmaColor:(sigma颜色) 颜色空间过滤器的sigma值,这个参数的值月大,表明该像素邻域内有月宽广的颜色会被混合到一起,产生较大的半相等颜色区域。

. double sigmaSpace:(sigma空间) 坐标空间中滤波器的sigma值,如果该值较大,则意味着颜色相近的较远的像素将相互影响,从而使更大的区域中足够相似的颜色获取相同的颜色。当d>0时,d指定了邻域大小且与sigmaSpace五官,否则d正比于sigmaSpace.

. int borderType=BORDER_DEFAULT: 用于推断图像外部像素的某种边界模式,有默认值BORDER_DEFAULT.

*/

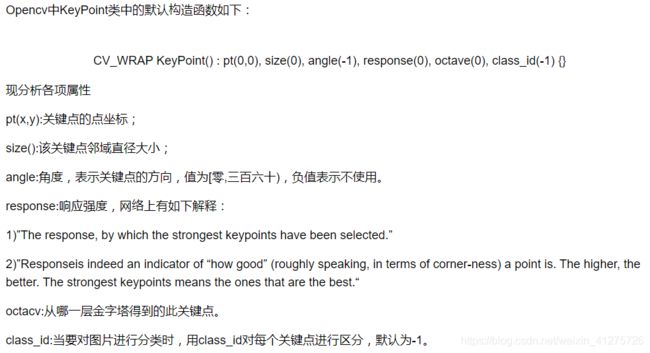

bilateralFilter(src, dst, 10, 10, 10);C++ Opencv 图像特征( Opencv3)

// 特征点

void FeatureAndCompare(cv::Mat srcImage1)

{

CV_Assert(srcImage1.data != NULL);

Mat c_src1 = srcImage1.clone();

// 转换为灰度

cv::Mat grayMat1;

cv::cvtColor(srcImage1, grayMat1, CV_RGB2GRAY);

加强

equalizeHist(grayMat1, grayMat1);

锐化

sharpenImage1(grayMat1, grayMat1);

//

cv::Ptr ptrBrisk = cv::BRISK::create();

vector kp1;

Mat des1;//descriptor

ptrBrisk->detectAndCompute(grayMat1, Mat(), kp1, des1);

Mat res1;

int drawmode = DrawMatchesFlags::DRAW_RICH_KEYPOINTS;

drawKeypoints(c_src1, kp1, res1, Scalar::all(-1), drawmode);//画出特征点

//

//std::cout << "size of description of Img1: " << kp1.size() << endl;

//

namedWindow("drawKeypoints", 0);

imshow("drawKeypoints", c_src1);

cvWaitKey(0);

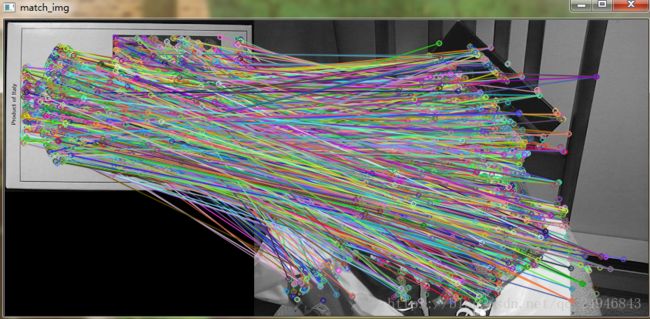

} //brisk

void brisk_feature(Mat src1, Mat src2)

{

cv::cvtColor(src1, src1, COLOR_BGR2GRAY);

cv::cvtColor(src2, src2, COLOR_BGR2GRAY);

// Enhance

equalizeHist(src1, src1);

equalizeHist(src2, src2);

// Sharpen

//sharpenImage1(src1, src1);

//sharpenImage1(src2, src2);

Ptr<BRISK> brisk = BRISK::create();

vector<KeyPoint> keypoints1, keypoints2;

Mat descriptors1, descriptors2;

brisk->detectAndCompute(src1, Mat(), keypoints1, descriptors1);

brisk->detectAndCompute(src2, Mat(), keypoints2, descriptors2);

Mat dst1,dst2;

drawKeypoints(src1, keypoints1, dst1);

drawKeypoints(src2, keypoints2, dst2);

namedWindow("output1", 0);

imshow("output1", dst1);

waitKey();

namedWindow("output2", 0);

imshow("output2", dst2);

waitKey();

BFMatcher matcher(NORM_HAMMING); // BRISK descriptors are binary, so compare them with the Hamming distance (the default NORM_L2 is meant for float descriptors)

vector<DMatch> matches;

matcher.match(descriptors1, descriptors2, matches);

Mat match_img;

drawMatches(src1, keypoints1, src2, keypoints2, matches, match_img);

namedWindow("match_img", 0);

imshow("match_img", match_img);

double minDist = 1000;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < minDist)

{

minDist = dist;

}

}

printf("min distance is:%f\n", minDist);

vector<DMatch> goodMatches;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < max(1.8*minDist, 0.02))

{

goodMatches.push_back(matches[i]);

}

}

Mat good_match_img;

drawMatches(src1, keypoints1, src2, keypoints2, goodMatches, good_match_img, Scalar::all(-1), Scalar::all(-1), vector<char>(), 2);

namedWindow("goodMatch", 0);

imshow("goodMatch", good_match_img);

waitKey(0);

}

C++ OpenCV image features: BRISK

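#include <cstdio>

#include <opencv2/opencv.hpp>

using namespace cv;

// BRISK lives in the main features2d module, so the opencv_contrib xfeatures2d namespace is not needed here

using namespace std;

int main(int argc, char** argv) {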

Mat src1 = imread("1.png",IMREAD_GRAYSCALE);

Mat src2 = imread("2.png",IMREAD_GRAYSCALE);

namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src1);

Ptr<BRISK> brisk = BRISK::create();

vector<KeyPoint> keypoints1, keypoints2;

Mat descriptors1, descriptors2;

brisk->detectAndCompute(src1, Mat(), keypoints1, descriptors1);

brisk->detectAndCompute(src2, Mat(), keypoints2, descriptors2);

/*Mat dst1;

drawKeypoints(src1, keypoints1, dst1);

imshow("output1", dst1);*/

BFMatcher matcher(NORM_HAMMING); // binary BRISK descriptors: compare them with the Hamming distance

vector<DMatch> matches;

matcher.match(descriptors1, descriptors2, matches);

Mat match_img;

drawMatches(src1, keypoints1, src2, keypoints2, matches, match_img);

imshow("match_img", match_img);

double minDist = 1000;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < minDist)

{

minDist = dist;

}

}

printf("min distance is:%f\n", minDist);

vector<DMatch> goodMatches;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < max( 1.8*minDist, 0.02))

{

goodMatches.push_back(matches[i]);

}

}

Mat good_match_img;

drawMatches(src1, keypoints1, src2, keypoints2, goodMatches, good_match_img, Scalar::all(-1), Scalar::all(-1), vector<char>(), 2);

imshow("goodMatch", good_match_img);

vector<Point2f> src1GoodPoints, src2GoodPoints;

for (int i = 0; i < goodMatches.size(); i++)

{

src1GoodPoints.push_back(keypoints1[goodMatches[i].queryIdx].pt);

src2GoodPoints.push_back(keypoints2[goodMatches[i].trainIdx].pt);

}

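// findHomography needs at least 4 point pairs and returns an empty Mat if it fails

if (goodMatches.size() < 4) { printf("not enough good matches\n"); return -1; }

Mat P = findHomography(src1GoodPoints, src2GoodPoints, RANSAC);

if (P.empty()) { printf("homography estimation failed\n"); return -1; }

vector<Point2f> src1corner(4);

vector<Point2f> src2corner(4);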

src1corner[0] = Point(0, 0);

src1corner[1] = Point(src1.cols, 0);

src1corner[2] = Point(src1.cols, src1.rows);

src1corner[3] = Point(0, src1.rows);

perspectiveTransform(src1corner, src2corner, P);

line(good_match_img, src2corner[0] + Point2f(src1.cols, 0), src2corner[1] + Point2f(src1.cols, 0), Scalar(0, 0, 255), 2);

line(good_match_img, src2corner[1] + Point2f(src1.cols, 0), src2corner[2] + Point2f(src1.cols, 0), Scalar(0, 0, 255), 2);

line(good_match_img, src2corner[2] + Point2f(src1.cols, 0), src2corner[3] + Point2f(src1.cols, 0), Scalar(0, 0, 255), 2);

line(good_match_img, src2corner[3] + Point2f(src1.cols, 0), src2corner[0] + Point2f(src1.cols, 0), Scalar(0, 0, 255), 2);

imshow("result", good_match_img);

waitKey(0);

return 0;

}
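The matching step above can be sketched without OpenCV. BRISK descriptors are 512-bit (64-byte) binary strings, so the natural distance is the Hamming distance (the number of differing bits); the sketch then applies the same max(1.8 * minDist, 0.02) filter. Descriptor, Match, hamming and match_and_filter are hypothetical names:

```cpp
#include <algorithm>
#include <bitset>
#include <cassert>
#include <cstdint>
#include <vector>

using Descriptor = std::vector<std::uint8_t>;
struct Match { int query, train, distance; };

int hamming(const Descriptor& a, const Descriptor& b)
{
    assert(a.size() == b.size());
    int d = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        d += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());  // differing bits in this byte
    return d;
}

// Brute force: best train descriptor for every query, then keep the "good" matches.
std::vector<Match> match_and_filter(const std::vector<Descriptor>& q, const std::vector<Descriptor>& t)
{
    std::vector<Match> all;
    for (int i = 0; i < static_cast<int>(q.size()); ++i) {
        Match best{i, -1, 1 << 30};
        for (int j = 0; j < static_cast<int>(t.size()); ++j) {
            const int d = hamming(q[i], t[j]);
            if (d < best.distance) best = {i, j, d};
        }
        if (best.train >= 0) all.push_back(best);
    }
    int min_dist = 1 << 30;
    for (const Match& m : all) min_dist = std::min(min_dist, m.distance);
    std::vector<Match> good;
    for (const Match& m : all)
        if (m.distance < std::max(1.8 * min_dist, 0.02)) good.push_back(m);
    return good;
}
```

With integer Hamming distances the 0.02 floor only matters when minDist is 0, in which case just the exact matches survive.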

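C++ OpenCV HOG + SVM

Approach:

// HOG descriptor vector

std::vector<float> descriptors;

cv::HOGDescriptor hog(cv::Size(48, 48), cv::Size(16, 16), cv::Size(8, 8), cv::Size(8, 8), 9); // window 48x48, block 16x16, block stride 8x8, cell 8x8, 9 orientation bins

hog.compute(src, descriptors, cv::Size(8, 8)); // window stride 8x8

int DescriptorDim = descriptors.size(); // 900 for a single 48x48 window with these settings (25 blocks x 4 cells x 9 bins)

// SVM samples + labels

int num; // number of training samples (set this before use)

Mat sampleFeatureMat = cv::Mat::zeros(num, DescriptorDim, CV_32FC1);

int i, j; // i: sample index, j: feature index

sampleFeatureMat.at<float>(i, j) = descriptors[j];

Mat sampleLabelMat = cv::Mat::zeros(num, 1, CV_32SC1); // one integer label per sample

int label;

sampleLabelMat.at<int>(i, 0) = label;

C++ OpenCV color, texture, shape + SVM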

Main function

#include <cstdlib>

#include <iostream>

#include <string>

#include <vector>

#include <opencv2/opencv.hpp>

#include "Water_Cut.h"

#include "Feature.h"

using namespace cv;

using namespace std;

using namespace cv::ml;

Feature feature_class;

int main()

{

// Training (disable the test block below while training)

#if 0

// Iterate over the images to extract features in a loop

string train_img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\训练";

string train_img_namehead = "test";

string train_img_type = "bmp";

//size_t train_img_num = 4;

string img_name = train_img_dir + "\\" + "*." + train_img_type; //cout << img_name << endl;

int train_img_num = feature_class.read_images_in_folder(img_name);

cout << "Number of training images: " << train_img_num << endl;

// Training inputs and labels

Mat trainmat;

trainmat = cv::Mat::zeros(train_img_num, 32, CV_32FC1);

Mat labelmat;

labelmat = cv::Mat::zeros(train_img_num, 1, CV_32SC1);

// Iterate over the images and extract features

vector<Mat> train_img = feature_class.data_search(train_img_dir, train_img_namehead, train_img_type, train_img_num);

for (size_t i = 0; i < train_img_num; i++)

{

resize(train_img[i], train_img[i], Size(train_img[i].cols / 2, train_img[i].rows / 2));

namedWindow("vetmat", 0);

imshow("vetmat", train_img[i]);//train_img[i].clone();

waitKey(0);

// Image segmentation

Mat src = train_img[i].clone();

Mat dst = Mat::zeros(train_img[i].size(), CV_8UC3);

Mat edge = Mat::zeros(train_img[i].size(), CV_8UC3);

Water_Cut(src, dst, edge);

// Image feature: Hu moments

Mat hu_dst = dst.clone();

double Hu[7] = { 0 };

feature_class.feature_hu(hu_dst, Hu);

// Image feature: color moments

Mat color_dst = dst.clone();

float Mom[9] = { 0 };

feature_class.feature_color(color_dst, Mom);

// Image feature: GLCM

Mat glcm_dst = dst.clone();

cv::cvtColor(glcm_dst, glcm_dst, COLOR_BGR2GRAY);

float glcm_data[16] = { 0 };

feature_class.feature_glcm(glcm_dst, glcm_data);

float train_data[32] = { 0 };

for (size_t j = 0; j < 7; j++)

{

train_data[j] = (float)Hu[j];

}

for (size_t j = 0; j < 9; j++)

{

train_data[7 + j] = (float)Mom[j];

}

for (size_t j = 0; j < 16; j++)

{

train_data[16 + j] = (float)glcm_data[j];

}

vector<float> traindata; // feature values of one class (one image)

for (size_t k = 0; k < 32; k++)

{

traindata.push_back(train_data[k]);

}

std::cout << "traindata size:";

std::cout << traindata.size() << endl;

for (size_t j = 0; j < traindata.size(); j++)

{

trainmat.at<float>(i, j) = traindata[j];

}

labelmat.at<int>(i, 0) = i + 1; // each image is its own class

}

// Initialize the training

Ptr<SVM> svm = SVM::create();

svm->setType(SVM::C_SVC);

svm->setKernel(SVM::LINEAR);

svm->setTermCriteria(TermCriteria(TermCriteria::MAX_ITER, 100, 1e-6));

std::cout << "Training started" << endl;

svm->train(trainmat, ROW_SAMPLE, labelmat);

std::cout << "Training finished" << endl;

svm->save("svm.xml");

#endif

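// Testing (disable the training block above while testing)

#if 1

// Iterate over the test files

// Iterate over the images to extract features in a loop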

//string test_img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\测试\\方格1号";

//string test_img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\测试\\花纹2号";

//string test_img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\测试\\空纹理3号";

//string test_img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\测试\\条纹4号";

string test_img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\测试\\";

string test_img_namehead = "test";

string test_img_type = "bmp";

string img_name = test_img_dir + "\\" + "*." + test_img_type; //cout << img_name << endl;

int test_img_num = feature_class.read_images_in_folder(img_name);

std::cout << "测试图个数:" << test_img_num << endl;

// 训练用的输入和标签

Mat testmat;

testmat = cv::Mat::zeros(test_img_num, 32, CV_32F);

// 遍历图并提取特征

vector test_img = feature_class.data_search(test_img_dir, test_img_namehead, test_img_type, test_img_num);

for (size_t i = 0; i < test_img_num; i++)

{

resize(test_img[i], test_img[i], Size(test_img[i].cols / 2, test_img[i].rows / 2));

cv::namedWindow("vetmat", 0);

cv::imshow("vetmat", test_img[i]);//test_img[i].clone();

// 图像分割

Mat src = test_img[i].clone();

Mat dst = Mat::zeros(test_img[i].size(), CV_8UC3);

Mat edge = Mat::zeros(test_img[i].size(), CV_8UC3);

Water_Cut(src, dst, edge);

// 图像特征_HU

Mat hu_dst = dst.clone();

double Hu[7] = { 0 };

feature_class.feature_hu(hu_dst, Hu);

// 图像特征_COLOR

Mat color_dst = dst.clone();

float Mom[9] = { 0 };

feature_class.feature_color(color_dst, Mom);

// 图像特征_GLCM

Mat glcm_dst = dst.clone();

cv::cvtColor(glcm_dst, glcm_dst, CV_RGB2GRAY);

float glcm_data[16] = { 0 };

feature_class.feature_glcm(glcm_dst, glcm_data);

cv::waitKey();

float test_data[32] = { 0 };

for (size_t j = 0; j < 7; j++)

{

test_data[j] = (float)Hu[j];

}

for (size_t j = 0; j < 9; j++)

{

test_data[7 + j] = (float)Mom[j];

}

for (size_t j = 0; j < 16; j++)

{

test_data[16 + j] = (float)glcm_data[j];

}

vector testdata; // 特征值——一类(一张图)的特征

for (size_t k = 0; k < 32; k++)

{

testdata.push_back(test_data[k]);

}

std::cout << "testdata size:";

std::cout << testdata.size() << endl;

for (size_t j = 0; j < testdata.size(); j++)

{

testmat.at(i, j) = testdata[j];

}

}

Ptr svmtest = Algorithm::load("svm.xml"); // SVM::load()是一个静态函数,不能单独用

Mat result;

float temp = svmtest->predict(testmat, result);

std::cout << "分类结果" << endl;

std::cout << result << endl;

for (size_t i = 0; i < test_img_num; i++)

{

int a = result.at(i, 0).x;

std::cout << "最终分类为:" << "第" << a << "号瓷砖" << endl;

}

#endif

    system("pause");
    return 0;
}

Subroutines

#include <opencv2/opencv.hpp>
#include <opencv2/ml.hpp>
#include <cmath>
#include <iostream>
#include <sstream>
#include <string>
#include <vector>
#include "time.h"

using namespace cv;
using namespace std;
using namespace cv::ml;

class Feature
{
public:
    /*
    Usage

    Step 1: create the class (this file, with the includes and using-directives above).

    Step 2: instantiate it:
        Feature feature_class;

    Step 3: extract color + shape + texture features from the segmented BGR image dst:
        // Image feature: Hu moments (shape)
        Mat hu_dst = dst.clone();
        double Hu[7] = { 0 };
        feature_class.feature_hu(hu_dst, Hu);

        // Image feature: color moments
        Mat color_dst = dst.clone();
        float Mom[9] = { 0 };
        feature_class.feature_color(color_dst, Mom);

        // Image feature: GLCM (texture)
        Mat glcm_dst = dst.clone();
        cv::cvtColor(glcm_dst, glcm_dst, cv::COLOR_BGR2GRAY);
        float glcm_data[16] = { 0 };
        feature_class.feature_glcm(glcm_dst, glcm_data);

    Step 4: concatenate the 7 + 9 + 16 = 32 features:
        float test_data[32] = { 0 };
        for (size_t j = 0; j < 7; j++)  test_data[j] = (float)Hu[j];
        for (size_t j = 0; j < 9; j++)  test_data[7 + j] = Mom[j];
        for (size_t j = 0; j < 16; j++) test_data[16 + j] = glcm_data[j];
    */

    /* [Color] */
    // Color: third-order moment of one 8-bit channel
    double calc3orderMom(Mat &channel)
    {
        uchar *p;
        double mom = 0;
        double m = mean(channel)[0]; // mean of the single-channel image
        int nRows = channel.rows;
        int nCols = channel.cols;
        if (channel.isContinuous()) // continuous storage: scan the image as one long row (faster)
        {
            nCols *= nRows;
            nRows = 1;
        }
        for (int i = 0; i < nRows; i++) // sum of cubed deviations
        {
            p = channel.ptr<uchar>(i);
            for (int j = 0; j < nCols; j++)
                mom += pow((p[j] - m), 3);
        }
        // Cube root of the mean cubed deviation (keeps the sign)
        float temp = cv::cubeRoot((float)(mom / (nRows * nCols)));
        return (double)temp;
    }

    // Color: 9 color moments = 1st, 2nd and 3rd moment of each of the 3 channels.
    // feature_color() passes an HSV image, so b/g/r below actually hold H/S/V.
    // The caller owns the returned array and must delete[] it.
    double *colorMom(Mat &img)
    {
        double *Mom = new double[9]; // the 9 color moments
        if (img.channels() != 3)
        {
            std::cout << "Error, input image must be a color image" << endl;
            for (int i = 0; i < 9; i++)
                Mom[i] = 0;
            return Mom;
        }
        vector<Mat> channels;
        split(img, channels);
        Mat &b = channels[0], &g = channels[1], &r = channels[2];
        //cv::imshow("b", channels[0]);
        //cv::imshow("g", channels[1]);
        //cv::imshow("r", channels[2]);
        //waitKey(0);
        Mat tmp_m, tmp_sd; // meanStdDev() outputs CV_64F
        // Moments of channel 0
        meanStdDev(b, tmp_m, tmp_sd);
        Mom[0] = tmp_m.at<double>(0, 0);
        Mom[3] = tmp_sd.at<double>(0, 0);
        Mom[6] = calc3orderMom(b);
        // Moments of channel 1
        meanStdDev(g, tmp_m, tmp_sd);
        Mom[1] = tmp_m.at<double>(0, 0);
        Mom[4] = tmp_sd.at<double>(0, 0);
        Mom[7] = calc3orderMom(g);
        // Moments of channel 2
        meanStdDev(r, tmp_m, tmp_sd);
        Mom[2] = tmp_m.at<double>(0, 0);
        Mom[5] = tmp_sd.at<double>(0, 0);
        Mom[8] = calc3orderMom(r);
        return Mom; // [means, standard deviations, third moments]
    }

    // Color: convert the BGR image to HSV and return its 9 color moments in Mom
    bool feature_color(Mat src, float Mom[9])
    {
        if (src.channels() == 3)
        {
            Mat color_dst = src.clone();
            cv::cvtColor(color_dst, color_dst, cv::COLOR_BGR2HSV); // imread() returns BGR
            double *MOM = colorMom(color_dst);
            for (int i = 0; i < 9; i++)
            {
                std::cout << (float)MOM[i] << endl;
                Mom[i] = (float)MOM[i];
            }
            delete[] MOM; // colorMom() allocates with new[]
            return true;
        }
        else
        {
            std::cout << "channels != 3" << endl;
            return false;
        }
    }

    /* [Shape] */
    // Shape: Hu moments of the Canny edge map (accepts 3-channel BGR or 1-channel gray input)
    bool feature_hu(Mat src, double Hu[7])
    {
        Mat hu_dst;
        if (src.channels() == 3)
            cv::cvtColor(src, hu_dst, cv::COLOR_BGR2GRAY);
        else if (src.channels() == 1)
            hu_dst = src.clone();
        else
            return false;
        Canny(hu_dst, hu_dst, 0, 120);
        Moments mo = moments(hu_dst); // spatial and central moments
        HuMoments(mo, Hu);            // the 7 Hu invariants
        for (int i = 0; i < 7; i++)
        {
            std::cout << (float)Hu[i] << endl;
        }
        return true;
    }

    /* [Texture] */
    // Number of quantized gray levels: 8-bit values are divided by 16, giving levels 0..15 and a 16x16 GLCM
    const int gray_level = 16;
    vector<float> glamvalue; // global (member) variable, not used by the functions below

    // Step 1: compute the gray-level co-occurrence matrix (GLCM)
    void getglcm_0(Mat& input, Mat& dst) // 0-degree GLCM: (row i, col j) paired with (row i, col j + 1)
    {
        CV_Assert(1 == input.channels());
        Mat src;
        input.convertTo(src, CV_32S);
        int height = src.rows;
        int width = src.cols;
        int max_gray_level = 0;
        for (int j = 0; j < height; j++) // find the maximum gray value
        {
            int* srcdata = src.ptr<int>(j);
            for (int i = 0; i < width; i++)
                if (srcdata[i] > max_gray_level)
                    max_gray_level = srcdata[i];
        }
        max_gray_level++; // number of gray levels = maximum value + 1
        int levels = max_gray_level; // 16 levels or fewer: use them as they are
        if (max_gray_level > 16) // more than 16 levels: quantize to 16 to keep the GLCM small
        {
            for (int i = 0; i < height; i++)
            {
                int* srcdata = src.ptr<int>(i);
                for (int j = 0; j < width; j++)
                    srcdata[j] = srcdata[j] / gray_level;
            }
            levels = gray_level;
        }
        dst.create(levels, levels, CV_32SC1);
        dst = Scalar::all(0);
        for (int i = 0; i < height; i++)
        {
            int* srcdata = src.ptr<int>(i);
            for (int j = 0; j < width - 1; j++)
                dst.ptr<int>(srcdata[j])[srcdata[j + 1]]++;
        }
    }

    void getglcm_45(Mat& input, Mat& dst) // 45-degree GLCM: (row i, col j) paired with (row i + 1, col j + 1)
    {
        CV_Assert(1 == input.channels());
        Mat src;
        input.convertTo(src, CV_32S);
        int height = src.rows;
        int width = src.cols;
        int max_gray_level = 0;
        for (int j = 0; j < height; j++) // find the maximum gray value
        {
            int* srcdata = src.ptr<int>(j);
            for (int i = 0; i < width; i++)
                if (srcdata[i] > max_gray_level)
                    max_gray_level = srcdata[i];
        }
        max_gray_level++;
        int levels = max_gray_level;
        if (max_gray_level > 16) // quantize to 16 levels to keep the GLCM small
        {
            for (int i = 0; i < height; i++)
            {
                int* srcdata = src.ptr<int>(i);
                for (int j = 0; j < width; j++)
                    srcdata[j] = srcdata[j] / gray_level;
            }
            levels = gray_level;
        }
        dst.create(levels, levels, CV_32SC1);
        dst = Scalar::all(0);
        for (int i = 0; i < height - 1; i++)
        {
            int* srcdata = src.ptr<int>(i);
            int* srcdata1 = src.ptr<int>(i + 1);
            for (int j = 0; j < width - 1; j++)
                dst.ptr<int>(srcdata[j])[srcdata1[j + 1]]++;
        }
    }

    void getglcm_90(Mat& input, Mat& dst) // 90-degree GLCM: (row i, col j) paired with (row i + 1, col j)
    {
        CV_Assert(1 == input.channels());
        Mat src;
        input.convertTo(src, CV_32S);
        int height = src.rows;
        int width = src.cols;
        int max_gray_level = 0;
        for (int j = 0; j < height; j++) // find the maximum gray value
        {
            int* srcdata = src.ptr<int>(j);
            for (int i = 0; i < width; i++)
                if (srcdata[i] > max_gray_level)
                    max_gray_level = srcdata[i];
        }
        max_gray_level++;
        int levels = max_gray_level;
        if (max_gray_level > 16) // quantize to 16 levels to keep the GLCM small
        {
            for (int i = 0; i < height; i++)
            {
                int* srcdata = src.ptr<int>(i);
                for (int j = 0; j < width; j++)
                    srcdata[j] = srcdata[j] / gray_level;
            }
            levels = gray_level;
        }
        dst.create(levels, levels, CV_32SC1);
        dst = Scalar::all(0);
        for (int i = 0; i < height - 1; i++)
        {
            int* srcdata = src.ptr<int>(i);
            int* srcdata1 = src.ptr<int>(i + 1);
            for (int j = 0; j < width; j++)
                dst.ptr<int>(srcdata[j])[srcdata1[j]]++;
        }
    }

    void getglcm_135(Mat& input, Mat& dst) // 135-degree GLCM: (row i, col j) paired with (row i + 1, col j - 1)
    {
        CV_Assert(1 == input.channels());
        Mat src;
        input.convertTo(src, CV_32S);
        int height = src.rows;
        int width = src.cols;
        int max_gray_level = 0;
        for (int j = 0; j < height; j++) // find the maximum gray value
        {
            int* srcdata = src.ptr<int>(j);
            for (int i = 0; i < width; i++)
                if (srcdata[i] > max_gray_level)
                    max_gray_level = srcdata[i];
        }
        max_gray_level++;
        int levels = max_gray_level;
        if (max_gray_level > 16) // quantize to 16 levels to keep the GLCM small
        {
            for (int i = 0; i < height; i++)
            {
                int* srcdata = src.ptr<int>(i);
                for (int j = 0; j < width; j++)
                    srcdata[j] = srcdata[j] / gray_level;
            }
            levels = gray_level;
        }
        dst.create(levels, levels, CV_32SC1);
        dst = Scalar::all(0);
        for (int i = 0; i < height - 1; i++)
        {
            int* srcdata = src.ptr<int>(i);
            int* srcdata1 = src.ptr<int>(i + 1);
            for (int j = 1; j < width; j++)
                dst.ptr<int>(srcdata[j])[srcdata1[j - 1]]++;
        }
    }

    // Step 2: texture statistics of a GLCM: ASM (energy), contrast, entropy, inverse difference moment
    void feature_computer(Mat& src, float& Asm, float& Con, float& Ent, float& Idm)
    {
        int height = src.rows;
        int width = src.cols;
        int total = 0;
        for (int i = 0; i < height; i++)
        {
            int* srcdata = src.ptr<int>(i);
            for (int j = 0; j < width; j++)
                total += srcdata[j]; // sum of all GLCM entries (number of pixel pairs)
        }
        if (total == 0) // empty GLCM (image too small): leave the outputs unchanged
            return;
        Mat copy;
        copy.create(height, width, CV_32FC1); // float storage, matching ptr<float> below
        for (int i = 0; i < height; i++)
        {
            int* srcdata = src.ptr<int>(i);
            float* copydata = copy.ptr<float>(i);
            for (int j = 0; j < width; j++)
                copydata[j] = (float)srcdata[j] / (float)total; // normalize each entry to a probability
        }
        for (int i = 0; i < height; i++)
        {
            float* srcdata = copy.ptr<float>(i);
            for (int j = 0; j < width; j++)
            {
                Asm += srcdata[j] * srcdata[j]; // energy (ASM)
                if (srcdata[j] > 0)
                    Ent -= srcdata[j] * log(srcdata[j]); // entropy
                Con += (float)(i - j) * (float)(i - j) * srcdata[j]; // contrast
                Idm += srcdata[j] / (1 + (float)(i - j) * (float)(i - j)); // inverse difference moment
            }
        }
    }

    // Steps 1 + 2 combined: 16 GLCM features = {ASM, contrast, entropy, IDM} x {0, 45, 90, 135 degrees}
    /*
    Mat src_gray;
    float data[16] = {0};
    */
    void feature_glcm(Mat src_gray, float data[16])
    {
        Mat dst_0, dst_90, dst_45, dst_135;
        getglcm_0(src_gray, dst_0);
        float asm_0 = 0, con_0 = 0, ent_0 = 0, idm_0 = 0;
        feature_computer(dst_0, asm_0, con_0, ent_0, idm_0);
        getglcm_45(src_gray, dst_45);
        float asm_45 = 0, con_45 = 0, ent_45 = 0, idm_45 = 0;
        feature_computer(dst_45, asm_45, con_45, ent_45, idm_45);
        getglcm_90(src_gray, dst_90);
        float asm_90 = 0, con_90 = 0, ent_90 = 0, idm_90 = 0;
        feature_computer(dst_90, asm_90, con_90, ent_90, idm_90);
        getglcm_135(src_gray, dst_135);
        float asm_135 = 0, con_135 = 0, ent_135 = 0, idm_135 = 0;
        feature_computer(dst_135, asm_135, con_135, ent_135, idm_135);
        float glcm_data[16] = {
            asm_0, asm_45, asm_90, asm_135,
            con_0, con_45, con_90, con_135,
            ent_0, ent_45, ent_90, ent_135,
            idm_0, idm_45, idm_90, idm_135
        };
        for (size_t i = 0; i < 16; i++)
            data[i] = glcm_data[i];
    }

    // Count the files in a folder that match a pattern
    /*
    cv::String pattern = "./save/*.bmp";
    size_t count = read_images_in_folder(pattern);
    */
    size_t read_images_in_folder(cv::String pattern) // number of files matching the pattern
    {
        vector<cv::String> fn;
        glob(pattern, fn, false); // OpenCV's glob() lists the matching files (non-recursive here)
        size_t count = fn.size(); // number of matching files
        return count;
    }

    // File retrieval: loads <img_dir>\<namehead>0.<type> ... <img_dir>\<namehead>(n-1).<type>
    /*
    string train_img_dir = "C:\\Users\\Administrator\\Desktop\\样品\\训练";
    string train_img_namehead = "test";
    string train_img_type = "bmp";
    size_t train_img_num = 4;
    vector<Mat> train_img = data_search(train_img_dir, train_img_namehead, train_img_type, train_img_num);
    for (size_t i = 0; i < train_img_num; i++)
    {
        namedWindow("vetmat", 0);
        imshow("vetmat", train_img[i]);
        waitKey(0);
    }
    */
    vector<Mat> data_search(string &img_dir, string &img_namehead, string &img_type, size_t n)
    {
        vector<Mat> src;
        for (size_t i = 0; i < n; i++)
        {
            string img_name = img_dir + "\\" + img_namehead + to_string(i) + "." + img_type;
            Mat outsrc = imread(img_name);
            if (outsrc.empty())
                std::cout << "could not read " << img_name << endl;
            src.push_back(outsrc);
        }
        return src;
    }

private:

};
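The main program and the usage comment in the class fill the 32-element feature row with three hand-written loops whose offsets (0, 7 and 16) must stay in sync with the array sizes. A minimal sketch, assuming the same 7 + 9 + 16 layout, that derives the offsets from named constants instead; `pack_features` and the `k*` constants are hypothetical names, not part of the original code.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical helper (not part of the original class): packs the three feature
// groups into one 32-float row with the same offsets as the loops in main().
constexpr std::size_t kHu = 7;     // Hu moments      -> indices 0..6
constexpr std::size_t kColor = 9;  // color moments   -> indices 7..15
constexpr std::size_t kGlcm = 16;  // GLCM statistics -> indices 16..31
constexpr std::size_t kFeatureLen = kHu + kColor + kGlcm;
static_assert(kFeatureLen == 32, "row length must match the 32 columns of trainmat/testmat");

std::array<float, kFeatureLen> pack_features(const double (&hu)[kHu],
                                             const float (&mom)[kColor],
                                             const float (&glcm)[kGlcm]) {
    std::array<float, kFeatureLen> row{};
    std::size_t k = 0;
    for (double v : hu) row[k++] = static_cast<float>(v);
    for (float v : mom) row[k++] = v;
    for (float v : glcm) row[k++] = v;
    assert(k == kFeatureLen);  // every slot written exactly once
    return row;
}
```

If a feature group ever changes size, only the constant changes and the `static_assert` flags a mismatch with the 32 columns of the training matrix at compile time.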

Sub-function

#include <opencv2/opencv.hpp>
#include <climits>
#include <iostream>
#include <vector>
using namespace cv;
using namespace std;

/* Open issue: how to choose the two seed points automatically */
void Water_Cut(InputArray src, OutputArray dst, OutputArray edge)
{
    Mat srcImage;
    src.copyTo(srcImage);
    //cv::resize(srcImage, srcImage, Size(srcImage.cols / 2, srcImage.rows / 2));
    cv::namedWindow("resImage", 0);
    cv::imshow("resImage", srcImage);
    //waitKey();
    // [Markers: two seed points]
    // Seed 1 (background): a thick circle (radius 10, thickness 100, i.e. a solid blob) at the top-left corner
    Mat maskImage = Mat::zeros(srcImage.size(), CV_8UC1); // marker mask: draw the seeds here, then pass it to findContours
    Point point1(0, 0), point2(100, 10);
    //line(maskImage, point1, point2, Scalar::all(255), 5, 8, 0);
    circle(maskImage, point1, 10, Scalar::all(255), 100);
    // Seed 2 (object): the same kind of blob at the image centre
    Point point3(srcImage.cols / 2, srcImage.rows / 2), point4(srcImage.cols / 2 + 200, srcImage.rows / 2);
    //line(maskImage, point3, point4, Scalar::all(255), 5, 8, 0);
    circle(maskImage, point3, 10, Scalar::all(255), 100);
    /*namedWindow("resImage", 0);
    imshow("resImage", maskImage);
    waitKey();*/
    // [Contours of the seeds]
    vector<vector<Point>> contours;
    vector<Vec4i> hierarchy;
    findContours(maskImage, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);
    // [Watershed]
    // Argument 2: marker image maskWaterShed (CV_32S); each seed is filled with its own label 1, 2, ...
    Mat maskWaterShed = Mat::zeros(maskImage.size(), CV_32S);
    for (int index = 0; index < (int)contours.size(); index++)
        drawContours(maskWaterShed, contours, index, Scalar::all(index + 1), -1, 8, hierarchy, INT_MAX);
    /* Shown directly, maskWaterShed looks black: it only holds small labels (1, 2, ...),
       so it has to be mapped to colors or to a mask, as done below, to become visible. */
    // Argument 1: srcImage (must be CV_8UC3)
    watershed(srcImage, maskWaterShed); // afterwards maskWaterShed.at<int>(row, col) is the region label (-1 on boundaries)
    // [Random colors, one per region]
    vector<Vec3b> colorTab;
    for (int i = 0; i < (int)contours.size(); i++)
    {
        int b = theRNG().uniform(0, 255);
        int g = theRNG().uniform(0, 255);
        int r = theRNG().uniform(0, 255);
        colorTab.push_back(Vec3b((uchar)b, (uchar)g, (uchar)r));
    }
    Mat dst_ = Mat::zeros(maskWaterShed.size(), CV_8UC3);
    Mat dst_edge = Mat::zeros(maskWaterShed.size(), CV_8UC3);
    int index = maskWaterShed.at<int>(maskWaterShed.rows / 2, maskWaterShed.cols / 2); // label of the centre region
    for (int i = 0; i < maskWaterShed.rows; i++)
    {
        for (int j = 0; j < maskWaterShed.cols; j++)
        {
            if (maskWaterShed.at<int>(i, j) == index) // keep only the region with the centre label
            {
                dst_edge.at<Vec3b>(i, j) = Vec3b((uchar)255, (uchar)255, (uchar)255); // or colorTab[index - 1]
                dst_.at<Vec3b>(i, j) = srcImage.at<Vec3b>(i, j);
            }
        }
    }
    cv::namedWindow("Segmentation result", 0);
    cv::imshow("Segmentation result", dst_);
    imwrite("条纹1.bmp", dst_);
    /*Mat dst_add;
    addWeighted(dst_edge, 0.3, srcImage, 0.7, 0, dst_add);
    namedWindow("Weighted overlay", 0);
    imshow("Weighted overlay", dst_add);*/
    cv::namedWindow("Connected region", 0);
    cv::imshow("Connected region", dst_edge);
    imwrite("条纹1_.bmp", dst_edge);
    dst_.copyTo(dst);
    dst_edge.copyTo(edge);
}

C++ Opencv HU, MOM, GLCM

/*
Usage

Step 1: create the class (the functions below are the Feature class members shown as free functions;
        the includes and using-directives after this comment are required).

Step 2: instantiate it:
    Feature feature_class;

Step 3: extract color + shape + texture features from the segmented BGR image dst:
    // Image feature: Hu moments (shape)
    Mat hu_dst = dst.clone();
    double Hu[7] = { 0 };
    feature_class.feature_hu(hu_dst, Hu);

    // Image feature: color moments
    Mat color_dst = dst.clone();
    float Mom[9] = { 0 };
    feature_class.feature_color(color_dst, Mom);

    // Image feature: GLCM (texture)
    Mat glcm_dst = dst.clone();
    cv::cvtColor(glcm_dst, glcm_dst, cv::COLOR_BGR2GRAY);
    float glcm_data[16] = { 0 };
    feature_class.feature_glcm(glcm_dst, glcm_data);

Step 4: concatenate the 7 + 9 + 16 = 32 features:
    float test_data[32] = { 0 };
    for (size_t j = 0; j < 7; j++)  test_data[j] = (float)Hu[j];
    for (size_t j = 0; j < 9; j++)  test_data[7 + j] = Mom[j];
    for (size_t j = 0; j < 16; j++) test_data[16 + j] = glcm_data[j];
*/
#include <opencv2/opencv.hpp>
#include <cmath>
#include <iostream>
#include <vector>
using namespace cv;
using namespace std;

/* [Color] */
// Color: third-order moment of one 8-bit channel
double calc3orderMom(Mat &channel)
{
    uchar *p;
    double mom = 0;
    double m = mean(channel)[0]; // mean of the single-channel image
    int nRows = channel.rows;
    int nCols = channel.cols;
    if (channel.isContinuous()) // continuous storage: scan the image as one long row (faster)
    {
        nCols *= nRows;
        nRows = 1;
    }
    for (int i = 0; i < nRows; i++) // sum of cubed deviations
    {
        p = channel.ptr<uchar>(i);
        for (int j = 0; j < nCols; j++)
            mom += pow((p[j] - m), 3);
    }
    // Cube root of the mean cubed deviation (keeps the sign)
    float temp = cv::cubeRoot((float)(mom / (nRows * nCols)));
    return (double)temp;
}

// Color: 9 color moments = 1st, 2nd and 3rd moment of each of the 3 channels.
// feature_color() passes an HSV image, so b/g/r below actually hold H/S/V.
// The caller owns the returned array and must delete[] it.
double *colorMom(Mat &img)
{
    double *Mom = new double[9]; // the 9 color moments
    if (img.channels() != 3)
    {
        std::cout << "Error, input image must be a color image" << endl;
        for (int i = 0; i < 9; i++)
            Mom[i] = 0;
        return Mom;
    }
    vector<Mat> channels;
    split(img, channels);
    Mat &b = channels[0], &g = channels[1], &r = channels[2];
    //cv::imshow("b", channels[0]);
    //cv::imshow("g", channels[1]);
    //cv::imshow("r", channels[2]);
    //waitKey(0);
    Mat tmp_m, tmp_sd; // meanStdDev() outputs CV_64F
    // Moments of channel 0
    meanStdDev(b, tmp_m, tmp_sd);
    Mom[0] = tmp_m.at<double>(0, 0);
    Mom[3] = tmp_sd.at<double>(0, 0);
    Mom[6] = calc3orderMom(b);
    // Moments of channel 1
    meanStdDev(g, tmp_m, tmp_sd);
    Mom[1] = tmp_m.at<double>(0, 0);
    Mom[4] = tmp_sd.at<double>(0, 0);
    Mom[7] = calc3orderMom(g);
    // Moments of channel 2
    meanStdDev(r, tmp_m, tmp_sd);
    Mom[2] = tmp_m.at<double>(0, 0);
    Mom[5] = tmp_sd.at<double>(0, 0);
    Mom[8] = calc3orderMom(r);
    return Mom; // [means, standard deviations, third moments]
}
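colorMom() and calc3orderMom() combine cv::meanStdDev() with a cube root of the mean cubed deviation. A plain C++ sketch of the same three moments for one channel held in a std::vector, so the definitions can be checked without OpenCV; `ChannelMoments` and `channel_moments` are hypothetical names, std::cbrt stands in for cv::cubeRoot(), and the standard deviation divides by N as cv::meanStdDev() does.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// The three color moments of one 8-bit channel.
struct ChannelMoments {
    double mean;    // 1st moment
    double stddev;  // 2nd moment: population standard deviation (divides by N)
    double third;   // 3rd moment: cube root of the mean cubed deviation
};

ChannelMoments channel_moments(const std::vector<unsigned char>& pixels) {
    assert(!pixels.empty());
    const double n = static_cast<double>(pixels.size());
    double sum = 0.0;
    for (unsigned char p : pixels) sum += p;
    const double mean = sum / n;

    double sq = 0.0, cube = 0.0;
    for (unsigned char p : pixels) {
        const double d = p - mean;
        sq += d * d;
        cube += d * d * d;
    }
    // std::cbrt keeps the sign, so a channel skewed towards dark values gives a negative third moment.
    return {mean, std::sqrt(sq / n), std::cbrt(cube / n)};
}
```

For the pixels {0, 0, 0, 8} this yields mean 2, standard deviation sqrt(12) and third moment cbrt(48); reversing the skew to {8, 8, 8, 0} flips the sign of the third moment.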

// Color: convert the BGR image to HSV and return its 9 color moments in Mom
bool feature_color(Mat src, float Mom[9])
{
    if (src.channels() == 3)
    {
        Mat color_dst = src.clone();
        cv::cvtColor(color_dst, color_dst, cv::COLOR_BGR2HSV); // imread() returns BGR
        double *MOM = colorMom(color_dst);
        for (int i = 0; i < 9; i++)
        {
            std::cout << (float)MOM[i] << endl;
            Mom[i] = (float)MOM[i];
        }
        delete[] MOM; // colorMom() allocates with new[]
        return true;
    }
    else
    {
        std::cout << "channels != 3" << endl;
        return false;
    }
}

/* [Shape] */
// Shape: Hu moments of the Canny edge map (accepts 3-channel BGR or 1-channel gray input)
bool feature_hu(Mat src, double Hu[7])
{
    Mat hu_dst;
    if (src.channels() == 3)
        cv::cvtColor(src, hu_dst, cv::COLOR_BGR2GRAY);
    else if (src.channels() == 1)
        hu_dst = src.clone();
    else
        return false;
    Canny(hu_dst, hu_dst, 0, 120);
    Moments mo = moments(hu_dst); // spatial and central moments
    HuMoments(mo, Hu);            // the 7 Hu invariants
    for (int i = 0; i < 7; i++)
    {
        std::cout << (float)Hu[i] << endl;
    }
    return true;
}
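feature_hu() delegates the actual computation to cv::moments() and cv::HuMoments(). To show where the invariance comes from, here is a plain C++ sketch of only the first two of the seven Hu invariants, built from the centroid, the second-order central moments and the scale normalization eta_pq = mu_pq / mu00^(1 + (p+q)/2). `Image`, `Hu12` and `hu_first_two` are hypothetical names; this is an illustration, not OpenCV's implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Image stored row-major as img[y][x]; pixel values act as weights.
using Image = std::vector<std::vector<double>>;

struct Hu12 {
    double phi1;  // eta20 + eta02
    double phi2;  // (eta20 - eta02)^2 + 4 * eta11^2
};

Hu12 hu_first_two(const Image& img) {
    // Raw moments m00, m10, m01 give the centroid.
    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    for (std::size_t y = 0; y < img.size(); ++y)
        for (std::size_t x = 0; x < img[y].size(); ++x) {
            const double v = img[y][x];
            m00 += v;
            m10 += static_cast<double>(x) * v;
            m01 += static_cast<double>(y) * v;
        }
    assert(m00 > 0.0);  // needs at least one non-zero pixel
    const double cx = m10 / m00, cy = m01 / m00;

    // Second-order central moments (translation invariant).
    double mu20 = 0.0, mu02 = 0.0, mu11 = 0.0;
    for (std::size_t y = 0; y < img.size(); ++y)
        for (std::size_t x = 0; x < img[y].size(); ++x) {
            const double v = img[y][x];
            const double dx = static_cast<double>(x) - cx;
            const double dy = static_cast<double>(y) - cy;
            mu20 += dx * dx * v;
            mu02 += dy * dy * v;
            mu11 += dx * dy * v;
        }

    // Scale normalization: for p + q = 2 the divisor is mu00^2, and mu00 == m00.
    const double norm = m00 * m00;
    const double e20 = mu20 / norm, e02 = mu02 / norm, e11 = mu11 / norm;
    return {e20 + e02, (e20 - e02) * (e20 - e02) + 4.0 * e11 * e11};
}
```

Moving a 2x2 block of ones inside the image leaves phi1 at 0.125, and a 3-pixel bar gives the same phi1 and phi2 whether it lies horizontally or vertically. Because the normalization divides by mu00, the values also depend on pixel intensity, so a 0/255 Canny map gives different numbers than a 0/1 mask.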

/* [Texture] */
// Number of quantized gray levels: 8-bit values are divided by 16, giving levels 0..15 and a 16x16 GLCM
const int gray_level = 16;
vector<float> glamvalue; // global variable, not used by the functions below

// Step 1: compute the gray-level co-occurrence matrix (GLCM)
void getglcm_0(Mat& input, Mat& dst) // 0-degree GLCM: (row i, col j) paired with (row i, col j + 1)
{
    CV_Assert(1 == input.channels());
    Mat src;
    input.convertTo(src, CV_32S);
    int height = src.rows;
    int width = src.cols;
    int max_gray_level = 0;
    for (int j = 0; j < height; j++) // find the maximum gray value
    {
        int* srcdata = src.ptr<int>(j);
        for (int i = 0; i < width; i++)
            if (srcdata[i] > max_gray_level)
                max_gray_level = srcdata[i];
    }
    max_gray_level++; // number of gray levels = maximum value + 1
    int levels = max_gray_level; // 16 levels or fewer: use them as they are
    if (max_gray_level > 16) // more than 16 levels: quantize to 16 to keep the GLCM small
    {
        for (int i = 0; i < height; i++)
        {
            int* srcdata = src.ptr<int>(i);
            for (int j = 0; j < width; j++)
                srcdata[j] = srcdata[j] / gray_level;
        }
        levels = gray_level;
    }
    dst.create(levels, levels, CV_32SC1);
    dst = Scalar::all(0);
    for (int i = 0; i < height; i++)
    {
        int* srcdata = src.ptr<int>(i);
        for (int j = 0; j < width - 1; j++)
            dst.ptr<int>(srcdata[j])[srcdata[j + 1]]++;
    }
}

void getglcm_45(Mat& input, Mat& dst) // 45-degree GLCM: (row i, col j) paired with (row i + 1, col j + 1)
{
    CV_Assert(1 == input.channels());
    Mat src;
    input.convertTo(src, CV_32S);
    int height = src.rows;
    int width = src.cols;
    int max_gray_level = 0;
    for (int j = 0; j < height; j++) // find the maximum gray value
    {
        int* srcdata = src.ptr<int>(j);
        for (int i = 0; i < width; i++)
            if (srcdata[i] > max_gray_level)
                max_gray_level = srcdata[i];
    }
    max_gray_level++;
    int levels = max_gray_level;
    if (max_gray_level > 16) // quantize to 16 levels to keep the GLCM small
    {
        for (int i = 0; i < height; i++)
        {
            int* srcdata = src.ptr<int>(i);
            for (int j = 0; j < width; j++)
                srcdata[j] = srcdata[j] / gray_level;
        }
        levels = gray_level;
    }
    dst.create(levels, levels, CV_32SC1);
    dst = Scalar::all(0);
    for (int i = 0; i < height - 1; i++)
    {
        int* srcdata = src.ptr<int>(i);
        int* srcdata1 = src.ptr<int>(i + 1);
        for (int j = 0; j < width - 1; j++)
            dst.ptr<int>(srcdata[j])[srcdata1[j + 1]]++;
    }
}

void getglcm_90(Mat& input, Mat& dst) // 90-degree GLCM: (row i, col j) paired with (row i + 1, col j)
{
    CV_Assert(1 == input.channels());
    Mat src;
    input.convertTo(src, CV_32S);
    int height = src.rows;
    int width = src.cols;
    int max_gray_level = 0;
    for (int j = 0; j < height; j++) // find the maximum gray value
    {
        int* srcdata = src.ptr<int>(j);
        for (int i = 0; i < width; i++)
            if (srcdata[i] > max_gray_level)
                max_gray_level = srcdata[i];
    }
    max_gray_level++;
    int levels = max_gray_level;
    if (max_gray_level > 16) // quantize to 16 levels to keep the GLCM small
    {
        for (int i = 0; i < height; i++)
        {
            int* srcdata = src.ptr<int>(i);
            for (int j = 0; j < width; j++)
                srcdata[j] = srcdata[j] / gray_level;
        }
        levels = gray_level;
    }
    dst.create(levels, levels, CV_32SC1);
    dst = Scalar::all(0);
    for (int i = 0; i < height - 1; i++)
    {
        int* srcdata = src.ptr<int>(i);
        int* srcdata1 = src.ptr<int>(i + 1);
        for (int j = 0; j < width; j++)
            dst.ptr<int>(srcdata[j])[srcdata1[j]]++;
    }
}

void getglcm_135(Mat& input, Mat& dst) // 135-degree GLCM: (row i, col j) paired with (row i + 1, col j - 1)
{
    CV_Assert(1 == input.channels());
    Mat src;
    input.convertTo(src, CV_32S);
    int height = src.rows;
    int width = src.cols;
    int max_gray_level = 0;
    for (int j = 0; j < height; j++) // find the maximum gray value
    {
        int* srcdata = src.ptr<int>(j);
        for (int i = 0; i < width; i++)
            if (srcdata[i] > max_gray_level)
                max_gray_level = srcdata[i];
    }
    max_gray_level++;
    int levels = max_gray_level;
    if (max_gray_level > 16) // quantize to 16 levels to keep the GLCM small
    {
        for (int i = 0; i < height; i++)
        {
            int* srcdata = src.ptr<int>(i);
            for (int j = 0; j < width; j++)
                srcdata[j] = srcdata[j] / gray_level;
        }
        levels = gray_level;
    }
    dst.create(levels, levels, CV_32SC1);
    dst = Scalar::all(0);
    for (int i = 0; i < height - 1; i++)
    {
        int* srcdata = src.ptr<int>(i);
        int* srcdata1 = src.ptr<int>(i + 1);
        for (int j = 1; j < width; j++)
            dst.ptr<int>(srcdata[j])[srcdata1[j - 1]]++;
    }
}

// Step 2: texture statistics of a GLCM: ASM (energy), contrast, entropy, inverse difference moment
void feature_computer(Mat& src, float& Asm, float& Con, float& Ent, float& Idm)
{
    int height = src.rows;
    int width = src.cols;
    int total = 0;
    for (int i = 0; i < height; i++)
    {
        int* srcdata = src.ptr<int>(i);
        for (int j = 0; j < width; j++)
            total += srcdata[j]; // sum of all GLCM entries (number of pixel pairs)
    }
    if (total == 0) // empty GLCM (image too small): leave the outputs unchanged
        return;
    Mat copy;
    copy.create(height, width, CV_32FC1); // float storage, matching ptr<float> below
    for (int i = 0; i < height; i++)
    {
        int* srcdata = src.ptr<int>(i);
        float* copydata = copy.ptr<float>(i);
        for (int j = 0; j < width; j++)
            copydata[j] = (float)srcdata[j] / (float)total; // normalize each entry to a probability
    }
    for (int i = 0; i < height; i++)
    {
        float* srcdata = copy.ptr<float>(i);
        for (int j = 0; j < width; j++)
        {
            Asm += srcdata[j] * srcdata[j]; // energy (ASM)
            if (srcdata[j] > 0)
                Ent -= srcdata[j] * log(srcdata[j]); // entropy
            Con += (float)(i - j) * (float)(i - j) * srcdata[j]; // contrast
            Idm += srcdata[j] / (1 + (float)(i - j) * (float)(i - j)); // inverse difference moment
        }
    }
}

// Steps 1 + 2 combined: 16 GLCM features = {ASM, contrast, entropy, IDM} x {0, 45, 90, 135 degrees}
/*
Mat src_gray;
float data[16] = {0};
*/
void feature_glcm(Mat src_gray, float data[16])
{
    Mat dst_0, dst_90, dst_45, dst_135;
    getglcm_0(src_gray, dst_0);
    float asm_0 = 0, con_0 = 0, ent_0 = 0, idm_0 = 0;
    feature_computer(dst_0, asm_0, con_0, ent_0, idm_0);
    getglcm_45(src_gray, dst_45);
    float asm_45 = 0, con_45 = 0, ent_45 = 0, idm_45 = 0;
    feature_computer(dst_45, asm_45, con_45, ent_45, idm_45);
    getglcm_90(src_gray, dst_90);
    float asm_90 = 0, con_90 = 0, ent_90 = 0, idm_90 = 0;
    feature_computer(dst_90, asm_90, con_90, ent_90, idm_90);
    getglcm_135(src_gray, dst_135);
    float asm_135 = 0, con_135 = 0, ent_135 = 0, idm_135 = 0;
    feature_computer(dst_135, asm_135, con_135, ent_135, idm_135);
    float glcm_data[16] = {
        asm_0, asm_45, asm_90, asm_135,
        con_0, con_45, con_90, con_135,
        ent_0, ent_45, ent_90, ent_135,
        idm_0, idm_45, idm_90, idm_135
    };
    for (size_t i = 0; i < 16; i++)
        data[i] = glcm_data[i];
}

// Count the files in a folder that match a pattern
/*
cv::String pattern = "./save/*.bmp";
size_t count = read_images_in_folder(pattern);
*/
size_t read_images_in_folder(cv::String pattern) // number of files matching the pattern
{
    vector<cv::String> fn;
    glob(pattern, fn, false); // OpenCV's glob() lists the matching files (non-recursive here)
    size_t count = fn.size(); // number of matching files
    return count;
}
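getglcm_0() and feature_computer() together build a horizontal co-occurrence matrix and reduce it to four statistics. A plain C++ sketch of the same two steps on a tiny image, so the results can be checked by hand, assuming the image is already quantized to [0, levels); `Glcm`, `glcm_0deg`, `Texture` and `glcm_features` are hypothetical names. Like the original, it counts each pixel pair in one direction only (the matrix is not symmetrized) and uses the natural logarithm for entropy.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Glcm = std::vector<std::vector<int>>;

// 0-degree GLCM: counts (pixel, right neighbour) pairs in every row.
Glcm glcm_0deg(const std::vector<std::vector<int>>& img, int levels) {
    Glcm m(levels, std::vector<int>(levels, 0));
    for (const auto& row : img)
        for (std::size_t j = 0; j + 1 < row.size(); ++j) {
            assert(row[j] >= 0 && row[j] < levels && row[j + 1] >= 0 && row[j + 1] < levels);
            ++m[row[j]][row[j + 1]];
        }
    return m;
}

struct Texture {
    double asm_;      // angular second moment (energy); "asm" is a C++ keyword
    double contrast;  // sum of (i - j)^2 * p
    double entropy;   // -sum of p * ln(p)
    double idm;       // sum of p / (1 + (i - j)^2)
};

Texture glcm_features(const Glcm& m) {
    double total = 0.0;
    for (const auto& r : m)
        for (int c : r) total += c;
    assert(total > 0.0);  // at least one pixel pair is needed
    Texture t{0.0, 0.0, 0.0, 0.0};
    for (std::size_t i = 0; i < m.size(); ++i)
        for (std::size_t j = 0; j < m[i].size(); ++j) {
            const double p = m[i][j] / total;  // normalized co-occurrence probability
            const double d = static_cast<double>(i) - static_cast<double>(j);
            t.asm_ += p * p;
            t.contrast += d * d * p;
            if (p > 0.0) t.entropy -= p * std::log(p);
            t.idm += p / (1.0 + d * d);
        }
    return t;
}
```

For the 2x4 image {{0, 0, 1, 1}, {0, 0, 1, 1}} the GLCM has three non-zero cells with two counts each, so every probability is 1/3: ASM = 1/3, contrast = 1/3, entropy = ln 3 and IDM = 5/6.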

C++ Opencv hog+SVM(opencv3)

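// OpenCV 3
#include <opencv2/opencv.hpp>
#include <opencv2/ml.hpp>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>
using namespace cv::ml;
#define PosSamNO 30  // number of positive samples
#define NegSamNO 30  // number of negative samples
#define TestSamNO 5  // number of test samples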

void train_svm_hog()
{
    // HOG descriptor calculator:
    // detection window 48x48, block 16x16, block stride 8x8, cell 8x8, 9 histogram bins
    cv::HOGDescriptor hog(cv::Size(48, 48), cv::Size(16, 16), cv::Size(8, 8), cv::Size(8, 8), 9);
    int DescriptorDim = 0; // HOG descriptor length, set by window, block, stride, cell size and bin count
    // SVM parameters
    cv::Ptr<cv::ml::SVM> svm = cv::ml::SVM::create();
    svm->setType(cv::ml::SVM::Types::C_SVC);
    svm->setKernel(cv::ml::SVM::KernelTypes::LINEAR);
    svm->setTermCriteria(cv::TermCriteria(cv::TermCriteria::MAX_ITER, 100, 1e-6));
    std::string ImgName;
    std::ifstream finPos("positive_samples.txt"); // positive sample image paths, one per line
    std::ifstream finNeg("negative_samples.txt"); // negative sample image paths, one per line
    // Feature matrix: one row per training sample, one column per HOG descriptor element
    cv::Mat sampleFeatureMat;
    // Label vector: one row per sample; 1 = target present, -1 = no target.
    // The list files must contain at least PosSamNO / NegSamNO entries, otherwise unused rows keep label 0.
    cv::Mat sampleLabelMat;
    // Read the positive samples and compute their HOG descriptors
    for (int num = 0; num < PosSamNO && getline(finPos, ImgName); num++)
    {
        std::cout << "Processing:" << ImgName << std::endl;
        cv::Mat image = cv::imread(ImgName);
        cv::resize(image, image, cv::Size(48, 48));
        std::vector<float> descriptors; // HOG descriptor vector
        hog.compute(image, descriptors, cv::Size(8, 8)); // window stride 8x8
        // Allocate the matrices with the first sample: only now is the descriptor length known
        if (0 == num)
        {
            DescriptorDim = (int)descriptors.size();
            sampleFeatureMat = cv::Mat::zeros(PosSamNO + NegSamNO, DescriptorDim, CV_32FC1);
            sampleLabelMat = cv::Mat::zeros(PosSamNO + NegSamNO, 1, CV_32SC1);
        }
        // Copy the descriptor into row num of sampleFeatureMat
        for (int i = 0; i < DescriptorDim; i++)
            sampleFeatureMat.at<float>(num, i) = descriptors[i];
        // Positive samples get label 1 (classified as "no spatter")
        sampleLabelMat.at<int>(num, 0) = 1;
    }
    // Read the negative samples and compute their HOG descriptors
    for (int num = 0; num < NegSamNO && getline(finNeg, ImgName); num++)
    {
        std::cout << "Processing:" << ImgName << std::endl;
        cv::Mat src = cv::imread(ImgName);
        cv::resize(src, src, cv::Size(48, 48));
        std::vector<float> descriptors; // HOG descriptor vector
        hog.compute(src, descriptors, cv::Size(8, 8)); // window stride 8x8
        //std::cout << "descriptor dimension:" << descriptors.size() << std::endl;
        // Copy the descriptor into row PosSamNO + num of sampleFeatureMat
        for (int i = 0; i < DescriptorDim; i++)
            sampleFeatureMat.at<float>(num + PosSamNO, i) = descriptors[i];
        // Negative samples get label -1 (classified as "spatter")
        sampleLabelMat.at<int>(num + PosSamNO, 0) = -1;
    }
    // Train the SVM classifier
    std::cout << "Training the SVM classifier" << std::endl;
    cv::Ptr<cv::ml::TrainData> td = cv::ml::TrainData::create(sampleFeatureMat, cv::ml::SampleTypes::ROW_SAMPLE, sampleLabelMat);
    svm->train(td);
    std::cout << "SVM classifier trained" << std::endl;
    // Save the trained SVM model as an XML file
    svm->save("SVM_HOG.xml");
}

void svm_hog_classification()
{
    // Same HOG parameters as in training:
    // detection window 48x48, block 16x16, block stride 8x8, cell 8x8, 9 histogram bins
    cv::HOGDescriptor hog(cv::Size(48, 48), cv::Size(16, 16), cv::Size(8, 8), cv::Size(8, 8), 9);
    // Load the trained SVM model once, before the loop (no trailing space in the file name)
    cv::Ptr<cv::ml::SVM> svm = cv::ml::SVM::load("SVM_HOG.xml");
    if (svm.empty() || svm->empty())
    {
        std::cout << "load svm detector failed!!!" << std::endl;
        return;
    }
    std::ifstream finTest("test_samples.txt"); // test image paths, one per line
    std::string ImgName;
    std::cout << "Start recognition..." << std::endl;
    for (int num = 0; num < TestSamNO && getline(finTest, ImgName); num++)
    {
        std::cout << "Processing:" << ImgName << std::endl;
        cv::Mat test = cv::imread(ImgName);
        cv::resize(test, test, cv::Size(48, 48));
        std::vector<float> descriptors;
        hog.compute(test, descriptors);
        cv::Mat testDescriptor = cv::Mat::zeros(1, (int)descriptors.size(), CV_32FC1);
        for (size_t i = 0; i < descriptors.size(); i++)
            testDescriptor.at<float>(0, (int)i) = descriptors[i];
        float label = svm->predict(testDescriptor);
        cv::imshow("test image", test);
        std::cout << "This image belongs to class: " << label << std::endl;
        cv::waitKey(0);
    }
}
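The comment on DescriptorDim says the HOG descriptor length is fixed by the window, block, stride, cell and bin settings. The following sketch spells out that arithmetic for a single detection window; `HogParams` and `hog_descriptor_size` are hypothetical helpers, and the formula assumes the block stride divides (window - block) exactly, as it does for the 48x48 setup above.

```cpp
#include <cassert>

// Hypothetical parameter bundle mirroring the cv::HOGDescriptor constructor arguments.
struct HogParams {
    int winW, winH;          // detection window
    int blockW, blockH;      // block size
    int strideX, strideY;    // block stride
    int cellW, cellH;        // cell size
    int nbins;               // orientation bins per cell
};

int hog_descriptor_size(const HogParams& p) {
    assert((p.winW - p.blockW) % p.strideX == 0 && (p.winH - p.blockH) % p.strideY == 0);
    const int blocksX = (p.winW - p.blockW) / p.strideX + 1;  // block positions per row
    const int blocksY = (p.winH - p.blockH) / p.strideY + 1;  // block positions per column
    const int cellsPerBlock = (p.blockW / p.cellW) * (p.blockH / p.cellH);
    return blocksX * blocksY * cellsPerBlock * p.nbins;
}
```

For the parameters used here this gives 5 x 5 blocks x 4 cells x 9 bins = 900, which is the length descriptors.size() is expected to have for a 48x48 image with the default zero padding; cv::HOGDescriptor::getDescriptorSize() reports the same quantity. The classic 64x128 pedestrian window gives 3780.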

int main(int argc, char** argv)
{
    train_svm_hog();
    svm_hog_classification();
    return 0;
}

C++ Opencv AKAZE features (opencv3.3.0)

Feature detection

Step 1: create the detector

Ptr<AKAZE> detector = AKAZE::create();

Step 2: detect keypoints with the detector

detector->detect(img, keypoints, Mat());

Measuring the elapsed time (works for any code block):

double t1 = getTickCount();
/* code to be timed */
double t2 = getTickCount();
double tkaze = (t2 - t1) / getTickFrequency();
printf("Time consume(s) : %f\n", tkaze);

Step 3: draw the keypoints

drawKeypoints(img, keypoints, keypointImg, Scalar::all(-1), DrawMatchesFlags::DEFAULT);

Complete program

// Demo_Feature.cpp : defines the entry point of the console application.
//
#include "stdafx.h"
#include <opencv2/opencv.hpp>
#include <iostream>
using namespace cv;
using namespace std;

int _tmain(int argc, _TCHAR* argv[])
{
    Mat img1 = imread("C:\\Users\\Administrator\\Desktop\\样品\\瓷砖\\方格.bmp", IMREAD_GRAYSCALE);
    Mat img2 = imread("C:\\Users\\Administrator\\Desktop\\样品\\瓷砖\\方格.bmp", IMREAD_GRAYSCALE);
    if (img1.empty() || img2.empty()) { // stop if either image failed to load
        printf("could not load image...\n");
        return -1;
    }
    imshow("input image", img1);
    // AKAZE detection
    Ptr<AKAZE> detector = AKAZE::create();
    vector<KeyPoint> keypoints;
    double t1 = (double)getTickCount();
    detector->detect(img1, keypoints, Mat());
    double t2 = (double)getTickCount();
    double tkaze = 1000 * (t2 - t1) / getTickFrequency();
    printf("AKAZE Time consume(ms) : %f\n", tkaze);
    Mat keypointImg;
    drawKeypoints(img1, keypoints, keypointImg, Scalar::all(-1), DrawMatchesFlags::DEFAULT);
    imshow("akaze key points", keypointImg);
    waitKey(0);
    return 0;
}

Feature matching

Step 1: create the detector

Ptr<AKAZE> detector = AKAZE::create();

Step 2: detect keypoints and compute descriptors with the detector

detector->detectAndCompute(img1, Mat(), keypoints_obj, descriptor_obj);
detector->detectAndCompute(img2, Mat(), keypoints_scene, descriptor_scene);

Step 3: match the descriptors

// Build the matcher: AKAZE descriptors are binary, so FLANN needs an LSH index
FlannBasedMatcher matcher(makePtr<flann::LshIndexParams>(20, 10, 2));
// Run the matching
matcher.match(descriptor_obj, descriptor_scene, matches);

Step 4: draw the matches

drawMatches(img1, keypoints_obj, img2, keypoints_scene, matches, akazeMatchesImg);

Step 5: select the best matches