TextCNN: Code with Matching Figure and Text Explanations


Prerequisites

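This post is for readers who understand the general idea of TextCNN but are not yet familiar with PyTorch.

Tip: when you run into an unfamiliar function such as squeeze() or unsqueeze(), look it up in the official documentation, or search for that function on its own (for example on Baidu). That is usually enough to understand it, as in these screenshots: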

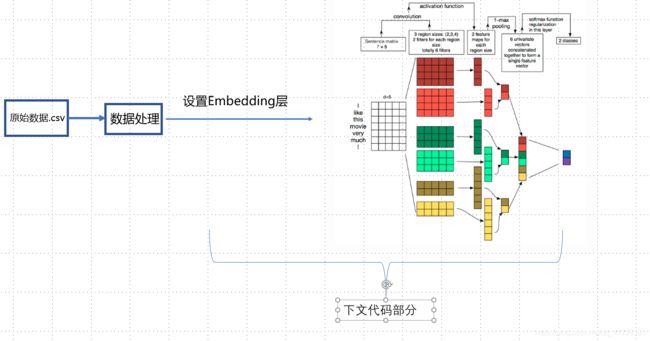

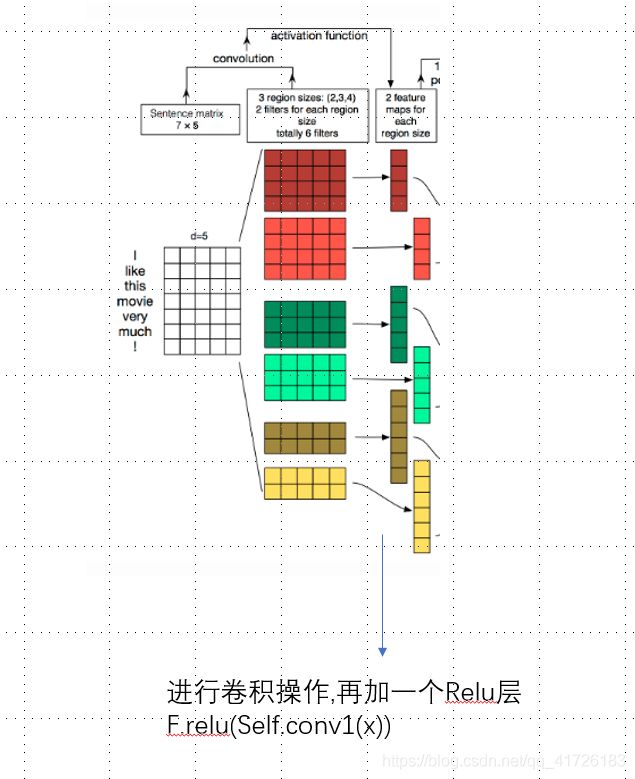
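The model code relies on unsqueeze() and squeeze() to add and remove size-1 dimensions. The sketch below shows their behavior on a dummy tensor. The shape (2, 7, 5) is an arbitrary choice for the demo.

```python
import torch

# A tensor shaped like a batch of embedded sentences: (batch, length, dim)
x = torch.zeros(2, 7, 5)

# unsqueeze(1) inserts a new dimension of size 1 at position 1
x4 = x.unsqueeze(1)
print(x4.shape)  # torch.Size([2, 1, 7, 5])

# squeeze(1) removes dimension 1 again, because its size is 1
x3 = x4.squeeze(1)
print(x3.shape)  # torch.Size([2, 7, 5])

# squeeze(d) leaves the tensor unchanged if dimension d does not have size 1
same = x.squeeze(1)
print(same.shape)  # torch.Size([2, 7, 5])
```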

Flowchart

Model code

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CNN(nn.Module):
    # Hyperparameters are passed in as arguments. The original version read them
    # from global variables with the same names. pretrained_vectors corresponds to
    # TEXT.vocab.vectors and pad_index to PAD_INDEX in the original.
    def __init__(self, num_embeddings, embedding_dim, filters_num, label_num,
                 dropout_p, embedding_choice, pretrained_vectors=None, pad_index=None):
        super(CNN, self).__init__()

        # Embedding layer
        self.embedding_choice = embedding_choice
        if self.embedding_choice == 'rand':
            self.embedding = nn.Embedding(num_embeddings, embedding_dim)
        elif self.embedding_choice == 'glove':
            # from_pretrained is a classmethod: call it on nn.Embedding and pass
            # padding_idx to it. Calling it on an instance discards that instance's arguments.
            self.embedding = nn.Embedding.from_pretrained(
                pretrained_vectors, freeze=True, padding_idx=pad_index)
        else:
            raise ValueError("embedding_choice must be 'rand' or 'glove'")

        # Three convolutional layers (kernels cover 3, 4 and 5 words)
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=filters_num,  # number of output channels
                               kernel_size=(3, embedding_dim), padding=(2, 0))
        self.conv2 = nn.Conv2d(in_channels=1, out_channels=filters_num,  # number of output channels
                               kernel_size=(4, embedding_dim), padding=(3, 0))
        self.conv3 = nn.Conv2d(in_channels=1, out_channels=filters_num,  # number of output channels
                               kernel_size=(5, embedding_dim), padding=(4, 0))

        # Dropout regularization to reduce overfitting
        self.dropout = nn.Dropout(dropout_p)

        # Fully connected layer
        self.fc = nn.Linear(filters_num * 3, label_num)

    # Forward pass
    def forward(self, x):                      # (Batch_size, Length)
        x = self.embedding(x)                  # (Batch_size, Length, Dimension)
        x = x.unsqueeze(1)                     # (Batch_size, 1, Length, Dimension)

        # ReLU adds non-linearity
        x1 = F.relu(self.conv1(x))             # (Batch_size, filters_num, Length+padding, 1)
        x1 = x1.squeeze(3)                     # (Batch_size, filters_num, Length+padding)

        # Pooling layer: reduce each feature map to its maximum value
        x1 = F.max_pool1d(x1, x1.size(2))      # (Batch_size, filters_num, 1)
        x1 = x1.squeeze(2)                     # (Batch_size, filters_num)

        x2 = F.relu(self.conv2(x)).squeeze(3)
        x2 = F.max_pool1d(x2, x2.size(2)).squeeze(2)

        x3 = F.relu(self.conv3(x)).squeeze(3)
        x3 = F.max_pool1d(x3, x3.size(2)).squeeze(2)

        # Concatenate
        x = torch.cat((x1, x2, x3), dim=1)     # (Batch_size, filters_num * 3)
        x = self.dropout(x)                    # (Batch_size, filters_num * 3)
        out = self.fc(x)                       # (Batch_size, label_num)
        return out
```

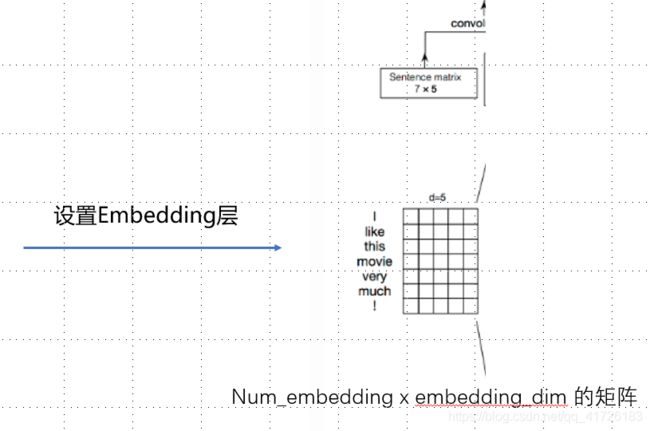
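The sketch below checks the shape comments in the model code. It pushes a random batch of token ids through the same sequence of layers, without the class wrapper. All sizes (batch_size=4, length=10, vocab_size=100, embedding_dim=8, filters_num=6, label_num=3, dropout 0.5) are arbitrary demo values, not values from the original post.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical sizes chosen only for this demo
batch_size, length, vocab_size, embedding_dim = 4, 10, 100, 8
filters_num, label_num = 6, 3
kernel_sizes = (3, 4, 5)

embedding = nn.Embedding(vocab_size, embedding_dim)
convs = nn.ModuleList(
    nn.Conv2d(1, filters_num, kernel_size=(k, embedding_dim), padding=(k - 1, 0))
    for k in kernel_sizes
)
dropout = nn.Dropout(0.5)
fc = nn.Linear(filters_num * 3, label_num)

tokens = torch.randint(0, vocab_size, (batch_size, length))  # (B, L)
x = embedding(tokens).unsqueeze(1)                            # (B, 1, L, D)

pooled = []
for k, conv in zip(kernel_sizes, convs):
    c = F.relu(conv(x)).squeeze(3)              # (B, filters_num, L + k - 1)
    assert c.shape == (batch_size, filters_num, length + k - 1)
    p = F.max_pool1d(c, c.size(2)).squeeze(2)   # (B, filters_num)
    pooled.append(p)

h = dropout(torch.cat(pooled, dim=1))           # (B, filters_num * 3)
out = fc(h)                                     # (B, label_num)
print(out.shape)  # torch.Size([4, 3])
```

Each convolution output is L + k - 1 long because padding = k - 1 on both sides. Max pooling over the full length removes that difference, which is what allows the three branches to be concatenated.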

Setting up the embedding layer

```python
self.embedding_choice = embedding_choice
if self.embedding_choice == 'rand':
    self.embedding = nn.Embedding(num_embeddings, embedding_dim)
if self.embedding_choice == 'glove':
    # from_pretrained is a classmethod, so call it on nn.Embedding and pass padding_idx to it
    self.embedding = nn.Embedding.from_pretrained(TEXT.vocab.vectors, freeze=True,
                                                  padding_idx=PAD_INDEX)
```

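In rand mode, every word vector is initialized randomly (nn.Embedding draws its initial weights from a standard normal distribution). In glove mode, each word's vector is taken from the pretrained GloVe embeddings. Words that do not appear in GloVe are given random values from a uniform distribution; the exact initializer for these words depends on how the vocabulary was built. freeze=True keeps the pretrained vectors fixed during training. The padding_idx parameter marks the row reserved for the padding token. That row receives no gradient updates, and when nn.Embedding creates its own weights it initializes that row to all zeros.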
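The sketch below shows how from_pretrained behaves, using a toy 4 x 3 matrix as a stand-in for TEXT.vocab.vectors. It also shows the pitfall of calling from_pretrained on an existing instance: the new layer is built from the matrix alone, so the instance's padding_idx is lost.

```python
import torch
import torch.nn as nn

# Toy "pretrained" matrix: 4 words x 3 dimensions (stand-in for TEXT.vocab.vectors)
vectors = torch.tensor([[0.0, 0.0, 0.0],   # row 0: padding token
                        [0.1, 0.2, 0.3],
                        [0.4, 0.5, 0.6],
                        [0.7, 0.8, 0.9]])

# Correct usage: call the classmethod on nn.Embedding itself
emb = nn.Embedding.from_pretrained(vectors, freeze=True, padding_idx=0)
print(emb.weight.requires_grad)  # False: freeze=True keeps the vectors fixed

ids = torch.tensor([[2, 1, 0, 0]])   # one sentence padded with index 0
looked_up = emb(ids)                 # each id is replaced by its row of `vectors`
print(looked_up.shape)  # torch.Size([1, 4, 3])

# Pitfall: calling it on an instance builds a brand-new layer from `vectors`;
# the instance's own padding_idx=0 is discarded
pitfall = nn.Embedding(4, 3, padding_idx=0).from_pretrained(vectors, freeze=True)
print(pitfall.padding_idx)  # None

# rand mode: weights come from N(0, 1), but the padding_idx row starts as zeros
rand_emb = nn.Embedding(4, 3, padding_idx=0)
```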

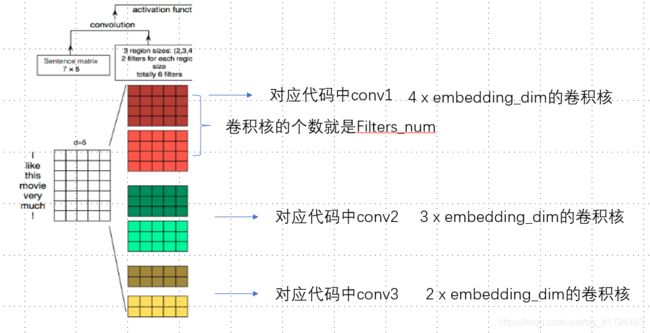

Setting up the convolutional layers

```python
# Three convolutional layers
self.conv1 = nn.Conv2d(in_channels=1, out_channels=filters_num,  # number of output channels
                       kernel_size=(3, embedding_dim), padding=(2, 0))
self.conv2 = nn.Conv2d(in_channels=1, out_channels=filters_num,  # number of output channels
                       kernel_size=(4, embedding_dim), padding=(3, 0))
self.conv3 = nn.Conv2d(in_channels=1, out_channels=filters_num,  # number of output channels
                       kernel_size=(5, embedding_dim), padding=(4, 0))
```

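padding is the number of zeros added around the input. For example, padding=(2, 0) adds two rows of zeros above and below the sentence along the length dimension, and none along the embedding dimension. Each kernel is as wide as the embedding (kernel_size=(k, embedding_dim)), so it slides only along the sentence and the output width is 1. The output length is Length + 2 * padding - k + 1. With padding = k - 1, this equals Length + padding, which is the Length+padding in the shape comments.

As shown in the figure (convolution operation).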
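The sketch below reproduces the convolution by hand. It pads k - 1 zero rows above and below a random "sentence", slides a k x embedding_dim window down it, and compares the result with nn.Conv2d. A single output channel and the sizes length=6, embedding_dim=4, k=3 are arbitrary demo choices. nn.Conv2d computes a cross-correlation (the kernel is not flipped), so a plain element-wise product matches it.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
length, embedding_dim, k = 6, 4, 3

x = torch.randn(1, 1, length, embedding_dim)   # (batch, channel, Length, Dimension)
conv = nn.Conv2d(1, 1, kernel_size=(k, embedding_dim), padding=(k - 1, 0))
out = conv(x)                                  # (1, 1, Length + k - 1, 1)
print(out.shape)  # torch.Size([1, 1, 8, 1])

# Manual version: add k-1 zero rows above and below, then slide a k x D window
zeros = torch.zeros(k - 1, embedding_dim)
padded = torch.cat([zeros, x[0, 0], zeros], dim=0)   # (Length + 2(k-1), D)
w, b = conv.weight[0, 0], conv.bias[0]               # (k, D) kernel and scalar bias
manual = torch.stack([(padded[t:t + k] * w).sum() + b
                      for t in range(padded.size(0) - k + 1)])
print(torch.allclose(manual, out.flatten(), atol=1e-5))  # True
```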

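Pooling layer

```python
x1 = F.max_pool1d(x1, x1.size(2)).squeeze(2)  # (Batch_size, filters_num, 1)
                                              # -> (Batch_size, filters_num)
```

The pooling window is set to the full length x1.size(2), so each of the filters_num feature maps is reduced to its single largest value.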
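Below is a tiny numeric check of this pooling step. It is sometimes called max-over-time pooling. With the window equal to the sequence length, F.max_pool1d gives the same result as taking the maximum over dimension 2. The input values are made up for the demo.

```python
import torch
import torch.nn.functional as F

x1 = torch.tensor([[[1.0, 5.0, 2.0],
                    [0.0, -1.0, 3.0]]])   # (batch=1, filters_num=2, length=3)

# Window size equal to the sequence length -> one window per feature map
pooled = F.max_pool1d(x1, x1.size(2))     # (1, 2, 1)
result = pooled.squeeze(2)                # (1, 2)
print(result)  # tensor([[5., 3.]])

# Same result as taking the maximum over the length dimension
print(torch.equal(result, x1.max(dim=2).values))  # True
```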

Fully connected layer

```python
# Concatenate
x = torch.cat((x1, x2, x3), dim=1)  # (Batch_size, filters_num * 3)
x = self.dropout(x)                 # (Batch_size, filters_num * 3)
out = self.fc(x)                    # (Batch_size, label_num)
```
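The sketch below walks through these last three steps with arbitrary sizes (batch_size=2, filters_num=4, label_num=3, dropout p=0.5). It also shows why the model should be switched to evaluation mode (model.eval()) before inference. In training mode, nn.Dropout zeroes random entries and scales the rest by 1 / (1 - p). In evaluation mode, it passes the input through unchanged.

```python
import torch
import torch.nn as nn

batch_size, filters_num, label_num = 2, 4, 3   # arbitrary demo sizes
x1, x2, x3 = (torch.ones(batch_size, filters_num) for _ in range(3))

x = torch.cat((x1, x2, x3), dim=1)   # the three pooled feature vectors side by side
print(x.shape)  # torch.Size([2, 12])

dropout = nn.Dropout(0.5)
dropout.train()   # training: zero random entries, scale the rest by 1 / (1 - 0.5) = 2
train_values = set(dropout(x).unique().tolist())
print(train_values <= {0.0, 2.0})  # True

dropout.eval()    # evaluation: dropout does nothing
print(torch.equal(dropout(x), x))  # True

fc = nn.Linear(filters_num * 3, label_num)
out = fc(x)
print(out.shape)  # torch.Size([2, 3])
```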