[PyTorch Hands-on Project] Machine Translation: Encoder-Decoder and the Attention Mechanism (AM)

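Contents

- Machine Translation: Chinese-English Translation
  - Algorithm 1: Encoder-Decoder
  - Algorithm 2: Attention Model (AM)
    - 2.1 Why introduce attention?
    - 2.2 Computing the semantic representation C with attention
    - 2.3 Computing the probability distribution over the input words
  - Algorithm 3: Self-Attention
  - (1) Hands-on: attention-based Chinese-to-English translation (dataset: eng-cmn English-Chinese sentence pairs)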

Machine Translation: Chinese-English Translation

(1) Traditional Statistical Machine Translation (SMT), e.g., phrase-based translation models.

(2) Neural Machine Translation (NMT), e.g., the Encoder-Decoder (Seq2Seq) model and its extension with an attention mechanism.

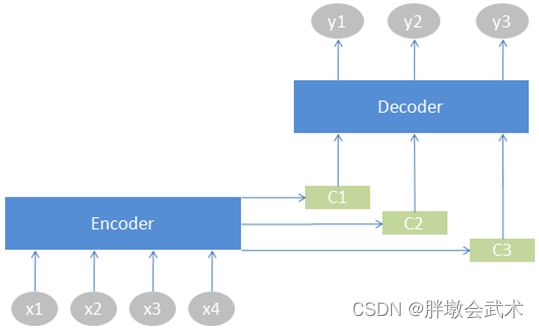

Algorithm 1: Encoder-Decoder

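The Encoder-Decoder is also known as the Seq2Seq model. Its encoder and decoder can be built from CNNs, RNNs, LSTMs, or Transformers.

Intuition: it is a general framework for generating one sentence (or passage) from another. For a sentence pair <Source, Target>, the goal is to take the input sentence Source and generate the target sentence Target with the Encoder-Decoder framework: the Encoder compresses Source into an intermediate semantic representation C, and the Decoder generates Target word by word from C. Source and Target can be in the same language or in two different languages.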
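To make the fixed intermediate representation C concrete, here is a toy sketch in plain Python (no training, no PyTorch). The encoder is a one-layer tanh RNN with hand-picked scalar weights `w_x` and `w_h` (hypothetical; a real encoder learns weight matrices), and its final hidden state serves as C. The toy decoder updates its own state at every step, but every step reads the same C, which is the limitation the attention mechanism addresses. The word vectors are made up for illustration.

```python
import math


def encode(source_vectors, w_x=0.5, w_h=0.5):
    """Toy RNN encoder: h_t = tanh(w_x * x_t + w_h * h_(t-1)), applied element-wise.
    w_x and w_h are hypothetical fixed scalars; a real encoder learns weight matrices."""
    h = [0.0] * len(source_vectors[0])
    for x in source_vectors:
        h = [math.tanh(w_x * xi + w_h * hi) for xi, hi in zip(x, h)]
    return h  # the final hidden state serves as the fixed context C


def decode(context, num_steps):
    """Toy decoder: its own state changes every step, but every step reads the same C."""
    state = [0.0] * len(context)
    trace = []
    for _ in range(num_steps):
        state = [math.tanh(s + c) for s, c in zip(state, context)]
        trace.append((list(context), list(state)))  # (context used at this step, new decoder state)
    return trace


# Hypothetical 2-d word vectors for the source sentence "Tom chase Jerry"
source = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
C = encode(source)
trace = decode(C, num_steps=3)  # three target words
for step, (ctx, state) in enumerate(trace):
    print(step, ctx, state)  # ctx is identical at every step; state is not
```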

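Algorithm 2: Attention Model (AM)

2.1 Why introduce attention?

(1) When translating the English sentence "Tom chase Jerry" into Chinese, the plain Encoder-Decoder model lets every English word contribute equally to the target word “杰瑞” (Jerry), because the intermediate semantic representation C is the same for every output word. This is clearly unreasonable: "Jerry" matters far more than "Tom" or "chase" for producing “杰瑞”.

(2) An attention model (AM) reflects the importance of "Jerry" when translating “杰瑞”: the intermediate semantic representation C changes with the current output word. For example, the attention weights over [Tom, chase, Jerry] might be [0.3, 0.2, 0.5].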

2.2 Computing the semantic representation C with attention
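In the standard formulation, the context vector C_i used for the i-th output word is a weighted sum of the encoder hidden states h_j of the T_x source words, with attention weights a_ij that are non-negative and sum to 1; a plain Encoder-Decoder corresponds to using the same C for every i. With the illustrative weights from 2.1:

$$C_i = \sum_{j=1}^{T_x} a_{ij}\, h_j, \qquad a_{ij} \ge 0, \quad \sum_{j=1}^{T_x} a_{ij} = 1$$

$$C_{\text{杰瑞}} = 0.3\, h_{\text{Tom}} + 0.2\, h_{\text{chase}} + 0.5\, h_{\text{Jerry}}$$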

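2.3 Computing the probability distribution over the input words

In a sentence pair <Source, Target> (English sentence - Chinese sentence), view the elements of Source as a set of <Key, Value> pairs and take an element Query (a word) from Target:

(1) Compute the similarity between the Query and each Key to obtain a weight coefficient vector (learned during training); doing this for every word in Target gives the weight coefficient matrix of Target, where W is a learnable weight matrix.

(2) Apply softmax to turn the Query's coefficient vector into a probability distribution (each value in [0, 1], summing to 1).

(3) Take the weighted sum of the Values with these probabilities to obtain the Attention value.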
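The three steps above can be sketched in plain Python. This is a minimal illustration, not the model used later in the hands-on section: it assumes a bilinear similarity q·(W k) with a hand-picked matrix `W` standing in for the learnable parameters (with `W` equal to the identity it reduces to a dot product), and hypothetical 2-d vectors for the Query, Keys and Values.

```python
import math


def softmax(scores):
    """Step (2): turn raw scores into a probability distribution (values in [0, 1], summing to 1)."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def bilinear_score(query, key, W):
    """Step (1): similarity q^T W k; W stands in for a learnable weight matrix."""
    wk = [sum(W[r][c] * key[c] for c in range(len(key))) for r in range(len(W))]
    return sum(q * v for q, v in zip(query, wk))


def attention(query, keys, values, W):
    scores = [bilinear_score(query, k, W) for k in keys]  # (1) similarity with every Key
    weights = softmax(scores)                              # (2) normalize to probabilities
    dim = len(values[0])
    # (3) weighted sum of the Values
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context


identity = [[1.0, 0.0], [0.0, 1.0]]
keys = values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical vectors for Tom / chase / Jerry
query = [0.5, 1.0]                                     # hypothetical Query for the target word 杰瑞
weights, context = attention(query, keys, values, identity)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

With these numbers the scores are 0.5, 1.0 and 1.5, so "Jerry" receives the largest weight.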

Algorithm 3: Self-Attention

How self-attention works
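In self-attention, Query, Key and Value all come from the same sentence, so every word attends to every word of that sentence, itself included. The sketch below is a simplified illustration under stated assumptions: it uses identity projections (real models learn separate W_Q, W_K and W_V matrices) and scaled dot-product scores, i.e. dot products divided by sqrt(d) as in the Transformer. The word vectors are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """X: list of n word vectors of dimension d. Q = K = V = X (identity projections assumed)."""
    d = len(X[0])
    outputs, weight_rows = [], []
    for q in X:  # every position acts as a Query
        # scaled dot product with every Key from the same sentence
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in X]
        w = softmax(scores)
        weight_rows.append(w)
        # weighted sum of the Values (again the same sentence)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, X)) for c in range(d)])
    return outputs, weight_rows


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical vectors for "Tom chase Jerry"
Y, A = self_attention(X)
for row in A:
    print([round(a, 3) for a in row])  # one attention distribution per word
```

The result keeps one output vector per input word, which is what lets self-attention layers be stacked.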

(1) Hands-on: attention-based Chinese-to-English translation (dataset: eng-cmn English-Chinese sentence pairs)

Data link: https://pan.baidu.com/s/1U1t690L0g0Om_ZXXFXC_lw?pwd=o6p4

Extraction code: o6p4

Chinese word segmentation library: jieba (installation, introduction, and examples)

```python
# Main workflow
# (1) Data loading: read the txt file, filter the data, segment the Chinese and English sentences
# (2) Model construction: encoder, decoder, decoder with attention
# (3) Training: iterative training loop, single-pair training step, tensor conversion, timing, loss plot
# (4) Evaluation: evaluate random sentences, evaluate the model, visualize attention
########################################################################################################################
from __future__ import unicode_literals, print_function, division
import time
import math
import random
import matplotlib.pyplot as plt
import matplotlib.ticker as ticker
from io import open
import unicodedata
import re
import jieba
import torch
import torch.nn as nn
from torch import optim
import torch.nn.functional as F
import os

os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'  # Work around "OMP: Error #15: Initializing libiomp5md.dll"
# To render Chinese tick labels in the attention plot, load a Chinese font:
# import matplotlib.font_manager as fm  # stores and manipulates font properties
# myfont = fm.FontProperties(fname='simhei.ttf')  # custom font
########################################################################################################################
"""(1) Read and preprocess the data"""
########################################################


def prepareData(txt_path, lang1, lang2, reverse=False):
    input_lang, output_lang, pairs = readLangs(txt_path, lang1, lang2, reverse)  # Read the txt data
    print("In the txt file, there are %s sentence pairs (lines)" % len(pairs))  # Pairs in the raw file
    pairs = filterPairs(pairs)  # Filter the data
    print("After data processing, there are %s sentence pairs (lines)" % len(pairs))  # Pairs after filtering
    # Build both vocabularies from the filtered pairs
    for pair in pairs:
        input_lang.addSentence_cn(pair[0])  # Chinese: segment with jieba
        output_lang.addSentence(pair[1])  # English: split on spaces
    # Print each language's name and vocabulary size
    print("Counted words: (1)%s - %s (2)%s - %s" % (input_lang.name, input_lang.n_words, output_lang.name, output_lang.n_words))
    return input_lang, output_lang, pairs


def readLangs(txt_path, lang1, lang2, reverse=False):
    """txt path template, first language name (English), second language name (Chinese), whether to swap the pair order"""
    print("Reading txt file and processing...")
    # Each line holds an English sentence and a Chinese sentence separated by a Tab
    with open(txt_path % (lang1, lang2), encoding='utf-8') as f:
        lines = f.read().strip().split('\n')
    # Normalize both sentences -> pairs[English, Chinese]; keep only the first two columns
    # in case the file has extra ones (e.g. an attribution column)
    pairs = [[normalizeString(s) for s in l.split('\t')[:2]] for l in lines]
    if reverse:
        # Swap to pairs[Chinese, English]: Chinese is the input, English the output (translation)
        pairs = [list(reversed(p)) for p in pairs]
        input_lang = Lang(lang2)
        output_lang = Lang(lang1)
    else:
        input_lang = Lang(lang1)
        output_lang = Lang(lang2)
    return input_lang, output_lang, pairs


def normalizeString(s):
    """Lowercase, trim, strip accents, and put a space before . ! ? so they become separate tokens"""
    s = unicodeToAscii(s.lower().strip())
    s = re.sub(r"([.!?])", r" \1", s)
    return s


def unicodeToAscii(s):
    """Decompose characters (NFD) and drop combining marks (accents); Chinese characters are kept"""
    return ''.join(c for c in unicodedata.normalize('NFD', s) if unicodedata.category(c) != 'Mn')


class Lang:
    """Vocabulary of one language: word <-> index mappings and word counts"""

    def __init__(self, name):
        self.name = name
        self.word2index = {}
        self.word2count = {}
        self.index2word = {0: "SOS", 1: "EOS"}
        self.n_words = 2  # Count SOS and EOS

    def addSentence(self, sentence):
        """English: split on spaces"""
        for word in sentence.split(' '):
            self.addWord(word)

    def addSentence_cn(self, sentence):
        """Chinese: segment with jieba"""
        for word in jieba.cut(sentence):
            self.addWord(word)

    def addWord(self, word):
        if word not in self.word2index:
            self.word2index[word] = self.n_words
            self.word2count[word] = 1
            self.index2word[self.n_words] = word
            self.n_words += 1
        else:
            self.word2count[word] += 1


def filterPairs(pairs):
    """Keep only the sentence pairs that pass filterPair"""
    return [pair for pair in pairs if filterPair(pair)]


def filterPair(pair):
    """Keep a pair if both sentences have fewer than max_length tokens and the English one starts with a fixed prefix"""
    max_length = 20  # Maximum sentence length in tokens; must match max_length in the model and train/evaluate
    # English prefixes; normalizeString keeps apostrophes, so contracted forms such as "i'm " are listed too
    english_prefixes = ("i am ", "i'm ", "i m ", "he is", "he's ", "he s ", "she is", "she's ", "she s ",
                        "you are", "you're ", "you re ", "we are", "we're ", "we re ",
                        "they are", "they're ", "they re ")
    # pair[0] is Chinese (no spaces), so count jieba tokens instead of splitting on spaces
    return (len(list(jieba.cut(pair[0]))) < max_length and len(pair[1].split(' ')) < max_length
            and pair[1].startswith(english_prefixes))
```
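normalizeString keeps apostrophes and Chinese punctuation, which matters for the length and prefix checks in filterPair. The snippet below copies `normalizeString`/`unicodeToAscii` from the listing (standard library only, no jieba) and runs them on a hypothetical English sentence and on one of the Chinese sentences evaluated later. It shows that a Chinese sentence counts as a single space-separated "word", and that "I'm" normalizes to "i'm", so it matches the prefix "i'm " rather than "i m ".

```python
import re
import unicodedata


def unicodeToAscii(s):
    return ''.join(c for c in unicodedata.normalize('NFD', s) if unicodedata.category(c) != 'Mn')


def normalizeString(s):
    s = unicodeToAscii(s.lower().strip())
    return re.sub(r"([.!?])", r" \1", s)


en = normalizeString("I'm happy.")  # hypothetical English sentence
zh = normalizeString("我很幸福。")    # Chinese full-width punctuation is left unchanged

print(en)                                            # i'm happy .
print(len(zh.split(' ')))                            # 1: no spaces in Chinese text
print(en.startswith("i m "), en.startswith("i'm "))  # False True
```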

```python
########################################################################################################################
"""(2) Build the network models"""
########################################################


class EncoderRNN(nn.Module):
    """Encoder: embedding + GRU, fed one source token per step"""

    def __init__(self, input_size, hidden_size):
        """input_size: size of the (Chinese) input vocabulary; hidden_size: embedding/hidden dimension"""
        super(EncoderRNN, self).__init__()
        self.hidden_size = hidden_size
        self.embedding = nn.Embedding(input_size, hidden_size)
        self.gru = nn.GRU(hidden_size, hidden_size)

    def forward(self, input, hidden):  # Forward pass for one token
        # Look up the word vector (nn.Embedding initializes its weights from N(0, 1))
        # and reshape to (seq_len=1, batch=1, hidden_size)
        output = self.embedding(input).view(1, 1, -1)
        output, hidden = self.gru(output, hidden)  # GRU recurrent layer
        return output, hidden

    def initHidden(self):
        return torch.zeros(1, 1, self.hidden_size, device=device)


class DecoderRNN(nn.Module):
    """Decoder without attention (defined for comparison; not used in the main script)"""

    def __init__(self, hidden_size, output_size):
        super(DecoderRNN, self).__init__()
        self.hidden_size = hidden_size
        self.embedding = nn.Embedding(output_size, hidden_size)
        self.gru = nn.GRU(hidden_size, hidden_size)
        self.out = nn.Linear(hidden_size, output_size)
        self.softmax = nn.LogSoftmax(dim=1)

    def forward(self, input, hidden):  # Forward pass for one token
        output = self.embedding(input).view(1, 1, -1)  # Word vector, shape (1, 1, hidden_size)
        output = F.relu(output)  # ReLU activation
        output, hidden = self.gru(output, hidden)  # GRU recurrent layer
        output = self.softmax(self.out(output[0]))  # Log-probabilities over the output vocabulary
        return output, hidden

    def initHidden(self):
        return torch.zeros(1, 1, self.hidden_size, device=device)


class AttnDecoderRNN(nn.Module):
    """Decoder with attention"""

    def __init__(self, hidden_size, output_size, dropout_p=0.1, max_length=20):
        """hidden_size: hidden dimension; output_size: size of the (English) output vocabulary;
        dropout_p: dropout rate; max_length: maximum sentence length"""
        super(AttnDecoderRNN, self).__init__()
        self.hidden_size = hidden_size
        self.output_size = output_size
        self.dropout_p = dropout_p
        self.max_length = max_length
        self.embedding = nn.Embedding(self.output_size, self.hidden_size)
        self.attn = nn.Linear(self.hidden_size * 2, self.max_length)
        self.attn_combine = nn.Linear(self.hidden_size * 2, self.hidden_size)
        self.dropout = nn.Dropout(self.dropout_p)
        self.gru = nn.GRU(self.hidden_size, self.hidden_size)
        self.out = nn.Linear(self.hidden_size, self.output_size)

    def forward(self, input, hidden, encoder_outputs):
        embedded = self.embedding(input).view(1, 1, -1)  # Word vector of the current input, (1, 1, hidden_size)
        embedded = self.dropout(embedded)  # Dropout: randomly zeroes elements during training
        # Attention weights over the max_length encoder positions (fully connected layer 1), computed
        # from the current input embedding and hidden state, not by comparing with each encoder output
        attn_weights = F.softmax(self.attn(torch.cat((embedded[0], hidden[0]), 1)), dim=1)  # (1, max_length)
        # Weighted sum of encoder outputs: (1, 1, max_length) x (1, max_length, hidden_size) -> (1, 1, hidden_size)
        attn_applied = torch.bmm(attn_weights.unsqueeze(0), encoder_outputs.unsqueeze(0))
        output = torch.cat((embedded[0], attn_applied[0]), 1)  # Concatenate input embedding and context vector
        output = self.attn_combine(output).unsqueeze(0)  # Project back to hidden_size (fully connected layer 2)
        output = F.relu(output)  # ReLU activation
        output, hidden = self.gru(output, hidden)  # GRU recurrent layer
        output = F.log_softmax(self.out(output[0]), dim=1)  # Log-probabilities over the vocabulary (layer 3)
        return output, hidden, attn_weights

    def initHidden(self):
        return torch.zeros(1, 1, self.hidden_size, device=device)
```
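One consequence of the AttnDecoderRNN design: `self.attn` produces `max_length` scores, so the softmax spreads weight over all `max_length` rows of `encoder_outputs`, including the all-zero rows past the end of a short input. Those rows add nothing to `attn_applied`, but they still take probability mass unless the learned scores push their weights toward zero. The plain-Python sketch below mimics the `torch.bmm` step for a single example (a weight row of length `max_length` times a `max_length x hidden_size` matrix); all numbers are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def apply_attention(weights, encoder_outputs):
    """Same arithmetic as torch.bmm(attn_weights.unsqueeze(0), encoder_outputs.unsqueeze(0)) for one example."""
    hidden_size = len(encoder_outputs[0])
    return [sum(w * row[h] for w, row in zip(weights, encoder_outputs)) for h in range(hidden_size)]


max_length, hidden_size = 5, 2
real_rows = [[1.0, 2.0], [3.0, 4.0]]  # hypothetical encoder outputs for a 2-token input
# Zero padding up to max_length, as torch.zeros(max_length, hidden_size) leaves it in train()
encoder_outputs = real_rows + [[0.0] * hidden_size for _ in range(max_length - len(real_rows))]

weights = softmax([0.0] * max_length)  # untrained, equal scores: each weight is 0.2
applied = apply_attention(weights, encoder_outputs)
mass_on_padding = sum(weights[len(real_rows):])
print(applied, mass_on_padding)  # about [0.8, 1.2] and 0.6, up to floating-point rounding
```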

########################################################################################################################

"""(3)开始训练"""

########################################################

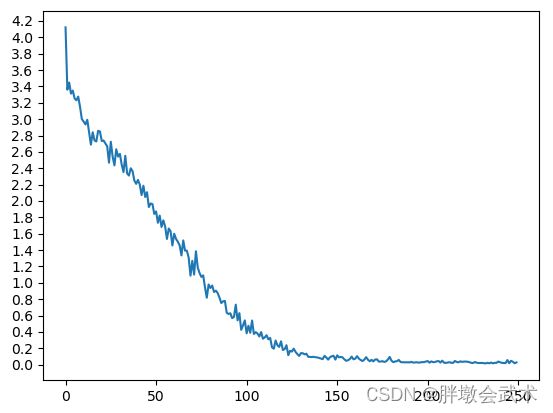

def trainIters(encoder, decoder, n_iters, print_every=1000, plot_every=100, learning_rate=0.01):

"""迭代训练"""

start = time.time()

plot_losses = []

print_loss_total = 0

plot_loss_total = 0

encoder_optimizer = optim.SGD(encoder.parameters(), lr=learning_rate) # 优化器SGD(编码器)

decoder_optimizer = optim.SGD(decoder.parameters(), lr=learning_rate) # 优化器SGD(解码器)

training_pairs = [tensorsFromPair(random.choice(pairs)) for i in range(n_iters)]

criterion = nn.NLLLoss() # NLL损失函数

for iter in range(1, n_iters + 1):

training_pair = training_pairs[iter - 1]

input_tensor = training_pair[0] # 输入

target_tensor = training_pair[1] # 输出

# 调用训练函数

loss = train(input_tensor, target_tensor, encoder, decoder, encoder_optimizer, decoder_optimizer, criterion)

print_loss_total += loss

plot_loss_total += loss

if iter % print_every == 0: # 每隔print_every个批次,打印loss值

print_loss_avg = print_loss_total / print_every

print_loss_total = 0

print('Time: %s; Iter: %d; Completed: %d %%; avg_loss: %.4f'

% (timeSince(start, iter / n_iters), iter, iter / n_iters * 100, print_loss_avg))

if iter % plot_every == 0: # 每隔plot_every个批次,绘制loss图

plot_loss_avg = plot_loss_total / plot_every

plot_losses.append(plot_loss_avg)

plot_loss_total = 0

showPlot(plot_losses) # 绘制loss图

def train(input_tensor, target_tensor, encoder, decoder, encoder_optimizer, decoder_optimizer, criterion, max_length=20):

"""训练函数"""

teacher_forcing_ratio = 0.5

encoder_hidden = encoder.initHidden() # 参数初始化

encoder_optimizer.zero_grad() # 梯度清零(编码器)

decoder_optimizer.zero_grad() # 梯度清零(解码器)

input_length = input_tensor.size(0) # 获取输入句子长度

target_length = target_tensor.size(0) # 获取目标句子长度

encoder_outputs = torch.zeros(max_length, encoder.hidden_size, device=device)

for ei in range(input_length):

encoder_output, encoder_hidden = encoder(input_tensor[ei], encoder_hidden) # Encoder编码器

encoder_outputs[ei] = encoder_output[0, 0]

decoder_input = torch.tensor([[SOS_token]], device=device)

decoder_hidden = encoder_hidden

use_teacher_forcing = True if random.random() < teacher_forcing_ratio else False

loss = 0

if use_teacher_forcing:

# Teacher forcing: Feed the target as the next input

for di in range(target_length):

decoder_output, decoder_hidden, decoder_attention = decoder(

decoder_input, decoder_hidden, encoder_outputs)

loss += criterion(decoder_output, target_tensor[di])

decoder_input = target_tensor[di] # Teacher forcing

else:

# Without teacher forcing: use its own predictions as the next input

for di in range(target_length):

decoder_output, decoder_hidden, decoder_attention = decoder(

decoder_input, decoder_hidden, encoder_outputs)

topv, topi = decoder_output.topk(1)

decoder_input = topi.squeeze().detach() # detach from history as input

loss += criterion(decoder_output, target_tensor[di])

if decoder_input.item() == EOS_token:

break

loss.backward()

encoder_optimizer.step()

decoder_optimizer.step()

return loss.item() / target_length

SOS_token = 0 # 中文词典

EOS_token = 1 # 英文词典

def indexesFromSentence(lang, sentence):

"""将【英文句子】拆分为词,并获取每个词的索引号(0:"SOS", 1:"EOS")"""

return [lang.word2index[word] for word in sentence.split(' ')]

def indexesFromSentence_cn(lang, sentence):

"""将【中文句子】拆分为词,并获取每个词的索引号(0:"SOS", 1:"EOS")"""

return [lang.word2index[word] for word in list(jieba.cut(sentence))]

def tensorFromSentence(lang, sentence):

indexes = indexesFromSentence(lang, sentence)

indexes.append(EOS_token)

return torch.tensor(indexes, dtype=torch.long, device=device).view(-1, 1)

def tensorFromSentence_cn(lang, sentence):

indexes = indexesFromSentence_cn(lang, sentence)

indexes.append(EOS_token)

return torch.tensor(indexes, dtype=torch.long, device=device).view(-1, 1)

def tensorsFromPair(pair):

input_tensor = tensorFromSentence_cn(input_lang, pair[0]) # 输入语句转化为Tensor

target_tensor = tensorFromSentence(output_lang, pair[1]) # 输出语句转化为Tensor

return (input_tensor, target_tensor)

def timeSince(since, percent):

"""未训练前的时间,当前批次在所有训练批次中的占比"""

now = time.time() # 获取当前时间

s = now - since # 计算从开始到当前批次数据的训练总耗时

es = s / (percent) # 当前批次总耗时/占比 = 项目总耗时

rs = es - s

return '%s / %s' % (asMinutes(s), asMinutes(es))

def asMinutes(s):

"""计算训练所耗时间(分/秒)"""

m = math.floor(s / 60) # 计算分钟:向上取整

s -= m * 60 # 计算秒

return '%dm %ds' % (m, s)

def showPlot(points):

"""画图"""

fig = plt.figure() # 新建画板

ax = fig.add_subplot(111) # 添加Axes

# this locator puts ticks at regular intervals

loc = ticker.MultipleLocator(base=0.2)

ax.yaxis.set_major_locator(loc)

plt.plot(points)
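The teacher-forcing branch in `train()` only changes what the decoder receives as its next input. The sketch below isolates that choice from the network: `predict_next` is a hypothetical stand-in for one decoder step followed by `topk(1)` (it simply looks up a fixed table), and the token ids are made up, with 0 and 1 playing the roles of `SOS_token` and `EOS_token`.

```python
SOS, EOS = 0, 1
TABLE = {SOS: 5, 5: EOS, 6: 7, 7: EOS}  # hypothetical "model": fixed next-token predictions


def predict_next(token):
    """Hypothetical stand-in for one decoder step + topk(1)."""
    return TABLE.get(token, EOS)


def decoder_inputs(target, use_teacher_forcing):
    """Return the sequence of inputs the decoder would receive while producing `target`."""
    inputs = []
    current = SOS  # decoding always starts from SOS
    for gold in target:
        inputs.append(current)
        predicted = predict_next(current)
        if use_teacher_forcing:
            current = gold  # feed the ground-truth token
        else:
            current = predicted  # feed the model's own prediction
            if current == EOS:
                break  # train() also stops early once EOS is predicted
    return inputs


target = [5, 6, 7, EOS]
print(decoder_inputs(target, True))   # [0, 5, 6, 7]
print(decoder_inputs(target, False))  # [0, 5]
```

With teacher forcing the decoder always sees the correct previous word; without it, one early mistake (here predicting EOS too soon) ends the sequence, which is why `train()` mixes the two modes at a 50% ratio.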

```python
########################################################################################################################
"""(4) Evaluate the model"""
########################################################


def evaluateRandomly(encoder, decoder, n=10):
    """Translate n randomly sampled sentence pairs"""
    for i in range(n):
        pair = random.choice(pairs)  # Random sample
        print('>', pair[0])  # Input
        print('=', pair[1])  # Target
        output_words, attentions = evaluate(encoder, decoder, pair[0])
        output_sentence = ' '.join(output_words)
        print('<', output_sentence)  # Model output
        print('')


def evaluateAndShowAttention(input_sentence):
    """Translate one sentence and visualize its attention"""
    output_words, attentions = evaluate(encoder1, attn_decoder1, input_sentence)  # Evaluate the model
    print('input =', input_sentence)  # Input sentence
    print('output =', ' '.join(output_words))  # Output sentence
    showAttention(input_sentence, output_words, attentions)  # Visualize (blocks until the window is closed)


def evaluate(encoder, decoder, sentence, max_length=20):
    """Greedy decoding of one Chinese sentence; returns the output words and the attention matrix"""
    with torch.no_grad():  # No computation graph is built during evaluation
        input_tensor = tensorFromSentence_cn(input_lang, sentence)
        input_length = input_tensor.size()[0]
        encoder_hidden = encoder.initHidden()
        encoder_outputs = torch.zeros(max_length, encoder.hidden_size, device=device)
        for ei in range(input_length):
            encoder_output, encoder_hidden = encoder(input_tensor[ei], encoder_hidden)  # Encoder
            encoder_outputs[ei] += encoder_output[0, 0]
        decoder_input = torch.tensor([[SOS_token]], device=device)  # SOS
        decoder_hidden = encoder_hidden
        decoded_words = []
        decoder_attentions = torch.zeros(max_length, max_length)
        for di in range(max_length):
            decoder_output, decoder_hidden, decoder_attention = decoder(decoder_input, decoder_hidden, encoder_outputs)
            decoder_attentions[di] = decoder_attention.data
            topv, topi = decoder_output.data.topk(1)  # Greedy: keep the most likely word
            if topi.item() == EOS_token:
                decoded_words.append('<EOS>')
                break
            else:
                decoded_words.append(output_lang.index2word[topi.item()])
            decoder_input = topi.squeeze().detach()
        return decoded_words, decoder_attentions[:di + 1]


def showAttention(input_sentence, output_words, attentions):
    """Visualize attention: rows are output words, columns are input words"""
    input_words = list(jieba.cut(input_sentence)) + ['<EOS>']  # Same segmentation as the input tensor
    fig = plt.figure()  # New figure
    ax = fig.add_subplot(111)  # Add Axes
    cax = ax.matshow(attentions.numpy(), cmap='bone')  # Show the matrix as an image
    fig.colorbar(cax)  # Show the colorbar
    # One fixed tick per cell; pass fontproperties=myfont (see the font setup at the top) for Chinese labels
    ax.set_xticks(range(len(input_words)))
    ax.set_xticklabels(input_words, rotation=90, fontproperties=None)
    ax.set_yticks(range(len(output_words)))
    ax.set_yticklabels(output_words)
    plt.show()
```
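`evaluate()` decodes greedily: at each step it keeps the highest-scoring word, appends an end marker and stops when the EOS index wins, and returns only the attention rows it actually filled (`decoder_attentions[:di + 1]`), so the plotted matrix has one row per output word. A plain-Python sketch of that bookkeeping, with hypothetical per-step scores, uniform attention rows and a made-up `index2word` table in place of the trained decoder:

```python
def greedy_decode(step_scores, step_attention, index2word, eos_index=1, max_length=20):
    """step_scores[t]: scores over the output vocabulary at step t; step_attention[t]: attention row at step t."""
    words, attention_rows = [], []
    for di in range(min(max_length, len(step_scores))):
        attention_rows.append(step_attention[di])  # record this step's attention row
        scores = step_scores[di]
        best = max(range(len(scores)), key=scores.__getitem__)  # index of the top score, like topk(1)
        if best == eos_index:
            words.append('<EOS>')
            break
        words.append(index2word[best])
    return words, attention_rows  # one attention row per output word


index2word = {0: "SOS", 1: "EOS", 2: "i", 3: "am", 4: "home", 5: "."}
scores = [
    [0.0, 0.1, 0.9, 0.0, 0.0, 0.0],  # -> "i"
    [0.0, 0.1, 0.0, 0.8, 0.1, 0.0],  # -> "am"
    [0.0, 0.2, 0.0, 0.0, 0.7, 0.1],  # -> "home"
    [0.0, 0.3, 0.0, 0.0, 0.0, 0.6],  # -> "."
    [0.0, 0.9, 0.0, 0.0, 0.0, 0.1],  # -> EOS, decoding stops here
    [0.0, 0.0, 0.9, 0.0, 0.0, 0.0],  # never reached
]
attention = [[0.25, 0.25, 0.25, 0.25] for _ in scores]
words, rows = greedy_decode(scores, attention, index2word)
print(' '.join(words), len(rows))  # i am home . <EOS> 5
```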

```python
########################################################################################################################
if __name__ == '__main__':
    ###########################################################
    # (1) Read and preprocess the data
    txt_path = r'E:\Pytorch\eng-cmn\%s-%s.txt'  # Path template, filled in as English-Chinese.txt
    input_lang, output_lang, pairs = prepareData(txt_path, lang1='English', lang2='Chinese', reverse=True)
    print(random.choice(pairs))  # Print a random Chinese-English pair
    ###########################################################
    # (2) Train
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    encoder1 = EncoderRNN(input_size=input_lang.n_words, hidden_size=256).to(device)  # Encoder
    attn_decoder1 = AttnDecoderRNN(hidden_size=256, output_size=output_lang.n_words,
                                   dropout_p=0.1, max_length=20).to(device)  # Decoder with attention
    trainIters(encoder=encoder1, decoder=attn_decoder1, n_iters=25000, print_every=500, plot_every=100)  # Training loop
    encoder1.eval()  # Switch to evaluation mode (disables dropout)
    attn_decoder1.eval()
    ###########################################################
    # (3) Evaluate on random sentences
    evaluateRandomly(encoder1, attn_decoder1, n=5)
    ###########################################################
    # (4) Evaluate and visualize attention
    evaluateAndShowAttention("我很幸福。")
    evaluateAndShowAttention("我们在严肃地谈论你的未来。")
    evaluateAndShowAttention("我在家。")
```