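[Neural Networks] (12) MobileNetV2: code reproduction and network walkthrough, with complete TensorFlow code

Hello everyone. Today I would like to share how to reproduce MobileNetV2, Google's lightweight neural network, in TensorFlow.

In the previous post I introduced MobileNetV1 and discussed depthwise separable convolution; if you are interested, see https://blog.csdn.net/dgvv4/article/details/123415708. This post builds on the same idea. Let's get started.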

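1. Limitations of MobileNetV1

In the previous post we saw that depthwise separable convolution significantly reduces the number of parameters and the amount of computation, and uses less memory.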
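As a quick recap of that saving, the sketch below counts the weights of one standard convolution and of its depthwise separable replacement. The layer sizes (3*3 kernel, 32 input channels, 64 output channels) are illustrative values chosen here, not taken from the paper, and biases are ignored.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a standard convolution (no bias)."""
    return kernel_size * kernel_size * in_channels * out_channels


def separable_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a depthwise convolution followed by a 1x1 pointwise convolution (no bias)."""
    depthwise = kernel_size * kernel_size * in_channels  # one k x k filter per input channel
    pointwise = in_channels * out_channels               # the 1x1 convolution mixes the channels
    return depthwise + pointwise


if __name__ == "__main__":
    std = standard_conv_params(3, 32, 64)
    sep = separable_conv_params(3, 32, 64)
    print("standard:", std, "separable:", sep, "ratio:", round(std / sep, 2))
```

For these sizes the separable version needs roughly one eighth of the weights; the exact ratio depends on the kernel size and the number of output channels.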

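However, MobileNetV1 was developed fairly early and does not incorporate many later techniques; for example, it has no residual connections.

In addition, after training, many of the depthwise convolution kernels end up with weights of 0. There are three reasons: (1) the number of kernel weights and channels is too small; each depthwise kernel has only one channel, which is too thin; (2) the ReLU activation sets every input below 0 to 0, so the tensor in the forward pass is 0, the gradient in the backward pass is also 0, and the weight stays at 0 forever (the gradient vanishes); (3) low-precision floating point: when a number is stored in only 16 or 8 bits, the representable range is very limited, so the trained weights end up as very small numbers.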

2. The MobileNetV2 network

2.1 Improved depthwise separable convolution

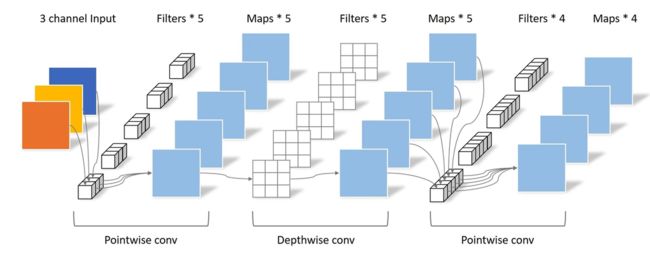

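MobileNetV1 applies a 3*3 depthwise convolution followed by a 1*1 pointwise convolution, with ReLU6 activations throughout.

MobileNetV2 first uses a 1*1 convolution to expand the channels, applies a 3*3 depthwise convolution in the high-dimensional space, and then uses a 1*1 convolution to reduce the channels, with a linear activation on the reduction step. When the stride is 1, a residual connection adds the input to the output; when the stride is 2, no residual connection is used, because the input and output feature maps then differ in size.

Compare the MobileNetV2 block with the ResNet residual block. ResNet first uses a 1*1 convolution to reduce the channels, applies a standard convolution in the reduced space, and then uses a 1*1 convolution to expand the channels; the residual connection joins the two high-dimensional ends. MobileNetV2 does the opposite: the shortcut joins the two low-dimensional ends, which is why the block is called an inverted residual.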

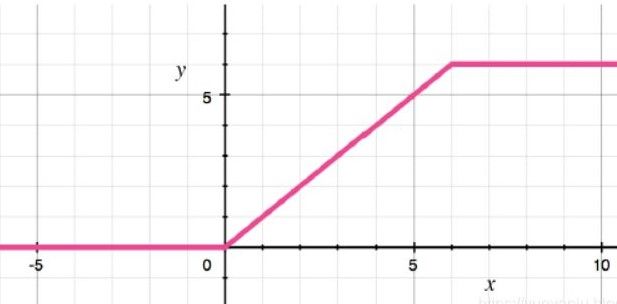
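The contrast between the two blocks can be sketched as the sequence of channel counts a tensor passes through. The reduction factor of 4 for the ResNet bottleneck and the sample channel counts (256 and 24) are assumptions picked for illustration; the expansion factor of 6 is the value used by most MobileNetV2 stages.

```python
def resnet_bottleneck_widths(channels, reduction=4):
    """Channel counts through a ResNet bottleneck: wide -> narrow -> narrow -> wide."""
    narrow = channels // reduction
    return [channels, narrow, narrow, channels]


def inverted_residual_widths(channels, expansion=6):
    """Channel counts through a MobileNetV2 block: narrow -> wide -> wide -> narrow."""
    wide = channels * expansion
    return [channels, wide, wide, channels]


if __name__ == "__main__":
    print("ResNet bottleneck :", resnet_bottleneck_widths(256))
    print("Inverted residual :", inverted_residual_widths(24))
```

In both cases the shortcut connects the first and last entries of the list; only in the inverted block are those the narrow ends.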

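2.2 The ReLU6 activation function

ReLU6 clips the output at 6 when x>6, so the range of ReLU6 is [0,6]. The motivation is low-precision arithmetic: when a number is stored in only 8 or 16 bits, the representable range is small, whereas the ordinary ReLU has range [0,∞), and very large values cannot be represented with so few bits. ReLU6 therefore behaves better under low-precision floating point.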
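A minimal sketch of the idea in plain Python. The uniform 8-bit quantizer is an assumption made here purely for illustration (real deployments may quantize differently); it shows that a bounded activation range gives a finer step for the same number of bits.

```python
def relu6(x):
    """ReLU6: 0 for negative inputs, identity on [0, 6], clipped to 6 above that."""
    return min(max(x, 0.0), 6.0)


def quantization_step(max_value, bits=8):
    """Step size when [0, max_value] is split uniformly into 2**bits - 1 intervals."""
    return max_value / (2 ** bits - 1)


def quantize_uniform(x, max_value, bits=8):
    """Round x to the nearest representable level in [0, max_value] (illustrative scheme)."""
    step = quantization_step(max_value, bits)
    return round(x / step) * step


if __name__ == "__main__":
    for value in (-3.0, 2.5, 10.0):
        print(value, "->", relu6(value))
    # Same 8 bits, different activation ranges: the bounded range has the smaller step
    print("step for [0, 6] :", quantization_step(6.0))
    print("step for [0, 60]:", quantization_step(60.0))
```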

2.3 Overall flow

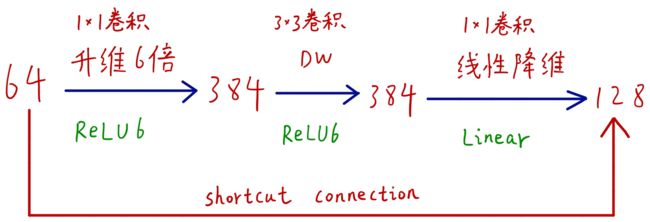

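As shown in the figure, the input image first goes through a 1*1 pointwise convolution that expands the channels (increases the channel count), then a 3*3 depthwise convolution, and finally a 1*1 pointwise convolution that reduces the channels, with a linear activation. The number of 1*1 kernels controls the number of channels in the output feature map.

Here is an example:

The input feature map has shape 5*5*64. A 1*1*(64*6) pointwise convolution first expands the channels by a factor of 6, with a ReLU6 activation. In the 384-channel space, a 3*3*1 kernel per channel performs the depthwise convolution and produces a feature map of shape 3*3*384. A 1*1*128 pointwise convolution then reduces the channels with a linear activation, giving an output feature map of shape 3*3*128. A residual connection between input and output is added only when their shapes match, which is not the case for these particular sizes.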
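The arithmetic of this example can be traced step by step. The sketch below assumes the depthwise convolution uses stride 1 and no padding (which is what the shrink from 5*5 to 3*3 implies) and counts convolution weights only, ignoring biases and batch normalization; the function names are made up for this illustration.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution with the given kernel, stride and padding."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_inverted_block(height, width, in_channels, expansion, out_channels):
    """Return (step name, output shape, weight count) for the three convolutions."""
    expanded = in_channels * expansion
    # Assumption: 3x3 depthwise kernel, stride 1, no padding
    out_h = conv_output_size(height, kernel=3)
    out_w = conv_output_size(width, kernel=3)
    return [
        ("1x1 expand, ReLU6", (height, width, expanded), in_channels * expanded),
        ("3x3 depthwise, ReLU6", (out_h, out_w, expanded), 3 * 3 * expanded),
        ("1x1 project, linear", (out_h, out_w, out_channels), expanded * out_channels),
    ]


if __name__ == "__main__":
    for name, shape, weights in trace_inverted_block(5, 5, 64, 6, 128):
        print(f"{name:22s} shape={shape} weights={weights}")
```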

3. Code reproduction

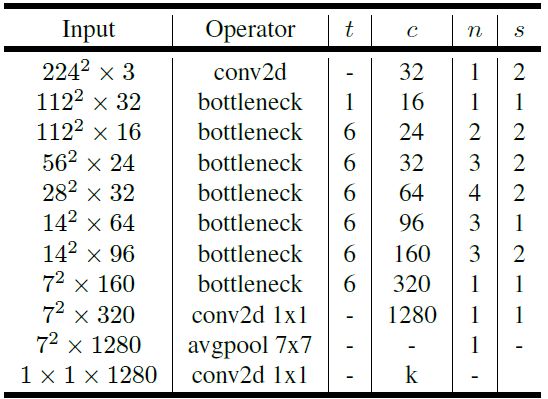

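The MobileNetV2 structure given in the paper is shown in the figure. Conv2d is a standard convolution; bottleneck is the depthwise separable convolution improved with a residual connection (the inverted residual block); avgpool is global average pooling. Column t is the expansion factor of the 1*1 convolution (how many times the channel count is multiplied); column c is the number of output channels after the 1*1 reduction; column n is the number of times the layer is repeated; column s is the stride.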
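The (t, c, n, s) columns can also be written down as data and expanded into one entry per block. The rows below follow Table 2 of the MobileNetV2 paper; the helper names are made up for this sketch, and it assumes the usual convention that only the first block of each stage uses stride s while the repeats use stride 1.

```python
# (t, c, n, s) rows of the bottleneck stages, as listed in Table 2 of the MobileNetV2 paper
BOTTLENECK_CONFIG = [
    (1, 16, 1, 1),
    (6, 24, 2, 2),
    (6, 32, 3, 2),
    (6, 64, 4, 2),
    (6, 96, 3, 1),
    (6, 160, 3, 2),
    (6, 320, 1, 1),
]


def expand_config(config):
    """Turn (t, c, n, s) rows into a flat list of (expansion, channels, stride) per block."""
    blocks = []
    for t, c, n, s in config:
        for i in range(n):
            stride = s if i == 0 else 1  # only the first block of a stage downsamples
            blocks.append((t, c, stride))
    return blocks


def final_feature_size(input_size, blocks, stem_stride=2):
    """Spatial size after the stride-2 stem and all blocks (assumes sizes divide evenly)."""
    size = input_size // stem_stride
    for _, _, stride in blocks:
        size //= stride
    return size


if __name__ == "__main__":
    blocks = expand_config(BOTTLENECK_CONFIG)
    print(len(blocks), "bottleneck blocks")
    print("final feature map size for a 224 input:", final_feature_size(224, blocks))
```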

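3.1 Building the residual block

The depthwise separable convolution block with a residual connection has four parts, marked ① to ④ in part (1) of the code below. ① is the 1*1 convolution that expands the channel dimension; block_id decides whether the expansion is needed. As the structure figure above shows, the first bottleneck in the network needs no expansion, so it is given block_id=0 and goes straight to the depthwise convolution. ② is the depthwise convolution. ③ is the 1*1 convolution that reduces the channels. ④ is the shortcut that joins the block's input and output: if the stride is 1 and the input and output feature maps have the same shape, the residual connection is used and the two feature maps are added element-wise.

If you have questions about depthwise separable convolution, see my previous post: [Neural Networks] (11) Lightweight network MobileNetV1: code reproduction and walkthrough, with complete TensorFlow code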

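from tensorflow import keras
from tensorflow.keras import layers

# Helper: round a filter count scaled by the width multiplier so that it is divisible by 8
def make_divisible(value, divisor):
    # Multiple of divisor nearest to value (at least divisor). E.g. value=30 gives ((30+4)//8)*8 = 32
    new_value = max(divisor, int(value + divisor/2) // divisor * divisor)
    # If the rounded result is below 90% of the original value, add one more multiple
    if new_value < 0.9 * value:
        new_value += divisor
    # Return the new filter count
    return new_value

#(1) Depthwise separable convolution block with a residual connection
# expansion is the channel expansion factor, alpha scales the number of filters,
# block_id controls whether the expansion step runs (0 skips it)
def inverted_res_block(input_tensor, expansion, stride, alpha, filters, block_id):
    x = input_tensor  # start from the input, so x is defined even when step ① is skipped

    # Scale the pointwise filter count by alpha; int() truncates in case alpha is fractional
    points_filters = int(filters*alpha)

    # The channel counts in the architecture table (32, 16, 24, 64, ...) are all multiples of 8,
    # so keep the scaled pointwise filter count a multiple of 8 as well
    points_filters = make_divisible(points_filters, 8)

    # keras.backend.int_shape returns the tensor shape; only the last (channel) dimension is needed
    in_channels = keras.backend.int_shape(input_tensor)[-1]

    # ① Expand the channel dimension
    if block_id:
        # 1*1 convolution increases the number of channels
        x = layers.Conv2D(expansion * in_channels,  # number of filters: expansion times the input channels
                          kernel_size = (1,1),      # 1*1 kernel
                          strides = (1,1),          # stride 1
                          padding = 'same',         # feature map size unchanged
                          use_bias = False)(input_tensor)  # BN follows, so no bias is needed
        x = layers.BatchNormalization()(x)  # batch normalization
        x = layers.ReLU(6.0)(x)             # ReLU6 activation

    # ② Depthwise convolution
    x = layers.DepthwiseConv2D(kernel_size=(3,3),    # 3*3 kernel
                               strides=stride,       # stride
                               use_bias=False,       # BN follows, so no bias is needed
                               padding = 'same')(x)  # stride 1 keeps the size; stride 2 halves it
    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.ReLU(6.0)(x)             # ReLU6 activation

    # ③ 1*1 convolution reduces the channels (compresses the features); no ReLU, so the features are not destroyed
    x = layers.Conv2D(points_filters,       # number of filters, i.e. output channels after the reduction
                      kernel_size = (1,1),  # 1*1 kernel
                      strides = (1,1),      # stride 1
                      padding = 'same',     # feature map size unchanged
                      use_bias = False)(x)  # BN follows, so no bias is needed
    x = layers.BatchNormalization()(x)  # batch normalization

    # ④ Residual connection between input and output
    # Used only when the stride is 1 and the input and output feature maps have the same shape
    if stride == 1 and in_channels == points_filters:
        # Element-wise addition of the input and output tensors; the shapes must match exactly
        x = layers.Add()([input_tensor, x])

    # With stride 2 (or a different channel count) there is no shortcut
    return x

3.2 Complete MobileNetV2 network code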

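The core of the network is the depthwise separable convolution improved with a residual connection, the inverted_res_block() module explained above. The network also involves two hyperparameters: alpha, the width hyperparameter, which controls the number of filters; and the resolution hyperparameter (depth_multiplier), which controls the input image size and therefore the size of the intermediate feature maps. In the code below only alpha is an explicit argument; the resolution is fixed by input_shape. For details see my previous post on MobileNetV1 (linked at the top of this post); I will not repeat the explanation here.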
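To see what alpha does to the channel counts, the sketch below applies the same rounding helper used in this post to the output channels of the stem and of each bottleneck stage. Applying int(c * alpha) to every entry mirrors what inverted_res_block does and is a simplification for the stem; the list name BASE_CHANNELS and the function scaled_channels are made up for this illustration.

```python
def make_divisible(value, divisor=8):
    """Round value to a multiple of divisor without dropping below 90% of value."""
    new_value = max(divisor, int(value + divisor / 2) // divisor * divisor)
    if new_value < 0.9 * value:
        new_value += divisor
    return new_value


# Output channels of the stem (32) and of the seven bottleneck stages at alpha = 1.0
BASE_CHANNELS = [32, 16, 24, 32, 64, 96, 160, 320]


def scaled_channels(alpha):
    """Channel counts after applying the width multiplier and rounding to a multiple of 8."""
    return [make_divisible(int(c * alpha)) for c in BASE_CHANNELS]


if __name__ == "__main__":
    for alpha in (0.5, 0.75, 1.0):
        print(alpha, scaled_channels(alpha))
```

At alpha=0.75 the 24-channel stage scales to 18, which would round down to 16; since 16 is below 90% of 18, the helper bumps it back up to 24.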

The rest is a plain stack of basic layers, and every line is commented. If you have questions, leave them in the comments.

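import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, Model

# Helper: round a filter count scaled by the width multiplier so that it is divisible by 8
def make_divisible(value, divisor):
    # Multiple of divisor nearest to value (at least divisor). E.g. value=30 gives ((30+4)//8)*8 = 32
    new_value = max(divisor, int(value + divisor/2) // divisor * divisor)
    # If the rounded result is below 90% of the original value, add one more multiple
    if new_value < 0.9 * value:
        new_value += divisor
    # Return the new filter count
    return new_value

#(1) Depthwise separable convolution block with a residual connection
# expansion is the channel expansion factor, alpha scales the number of filters,
# block_id controls whether the expansion step runs (0 skips it)
def inverted_res_block(input_tensor, expansion, stride, alpha, filters, block_id):
    x = input_tensor  # start from the input, so x is defined even when step ① is skipped

    # Scale the pointwise filter count by alpha; int() truncates in case alpha is fractional
    points_filters = int(filters*alpha)

    # The channel counts in the architecture table (32, 16, 24, 64, ...) are all multiples of 8,
    # so keep the scaled pointwise filter count a multiple of 8 as well
    points_filters = make_divisible(points_filters, 8)

    # keras.backend.int_shape returns the tensor shape; only the last (channel) dimension is needed
    in_channels = keras.backend.int_shape(input_tensor)[-1]

    # ① Expand the channel dimension
    if block_id:
        # 1*1 convolution increases the number of channels
        x = layers.Conv2D(expansion * in_channels,  # number of filters: expansion times the input channels
                          kernel_size = (1,1),      # 1*1 kernel
                          strides = (1,1),          # stride 1
                          padding = 'same',         # feature map size unchanged
                          use_bias = False)(input_tensor)  # BN follows, so no bias is needed
        x = layers.BatchNormalization()(x)  # batch normalization
        x = layers.ReLU(6.0)(x)             # ReLU6 activation

    # ② Depthwise convolution
    x = layers.DepthwiseConv2D(kernel_size=(3,3),    # 3*3 kernel
                               strides=stride,       # stride
                               use_bias=False,       # BN follows, so no bias is needed
                               padding = 'same')(x)  # stride 1 keeps the size; stride 2 halves it
    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.ReLU(6.0)(x)             # ReLU6 activation

    # ③ 1*1 convolution reduces the channels (compresses the features); no ReLU, so the features are not destroyed
    x = layers.Conv2D(points_filters,       # number of filters, i.e. output channels after the reduction
                      kernel_size = (1,1),  # 1*1 kernel
                      strides = (1,1),      # stride 1
                      padding = 'same',     # feature map size unchanged
                      use_bias = False)(x)  # BN follows, so no bias is needed
    x = layers.BatchNormalization()(x)  # batch normalization

    # ④ Residual connection between input and output
    # Used only when the stride is 1 and the input and output feature maps have the same shape
    if stride == 1 and in_channels == points_filters:
        # Element-wise addition of the input and output tensors; the shapes must match exactly
        x = layers.Add()([input_tensor, x])

    # With stride 2 (or a different channel count) there is no shortcut
    return x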

#(2) Network structure
# classes: number of classes; input_shape: input image shape; alpha: width multiplier, default 1.0
def MobileNetV2(classes, input_shape, alpha=1.0):
    # Restrict the width multiplier to the supported values
    if alpha not in [0.5, 0.75, 1.0, 1.3]:
        raise ValueError('alpha should use 0.5, 0.75, 1.0, 1.3')

    # Scale the filter count of the first convolution (32*alpha) and make it divisible by 8
    first_conv_filters = make_divisible(32*alpha, 8)
    # Following the paper, the last 1*1 convolution keeps 1280 filters for alpha <= 1
    # and is widened only for alpha > 1; the Reshape further down must use the same number
    last_conv_filters = make_divisible(1280*alpha, 8) if alpha > 1.0 else 1280

    # Network input
    inputs = keras.Input(shape=input_shape)

    # [224,224,3]==>[112,112,32]
    x = layers.Conv2D(first_conv_filters,        # number of filters
                      kernel_size = (3,3),       # 3*3 kernel
                      strides = (2,2),           # stride 2 shrinks the feature map
                      padding = 'same',          # with stride 2 the size is halved
                      use_bias = False)(inputs)  # BN follows, so no bias; must be the boolean False (the string 'False' is truthy and keeps the bias)
    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.ReLU(6.0)(x)             # ReLU6 activation

    # [112,112,32]==>[112,112,16]
    # The first block has expansion=1, so no channel expansion is needed and step ① is skipped with block_id=0
    x = inverted_res_block(x, expansion=1, stride=1, alpha=alpha, filters=16, block_id=0)

    # [112,112,16]==>[56,56,24]
    x = inverted_res_block(x, expansion=6, stride=2, alpha=alpha, filters=24, block_id=1)
    # [56,56,24]==>[56,56,24]
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=24, block_id=1)

    # [56,56,24]==>[28,28,32]
    x = inverted_res_block(x, expansion=6, stride=2, alpha=alpha, filters=32, block_id=1)
    # [28,28,32]==>[28,28,32]
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=32, block_id=1)
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=32, block_id=1)

    # [28,28,32]==>[14,14,64]
    x = inverted_res_block(x, expansion=6, stride=2, alpha=alpha, filters=64, block_id=1)
    # [14,14,64]==>[14,14,64]
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=64, block_id=1)
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=64, block_id=1)
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=64, block_id=1)

    # [14,14,64]==>[14,14,96]
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=96, block_id=1)
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=96, block_id=1)
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=96, block_id=1)

    # [14,14,96]==>[7,7,160]
    x = inverted_res_block(x, expansion=6, stride=2, alpha=alpha, filters=160, block_id=1)
    # [7,7,160]==>[7,7,160]
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=160, block_id=1)
    x = inverted_res_block(x, expansion=6, stride=1, alpha=alpha, filters=160, block_id=1)

    # [7,7,160]==>[7,7,320]
    # Note: Table 2 of the paper lists t=6 for this stage; expansion=1 is the value behind the summary in section 3.3
    x = inverted_res_block(x, expansion=1, stride=1, alpha=alpha, filters=320, block_id=1)

    # [7,7,320]==>[7,7,1280]
    x = layers.Conv2D(filters=last_conv_filters, kernel_size=(1,1), strides=1, padding='same', use_bias=False)(x)  # standard convolution
    x = layers.BatchNormalization()(x)  # batch normalization
    x = layers.ReLU(6.0)(x)             # ReLU6 activation

    # [7,7,1280]==>[None,1280]
    x = layers.GlobalAveragePooling2D()(x)  # global average pooling over the spatial dimensions

    # [None,1280]==>[1,1,1280]
    # The channel count must equal the filter count of the last convolution
    shape = (1,1,last_conv_filters)
    # Reshape the pooled features
    x = layers.Reshape(target_shape=shape)(x)

    # Dropout randomly drops units to reduce overfitting
    x = layers.Dropout(rate=1e-3)(x)

    # [1,1,1280]==>[1,1,1000]
    # 1*1 convolution maps the features to the number of classes
    x = layers.Conv2D(classes, kernel_size=(1,1), padding='same')(x)
    # Softmax turns the outputs into class probabilities
    x = layers.Activation('softmax')(x)

    # [1,1,1000]==>[None,1000]
    # Flatten the probabilities to one vector per sample
    x = layers.Reshape(target_shape=(classes,))(x)

    # Build the model
    model = Model(inputs, x)
    return model  # return the network model

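if __name__ == '__main__':
    # Build the network model
    model = MobileNetV2(classes=1000,             # 1000 classes
                        input_shape=[224,224,3],  # shape of the network input
                        alpha=1.0)                # width multiplier controlling the number of filters

    # Print the model structure
    model.summary()

3.3 Inspecting the network structure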

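model.summary() prints the network structure. MobileNetV2 has only about 3 million parameters, compared with about 4 million for MobileNetV1, so the model is even more lightweight. In the listing, the 896 parameters of the first convolution are 3*3*3*32 = 864 weights plus 32 biases, i.e. the listing was produced with a bias term in that layer.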
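The per-layer numbers in the listing can be checked by hand. The sketch below recomputes the Param # column for the second bottleneck (16 input channels, expansion 6, 24 output channels), assuming bias-free convolutions and four values per channel in each BatchNormalization layer (gamma, beta, moving mean, moving variance); the function names are made up for this illustration.

```python
def pointwise_params(in_channels, out_channels):
    """Weights of a 1x1 convolution without bias."""
    return in_channels * out_channels


def depthwise_params(channels, kernel=3):
    """Weights of a depthwise convolution without bias: one kernel per channel."""
    return kernel * kernel * channels


def batchnorm_params(channels):
    """gamma, beta, moving mean and moving variance for each channel."""
    return 4 * channels


def block_params(in_channels, expansion, out_channels):
    """Total parameters of one inverted residual block that includes the expansion step."""
    expanded = in_channels * expansion
    return (pointwise_params(in_channels, expanded) + batchnorm_params(expanded)
            + depthwise_params(expanded) + batchnorm_params(expanded)
            + pointwise_params(expanded, out_channels) + batchnorm_params(out_channels))


if __name__ == "__main__":
    print("conv2d_2            :", pointwise_params(16, 96))  # listed as 1536
    print("depthwise_conv2d_1  :", depthwise_params(96))      # listed as 864
    print("conv2d_3            :", pointwise_params(96, 24))  # listed as 2304
    print("whole block         :", block_params(16, 6, 24))
```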

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 224, 224, 3) 0

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 112, 112, 32) 896 input_1[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 112, 112, 32) 128 conv2d[0][0]

__________________________________________________________________________________________________

re_lu (ReLU) (None, 112, 112, 32) 0 batch_normalization[0][0]

__________________________________________________________________________________________________

depthwise_conv2d (DepthwiseConv (None, 112, 112, 32) 288 re_lu[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 112, 112, 32) 128 depthwise_conv2d[0][0]

__________________________________________________________________________________________________

re_lu_1 (ReLU) (None, 112, 112, 32) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 112, 112, 16) 512 re_lu_1[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 112, 112, 16) 64 conv2d_1[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 112, 112, 96) 1536 batch_normalization_2[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 112, 112, 96) 384 conv2d_2[0][0]

__________________________________________________________________________________________________

re_lu_2 (ReLU) (None, 112, 112, 96) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_1 (DepthwiseCo (None, 56, 56, 96) 864 re_lu_2[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 56, 56, 96) 384 depthwise_conv2d_1[0][0]

__________________________________________________________________________________________________

re_lu_3 (ReLU) (None, 56, 56, 96) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 56, 56, 24) 2304 re_lu_3[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 56, 56, 24) 96 conv2d_3[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 56, 56, 144) 3456 batch_normalization_5[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 56, 56, 144) 576 conv2d_4[0][0]

__________________________________________________________________________________________________

re_lu_4 (ReLU) (None, 56, 56, 144) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_2 (DepthwiseCo (None, 56, 56, 144) 1296 re_lu_4[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 56, 56, 144) 576 depthwise_conv2d_2[0][0]

__________________________________________________________________________________________________

re_lu_5 (ReLU) (None, 56, 56, 144) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 56, 56, 24) 3456 re_lu_5[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 56, 56, 24) 96 conv2d_5[0][0]

__________________________________________________________________________________________________

add (Add) (None, 56, 56, 24) 0 batch_normalization_5[0][0]

batch_normalization_8[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 56, 56, 144) 3456 add[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 56, 56, 144) 576 conv2d_6[0][0]

__________________________________________________________________________________________________

re_lu_6 (ReLU) (None, 56, 56, 144) 0 batch_normalization_9[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_3 (DepthwiseCo (None, 28, 28, 144) 1296 re_lu_6[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 28, 28, 144) 576 depthwise_conv2d_3[0][0]

__________________________________________________________________________________________________

re_lu_7 (ReLU) (None, 28, 28, 144) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 28, 28, 32) 4608 re_lu_7[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 28, 28, 32) 128 conv2d_7[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 28, 28, 192) 6144 batch_normalization_11[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 28, 28, 192) 768 conv2d_8[0][0]

__________________________________________________________________________________________________

re_lu_8 (ReLU) (None, 28, 28, 192) 0 batch_normalization_12[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_4 (DepthwiseCo (None, 28, 28, 192) 1728 re_lu_8[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 28, 28, 192) 768 depthwise_conv2d_4[0][0]

__________________________________________________________________________________________________

re_lu_9 (ReLU) (None, 28, 28, 192) 0 batch_normalization_13[0][0]

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 28, 28, 32) 6144 re_lu_9[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 28, 28, 32) 128 conv2d_9[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 28, 28, 32) 0 batch_normalization_11[0][0]

batch_normalization_14[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 28, 28, 192) 6144 add_1[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 28, 28, 192) 768 conv2d_10[0][0]

__________________________________________________________________________________________________

re_lu_10 (ReLU) (None, 28, 28, 192) 0 batch_normalization_15[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_5 (DepthwiseCo (None, 28, 28, 192) 1728 re_lu_10[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 28, 28, 192) 768 depthwise_conv2d_5[0][0]

__________________________________________________________________________________________________

re_lu_11 (ReLU) (None, 28, 28, 192) 0 batch_normalization_16[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 28, 28, 32) 6144 re_lu_11[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 28, 28, 32) 128 conv2d_11[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 28, 28, 32) 0 add_1[0][0]

batch_normalization_17[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 28, 28, 192) 6144 add_2[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 28, 28, 192) 768 conv2d_12[0][0]

__________________________________________________________________________________________________

re_lu_12 (ReLU) (None, 28, 28, 192) 0 batch_normalization_18[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_6 (DepthwiseCo (None, 14, 14, 192) 1728 re_lu_12[0][0]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 14, 14, 192) 768 depthwise_conv2d_6[0][0]

__________________________________________________________________________________________________

re_lu_13 (ReLU) (None, 14, 14, 192) 0 batch_normalization_19[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 14, 14, 64) 12288 re_lu_13[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 14, 14, 64) 256 conv2d_13[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 14, 14, 384) 24576 batch_normalization_20[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 14, 14, 384) 1536 conv2d_14[0][0]

__________________________________________________________________________________________________

re_lu_14 (ReLU) (None, 14, 14, 384) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_7 (DepthwiseCo (None, 14, 14, 384) 3456 re_lu_14[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 14, 14, 384) 1536 depthwise_conv2d_7[0][0]

__________________________________________________________________________________________________

re_lu_15 (ReLU) (None, 14, 14, 384) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 14, 14, 64) 24576 re_lu_15[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 14, 14, 64) 256 conv2d_15[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 14, 14, 64) 0 batch_normalization_20[0][0]

batch_normalization_23[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 14, 14, 384) 24576 add_3[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 14, 14, 384) 1536 conv2d_16[0][0]

__________________________________________________________________________________________________

re_lu_16 (ReLU) (None, 14, 14, 384) 0 batch_normalization_24[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_8 (DepthwiseCo (None, 14, 14, 384) 3456 re_lu_16[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 14, 14, 384) 1536 depthwise_conv2d_8[0][0]

__________________________________________________________________________________________________

re_lu_17 (ReLU) (None, 14, 14, 384) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 14, 14, 64) 24576 re_lu_17[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 14, 14, 64) 256 conv2d_17[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 14, 14, 64) 0 add_3[0][0]

batch_normalization_26[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 14, 14, 384) 24576 add_4[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 14, 14, 384) 1536 conv2d_18[0][0]

__________________________________________________________________________________________________

re_lu_18 (ReLU) (None, 14, 14, 384) 0 batch_normalization_27[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_9 (DepthwiseCo (None, 14, 14, 384) 3456 re_lu_18[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 14, 14, 384) 1536 depthwise_conv2d_9[0][0]

__________________________________________________________________________________________________

re_lu_19 (ReLU) (None, 14, 14, 384) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 14, 14, 64) 24576 re_lu_19[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 14, 14, 64) 256 conv2d_19[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 14, 14, 64) 0 add_4[0][0]

batch_normalization_29[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 14, 14, 384) 24576 add_5[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 14, 14, 384) 1536 conv2d_20[0][0]

__________________________________________________________________________________________________

re_lu_20 (ReLU) (None, 14, 14, 384) 0 batch_normalization_30[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_10 (DepthwiseC (None, 14, 14, 384) 3456 re_lu_20[0][0]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 14, 14, 384) 1536 depthwise_conv2d_10[0][0]

__________________________________________________________________________________________________

re_lu_21 (ReLU) (None, 14, 14, 384) 0 batch_normalization_31[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 14, 14, 96) 36864 re_lu_21[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 14, 14, 96) 384 conv2d_21[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 14, 14, 576) 55296 batch_normalization_32[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 14, 14, 576) 2304 conv2d_22[0][0]

__________________________________________________________________________________________________

re_lu_22 (ReLU) (None, 14, 14, 576) 0 batch_normalization_33[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_11 (DepthwiseC (None, 14, 14, 576) 5184 re_lu_22[0][0]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 14, 14, 576) 2304 depthwise_conv2d_11[0][0]

__________________________________________________________________________________________________

re_lu_23 (ReLU) (None, 14, 14, 576) 0 batch_normalization_34[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 14, 14, 96) 55296 re_lu_23[0][0]

__________________________________________________________________________________________________

batch_normalization_35 (BatchNo (None, 14, 14, 96) 384 conv2d_23[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 14, 14, 96) 0 batch_normalization_32[0][0]

batch_normalization_35[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 14, 14, 576) 55296 add_6[0][0]

__________________________________________________________________________________________________

batch_normalization_36 (BatchNo (None, 14, 14, 576) 2304 conv2d_24[0][0]

__________________________________________________________________________________________________

re_lu_24 (ReLU) (None, 14, 14, 576) 0 batch_normalization_36[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_12 (DepthwiseC (None, 14, 14, 576) 5184 re_lu_24[0][0]

__________________________________________________________________________________________________

batch_normalization_37 (BatchNo (None, 14, 14, 576) 2304 depthwise_conv2d_12[0][0]

__________________________________________________________________________________________________

re_lu_25 (ReLU) (None, 14, 14, 576) 0 batch_normalization_37[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 14, 14, 96) 55296 re_lu_25[0][0]

__________________________________________________________________________________________________

batch_normalization_38 (BatchNo (None, 14, 14, 96) 384 conv2d_25[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 14, 14, 96) 0 add_6[0][0]

batch_normalization_38[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 14, 14, 576) 55296 add_7[0][0]

__________________________________________________________________________________________________

batch_normalization_39 (BatchNo (None, 14, 14, 576) 2304 conv2d_26[0][0]

__________________________________________________________________________________________________

re_lu_26 (ReLU) (None, 14, 14, 576) 0 batch_normalization_39[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_13 (DepthwiseC (None, 7, 7, 576) 5184 re_lu_26[0][0]

__________________________________________________________________________________________________

batch_normalization_40 (BatchNo (None, 7, 7, 576) 2304 depthwise_conv2d_13[0][0]

__________________________________________________________________________________________________

re_lu_27 (ReLU) (None, 7, 7, 576) 0 batch_normalization_40[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 7, 7, 160) 92160 re_lu_27[0][0]

__________________________________________________________________________________________________

batch_normalization_41 (BatchNo (None, 7, 7, 160) 640 conv2d_27[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 7, 7, 960) 153600 batch_normalization_41[0][0]

__________________________________________________________________________________________________

batch_normalization_42 (BatchNo (None, 7, 7, 960) 3840 conv2d_28[0][0]

__________________________________________________________________________________________________

re_lu_28 (ReLU) (None, 7, 7, 960) 0 batch_normalization_42[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_14 (DepthwiseC (None, 7, 7, 960) 8640 re_lu_28[0][0]

__________________________________________________________________________________________________

batch_normalization_43 (BatchNo (None, 7, 7, 960) 3840 depthwise_conv2d_14[0][0]

__________________________________________________________________________________________________

re_lu_29 (ReLU) (None, 7, 7, 960) 0 batch_normalization_43[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 7, 7, 160) 153600 re_lu_29[0][0]

__________________________________________________________________________________________________

batch_normalization_44 (BatchNo (None, 7, 7, 160) 640 conv2d_29[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 7, 7, 160) 0 batch_normalization_41[0][0]
                                  batch_normalization_44[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 7, 7, 960) 153600 add_8[0][0]

__________________________________________________________________________________________________

batch_normalization_45 (BatchNo (None, 7, 7, 960) 3840 conv2d_30[0][0]

__________________________________________________________________________________________________

re_lu_30 (ReLU) (None, 7, 7, 960) 0 batch_normalization_45[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_15 (DepthwiseC (None, 7, 7, 960) 8640 re_lu_30[0][0]

__________________________________________________________________________________________________

batch_normalization_46 (BatchNo (None, 7, 7, 960) 3840 depthwise_conv2d_15[0][0]

__________________________________________________________________________________________________

re_lu_31 (ReLU) (None, 7, 7, 960) 0 batch_normalization_46[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 7, 7, 160) 153600 re_lu_31[0][0]

__________________________________________________________________________________________________

batch_normalization_47 (BatchNo (None, 7, 7, 160) 640 conv2d_31[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 7, 7, 160) 0 add_8[0][0]
                                  batch_normalization_47[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 7, 7, 160) 25600 add_9[0][0]

__________________________________________________________________________________________________

batch_normalization_48 (BatchNo (None, 7, 7, 160) 640 conv2d_32[0][0]

__________________________________________________________________________________________________

re_lu_32 (ReLU) (None, 7, 7, 160) 0 batch_normalization_48[0][0]

__________________________________________________________________________________________________

depthwise_conv2d_16 (DepthwiseC (None, 7, 7, 160) 1440 re_lu_32[0][0]

__________________________________________________________________________________________________

batch_normalization_49 (BatchNo (None, 7, 7, 160) 640 depthwise_conv2d_16[0][0]

__________________________________________________________________________________________________

re_lu_33 (ReLU) (None, 7, 7, 160) 0 batch_normalization_49[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 7, 7, 320) 51200 re_lu_33[0][0]

__________________________________________________________________________________________________

batch_normalization_50 (BatchNo (None, 7, 7, 320) 1280 conv2d_33[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 7, 7, 1280) 409600 batch_normalization_50[0][0]

__________________________________________________________________________________________________

batch_normalization_51 (BatchNo (None, 7, 7, 1280) 5120 conv2d_34[0][0]

__________________________________________________________________________________________________

re_lu_34 (ReLU) (None, 7, 7, 1280) 0 batch_normalization_51[0][0]

__________________________________________________________________________________________________

global_average_pooling2d (Globa (None, 1280) 0 re_lu_34[0][0]

__________________________________________________________________________________________________

reshape (Reshape) (None, 1, 1, 1280) 0 global_average_pooling2d[0][0]

__________________________________________________________________________________________________

dropout (Dropout) (None, 1, 1, 1280) 0 reshape[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 1, 1, 1000) 1281000 dropout[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 1, 1, 1000) 0 conv2d_35[0][0]

__________________________________________________________________________________________________

reshape_1 (Reshape) (None, 1000) 0 activation[0][0]

==================================================================================================

Total params: 3,141,416

Trainable params: 3,110,504

Non-trainable params: 30,912

__________________________________________________________________________________________________
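The parameter counts in the summary above can be checked by hand. Below is a minimal sketch in plain Python; the helper names (`conv2d_params`, `depthwise_params`, `batchnorm_params`) are made up for illustration and are not part of Keras. It assumes the 1*1 convolutions inside the network carry no bias, that the depthwise kernels are 3*3, and that only the final classification convolution (`conv2d_35`) has a bias. These assumptions are inferred from the printed numbers, not taken from the model code.

```python
# Hypothetical helpers that reproduce the "Param #" column of the summary.

def conv2d_params(kernel, c_in, c_out, use_bias=False):
    """Standard convolution: kernel*kernel*c_in weights per output channel."""
    weights = kernel * kernel * c_in * c_out
    return weights + (c_out if use_bias else 0)

def depthwise_params(kernel, channels):
    """Depthwise convolution: one kernel*kernel filter per input channel."""
    return kernel * kernel * channels

def batchnorm_params(channels):
    """BatchNormalization: gamma, beta, moving mean, moving variance."""
    return 4 * channels

# (computed value, value printed in the summary)
checks = {
    "conv2d_27 (1x1, 576->160)": (conv2d_params(1, 576, 160), 92160),
    "conv2d_28 (1x1, 160->960)": (conv2d_params(1, 160, 960), 153600),
    "depthwise_conv2d_14 (3x3, 960)": (depthwise_params(3, 960), 8640),
    "batch_normalization_42 (960)": (batchnorm_params(960), 3840),
    "conv2d_32 (1x1, 160->160)": (conv2d_params(1, 160, 160), 25600),
    "conv2d_34 (1x1, 320->1280)": (conv2d_params(1, 320, 1280), 409600),
    "conv2d_35 (1x1, 1280->1000, bias)": (conv2d_params(1, 1280, 1000, use_bias=True), 1281000),
}

for name, (computed, reported) in checks.items():
    status = "ok" if computed == reported else "MISMATCH"
    print(f"{name}: computed={computed}, reported={reported} [{status}]")

# Trainable and non-trainable parameters should add up to the total.
trainable, non_trainable, total = 3_110_504, 30_912, 3_141_416
print("totals consistent:", trainable + non_trainable == total)
```

Two things stand out from this check. Half of each BatchNormalization layer's parameters (the moving mean and moving variance) are not updated by gradient descent, which is where the non-trainable parameters come from. Also, `conv2d_32` maps 160 channels to 160 channels (25600 = 160*160), so the last bottleneck in this printout does not expand its channels, whereas the paper's table lists t=6 for that layer. That may be worth checking against the model-building code.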