Kafka Study Notes: Kafka-Eagle Monitoring

Install MySQL

Omitted here.

Kafka environment

- Shut down the Kafka cluster.

- Increase the broker heap size; the default of 1 GB is not enough.

    [root@hadoop101 bin]# vim kafka-server-start.sh

    if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then
        export KAFKA_HEAP_OPTS="-server -Xms2G -Xmx2G -XX:PermSize=128m -XX:+UseG1GC -XX:MaxGCPauseMillis=200 -XX:ParallelGCThreads=8 -XX:ConcGCThreads=5 -XX:InitiatingHeapOccupancyPercent=70"
        export JMX_PORT="9999"
        # export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"
    fi

Distribute the modified script to the other brokers:

    [root@hadoop101 bin]# xsync kafka-server-start.sh

Start Kafka again:

    [root@hadoop101 kafka]# bin/kafka-server-start.sh -daemon config/server.properties
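The guard around the heap settings only applies the defaults when KAFKA_HEAP_OPTS is empty, so a value exported before the script runs takes precedence. A minimal sketch of that behavior follows; `apply_default_heap` is a hypothetical helper written for illustration, and the 4G value is an assumption.

```shell
# Hypothetical helper mirroring the guard in kafka-server-start.sh:
# the default is applied only when KAFKA_HEAP_OPTS is empty or unset.
apply_default_heap() {
    if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then
        KAFKA_HEAP_OPTS="-server -Xms2G -Xmx2G"
    fi
}

# Case 1: nothing set beforehand, so the 2G default is used.
unset KAFKA_HEAP_OPTS
apply_default_heap
default_result=$KAFKA_HEAP_OPTS

# Case 2: a value set beforehand survives (4G is an assumed value).
KAFKA_HEAP_OPTS="-Xms4G -Xmx4G"
apply_default_heap
override_result=$KAFKA_HEAP_OPTS

echo "unset  -> $default_result"
echo "preset -> $override_result"
```

In practice this means a one-off override can be passed on the command line without editing the script again.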

Install Kafka-Eagle

- Official site: https://www.kafka-eagle.org/

- Extract the archive

    [root@hadoop101 software]# tar -zxvf kafka-eagle-bin-2.0.8.tar.gz
    kafka-eagle-bin-2.0.8/
    kafka-eagle-bin-2.0.8/efak-web-2.0.8-bin.tar.gz
    [root@hadoop101 software]# ll
    total 506016
    -rw-r--r--. 1 root root 12516362 Apr 21 17:06 apache-zookeeper-3.6.3-bin.tar.gz
    -rw-r--r--. 1 root root 338075860 Apr 21 15:26 hadoop-3.1.3.tar.gz
    -rw-r--r--. 1 root root 86486610 Apr 21 14:27 kafka_2.12-3.0.0.tgz
    drwxrwxr-x. 2 root root 39 Oct 13 2021 kafka-eagle-bin-2.0.8
    -rw-r--r--. 1 root root 81074069 Apr 26 14:39 kafka-eagle-bin-2.0.8.tar.gz
    [root@hadoop101 software]# cd kafka-eagle-bin-2.0.8/
    [root@hadoop101 kafka-eagle-bin-2.0.8]# ll
    total 79164
    -rw-rw-r--. 1 root root 81062577 Oct 13 2021 efak-web-2.0.8-bin.tar.gz
    [root@hadoop101 kafka-eagle-bin-2.0.8]# tar -zxvf efak-web-2.0.8-bin.tar.gz -C /opt/module/

Check the result and rename the directory:

    [root@hadoop101 kafka-eagle-bin-2.0.8]# cd /opt/module/
    [root@hadoop101 module]# ll
    total 0
    drwxr-xr-x. 8 root root 74 Apr 26 14:40 efak-web-2.0.8
    drwxr-xr-x. 9 qiyou qiyou 149 Sep 12 2019 hadoop-3.1.3
    drwxr-xr-x. 8 qiyou qiyou 273 Dec 16 03:30 jdk1.8.0_321
    drwxr-xr-x. 9 qiyou qiyou 130 Apr 26 14:30 kafka
    drwxr-xr-x. 8 root root 159 Apr 21 20:07 zookeeper-3.6.3
    [root@hadoop101 module]# mv efak-web-2.0.8/ efak
    [root@hadoop101 module]# ll
    total 0
    drwxr-xr-x. 8 root root 74 Apr 26 14:40 efak
    drwxr-xr-x. 9 qiyou qiyou 149 Sep 12 2019 hadoop-3.1.3
    drwxr-xr-x. 8 qiyou qiyou 273 Dec 16 03:30 jdk1.8.0_321
    drwxr-xr-x. 9 qiyou qiyou 130 Apr 26 14:30 kafka
    drwxr-xr-x. 8 root root 159 Apr 21 20:07 zookeeper-3.6.3
    [root@hadoop101 module]# cd efak/
    [root@hadoop101 efak]# ll
    total 0
    drwxr-xr-x. 2 root root 33 Apr 26 14:40 bin
    drwxr-xr-x. 2 root root 62 Apr 26 14:40 conf
    drwxr-xr-x. 2 root root 6 Sep 13 2021 db
    drwxr-xr-x. 2 root root 23 Apr 26 14:40 font
    drwxr-xr-x. 9 root root 91 Sep 13 2021 kms
    drwxr-xr-x. 2 root root 6 Sep 13 2021 logs

The monitoring data is stored in a database (MySQL in this setup), which is what the db directory relates to.

- Edit the configuration file and add the environment variables

    [root@hadoop101 conf]# vim system-config.properties
    [root@hadoop101 conf]# vim /etc/profile
    [root@hadoop101 conf]# source /etc/profile
    [root@hadoop101 conf]# cd ..
    [root@hadoop101 efak]# bin/ke.sh
    Usage: bin/ke.sh {start|stop|restart|status|stats|find|gc|jdk|version|sdate|worknode}
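The notes do not show what was changed in either file. As a rough sketch: ke.sh relies on a KE_HOME variable, and system-config.properties needs at least the ZooKeeper list and the MySQL connection. The hosts, ports, database name and user below are assumptions, and the key names are as I recall them from the 2.0.8 template, so check them against the shipped file.

```shell
# Lines for /etc/profile. KE_HOME points at the directory the archive
# was renamed to in the previous step.
export KE_HOME=/opt/module/efak
export PATH="$PATH:$KE_HOME/bin"

# Illustrative system-config.properties entries, printed here only for
# reference (ZooKeeper hosts, database name and user are assumptions).
cat <<'EOF'
efak.zk.cluster.alias=cluster1
cluster1.zk.list=hadoop101:2181,hadoop102:2181,hadoop103:2181
efak.driver=com.mysql.cj.jdbc.Driver
efak.url=jdbc:mysql://hadoop101:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull
efak.username=root
EOF
```

If Kafka registers under a ZooKeeper chroot, the chroot path would need to be appended to the zk.list value.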

- Start EFAK

    [root@hadoop101 efak]# bin/ke.sh start
    inflated: META-INF/maven/org.smartloli.kafka.eagle/efak-web/pom.properties
    [2022-04-26 14:51:52] INFO: Port Progress: [##################################################] | 100%
    [2022-04-26 14:51:55] INFO: Config Progress: [##################################################] | 100%
    [2022-04-26 14:51:58] INFO: Startup Progress: [##################################################] | 100%
    [2022-04-26 14:51:47] INFO: Status Code[0]
    [2022-04-26 14:51:47] INFO: [Job done!]
    Welcome to
        ______    ______    ___     __ __
       / ____/   / ____/   /   |   / //_/
      / __/     / /_      / /| |  / ,<
     / /___    / __/     / ___ | / /| |
    /_____/   /_/       /_/  |_|/_/ |_|
    ( Eagle For Apache Kafka® )

    Version 2.0.8 -- Copyright 2016-2021
    *******************************************************************
    * EFAK Service has started success.
    * Welcome, Now you can visit 'http://192.168.126.129:8048'
    * Account:admin ,Password:123456
    *******************************************************************
    * <Usage> ke.sh [start|status|stop|restart|stats] </Usage>
    * <Usage> https://www.kafka-eagle.org/ </Usage>
    *******************************************************************

Kafka KRaft mode

- Distribute the installation to the other nodes

- Write a start/stop script

    [root@hadoop101 bin]# vim kf2.sh
    [root@hadoop101 bin]# chmod 777 kf2.sh
    [root@hadoop101 bin]# ll
    total 8
    -rwxrwxrwx. 1 root root 453 Apr 26 15:24 kf2.sh
    -rwxr-xr-x. 1 root root 872 Apr 23 00:06 xsync

Script contents:

    #!/bin/bash
    case $1 in
    "start"){
        for i in hadoop101 hadoop102 hadoop103
        do
            echo "======== Starting Kafka2 on $i ========"
            ssh $i "/opt/module/kafka2/bin/kafka-server-start.sh -daemon /opt/module/kafka2/config/kraft/server.properties"
        done
    };;
    "stop"){
        for i in hadoop101 hadoop102 hadoop103
        do
            echo "======== Stopping Kafka2 on $i ========"
            ssh $i "/opt/module/kafka2/bin/kafka-server-stop.sh"
        done
    };;
    esac

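Kafka tuning

How to increase consumer throughput

- Increase the number of partitions, and add consumers to the group to match; consumers beyond the partition count sit idle.

How to increase overall Kafka throughput

- Producer: raise batch.size moderately, raise linger.ms moderately (5-100 ms), enable compression, and so on

- Add partitions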

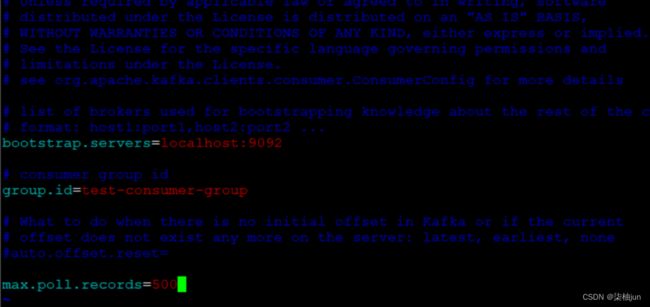

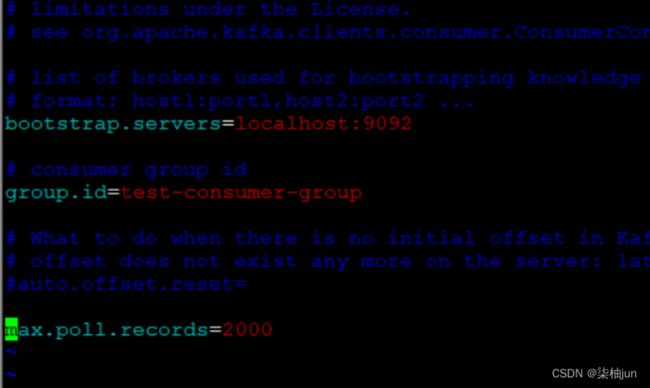

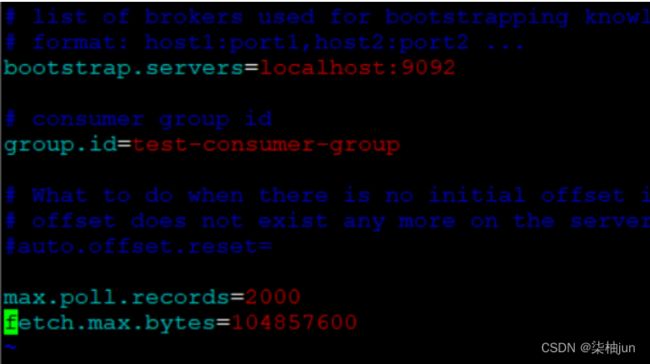

- Consumer: adjust fetch.max.bytes (default 50 MB) and max.poll.records (default 500 records)
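The producer and consumer settings named above could be collected into properties files along these lines. This is only a sketch: the temporary directory stands in for the real config directory, 2000 is the max.poll.records value tried in the load test later in these notes, and every other value is an assumed starting point to be tuned by measurement.

```shell
# Write illustrative tuning files into a scratch directory.
tuning_dir=$(mktemp -d)

# Producer side. Kafka 3.0 defaults: batch.size=16384, linger.ms=0,
# compression.type=none. The values below are assumptions.
cat > "$tuning_dir/producer.properties" <<'EOF'
batch.size=32768
linger.ms=50
compression.type=snappy
EOF

# Consumer side. Defaults: fetch.max.bytes=52428800 (50 MB),
# max.poll.records=500. The 100 MB fetch size is an assumption.
cat > "$tuning_dir/consumer.properties" <<'EOF'
fetch.max.bytes=104857600
max.poll.records=2000
EOF

cat "$tuning_dir/producer.properties" "$tuning_dir/consumer.properties"
```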

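Exactly-once delivery

- Producer: set acks to -1 (all) and enable idempotence plus transactions

- Broker: replication factor of at least 2, and min.insync.replicas (the minimum number of in-sync replicas that must acknowledge a write) of at least 2

- Consumer: transactions plus manual offset commits; the output destination must support transactions (MySQL, Kafka)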
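On the producer side, the checklist above maps onto three client settings: acks, enable.idempotence and transactional.id. The sketch below checks a properties file for them. `check_producer_eos` and the transactional id `demo-tx-1` are hypothetical names used for illustration, and the check only recognizes the spelling `acks=all`, not the equivalent `acks=-1`.

```shell
# Report which exactly-once producer settings are missing from a file.
# Returns 0 when all three are present, 1 otherwise.
check_producer_eos() {
    file=$1
    rc=0
    for want in 'acks=all' 'enable.idempotence=true'; do
        grep -qx "$want" "$file" || { echo "missing: $want"; rc=1; }
    done
    grep -q '^transactional\.id=.' "$file" || { echo "missing: transactional.id"; rc=1; }
    return $rc
}

# Demo on a scratch file: first without a transactional.id, then with one.
props=$(mktemp)
printf '%s\n' 'acks=all' 'enable.idempotence=true' > "$props"
first=$(check_producer_eos "$props") || true
echo "transactional.id=demo-tx-1" >> "$props"
if check_producer_eos "$props"; then second=ok; else second=incomplete; fi

echo "before: $first"
echo "after:  $second"
```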

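Choosing a sensible partition count

- Adjust the partition count as needed; neither more nor fewer is automatically better. After the cluster is built, run a load test and tune the partition count from the results.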
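One commonly cited rule of thumb, which these notes do not state, estimates the partition count as the target throughput divided by the smaller of the per-partition producer and consumer throughput. The sketch below applies it to made-up numbers; `partitions_needed` is a hypothetical helper, and all three inputs are assumptions rather than measurements from this cluster.

```shell
# partitions_needed TARGET PRODUCER CONSUMER   (all in MB/s)
# Prints ceil(TARGET / min(PRODUCER, CONSUMER)).
partitions_needed() {
    awk -v t="$1" -v p="$2" -v c="$3" 'BEGIN {
        m = (p < c) ? p : c      # the slower side is the bottleneck
        n = t / m
        k = int(n)
        if (k < n) k++           # round up to a whole partition
        print k
    }'
}

# Assumed figures: 100 MB/s target, 20 MB/s producer, 50 MB/s consumer.
partitions_needed 100 20 50
```

The result is only a starting point; the load test is still what decides the final number.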

A single record larger than 1 MB
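The notes leave this item empty. As a hedged sketch of the usual approach: several Kafka size limits default to roughly 1 MB, and they are normally raised together on the broker, the producer and the consumer. The 10 MB target below is an assumption, and `large_record_settings` is a hypothetical helper that only prints the settings.

```shell
# Print the size limits that usually have to be raised together.
# $1 is the new limit in bytes.
large_record_settings() {
    printf 'broker    message.max.bytes=%s\n' "$1"
    printf 'broker    replica.fetch.max.bytes=%s\n' "$1"
    printf 'producer  max.request.size=%s\n' "$1"
    printf 'consumer  max.partition.fetch.bytes=%s\n' "$1"
}

limit=$((10 * 1024 * 1024))   # 10 MB, an assumed target size
large_record_settings "$limit"
```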

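A server goes down (one Kafka node fails)

- Try restarting it first; if that works, the problem is solved.

- If restarting does not help, check the error logs and consider adding memory, CPU, or network bandwidth.

- If the node's data was deleted by mistake and the replication factor is at least 2, bring the node back into service the same way a new node is commissioned, then rebalance the partitions.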

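Cluster load testing

- In addition to the three Kafka nodes, prepare hadoop104 as the test machine (client).

- Start Kafka on hadoop101, hadoop102, and hadoop103.

- Create a topic named test with three partitions and three replicas.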

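A mistake I hit here: I had forgotten to start ZooKeeper. After starting ZooKeeper, the Kafka cluster has to be restarted before the topic can be created.

    [root@hadoop101 kafka]# bin/kafka-topics.sh --bootstrap-server hadoop101:9092 --create --replication-factor 3 --partitions 3 --topic test
    Created topic test.

- Producer load test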

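- --record-size 1024: size of each record, here 1 KB

- --num-records 1000000: total number of records to send, here one million

- --throughput 10000: cap on records sent per second; set it to -1 to disable throttling and measure the producer's maximum throughput

- --producer-props bootstrap.servers=hadoop101:9092,hadoop102:9092,hadoop103:9092: producer configuration

- Test settings: batch.size=16384, linger.ms=0

Result: 5.95 MB/sec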

The run takes a little under three minutes (1,000,000 records at about 6,093 records/sec). Command and output:

    [root@hadoop104 kafka]# bin/kafka-producer-perf-test.sh --topic test --record-size 1024 --num-records 1000000 --throughput 10000 --producer-props bootstrap.servers=hadoop101:9092,hadoop102:9092,hadoop103:9092 batch.size=16384 linger.ms=0

10441 records sent, 2085.3 records/sec (2.04 MB/sec), 2106.0 ms avg latency, 3730.0 ms max latency.

15675 records sent, 3134.4 records/sec (3.06 MB/sec), 5710.4 ms avg latency, 7582.0 ms max latency.

17655 records sent, 3531.0 records/sec (3.45 MB/sec), 8691.5 ms avg latency, 9983.0 ms max latency.

18270 records sent, 3649.6 records/sec (3.56 MB/sec), 8686.5 ms avg latency, 9694.0 ms max latency.

19335 records sent, 3867.0 records/sec (3.78 MB/sec), 8309.2 ms avg latency, 9088.0 ms max latency.

17145 records sent, 3006.3 records/sec (2.94 MB/sec), 8012.4 ms avg latency, 10313.0 ms max latency.

13905 records sent, 2779.9 records/sec (2.71 MB/sec), 9947.1 ms avg latency, 12161.0 ms max latency.

15150 records sent, 3030.0 records/sec (2.96 MB/sec), 10812.7 ms avg latency, 13652.0 ms max latency.

20805 records sent, 4157.7 records/sec (4.06 MB/sec), 9360.5 ms avg latency, 13782.0 ms max latency.

22215 records sent, 4443.0 records/sec (4.34 MB/sec), 7348.5 ms avg latency, 11173.0 ms max latency.

24465 records sent, 4891.0 records/sec (4.78 MB/sec), 6600.2 ms avg latency, 9541.0 ms max latency.

20340 records sent, 4067.2 records/sec (3.97 MB/sec), 6767.3 ms avg latency, 9675.0 ms max latency.

23325 records sent, 4528.2 records/sec (4.42 MB/sec), 6609.7 ms avg latency, 10104.0 ms max latency.

18825 records sent, 3764.2 records/sec (3.68 MB/sec), 7367.5 ms avg latency, 11177.0 ms max latency.

14805 records sent, 2932.8 records/sec (2.86 MB/sec), 8416.9 ms avg latency, 13300.0 ms max latency.

15750 records sent, 3150.0 records/sec (3.08 MB/sec), 10160.2 ms avg latency, 15486.0 ms max latency.

28710 records sent, 5739.7 records/sec (5.61 MB/sec), 7469.9 ms avg latency, 14489.0 ms max latency.

32460 records sent, 6492.0 records/sec (6.34 MB/sec), 4938.4 ms avg latency, 10993.0 ms max latency.

35790 records sent, 7158.0 records/sec (6.99 MB/sec), 4501.6 ms avg latency, 8484.0 ms max latency.

44175 records sent, 8835.0 records/sec (8.63 MB/sec), 3726.8 ms avg latency, 7918.0 ms max latency.

48795 records sent, 9759.0 records/sec (9.53 MB/sec), 3308.4 ms avg latency, 6467.0 ms max latency.

49475 records sent, 9895.0 records/sec (9.66 MB/sec), 3084.0 ms avg latency, 5976.0 ms max latency.

48067 records sent, 9601.9 records/sec (9.38 MB/sec), 3147.5 ms avg latency, 6126.0 ms max latency.

46845 records sent, 9369.0 records/sec (9.15 MB/sec), 3261.2 ms avg latency, 6239.0 ms max latency.

45390 records sent, 9078.0 records/sec (8.87 MB/sec), 3323.2 ms avg latency, 6282.0 ms max latency.

46169 records sent, 9217.2 records/sec (9.00 MB/sec), 3272.2 ms avg latency, 6283.0 ms max latency.

46620 records sent, 9322.1 records/sec (9.10 MB/sec), 3383.3 ms avg latency, 6596.0 ms max latency.

45795 records sent, 9157.2 records/sec (8.94 MB/sec), 3179.9 ms avg latency, 6402.0 ms max latency.

46783 records sent, 9356.6 records/sec (9.14 MB/sec), 3411.4 ms avg latency, 6705.0 ms max latency.

48030 records sent, 9600.2 records/sec (9.38 MB/sec), 3244.2 ms avg latency, 6743.0 ms max latency.

43382 records sent, 8676.4 records/sec (8.47 MB/sec), 3302.1 ms avg latency, 6618.0 ms max latency.

42195 records sent, 8439.0 records/sec (8.24 MB/sec), 4195.7 ms avg latency, 7130.0 ms max latency.

1000000 records sent, 6092.842738 records/sec (5.95 MB/sec), 4884.89 ms avg latency, 15486.00 ms max latency, 5030 ms 50th, 10589 ms 95th, 13630 ms 99th, 15449 ms 99.9th.
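To compare runs, the throughput and the implied run time can be pulled out of the final summary line. A small sketch using the summary above; the field positions assume the output format shown here.

```shell
# Final summary line copied from the run above.
summary='1000000 records sent, 6092.842738 records/sec (5.95 MB/sec), 4884.89 ms avg latency, 15486.00 ms max latency, 5030 ms 50th, 10589 ms 95th, 13630 ms 99th, 15449 ms 99.9th.'

# Field 1 is the record count, field 4 the records/sec rate.
records=$(printf '%s\n' "$summary" | awk '{ print $1 }')
rate=$(printf '%s\n' "$summary" | awk '{ print $4 }')

# The MB/sec figure is the number inside the parentheses.
mb_per_sec=$(printf '%s\n' "$summary" | sed -E 's/.*\(([0-9.]+) MB\/sec\).*/\1/')

# Run time in whole seconds = records / rate.
seconds=$(awk -v n="$records" -v r="$rate" 'BEGIN { printf "%.0f", n / r }')

echo "$mb_per_sec MB/sec, about $seconds s for $records records"
```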

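With batch.size set to 4 KB, the run takes noticeably longer (roughly four minutes at that rate) and throughput drops to 3.81 MB/sec.

In this test environment, changing linger.ms makes little difference: 3.83 MB/sec.

With compression enabled: 5.68 MB/sec.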
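The variations above differ only in the producer properties passed to the tool. The sketch below prints the command for each variant without running anything. `perf_cmd` is a hypothetical helper, and the linger.ms value, the compression codec, and the batch size used alongside them are assumptions, since the notes do not record them.

```shell
# Print (not run) the perf-test command for a given set of producer props.
# $1 = extra producer properties as space-separated key=value pairs.
perf_cmd() {
    echo "bin/kafka-producer-perf-test.sh --topic test --record-size 1024" \
         "--num-records 1000000 --throughput 10000" \
         "--producer-props bootstrap.servers=hadoop101:9092,hadoop102:9092,hadoop103:9092 $1"
}

perf_cmd "batch.size=16384 linger.ms=0"                           # baseline from the notes
perf_cmd "batch.size=4096 linger.ms=0"                            # 4 KB batches
perf_cmd "batch.size=4096 linger.ms=50"                           # linger value assumed
perf_cmd "batch.size=4096 linger.ms=50 compression.type=snappy"   # codec assumed
```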

- Consumer load test

- --bootstrap-server hadoop101:9092,hadoop102:9092,hadoop103:9092: Kafka cluster addresses

- --topic test: topic name

- --messages 1000000: total number of messages to consume, here one million

    [root@hadoop104 kafka]# bin/kafka-consumer-perf-test.sh --bootstrap-server hadoop101:9092,hadoop102:9092,hadoop103:9092 --topic test --messages 1000000 --consumer.config config/consumer.properties

Output:

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2022-04-26 19:11:28:496, 2022-04-26 19:11:40:711, 976.5625, 79.9478, 1000000, 81866.5575, 1373, 10842, 90.0722, 92233.9052

- --consumer.config config/consumer.properties: the consumer settings file. After editing it, rerun the test:

    [root@hadoop104 kafka]# bin/kafka-consumer-perf-test.sh --bootstrap-server hadoop101:9092,hadoop102:9092,hadoop103:9092 --topic test --messages 1000000 --consumer.config config/consumer.properties

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2022-04-26 19:16:48:018, 2022-04-26 19:16:57:562, 976.5625, 102.3221, 1000000, 104777.8709, 1285, 8259, 118.2422, 121080.0339

- With the number of records handled per poll (max.poll.records) set to 2000: 117.1922 MB/sec

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2022-04-26 19:19:33:340, 2022-04-26 19:19:41:673, 976.5625, 117.1922, 1000000, 120004.8002, 1183, 7150, 136.5822, 139860.1399
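Between runs, only individual keys in consumer.properties change. A small sketch of a helper that sets or replaces one key in a properties file follows. `set_prop` is a hypothetical name, the demo works on a scratch file rather than the real config, and the 100 MB fetch size is an assumed value.

```shell
# set_prop FILE KEY VALUE
# Replace KEY's line if it exists, otherwise append KEY=VALUE.
# Commented-out lines such as "#max.poll.records=500" are left alone.
set_prop() {
    file=$1; key=$2; value=$3
    tmp=$(mktemp)
    awk -F= -v k="$key" -v v="$value" '
        $1 == k { print k "=" v; found = 1; next }
        { print }
        END { if (!found) print k "=" v }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a scratch copy instead of config/consumer.properties.
conf=$(mktemp)
echo "group.id=test-consumer-group" > "$conf"
set_prop "$conf" max.poll.records 500
set_prop "$conf" fetch.max.bytes 104857600   # assumed value (100 MB)
set_prop "$conf" max.poll.records 2000       # value used in the run above
cat "$conf"
```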

- After adjusting fetch.max.bytes:

    [root@hadoop104 kafka]# bin/kafka-consumer-perf-test.sh --bootstrap-server hadoop101:9092,hadoop102:9092,hadoop103:9092 --topic test --messages 1000000 --consumer.config config/consumer.properties

start.time, end.time, data.consumed.in.MB, MB.sec, data.consumed.in.nMsg, nMsg.sec, rebalance.time.ms, fetch.time.ms, fetch.MB.sec, fetch.nMsg.sec

2022-04-26 19:23:29:651, 2022-04-26 19:23:38:660, 976.5625, 108.3985, 1000000, 111000.1110, 973, 8036, 121.5235, 124440.0199
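The MB.sec column is the data consumed divided by the wall-clock time between start.time and end.time, so each result row can be cross-checked. A sketch using the first consumer run from these notes; it assumes both timestamps fall on the same day.

```shell
# First consumer result row from the notes.
row='2022-04-26 19:11:28:496, 2022-04-26 19:11:40:711, 976.5625, 79.9478, 1000000, 81866.5575, 1373, 10842, 90.0722, 92233.9052'

# ms() turns "YYYY-MM-DD HH:MM:SS:mmm" into milliseconds since midnight.
# Field 3 is data.consumed.in.MB; fields 1 and 2 are the timestamps.
mb_sec=$(printf '%s\n' "$row" | awk -F', ' '
    function ms(ts,    p, t) {
        split(ts, p, " ")
        split(p[2], t, ":")
        return ((t[1] * 60 + t[2]) * 60 + t[3]) * 1000 + t[4]
    }
    {
        secs = (ms($2) - ms($1)) / 1000
        printf "%.4f\n", $3 / secs
    }')

echo "recomputed MB.sec: $mb_sec"
```

A row whose MB.sec column does not match this calculation was most likely copied incorrectly.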