Point Cloud Deep Learning: Building Your Own PointConv Dataset (ModelNet40)

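- I. Paper and Code Links

- II. Runtime Environment

- III. Generating the PCD Files

- IV. Converting the PCD Files to Conforming txt Files

- V. Adding the Generated Data to the Source Dataset

- VI. Experimental Verification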

I. Paper and Code Links

Paper

PointConv: Deep Convolutional Networks on 3D Point Clouds

Original dataset template

modelnet40_normal_resampled

II. Runtime Environment

Hardware: Intel Core i7-6700HQ, NVIDIA GTX 960M (2 GB)

Software: Ubuntu 18.04, C++11, PCL 1.9.1, OpenCV 4.4.0

III. Generating the PCD Files

pcd shengcheng/2.cpp

Capture depth images and RGB images with a depth camera such as Azure Kinect or RealSense. This experiment uses the Azure Kinect DK as the example.

Commands to build and run the program (2.cpp):

cd build

cmake ..

make -j4

./2

CMakeLists.txt

cmake_minimum_required(VERSION 2.6)

project(2)

set(CMAKE_CXX_FLAGS "-std=c++11")

find_package(OpenCV REQUIRED)

find_package(k4a REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(2 2.cpp)

target_link_libraries(2 ${OpenCV_LIBS} k4a::k4a)

find_package(PCL 1.2 REQUIRED)

include_directories(${PCL_INCLUDE_DIRS})

link_directories(${PCL_LIBRARY_DIRS})

add_definitions(${PCL_DEFINITIONS})

target_link_libraries (2 ${PCL_LIBRARIES})

install(TARGETS 2 RUNTIME DESTINATION bin)

2.cpp

#include

Output

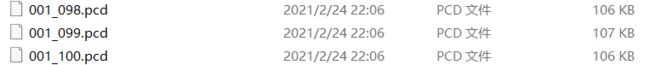

文件保存在build/001或者build/002文件夹下,共有三种文件类型。

1.pcd文件共计100个

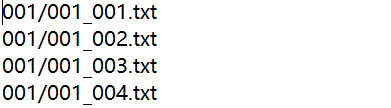

2.list.txt

3.name.txt

IV. Converting the PCD Files to Conforming txt Files

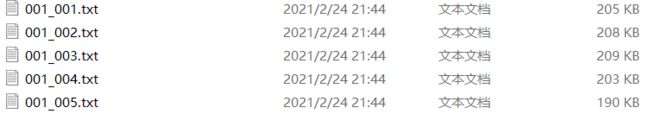

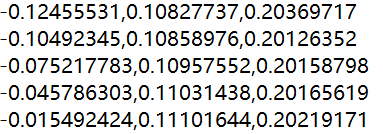

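Each line of the original dataset holds six values: x, y, z, normal_x, normal_y, and normal_z, separated by commas. Unlike a PCD file, there is no file header. The CMakeLists.txt is the same as the one above and is not repeated here.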
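The format change described above can be sketched with the standard library alone. This is a minimal illustration, not the 2.cpp listing: it assumes the input is an ASCII PCD whose data lines already start with x, y, z, normal_x, normal_y, normal_z (a cloud without normals would need them estimated first, for example with PCL). `pcdToTxt` is a hypothetical name.

```cpp
#include <cstddef>
#include <istream>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Convert an ASCII PCD stream into header-less, comma-separated lines.
// Assumes every data line starts with: x y z normal_x normal_y normal_z.
// Returns the number of points written.
std::size_t pcdToTxt(std::istream& in, std::ostream& out) {
    std::string line;
    // Skip the header; it ends with the line that starts with "DATA".
    while (std::getline(in, line)) {
        if (line.compare(0, 4, "DATA") == 0) break;
    }
    std::size_t written = 0;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::vector<std::string> values;
        std::string token;
        // Keep only the first six whitespace-separated values.
        while (values.size() < 6 && fields >> token) values.push_back(token);
        if (values.size() < 6) continue;  // skip blank or incomplete lines
        for (std::size_t i = 0; i < 6; ++i) {
            out << (i ? "," : "") << values[i];
        }
        out << '\n';
        ++written;
    }
    return written;
}
```

The values are copied as text rather than parsed into floats, so the precision stored in the PCD file is preserved unchanged.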

zhizuoshujuji/2.cpp

2.cpp

#include

Output

The files are saved under build/0001 or build/0002; there are 100 txt files in total.

Contents of a txt file

五、将生成的数据放进至源数据集

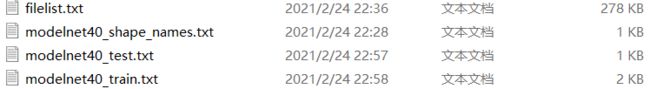

将0001和0002内的txt点云文件放进至pointconv_pytorch-master/data/modelnet40_normal_resampled下,并根据需要将生成的name.txt和list.txt放进至以下文件

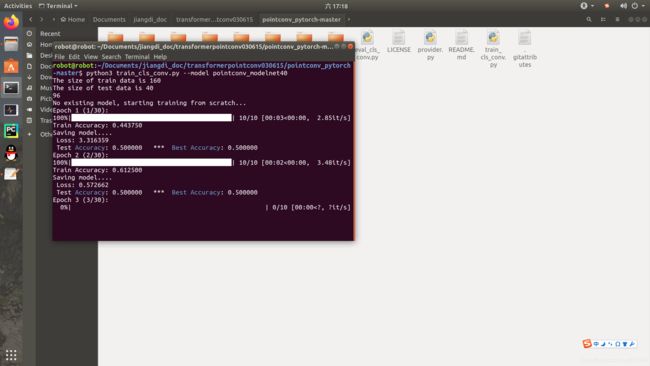

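VI. Experimental Verification

The number of points per point cloud file is not fixed. To avoid running short of points, set --num_point to 512 in train_cls_conv.py and eval_cls_conv.py. The results are as follows: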