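03. Fourier Transform

1: Demo

Original image

Fourier transform (magnitude spectrum)

In the raw spectrum the four corners are bright (low frequencies). After the four corners are shifted to the center, the center becomes the bright region.

The bright center holds the low frequencies;

the dark periphery holds the high frequencies.

Image reconstructed by the inverse Fourier transform after setting the periphery of the spectrum (the dark region) to 0, which keeps only the low frequencies:

Image reconstructed by the inverse Fourier transform after setting the center of the spectrum (the bright region) to 0, which keeps only the high frequencies: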

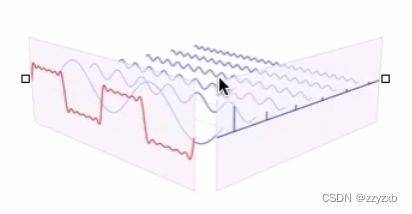
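The demo above can be reproduced on a small synthetic image. The following is a minimal sketch, not the code used to produce the figures: the 64x64 test image, the window radius `r`, and all variable names are assumptions chosen for illustration. It shows that the zero-frequency term moves from the corner to the center after `np.fft.fftshift`, and that the low-pass and high-pass reconstructions add back up to the original image.

```python
import numpy as np

# Synthetic 64x64 grayscale "image": a smooth gradient plus a fine checkerboard.
yy, xx = np.mgrid[0:64, 0:64]
image = yy + xx + 20.0 * ((yy + xx) % 2)

spectrum = np.fft.fft2(image)
# Before shifting, the zero-frequency (DC) term sits in the corner [0, 0].
dc_corner = np.abs(spectrum[0, 0])
shifted = np.fft.fftshift(spectrum)
# After shifting, the same term sits at the center [32, 32].
c = 32
dc_center = np.abs(shifted[c, c])

# Symmetric window around the center: frequencies -r..+r count as "low".
r = 8
mask = np.zeros(shifted.shape, dtype=bool)
mask[c - r:c + r + 1, c - r:c + r + 1] = True

# Zero the periphery (low-pass) or the center (high-pass), undo the shift, invert.
low = np.fft.ifft2(np.fft.ifftshift(np.where(mask, shifted, 0))).real
high = np.fft.ifft2(np.fft.ifftshift(np.where(mask, 0, shifted))).real

print(np.allclose(low + high, image))
```

The low-pass result looks like a blurred copy of the image, while the high-pass result keeps only edges and fine texture and has zero mean because the DC term was removed.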

2: What is the frequency domain?

Red plot: the signal in the time domain;

Blue plot: the same signal in the frequency domain;

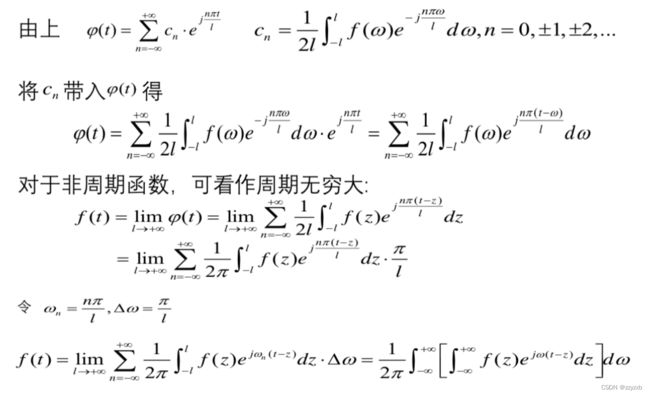
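To make the two views concrete, here is a small sketch with an assumed test signal (a 5 Hz sine plus a weaker 12 Hz sine, sampled at 64 Hz for one second; none of these values come from the figure). In the time domain the signal is a single wavy curve; in the frequency domain it reduces to two peaks.

```python
import numpy as np

sample_rate = 64                              # samples per second (assumed)
t = np.arange(sample_rate) / sample_rate      # one second of sample times
# Time-domain view: the sum of two sine waves.
signal = 1.0 * np.sin(2 * np.pi * 5 * t) + 0.5 * np.sin(2 * np.pi * 12 * t)

# Frequency-domain view: one complex coefficient per frequency bin.
spectrum = np.fft.rfft(signal)
freqs = np.fft.rfftfreq(signal.size, d=1 / sample_rate)
# Scale so that a unit-amplitude sine shows up with amplitude 1.
amplitude = 2 * np.abs(spectrum) / signal.size

# Frequencies that carry a noticeable share of the signal.
peaks = freqs[amplitude > 0.1]
print(peaks)
```

Only the bins at 5 Hz and 12 Hz pass the threshold, with amplitudes of about 1.0 and 0.5, matching the two sine waves that were added together.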

3: Origin of the Fourier transform

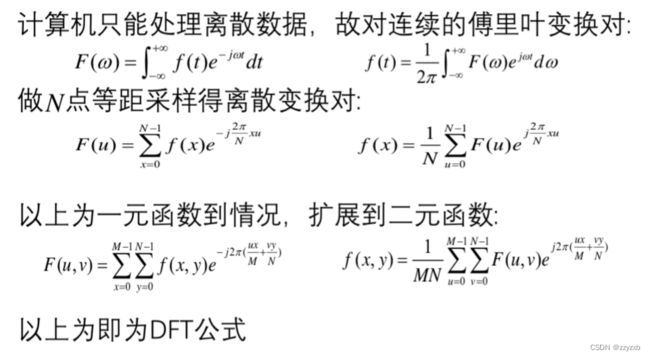

4: Fourier transform algorithms (DFT / FFT)
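As a rough illustration of the two algorithms named in this heading, the sketch below compares a direct DFT, computed from the definition X[k] = sum over n of x[n] * exp(-2j*pi*k*n/N), with a recursive radix-2 FFT. The function names `naive_dft` and `radix2_fft` are hypothetical and are not part of the source code in these notes; the sign convention matches `np.fft.fft`.

```python
import numpy as np

def naive_dft(x):
    """O(N^2) DFT computed directly from the definition."""
    x = np.asarray(x, dtype=complex)
    n = np.arange(x.size)
    # Matrix of twiddle factors W[k, n] = exp(-2j*pi*k*n/N).
    w = np.exp(-2j * np.pi * np.outer(n, n) / x.size)
    return w @ x

def radix2_fft(x):
    """O(N log N) recursive radix-2 FFT; len(x) must be a power of two."""
    x = np.asarray(x, dtype=complex)
    size = x.size
    if size == 1:
        return x
    # Transform the even-indexed and odd-indexed samples separately.
    even = radix2_fft(x[0::2])
    odd = radix2_fft(x[1::2])
    # Butterfly: combine the two half-size transforms with twiddle factors.
    twiddle = np.exp(-2j * np.pi * np.arange(size // 2) / size)
    return np.concatenate((even + twiddle * odd, even - twiddle * odd))

samples = np.random.default_rng(0).normal(size=16)
print(np.allclose(naive_dft(samples), np.fft.fft(samples)))
print(np.allclose(radix2_fft(samples), np.fft.fft(samples)))
```

Both functions compute the same coefficients; the FFT gets there faster by reusing the two half-size transforms for both halves of the output.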

5: Source code

fft2d.py

# -*- coding: utf-8 -*-
import numpy as np
import matplotlib.pyplot as plt


def rawFFT(Img, Wr, axis):
    # Recursive radix-2 FFT along `axis`. Img.shape[axis] must be a power of two (>= 2).
    # Wr holds the twiddle factors for the first half of the outputs, already
    # laid out with the same shape as the half-size sub-problems.
    if Img.shape[axis] == 2:
        # Base case: 2-point butterfly.
        pic = np.zeros(Img.shape, dtype=complex)
        if axis == 1:
            pic[:, 0] = Img[:, 0] + Img[:, 1] * Wr[:, 0]
            pic[:, 1] = Img[:, 0] - Img[:, 1] * Wr[:, 0]
        elif axis == 0:
            pic[0, :] = Img[0, :] + Img[1, :] * Wr[0, :]
            pic[1, :] = Img[0, :] - Img[1, :] * Wr[0, :]
        return pic
    else:
        pic = np.empty(Img.shape, dtype=complex)
        if axis == 1:
            # Transform the even and odd columns separately, then combine them.
            A = rawFFT(Img[:, ::2], Wr[:, ::2], axis)
            B = rawFFT(Img[:, 1::2], Wr[:, ::2], axis)
            pic[:, 0:Img.shape[1] // 2] = A + Wr * B
            pic[:, Img.shape[1] // 2:Img.shape[1]] = A - Wr * B
        elif axis == 0:
            # Transform the even and odd rows separately, then combine them.
            A = rawFFT(Img[::2, :], Wr[::2, :], axis)
            B = rawFFT(Img[1::2, :], Wr[::2, :], axis)
            pic[0:Img.shape[0] // 2, :] = A + Wr * B
            pic[Img.shape[0] // 2:Img.shape[0], :] = A - Wr * B
        return pic

def FFT_1d(Img, axis):
    # Forward 1D FFT along `axis`: builds the twiddle factors exp(-2j*pi*i/N)
    # for i in [0, N/2) and copies them across the other axis.
    Wr = np.zeros(Img.shape, dtype=complex)
    if axis == 0:
        Wr = np.zeros((Img.shape[0] // 2, Img.shape[1]), dtype=complex)
        temp = np.array([
            np.cos(2 * np.pi * i / Img.shape[0]) - 1j * np.sin(2 * np.pi * i / Img.shape[0])
            for i in range(Img.shape[0] // 2)])
        for i in range(Wr.shape[1]):
            Wr[:, i] = temp
    elif axis == 1:
        Wr = np.zeros((Img.shape[0], Img.shape[1] // 2), dtype=complex)
        temp = np.array([
            np.cos(2 * np.pi * i / Img.shape[1]) - 1j * np.sin(2 * np.pi * i / Img.shape[1])
            for i in range(Img.shape[1] // 2)])
        for i in range(Wr.shape[0]):
            Wr[i, :] = temp
    return rawFFT(Img, Wr, axis)

def iFFT_1d(Img, axis):
    # Inverse 1D FFT along `axis`: same recursion as FFT_1d, but with conjugated
    # twiddle factors exp(+2j*pi*i/N) and a final 1/N scaling.
    Wr = np.zeros(Img.shape, dtype=complex)
    if axis == 0:
        Wr = np.zeros((Img.shape[0] // 2, Img.shape[1]), dtype=complex)
        temp = np.array([
            np.cos(2 * np.pi * i / Img.shape[0]) + 1j * np.sin(2 * np.pi * i / Img.shape[0])
            for i in range(Img.shape[0] // 2)])
        for i in range(Wr.shape[1]):
            Wr[:, i] = temp
    elif axis == 1:
        Wr = np.zeros((Img.shape[0], Img.shape[1] // 2), dtype=complex)
        temp = np.array([
            np.cos(2 * np.pi * i / Img.shape[1]) + 1j * np.sin(2 * np.pi * i / Img.shape[1])
            for i in range(Img.shape[1] // 2)])
        for i in range(Wr.shape[0]):
            Wr[i, :] = temp
    return rawFFT(Img, Wr, axis) * (1.0 / Img.shape[axis])

def FFT_2d(Img):
    '''
    Only for grayscale 2D images; otherwise returns an all-zero array with the same shape as Img.
    :param Img: image to be Fourier transformed
    :return: the transformed image
    '''
    imgsize = Img.shape
    pic = np.zeros(imgsize, dtype=complex)
    if len(imgsize) == 2:
        # Find the padding needed to reach the next power of two on each axis.
        N = 2
        while N < imgsize[0]:
            N = N << 1
        num1 = N - imgsize[0]
        N = 2
        while N < imgsize[1]:
            N = N << 1
        num2 = N - imgsize[1]
        # Pad with edge values, transform along one axis, then crop back.
        # For sizes that are already powers of two no padding is added.
        pic = FFT_1d(np.pad(Img, ((num1 // 2, num1 - num1 // 2), (0, 0)), 'edge'), 0)[
              num1 // 2:num1 // 2 + imgsize[0], :]
        pic = FFT_1d(np.pad(pic, ((0, 0), (num2 // 2, num2 - num2 // 2)), 'edge'), 1)[
              :, num2 // 2:num2 // 2 + imgsize[1]]
    return pic

def iFFT_2d(Img):
    '''
    Only for grayscale 2D images; otherwise returns an all-zero array with the same shape as Img.
    :param Img: spectrum to be inverse Fourier transformed
    :return: the inverse-transformed image
    '''
    imgsize = Img.shape
    pic = np.zeros(imgsize, dtype=complex)
    if len(imgsize) == 2:
        # Find the padding needed to reach the next power of two on each axis.
        N = 2
        while N < imgsize[0]:
            N = N << 1
        num1 = N - imgsize[0]
        N = 2
        while N < imgsize[1]:
            N = N << 1
        num2 = N - imgsize[1]
        pic = iFFT_1d(np.pad(Img, ((num1 // 2, num1 - num1 // 2), (0, 0)), 'edge'), 0)[
              num1 // 2:num1 // 2 + imgsize[0], :]  # ,constant_values=(255,255)
        pic = iFFT_1d(np.pad(pic, ((0, 0), (num2 // 2, num2 - num2 // 2)), 'edge'), 1)[
              :, num2 // 2:num2 // 2 + imgsize[1]]  # ,constant_values=(255,255)
    return pic

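if __name__ == "__main__":
    img = plt.imread('./img/1.jpg')
    img = img.mean(2)  # gray
    plt.imshow(img.astype(np.uint8), cmap='gray')
    plt.axis('off')
    plt.show()

    # Compare the hand-written FFT against NumPy on a 256x256 crop.
    # np.allclose uses a relative tolerance, which is more robust than a fixed
    # absolute threshold when the spectrum contains very large values.
    F1 = np.fft.fft2(img[:256, :256])
    F2 = FFT_2d(img[:256, :256])
    print(np.allclose(F1, F2))

    F1 = np.fft.ifft2(F1)
    F2 = iFFT_2d(F2)
    print(np.allclose(F1, F2))

    # Show the image recovered by the hand-written inverse FFT.
    F2 = np.abs(F2)
    F2[F2 > 255] = 255
    plt.imshow(F2.astype(np.uint8), cmap='gray')
    plt.axis('off')
    plt.show()

    # Show the image recovered by NumPy's inverse FFT.
    F1 = np.abs(F1)
    F1[F1 > 255] = 255
    plt.imshow(F1.astype(np.uint8), cmap='gray')
    plt.axis('off')
    plt.show()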
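`FFT_2d` in the listing above relies on the 2D transform being separable: a 1D FFT along axis 0 followed by a 1D FFT along axis 1. The short check below, using assumed random test data, confirms that property with NumPy. It also illustrates a caveat that seems to apply to the pad-and-crop step: for a length that is not a power of two, padding with edge values, transforming, and cropping does not in general reproduce the DFT of the original data, which may be why the test above uses a 256x256 crop.

```python
import numpy as np

rng = np.random.default_rng(1)

# Separability: 1D FFT over axis 0, then over axis 1, equals the 2D FFT.
image = rng.random((8, 16))
along_axis0 = np.fft.fft(image, axis=0)
along_both = np.fft.fft(along_axis0, axis=1)
separable_ok = np.allclose(along_both, np.fft.fft2(image))

# Caveat: pad a length-6 signal to length 8 with edge values, transform, crop.
signal = rng.random(6)
padded = np.pad(signal, (1, 1), 'edge')
cropped = np.fft.fft(padded)[1:7]
pad_crop_matches = np.allclose(cropped, np.fft.fft(signal))

print(separable_ok, pad_crop_matches)
```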

Presentation.py

import matplotlib.pyplot as plt
import numpy as np

if __name__ == '__main__':
    img = plt.imread('./img/raccoon.jpg')
    plt.imshow(img, cmap=plt.cm.gray)
    plt.axis('off')
    plt.show()

    # Convert to grayscale by averaging the color channels.
    img = img.mean(axis=-1)
    # plt.imsave('gray_raccoon.jpg', np.dstack((img.astype(np.uint8), img.astype(np.uint8), img.astype(np.uint8))))

    # 2D FFT, then move the zero-frequency term to the center of the spectrum.
    img = np.fft.fft2(img)
    img = np.fft.fftshift(img)
    fourier = np.abs(img)
    # Log scale for display; the +1 avoids log(0). The float values are shown
    # directly, since casting to uint8 would leave only a handful of gray levels.
    magnitude_spectrum = np.log(fourier + 1)
    plt.imshow(magnitude_spectrum, cmap=plt.cm.gray)
    plt.axis('off')
    plt.show()  # image after fourier transform
    # plt.imsave('fourier_raccoon.jpg', 14*np.dstack((magnitude_spectrum.astype(np.uint8),magnitude_spectrum.astype(np.uint8),magnitude_spectrum.astype(np.uint8))))

    x, y = img.shape
    # Low-pass: keep only a small window around the center of the shifted spectrum.
    lowF = np.zeros((x, y), dtype=np.complex128)
    window_shape = (20, 20)
    cx, cy = x // 2, y // 2
    lowF[cx - window_shape[0]:cx + window_shape[0],
         cy - window_shape[1]:cy + window_shape[1]] = \
        img[cx - window_shape[0]:cx + window_shape[0],
            cy - window_shape[1]:cy + window_shape[1]]
    # Undo the earlier fftshift before inverting. Skipping this step only changes
    # the phase of the result, so the magnitude taken below is the same either way.
    lowF_im = np.fft.ifft2(np.fft.ifftshift(lowF))
    lowF_im = np.abs(lowF_im)
    lowF_im[lowF_im > 255] = 255
    plt.imshow(lowF_im.astype(np.uint8), cmap='gray')
    plt.axis('off')
    plt.show()
    # plt.imsave('LowF_raccoon.jpg', np.dstack((lowF_im.astype(np.uint8), lowF_im.astype(np.uint8), lowF_im.astype(np.uint8))))

    # High-pass: keep only bands along the borders of the shifted spectrum and
    # zero the center. The band width of 370 is tuned to this image; if a side is
    # 740 pixels or shorter, the bands cover everything and nothing is removed.
    highF = np.zeros((x, y), dtype=np.complex128)
    window_shape = (370, 370)
    highF[0:window_shape[0], :] = img[0:window_shape[0], :]
    highF[x - window_shape[0]:x, :] = img[x - window_shape[0]:x, :]
    highF[:, 0:window_shape[1]] = img[:, 0:window_shape[1]]
    highF[:, y - window_shape[1]:y] = img[:, y - window_shape[1]:y]
    # Undo the earlier fftshift before inverting (this affects only the phase).
    highF_im = np.fft.ifft2(np.fft.ifftshift(highF))
    highF_im = np.abs(highF_im)
    highF_im[highF_im > 255] = 255
    plt.imshow(highF_im.astype(np.uint8), cmap='gray')
    plt.axis('off')
    plt.show()
    # plt.imsave('HighF_raccoon.jpg', np.dstack((highF_im.astype(np.uint8), highF_im.astype(np.uint8), highF_im.astype(np.uint8))))

LiveFourierTransform.py

import numpy as np
import time
import cv2


def LiveFFT(rows, columns, numShots=1e5, nContrIms=30):
    # Note: `rows` is checked against the frame width and `columns` against the
    # frame height, so they act as the crop width and crop height respectively.
    imMin = .004  # minimum allowed value of any pixel of the captured image
    window_name = 'Livefft by lixin'
    vc = cv2.VideoCapture(0)  # camera device
    cv2.namedWindow(window_name, 0)  # 0 makes it work a bit better
    cv2.resizeWindow(window_name, 1024, 768)  # this doesn't keep
    rval, frame = vc.read()
    # we need to wait a bit before we get decent images
    print("warming up camera... (.1s)")
    time.sleep(.1)
    rval = vc.grab()
    rval, frame = vc.retrieve()
    if not rval:
        vc.release()
        raise RuntimeError("could not read a frame from camera 0")

    # determine if we are not asking too much
    frameShape = np.shape(frame)
    if rows > frameShape[1]:
        rows = frameShape[1]
    if columns > frameShape[0]:
        columns = frameShape[0]

    # calculate crop (a centered window of the requested size)
    vCrop = np.array([np.ceil(frameShape[0] / 2. - columns / 2.),
                      np.floor(frameShape[0] / 2. + columns / 2.)], dtype=int)
    hCrop = np.array([np.ceil(frameShape[1] / 2. - rows / 2.),
                      np.floor(frameShape[1] / 2. + rows / 2.)], dtype=int)

    # running contrast over the last nContrIms frames:
    # column 0 stores per-frame minima, column 1 stores per-frame maxima
    contrast = np.concatenate((
        np.zeros((nContrIms, 1)),
        np.ones((nContrIms, 1))),
        axis=1)

    Nr = 0
    # main loop
    while Nr <= numShots:
        a = time.time()
        Nr += 1
        contrast = work_func(vCrop, hCrop, vc, imMin, window_name, contrast)
        print('framerate = {} fps \r'.format(1. / (time.time() - a)))

    # stop camera
    vc.release()

def work_func(vCrop, hCrop, vc, imMin, figid, contrast):
    # read image
    rval = vc.grab()
    rval, im = vc.retrieve()
    im = np.array(im, dtype=float)

    # crop image
    im = im[vCrop[0]: vCrop[1], hCrop[0]: hCrop[1], :]

    # pyramid downscaling
    # im = cv2.pyrDown(im)

    # reduce dimensionality (average the color channels)
    im = np.mean(im, axis=2, dtype=float)

    # make sure we have no zeros
    im = (im - im.min()) / (im.max() - im.min())
    im = np.maximum(im, imMin)

    # centered power spectrum
    Intensity = np.abs(np.fft.fftshift(np.fft.fft2(im))) ** 2
    Intensity += imMin

    # kill the center lines for higher dynamic range
    # by copying the next row/column
    # h, w = np.shape(Intensity)
    # Intensity[(h / 2 - 1):(h / 2 + 1), :] = Intensity[(h / 2 + 1):(h / 2 + 3), :]
    # Intensity[:, (w / 2 - 1):(w / 2 + 1)] = Intensity[:, (w / 2 + 1):(w / 2 + 3)]

    # running average of contrast
    # circshift contrast matrix up by one row
    contrast = np.roll(contrast, -1, axis=0)
    # replace bottom values with new values for minimum and maximum
    contrast[-1, :] = [np.min(Intensity), np.max(Intensity)]

    maxContrast = 1
    minContrast = 7  # to be tuned

    # openCV draw: map the log spectrum into [0, 1] using the running contrast
    vmin = np.log(contrast[:, 0].mean()) + minContrast
    vmax = np.log(contrast[:, 1].mean()) - maxContrast
    Intensity = (np.log(Intensity + imMin) - vmin) / (vmax - vmin)
    Intensity = Intensity.clip(0., 1.)
    # Intensity = (Intensity - Intensity.min()) / (Intensity.max() - Intensity.min())

    time.sleep(.01)
    # show the camera image and its spectrum side by side
    cv2.imshow(figid, np.concatenate((im, Intensity), axis=1))
    cv2.waitKey(1)
    return contrast

if __name__ == '__main__':
    LiveFFT(400, 400)
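The per-frame processing in `work_func` can be tried without a camera. The sketch below is a simplified stand-in, not the script's exact logic: the function name `log_power_spectrum` is hypothetical, the striped test frame is an assumption, and it rescales each frame by its own minimum and maximum instead of the running contrast average and the `minContrast` / `maxContrast` offsets used above.

```python
import numpy as np

def log_power_spectrum(frame, floor=0.004):
    """Centered power spectrum of a 2D frame, log-scaled into [0, 1]."""
    span = frame.max() - frame.min()
    # Guard against a flat frame, where min-max normalization would divide by 0.
    normalized = (frame - frame.min()) / span if span > 0 else np.zeros_like(frame)
    normalized = np.maximum(normalized, floor)
    # Power spectrum with the zero-frequency term moved to the center.
    power = np.abs(np.fft.fftshift(np.fft.fft2(normalized))) ** 2 + floor
    log_power = np.log(power)
    lo, hi = log_power.min(), log_power.max()
    return (log_power - lo) / (hi - lo) if hi > lo else np.zeros_like(log_power)

# Test frame: vertical stripes with 8 cycles across a 64-pixel width.
rows, cols = np.mgrid[0:64, 0:64]
stripes = np.sin(2 * np.pi * 8 * cols / 64)
display = log_power_spectrum(stripes)

brightest = np.unravel_index(display.argmax(), display.shape)
print(brightest, display[32, 24], display[32, 40])
```

The brightest point is the center (the mean brightness of the frame), and the stripes show up as two bright spots 8 bins to the left and right of it, which is the kind of pattern the live view displays for a striped object.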

Excerpted from: the notes of 会飞的吴克

A video is available on Bilibili!