Object Detection Study Notes: YOLO v1 Explained, with a TensorFlow Implementation (Illustrated)

Contents

1. Introduction

2. The YOLO v1 model

2.1. Grid cells and related details

2.2. Network design

2.3. The YOLO v1 loss function

2.3.1. Classification loss

2.3.2. Localization loss

2.3.3. Confidence loss

2.3.4. The final multi-task loss

2.4. Non-maximal suppression (NMS)

2.4.1. Walkthrough of the non-maximal suppression process

2.5. The YOLO v1 pipeline

2.6. Advantages and disadvantages of YOLO v1

2.6.1. Advantages

2.6.2. Disadvantages

3. TensorFlow code for YOLO v1

3.1. pascal_voc.py code

3.2. timer.py code

3.3. config.py code

3.4. yolo_net.py code

3.5. train.py code

3.6. test.py code

4. References

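1. Introduction

Although Faster R-CNN already achieves high detection accuracy, it still cannot meet real-time requirements, which makes regression-based deep-learning detectors especially important. YOLO takes this regression approach: given an input image, it directly regresses the bounding boxes and object classes at different locations in the image.

YOLO v1 does not train on region proposals; it trains on the whole image. The benefit is that it separates objects from background more quickly; the cost is that the gain in detection speed comes at the expense of detection accuracy.

The figure below summarizes YOLO v1:

2. The YOLO v1 model

2.1. Grid cells and related details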

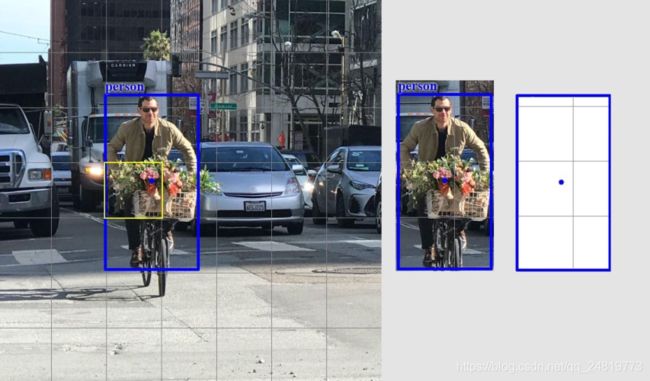

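YOLO v1 divides the input image into an S x S grid. Each grid cell predicts only one object.

In the figure, the center of the "Person" object (blue dot) falls in the yellow grid cell.

For each grid cell, the model:

1. predicts B bounding boxes, each with a box confidence score;

2. detects only one object, regardless of the number of boxes B;

3. predicts C conditional class probabilities, one value per possible object class.

For evaluation on the Pascal VOC dataset, YOLO v1 uses a 7x7 grid (S x S), 2 bounding boxes per cell (B), and 20 classes (C).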

-

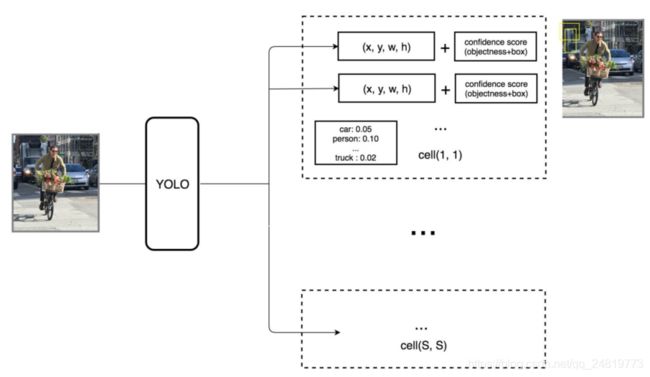

系统将检测目标模型作为回归问题,将图像划分为SxS个网格,并且每个网格单元预测B个边界框、框的置信度以及C个类别概率;

-

为了评估Pascal voc数据集,YOLO V1使用了7x7的网格(SxS), 每个网格使用2个边界框(B),和20个类别(C),最终一张图像的预测结果是一个7x7x30的张量Tensor;

-

每个边界框有5个元素:(x, y, w, h, s)==>框的中心位置坐标(x,y),边界框的宽度和高度(w,h)以及框的置信度得分score=s;

-

框置信度得分(Box Confidence Score):反映了框包含目标物体(Objectness)的可能性大小,以及边框的准确度。

-

将边界框宽度w和高度h用图像宽度和高度进行归一化。因此,x,y的值都在0-1之间
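As a minimal sketch of this parameterization, the function below maps a pixel-space box to its responsible cell and to (x, y, w, h) values in the 0 to 1 range, following the YOLO v1 paper's convention that x and y are offsets inside the responsible cell. The name `encode_box` and the sample box are illustrative assumptions and do not come from the code later in this post, which stores box coordinates in pixels.

```python
def encode_box(xmin, ymin, xmax, ymax, img_w, img_h, S=7):
    """Map a pixel-space box to (row, col, x_off, y_off, w, h)."""
    # Box centre and size, normalised by the image dimensions.
    cx = (xmin + xmax) / 2.0 / img_w
    cy = (ymin + ymax) / 2.0 / img_h
    w = (xmax - xmin) / float(img_w)
    h = (ymax - ymin) / float(img_h)
    # The cell that contains the centre is responsible for the object.
    col = min(int(cx * S), S - 1)
    row = min(int(cy * S), S - 1)
    # Offsets of the centre inside that cell, each in [0, 1).
    x_off = cx * S - col
    y_off = cy * S - row
    return row, col, x_off, y_off, w, h


# A 200x200 box centred at (200, 250) in a 448x448 image lands in cell (3, 3).
print(encode_box(100, 150, 300, 350, 448, 448))
```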

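2.2. Network design

YOLO v1 has 24 convolutional layers followed by 2 fully connected layers; some convolutional layers alternate with 1x1 reduction layers to reduce the depth of the feature maps. The last convolutional layer outputs a tensor of shape (7, 7, 1024), which is flattened and passed through the 2 fully connected layers, acting as a linear regressor. The final fully connected layer outputs 7*7*30 values, which are reshaped into a (7, 7, 30) tensor. In other words, for each image the network outputs an S x S x (B x 5 + C) tensor, where B is the number of bounding boxes per grid cell and C is the number of classes in the dataset. In the paper's setup each image is divided into a 7x7 grid, each cell uses 2 bounding boxes, and Pascal VOC has 20 classes, which gives 7 x 7 x (2 x 5 + 20) = 7 x 7 x 30.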
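The output size can be checked with a few lines of arithmetic. This is only a sketch; the helper name `yolo_output_shape` is an assumption introduced for illustration.

```python
def yolo_output_shape(S=7, B=2, C=20):
    """Return the output tensor shape and the flat length of the last layer."""
    depth = B * 5 + C          # 5 values per box plus C class probabilities
    return (S, S, depth), S * S * depth


shape, flat_len = yolo_output_shape()
print(shape, flat_len)  # (7, 7, 30) 1470
```

Changing S, B or C in the call shows how the size of the final fully connected layer scales with the grid and the number of classes.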

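All layers except the final one, which is linear, use the Leaky ReLU activation. It avoids the dying-ReLU problem, in which units that only receive negative inputs output zero and stop updating, while behaving exactly like ReLU for positive inputs. This helps the network converge quickly and mitigates vanishing gradients.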

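The network mostly uses small 1x1 and 3x3 kernels, which avoids the large parameter counts of big kernels, speeds up training, and makes prediction considerably faster.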
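A rough parameter count illustrates the saving from a 1x1 reduction layer. The sketch below compares a direct 3x3 convolution from 512 to 512 channels with a 1x1 reduction to 256 channels followed by a 3x3 convolution back to 512, the pattern used by `conv_11` and `conv_12` in the yolo_net.py listing. The helper `conv_params` is a hypothetical name, and biases are ignored.

```python
def conv_params(k, c_in, c_out):
    """Weight count of a k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


direct = conv_params(3, 512, 512)
reduced = conv_params(1, 512, 256) + conv_params(3, 256, 512)
print(direct, reduced)  # 2359296 1310720
```

Under these assumptions the reduced pair needs a little over half the weights of the direct 3x3 layer.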

Basic network architecture of YOLO v1:

Breakdown of the 7*7*30 output tensor:

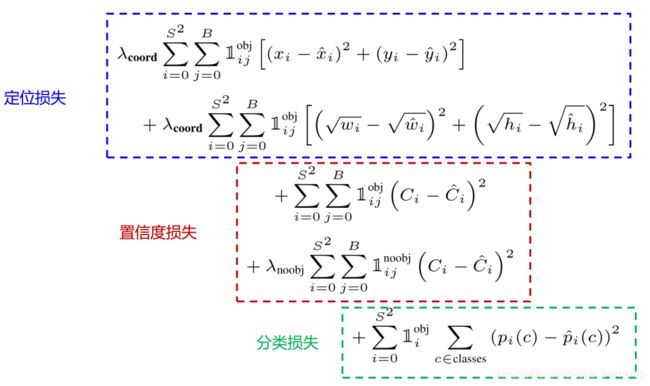

2.3. The YOLO v1 loss function

The loss function consists of:

1. classification loss

2. localization loss

3. confidence loss (the box confidence loss)

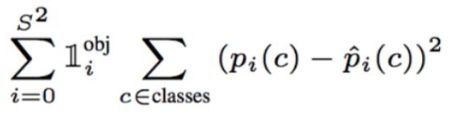

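2.3.1. Classification loss

If an object is detected, the classification loss of each cell is the squared error of the conditional class probabilities over all classes:

$$\sum_{i=0}^{S^2} \mathbb{1}_i^{\text{obj}} \sum_{c \in \text{classes}} \left(p_i(c) - \hat{p}_i(c)\right)^2$$

where $\mathbb{1}_i^{\text{obj}}$ is 1 if an object appears in cell $i$ and 0 otherwise;

$\hat{p}_i(c)$ is the predicted conditional probability of class $c$ in cell $i$;

$p_i(c)$ is the ground-truth probability of class $c$ in cell $i$.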
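A minimal pure-Python sketch of this term, assuming each cell is represented by a list of class probabilities and a 0/1 object indicator (the function name and the toy numbers are illustrative assumptions):

```python
def classification_loss(pred, truth, has_obj):
    """Sum of squared class-probability errors over cells that contain an object.

    pred, truth: one list of class probabilities per cell.
    has_obj: one 0/1 flag per cell (the indicator 1_i^obj).
    """
    total = 0.0
    for p_hat, p, obj in zip(pred, truth, has_obj):
        if obj:  # cells without an object contribute nothing
            total += sum((a - b) ** 2 for a, b in zip(p, p_hat))
    return total


# Two cells, two classes; only the first cell contains an object.
loss = classification_loss([[0.8, 0.2], [0.5, 0.5]],
                           [[1.0, 0.0], [0.0, 0.0]],
                           [1, 0])
print(round(loss, 6))
```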

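2.3.2. Localization loss

The localization loss measures the error in the predicted box position and box size. Only the box responsible for detecting the object is counted.

$$\lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[(x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2\right] + \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[\left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2\right]$$

where $\mathbb{1}_{ij}^{\text{obj}}$ is 1 if the $j$-th bounding box predictor in cell $i$ is responsible for the prediction and 0 otherwise;

$(x_i, y_i, w_i, h_i)$ are the box center (x, y) and the box width and height (w, h) in grid cell $i$; hatted symbols denote the predictions.

Notes:

- Absolute errors should not be weighted equally in large and small boxes: a 2-pixel error in a large box does not matter as much as the same error in a small box.

- To partially address this, YOLO predicts the square roots of the box width and height rather than the width and height themselves. In addition, to put more emphasis on box accuracy, the loss is multiplied by $\lambda_{\text{coord}}$ (default: 5).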
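The effect of the square root can be seen numerically. The sketch below computes the localization term for a single responsible box, assuming normalized (x, y, w, h) tuples; the function name and sample values are illustrative assumptions.

```python
import math


def localization_loss(box, box_hat, lambda_coord=5.0):
    """Localization term for one responsible box, boxes given as (x, y, w, h)."""
    x, y, w, h = box
    xh, yh, wh, hh = box_hat
    center = (x - xh) ** 2 + (y - yh) ** 2
    # Square roots make a given absolute size error cost more on small boxes.
    size = (math.sqrt(w) - math.sqrt(wh)) ** 2 + (math.sqrt(h) - math.sqrt(hh)) ** 2
    return lambda_coord * (center + size)


# Same absolute size error (0.05) on a small and on a large box.
small = localization_loss((0.5, 0.5, 0.10, 0.10), (0.5, 0.5, 0.15, 0.15))
large = localization_loss((0.5, 0.5, 0.80, 0.80), (0.5, 0.5, 0.85, 0.85))
print(small > large)  # True
```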

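2.3.3. Confidence loss

1. If an object is detected in the box, the confidence loss is:

$$\sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left(C_i - \hat{C}_i\right)^2$$

2. If no object is detected in the box, the confidence loss is:

$$\lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left(C_i - \hat{C}_i\right)^2$$

Here $C_i$ is the target confidence, $\hat{C}_i$ the predicted confidence, and $\mathbb{1}_{ij}^{\text{noobj}}$ is the complement of $\mathbb{1}_{ij}^{\text{obj}}$.

Notes:

- Most boxes do not contain any object. This causes a class-imbalance problem: during training the model sees background far more often than objects. To compensate, the no-object loss is scaled down by the factor $\lambda_{\text{noobj}}$ (default: 0.5).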
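A small sketch of the two confidence terms, assuming one predicted confidence, one target confidence and one 0/1 responsibility flag per box, with a target of 0 for boxes that contain no object (names and numbers are illustrative assumptions):

```python
def confidence_loss(conf_hat, conf_target, responsible, lambda_noobj=0.5):
    """Confidence loss over a flat list of boxes."""
    loss = 0.0
    for c_hat, c, r in zip(conf_hat, conf_target, responsible):
        if r:
            # Box responsible for an object: plain squared error.
            loss += (c - c_hat) ** 2
        else:
            # Box without an object: target is 0, down-weighted by lambda_noobj.
            loss += lambda_noobj * (0.0 - c_hat) ** 2
    return loss


print(round(confidence_loss([0.9, 0.2], [1.0, 0.0], [1, 0]), 6))  # 0.03
```

Note that the config.py listing later in this post sets `NOOBJECT_SCALE = 1.0`, so that implementation does not down-weight the no-object term by 0.5.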

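2.3.4. The final multi-task loss

The final loss adds the localization, confidence and classification losses together:

$$\begin{aligned}
\mathcal{L} ={}& \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[(x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2\right] \\
&+ \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[\left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2\right] \\
&+ \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left(C_i - \hat{C}_i\right)^2
+ \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left(C_i - \hat{C}_i\right)^2 \\
&+ \sum_{i=0}^{S^2} \mathbb{1}_i^{\text{obj}} \sum_{c \in \text{classes}} \left(p_i(c) - \hat{p}_i(c)\right)^2
\end{aligned}$$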

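2.4. Non-maximal suppression (NMS)

YOLO may detect the same object more than once. To fix this, YOLO applies non-maximal suppression to remove duplicates with lower confidence. Non-maximal suppression adds 2-3% mAP.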

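One possible implementation of non-maximal suppression:

1. Sort the predictions by confidence score;

2. Starting from the highest score, ignore the current prediction if any previously kept prediction has the same class and an IoU > 0.5 with it;

3. Repeat step 2 until all predictions have been checked.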
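These steps can be sketched in pure Python as follows. The detection format `(class_name, score, box)` with center-format boxes `(x_center, y_center, w, h)`, the function names, and the sample detections are assumptions made for illustration. Unlike this sketch, the test.py listing later in the post suppresses overlapping boxes without comparing their classes.

```python
def iou(a, b):
    """IoU of two boxes given as (x_center, y_center, w, h)."""
    ix = min(a[0] + a[2] / 2.0, b[0] + b[2] / 2.0) - max(a[0] - a[2] / 2.0, b[0] - b[2] / 2.0)
    iy = min(a[1] + a[3] / 2.0, b[1] + b[3] / 2.0) - max(a[1] - a[3] / 2.0, b[1] - b[3] / 2.0)
    inter = ix * iy if ix > 0 and iy > 0 else 0.0
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nms(dets, iou_thresh=0.5):
    """Class-aware greedy NMS over a list of (class_name, score, box)."""
    kept = []
    # Step 1: visit predictions from the highest score to the lowest.
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        cls, _, box = det
        # Step 2: drop it if a kept box of the same class overlaps too much.
        if all(k[0] != cls or iou(k[2], box) <= iou_thresh for k in kept):
            kept.append(det)
    return kept


dets = [("dog", 0.9, (50, 50, 40, 40)),
        ("dog", 0.8, (52, 50, 40, 40)),   # near-duplicate of the first box
        ("cat", 0.7, (50, 50, 40, 40)),   # same place, different class
        ("dog", 0.6, (150, 150, 40, 40))]
print([d[1] for d in nms(dets)])  # [0.9, 0.7, 0.6]
```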

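2.4.1. Walkthrough of the non-maximal suppression process

First, each image is divided into a 7x7 grid; after the convolutional layers, the fully connected layers and a reshape, every cell yields a 1x30 tensor. In this length-30 vector (a one-dimensional tensor can be called a vector), elements 1 to 5 are the parameters of the first bounding box (x1, y1, w1, h1, score1) and elements 6 to 10 are those of the second bounding box (x2, y2, w2, h2, score2); the remaining 20 elements are the class probabilities.

Since each of the 7x7 cells outputs such a 1x30 tensor containing two boxes, the network produces 7*7*2 = 98 bounding boxes in total, which are the candidates for non-maximal suppression.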
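The per-cell layout described above can be sketched as a simple slicing helper. The function name is an assumption, and the ordering follows the description in this section (boxes first, then class probabilities); the TensorFlow listing later in the post arranges its flat output differently.

```python
def split_cell_vector(v, B=2, C=20):
    """Split one cell's vector into B boxes of (x, y, w, h, score) and C class probabilities."""
    assert len(v) == B * 5 + C
    boxes = [tuple(v[i * 5:(i + 1) * 5]) for i in range(B)]
    class_probs = list(v[B * 5:])
    return boxes, class_probs


# Use the element index as a dummy value to make the layout visible.
boxes, class_probs = split_cell_vector(list(range(30)))
print(boxes)             # [(0, 1, 2, 3, 4), (5, 6, 7, 8, 9)]
print(len(class_probs))  # 20
print(7 * 7 * len(boxes))  # 98 candidate boxes per image
```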

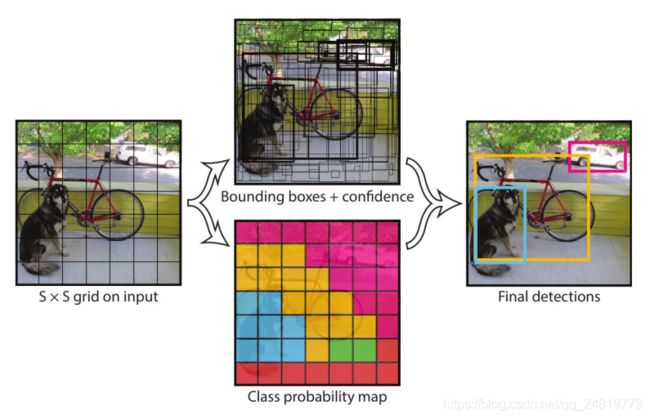

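2.5. The YOLO v1 pipeline

- Resize the input image to a fixed size (for example 448x448) and divide it into an S x S grid;

- Pass the image through the CNN up to the first fully connected layer (FC1);

- Each grid cell predicts B bounding boxes, each with 4 location values and 1 confidence score. Each cell also predicts C class probabilities, which gives an S x S x (B x 5 + C) output tensor. The class probabilities are multiplied by the box confidences to obtain class-specific scores for every box (YOLO v1 regresses these values directly rather than applying a softmax);

- Discard boxes whose class score is below a threshold, then remove redundant boxes with non-maximal suppression (NMS) to obtain the final bounding boxes.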
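The scoring and thresholding step for a single cell can be sketched as below, assuming a list of class probabilities and a list of box confidences as inputs. The function name and the toy values are illustrative; the default threshold of 0.2 matches `THRESHOLD` in the config.py listing.

```python
def class_scores(class_probs, box_confidences, threshold=0.2):
    """Return (box_index, class_index, score) for every score >= threshold."""
    kept = []
    for b, conf in enumerate(box_confidences):
        for c, prob in enumerate(class_probs):
            score = prob * conf  # class-specific confidence of box b
            if score >= threshold:
                kept.append((b, c, score))
    return kept


# One cell with 3 classes and 2 boxes: only box 0 / class 1 survives.
print(class_scores([0.1, 0.7, 0.2], [0.9, 0.2]))
```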

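2.6. Advantages and disadvantages of YOLO v1

2.6.1. Advantages

- Fast: 45 FPS, and Fast YOLO reaches 155 FPS, which makes it suitable for real-time processing.

- Object locations and classes are predicted by a single network, which can be trained end to end to improve accuracy.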

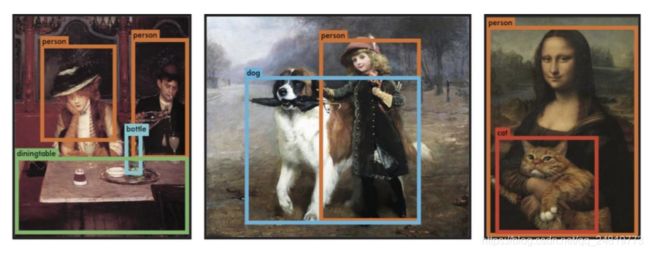

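- YOLO generalizes better. When transferring from natural images to other domains (such as artwork), it outperforms other detection methods.

2.6.2. Disadvantages

- Detection is poor for small objects and for objects close to each other: results degrade when more than two small objects, or several objects of different classes, fall into the same cell.

- Reason: each cell predicts only B bounding boxes and a single set of class probabilities, so YOLO assumes that all boxes falling into the same cell belong to the same class.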

3. TensorFlow code for YOLO v1

3.1. pascal_voc.py code

pascal_voc.py defines the network input: it reads the VOC2007 data, normalizes the images, and splits the data into batches.

import os
import xml.etree.ElementTree as ET
import numpy as np
import cv2
import pickle
import copy
import yolo.config as cfg


class pascal_voc(object):
    def __init__(self, phase, rebuild=False):
        self.devkil_path = os.path.join(cfg.PASCAL_PATH, 'VOCdevkit')
        self.data_path = os.path.join(self.devkil_path, 'VOC2007')
        self.cache_path = cfg.CACHE_PATH
        self.batch_size = cfg.BATCH_SIZE
        self.image_size = cfg.IMAGE_SIZE
        self.cell_size = cfg.CELL_SIZE
        self.classes = cfg.CLASSES
        self.class_to_ind = dict(zip(self.classes, range(len(self.classes))))
        self.flipped = cfg.FLIPPED
        self.phase = phase
        self.rebuild = rebuild
        self.cursor = 0
        self.epoch = 1
        self.gt_labels = None
        self.prepare()

    def get(self):
        images = np.zeros(
            (self.batch_size, self.image_size, self.image_size, 3))
        labels = np.zeros(
            (self.batch_size, self.cell_size, self.cell_size, 25))
        count = 0
        while count < self.batch_size:
            imname = self.gt_labels[self.cursor]['imname']
            flipped = self.gt_labels[self.cursor]['flipped']
            images[count, :, :, :] = self.image_read(imname, flipped)
            labels[count, :, :, :] = self.gt_labels[self.cursor]['label']
            count += 1
            self.cursor += 1
            if self.cursor >= len(self.gt_labels):
                np.random.shuffle(self.gt_labels)
                self.cursor = 0
                self.epoch += 1
        return images, labels

    def image_read(self, imname, flipped=False):
        image = cv2.imread(imname)
        image = cv2.resize(image, (self.image_size, self.image_size))
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB).astype(np.float32)
        image = (image / 255.0) * 2.0 - 1.0
        if flipped:
            image = image[:, ::-1, :]
        return image

    def prepare(self):
        gt_labels = self.load_labels()
        if self.flipped:
            print('Appending horizontally-flipped training examples ...')
            gt_labels_cp = copy.deepcopy(gt_labels)
            for idx in range(len(gt_labels_cp)):
                gt_labels_cp[idx]['flipped'] = True
                gt_labels_cp[idx]['label'] =\
                    gt_labels_cp[idx]['label'][:, ::-1, :]
                for i in range(self.cell_size):
                    for j in range(self.cell_size):
                        if gt_labels_cp[idx]['label'][i, j, 0] == 1:
                            gt_labels_cp[idx]['label'][i, j, 1] = \
                                self.image_size - 1 -\
                                gt_labels_cp[idx]['label'][i, j, 1]
            gt_labels += gt_labels_cp
        np.random.shuffle(gt_labels)
        self.gt_labels = gt_labels
        return gt_labels

    def load_labels(self):
        cache_file = os.path.join(
            self.cache_path, 'pascal_' + self.phase + '_gt_labels.pkl')

        if os.path.isfile(cache_file) and not self.rebuild:
            print('Loading gt_labels from: ' + cache_file)
            with open(cache_file, 'rb') as f:
                gt_labels = pickle.load(f)
            return gt_labels

        print('Processing gt_labels from: ' + self.data_path)

        if not os.path.exists(self.cache_path):
            os.makedirs(self.cache_path)

        if self.phase == 'train':
            txtname = os.path.join(
                self.data_path, 'ImageSets', 'Main', 'trainval.txt')
        else:
            txtname = os.path.join(
                self.data_path, 'ImageSets', 'Main', 'test.txt')
        with open(txtname, 'r') as f:
            self.image_index = [x.strip() for x in f.readlines()]

        gt_labels = []
        for index in self.image_index:
            label, num = self.load_pascal_annotation(index)
            if num == 0:
                continue
            imname = os.path.join(self.data_path, 'JPEGImages', index + '.jpg')
            gt_labels.append({'imname': imname,
                              'label': label,
                              'flipped': False})
        print('Saving gt_labels to: ' + cache_file)
        with open(cache_file, 'wb') as f:
            pickle.dump(gt_labels, f)
        return gt_labels

    def load_pascal_annotation(self, index):
        """
        Load image and bounding boxes info from XML file in the PASCAL VOC
        format.
        """

        imname = os.path.join(self.data_path, 'JPEGImages', index + '.jpg')
        im = cv2.imread(imname)
        # Scale factors from the original image size to the network input size
        h_ratio = 1.0 * self.image_size / im.shape[0]
        w_ratio = 1.0 * self.image_size / im.shape[1]
        # im = cv2.resize(im, [self.image_size, self.image_size])

        # Per-cell label layout: [objectness, x, y, w, h, 20 one-hot class entries]
        label = np.zeros((self.cell_size, self.cell_size, 25))
        filename = os.path.join(self.data_path, 'Annotations', index + '.xml')
        tree = ET.parse(filename)
        objs = tree.findall('object')

        for obj in objs:
            bbox = obj.find('bndbox')
            # Make pixel indexes 0-based
            x1 = max(min((float(bbox.find('xmin').text) - 1) * w_ratio, self.image_size - 1), 0)
            y1 = max(min((float(bbox.find('ymin').text) - 1) * h_ratio, self.image_size - 1), 0)
            x2 = max(min((float(bbox.find('xmax').text) - 1) * w_ratio, self.image_size - 1), 0)
            y2 = max(min((float(bbox.find('ymax').text) - 1) * h_ratio, self.image_size - 1), 0)
            cls_ind = self.class_to_ind[obj.find('name').text.lower().strip()]
            # Center-format box (x_center, y_center, w, h) in resized-image pixels
            boxes = [(x2 + x1) / 2.0, (y2 + y1) / 2.0, x2 - x1, y2 - y1]
            # Grid cell that contains the box center
            x_ind = int(boxes[0] * self.cell_size / self.image_size)
            y_ind = int(boxes[1] * self.cell_size / self.image_size)
            # Keep only the first object assigned to a cell
            if label[y_ind, x_ind, 0] == 1:
                continue
            label[y_ind, x_ind, 0] = 1
            label[y_ind, x_ind, 1:5] = boxes
            label[y_ind, x_ind, 5 + cls_ind] = 1

        return label, len(objs)

3.2. timer.py code

timer.py computes and displays the total elapsed time and the estimated remaining time during training.
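Before the listing, here is a standalone sketch of the remaining-time estimate that its `remain` method computes: the elapsed time is extrapolated linearly over the iterations still to run. The function name `remaining` and the sample numbers are assumptions for illustration.

```python
import datetime


def remaining(elapsed_seconds, iters, max_iters):
    """Linear extrapolation of the time still needed, formatted as H:MM:SS."""
    if iters == 0:
        remain = 0
    else:
        # Average time per finished iteration times the iterations left.
        remain = elapsed_seconds * (max_iters - iters) / iters
    return str(datetime.timedelta(seconds=int(remain)))


# 1000 of 15000 iterations took 10 minutes, so 14000 more take about 140 minutes.
print(remaining(600, 1000, 15000))  # 2:20:00
```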

import time
import datetime


class Timer(object):
    '''
    A simple timer.
    '''

    def __init__(self):
        self.init_time = time.time()
        self.total_time = 0.
        self.calls = 0
        self.start_time = 0.
        self.diff = 0.
        self.average_time = 0.
        self.remain_time = 0.

    def tic(self):
        # using time.time instead of time.clock because time.clock
        # does not normalize for multithreading
        self.start_time = time.time()

    def toc(self, average=True):
        self.diff = time.time() - self.start_time
        self.total_time += self.diff
        self.calls += 1
        self.average_time = self.total_time / self.calls
        if average:
            return self.average_time
        else:
            return self.diff

    def remain(self, iters, max_iters):
        if iters == 0:
            self.remain_time = 0
        else:
            self.remain_time = (time.time() - self.init_time) * \
                (max_iters - iters) / iters
        return str(datetime.timedelta(seconds=int(self.remain_time)))

3.3. config.py code

config.py defines the network's initialization parameters and hyperparameters, such as the input size and the learning rate.
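To see how the solver parameters in the listing below interact, the sketch re-implements the staircase exponential decay formula, `lr * decay_rate ** (step // decay_steps)`, in plain Python. The function name `decayed_lr` is an assumption; the default values are copied from the config.py listing.

```python
def decayed_lr(step, base_lr=0.0001, decay_steps=30000, decay_rate=0.1, staircase=True):
    """Exponentially decayed learning rate at a given global step."""
    exponent = step // decay_steps if staircase else step / float(decay_steps)
    return base_lr * decay_rate ** exponent


print(decayed_lr(15000))  # 0.0001
print(decayed_lr(30000))
```

With `MAX_ITER = 15000` and `DECAY_STEPS = 30000`, the staircase schedule never reaches its first decay step, so under these settings the learning rate stays at 0.0001 for the whole run.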

import os

# path and dataset parameter
DATA_PATH = 'data'
PASCAL_PATH = os.path.join(DATA_PATH, 'pascal_voc')
CACHE_PATH = os.path.join(PASCAL_PATH, 'cache')
OUTPUT_DIR = os.path.join(PASCAL_PATH, 'output')
WEIGHTS_DIR = os.path.join(PASCAL_PATH, 'weights')
WEIGHTS_FILE = None
# WEIGHTS_FILE = os.path.join(DATA_PATH, 'weights', 'YOLO_small.ckpt')

CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus',
           'car', 'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse',
           'motorbike', 'person', 'pottedplant', 'sheep', 'sofa',
           'train', 'tvmonitor']
FLIPPED = True

# model parameter
IMAGE_SIZE = 448
CELL_SIZE = 7
BOXES_PER_CELL = 2
ALPHA = 0.1
DISP_CONSOLE = False
OBJECT_SCALE = 1.0
NOOBJECT_SCALE = 1.0
CLASS_SCALE = 2.0
COORD_SCALE = 5.0

# solver parameter
GPU = ''
LEARNING_RATE = 0.0001
DECAY_STEPS = 30000
DECAY_RATE = 0.1
STAIRCASE = True
BATCH_SIZE = 45
MAX_ITER = 15000
SUMMARY_ITER = 10
SAVE_ITER = 1000

# test parameter
THRESHOLD = 0.2
IOU_THRESHOLD = 0.5

3.4. yolo_net.py code

yolo_net.py defines the network architecture, including the structure of each layer, and the loss.
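One detail worth seeing in isolation is how the listing slices the flat network output with `boundary1` and `boundary2`: all class probabilities come first, then all box confidences, then all box coordinates. The sketch below reproduces only that index arithmetic on a plain list; the function name `split_flat_output` is an assumption.

```python
def split_flat_output(flat, S=7, B=2, C=20):
    """Split a flat prediction into class, confidence and box-coordinate parts."""
    boundary1 = S * S * C              # end of the class probabilities
    boundary2 = boundary1 + S * S * B  # end of the box confidences
    class_part = flat[:boundary1]
    conf_part = flat[boundary1:boundary2]
    box_part = flat[boundary2:]        # S * S * B * 4 coordinates
    return class_part, conf_part, box_part


parts = split_flat_output([0.0] * 1470)
print([len(p) for p in parts])  # [980, 98, 392]
```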

import numpy as np
import tensorflow as tf
import yolo.config as cfg

slim = tf.contrib.slim


class YOLONet(object):

    def __init__(self, is_training=True):
        self.classes = cfg.CLASSES
        self.num_class = len(self.classes)
        self.image_size = cfg.IMAGE_SIZE
        self.cell_size = cfg.CELL_SIZE
        self.boxes_per_cell = cfg.BOXES_PER_CELL
        self.output_size = (self.cell_size * self.cell_size) *\
            (self.num_class + self.boxes_per_cell * 5)
        self.scale = 1.0 * self.image_size / self.cell_size
        self.boundary1 = self.cell_size * self.cell_size * self.num_class
        self.boundary2 = self.boundary1 +\
            self.cell_size * self.cell_size * self.boxes_per_cell

        self.object_scale = cfg.OBJECT_SCALE
        self.noobject_scale = cfg.NOOBJECT_SCALE
        self.class_scale = cfg.CLASS_SCALE
        self.coord_scale = cfg.COORD_SCALE

        self.learning_rate = cfg.LEARNING_RATE
        self.batch_size = cfg.BATCH_SIZE
        self.alpha = cfg.ALPHA

        self.offset = np.transpose(np.reshape(np.array(
            [np.arange(self.cell_size)] * self.cell_size * self.boxes_per_cell),
            (self.boxes_per_cell, self.cell_size, self.cell_size)), (1, 2, 0))

        self.images = tf.placeholder(
            tf.float32, [None, self.image_size, self.image_size, 3],
            name='images')
        self.logits = self.build_network(
            self.images, num_outputs=self.output_size, alpha=self.alpha,
            is_training=is_training)

        if is_training:
            self.labels = tf.placeholder(
                tf.float32,
                [None, self.cell_size, self.cell_size, 5 + self.num_class])
            self.loss_layer(self.logits, self.labels)
            self.total_loss = tf.losses.get_total_loss()
            tf.summary.scalar('total_loss', self.total_loss)

    def build_network(self,
                      images,
                      num_outputs,
                      alpha,
                      keep_prob=0.5,
                      is_training=True,
                      scope='yolo'):
        with tf.variable_scope(scope):
            with slim.arg_scope(
                [slim.conv2d, slim.fully_connected],
                activation_fn=leaky_relu(alpha),
                weights_regularizer=slim.l2_regularizer(0.0005),
                weights_initializer=tf.truncated_normal_initializer(0.0, 0.01)
            ):
                net = tf.pad(
                    images, np.array([[0, 0], [3, 3], [3, 3], [0, 0]]),
                    name='pad_1')
                net = slim.conv2d(
                    net, 64, 7, 2, padding='VALID', scope='conv_2')
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_3')
                net = slim.conv2d(net, 192, 3, scope='conv_4')
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_5')
                net = slim.conv2d(net, 128, 1, scope='conv_6')
                net = slim.conv2d(net, 256, 3, scope='conv_7')
                net = slim.conv2d(net, 256, 1, scope='conv_8')
                net = slim.conv2d(net, 512, 3, scope='conv_9')
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_10')
                net = slim.conv2d(net, 256, 1, scope='conv_11')
                net = slim.conv2d(net, 512, 3, scope='conv_12')
                net = slim.conv2d(net, 256, 1, scope='conv_13')
                net = slim.conv2d(net, 512, 3, scope='conv_14')
                net = slim.conv2d(net, 256, 1, scope='conv_15')
                net = slim.conv2d(net, 512, 3, scope='conv_16')
                net = slim.conv2d(net, 256, 1, scope='conv_17')
                net = slim.conv2d(net, 512, 3, scope='conv_18')
                net = slim.conv2d(net, 512, 1, scope='conv_19')
                net = slim.conv2d(net, 1024, 3, scope='conv_20')
                net = slim.max_pool2d(net, 2, padding='SAME', scope='pool_21')
                net = slim.conv2d(net, 512, 1, scope='conv_22')
                net = slim.conv2d(net, 1024, 3, scope='conv_23')
                net = slim.conv2d(net, 512, 1, scope='conv_24')
                net = slim.conv2d(net, 1024, 3, scope='conv_25')
                net = slim.conv2d(net, 1024, 3, scope='conv_26')
                net = tf.pad(
                    net, np.array([[0, 0], [1, 1], [1, 1], [0, 0]]),
                    name='pad_27')
                net = slim.conv2d(
                    net, 1024, 3, 2, padding='VALID', scope='conv_28')
                net = slim.conv2d(net, 1024, 3, scope='conv_29')
                net = slim.conv2d(net, 1024, 3, scope='conv_30')
                net = tf.transpose(net, [0, 3, 1, 2], name='trans_31')
                net = slim.flatten(net, scope='flat_32')
                net = slim.fully_connected(net, 512, scope='fc_33')
                net = slim.fully_connected(net, 4096, scope='fc_34')
                net = slim.dropout(
                    net, keep_prob=keep_prob, is_training=is_training,
                    scope='dropout_35')
                net = slim.fully_connected(
                    net, num_outputs, activation_fn=None, scope='fc_36')
        return net

    def calc_iou(self, boxes1, boxes2, scope='iou'):
        """calculate ious
        Args:
          boxes1: 5-D tensor [BATCH_SIZE, CELL_SIZE, CELL_SIZE, BOXES_PER_CELL, 4]  ====> (x_center, y_center, w, h)
          boxes2: 5-D tensor [BATCH_SIZE, CELL_SIZE, CELL_SIZE, BOXES_PER_CELL, 4] ===> (x_center, y_center, w, h)
        Return:
          iou: 4-D tensor [BATCH_SIZE, CELL_SIZE, CELL_SIZE, BOXES_PER_CELL]
        """
        with tf.variable_scope(scope):
            # transform (x_center, y_center, w, h) to (x1, y1, x2, y2)
            boxes1_t = tf.stack([boxes1[..., 0] - boxes1[..., 2] / 2.0,
                                 boxes1[..., 1] - boxes1[..., 3] / 2.0,
                                 boxes1[..., 0] + boxes1[..., 2] / 2.0,
                                 boxes1[..., 1] + boxes1[..., 3] / 2.0],
                                axis=-1)

            boxes2_t = tf.stack([boxes2[..., 0] - boxes2[..., 2] / 2.0,
                                 boxes2[..., 1] - boxes2[..., 3] / 2.0,
                                 boxes2[..., 0] + boxes2[..., 2] / 2.0,
                                 boxes2[..., 1] + boxes2[..., 3] / 2.0],
                                axis=-1)

            # calculate the left up point & right down point
            lu = tf.maximum(boxes1_t[..., :2], boxes2_t[..., :2])
            rd = tf.minimum(boxes1_t[..., 2:], boxes2_t[..., 2:])

            # intersection
            intersection = tf.maximum(0.0, rd - lu)
            inter_square = intersection[..., 0] * intersection[..., 1]

            # calculate the boxs1 square and boxs2 square
            square1 = boxes1[..., 2] * boxes1[..., 3]
            square2 = boxes2[..., 2] * boxes2[..., 3]

            union_square = tf.maximum(square1 + square2 - inter_square, 1e-10)

        return tf.clip_by_value(inter_square / union_square, 0.0, 1.0)

    def loss_layer(self, predicts, labels, scope='loss_layer'):
        with tf.variable_scope(scope):
            # Split the flat prediction into class probabilities,
            # box confidences and box coordinates
            predict_classes = tf.reshape(
                predicts[:, :self.boundary1],
                [self.batch_size, self.cell_size, self.cell_size, self.num_class])
            predict_scales = tf.reshape(
                predicts[:, self.boundary1:self.boundary2],
                [self.batch_size, self.cell_size, self.cell_size, self.boxes_per_cell])
            predict_boxes = tf.reshape(
                predicts[:, self.boundary2:],
                [self.batch_size, self.cell_size, self.cell_size, self.boxes_per_cell, 4])

            # Ground truth: objectness, boxes (normalized by image size), classes
            response = tf.reshape(
                labels[..., 0],
                [self.batch_size, self.cell_size, self.cell_size, 1])
            boxes = tf.reshape(
                labels[..., 1:5],
                [self.batch_size, self.cell_size, self.cell_size, 1, 4])
            boxes = tf.tile(
                boxes, [1, 1, 1, self.boxes_per_cell, 1]) / self.image_size
            classes = labels[..., 5:]

            offset = tf.reshape(
                tf.constant(self.offset, dtype=tf.float32),
                [1, self.cell_size, self.cell_size, self.boxes_per_cell])
            offset = tf.tile(offset, [self.batch_size, 1, 1, 1])
            offset_tran = tf.transpose(offset, (0, 2, 1, 3))
            # Convert predicted cell offsets and sqrt sizes to image-relative boxes
            predict_boxes_tran = tf.stack(
                [(predict_boxes[..., 0] + offset) / self.cell_size,
                 (predict_boxes[..., 1] + offset_tran) / self.cell_size,
                 tf.square(predict_boxes[..., 2]),
                 tf.square(predict_boxes[..., 3])], axis=-1)

            iou_predict_truth = self.calc_iou(predict_boxes_tran, boxes)

            # calculate I tensor [BATCH_SIZE, CELL_SIZE, CELL_SIZE, BOXES_PER_CELL]
            object_mask = tf.reduce_max(iou_predict_truth, 3, keep_dims=True)
            object_mask = tf.cast(
                (iou_predict_truth >= object_mask), tf.float32) * response

            # calculate no_I tensor [CELL_SIZE, CELL_SIZE, BOXES_PER_CELL]
            noobject_mask = tf.ones_like(
                object_mask, dtype=tf.float32) - object_mask

            # Convert ground truth to the network's parameterization
            # (cell offsets and sqrt sizes)
            boxes_tran = tf.stack(
                [boxes[..., 0] * self.cell_size - offset,
                 boxes[..., 1] * self.cell_size - offset_tran,
                 tf.sqrt(boxes[..., 2]),
                 tf.sqrt(boxes[..., 3])], axis=-1)

            # class_loss
            class_delta = response * (predict_classes - classes)
            class_loss = tf.reduce_mean(
                tf.reduce_sum(tf.square(class_delta), axis=[1, 2, 3]),
                name='class_loss') * self.class_scale

            # object_loss
            object_delta = object_mask * (predict_scales - iou_predict_truth)
            object_loss = tf.reduce_mean(
                tf.reduce_sum(tf.square(object_delta), axis=[1, 2, 3]),
                name='object_loss') * self.object_scale

            # noobject_loss
            noobject_delta = noobject_mask * predict_scales
            noobject_loss = tf.reduce_mean(
                tf.reduce_sum(tf.square(noobject_delta), axis=[1, 2, 3]),
                name='noobject_loss') * self.noobject_scale

            # coord_loss
            coord_mask = tf.expand_dims(object_mask, 4)
            boxes_delta = coord_mask * (predict_boxes - boxes_tran)
            coord_loss = tf.reduce_mean(
                tf.reduce_sum(tf.square(boxes_delta), axis=[1, 2, 3, 4]),
                name='coord_loss') * self.coord_scale

            tf.losses.add_loss(class_loss)
            tf.losses.add_loss(object_loss)
            tf.losses.add_loss(noobject_loss)
            tf.losses.add_loss(coord_loss)

            tf.summary.scalar('class_loss', class_loss)
            tf.summary.scalar('object_loss', object_loss)
            tf.summary.scalar('noobject_loss', noobject_loss)
            tf.summary.scalar('coord_loss', coord_loss)

            tf.summary.histogram('boxes_delta_x', boxes_delta[..., 0])
            tf.summary.histogram('boxes_delta_y', boxes_delta[..., 1])
            tf.summary.histogram('boxes_delta_w', boxes_delta[..., 2])
            tf.summary.histogram('boxes_delta_h', boxes_delta[..., 3])
            tf.summary.histogram('iou', iou_predict_truth)

def leaky_relu(alpha):
    def op(inputs):
        return tf.nn.leaky_relu(inputs, alpha=alpha, name='leaky_relu')
    return op

3.5. train.py code

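train.py defines the training procedure: where the training data is read from and where outputs are stored, the training parameters, the progress logging, and the saving of trained models.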

import os
import argparse
import datetime
import tensorflow as tf
import yolo.config as cfg
from yolo.yolo_net import YOLONet
from utils.timer import Timer
from utils.pascal_voc import pascal_voc

slim = tf.contrib.slim


class Solver(object):

    def __init__(self, net, data):
        self.net = net
        self.data = data
        self.weights_file = cfg.WEIGHTS_FILE
        self.max_iter = cfg.MAX_ITER
        self.initial_learning_rate = cfg.LEARNING_RATE
        self.decay_steps = cfg.DECAY_STEPS
        self.decay_rate = cfg.DECAY_RATE
        self.staircase = cfg.STAIRCASE
        self.summary_iter = cfg.SUMMARY_ITER
        self.save_iter = cfg.SAVE_ITER
        self.output_dir = os.path.join(
            cfg.OUTPUT_DIR, datetime.datetime.now().strftime('%Y_%m_%d_%H_%M'))
        if not os.path.exists(self.output_dir):
            os.makedirs(self.output_dir)
        self.save_cfg()

        self.variable_to_restore = tf.global_variables()
        self.saver = tf.train.Saver(self.variable_to_restore, max_to_keep=None)
        self.ckpt_file = os.path.join(self.output_dir, 'yolo')
        self.summary_op = tf.summary.merge_all()
        self.writer = tf.summary.FileWriter(self.output_dir, flush_secs=60)

        self.global_step = tf.train.create_global_step()
        self.learning_rate = tf.train.exponential_decay(
            self.initial_learning_rate, self.global_step, self.decay_steps,
            self.decay_rate, self.staircase, name='learning_rate')
        self.optimizer = tf.train.GradientDescentOptimizer(
            learning_rate=self.learning_rate)
        self.train_op = slim.learning.create_train_op(
            self.net.total_loss, self.optimizer, global_step=self.global_step)

        gpu_options = tf.GPUOptions()
        config = tf.ConfigProto(gpu_options=gpu_options)
        self.sess = tf.Session(config=config)
        self.sess.run(tf.global_variables_initializer())

        if self.weights_file is not None:
            print('Restoring weights from: ' + self.weights_file)
            self.saver.restore(self.sess, self.weights_file)

        self.writer.add_graph(self.sess.graph)

    def train(self):
        train_timer = Timer()
        load_timer = Timer()

        for step in range(1, self.max_iter + 1):

            load_timer.tic()
            images, labels = self.data.get()
            load_timer.toc()
            feed_dict = {self.net.images: images,
                         self.net.labels: labels}

            if step % self.summary_iter == 0:
                if step % (self.summary_iter * 10) == 0:

                    train_timer.tic()
                    summary_str, loss, _ = self.sess.run(
                        [self.summary_op, self.net.total_loss, self.train_op],
                        feed_dict=feed_dict)
                    train_timer.toc()

                    # Parentheses join the three literals into one template,
                    # so format() fills all eight placeholders.
                    log_str = ('{} Epoch: {}, Step: {}, Learning rate: {},'
                               ' Loss: {:5.3f}\nSpeed: {:.3f}s/iter,'
                               ' Load: {:.3f}s/iter, Remain: {}').format(
                        datetime.datetime.now().strftime('%m-%d %H:%M:%S'),
                        self.data.epoch,
                        int(step),
                        round(self.learning_rate.eval(session=self.sess), 6),
                        loss,
                        train_timer.average_time,
                        load_timer.average_time,
                        train_timer.remain(step, self.max_iter))
                    print(log_str)

                else:
                    train_timer.tic()
                    summary_str, _ = self.sess.run(
                        [self.summary_op, self.train_op],
                        feed_dict=feed_dict)
                    train_timer.toc()

                self.writer.add_summary(summary_str, step)

            else:
                train_timer.tic()
                self.sess.run(self.train_op, feed_dict=feed_dict)
                train_timer.toc()

            if step % self.save_iter == 0:
                print('{} Saving checkpoint file to: {}'.format(
                    datetime.datetime.now().strftime('%m-%d %H:%M:%S'),
                    self.output_dir))
                self.saver.save(
                    self.sess, self.ckpt_file, global_step=self.global_step)

    def save_cfg(self):

        with open(os.path.join(self.output_dir, 'config.txt'), 'w') as f:
            cfg_dict = cfg.__dict__
            for key in sorted(cfg_dict.keys()):
                if key[0].isupper():
                    cfg_str = '{}: {}\n'.format(key, cfg_dict[key])
                    f.write(cfg_str)

def update_config_paths(data_dir, weights_file):
    cfg.DATA_PATH = data_dir
    cfg.PASCAL_PATH = os.path.join(data_dir, 'pascal_voc')
    cfg.CACHE_PATH = os.path.join(cfg.PASCAL_PATH, 'cache')
    cfg.OUTPUT_DIR = os.path.join(cfg.PASCAL_PATH, 'output')
    cfg.WEIGHTS_DIR = os.path.join(cfg.PASCAL_PATH, 'weights')

    cfg.WEIGHTS_FILE = os.path.join(cfg.WEIGHTS_DIR, weights_file)

def main():
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', default="YOLO_small.ckpt", type=str)
    parser.add_argument('--data_dir', default="data", type=str)
    parser.add_argument('--threshold', default=0.2, type=float)
    parser.add_argument('--iou_threshold', default=0.5, type=float)
    parser.add_argument('--gpu', default='', type=str)
    args = parser.parse_args()

    if args.gpu is not None:
        cfg.GPU = args.gpu

    if args.data_dir != cfg.DATA_PATH:
        update_config_paths(args.data_dir, args.weights)

    os.environ['CUDA_VISIBLE_DEVICES'] = cfg.GPU

    yolo = YOLONet()
    pascal = pascal_voc('train')

    solver = Solver(yolo, pascal)

    print('Start training ...')
    solver.train()
    print('Done training.')


if __name__ == '__main__':

    # python train.py --weights YOLO_small.ckpt --gpu 0
    main()

3.6. test.py code

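test.py defines the testing procedure: where the test data is read from, how the trained model is loaded, and how the detection results are printed and displayed.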

import os
import cv2
import argparse
import numpy as np
import tensorflow as tf
import yolo.config as cfg
from yolo.yolo_net import YOLONet
from utils.timer import Timer


class Detector(object):

    def __init__(self, net, weight_file):
        self.net = net
        self.weights_file = weight_file

        self.classes = cfg.CLASSES
        self.num_class = len(self.classes)
        self.image_size = cfg.IMAGE_SIZE
        self.cell_size = cfg.CELL_SIZE
        self.boxes_per_cell = cfg.BOXES_PER_CELL
        self.threshold = cfg.THRESHOLD
        self.iou_threshold = cfg.IOU_THRESHOLD
        self.boundary1 = self.cell_size * self.cell_size * self.num_class
        self.boundary2 = self.boundary1 +\
            self.cell_size * self.cell_size * self.boxes_per_cell

        self.sess = tf.Session()
        self.sess.run(tf.global_variables_initializer())

        print('Restoring weights from: ' + self.weights_file)
        self.saver = tf.train.Saver()
        self.saver.restore(self.sess, self.weights_file)

    def draw_result(self, img, result):
        for i in range(len(result)):
            x = int(result[i][1])
            y = int(result[i][2])
            w = int(result[i][3] / 2)
            h = int(result[i][4] / 2)
            cv2.rectangle(img, (x - w, y - h), (x + w, y + h), (0, 255, 0), 2)
            cv2.rectangle(img, (x - w, y - h - 20),
                          (x + w, y - h), (125, 125, 125), -1)
            lineType = cv2.LINE_AA if cv2.__version__ > '3' else cv2.CV_AA
            cv2.putText(
                img, result[i][0] + ' : %.2f' % result[i][5],
                (x - w + 5, y - h - 7), cv2.FONT_HERSHEY_SIMPLEX, 0.5,
                (0, 0, 0), 1, lineType)

    def detect(self, img):
        img_h, img_w, _ = img.shape
        inputs = cv2.resize(img, (self.image_size, self.image_size))
        inputs = cv2.cvtColor(inputs, cv2.COLOR_BGR2RGB).astype(np.float32)
        inputs = (inputs / 255.0) * 2.0 - 1.0
        inputs = np.reshape(inputs, (1, self.image_size, self.image_size, 3))

        result = self.detect_from_cvmat(inputs)[0]

        for i in range(len(result)):
            result[i][1] *= (1.0 * img_w / self.image_size)
            result[i][2] *= (1.0 * img_h / self.image_size)
            result[i][3] *= (1.0 * img_w / self.image_size)
            result[i][4] *= (1.0 * img_h / self.image_size)

        return result

    def detect_from_cvmat(self, inputs):
        net_output = self.sess.run(self.net.logits,
                                   feed_dict={self.net.images: inputs})
        results = []
        for i in range(net_output.shape[0]):
            results.append(self.interpret_output(net_output[i]))

        return results

    def interpret_output(self, output):
        probs = np.zeros((self.cell_size, self.cell_size,
                          self.boxes_per_cell, self.num_class))
        class_probs = np.reshape(
            output[0:self.boundary1],
            (self.cell_size, self.cell_size, self.num_class))
        scales = np.reshape(
            output[self.boundary1:self.boundary2],
            (self.cell_size, self.cell_size, self.boxes_per_cell))
        boxes = np.reshape(
            output[self.boundary2:],
            (self.cell_size, self.cell_size, self.boxes_per_cell, 4))
        offset = np.array(
            [np.arange(self.cell_size)] * self.cell_size * self.boxes_per_cell)
        offset = np.transpose(
            np.reshape(
                offset,
                [self.boxes_per_cell, self.cell_size, self.cell_size]),
            (1, 2, 0))

        # Convert cell offsets and sqrt sizes to boxes in input-image pixels
        boxes[:, :, :, 0] += offset
        boxes[:, :, :, 1] += np.transpose(offset, (1, 0, 2))
        boxes[:, :, :, :2] = 1.0 * boxes[:, :, :, 0:2] / self.cell_size
        boxes[:, :, :, 2:] = np.square(boxes[:, :, :, 2:])

        boxes *= self.image_size

        # Class-specific score = class probability * box confidence
        for i in range(self.boxes_per_cell):
            for j in range(self.num_class):
                probs[:, :, i, j] = np.multiply(
                    class_probs[:, :, j], scales[:, :, i])

        # Keep scores above the threshold, sorted in descending order
        filter_mat_probs = np.array(probs >= self.threshold, dtype='bool')
        filter_mat_boxes = np.nonzero(filter_mat_probs)
        boxes_filtered = boxes[filter_mat_boxes[0],
                               filter_mat_boxes[1], filter_mat_boxes[2]]
        probs_filtered = probs[filter_mat_probs]
        classes_num_filtered = np.argmax(
            filter_mat_probs, axis=3)[
            filter_mat_boxes[0], filter_mat_boxes[1], filter_mat_boxes[2]]

        argsort = np.array(np.argsort(probs_filtered))[::-1]
        boxes_filtered = boxes_filtered[argsort]
        probs_filtered = probs_filtered[argsort]
        classes_num_filtered = classes_num_filtered[argsort]

        # Non-maximal suppression: zero out lower-scored boxes
        # that overlap a kept box
        for i in range(len(boxes_filtered)):
            if probs_filtered[i] == 0:
                continue
            for j in range(i + 1, len(boxes_filtered)):
                if self.iou(boxes_filtered[i], boxes_filtered[j]) > self.iou_threshold:
                    probs_filtered[j] = 0.0

        filter_iou = np.array(probs_filtered > 0.0, dtype='bool')
        boxes_filtered = boxes_filtered[filter_iou]
        probs_filtered = probs_filtered[filter_iou]
        classes_num_filtered = classes_num_filtered[filter_iou]

        result = []
        for i in range(len(boxes_filtered)):
            result.append(
                [self.classes[classes_num_filtered[i]],
                 boxes_filtered[i][0],
                 boxes_filtered[i][1],
                 boxes_filtered[i][2],
                 boxes_filtered[i][3],
                 probs_filtered[i]])

        return result

    def iou(self, box1, box2):
        tb = min(box1[0] + 0.5 * box1[2], box2[0] + 0.5 * box2[2]) - \
            max(box1[0] - 0.5 * box1[2], box2[0] - 0.5 * box2[2])
        lr = min(box1[1] + 0.5 * box1[3], box2[1] + 0.5 * box2[3]) - \
            max(box1[1] - 0.5 * box1[3], box2[1] - 0.5 * box2[3])
        inter = 0 if tb < 0 or lr < 0 else tb * lr
        return inter / (box1[2] * box1[3] + box2[2] * box2[3] - inter)

    def camera_detector(self, cap, wait=10):
        detect_timer = Timer()
        # Read one frame per iteration and stop as soon as a read fails,
        # so that detect() never receives an empty frame.
        ret, frame = cap.read()

        while ret:
            detect_timer.tic()
            result = self.detect(frame)
            detect_timer.toc()
            print('Average detecting time: {:.3f}s'.format(
                detect_timer.average_time))

            self.draw_result(frame, result)
            cv2.imshow('Camera', frame)
            cv2.waitKey(wait)

            ret, frame = cap.read()

    def image_detector(self, imname, wait=0):
        detect_timer = Timer()
        image = cv2.imread(imname)

        detect_timer.tic()
        result = self.detect(image)
        detect_timer.toc()
        print('Average detecting time: {:.3f}s'.format(
            detect_timer.average_time))

        self.draw_result(image, result)
        cv2.imshow('Image', image)
        cv2.waitKey(wait)

def main():
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', default="YOLO_small.ckpt", type=str)
    parser.add_argument('--weight_dir', default='weights', type=str)
    parser.add_argument('--data_dir', default="data", type=str)
    parser.add_argument('--gpu', default='', type=str)
    args = parser.parse_args()

    os.environ['CUDA_VISIBLE_DEVICES'] = args.gpu

    yolo = YOLONet(False)
    weight_file = os.path.join(args.data_dir, args.weight_dir, args.weights)
    detector = Detector(yolo, weight_file)

    # detect from camera
    # cap = cv2.VideoCapture(-1)
    # detector.camera_detector(cap)

    # detect from image file
    imname = 'test/person.jpg'
    detector.image_detector(imname)


if __name__ == '__main__':
    main()

4. References

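- https://github.com/hizhangp/yolo_tensorflow

- Deep Learning: Convolutional Neural Networks from Beginner to Expert (深度学习——卷积神经网络从入门到精通)

- Deep Learning: Principles and Practice (深度学习原理与实践)

- Deep Learning-Based Computer Vision: Principles and Practice (基于深度学习的计算机视觉:原理与实践)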