pytorch入门笔记

pytorch的使用【入门级】

提示:文章写完后,目录可以自动生成,如何生成可参考右边的帮助文

文章目录

-

- pytorch的使用【入门级】

- 一、数据集

-

- 1.tensorboard(数据可视化)

- 2.tensorforms(数据处理)

- 3.torchvision(数据集下载)

- 4.dataloade

- 二、pytorch模型介绍

-

- 1.卷积

-

- 1.1卷积在数字上的应用

- 1.2卷积在图片上的应用

- 2.池化

-

- 2.1池化在数字上的应用

- 2.2池化在图片上的应用

- 3.非线性激活

-

- 3.1非线性激活在数字上的应用

- 3.2非线性激活在图片上的应用

- 4.全连接层

-

- 4.1全连接层在数字上的应用

- 4.2全连接层在图片上的应用

- 5.损失函数(附带反向传播)

-

- 5.1损失函数在数字上的应用

- 5.2损失函数在图片上的应用

- 6.梯度优化(优化器)

- 7.模型的制作以及对先有模型的修改、调用、保存

-

- 7.1模型的制作(附带模型结构可视化)

- 7.2现有的模型的保存与调用

- 7.3现有的模型修改

- 三、pytorch完整模型

-

- 1.利用GPU训练模型

- 2.验证上面训练好的模型

一、数据集

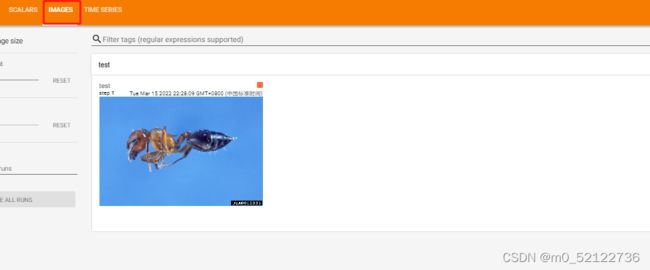

1.tensorboard(数据可视化)

tensorboard主要将数据可视化,包括图片、公式、模型结构可视化

代码:

from torch.utils.tensorboard import SummaryWriter, writer

import numpy as np

from PIL import Image

#创建一个logs的文件夹

writer = SummaryWriter("logs")

#创建需要导入的图片(文件)路径

image_path = r'D:\pytorch\tensor\dataset\train\ants_image\0013035.jpg'

img_PIL = Image.open( image_path)

#将图片从PIL格式转化成tensor格式

img_array = np.array(img_PIL)

print(type(img_array))

print(img_array.shape)

#将图片在tensorboard中以test的名字可视化处来

writer.add_image("test",img_array,1,dataformats='HWC')

#也可以在tensorboard中绘制数学函数

for i in range(100):

writer.add_scalar("y=2x",3*i,i)

#结束

writer.close()

在后台输入:tensorboard --logdir=‘D:\pytorch\tensor\logs’

(后面用到tensorboard来可视化数据,都需要在后台输入tensorboard --logdir='D:\pytorch\tensor\logs’来查看,注意logdir的路径随着命名的变化而变化,在后面介绍就不一一列举)

效果:

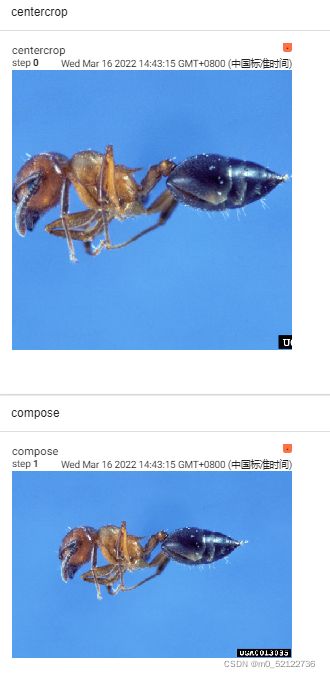

2.tensorforms(数据处理)

tensorforms主要改变数据的格式,大小等

以图片为例:图片格式以及对应打开方式有三种

1,PIL-----Image.open()

2,tensor------ToTensor()

3,narrrays------cv.imread()

代码:

from torchvision import transforms

from PIL import Image

from torch.utils.tensorboard import SummaryWriter, writer

#将图片PIL转成tensor类型

writer = SummaryWriter("logs")

img_path = r'D:\pytorch\tensor\dataset\train\ants_image\0013035.jpg'

img = Image.open(img_path)

tensor_trans = transforms.ToTensor()

tensor_img = tensor_trans(img)

#绘制图片

writer.add_image("tensor_img",tensor_img)

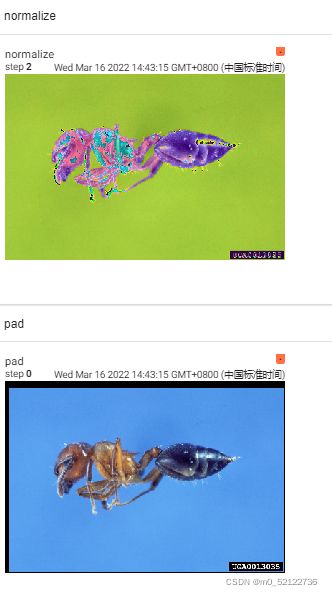

#将图片tensor类型进行归一化处理[normalize]

#add_image(2)是生成第二张图片

trans_norm = transforms.Normalize([0.6,0.8,0.7],[0.7,0.8,1])

img_norm = trans_norm(tensor_img)

#绘制图片

writer.add_image('normalize',img_norm,2)

#改变图片的分辨率[resize]

#resize需要用到图片的PIL格式,输入图像用变量IMG即可

print(img.size)

trans_resize = transforms.Resize((512,512))

img_size = trans_resize(img)

print((img_size).size)

#将img_size从pil变成totensor格式,方便writer绘图

img_size = tensor_trans(img_size)

#绘制图片

writer.add_image('resize',img_size,0)

#利用组合compose()完成等比缩放

#compose(1,2),即在完成方法1后,在完成方法2,将两种方法合并在一起(注意,1,是列表形式,2,具有顺序)

trans_resize_2 = transforms.Resize(512)

#在这里,在pil格式下完成大小改变-----再将图片变成tensor格式

trans_compose = transforms.Compose([trans_resize_2,tensor_trans])

img_size_2 = trans_compose(img)

print(trans_resize_2)

writer.add_image('compose',img_size_2,1)

#中心裁剪CenterCrop

trans_resize_3 = transforms.CenterCrop((512,512))

trans_compose_3 = transforms.Compose([trans_resize_3,tensor_trans])

img_size_3 = trans_compose_3(img)

writer.add_image("centercrop",img_size_3)

#填充pad

trans_resize_4 = transforms.Pad([10,20,3,4])

trans_compose_4 = transforms.Compose([trans_resize_4,tensor_trans])

img_size_4 = trans_compose_4(img)

writer.add_image("pad",img_size_4,0)

#随机裁剪RandomCrop

trans_resize_5 = transforms.RandomCrop([512,512])

trans_compose_5 = transforms.Compose([trans_resize_5,tensor_trans])

for i in range(10):

img_size_5 = trans_compose_5(img)

writer.add_image("randomcrop",img_size_5,i)

writer.close()

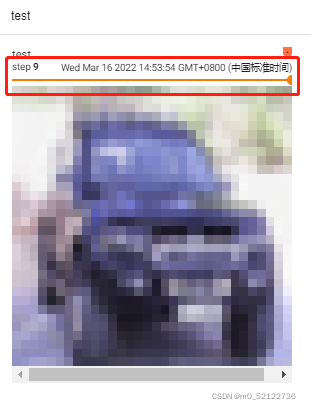

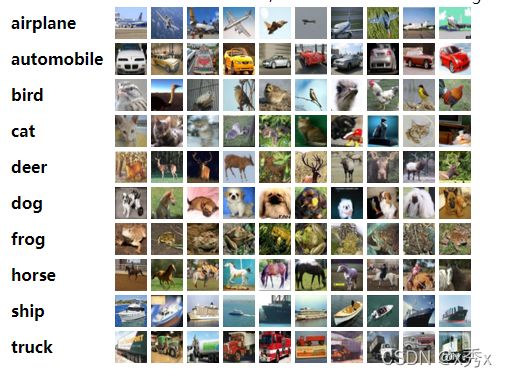

3.torchvision(数据集下载)

torchvision有很多功能,现在主要介绍torchvision.datasets

代码:

import torchvision

from torch.utils.tensorboard import SummaryWriter

#将数据集的数据转化成tensor格式

dataset_transform = torchvision.transforms.Compose([

torchvision.transforms.ToTensor()

])

#root是路径,(./)是在当前文件下创建文件夹,(../)是在上级文件下创建文件夹

#train=True,是下载训练集。train=false,是下载测试集

#download=true,则从 Internet 下载数据集并将其放在根目录中。 如果已下载数据集,则不会再次下载。。

train_set = torchvision.datasets.CIFAR10(root="./cifardt",train=True,transform=dataset_transform,download=True)

test_set = torchvision.datasets.CIFAR10(root="./cifardt",train=False,transform=dataset_transform,download=True)

print(test_set[0])

#创建文件夹将图片可视化

writer = SummaryWriter('p10')

#读取测试集前十个图片

for i in range(10):

img,target = test_set[i]

writer.add_image('test',img,i)

writer.close()

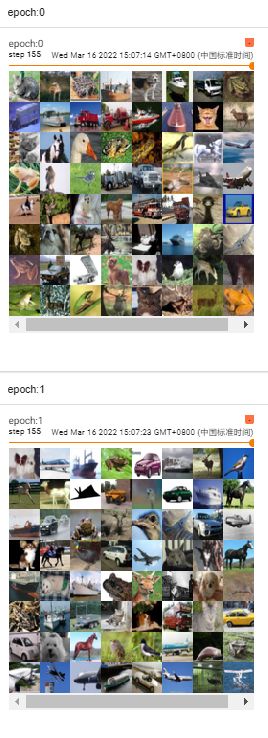

4.dataloade

代码:

import torchvision

from torch.utils.tensorboard import SummaryWriter

from torch.utils.data import DataLoader

#将数据集的数据转化成tensor格式

train_set = torchvision.datasets.CIFAR10(root="./cifardt",train=True,transform=torchvision.transforms.ToTensor(),download=True)

test_set = torchvision.datasets.CIFAR10(root="./cifardt",train=False,transform=torchvision.transforms.ToTensor(),download=True)

#batch_size=4每步读取4张图片

#shuffle意味着第二次读取数据时候是否重新打乱,true=打乱

#num_workers=0,单线程

#drop_last=True:总的图片数量除以batch-size的余数是否舍弃,true=舍弃

test_loader = DataLoader(dataset=test_set,batch_size=64,shuffle=True,num_workers=0,drop_last=True)

#创建文件夹将图片可视化

writer = SummaryWriter('p11')

#读取两轮数据集

for epoch in range(2):

#读取数据集

step=0

for data in test_loader:

imgs,targets = data

#每一轮重新给文件夹命名

#特别注意,这里是add_images,不是add_image。多加一个s

writer.add_images('epoch:{}'.format(epoch),imgs,step)

step+=1

writer.close()

二、pytorch模型介绍

1.卷积

卷积的作用是提取特征

1.1卷积在数字上的应用

代码如下(示例):

import torch

import torch.nn.functional as F

input = torch.tensor([[1,2,0,3,1],

[0,1,2,3,1],

[1,2,1,0,0],

[5,2,3,1,1],

[2,1,0,1,1]])

kernel = torch.tensor([[1,2,1],

[0,1,0],

[2,1,0]])

print(input.shape)

print(kernel.shape)

#由于conv需要四维参数,需要reshape一下

#将二维参数(宽,高)--四维参数(batch_size,通道数,宽,高)

#batch_size是每次送入神经网络的图片数量

input = torch.reshape(input,(1,1,5,5))

kernel = torch.reshape(kernel,(1,1,3,3))

print(input.shape)

print(kernel.shape)

#调用conv2d,全称是torch.nn.functional.conv2d

#这里的权重weight,就等于卷积核

output = F.conv2d(input,kernel,stride=1)

#输出的宽和高计算公式:输出=输入-卷积核/strido+1

print(output.shape)

#练习stride的作用

output_2 = F.conv2d(input,kernel,stride=2)

print(output_2.shape)

#练习padding的作用

output_3 = F.conv2d(input,kernel,stride=1,padding=1)

print(output_3.shape)

#padding=1,对输入的每条(宽+高)都多填充维度为一的0

#例如本例中,input=【5*5】--【7*7】,最后输出维度为7-3/1-1=5 output=【5*5】.使得input=output

数据变化如下:

torch.Size([5, 5])

torch.Size([3, 3])

torch.Size([1, 1, 5, 5])

torch.Size([1, 1, 3, 3])

torch.Size([1, 1, 3, 3])

torch.Size([1, 1, 2, 2])

torch.Size([1, 1, 5, 5])

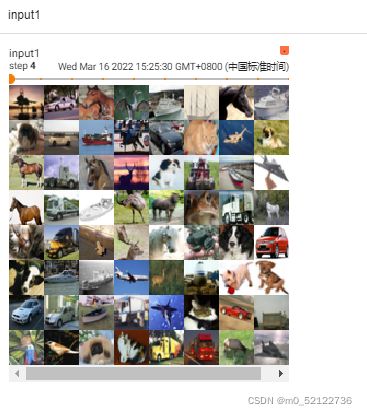

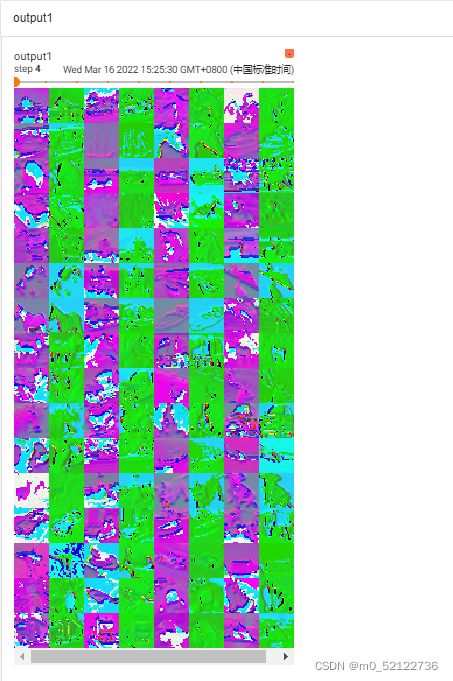

1.2卷积在图片上的应用

代码如下(示例):

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="./cifardt",train=False,transform=torchvision.transforms.ToTensor(),download=True)

dataloader = DataLoader(dataset=dataset,batch_size=64)

class NN(nn.Module):

def __init__(self):

# super(NN,self).__init__()

super(NN,self).__init__()

self.conv2 = Conv2d(in_channels=3,out_channels=6,kernel_size=3,stride=1)

def forward(self,x):

x = self.conv2(x)

return x

writer = SummaryWriter('p12')

model = NN()

i=0

for data in dataloader:

imgs,targets = data

#由于forward是个魔法方法__call__,前向传播

#在这里等同于:output = nn.forward(imgs)

output = model(imgs)

i+=1

#注意这里是add_images,后面有个s

#torch.size([64,3,32,32])

writer.add_images('input1',imgs,i)

# torch.size([64,3,32,32]) ->[xxx,3,30,30]

#将6通道变成3通道,bize_size会增加,但具体不知道增加多少,所以前面设置为-1,来自动计算batchsize的大小 ->(-1,3,30,30)

output = torch.reshape(output,(-1,3,30,30))

writer.add_images('output1', output, i)

writer.close()

2.池化

池化的作用;对数据进行收集(多变少)并总结(最大值或者平均值)

2.1池化在数字上的应用

代码如下(示例):

import torch

import torch.nn.functional as F

#这里需要将矩阵转化成浮点数

input = torch.tensor([[1,2,0,3,1],

[0,1,2,3,1],

[1,2,1,0,0],

[5,2,3,1,1],

[2,1,0,1,1]],dtype=torch.float32)

input = torch.reshape(input,(1,1,5,5))

print(input.shape)

#这里介绍一下torch.nn与torch.nn.functional 的区别

#如果用torch.nn。则需要重新建class,如下图所示

#建好组后,在调用

# class NN(nn.Module):

# def __init__(self):

# super(NN, self).__init__()

# self.maxpool = MaxPool2d(3,ceil_mode=True)

# def forward(self,x):

# output = self.maxpool(x)

# return output

# model = NN()

# output = model(input)

#torch.nn.functional就相当于以经创建好组了,直接用就好了。

#kernel_size=3----窗口移动的步长

#ceil_mode =True-----计算输出信号大小的时候,会使用向上取整.。反之,向下取整。

output = F.max_pool2d(input,kernel_size=3,ceil_mode =True)

print(output)

数据变化如下:

torch.Size([1, 1, 5, 5])

tensor([[[[2., 3.],

[5., 1.]]]])

2.2池化在图片上的应用

代码如下(示例):

import torch

import torchvision

from torch.nn import MaxPool2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

from torch import nn

dataset= torchvision.datasets.CIFAR10(r'D:\pytorch\tensor\cifardt',train=False,transform=torchvision.transforms.ToTensor(),download=True)

dataloader = DataLoader(dataset,batch_size=64)

class NN(nn.Module):

def __init__(self):

super(NN, self).__init__()

self.maxpool = MaxPool2d(3,ceil_mode=True)

def forward(self,x):

output = self.maxpool(x)

return output

model = NN()

i=0

writer = SummaryWriter('p13')

for data in dataloader:

imgs,targets = data

output = model(imgs)

i+=1

writer.add_images('input',imgs, i)

writer.add_images('output',output,i)

writer.close()

3.非线性激活

非线性激活的作用;对特征进行非线性变换,赋予神经网络深度的意义,提高泛化能力。

3.1非线性激活在数字上的应用

代码如下(示例):

import torch

from torch import nn

from torch.nn import ReLU

input = torch.tensor([[1,-2],

[-2,2]])

print(input.shape)

input = torch.reshape(input,(1,1,2,2))

class NN(nn.Module):

def __init__(self):

super(NN, self).__init__()

#relu,if x<0,print:y=0。if x>=0,print:y=x

self.relu = ReLU()

def forward(self,x):

output = self.relu(x)

return output

model = NN()

x = model(input)

print(x)

print(x.shape)

数据变化如下:

torch.Size([2, 2])

tensor([[[[1, 0],

[0, 2]]]])

torch.Size([1, 1, 2, 2])

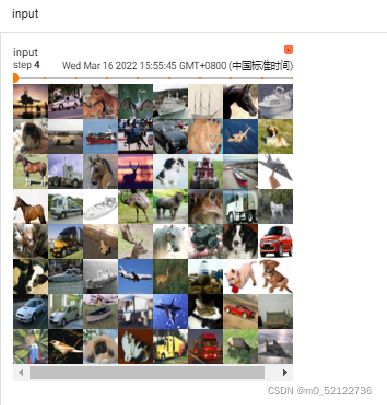

3.2非线性激活在图片上的应用

代码如下(示例):

import torchvision

from torch import nn

from torch.nn import ReLU, Sigmoid

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10('./cifardt',train=False,transform=torchvision.transforms.ToTensor(),download=True)

dataloader = DataLoader(dataset,batch_size=64)

class NN(nn.Module):

def __init__(self):

super(NN, self).__init__()

self.relu = ReLU()

self.sigmoid = Sigmoid()

def forward(self,x):

output=self.sigmoid(x)

return output

model = NN()

writer =SummaryWriter('p14')

i=0

for data in dataloader:

imgs,targets=data

output = model(imgs)

#正则化

m = nn.BatchNorm2d(3,affine=False)

output1=m(output)

i+=1

#原图

writer.add_images('input',imgs,i)

#非线性激活后的图

writer.add_images('output',output,i)

#正则化后的图

writer.add_images('output1', output1, i)

writer.close()

4.全连接层

全连接层;把卷积层提取的特征加以整合从而进行分类。

(4.2中将imgs整合为一行数据)

4.1全连接层在数字上的应用

代码如下(示例):

import torch

from torch import nn

from torch.nn import ReLU

input = torch.tensor([[1,-2],

[-2,2]])

print(input.shape)

input = torch.reshape(input,(1,1,2,2))

class NN(nn.Module):

def __init__(self):

super(NN, self).__init__()

self.relu = ReLU()

def forward(self,x):

output = self.relu(x)

return output

model = NN()

x = model(input)

print(x)

print(x.shape)

数据变化如下:

torch.Size([2, 2])

tensor([[[[1, 0],

[0, 2]]]])

torch.Size([1, 1, 2, 2])

4.2全连接层在图片上的应用

代码如下(示例):

import torch

import torchvision

from torch import nn

from torch.nn import Linear

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10('./cifardt',train=False,transform=torchvision.transforms.ToTensor(),download=True)

dataloader =DataLoader(dataset,batch_size=64)

class NN(nn.Module):

def __init__(self):

super(NN, self).__init__()

self.linear = Linear(196608,10)

def forward(self,x):

output=self.linear(x)

return output

model =NN()

i=0

for data in dataloader:

imgs,targets = data

print(imgs.shape)

#提示; output = torch.reshape(imgs,(1,1,1,196608))=torch.flatten(imgs)

output =torch.flatten(imgs)

print(output.shape)

output1= model(output)

print(output1.shape)

i+=1

writer.close()

数据变化如下:

torch.Size([64, 3, 32, 32])

torch.Size([196608])

torch.Size([10])

5.损失函数(附带反向传播)

损失函数的作用:衡量模型模型预测的好坏。

反向传播的作用:快速算出所有参数的偏导数

5.1损失函数在数字上的应用

代码如下(示例):

import torch

from torch import nn

input = torch.tensor([1,2,3],dtype=torch.float32)

output = torch.tensor([4,3,2],dtype=torch.float32)

#注意,利用nn.loss需要将数据从二维参 ->四维参

input = torch.reshape(input,(1,1,1,3))

output = torch.reshape(output,(1,1,1,3))

#不能写成result =nn.L1Loss(input,output)

#L1Loss默认计算平均绝对误差

loss = nn.L1Loss()

result =loss(input,output)

print(result)

#计算平均方差

loss_mse = nn.MSELoss()

result_mse =loss_mse(input,output)

print(result_mse)

计算结果如下:

tensor(1.6667)

tensor(3.6667)

5.2损失函数在图片上的应用

代码如下(示例):

import torchvision

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10('./cifardt',train=False,transform=torchvision.transforms.ToTensor(),download=True)

dataloader =DataLoader(dataset,batch_size=64)

class NN(nn.Module):

#用sequential的模型结构,优化模型

def __init__(self):

super(NN, self).__init__()

self.model = Sequential(

Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(64 * 4 * 4, 64),

Linear(64, 10))

def forward(self,x):

x=self.model(x)

return x

model = NN()

for data in dataloader:

imgs,targets = data

output = model(imgs)

#计算交叉损失

loss = nn.CrossEntropyLoss()

loss_l1 = loss(output,targets)

#反向传播:快速算出所有参数的偏导数

loss_l1.backward()

print(loss_l1)

计算结果如下:

tensor(2.2951, grad_fn=<NllLossBackward0>)

tensor(2.3012, grad_fn=<NllLossBackward0>)

tensor(2.3028, grad_fn=<NllLossBackward0>)

tensor(2.3009, grad_fn=<NllLossBackward0>)

.....

6.梯度优化(优化器)

梯度优化的作用;用来更新和计算影响模型训练和模型输出的网络参数,使其逼近或达到最优值,从而最小化(或最大化)损失函数

代码如下(示例):

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10('./cifardt',train=False,transform=torchvision.transforms.ToTensor(),download=True)

dataloader =DataLoader(dataset,batch_size=64)

class NN(nn.Module):

#用sequential的模型结构,优化模型

def __init__(self):

super(NN, self).__init__()

self.model = Sequential(

Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(64 * 4 * 4, 64),

Linear(64, 10)

)

def forward(self,x):

x=self.model(x)

return x

model = NN()

loss = nn.CrossEntropyLoss()

#model.parameters()-----模型的参数

optim = torch.optim.SGD(model.parameters(),lr=0.01)

for epoch in range(20):

running_loss = 0

print(epoch)

for data in dataloader:

imgs,targets = data

output = model(imgs)

#计算交叉损失

loss_l1 = loss(output,targets)

#梯度清零

optim.zero_grad()

#损失反向传播

loss_l1.backward()

#梯度优化

optim.step()

running_loss+=loss_l1

print(running_loss)

计算结果如下:

0

tensor(360.4875, grad_fn=<AddBackward0>)

1

tensor(355.5713, grad_fn=<AddBackward0>)

2

tensor(339.9232, grad_fn=<AddBackward0>)

3

tensor(318.6790, grad_fn=<AddBackward0>)

4

tensor(311.9515, grad_fn=<AddBackward0>)

...

随着epoch的增加,损失不断减少

7.模型的制作以及对先有模型的修改、调用、保存

7.1模型的制作(附带模型结构可视化)

利用sequential的模型结构,来优化模型

可以通过被注释的代码,对比利用sequential的简洁性

import torch

from torch import nn

from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

from torch.utils.tensorboard import SummaryWriter

class NN(nn.Module):

#用sequential的模型结构,优化模型

def __init__(self):

super(NN, self).__init__()

self.model = Sequential(

Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(64 * 4 * 4, 64),

Linear(64, 10)

)

def forward(self,x):

x=self.model(x)

return x

#不用sequential的模型结构

# def __init__(self):

# super(NN, self).__init__()

# #前面只算通道,关键计算padding,padding=(kernel_size-1)/2

# self.conv1 = Conv2d(in_channels=3,out_channels=32,kernel_size=5,padding=2)

# self.maxpool1 = MaxPool2d(2)

# self.conv2 = Conv2d(32,32,5,padding=2)

# self.maxpool2 = MaxPool2d(2)

# self.conv3 =Conv2d(32,64,5,padding=2)

# self.maxpool3 = MaxPool2d(2)

# #到这里,将向量铺平后,用两个全连接层不断减少向量大小

# self.flatten = Flatten()

# self.linear1 = Linear(64*4*4,64)

# self.linear2 = Linear(64,10)

#

# def forward(self,x):

# x = self.conv1(x)

# x = self.maxpool1(x)

# x = self.conv2(x)

# x = self.maxpool2(x)

# x = self.conv3(x)

# x = self.maxpool3(x)

# x = self.flatten(x)

# x = self.linear1(x)

# x = self.linear2(x)

# return x

nn =NN()

input = torch.ones((64,3,32,32))

output = nn(input)

print(output.shape)

#利用tensorboard将模型结构画出来

writer = SummaryWriter('p16')

writer.add_graph(nn,input)

writer.close()

最后,在后台输入

tensorboard --logdir='D:\pytorch\tensor\p16'

7.2现有的模型的保存与调用

代码如下(示例):

import torch

import torchvision

vgg16 = torchvision.models.vgg16(pretrained=False)

#模型的保存方式

torch.save(vgg16.state_dict(),'vgg16_method1.pth')

#模型的调用方式

#注意,已经保存为字典形式,不再是网络模型

model = torch.load('vgg16_method1.pth')

print(model)

#一般用一下方式调用

vgg16.load_state_dict(torch.load('vgg16_method1.pth'))

print(vgg16)

7.3现有的模型修改

修改vgg16最后一层模型

import torchvision

from torch import nn

#只继承模型结构,不继承参数

vgg16_false = torchvision.models.vgg16(pretrained=False)

#继承模型结构和参数

vgg16_true = torchvision.models.vgg16(pretrained=True)

print(vgg16_true)

# 1,./当前文件夹 2,../上一级文件夹

train_data = torchvision.datasets.CIFAR10('./cifardt',train=True,transform=torchvision.transforms.ToTensor(),download=True)

#将vgg16的classifier下的第(6)out_features=1000改为10(因为CIFAR10只有10类,vgg分别1000类)

#方法一

vgg16_true.classifier.add_module('add_linear',nn.Linear(1000,0))

print(vgg16_true)

#方法二

vgg16_false.classifier[6]=nn.Linear(1000,0)

print(vgg16_false)

三、pytorch完整模型

1.利用GPU训练模型

import torch

import torchvision

from torch.utils.tensorboard import SummaryWriter

import time

from torch.utils.data import DataLoader

#定义训练设备

device = torch.device('cuda')

#调用gpu:device = torch.device('cuda')

#在不确定有gpu的条件下,可以写成:device = torch.device('cuda' if torch.cuda.is_available() else "cpu")

train_data = torchvision.datasets.CIFAR10('./cifardt',train=True,transform=torchvision.transforms.ToTensor(),download=True)

test_data = torchvision.datasets.CIFAR10('./cifardt',train=False,transform=torchvision.transforms.ToTensor(),download=True)

train_dataloader = DataLoader(train_data,64)

test_dataloader = DataLoader(test_data,64)

train_data_size = len(train_data)

test_data_size = len(test_data)

print('训练数据集长度为:{}'.format(train_data_size))

print('测试数据集长度为:{}'.format(test_data_size))

#创建模型

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.model = nn.Sequential(

nn.Conv2d(3,32,5,1,2),

nn.MaxPool2d(2),

nn.Conv2d(32,32,5,1,2),

nn.MaxPool2d(2),

nn.Conv2d(32,64,5,1,2),

nn.MaxPool2d(2),

nn.Flatten(),

nn.Linear(64*4*4,64),

nn.Linear(64,10)

)

def forward(self,x):

x = self.model(x)

return x

#调用模型

#创建模型

model = Model()

model = model.to(device)

#创建损失函数

loss_fn = nn.CrossEntropyLoss()

loss_fn = loss_fn.to(device)

#创建优化器.SGD随机梯度下降

learning_rate = 1e-2

optimizer = torch.optim.SGD(model.parameters(),lr=learning_rate)

#定义训练次数

total_train_step = 0

#定义训练的轮数

epoch=21

#创建可视化文件夹

writer = SummaryWriter('p17')

#记录开始时间

start_time = time.time()

for i in range(epoch):

print('-----第{}轮训练开始-----'.format(i+1))

for data in train_dataloader:

imgs, targets = data

imgs = imgs.to(device)

targets = targets.to(device)

output = model(imgs)

loss = loss_fn(output, targets)

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_train_step += 1

#每隔100epoch打印一次

if total_train_step % 100 ==0:

#记录结束时间

end_time = time.time()

print(end_time-start_time)

#loss.item() --> 将数据由tensor[60],变为60,方便后面将损失可视化

print('训练次数为:{},损失值为:{}'.format(total_train_step, loss.item()))

writer.add_scalar('train_loss',loss.item(),total_train_step)

#定义初始训练损失为0

total_test_loss = 0

#定义初始准确率

total_accuracy = 0

#定义测试步长

total_test_step = 0

for i in range(epoch):

print('-----第{}轮训练开始-----'.format(i + 1))

with torch.no_grad():

for data in test_dataloader:

imgs, targets = data

# 利用gpu

imgs = imgs.to(device)

targets = targets.to(device)

output = model(imgs)

# 计算损失

loss = loss_fn(output, targets)

total_test_loss += loss

# 计算预测正确的个数

# output.argmax(1):将横坐标得分最多的标签,所对应的标签排序号输出

# 下面的意思是,求得 预测的位置=与实际的标签位置 的个数求和

accuracy = (output.argmax(1) == targets).sum()

accuracy += accuracy

total_test_step += 1

if total_test_step % 50 == 0:

print('测试步数为;{}'.format(total_test_step))

print('----整体的训练损失为{}----'.format(total_test_loss.item()))

print('整体测试集的正确率:{}'.format(accuracy / test_data_size))

writer.add_scalar('test_loss', loss.item(), total_test_step)

writer.add_scalar('accuracy', accuracy / test_data_size, total_test_step)

#保存模型

if i%5==0:

torch.save(model,'model_{}.pth'.format(i))

print('模型以保存')

writer.close()

在后台输入:

tensorboard --logdir='D:\pytorch\tensor\p17'

2.验证上面训练好的模型

import torch

import torchvision.transforms

from PIL import Image

from torch import nn

img_path = r"D:\pytorch\images\1.png"

image = Image.open(img_path)

#注意,输入图像是RGBA四通道,需要转化成RGB才能作为输入

image =image.convert('RGB')

print(image)

transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32,32)),

torchvision.transforms.ToTensor()])

image = transform(image)

print(image)

#注意,这里需要加map_location=torch.device('cpu'),否则会报错

#报错原因为,pth文件是gpu训练的,查看也需要gpu才能查看,如果用cpu查看,需要定义map_location

model = torch.load('model_20.pth',

map_location=torch.device('cpu'))

print(model)

image = torch.reshape(image,(1,3,32,32))

model.eval()

with torch.no_grad():

input = model(image)

print(input)

#argmax(1),输入横坐标最大值的位置。

#argmax(0),输入纵坐标最大值的位置。

print(input.argmax(1))

#[0]--对应飞机。[5]--对应狗

if int(input.argmax(1))==0:

print('这张图片是飞机')

if int(input.argmax(1))==5:

print('这张图片是狗')

输出结果如下:

#这张图片在数据集里面十个样本的得分

tensor([[-0.8269, -4.7926, 4.6858, 4.5988, 0.8839, 4.3825, 5.5920, 0.9652,

-5.3038, -3.5787]])

#图片在十个数据样本里得分最高的是第7位标签(因为从0开始数)

tensor([6])