Project Training (7): Scene Video Cutting

Scene boundary prediction:

This is a binary classification problem: for each shot, predict whether it marks a scene boundary.

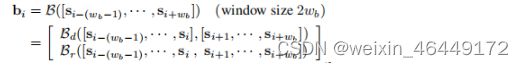

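(1) First, a boundary network (BNet) extracts the differences and relations between shots. BNet is built from two branches: Bd captures the difference between the shots before and after a boundary, while Br captures the relations among shots and consists of a convolution followed by a max-pooling layer.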

    import torch
    import torch.nn as nn

    # Cos and feat_extractor are defined elsewhere in the project.


    class BNet(nn.Module):
        def __init__(self, cfg):
            super(BNet, self).__init__()
            self.shot_num = cfg.shot_num
            self.channel = cfg.model.sim_channel
            # Br branch: a convolution over the shot axis followed by max pooling
            self.conv1 = nn.Conv2d(1, self.channel, kernel_size=(cfg.shot_num, 1))
            self.max3d = nn.MaxPool3d(kernel_size=(self.channel, 1, 1))
            # Bd branch: similarity between the shots before and after the boundary
            self.cos = Cos(cfg)
            self.feat_extractor = feat_extractor(cfg)

        def forward(self, x):  # [batch_size, seq_len, shot_num, 3, 224, 224]
            feat = self.feat_extractor(x)
            # [batch_size, seq_len, shot_num, feat_dim]
            context = feat.view(
                feat.shape[0]*feat.shape[1], 1, feat.shape[-2], feat.shape[-1])
            context = self.conv1(context)
            # batch_size*seq_len,sim_channel,1,feat_dim
            context = self.max3d(context)
            # batch_size*seq_len,1,1,feat_dim
            context = context.squeeze()
            sim = self.cos(feat)
            # concatenate the Br and Bd outputs along the feature axis
            bound = torch.cat((context, sim), dim=1)
            return bound
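To make the role of the Bd branch more concrete, here is a minimal pure-Python sketch that compares the shots before and after a candidate boundary using cosine similarity. It is only an illustration under a loud assumption: each half of the window is summarized by mean pooling, which stands in for whatever learned layers `Cos(cfg)` contains (its implementation is not shown in this post). The helper names `mean_vector`, `cosine`, and `half_window_similarity` are hypothetical.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def half_window_similarity(shot_feats):
    """Compare the first and second half of a window of shot features.

    Assumption: each half is summarized by mean pooling, which stands in
    for the learned layers of the real Bd branch.
    """
    half = len(shot_feats) // 2
    before = mean_vector(shot_feats[:half])
    after = mean_vector(shot_feats[half:])
    return cosine(before, after)


# Four shots with 3-dimensional toy features (shot_num = 4).
same_scene = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [1.0, 0.1, 0.0], [0.8, 0.0, 0.1]]
scene_change = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 0.1, 1.0], [0.1, 0.0, 0.9]]

sim_same = half_window_similarity(same_scene)
sim_change = half_window_similarity(scene_change)
```

In this toy data the similarity stays close to 1 when all four shots look alike and drops sharply when the second half differs, which is the kind of signal a difference branch is meant to expose.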

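(2) Based on the shot representations produced by BNet, an LSTM model is built to estimate the probability that a shot forms a scene boundary; the final result is obtained by setting a threshold on the number of scenes.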

    import torch.nn as nn
    import torch.nn.functional as F


    class LGSSone(nn.Module):
        def __init__(self, cfg, mode="image"):
            super(LGSSone, self).__init__()
            self.seq_len = cfg.seq_len
            self.num_layers = 1
            self.lstm_hidden_size = cfg.model.lstm_hidden_size
            if mode == "image":
                self.bnet = BNet(cfg)
                # backbone feature size plus the similarity channels
                self.input_dim = (
                    self.bnet.feat_extractor.backbone.inplanes +
                    cfg.model.sim_channel)
            self.lstm = nn.LSTM(input_size=self.input_dim,
                                hidden_size=self.lstm_hidden_size,
                                num_layers=self.num_layers,
                                batch_first=True,
                                bidirectional=cfg.model.bidirectional)
            # a bidirectional LSTM doubles the output feature size
            if cfg.model.bidirectional:
                self.fc1 = nn.Linear(self.lstm_hidden_size*2, 100)
            else:
                self.fc1 = nn.Linear(self.lstm_hidden_size, 100)
            self.fc2 = nn.Linear(100, 2)

        def forward(self, x):
            x = self.bnet(x)
            x = x.view(-1, self.seq_len, x.shape[-1])
            # torch.Size([128, seq_len, 3*channel])
            self.lstm.flatten_parameters()
            out, (_, _) = self.lstm(x, None)
            # out: tensor of shape (batch_size, seq_length, hidden_size)
            out = F.relu(self.fc1(out))
            out = self.fc2(out)
            # one pair of logits (not boundary / boundary) per shot boundary
            out = out.view(-1, 2)
            return out

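(3) After obtaining the representation b_i of each shot boundary, the problem becomes predicting a sequence of binary labels [o_1, o_2, ..., o_{n-1}] from the sequence of representations [b_1, ..., b_{n-1}]. A sequence model, Bi-LSTM, is applied with stride w_t / 2 to predict a sequence of coarse scores [p_1, ..., p_{n-1}], where p_i ∈ [0, 1] is the probability that a shot boundary is a scene boundary.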
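The stride of w_t / 2 means consecutive windows overlap by half. The following is a small sketch of how such windows could be enumerated, assuming that w_t denotes the window length fed to the sequence model; the post does not say how scores from overlapping windows are merged, so the sketch stops at listing the index ranges. `sliding_windows` is a hypothetical helper name.

```python
def sliding_windows(num_boundaries, window, stride=None):
    """Return (start, end) index pairs covering range(num_boundaries).

    end is exclusive. stride defaults to window // 2, mirroring the
    w_t / 2 stride mentioned in the text (assumption: w_t is the
    window length).
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if num_boundaries <= 0:
        return []
    if stride is None:
        stride = max(1, window // 2)
    windows = []
    start = 0
    while True:
        end = min(start + window, num_boundaries)
        windows.append((start, end))
        if end >= num_boundaries:
            break
        start += stride
    return windows


# 10 shot boundaries, window length 4, stride 2.
windows = sliding_windows(num_boundaries=10, window=4)
```

With these toy numbers every interior boundary falls into two windows, so a real pipeline would still need a rule (for example averaging) to combine the two scores; that rule is not specified here.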


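A coarse prediction o_i ∈ {0, 1} is then obtained, indicating whether the i-th shot boundary is a scene boundary. It is obtained by binarizing p_i with a threshold τ, that is, o_i = 1 if p_i > τ and o_i = 0 otherwise. In the evaluation code below, the threshold is fixed at 0.5: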

    import numpy as np
    import torch
    import torch.nn.functional as F

    # writer, to_numpy, test_iter and val_iter are module-level names
    # defined elsewhere in the project.


    def test(cfg, model, test_loader, criterion, mode='test'):
        global test_iter, val_iter
        model.eval()
        test_loss = 0
        correct1, correct0 = 0, 0
        gt1, gt0, all_gt = 0, 0, 0
        prob_raw, gts_raw = [], []
        preds, gts = [], []
        batch_num = 0
        with torch.no_grad():
            for data_place, data_cast, data_act, data_aud, target in test_loader:
                batch_num += 1
                # move only the modalities enabled in cfg.dataset.mode to the GPU
                data_place = data_place.cuda() if 'place' in cfg.dataset.mode or 'image' in cfg.dataset.mode else []
                data_cast = data_cast.cuda() if 'cast' in cfg.dataset.mode else []
                data_act = data_act.cuda() if 'act' in cfg.dataset.mode else []
                data_aud = data_aud.cuda() if 'aud' in cfg.dataset.mode else []
                target = target.view(-1).cuda()
                output = model(data_place, data_cast, data_act, data_aud)
                output = output.view(-1, 2)
                loss = criterion(output, target)

                if mode == 'test':
                    test_iter += 1
                    if loss.item() > 0:
                        writer.add_scalar('test/loss', loss.item(), test_iter)
                elif mode == 'val':
                    val_iter += 1
                    if loss.item() > 0:
                        writer.add_scalar('val/loss', loss.item(), val_iter)

                test_loss += loss.item()
                # probability of the "scene boundary" class
                output = F.softmax(output, dim=1)
                prob = output[:, 1]
                gts_raw.append(to_numpy(target))
                prob_raw.append(to_numpy(prob))

                gt = target.cpu().detach().numpy()
                # binarize with threshold 0.5; NaN probabilities count as 0
                prediction = np.nan_to_num(prob.squeeze().cpu().detach().numpy()) > 0.5
                idx1 = np.where(gt == 1)[0]
                idx0 = np.where(gt == 0)[0]
                gt1 += len(idx1)
                gt0 += len(idx0)
                all_gt += len(gt)
                correct1 += len(np.where(gt[idx1] == prediction[idx1])[0])
                correct0 += len(np.where(gt[idx0] == prediction[idx0])[0])

            # flatten the per-batch arrays into plain Python lists
            for x in gts_raw:
                gts.extend(x.tolist())
            for x in prob_raw:
                preds.extend(x.tolist())
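The core of the evaluation loop above (softmax over two logits, thresholding at 0.5, and counting correct predictions per class) can be illustrated in isolation with a small pure-Python sketch. The excerpt does not show what is finally computed from `correct1`, `gt1`, `correct0`, and `gt0`, so turning them into per-class recall is an assumption made here for illustration; the helper names are hypothetical.

```python
import math


def boundary_probability(logit_no, logit_yes):
    """Two-class softmax; returns the probability of the 'boundary' class."""
    m = max(logit_no, logit_yes)  # subtract the max for numerical stability
    e_no = math.exp(logit_no - m)
    e_yes = math.exp(logit_yes - m)
    return e_yes / (e_no + e_yes)


def binarize(probs, tau=0.5):
    """o_i = 1 if p_i > tau, else 0."""
    return [1 if p > tau else 0 for p in probs]


def per_class_recall(gt, pred):
    """Recall for class 1 and class 0 (assumed use of correct1/gt1, correct0/gt0)."""
    gt1 = sum(1 for g in gt if g == 1)
    gt0 = sum(1 for g in gt if g == 0)
    correct1 = sum(1 for g, p in zip(gt, pred) if g == 1 and p == 1)
    correct0 = sum(1 for g, p in zip(gt, pred) if g == 0 and p == 0)
    recall1 = correct1 / gt1 if gt1 else 0.0
    recall0 = correct0 / gt0 if gt0 else 0.0
    return recall1, recall0


# Toy logits (not boundary, boundary) for four shot boundaries.
logits = [(2.0, -1.0), (-0.5, 1.5), (0.3, 0.1), (-2.0, 2.0)]
labels = [0, 1, 1, 1]

probs = [boundary_probability(a, b) for a, b in logits]
preds = binarize(probs, tau=0.5)
recall1, recall0 = per_class_recall(labels, preds)
```

The third boundary has a probability slightly below 0.5 and is therefore missed, which shows how strongly the choice of τ affects recall on the boundary class.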

Scene video cutting:

ffmpeg is called to cut the video:

    import os.path as osp
    import subprocess

    import cv2
    from tqdm import tqdm

    # mkdir_ifmiss is a helper defined elsewhere in the project.


    def scene2video(cfg, scene_list):
        print("...scene list to videos process of {}".format(cfg.video_name))
        source_movie_fn = '{}.mp4'.format(osp.join(cfg.data_root, "Original_Video", cfg.video_name))
        # read the frame rate so frame indices can be converted to seconds
        vcap = cv2.VideoCapture(source_movie_fn)
        fps = vcap.get(cv2.CAP_PROP_FPS)  # video.fps
        vcap.release()
        out_video_dir_fn = osp.join(cfg.data_root, "SceneSeg_Video/scene_video")
        mkdir_ifmiss(out_video_dir_fn)

        for scene_ind, scene_item in tqdm(enumerate(scene_list)):
            scene = str(scene_ind).zfill(4)
            start_frame = int(scene_item[0])
            end_frame = int(scene_item[1])
            start_time, end_time = start_frame/fps, end_frame/fps
            duration_time = end_time - start_time
            out_video_fn = osp.join(out_video_dir_fn, "scene_{}.mp4".format(scene))
            # skip scenes that were already exported
            if osp.exists(out_video_fn):
                continue
            call_list = ['ffmpeg']
            call_list += ['-v', 'quiet']
            call_list += [
                '-y',
                '-ss',
                str(start_time),
                '-t',
                str(duration_time),
                '-i',
                source_movie_fn]
            call_list += ['-map_chapters', '-1']
            call_list += [out_video_fn]
            subprocess.call(call_list)
        print("...scene videos have been saved in {}".format(out_video_dir_fn))
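The frame-to-time conversion and the ffmpeg argument list used above can be factored into a small function that is easy to check without running ffmpeg. This is a sketch, not part of the original project: `build_ffmpeg_cut_cmd` is a hypothetical name, the file names are placeholders, and the input validation is an added assumption.

```python
def build_ffmpeg_cut_cmd(src, dst, start_frame, end_frame, fps):
    """Build an ffmpeg argument list that cuts the span between two frames."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    if end_frame <= start_frame:
        raise ValueError("end_frame must be greater than start_frame")
    start_time = start_frame / fps
    duration = (end_frame - start_frame) / fps
    return [
        "ffmpeg", "-v", "quiet", "-y",
        "-ss", str(start_time),   # seek position in seconds (input option)
        "-t", str(duration),      # clip length in seconds
        "-i", src,
        "-map_chapters", "-1",    # do not copy chapter metadata
        dst,
    ]


# Frames 250 to 500 of a 25 fps video: a 10 s clip starting at 10 s.
cmd = build_ffmpeg_cut_cmd("demo.mp4", "scene_0000.mp4", 250, 500, 25.0)
```

The resulting list can be passed directly to `subprocess.call(cmd)`; passing a list rather than a single shell string avoids quoting problems with file names that contain spaces.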