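- Memory-Constrained Programming: A Complete Guide from Principles to Practice

景彡先生

C++ Advanced, c++, caching

In embedded systems, IoT devices, mobile apps, and similar settings, memory is often extremely limited. Designing efficient, stable programs for such environments is a challenge any developer may face. This article covers the core techniques of memory-constrained programming, from hardware principles and operating-system mechanisms to algorithm optimization and coding techniques. 1. Overview of memory-constrained environments. 1.1 Typical scenarios (scenario / available memory / typical applications): 8-bit microcontrollers, a few KB to 64 KB, sensor nodes and simple controllers; 32-bit embedded systems, 64 KB to 512 MB, smart-home devices and industrial control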

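- Exploring the C++ STL in Depth: From Basics to Advanced

Contents: Introduction; 1. What is the STL; 2. STL versions; 3. The six STL components: Container, Algorithm, Iterator, Functor, Allocator, Adapter; 4. Why the STL matters; 5. How to learn the STL; 6. Shortcomings of the STL; Summary. Introduction: In the C++ world, the Standard Template Library (STL) is an extremely powerful tool. It not only gives developers a library of reusable components, but also brings together data structures and algorithms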

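- [Encryption and C] Rot Series (2): Rot13

Rot13 overview: Rot13 (Rotate by 13 places) is a simple letter-substitution cipher and a special case of the Caesar cipher. It replaces each letter with the letter 13 positions after it in the alphabet: A becomes N, B becomes O, and so on. Because the English alphabet has 26 letters, Rot13 encryption and decryption use the same algorithm. Rot13 rules: each letter of the alphabet is substituted as follows. Uppercase A-Z: A→N, B→O, …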
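
The substitution rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the article's own C code; the function name `rot13` and the choice to shift lowercase letters the same way as uppercase ones are assumptions made for this sketch.

```python
import string


def rot13(text):
    """Shift every ASCII letter 13 places; leave all other characters alone."""
    upper = string.ascii_uppercase
    lower = string.ascii_lowercase
    # Map A-Z onto N-Z,A-M and (assumption) a-z onto n-z,a-m.
    table = str.maketrans(
        upper + lower,
        upper[13:] + upper[:13] + lower[13:] + lower[:13],
    )
    return text.translate(table)


if __name__ == "__main__":
    encoded = rot13("Hello, World!")
    print(encoded)
    # Applying the same function again undoes the substitution.
    print(rot13(encoded))
```

Because 13 is half of 26, calling the function twice returns the original text, which is why one routine serves for both encryption and decryption.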

- 学习笔记(39):结合生活案例,介绍 10 种常见模型

宁儿数据安全

#机器学习学习笔记生活

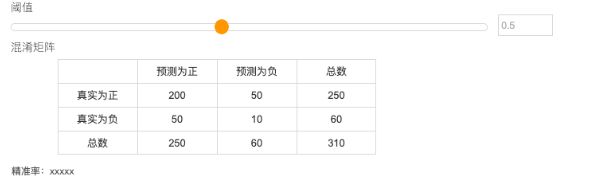

学习笔记(39):结合生活案例,介绍10种常见模型线性回归只是机器学习的“冰山一角”!根据不同的任务场景(分类、回归、聚类等),还有许多强大的模型可以选择。下面我用最通俗易懂的语言,结合生活案例,介绍10种常见模型及其适用场景:一、回归模型(预测连续值,如房价)1.决策树(DecisionTree)原理:像玩“20个问题”游戏,通过一系列判断(如“面积是否>100㎡?”“房龄是否0.5就判为“会”

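- A Complete Guide to Learning LLMs Systematically (Must-Read Series for Beginners)

GA琥珀

LLM, learning, artificial intelligence, language models

Preface: This article gives a systematic account of the knowledge behind Large Language Models (LLMs) and their applications. Starting from the mathematical and machine-learning foundations that underpin the field, we work through the classic paradigms of natural language processing (NLP), examine the Transformer architecture that set off a revolution, and trace in chronological order the evolution of milestone models from BERT and GPT-2 to GPT-4, Llama, and Gemini. We then look at how to turn these powerful foundation models into practical, safe applications, covering alignment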

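- Exploring the OpenCV 3.2 Source Code: Architecture and Implementation of Computer Vision

轩辕姐姐

Overview: OpenCV is a comprehensive computer vision library offering a wide range of features such as image processing, object detection, and deep learning support. OpenCV 3.2 includes improved deep learning and GPU acceleration features along with a rich set of sample programs. This archive provides the complete OpenCV 3.2 source code, which is valuable for studying computer vision algorithms and how the library is implemented. A thorough understanding of the source's modular design, C++ interface, algorithm implementations, multi-platform support, and performance optimizations will help developers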

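- LeetCode-268: Missing Number

醉舞经阁半卷书

Missing Number. Problem: given an array nums containing n numbers from [0, n], find the one number in the range [0, n] that does not appear in the array. Follow-up: can you solve it with an algorithm that runs in linear time and uses only constant extra space? See the LeetCode website for examples. Source: LeetCode (力扣). Link: https://leetcode-cn.com/problems/missing-number/ Copyright belongs to 领扣网络. For commercial reprints, contact the official site for authorization; for non-commercial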
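
One way to meet the follow-up (linear time, constant extra space) is to XOR every index and every value together. The sketch below is one possible approach rather than the post's own solution; the function name `missing_number` is chosen for illustration, and it assumes the values in nums are distinct, as in the LeetCode statement.

```python
def missing_number(nums):
    """Return the value in [0, n] that is absent from nums (n == len(nums))."""
    # Start from n, the one index the loop below never visits.
    missing = len(nums)
    for index, value in enumerate(nums):
        # Every number present cancels against its matching index,
        # so only the absent number survives the XOR chain.
        missing ^= index ^ value
    return missing


if __name__ == "__main__":
    print(missing_number([3, 0, 1]))
```

Summing 0..n and subtracting the array sum works equally well in Python; XOR is shown because it also avoids overflow in fixed-width languages.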

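- python automl_Automated Machine Learning (AutoML): Deploying AutoML to the Cloud

Editor's pick: This article presents an AutoML setup that uses Python and Flask to train and deploy pipelines in the cloud, along with two AutoML frameworks that automate feature engineering and model building. The article comes from 搜狐网 and was edited and recommended by Alice of 火龙果软件. What exactly is AutoML? AutoML is a broad term that, in theory, covers the whole data science cycle from data exploration to model building. In practice, though, I find the term more often refers to automated feature preprocessing and selection, model algorithm selection, and hyperparameter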

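- Dynamic Service Discovery with Consul in Cloud-Native Environments

AI云原生与云计算技术学院

AI Cloud Native and Cloud Computing, cloud native, consul, service discovery, ai

Dynamic Service Discovery with Consul in Cloud-Native Environments. Keywords: cloud native, service discovery, Consul, microservices, dynamic registration, health checks, Raft algorithm. Abstract: This article examines the core principles and practice of dynamic service discovery with Consul in cloud-native environments. It analyzes Consul's architecture, core algorithms, and key mechanisms, and uses concrete code examples to demonstrate the full flow of service registration, discovery, and health checking. It details integration with cloud-native stacks such as Kubernetes and Docker, and analyzes, in real-world application scenarios, the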

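- Troubleshooting Nginx in Cloud-Native Environments

AI云原生与云计算技术学院

AI Cloud Native and Cloud Computing, cloud native, nginx, operations, ai

Troubleshooting Nginx in Cloud-Native Environments. Keywords: cloud native, Nginx, troubleshooting, containerization, Kubernetes. Abstract: This article focuses on troubleshooting Nginx in cloud-native environments. As cloud-native technology spreads, Nginx, a widely used high-performance web server and reverse proxy, faces new failure scenarios and challenges in containerized, orchestrated environments. The article first introduces the background on cloud-native environments and Nginx, then sets out the core concepts and how they relate, and explains in detail the core algorithmic principles and operating steps of troubleshooting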

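- A Beginner's Guide to Google Cloud (GCP): Building Your First Cloud Application from Scratch

AI云原生与云计算技术学院

AI Cloud Native and Cloud Computing, perl, servers, networking, ai

A Beginner's Guide to Google Cloud (GCP): Building Your First Cloud Application from Scratch. Keywords: Google Cloud, GCP, building cloud applications, beginner's guide, cloud computing. Abstract: This article aims to give beginners a comprehensive introduction to Google Cloud (GCP), explaining in detail how to build a first cloud application from scratch. Reasoning step by step, it covers background knowledge, core concepts, algorithmic principles, mathematical models, a hands-on project, real-world application scenarios, and recommended tools and resources, helping readers understand how to use GCP and the process of building a cloud application, and laying a solid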

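- Hospital-Level Medical AI Management Process: A Systematic Framework Based on Data Sharing, Algorithm Development, and Toolchain Governance

Allen_Lyb

Efficient Programming for Healthcare R&D, artificial intelligence, algorithms, time-series databases, experience sharing, healthcare

Medical AI: from "going it alone" to "moving forward together". As technology advances rapidly, medical artificial intelligence (AI) is changing traditional healthcare at unprecedented speed. From its early "single-point breakthroughs" in isolated areas such as imaging diagnosis, clinical decision support, and drug discovery, medical AI has now entered a new stage of "system-level collaboration". Medical AI used to be applied mostly to one specific step, for example using deep learning to analyze medical images and assist doctors in diagnosis. Such single-point applications improved efficiency to some degree, but as the healthcare industry, in regard to AI technology,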

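- [Data Structures and Algorithms] LeetCode 88. Merge Sorted Array

秀秀_heo

data structures and algorithms, leetcode, algorithms, career and development

Problem 88. Merge Sorted Array. You are given two integer arrays nums1 and nums2, both sorted in non-decreasing order, and two integers m and n giving the number of elements in nums1 and nums2 respectively. Merge nums2 into nums1 so that the merged array is also sorted in non-decreasing order. Note: the merged array should not be returned by the function but stored in nums1. To accommodate this, nums1 has an initial length of m + n, where the first m elements are the ones to merge and the last n elements are 0 and should be ignored.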
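
Since nums1 already has n spare slots at its tail, one common way to merge in place is to fill it from the back. The following is a sketch of that approach, not necessarily the one the post goes on to use; the function name `merge` mirrors the usual LeetCode signature and is an assumption here.

```python
def merge(nums1, m, nums2, n):
    """Merge sorted nums2 into sorted nums1 in place; nums1 has length m + n."""
    write = m + n - 1      # next slot to fill, starting from the end
    i, j = m - 1, n - 1    # last real element of nums1 and of nums2
    # Once nums2 is exhausted, the rest of nums1 is already in place.
    while j >= 0:
        if i >= 0 and nums1[i] > nums2[j]:
            nums1[write] = nums1[i]
            i -= 1
        else:
            nums1[write] = nums2[j]
            j -= 1
        write -= 1


if __name__ == "__main__":
    data = [1, 2, 3, 0, 0, 0]
    merge(data, 3, [2, 5, 6], 3)
    print(data)
```

Writing from the back means no unread element of nums1 is ever overwritten, so no temporary array is needed.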

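- python: Automated Machine Learning (AutoML)

Q_ytsup5681

python, automation, machine learning

Automated machine learning (AutoML) applies automation to the machine-learning model development workflow. It aims to simplify or remove complex steps that require expert knowledge, so that non-expert users can also create and deploy machine-learning models easily [^3^]. In detail: 1. The concept of automation: automation is the process of having equipment complete a series of tasks with little or no human involvement. The concept has evolved with the invention and development of the electronic computer, from early physical machinery to digital program control and now to artificial intelligence and machine learning; automation has already permeated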

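- Frequent Interview Problem: LeetCode 130. Surrounded Regions, Flood Fill, Depth-First Search (DFS), Brute-Force Search, C++ Solution Approach, Problem of the Day

Q741_147

C/C++ Daily Problem: From Syntax to Algorithms, interview, leetcode, depth-first, c++, flood fill

Contents: 0. Problem description; 1. Why is this problem worth your time? 2. Breaking down the problem: the key points; 3. Approach: start from the border and mark in reverse; 4. Algorithm: depth-first search (DFS) plus two passes; 5. C++ implementation, step by step: code walkthrough, time complexity, space complexity; 7. Pitfalls; 8. Variations; 9. Summary. 0. Problem description. Problem link: Surrounded Regions. Problem description: Example 1: Input: board = [["X","X","X","X"],["X","O","O","X"],["X"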
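
The "start from the border and mark in reverse" idea in the outline can be sketched as follows. The post itself uses C++; this Python version is only an illustration. It assumes the standard LeetCode 130 statement (every 'O' region not connected to the border is flipped to 'X'), and the function name `solve` and the temporary marker '#' are choices made for this sketch. An explicit stack replaces recursion to stay clear of Python's recursion limit.

```python
def solve(board):
    """Flip every 'O' that is not connected to the border into 'X', in place."""
    if not board or not board[0]:
        return
    rows, cols = len(board), len(board[0])

    def mark_from(start_row, start_col):
        # Depth-first search with an explicit stack; '#' marks safe cells.
        stack = [(start_row, start_col)]
        while stack:
            r, c = stack.pop()
            if 0 <= r < rows and 0 <= c < cols and board[r][c] == "O":
                board[r][c] = "#"
                stack.extend(((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)))

    # Pass 1: mark everything reachable from an 'O' on the border.
    for r in range(rows):
        mark_from(r, 0)
        mark_from(r, cols - 1)
    for c in range(cols):
        mark_from(0, c)
        mark_from(rows - 1, c)

    # Pass 2: unmarked 'O' cells are surrounded; marked cells are restored.
    for r in range(rows):
        for c in range(cols):
            if board[r][c] == "O":
                board[r][c] = "X"
            elif board[r][c] == "#":
                board[r][c] = "O"
```

Each cell is visited a constant number of times, so the running time is proportional to the size of the board.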

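- 2007. Find Original Array From Doubled Array

[Algorithm problem walkthrough] Restoring a doubled array: recovering the original array from a shuffled one. Problem: you are given an integer array changed, obtained by taking an original array original, appending twice the value of each of its elements, and shuffling the result. Your task is to decide whether the given changed is the doubled array of some original array, and to return that original array. Specifically, there exists an array original such that for every element x in original, changed contains both x and 2*x (possibly in shuffled order). If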
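
A greedy sketch of one way to recover original: process values from smallest to largest and pair each x with a 2*x. This is an illustration under assumptions, not the post's own solution: the name `find_original_array` is hypothetical, values are assumed to be non-negative, and an empty list is returned when no valid original exists (the snippet is cut off before it states the failure case).

```python
from collections import Counter


def find_original_array(changed):
    """Return one valid original array, or [] if changed is not a doubled array."""
    if len(changed) % 2:
        return []
    counts = Counter(changed)
    original = []
    for value in sorted(counts):
        need = counts[value]
        if need == 0:
            continue  # already used up as the double of a smaller value
        if value == 0:
            # Zeros can only pair with other zeros (0 * 2 == 0).
            if need % 2:
                return []
            original.extend([0] * (need // 2))
            continue
        # Each remaining copy of value must be matched by one copy of 2 * value.
        if counts[2 * value] < need:
            return []
        counts[2 * value] -= need
        original.extend([value] * need)
    return original


if __name__ == "__main__":
    print(find_original_array([1, 3, 4, 2, 6, 8]))
```

Going in ascending order works because the smallest remaining value cannot be the double of anything still unpaired, so it must belong to original.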

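- Latest Q1, IF 9+ Non-Tumor Pure Bioinformatics Paper with Clear, Easy-to-Follow Logic: Pure Bioinformatics Using Machine Learning to Screen Key Genes Can Also Reach High-Level Journals, So Get On Board!

生信小课堂

Impact factor: 9.186. We have reviewed many non-tumor bioinformatics papers, which mainly fall into these types: 1. WGCNA + PPI analysis of a single disease to screen hub genes; 2. a single disease combined with immune infiltration, hot gene sets, machine-learning algorithms, and so on; 3. joint analysis of two related diseases, including non-tumor with non-tumor, non-tumor with tumor, or non-tumor with pan-cancer analysis; 4. subtype-based non-tumor bioinformatics analysis; 5. single-cell data combined with bulk transcriptome analysis. The bar for publishing non-tumor bioinformatics papers is currently fairly low, and everyone is welcome! Study overview: this study first used R on three gene expression datasets to find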

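- Missed Detections in Crowd-Gathering Recognition? 陌讯 Multi-Scale Detection Shows a 92% Improvement in Testing

1. The pain point: crowd-gathering recognition in complex scenes. In security surveillance, large sporting events, and similar settings, real-time crowd-gathering recognition is a core technology for public safety. Traditional vision algorithms, however, often face three problems: first, overlapping people in dense crowds push the miss rate for small targets above 30%; second, lighting changes (such as backlighting at night) cause false alarms to surge; third, real-time performance falls short under complex background interference (FPS < 15). One scenic-area surveillance project reported that during holiday peaks an open-source model's missed detections delayed alerts by as much as 20 seconds, a serious safety hazard. The root of these problems lies in the limitations of traditional algorithms: single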

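- Python Algorithm Basics: Linear Search Algorithms: Sequential Search and Binary Search

挣扎的蓝藻

Python Algorithms for Beginners: Getting Started, python, algorithms, programming languages

Python Algorithm Basics: Linear Search Algorithms: Sequential Search and Binary Search. Contents: Introduction; 1. Sequential search; 2. Binary search; 3. Sequential vs. binary search: a) applicability, b) time complexity, c) prerequisites; 4. Worked examples: Example 1, sequential search; Example 2, binary search; Summary. Introduction: In algorithms and data structures, searching is a common operation used to find the position of a particular element in a collection. Linear search is one of the simplest search algorithms: it compares elements one by one until it finds the target. This post introduces two implementations of linear search: sequential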
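
The two searches named in the outline might look like the sketch below. The function names and the convention of returning -1 for a missing element are assumptions, not taken from the post. Note that binary search only works on data that is already sorted.

```python
def sequential_search(items, target):
    """Scan items left to right; return the first matching index or -1."""
    for index, item in enumerate(items):
        if item == target:
            return index
    return -1


def binary_search(sorted_items, target):
    """Halve the search range on each step; requires ascending order."""
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the right half
        else:
            high = mid - 1  # target can only be in the left half
    return -1


if __name__ == "__main__":
    data = [2, 5, 8, 12, 16, 23]
    print(sequential_search(data, 12), binary_search(data, 12))
```

Sequential search takes time proportional to the number of elements and needs no ordering; binary search takes logarithmic time but depends on the sorted-input precondition.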

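- [Algorithms] Hash Maps (C/C++)

摆烂小白敲代码

hash algorithms, algorithms, C, c++, data structures

Contents: Introducing the algorithm; About the algorithm; Advantages; Disadvantages; Hash map implementations: map, unordered_map; Problem link: "蓝桥杯" practice system; Analysis; Code. A hash map is a data structure that uses a hash function to map keys to array indices for fast data access. Its core idea is to exploit the speed of the hash function to convert a key into an array index, so that data can be stored and retrieved quickly. Hash maps are very important in modern software development, offering efficient lookup, insertion, and deletion. Introducing the algorithm: 小白算法学校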

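- Computer Vision Algorithms in Practice: Keypoint Detection

1. Introduction: Keypoint detection is an important research direction in computer vision that aims to detect keypoints carrying specific semantic information in images or video. These keypoints usually represent particular parts or features of an object, such as human joints, facial landmarks, or vehicle wheels. In tasks such as pose estimation, action recognition, object tracking, and 3D reconstruction, keypoint detection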

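- Game Algorithms

There is an interesting game played with several piles of objects, which can be matchsticks, Go stones, or anything similar. Two players take turns removing some objects from the piles, and whoever takes the last object wins. This is a very old Chinese folk game; simple as it looks, it contains deep mathematical principles. Let us analyze how to win. (1) Bash Game (巴什博奕): there is a single pile of n objects; two players take turns removing objects from it, each time at least one and at most m. Whoever takes the last one wins. Clearly, if n = m + 1, then since a single turn can take at most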
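
The well-known result for the Bash Game is that the player to move loses exactly when n is a multiple of m + 1, which generalizes the n = m + 1 case above. The sketch below states that rule and cross-checks it against a brute-force search; the names `first_player_wins` and `first_player_wins_brute` are chosen for this illustration.

```python
def first_player_wins(n, m):
    """Closed-form rule: the first player loses only when n % (m + 1) == 0."""
    return n % (m + 1) != 0


def first_player_wins_brute(n, m):
    """Check the rule by dynamic programming over every pile size up to n."""
    # wins[k] is True when the player to move with k objects left can force a win.
    wins = [False] * (n + 1)  # wins[0]: nothing left to take, so the mover has lost
    for k in range(1, n + 1):
        wins[k] = any(not wins[k - take] for take in range(1, min(m, k) + 1))
    return wins[n]


if __name__ == "__main__":
    for pile in range(1, 10):
        print(pile, first_player_wins(pile, 3), first_player_wins_brute(pile, 3))
```

The winning strategy behind the rule is to always leave the opponent a multiple of m + 1: whatever number k they take, taking m + 1 - k restores the multiple.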

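- Introduction to the STL (Standard Template Library)

Preface: Having studied C++'s features, classes and objects, and C++ memory management, we now have a fairly complete picture of C++, but the core of C++ lies in the STL. 1. Introduction to the STL. What is the STL? The C++ STL (Standard Template Library) is a powerful template library in the C++ programming language that provides a set of general-purpose data structures and algorithms. Its design is based on generic programming, meaning it uses templates to write code that is independent of any particular data type. The STL's core components include containers (such as vector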

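- Game Theory in C++

善良的小乔

game theory, c++, algorithms, programming languages

Game algorithms in C++ are mainly used for strategy problems in two-player or multi-player games, typically finding optimal strategies in board games, card games, video games, and the like. They usually rest on mathematical game theory and focus on simulating the players' choices to find an optimal solution. Below we introduce, step by step, the basic ideas, the common algorithms, and how to implement them. 1. Basic ideas. The core of a game algorithm is state-space search: by simulating all of the players' possible moves, it derives position evaluations and strategy choices. Common properties include: Zero-sum game: an increase in one player's score means the other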

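- Workwear False-Detection Rate as High as 40%? 陌讯's Improved YOLOv7 Cuts Noise by 50% in Practice

2501_92487859

YOLO, algorithms, visual inspection, object detection, computer vision

The pain point: visual inspection in industrial settings. On construction sites, in chemical plants, and in other high-risk settings, traditional vision algorithms face three challenges. Environmental interference: strong light and shadow distort workwear colors. Tiny targets: the reflective marking on a hard hat occupies only 0.1% of image pixels. Dense occlusion: the miss rate exceeds 35% when workers cluster together (source: CVPR2023工业检测白皮书). The reality in the field: one safety-supervision department's tests showed open-source YOLOv5 with a false-alarm rate as high as 41% in fog. Technical analysis: three innovations in the 陌讯 algorithm. 1. Multimodal feature fusion architecture. # Pseudocode example: visible-light + infrared feature fusion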

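- High Miss Rate in Dump-Truck Recognition? 陌讯 Algorithm Shows a 90% Reduction in Testing

2501_92487936

object tracking, artificial intelligence, computer vision, object detection, algorithms, smart cities

In managing urban construction-waste transport, monitoring dump-truck compliance has long been an industry pain point. Traditional vision algorithms often misjudge under difficult conditions: license plates blur in rain, headlight glare at night causes vehicle-type misclassification, and accuracy drops sharply when dump trucks of different brands are detected together. Statistics from one municipal administration show that with a traditional solution the average daily miss rate was as high as 23%, and the resulting illegal-dumping complaints accounted for over 60%. Technical analysis: from single-modality to multi-feature fusion. Traditional dump-truck recognition mostly relies on a single object-detection model (such as Faster R-CNN), whose core shortcomings are: feature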

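- High Miss Rate for Pavement Cracks? 陌讯 Multi-Scale Detection Cuts It by 30%

2501_92487936

computer vision, opencv, artificial intelligence, deep learning, algorithms, object detection

In municipal engineering and highway maintenance, pavement crack detection is key to traffic safety. Traditional manual inspection is not only inefficient (≤ 50 km inspected per day) but also, because of subjective factors, has a miss rate as high as 15-20% [1]. Mainstream open-source vision algorithms, faced with shadow interference and mixed crack types, often get stuck in a trade-off where accuracy and speed cannot both be had. Drawing on real cases, this article examines the technical advances and deployment experience of the 陌讯 vision algorithm in pavement crack detection. 1. Technical analysis: from traditional methods to a multimodal fusion architecture. Traditional crack detection mostly adopts "edge detection + morphological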

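- Hard to Catch Unauthorized Phone Use in Exam Rooms and Factories? A Three-Dimension Optimization Cuts Deployment Cost by 40%

2501_92487762

visual inspection, computer vision, algorithms, object detection

The pain point: traditional phone-use recognition in industrial settings faces three challenges: small-target detection (a phone occupies < 0.5% of pixels on average), occlusion (hands or objects occlude it more than 60% of the time), and real-time requirements (a response is needed within 200 ms). One security-screening company reported that open-source YOLOv5 had a false-alarm rate as high as 34% on the shop floor. Technical analysis: dual-stream feature fusion architecture. The 陌讯 algorithm fuses features from two paths (Figure 1): # 陌讯 core code logic (simplified) def dual_path_fusion(backbone): shallow_path=C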

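- Detection Failing in Complex Scenes? The 陌讯 Multimodal Algorithm Deployed in a Ten-Million-Scale Surveillance Network

2501_92473061

algorithms, visual inspection, security, computer vision

The pain point: detection in security surveillance. "The person is right there in the frame, yet the system does nothing!" So complained the security lead at one smart campus. Traditional object-detection models have three fatal weaknesses in surveillance. Misses: recall drops sharply at night and under occlusion (measured ResNet50 miss rate > 40%). False alarms: false alarms triggered by swaying leaves and changes in light and shadow account for over 35%. Latency: detection latency on 1080P video streams is generally > 100 ms, too slow for real-time response. Technical analysis: the three-stage optimization architecture of the 陌讯 algorithm. The 陌讯 vision algorithm uses a multimodal feature pyramid (MM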

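- Detection Keeps Failing in Complex Scenes? 陌讯 Algorithm Shows a 40% Improvement in Testing

2501_92453489

algorithms, vision, computer vision, visual inspection

In computer-vision deployments such as industrial quality inspection and security surveillance, engineers often face a thorny problem: under sudden lighting changes, target occlusion, and other difficult conditions, traditional algorithms have miss rates above 20%, and poor generalization becomes the biggest obstacle to getting projects deployed. The 陌讯 AI vision algorithm, through architectural innovation, is redefining the accuracy standard for detection in complex scenes. Technical analysis: the leap from single-modality to multimodality. Traditional object-detection models mostly rely on a single RGB image input and are easily disturbed by the environment at the feature-extraction stage. Take the classic Faster R-CNN: its region proposal network (RPN)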

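- What Happens When an Enum Constructor Throws an Exception

bylijinnan

java, enum, singleton

Let's start with implementing a singleton using enum.

Why use enum to implement a singleton?

This article (

http://javarevisited.blogspot.sg/2012/07/why-enum-singleton-are-better-in-java.html) gives three reasons:

1. An enum singleton is simple and easy, needing only a few lines of code: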

public enum Singleton {

INSTANCE;

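- CMake Tutorial

aigo

C++

Reposted from: http://xiang.lf.blog.163.com/blog/static/127733322201481114456136/

CMake is a cross-platform build tool, and it is far more convenient than writing Makefiles yourself.

Introduction: http://baike.baidu.com/view/1126160.htm

This document does not cover basic CMake syntax; here is a good introductory tutorial: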

http:

- cvc-complex-type.2.3: Element 'beans' cannot have character

Cb123456

spring, Webgis

cvc-complex-type.2.3: Element 'beans' cannot have character

Line 33 in XML document from ServletContext resource [/WEB-INF/backend-servlet.xml] is i

- jQuery Example: Automatically Loading Content as the Page Scrolls

120153216

jquery

<script language="javascript">

$(function (){

var i = 4;$(window).bind("scroll", function (event){

// Distance from the scroll position to the top of the page; works in IE, Firefox, Chrome

var top = document.documentElement.s

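- Converting Database Data into dbs Files

何必如此

sql, dbs

The 旗正 rules engine manages databases through its database configurator (DataBuilder). Oracle and the other mainstream databases are all supported, and they are operated the same way. The configurator is used to edit database structure information and manage table data, and it can also execute SQL statements. Its main functions are as follows.

1) Generating table structure information from the database:

This mainly generates the database configuration file (.conf file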

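- Configuring SQL IN Clauses in IBATIS

357029540

ibatis

When configuring SQL queries with IBATIS, we will inevitably need an IN query at some point. There are two ways to pass the parameter for an IN query: String and List. They are used as follows:

1. String: define a String parameter userIds and pass it into the IBATIS SQL configuration file; the SQL statement can then be written like this: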

<select id="getForms" param

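- Spring3 MVC Notes (1)

7454103

spring, mvc, bean, REST, JSF

Ever since the MVC concept was proposed: struts1.X, struts2.X, jsf ...

View-layer technologies have appeared one after another! I have used them all, and I wouldn't say any one of them is definitively the strongest!

It depends on the business and on the overall design!

Recently my company asked us to develop a new system!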

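- Timer and Spring Quartz: Running Tasks on a Schedule

darkranger

spring, bean, work, quartz

Sometimes a task needs to be triggered on a schedule. In fact, as of JDK 1.3 the Java SDK provides this through java.util.Timer. A simple example shows how to use it: 1. First, we create a task, which must extend java.util.TimerTask: package com.test; import java.text.SimpleDateFormat; import java.util.Date;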

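- Big-Endian/Little-Endian Conversion: le32_to_cpu and cpu_to_le32

aijuans

C language

Big-endian/little-endian conversion, le32_to_cpu and cpu_to_le32, byte order

http://oss.org.cn/kernel-book/ldd3/ch11s04.html

Be careful not to make assumptions about byte order. PCs store multi-byte values low byte first (little end first, hence little-endian), while some high-end platforms do it the other way (big-endian)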
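
To see what the two byte orders look like in practice, here is a small user-space sketch using Python's `struct` module. It is an illustrative analog, not the kernel's `le32_to_cpu` / `cpu_to_le32` macros; the helper names `host_to_le32` and `le32_to_host` are hypothetical.

```python
import struct
import sys


def host_to_le32(value):
    """Encode an unsigned 32-bit integer as 4 bytes, low byte first."""
    return struct.pack("<I", value)


def le32_to_host(raw):
    """Decode 4 little-endian bytes back into a Python integer."""
    return struct.unpack("<I", raw)[0]


if __name__ == "__main__":
    value = 0x12345678
    print("host byte order:", sys.byteorder)
    print("little-endian:", host_to_le32(value).hex())
    print("big-endian:   ", struct.pack(">I", value).hex())
    print("round trip ok:", le32_to_host(host_to_le32(value)) == value)
```

Stating the byte order explicitly ("<" or ">") rather than relying on the host's native order is the same discipline the kernel helpers enforce: the conversion is a no-op on a matching platform and a byte swap on the other kind.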

- Nginx Load Balancing Configuration Explained with Examples

avords

[Overview] Load balancing is something high-traffic websites need. Below I describe how to configure load balancing on an Nginx server; I hope it helps those who need it.

Load balancing

Let's first take a quick look at what load balancing is

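- Random Musings

houxinyou

frameworks, agile development, software testing

People have been studying frameworks, development methods, and software engineering for a long time, and there are so many of them! I can't make sense of it all anyway!

These past couple of days I've seen lots of people studying the agile model and the waterfall model! I didn't really get those either.

But it feels a lot like programming languages:

waterfall is sequence, agile is a loop.

Waterfall means requirements, analysis, design, coding, and testing, one step after another. Agile means looping by module, or by iteration, and within each loop it is again requirements, analysis, design, coding, and testing, one step after another.

Software development can also be understood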

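- The Value of Appreciation: A Short Story

bijian1013

effective coaching, appreciation, the value of appreciation

At her first parent-teacher meeting, the kindergarten teacher said: "Your son has ADHD; he can't sit on his stool for even three minutes. You'd better take him to a hospital." On the way home, her son asked what the teacher had said. Her nose stung and she nearly cried, because of the 30 children in the class he alone had done worst, and he alone had drawn the teacher's disdain. Yet she told her son: "The teacher praised you. She said you used to be unable to sit on your stool for one minute, and now you can sit for three. The other mothers all envy me, because in the whole class only you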

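- Resolving Package Conflicts

bingyingao

eclipse, maven, exclusions, package conflicts

Package conflicts are a very common problem during development.

Symptoms include:

1. Eclipse can clearly index a class, yet at runtime a class-not-found error is reported.

2. Eclipse can clearly index a method of a class, yet at runtime a method-not-found error is reported.

3. The class and method both exist and have compiled correctly into .class files; everything runs fine locally, but once deployed to the test or production environment it

throws the following exception: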

java.lang.NoClassDefFoundError: Could not in

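- [Spark 75] Integrating Spark Streaming with Flume-NG, Part 3: Hooking Up log4j

bit1129

Stream

First, some preamble:

In real work, business systems mostly write their logs to files with Log4j. The crux of the problem is that the log formats are chaotic, which makes statistical analysis of the log files extremely difficult, or rather basically impossible. Everyone has different logging habits, so log lines come in all sorts of formats, and in the end you can only grep for specific keywords to narrow things down. In a cluster, though, grepping and analyzing on every machine is as inefficient as you would expect, and a great deal of time is wasted on it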

- sudoku solver in Haskell

bookjovi

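sudoku, haskell

With not much to do these past few days, I wanted to write something practical in a functional language. Things like fib and prime aren't worth mentioning (each is a one-liner), so what should I write? Browsing the web I came across sudoku. I've known about sudoku for more than a decade, and as a student I thought about writing a smart solver in C or Java, but it never got finished, mainly because doing it in C/Java would be a lot of trouble.

These days when I write programs I have a habit of mind: I always want the program to be more compact, more refined, with the fewest lines of code, so now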

- java apache ftpClient

bro_feng

java

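I recently used Apache's ftpclient to implement FTP downloads and ran into a few problems, summarized below.

1. Uploads block: in a series of uploads, one of them blocks, or storeFile returns false. After some reading I learned that FTP has an active mode and a passive mode. Switching the transfer mode to passive with ftp.enterLocalPassiveMode(); fixed it.

Having read the explanations online, I am still fairly hazy on the difference between active and passive mode, and don't really understand passive mode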

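- Reading 《研磨设计模式》: Code Notes: Factory Method Pattern

bylijinnan

java, design patterns

Disclaimer: this post is only for my own reference and understanding; for detailed analysis and source code, please see the original author's blog http://chjavach.iteye.com/

package design.pattern;

/*

* Factory Method pattern: defers instantiation of a class to its subclasses

* Once at work I ended up using the Factory Method pattern without realizing it (Template Method would be the more accurate name. 2012-10-29):

* There are many different products, and they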

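- Interview Notes

chenyu19891124

recruiting

Perhaps, whatever you grow into on a given platform, it really does come down to your own effort. Given a good platform on which to show what you can do, you should work hard. Today was the first time I interviewed someone on my own; I felt a bit nervous and stumbled over my words. Filling in the interview assessment form afterwards was really hard, especially the part describing the candidate.

Today's candidate was a former colleague of a colleague of mine: that colleague is leaving and recommended a former coworker to interview. The candidate was interviewing for configuration management. From the résumé I initially thought they would be a good fit for it, but today's interview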

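- Fire Workflow 1.0 Final Is Finally Released

comsci

work, workflow, Google

Fire Workflow is another open-source workflow engine from China; its author is the well-known 非也, haha....

The official website is http://www.fireflow.org

Thanks to everyone's efforts, Fire Workflow 1.0 final has at last been released

Main changes in the final release:

1. Added the IWorkItem.jumpToEx(...) method, removing the restriction that the current activity and the target activity must be on the same execution line, which makes free flow even freer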

2. Added IT

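- Passing Arguments to a Python Script

daizj

python, scripts, passing arguments

If you want to pass arguments to a Python script, what is Python's counterpart to argc and argv (C's command-line arguments)?

Required module: sys

Number of arguments: len(sys.argv)

Script name: sys.argv[0]

Argument 1: sys.argv[1]

Argument 2: sys.argv[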
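
A short sketch of how these pieces fit together in a script. The helper name `describe_args` and the file name `demo.py` in the usage note are made up for illustration.

```python
import sys


def describe_args(argv):
    """Split an argv-style list into its count, script name, and parameters."""
    return {
        "count": len(argv),   # like C's argc; the script name is counted too
        "script": argv[0],    # sys.argv[0] is the script itself
        "params": argv[1:],   # sys.argv[1], sys.argv[2], ... as strings
    }


if __name__ == "__main__":
    info = describe_args(sys.argv)
    print("argument count:", info["count"])
    print("script name:", info["script"])
    for position, param in enumerate(info["params"], start=1):
        print("argument", position, "=", param)
```

Saved as `demo.py` and run as `python demo.py alpha beta`, this reports a count of 3, because the script name occupies index 0. All arguments arrive as strings, so numeric values need an explicit `int()` or `float()` conversion.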

- gpasswd: The Command for Managing User Groups

dongwei_6688

passwd

NAME: gpasswd - administer the /etc/group file

SYNOPSIS:

gpasswd group

gpasswd -a user group

gpasswd -d user group

gpasswd -R group

gpasswd -r group

gpasswd [-A user,...] [-M user,...] g

- Notes on Teacher 郝斌's Data Structures Course

dcj3sjt126com

data structures and algorithms

- Adding Checkboxes to a yii2 cgridview for Bulk Actions

dcj3sjt126com

GridView

Page code

<?=Html::beginForm(['controller/bulk'],'post');?>

<?=Html::dropDownList('action','',[''=>'Mark selected as: ','c'=>'Confirmed','nc'=>'No Confirmed'],['class'=>'dropdown',])

- linux mysql

fypop

linux

enquiry mysql version in centos linux

yum list installed | grep mysql

yum -y remove mysql-libs.x86_64

enquiry mysql version in yum repository: yum list | grep mysql or yum -y list mysql*

install mysq

- Scramble String

hcx2013

String

Given a string s1, we may represent it as a binary tree by partitioning it to two non-empty substrings recursively.

Below is one possible representation of s1 = "great":

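- 《跟我学Shiro》 Index Post

jinnianshilongnian

跟我学shiro

After about three months, the 《跟我学Shiro》 tutorial series is complete. There is nothing more to add for now, so I have produced a PDF version for download. My current project is busy, so I have no time to answer questions and cannot reply for the moment; sorry about that. You can, however, join the groups (334194438/348194195) to discuss.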

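- Rotating nginx Logs and Collecting Them with flume-ng

liyonghui160com

nginx log files have no built-in rotation. If you do nothing, the log files grow larger and larger. Fortunately, we can write an nginx log-rotation script to rotate them automatically. The first step is to rename the log file; there is no need to worry that nginx will lose logs because it cannot find the file after the rename. Until you reopen a log file under the original name, nginx keeps writing to the renamed file, because Linux locates files by file descriptor, not by filename. The second step is to send the nginx master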

- Resolving Oracle Deadlocks

pda158

oracle

select p.spid,c.object_name,b.session_id,b.oracle_username,b.os_user_name from v$process p,v$session a, v$locked_object b,all_objects c where p.addr=a.paddr and a.process=b.process and c.object_id=b.

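- Sorting a List in Java

shiguanghui

list, sorting

The List implementations defined in the Java Collection Framework are Vector, ArrayList, and LinkedList. These collections provide indexed access to groups of objects and support adding and removing elements. However, they have no built-in support for sorting elements. You can sort the elements of a List with the sort() method of the java.util.Collections class. You can pass the method either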

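- Servlets: Single Instance, Multiple Threads

utopialxw

singleton, multithreading, servlet

Reposted from http://www.cnblogs.com/yjhrem/articles/3160864.html

and http://blog.chinaunix.net/uid-7374279-id-3687149.html

Servlet: single instance, multiple threads

How does a servlet handle multiple concurrent requests? By default, the servlet container handles multiple requests with a single instance and multiple threads: 1. When the web server starts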