A Summary of KITTI Dataset Evaluation Methods


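- 1 Platform and resources
- 2 Compiling and running
- 3 File formats and directory structure
  - 3.1 Format
  - 3.2 File structure
- 4 Running
- 5 Code
- 6 Notes
  - 1) Choosing between AP_R11 and AP_R40
  - 2) Choosing MIN_OVERLAP
- 7 Life-saving Python verification code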

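I recommend reading the whole post first, to understand what needs to be modified and how the files are organized, before trying anything, because some steps involve code changes. You can either take the original route (.cpp code on Linux) or evaluate directly with the Python code, which is more convenient and elegant.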

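1 Platform and resources

On Windows I tried compiling with Visual Studio 2022, downloading and installing CMake, working from cmd, and so on. Every attempt failed with either header-file errors or a missing Boost, so I will skip the details. Only afterwards did I learn that many of the missing packages actually come with Linux systems. In the end I bought a one-year Ubuntu instance on Tencent Cloud, which happened to be on sale for the Spring Festival, haha.

Links: (1) the evaluate_object_3d_offline files that are widely available on GitHub, and (2) the evaluate_object files modified by a CSDN blogger, which are what I mainly used later. Either one works.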

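2 Compiling and running

To compile, run the commands below in the directory that contains the .cpp and .h files. I mainly followed the approach of the author of link (2):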

cmake .

make

g++ -O3 -DNDEBUG -o evaluate_object evaluate_object.cpp

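Go one step at a time. Almost every step will throw an error; whatever the error or the missing package, just search online for how to install that package on Ubuntu (I am a beginner too).

Once compilation succeeds, it produces an evaluate_object file with no extension. All that remains is data preparation (which can be done beforehand) and running. Also, I only used cmake . and make the first time; when recompiling later I found that the third command alone was enough.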

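3 File formats and directory structure

Before running, we still need to prepare the data: labels and results.

3.1 Format

The labels are label_2 from the KITTI dataset. Select the N test files you need, e.g. 3769. The format is as follows: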

| type | truncated | occluded | alpha | bbox | dimensions | location | rotation_y |

|---|---|---|---|---|---|---|---|

| Pedestrian | -1 | -1 | 0.29 | 873.70 152.10 933.44 256.07 | 1.87 0.50 0.90 | 5.42 1.50 13.43 | 0.67 |

The prediction result format is as follows (note that the last number is the score, which appears only in prediction results):

| type | truncated | occluded | alpha | bbox | dimensions | location | rotation_y | score |

|---|---|---|---|---|---|---|---|---|

| Pedestrian | -1 | -1 | 0.29 | 873.70 152.10 933.44 256.07 | 1.87 0.50 0.90 | 5.42 1.50 13.43 | 0.67 | 0.99 |
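To make the result format concrete, here is a minimal sketch of writing one detection as a result line. The function name `format_kitti_result` and the two-decimal precision are my own assumptions for illustration, not part of any KITTI tool; truncated and occluded default to -1 as in the example row above.

```python
def format_kitti_result(obj_type, alpha, bbox, dimensions, location,
                        rotation_y, score, truncated=-1, occluded=-1):
    """Return one space-separated line in the result format shown above.

    Hypothetical helper: the field order follows the table, the two-decimal
    formatting is an assumption made for readability.
    """
    fields = [obj_type, str(truncated), str(occluded), f"{alpha:.2f}"]
    fields += [f"{v:.2f}" for v in bbox]        # left, top, right, bottom (pixels)
    fields += [f"{v:.2f}" for v in dimensions]  # height, width, length (meters)
    fields += [f"{v:.2f}" for v in location]    # x, y, z in camera coordinates
    fields += [f"{rotation_y:.2f}", f"{score:.2f}"]
    return " ".join(fields)


line = format_kitti_result(
    "Pedestrian", 0.29,
    (873.70, 152.10, 933.44, 256.07),
    (1.87, 0.50, 0.90),
    (5.42, 1.50, 13.43),
    0.67, 0.99,
)
print(line)
```

Each detection becomes one such line, and all detections of a frame go into the .txt file named after that frame.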

The detection format should be similar to the KITTI dataset label format with 15 columns representing:

| Values | Name | Description |

|---|---|---|

| 1 | type | Describes the type of object: ‘Car’, ‘Van’, ‘Truck’, ‘Pedestrian’, ‘Person_sitting’, ‘Cyclist’, ‘Tram’, ‘Misc’ or ‘DontCare’ |

| 1 | truncated | -1 |

| 1 | occluded | -1 |

| 1 | alpha | Observation angle of object, ranging [-pi…pi] |

| 4 | bbox | 2D bounding box of object in the image (0-based index): contains left, top, right, bottom pixel coordinates |

| 3 | dimensions | 3D object dimensions: height, width, length (in meters) |

| 3 | location | 3D object location x,y,z in camera coordinates (in meters) |

| 1 | rotation_y | Rotation ry around Y-axis in camera coordinates [-pi…pi] |

| 1 | score | Only for results: Float, indicating confidence in detection, needed for p/r curves, higher is better. |
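As a sanity check on your own files, the field layout in the table can be sketched as a small parser. `KittiObject` and `parse_kitti_line` are hypothetical names introduced here; the sketch assumes fields are separated by whitespace, that a label line has 15 fields, and that a result line has one extra field, the score.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class KittiObject:
    type: str
    truncated: float
    occluded: int
    alpha: float
    bbox: Tuple[float, ...]        # left, top, right, bottom
    dimensions: Tuple[float, ...]  # height, width, length
    location: Tuple[float, ...]    # x, y, z in camera coordinates
    rotation_y: float
    score: Optional[float] = None  # present only in result files


def parse_kitti_line(line):
    """Parse one label line (15 fields) or result line (16 fields)."""
    parts = line.split()
    if len(parts) not in (15, 16):
        raise ValueError(f"expected 15 or 16 fields, got {len(parts)}")
    values = [float(p) for p in parts[1:]]
    return KittiObject(
        type=parts[0],
        truncated=values[0],
        occluded=int(values[1]),
        alpha=values[2],
        bbox=tuple(values[3:7]),
        dimensions=tuple(values[7:10]),
        location=tuple(values[10:13]),
        rotation_y=values[13],
        score=values[14] if len(values) == 15 else None,
    )


label = parse_kitti_line(
    "Pedestrian -1 -1 0.29 873.70 152.10 933.44 256.07 1.87 0.50 0.90 5.42 1.50 13.43 0.67"
)
result = parse_kitti_line(
    "Pedestrian -1 -1 0.29 873.70 152.10 933.44 256.07 1.87 0.50 0.90 5.42 1.50 13.43 0.67 0.99"
)
print(label.score, result.score)
```

A line with any other field count raises a ValueError, which is a quick way to catch a result file that is missing its score column.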

3.2 File structure

evaluate_object

evaluate_object.cpp

mail.h

label

--000001.txt

--000002.txt

-- ...

results

--data

----000001.txt

----000002.txt

---- ...
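Before running the evaluator, it can help to confirm that the two directories line up. The sketch below assumes that label files sit directly in `label`, that result files sit in `results/data`, and that the two sets are paired by file name; `check_eval_layout` is a hypothetical helper, not part of the evaluation code.

```python
import tempfile
from pathlib import Path


def check_eval_layout(label_dir, results_dir):
    """Return (labels without a result file, result files without a label)."""
    label_ids = {p.stem for p in Path(label_dir).glob("*.txt")}
    result_ids = {p.stem for p in (Path(results_dir) / "data").glob("*.txt")}
    return sorted(label_ids - result_ids), sorted(result_ids - label_ids)


# Small demonstration on a throwaway directory tree.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "label").mkdir()
    (root / "results" / "data").mkdir(parents=True)
    for name in ("000001.txt", "000002.txt"):
        (root / "label" / name).touch()
    (root / "results" / "data" / "000001.txt").touch()

    missing, extra = check_eval_layout(root / "label", root / "results")
    print("missing results:", missing)
    print("results without labels:", extra)
```

On a real setup you would call `check_eval_layout("label", "results")` from the directory that holds the evaluate_object binary.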

4 Running

./evaluate_object label results

On success, a plot folder and several .txt files are generated under the results directory. I usually only check result.txt for my records.

5 Code

See blog post (2).

6 Notes

1) Choosing between AP_R11 and AP_R40

You may have noticed this note at the bottom of the KITTI website:

Note 2: On 08.10.2019, we have followed the suggestions of the Mapillary team in their paper Disentangling Monocular 3D Object Detection and use 40 recall positions instead of the 11 recall positions proposed in the original Pascal VOC benchmark. This results in a more fair comparison of the results, please check their paper. The last leaderboards right before this change can be found here: Object Detection Evaluation, 3D Object Detection Evaluation, Bird’s Eye View Evaluation.

In the code referenced above, the saveAndPlotPlots function contains this snippet:

float sum[3] = {0, 0, 0};

static float results[3] = {0, 0, 0};

for (int v = 0; v < 3; v++)

for (int i = 1; i < vals[v].size(); i++) // 1, 2, 3, ..., 40, 40 points in total

sum[v] += vals[v][i];

/*

if (N_SAMPLE_PTS==11) {

for (int v = 0; v < 3; ++v)

for (int i = 0; i < vals[v].size(); i = i + 4)

sum[v] += vals[v][i];

} else {

for (int v = 0; v < 3; ++v)

for (int i = 1; i < vals[v].size(); i = i + 1)

sum[v] += vals[v][i];

}

*/

for (int i = 0; i < 3; i++)

results[i] = sum[i] / (N_SAMPLE_PTS-1.0) * 100;

printf("%s AP: %f %f %f\n", file_name.c_str(), sum[0] / (N_SAMPLE_PTS-1.0) * 100, sum[1] / (N_SAMPLE_PTS-1.0) * 100, sum[2] / (N_SAMPLE_PTS-1.0) * 100);

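This is the code that computes mAP with 40-point interpolation. To get the 11-point interpolated result instead, change it to the following (only this part needs to change, nothing else):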

float sum[3] = {0, 0, 0};

static float results[3] = {0, 0, 0};

for (int v = 0; v < 3; v++)

for (int i = 0; i < vals[v].size(); i=i+4) // 0, 4, 8, ..., 40, 11 points in total

sum[v] += vals[v][i];

for (int i = 0; i < 3; i++)

results[i] = sum[i] / 11 * 100; // note: divide by 11

printf("%s AP: %f %f %f\n", file_name.c_str(), sum[0] / 11 * 100, sum[1] / 11 * 100, sum[2] / 11 * 100);

Work through it by hand once, and you will have a rough idea of how it is computed.
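The two C++ variants above can be mirrored in a few lines of Python. This is a sketch under the assumption, taken from the loop comments, that each `vals[v]` holds 41 precision values indexed 0 to 40; `average_precision` is a name I made up for illustration.

```python
def average_precision(precisions, num_points=40):
    """Average 41 sampled precision values the way the two C++ variants do.

    num_points=40 uses indices 1..40 and divides by 40;
    num_points=11 uses indices 0, 4, ..., 40 and divides by 11.
    """
    if len(precisions) != 41:
        raise ValueError("expected 41 precision values (indices 0..40)")
    if num_points == 40:
        picked = precisions[1:]
    elif num_points == 11:
        picked = precisions[0::4]
    else:
        raise ValueError("num_points must be 11 or 40")
    return sum(picked) / len(picked) * 100


# Toy curve: precision is 1.0 at indices 0..20 and 0.0 afterwards.
toy = [1.0] * 21 + [0.0] * 20
ap_r40 = average_precision(toy, 40)  # 20 of 40 points are 1.0
ap_r11 = average_precision(toy, 11)  # 6 of 11 points are 1.0
print(ap_r40, ap_r11)
```

On this toy curve the 11-point value is higher than the 40-point value, partly because the 11-point scheme counts the point at index 0 while the 40-point scheme skips it. The same precision curve can therefore give different AP numbers depending on the sampling.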

2) Choosing MIN_OVERLAP

The evaluate_object.cpp file contains this line of code.

// the minimum overlap required for 2D evaluation on the image/ground plane and 3D evaluation

const double MIN_OVERLAP[3][3] = {{0.7, 0.5, 0.5}, {0.5, 0.25, 0.25}, {0.5, 0.25, 0.25}};

// const double MIN_OVERLAP[3][3] = {{0.7, 0.5, 0.5}, {0.7, 0.5, 0.5}, {0.7, 0.5, 0.5}};

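The IoU thresholds on the first line give somewhat better results than those on the second line. Some papers use the first line without telling the reader. I initially used the default second line, which made me doubt my evaluation files, emmm.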
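To see why the first line scores higher, here is a small sketch of the threshold lookup. It assumes that the rows of MIN_OVERLAP are the metrics (2D image, ground plane, 3D) and the columns are the classes (Car, Pedestrian, Cyclist), and that a detection matches when its overlap is strictly greater than the threshold. The names `RELAXED`, `STRICT`, and `is_match` are mine, not from evaluate_object.cpp.

```python
METRICS = ("image", "ground", "3d")           # assumed row order
CLASSES = ("Car", "Pedestrian", "Cyclist")    # assumed column order

# First (uncommented) line in the snippet above.
RELAXED = [[0.7, 0.5, 0.5], [0.5, 0.25, 0.25], [0.5, 0.25, 0.25]]
# Second (commented-out) line in the snippet above.
STRICT = [[0.7, 0.5, 0.5], [0.7, 0.5, 0.5], [0.7, 0.5, 0.5]]


def is_match(iou, metric, cls, table):
    """True if the overlap exceeds the threshold for this metric and class."""
    return iou > table[METRICS.index(metric)][CLASSES.index(cls)]


# A car box with 3D IoU 0.6 counts as a hit only under the relaxed table.
print(is_match(0.6, "3d", "Car", RELAXED))    # True
print(is_match(0.6, "3d", "Car", STRICT))     # False
# The 2D image thresholds are the same in both tables.
print(is_match(0.75, "image", "Car", RELAXED), is_match(0.75, "image", "Car", STRICT))
```

Since only the ground-plane and 3D rows differ, switching tables changes the BEV and 3D AP but leaves the 2D bbox AP untouched.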

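========== Later addition:

7 Life-saving Python verification code

All I can say is: wow, this is great! I have put the code on both my GitHub and my Gitee, so it is reachable from domestic and international networks alike. Addresses: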

1. https://github.com/fyancy/kitti_evaluation_codes

2. https://gitee.com/fyancy/kitti_evaluation_codes

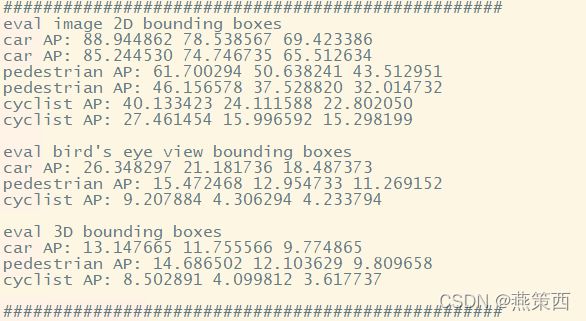

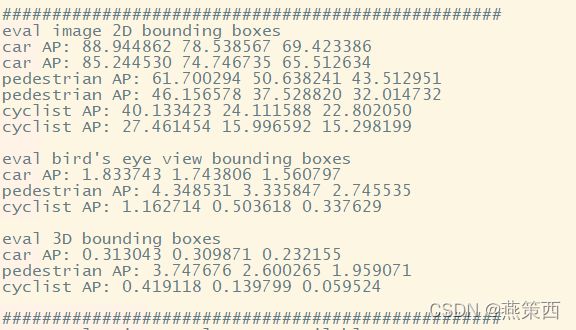

Below is a comparison between the results of the C++ code and OpenPCDet's Python code (there are differences, but nothing significant):

| Implementation | IoU | Sampling |
|---|---|---|
| C++ | iou=0.5 | 40-point interpolation |
| OpenPCDet | iou=0.5 | 40-point interpolation |

****Car AP@0.70, 0.50, 0.50:

bbox AP:90.5915, 78.5379, 69.4228

bev AP:26.3483, 21.1817, 18.4874

3d AP:13.1477, 11.7556, 9.7749

aos AP:86.79, 74.75, 65.51

****Pedestrian AP@0.50, 0.25, 0.25:

bbox AP:61.7003, 50.6382, 43.5130

bev AP:15.4725, 12.9547, 11.2692

3d AP:14.6865, 12.1036, 9.8097

aos AP:46.16, 37.53, 32.01

****Cyclist AP@0.50, 0.25, 0.25:

bbox AP:40.1334, 24.1116, 22.8021

bev AP:9.2079, 4.3063, 4.2338

3d AP:8.5029, 4.0998, 3.6177

aos AP:27.46, 16.00, 15.30

| Implementation | IoU | Sampling |
|---|---|---|
| C++ | iou=0.7 | 40-point interpolation |
| OpenPCDet | iou=0.7 | 40-point interpolation |

****Car AP@0.70, 0.70, 0.70:

bbox AP:90.5915, 78.5379, 69.4228

bev AP:1.8337, 1.7438, 1.5608

3d AP:0.3130, 0.3099, 0.2322

aos AP:86.79, 74.75, 65.51

****Pedestrian AP@0.50, 0.50, 0.50:

bbox AP:61.7003, 50.6382, 43.5130

bev AP:4.3485, 3.3358, 2.7455

3d AP:3.7477, 2.6003, 1.9591

aos AP:46.16, 37.53, 32.01

****Cyclist AP@0.50, 0.50, 0.50:

bbox AP:40.1334, 24.1116, 22.8021

bev AP:1.1627, 0.5036, 0.3376

3d AP:0.4191, 0.1398, 0.0595

aos AP:27.46, 16.00, 15.30

| Implementation | IoU | Sampling |
|---|---|---|
| C++ | iou=0.5 | 11-point interpolation |
| OpenPCDet | iou=0.5 | 11-point interpolation |

####Car AP@0.70, 0.50, 0.50:

bbox AP:86.1249, 76.4279, 68.0968

bev AP:32.1401, 26.3529, 22.4785

3d AP:19.2953, 18.1446, 15.6865

aos AP:82.88, 73.04, 64.68

####Pedestrian AP@0.50, 0.25, 0.25:

bbox AP:59.6004, 51.0441, 42.6922

bev AP:19.7851, 16.6067, 15.2265

3d AP:19.1647, 15.9138, 14.6982

aos AP:46.12, 39.23, 33.18

####Cyclist AP@0.50, 0.25, 0.25:

bbox AP:43.5243, 26.8833, 26.7038

bev AP:14.7729, 10.8957, 10.6765

3d AP:14.4502, 10.7494, 10.5691

aos AP:32.11, 20.45, 20.40