Getting started with PyTorch: tensorboard, transforms, Dataset, DataLoader, model, train, test

Contents

- tensorboard

- transforms

- Dataset

- Dataloader

- model

- train

- test

tensorboard

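import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# CIFAR10 test split converted to tensors (download=True fetches it on the first run)
data_test = torchvision.datasets.CIFAR10("./CIFAR10", train=False,
                                         transform=torchvision.transforms.ToTensor(), download=True)
data_loader = DataLoader(dataset=data_test, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

writer = SummaryWriter("logs")  # "logs" is the directory the event files are written to

# Log a scalar curve: tag, y value, x value (global step)
for i in range(100):
    writer.add_scalar("y=2x", i * 2, i)

# Log every batch of images (shape N x C x H x W) as one step of the "images" tag
step = 0
for imgs, labels in data_loader:
    writer.add_images("images", imgs, step)
    step += 1

writer.close()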

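Start TensorBoard from a terminal, passing the log directory to --logdir (a relative path such as logs also works when run from the project folder):

tensorboard --logdir E:\桌面\pytorch\logs

Then open http://localhost:6006 (the default port) in a browser.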
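Since step grows by one per batch, the images tag ends up with one entry per batch. The helper below is a plain-Python sketch of that count for the CIFAR10 test split (10,000 images); the name batch_layout is made up for illustration and is not a torch API.

```python
def batch_layout(num_samples, batch_size, drop_last=False):
    """Return (number of batches, size of the last batch) for one pass over a dataset."""
    full, rest = divmod(num_samples, batch_size)
    if rest == 0 or drop_last:
        # every batch is full, or the short tail is discarded
        return full, (batch_size if full else 0)
    return full + 1, rest

# CIFAR10 test split: 10,000 images, batch_size=64, drop_last=False
num_batches, last_size = batch_layout(10_000, 64)
print(num_batches, last_size)  # 157 16 -> the "images" tag gets steps 0..156
```

With drop_last=True the same call would give 156 full batches and the last 16 images would never be logged.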


transforms

See also: A Collection of Common Image Processing and Data Augmentation Methods (《常用图像处理与数据增强方法合集》)
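Normalize works channel by channel as output = (input - mean) / std. Because ToTensor has already scaled the pixels to [0, 1], mean = std = 0.5 maps that range to [-1, 1]. A plain-Python sketch of the arithmetic; the normalize helper and its list-of-channels input are illustrative assumptions, not the torchvision implementation.

```python
def normalize(channels, mean, std):
    """Per-channel (x - mean) / std, the formula transforms.Normalize applies.

    channels: one flat list of pixel values per channel, each value in [0, 1]
    (the range ToTensor produces).
    """
    return [[(x - m) / s for x in ch] for ch, m, s in zip(channels, mean, std)]

# three identical channels holding a black, a mid-grey and a white pixel
pixels = [[0.0, 0.5, 1.0]] * 3
print(normalize(pixels, [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]))
# [[-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0]]
```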

from PIL import Image
from torchvision import transforms
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")

image_path = "./data/ants/5650366_e22b7e1065.jpg"
image = Image.open(image_path).convert("RGB")  # force 3 channels so the 3-value mean/std of Normalize fits

# ToTensor: PIL image (H x W x C, 0-255) -> float tensor (C x H x W, 0.0-1.0)
trans_totensor = transforms.ToTensor()
image_tensor = trans_totensor(image)
writer.add_image("tensor", image_tensor)

# Normalize: per channel, output = (input - mean) / std; here [0, 1] -> [-1, 1]
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
image_norm = trans_norm(image_tensor)
writer.add_image("Normalize", image_norm)

# Resize with a (height, width) pair: the output is exactly 1000 x 300
trans_resize = transforms.Resize((1000, 300))
image_resize = trans_resize(image)
image_resize_tensor = trans_totensor(image_resize)
writer.add_image("resize", image_resize_tensor, 0)

# Compose chains transforms; Resize(512) scales the shorter side to 512 and keeps the aspect ratio
trans_resize2 = transforms.Resize(512)
trans_compose = transforms.Compose([trans_resize2, trans_totensor])
image_resize2 = trans_compose(image)
writer.add_image("resize", image_resize2, 1)

# RandomCrop: size is (height, width); RandomCrop(100, 200) would mean a 100 x 100 crop with padding=200
trans_crop = transforms.RandomCrop((100, 200))
trans_compose = transforms.Compose([trans_crop, trans_totensor])
for i in range(10):
    image_crop = trans_compose(image)
    writer.add_image("crop", image_crop, i)

writer.close()

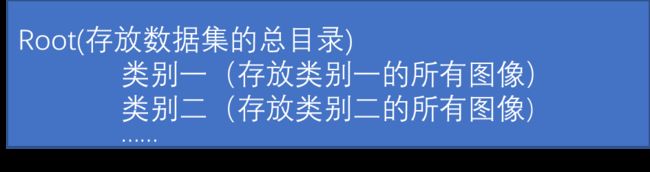
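RandomCrop((100, 200)) keeps the crop size fixed and only randomizes its position. The sketch below mirrors that idea in plain Python: the top-left corner is drawn uniformly so the whole box stays inside the image. random_crop_box is a hypothetical helper and the 375 x 500 image size is an assumption; this illustrates the logic and is not torchvision's code.

```python
import random

def random_crop_box(img_h, img_w, crop_h, crop_w, rng=random):
    """Pick a random (top, left, height, width) box that lies fully inside an img_h x img_w image."""
    if crop_h > img_h or crop_w > img_w:
        raise ValueError("crop is larger than the image")
    top = rng.randint(0, img_h - crop_h)    # randint includes both end points
    left = rng.randint(0, img_w - crop_w)
    return top, left, crop_h, crop_w

rng = random.Random(0)  # fixed seed so the demo is repeatable
for _ in range(3):
    print(random_crop_box(375, 500, 100, 200, rng))
```

When the crop is as large as the image there is only one possible position, (0, 0).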

Dataset

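First, what a Dataset is for: it pairs each sample with its label so that the training data can be fetched by index (dataset[i] returns the i-th sample and its label). A map-style Dataset implements __getitem__ and __len__.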
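Stripped of file loading, a map-style dataset is just an object with __len__ and __getitem__. The plain-Python sketch below (PairDataset and the file names are hypothetical) also maps class names to integer indices, which is the label form nn.CrossEntropyLoss expects; the example that follows returns the folder-name string as the label instead.

```python
class PairDataset:
    """Minimal map-style dataset: __len__ plus __getitem__(index) -> (sample, label)."""

    def __init__(self, samples, labels, classes):
        assert len(samples) == len(labels)
        self.samples = samples
        self.labels = labels
        # class name -> integer index, e.g. {"ants": 0, "bees": 1}
        self.class_to_idx = {name: i for i, name in enumerate(classes)}

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, index):
        return self.samples[index], self.class_to_idx[self.labels[index]]

ds = PairDataset(["a1.jpg", "a2.jpg", "b1.jpg"], ["ants", "ants", "bees"], ["ants", "bees"])
print(len(ds), ds[2])  # 3 ('b1.jpg', 1)
```

In PyTorch the class would subclass torch.utils.data.Dataset and load the image inside __getitem__.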

A concrete example, with the ants and bees images of hymenoptera_data stored in one folder per class:

import os
from PIL import Image
from torch.utils.data import Dataset
from torchvision import transforms

class mydata(Dataset):
    def __init__(self, root_dir, label):
        self.root_dir = root_dir
        self.label_dir = label
        # To index the images we need the folder location and the list of files in it
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.image_list = sorted(os.listdir(self.path))  # sorted for a stable index -> file order
        # Build the transform once instead of on every __getitem__ call
        self.trans = transforms.Compose([transforms.Resize((300, 300)), transforms.ToTensor()])

    def __getitem__(self, index):
        image_name = self.image_list[index]
        image_path = os.path.join(self.path, image_name)
        image = Image.open(image_path).convert("RGB")
        image_tensor = self.trans(image)
        label = self.label_dir  # the folder name ("ants" / "bees") doubles as the label
        return image_tensor, label

    def __len__(self):
        return len(self.image_list)

if __name__ == "__main__":
    root_dir = "./hymenoptera_data/train"
    ants_data = mydata(root_dir, "ants")
    bees_data = mydata(root_dir, "bees")
    train_data = ants_data + bees_data  # Dataset.__add__ returns a ConcatDataset
    print(len(train_data))
    print(ants_data[0][0].shape)  # torch.Size([3, 300, 300])
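ants_data + bees_data works because Dataset defines __add__, which returns a torch.utils.data.ConcatDataset. Roughly, that class keeps running totals of the sub-dataset lengths and bisects them to route a global index to the right dataset. A plain-Python sketch of the idea; ConcatSketch is a made-up name and two lists stand in for the datasets.

```python
import bisect
from itertools import accumulate

class ConcatSketch:
    """Index routing in the spirit of torch.utils.data.ConcatDataset."""

    def __init__(self, *datasets):
        self.datasets = datasets
        # running totals of the lengths, e.g. [3, 5] for lengths 3 and 2
        self.cumulative_sizes = list(accumulate(len(d) for d in datasets))

    def __len__(self):
        return self.cumulative_sizes[-1] if self.cumulative_sizes else 0

    def __getitem__(self, idx):
        if idx < 0:
            idx += len(self)
        if not 0 <= idx < len(self):
            raise IndexError(idx)
        d = bisect.bisect_right(self.cumulative_sizes, idx)  # which sub-dataset
        offset = self.cumulative_sizes[d - 1] if d > 0 else 0
        return self.datasets[d][idx - offset]

ants = ["ant0", "ant1", "ant2"]
bees = ["bee0", "bee1"]
both = ConcatSketch(ants, bees)
print(len(both), both[2], both[3], both[-1])  # 5 ant2 bee0 bee1
```

So the first len(ants_data) indices of train_data are ants and the rest are bees; shuffling in the DataLoader mixes them.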

Dataloader

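import torchvision
from torch.utils.data import DataLoader

data_test = torchvision.datasets.CIFAR10("./CIFAR10", train=False,
                                         transform=torchvision.transforms.ToTensor(), download=True)
# batch_size: samples per batch; shuffle: new order every epoch;
# num_workers=0: load in the main process; drop_last=False: keep the final, smaller batch
data_loader = DataLoader(dataset=data_test, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

imgs, labels = next(iter(data_loader))
print(imgs.shape, labels.shape)  # torch.Size([64, 3, 32, 32]) torch.Size([64])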
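With shuffle=True the loader draws a fresh random order of indices each epoch and cuts it into batch_size chunks; drop_last decides what happens to the short tail. A simplified plain-Python sketch of that index bookkeeping; batch_indices is hypothetical, and the real DataLoader delegates this to its sampler and batch sampler and also loads and collates the samples.

```python
import random

def batch_indices(n, batch_size, shuffle=True, drop_last=False, seed=None):
    """Yield lists of dataset indices, grouped the way a shuffling DataLoader groups them."""
    order = list(range(n))
    if shuffle:
        random.Random(seed).shuffle(order)  # the real loader draws a new permutation every epoch
    for start in range(0, n, batch_size):
        batch = order[start:start + batch_size]
        if drop_last and len(batch) < batch_size:
            break  # discard the incomplete final batch
        yield batch

batches = list(batch_indices(10, 4, seed=0))
print([len(b) for b in batches])  # [4, 4, 2]
```

Every index appears exactly once per epoch, so shuffling changes the order and the batch composition but not which samples are seen.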

model

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter

# Approach 1: declare every layer as an attribute and call them one by one in forward()
class mymodel1(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = Conv2d(3, 32, 5, stride=1, padding=2)
        self.maxpool1 = MaxPool2d(2)
        self.conv2 = Conv2d(32, 32, 5, stride=1, padding=2)
        self.maxpool2 = MaxPool2d(2)
        self.conv3 = Conv2d(32, 64, 5, stride=1, padding=2)
        self.maxpool3 = MaxPool2d(2)
        self.flatten = Flatten()
        self.linear1 = Linear(1024, 64)  # 64 channels x 4 x 4 after three poolings of a 32 x 32 input
        self.linear2 = Linear(64, 10)    # one output per CIFAR10 class

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x

# Approach 2: the same layers wrapped in nn.Sequential
class mymodel2(nn.Module):
    def __init__(self):
        super().__init__()
        self.compos = Sequential(
            Conv2d(3, 32, 5, stride=1, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, stride=1, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, stride=1, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10),
        )

    def forward(self, x):
        return self.compos(x)

mymodel = mymodel2()
print(mymodel)

dummy_input = torch.ones(64, 3, 32, 32)  # a fake batch of 64 RGB 32 x 32 images
writer = SummaryWriter("logs_seq")
writer.add_graph(mymodel, dummy_input)  # shows the network in TensorBoard's Graphs tab
writer.close()

print(mymodel(dummy_input).shape)  # torch.Size([64, 10])
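Linear(1024, 64) only fits because of the shape arithmetic: each Conv2d with kernel 5, stride 1 and padding 2 keeps the spatial size, and each MaxPool2d(2) halves it, so a 32 x 32 input ends as 64 x 4 x 4 = 1024 features. The sketch below redoes that bookkeeping in plain Python and also counts the parameters (weights plus biases). conv2d_out and trace_mymodel are illustrative helpers, and the layer list is hard-coded from mymodel2.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Output size of Conv2d / MaxPool2d along one axis (dilation 1): floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_mymodel(h=32, w=32):
    c, params = 3, 0
    for out_c in (32, 32, 64):                   # the three Conv2d(in, out, 5, stride=1, padding=2) layers
        params += c * out_c * 5 * 5 + out_c      # conv weights + biases
        h, w = conv2d_out(h, 5, 1, 2), conv2d_out(w, 5, 1, 2)
        h, w = conv2d_out(h, 2, 2), conv2d_out(w, 2, 2)  # MaxPool2d(2): stride defaults to the kernel size
        c = out_c
    flat = c * h * w                              # what Flatten hands to the first Linear
    params += flat * 64 + 64 + 64 * 10 + 10       # Linear(flat, 64) and Linear(64, 10)
    return (c, h, w), flat, params

print(trace_mymodel())  # ((64, 4, 4), 1024, 145578)
```

Feeding a 64 x 64 image instead would give 4096 features, and the first Linear would raise a shape error.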

train
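Each iteration of the training loop is forward pass, loss, optim.zero_grad(), backward(), optim.step(). For plain SGD (lr=0.01, no momentum or weight decay, as configured here) the step is w <- w - lr * grad, and zero_grad() is needed because backward() adds into the existing .grad. A plain-Python sketch of the update rule on a toy one-parameter objective; f(w) = (w - 3)^2 and the sgd helper are assumptions chosen for illustration.

```python
def sgd(grad_fn, w, lr=0.01, steps=1000):
    """Plain gradient descent: repeat w <- w - lr * grad(w)."""
    for _ in range(steps):
        w = w - lr * grad_fn(w)
    return w

# f(w) = (w - 3)^2 has gradient 2 * (w - 3) and its minimum at w = 3
w = sgd(lambda w: 2 * (w - 3), w=0.0, lr=0.01)
print(round(w, 6))  # 3.0
```

Here each step shrinks the distance to 3 by a factor of 0.98; a larger lr converges faster on this toy problem but can diverge on a real network.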

import os
import torch
import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.optim import SGD
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Choose the training device
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

train_data = torchvision.datasets.CIFAR10("./CIFAR10", train=True,
                                          transform=torchvision.transforms.ToTensor(), download=True)
test_data = torchvision.datasets.CIFAR10("./CIFAR10", train=False,
                                         transform=torchvision.transforms.ToTensor(), download=True)

# Print the dataset sizes
len_train = len(train_data)
len_test = len(test_data)
print("Training set size: {}  Test set size: {}".format(len_train, len_test))

train_dataloader = DataLoader(train_data, batch_size=64, shuffle=True)  # reshuffle training data every epoch
test_dataloader = DataLoader(test_data, batch_size=64)

writer = SummaryWriter("./logs_train")

class mymodel(nn.Module):
    def __init__(self):
        super().__init__()
        self.compos = Sequential(
            Conv2d(3, 32, 5, stride=1, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, stride=1, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, stride=1, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10),
        )

    def forward(self, x):
        return self.compos(x)

model = mymodel().to(device)

# Step counters for TensorBoard
train_step = 0
test_step = 0

loss_fn = nn.CrossEntropyLoss().to(device)
optim = SGD(model.parameters(), lr=0.01)
epoch = 20
os.makedirs("./model", exist_ok=True)  # torch.save does not create missing folders

for i in range(epoch):
    print("---------- Epoch {} training starts ----------".format(i + 1))
    model.train()
    for imgs, labels in train_dataloader:
        imgs = imgs.to(device)
        labels = labels.to(device)
        output = model(imgs)
        result_loss = loss_fn(output, labels)

        optim.zero_grad()       # clear the gradients from the previous step
        result_loss.backward()  # compute new gradients
        optim.step()            # update the weights

        train_step += 1
        if train_step % 100 == 0:
            writer.add_scalar("train_loss", result_loss.item(), train_step)
            print("Step {} loss: {}".format(train_step, result_loss.item()))

    # Evaluate on the test set
    model.eval()
    total_acc = 0
    total_loss = 0.0
    with torch.no_grad():
        for imgs, labels in test_dataloader:
            imgs = imgs.to(device)
            labels = labels.to(device)
            output = model(imgs)
            total_loss += loss_fn(output, labels).item()  # sum of per-batch mean losses
            total_acc += (output.argmax(1) == labels).sum().item()
    print("Total loss on the test set: {}".format(total_loss))
    print("Accuracy on the test set: {}".format(total_acc / len_test))
    writer.add_scalar("test_loss", total_loss, test_step)
    writer.add_scalar("test_acc", total_acc / len_test, test_step)
    test_step += 1

    # Save the trained instance's weights; loading them requires the same mymodel class
    torch.save(model.state_dict(), "./model/mymodel_{}.pth".format(i))

writer.close()
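Two details of the evaluation code: nn.CrossEntropyLoss returns the batch mean by default, so total_loss is a sum of 157 batch means rather than an average, and accuracy is the number of correct argmax predictions divided by len_test. A plain-Python sketch of both computations; cross_entropy and batch_mean_loss_and_correct are illustrative names and the logits are made up.

```python
import math

def cross_entropy(logits, target):
    """-log(softmax(logits)[target]), computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def batch_mean_loss_and_correct(batch_logits, targets):
    """Mean loss over the batch and the number of correct argmax predictions."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    preds = [max(range(len(z)), key=z.__getitem__) for z in batch_logits]
    correct = sum(p == t for p, t in zip(preds, targets))
    return sum(losses) / len(losses), correct

# uniform logits over 10 classes give ln(10)
print(round(cross_entropy([0.0] * 10, 3), 4))  # 2.3026
loss, correct = batch_mean_loss_and_correct([[2.0, 1.0, 0.0], [0.0, 3.0, 1.0]], [0, 2])
print(loss, correct)
```

A freshly initialized 10-class model usually starts with a loss roughly around ln 10 ≈ 2.30, which makes a handy sanity check for the first train_loss values.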

test

import torch
import torchvision
from PIL import Image
from torch import nn

classes = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

image_path = "./test_image/airplan.jpg"
image = Image.open(image_path).convert("RGB")  # drop any alpha channel, e.g. from PNG screenshots

# Same preprocessing as in training: 32 x 32 and ToTensor, no Normalize
transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
image = torch.reshape(image, (1, 3, 32, 32))  # add the batch dimension
print(image.shape)

# The class definition must match the one used for training
class mymodel(nn.Module):
    def __init__(self):
        super().__init__()
        self.compos = nn.Sequential(
            nn.Conv2d(3, 32, 5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(1024, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        return self.compos(x)

model = mymodel()
# Weights saved by the training script (adjust the path to the checkpoint you want);
# map_location="cpu" lets weights trained on a GPU load on a CPU-only machine
model.load_state_dict(torch.load("mymodel_49.pth", map_location="cpu"))
print(model)

model.eval()
with torch.no_grad():
    output = model(image)
label = output.argmax(1).item()
print(classes[label])
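argmax only gives the top label. To also see how confident the model is, apply softmax to the output row; in PyTorch that would be torch.softmax(output, dim=1) followed by torch.topk. The same computation in plain Python; top_k is a hypothetical helper and the logits are invented, not real model output.

```python
import math

CIFAR10_CLASSES = ['airplane', 'automobile', 'bird', 'cat', 'deer',
                   'dog', 'frog', 'horse', 'ship', 'truck']

def top_k(logits, classes, k=3):
    """Turn one row of raw model outputs into the k most likely (class, probability) pairs."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(classes[i], probs[i]) for i in ranked[:k]]

fake_logits = [4.0, 1.0, 0.5, 0.2, 0.1, 0.0, -0.3, 0.4, 2.0, 1.5]  # made-up numbers
for name, p in top_k(fake_logits, CIFAR10_CLASSES):
    print(f"{name}: {p:.3f}")
```

Softmax does not change the ranking, so the first entry is always the class argmax picks.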