Using Hadoop MapReduce to Find the Minimum Temperature in NCDC Weather Data

I. Download, Extract, Merge, and Upload the Data

Before any processing we need the data itself, so start by downloading it:

cd /home/hadoop

mkdir temp # create a directory to hold the data

cd temp

wget ftp://ftp.ncdc.noaa.gov/pub/data/gsod/2016/gsod_2016.tar

wget ftp://ftp.ncdc.noaa.gov/pub/data/gsod/2017/gsod_2017.tar

Extract the 2016 and 2017 archives. Each .tar file contains one gzip-compressed file per weather station:

tar -xvf gsod_2016.tar

tar -xvf gsod_2017.tar

Decompress all of these station files and concatenate them into a single file, ncdc.txt:

zcat *.gz > ncdc.txt

ls -l | grep ncdc

The output looks like this:

-rw-rw-r-- 1 hadoop hadoop 1133436858 11月 12 15:54 ncdc.txt
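If you would rather perform the merge step from Java (for example on a machine without zcat), the sketch below is a rough equivalent of zcat *.gz > ncdc.txt. The class name GzConcat is hypothetical and not part of the original tutorial. It uses only the JDK (GZIPInputStream, Files.newDirectoryStream with a glob, and InputStream.transferTo, which needs Java 9 or later) and sorts the inputs by file name to approximate the order in which the shell expands *.gz.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.GZIPInputStream;

/** Hypothetical helper: decompress every *.gz file in a directory into one output file. */
final class GzConcat {
    private GzConcat() {}

    /** Returns the number of decompressed bytes written to target. */
    static long concat(Path dir, Path target) throws IOException {
        List<Path> inputs = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(dir, "*.gz")) {
            for (Path p : stream) {
                inputs.add(p);
            }
        }
        // DirectoryStream has no guaranteed order; sort by name like a shell glob would
        inputs.sort(null);
        long total = 0;
        // Creates or truncates target, like the shell redirection "> ncdc.txt"
        try (OutputStream out = Files.newOutputStream(target)) {
            for (Path p : inputs) {
                try (InputStream in = new GZIPInputStream(Files.newInputStream(p))) {
                    total += in.transferTo(out);
                }
            }
        }
        return total;
    }
}
```

For example, GzConcat.concat(Paths.get("/home/hadoop/temp"), Paths.get("/home/hadoop/temp/ncdc.txt")) would build the same kind of merged file as the zcat command.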

Take a look at the first 12 lines of ncdc.txt:

head -12 ncdc.txt

The output looks like this:

007026 99999  20160622    94.7  7    66.7  7  9999.9  0  9999.9  0    6.2  4    0.0  7  999.9  999.9   100.4*   87.8*  0.00I 999.9  000000
007026 99999  20160623    88.3 24    69.7 24  9999.9  0  9999.9  0    6.2 24    0.0 24  999.9  999.9    98.6*   78.8*  0.00I 999.9  000000
007026 99999  20160624    80.5 24    69.3 24  9999.9  0  9999.9  0    5.8 22    0.0 24  999.9  999.9    93.2*   69.8* 99.99  999.9  010000
007026 99999  20160625    81.4 24    71.8 24  9999.9  0  9999.9  0    5.9 23    0.0 24  999.9  999.9    89.6*   73.4*  0.00I 999.9  000000
007026 99999  20160626    80.5 24    63.4 24  9999.9  0  9999.9  0    6.2 22    0.0 24  999.9  999.9    91.4*   69.8*  0.00I 999.9  000000
007026 99999  20160627    80.6 24    64.2 24  9999.9  0  9999.9  0    6.0 23    0.0 24  999.9  999.9    93.2*   68.0*  0.00I 999.9  000000
007026 99999  20160628    77.4 24    70.8 24  9999.9  0  9999.9  0    5.1 17    0.0 24  999.9  999.9    87.8*   71.6* 99.99  999.9  010000
007026 99999  20160629    74.3 16    71.9 16  9999.9  0  9999.9  0    2.7 13    0.0 16  999.9  999.9    86.0*   69.8*  0.00I 999.9  000000
007026 99999  20170210    46.9 11    19.4 11  9999.9  0  9999.9  0    6.2 11    3.6 11    8.0   13.0    53.6*   35.6*  0.00I 999.9  000000
007026 99999  20170211    54.4 24    35.0 24  9999.9  0  9999.9  0    6.2 24    4.7 24   12.0   21.0    77.0*   42.8*  0.00I 999.9  000000
007026 99999  20170212    70.2 24    52.9 24  9999.9  0  9999.9  0    6.2 24    5.2 24   15.0   21.0    84.2*   62.6*  0.00I 999.9  000000
007026 99999  20170213    61.1 22    35.4 22  9999.9  0  9999.9  0    6.2 22    8.9 22   15.0   24.1    75.2*   50.0*  0.00I 999.9  000000
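The mapper written later locates fields by character position, so it depends on GSOD records being fixed-width text. The sketch below pulls a few fields out of one record. The 1-based column ranges in its comments (STN 1-6, YEAR 15-18, MODA 19-22, TEMP 25-30, MIN 111-116) are taken to follow the GSOD format description and should be checked against the README distributed with the data; GsodRecord is a hypothetical helper, not part of the original code. It also highlights a detail worth knowing: the job reads TEMP, the mean daily temperature in degrees Fahrenheit, so it reports the lowest daily mean per year. Reading the MIN field instead would give the lowest recorded daily minimum (an alternative, not what the original code does).

```java
/** Hypothetical helper that extracts fields from one fixed-width GSOD record. */
final class GsodRecord {
    private GsodRecord() {}

    // String.substring uses 0-based, end-exclusive offsets; comments give 1-based columns.
    static String station(String line)  { return line.substring(0, 6); }   // STN,  cols 1-6
    static String year(String line)     { return line.substring(14, 18); } // YEAR, cols 15-18
    static String monthDay(String line) { return line.substring(18, 22); } // MODA, cols 19-22

    /** TEMP, cols 25-30: mean daily temperature in degrees F; 9999.9 means missing. */
    static double meanTemp(String line) {
        return Double.parseDouble(line.substring(24, 30).trim());
    }

    /** MIN, cols 111-116: daily minimum in degrees F; col 117 holds an optional '*' flag. */
    static double minTemp(String line) {
        return Double.parseDouble(line.substring(110, 116).trim());
    }
}
```

Because every offset is fixed, a record whose whitespace has been collapsed (for example by copy and paste) will be parsed incorrectly.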

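Each station file begins with a header line (starting with STN---) that the mapper cannot parse as a temperature. Use sed to delete every line matching 'STN':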

sed -i '/STN/d' ncdc.txt
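To see why the header has to go: in the header line, columns 25-30 hold the text TEMP rather than a number, so Double.parseDouble throws NumberFormatException and the map task fails. A mapper could also protect itself by skipping lines whose TEMP field does not parse, as in this hypothetical helper (LineCheck is not part of the original code). The header string used in its test is an abbreviated version of the real one.

```java
/** Hypothetical guard: does this line carry a parseable TEMP field in columns 25-30? */
final class LineCheck {
    private LineCheck() {}

    static boolean hasNumericTemp(String line) {
        if (line.length() < 30) {
            return false; // too short to contain columns 25-30
        }
        try {
            Double.parseDouble(line.substring(24, 30).trim());
            return true;
        } catch (NumberFormatException e) {
            return false; // e.g. the "STN--- WBAN   YEARMODA    TEMP ..." header line
        }
    }
}
```

Inside map(), a line such as "if (!LineCheck.hasNumericTemp(line)) return;" would make the job tolerate headers even if the sed step were skipped.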

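Upload the prepared data to HDFS. First start Hadoop:

cd /usr/local/hadoop/sbin

./start-all.sh

cd /usr/local/hadoop/bin

If the input directory has not been created in HDFS yet, create it before copying; otherwise copyFromLocal would create a file named input instead of a directory:

./hadoop fs -mkdir -p input

./hadoop fs -copyFromLocal /home/hadoop/temp/ncdc.txt input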

II. Write the MapReduce Code for the Minimum Temperature

First create a code directory under /home/hadoop/temp to hold the source files:

cd /home/hadoop/temp

mkdir code

cd code

Write MinTemperature.java (the driver):

vim MinTemperature.java

Enter the following code, then save and quit:

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class MinTemperature
{
    public static void main(String[] args) throws Exception
    {
        if (args.length != 2)
        {
            System.err.println("Usage: MinTemperature <input path> <output path>");
            System.exit(-1);
        }

        // Job.getInstance() replaces the deprecated new Job() constructor
        Job job = Job.getInstance();
        job.setJarByClass(MinTemperature.class);
        job.setJobName("Min temperature");

        // Input file or directory in HDFS, and an output directory that must not exist yet
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        job.setMapperClass(MinTemperatureMapper.class);
        job.setReducerClass(MinTemperatureReducer.class);

        // Types of the final (year, minimum temperature) output pairs
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

Write MinTemperatureMapper.java:

vim MinTemperatureMapper.java

Enter the following code, then save and quit:

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class MinTemperatureMapper extends Mapper<LongWritable, Text, Text, IntWritable>
{
    // GSOD marks a missing mean temperature as 9999.9, which floors to 9999
    private static final int MISSING = 9999;

    @Override
    public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException
    {
        String line = value.toString();
        // Skip lines too short to contain the TEMP field (e.g. blank lines)
        if (line.length() < 30)
            return;
        // Fixed-width record: YEAR is in columns 15-18, TEMP (daily mean, degrees F) in columns 25-30
        String year = line.substring(14, 18);
        int airTemperature = (int) Math.floor(Double.parseDouble(line.substring(24, 30).trim()));
        if (airTemperature != MISSING)
            context.write(new Text(year), new IntWritable(airTemperature));
    }
}
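The mapper's parsing can be exercised without a cluster by moving its body into a plain method. The sketch below (a hypothetical MapLogic class using the same offsets and MISSING rule as MinTemperatureMapper) also shows why Math.floor matters for the sub-zero readings this job cares about: floor(-5.5) is -6, whereas an (int) cast alone truncates toward zero and would give -5. The emitted values are therefore whole degrees Fahrenheit, rounded down.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.Map;
import java.util.Optional;

/** Hypothetical plain-Java mirror of MinTemperatureMapper.map(), runnable without Hadoop. */
final class MapLogic {
    private static final int MISSING = 9999; // 9999.9 floors to this value

    private MapLogic() {}

    /** Returns (year, floored TEMP), or empty when the temperature is missing. */
    static Optional<Map.Entry<String, Integer>> map(String line) {
        String year = line.substring(14, 18);
        int airTemperature = (int) Math.floor(Double.parseDouble(line.substring(24, 30).trim()));
        if (airTemperature == MISSING) {
            return Optional.empty();
        }
        return Optional.of(new SimpleEntry<>(year, airTemperature));
    }
}
```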

Write MinTemperatureReducer.java:

vim MinTemperatureReducer.java

Enter the following code, then save and quit:

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class MinTemperatureReducer extends Reducer<Text, IntWritable, Text, IntWritable>
{
    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException
    {
        // key is a year; values are the floored daily mean temperatures (degrees F) emitted for it
        int minValue = Integer.MAX_VALUE;
        for (IntWritable value : values)
            minValue = Math.min(minValue, value.get());
        context.write(key, new IntWritable(minValue));
    }
}
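Because taking a minimum is associative and commutative, and MinTemperatureReducer consumes and produces the same (Text, IntWritable) types, the same class could also be registered as a combiner in the driver with job.setCombinerClass(MinTemperatureReducer.class) to shrink the data shuffled to the reducers. That line is an optional addition, not part of the original code. The plain-Java sketch below (a hypothetical MinCombine class) checks the underlying property: reducing each split first and then reducing the partial minima gives the same answer as a single pass.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical check that a minimum can be computed in stages, as a combiner would. */
final class MinCombine {
    private MinCombine() {}

    /** Same loop as MinTemperatureReducer.reduce(), on plain Integers. */
    static int reduce(Iterable<Integer> values) {
        int minValue = Integer.MAX_VALUE;
        for (int value : values) {
            minValue = Math.min(minValue, value);
        }
        return minValue;
    }

    /** Combiner step: reduce each split locally; reducer step: reduce the partial minima. */
    static int reduceInStages(List<List<Integer>> splits) {
        List<Integer> partials = new ArrayList<>();
        for (List<Integer> split : splits) {
            partials.add(reduce(split));
        }
        return reduce(partials);
    }
}
```

The same reasoning would not hold for, say, an average, which is why a combiner has to be chosen per operation.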

III. Compile and Run the Program

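First compile all the .java files. javac needs the Hadoop libraries on its classpath, which the hadoop classpath command prints:

javac -classpath "$(/usr/local/hadoop/bin/hadoop classpath)" *.java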

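If javac prints a note that some input files use a deprecated API (for example the old new Job() constructor), recompile with -Xlint:deprecation to see each occurrence. This option only adds warnings; it does not fix compilation errors:

javac -Xlint:deprecation -classpath "$(/usr/local/hadoop/bin/hadoop classpath)" *.java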

Package the .class files into a jar:

jar cvf ./MinTemperature.jar ./*.class

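Run the program. The output directory (ncdc here) must not already exist in HDFS, or the job fails at submission; remove it with hadoop fs -rm -r ncdc before re-running:

cd /usr/local/hadoop/bin

./hadoop jar /home/hadoop/temp/code/MinTemperature.jar MinTemperature input/ncdc.txt ncdc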

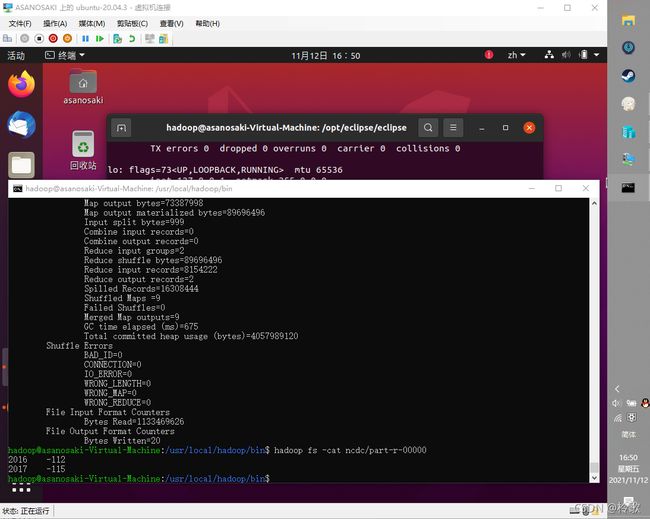

View the result:

./hadoop fs -cat ncdc/part-r-00000

The result is shown in the figure below.