Common Paddle pitfalls

Errors you may run into on AI Studio (these really happen)

Errors when importing modules from a folder

%env PYTHONPATH=.:$PYTHONPATH

%env CUDA_VISIBLE_DEVICES=0
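
PYTHONPATH is read when a Python interpreter starts, so the setting above mainly helps processes launched afterwards. For imports inside an already running interpreter, extending sys.path is a common alternative. A minimal sketch, assuming the modules live under the current working directory (the name project_root is made up for illustration):

```python
import os
import sys

# Assumption: the folder that contains your modules is the current working directory.
project_root = os.getcwd()

# Put it at the front of the module search path, but only once.
if project_root not in sys.path:
    sys.path.insert(0, project_root)
```

After this, `import my_folder.my_module` style imports resolve relative to project_root.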

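Second-order derivatives:

y = x**2

# An expression written with the power operator cannot be differentiated a second time; write it as a product instead:

y = x*x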

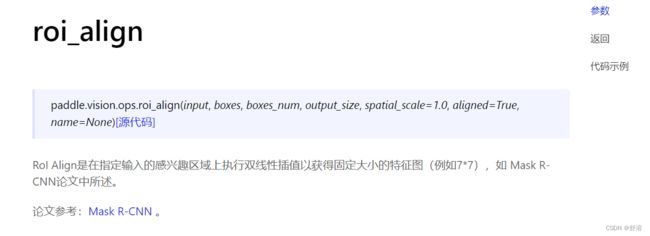

roi_align

For this API, when the batch size is greater than 1, boxes_num must be passed as a list that gives the number of boxes for each image in the batch.
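
A minimal sketch of how the per-image counts can be derived, in plain Python so it runs without Paddle. The box coordinates and the name boxes_per_image are hypothetical. Depending on the Paddle version, the resulting list may need to be converted first, for example with paddle.to_tensor(boxes_num, dtype="int32").

```python
# Hypothetical boxes in (x1, y1, x2, y2) format for a batch of 2 images.
boxes_per_image = [
    [[0.0, 0.0, 10.0, 10.0], [5.0, 5.0, 20.0, 20.0]],  # image 0: 2 boxes
    [[2.0, 3.0, 8.0, 9.0]],                             # image 1: 1 box
]

# All boxes are concatenated into one flat [N, 4] list ...
boxes = [box for image_boxes in boxes_per_image for box in image_boxes]

# ... and boxes_num records how many of them belong to each image, in batch order.
boxes_num = [len(image_boxes) for image_boxes in boxes_per_image]

# The counts must add up to the total number of boxes.
assert sum(boxes_num) == len(boxes)
```

With these inputs boxes holds 3 boxes and boxes_num has one entry per image.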

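Image adjustments in transforms

Surprisingly, the framework's transforms do not accept the framework's own tensors as input,

so I had to implement them myself: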

import cv2
import math
import numpy as np
import random
import os

import paddle
import paddle.nn.functional as F
from paddle.vision.transforms.functional import normalize

def _blend(img1, img2, ratio):
    # Linear interpolation between img1 and img2, clipped to the valid value range.
    ratio = float(ratio)
    bound = 1.0 if paddle.is_floating_point(img1) else 255.0
    return (ratio * img1 + (1.0 - ratio) * img2).clip(0, bound)

def _get_image_num_channels(img):
    if img.ndim == 2:
        return 1
    elif img.ndim > 2:
        return img.shape[-3]

    raise TypeError("Input ndim should be 2 or more. Got {}".format(img.ndim))

def _rgb2hsv(img):
    r, g, b = img.unbind(axis=-3)

    # Implementation is based on https://github.com/python-pillow/Pillow/blob/4174d4267616897df3746d315d5a2d0f82c656ee/
    # src/libImaging/Convert.c#L330
    maxc = paddle.max(img, axis=-3)
    minc = paddle.min(img, axis=-3)

    # The algorithm erases S and H channel where `maxc = minc`. This avoids NaN
    # from happening in the results, because
    #   + S channel has division by `maxc`, which is zero only if `maxc = minc`
    #   + H channel has division by `(maxc - minc)`.
    #
    # Instead of overwriting NaN afterwards, we just prevent it from occurring so
    # we don't need to deal with it in case we save the NaN in a buffer in
    # backprop, if it is ever supported, but it doesn't hurt to do so.
    eqc = maxc == minc

    cr = maxc - minc
    # Since `eqc => cr = 0`, replacing denominator with 1 when `eqc` is fine.
    ones = paddle.ones_like(maxc)
    s = cr / paddle.where(eqc, ones, maxc)
    # Note that `eqc => maxc = minc = r = g = b`. So the following calculation
    # of `h` would reduce to `bc - gc + 2 + rc - bc + 4 + rc - bc = 6` so it
    # would not matter what values `rc`, `gc`, and `bc` have here, and thus
    # replacing denominator with 1 when `eqc` is fine.
    cr_divisor = paddle.where(eqc, ones, cr)
    rc = (maxc - r) / cr_divisor
    gc = (maxc - g) / cr_divisor
    bc = (maxc - b) / cr_divisor

    # Exactly one of the three branches is non-zero for each pixel.
    t_zero = paddle.zeros_like(bc)
    hr = paddle.where(maxc == r, (bc - gc), t_zero)
    hg = paddle.where((maxc == g) & (maxc != r), (2.0 + rc - bc), t_zero)
    hb = paddle.where((maxc != g) & (maxc != r), (4.0 + gc - rc), t_zero)
    h = (hr + hg + hb)
    h = paddle.mod((h / 6.0 + 1.0), paddle.to_tensor([1.0]))
    return paddle.stack((h, s, maxc), axis=-3)
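
As a sanity check on the formula in _rgb2hsv, here is a scalar sketch of the same steps for a single pixel, compared against Python's standard colorsys module. The function name rgb2hsv_scalar and the sample pixels are made up for illustration; this is not the tensor implementation itself.

```python
import colorsys
import math


def rgb2hsv_scalar(r, g, b):
    """Scalar version of the steps in _rgb2hsv for one pixel with values in [0, 1]."""
    maxc, minc = max(r, g, b), min(r, g, b)
    cr = maxc - minc
    if cr == 0:
        # Grey pixel: hue and saturation are both 0, which is what the
        # "replace the denominator with 1" trick produces in the tensor code.
        return 0.0, 0.0, maxc
    s = cr / maxc
    rc, gc, bc = (maxc - r) / cr, (maxc - g) / cr, (maxc - b) / cr
    if maxc == r:
        h = bc - gc
    elif maxc == g:
        h = 2.0 + rc - bc
    else:
        h = 4.0 + gc - rc
    # Shift by 1 before the modulo so negative hues wrap into [0, 1).
    return (h / 6.0 + 1.0) % 1.0, s, maxc


# Hypothetical sample pixels: primaries, a grey, and two mixed colours.
samples = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0),
           (0.5, 0.5, 0.5), (0.2, 0.4, 0.6), (0.8, 0.2, 0.4)]

for pixel in samples:
    ours = rgb2hsv_scalar(*pixel)
    reference = colorsys.rgb_to_hsv(*pixel)
    # Both results should agree up to floating-point rounding.
    assert all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(ours, reference))
```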

def _hsv2rgb(img):
    h, s, v = img.unbind(axis=-3)
    i = paddle.floor(h * 6.0)
    f = (h * 6.0) - i
    i = paddle.cast(i, dtype='int32')

    p = paddle.clip((v * (1.0 - s)), 0.0, 1.0)
    q = paddle.clip((v * (1.0 - s * f)), 0.0, 1.0)
    t = paddle.clip((v * (1.0 - s * (1.0 - f))), 0.0, 1.0)
    i = i % 6

    # One-hot mask over the six hue sectors; arange uses int32 to match the dtype of i.
    mask = i.unsqueeze(axis=-3) == paddle.arange(6, dtype='int32').reshape([-1, 1, 1])

    # Candidate values for R, G and B in each of the six sectors.
    a1 = paddle.stack((v, q, p, p, t, v), axis=-3)
    a2 = paddle.stack((t, v, v, q, p, p), axis=-3)
    a3 = paddle.stack((p, p, t, v, v, q), axis=-3)
    a4 = paddle.stack((a1, a2, a3), axis=-4)

    # Convert the boolean mask to float so it can be used in einsum.
    t_zero = paddle.zeros_like(mask, dtype='float32')
    t_ones = paddle.ones_like(mask, dtype='float32')
    mask = paddle.where(mask, t_ones, t_zero)
    return paddle.einsum("...ijk, ...xijk -> ...xjk", mask, a4)
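
The mask and einsum in _hsv2rgb amount to a table lookup: for each pixel, the hue sector i selects one entry from each of the three six-element tables. A scalar sketch of that lookup, checked against the standard colorsys module (the name hsv2rgb_scalar and the sample values are made up for illustration, and the clipping step is left out because the inputs are already in [0, 1]):

```python
import colorsys
import math


def hsv2rgb_scalar(h, s, v):
    """Scalar version of the sector lookup in _hsv2rgb for one pixel."""
    i = math.floor(h * 6.0)   # hue sector, 0..5 for h in [0, 1)
    f = h * 6.0 - i           # position inside the sector
    p = v * (1.0 - s)
    q = v * (1.0 - s * f)
    t = v * (1.0 - s * (1.0 - f))
    i %= 6
    # Same three tables as a1, a2, a3 in the tensor code.
    r = (v, q, p, p, t, v)[i]
    g = (t, v, v, q, p, p)[i]
    b = (p, p, t, v, v, q)[i]
    return r, g, b


# Hypothetical sample values covering several hue sectors and a grey (s = 0).
samples = [(0.0, 1.0, 1.0), (0.25, 0.5, 0.8), (0.6, 0.3, 0.9),
           (0.95, 1.0, 0.5), (0.4, 0.0, 0.7)]

for hsv in samples:
    ours = hsv2rgb_scalar(*hsv)
    reference = colorsys.hsv_to_rgb(*hsv)
    # Both results should agree up to floating-point rounding.
    assert all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(ours, reference))
```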

def rgb_to_grayscale(img, num_output_channels=1):
    if img.ndim < 3:
        raise TypeError("Input image tensor should have at least 3 dimensions, but found {}".format(img.ndim))

    if num_output_channels not in (1, 3):
        raise ValueError('num_output_channels should be either 1 or 3')

    r, g, b = img.unbind(axis=-3)
    # Weighted sum of the three channels (luma).
    l_img = (0.2989 * r + 0.587 * g + 0.114 * b)
    l_img = l_img.unsqueeze(axis=-3)

    if num_output_channels == 3:
        return l_img.expand(img.shape)

    return l_img

def adjust_brightness(img, brightness_factor):
    if brightness_factor < 0:
        raise ValueError('brightness_factor ({}) is not non-negative.'.format(brightness_factor))

    return _blend(img, paddle.zeros_like(img), brightness_factor)

def adjust_contrast(img, contrast_factor):
    if contrast_factor < 0:
        raise ValueError('contrast_factor ({}) is not non-negative.'.format(contrast_factor))

    dtype = img.dtype if paddle.is_floating_point(img) else paddle.float32
    mean = paddle.mean(paddle.cast(rgb_to_grayscale(img), dtype=dtype), axis=(-3, -2, -1), keepdim=True)

    return _blend(img, mean, contrast_factor)

def adjust_hue(img, hue_factor):
    if not (-0.5 <= hue_factor <= 0.5):
        raise ValueError('hue_factor ({}) is not in [-0.5, 0.5].'.format(hue_factor))

    if not (isinstance(img, paddle.Tensor)):
        raise TypeError('Input img should be Tensor image')

    if _get_image_num_channels(img) == 1:  # Match PIL behaviour
        return img

    orig_dtype = img.dtype
    if img.dtype == paddle.uint8:
        img = paddle.cast(img, dtype='float32') / 255.0

    img = _rgb2hsv(img)
    h, s, v = img.unbind(axis=-3)
    h = (h + hue_factor) % 1.0
    img = paddle.stack((h, s, v), axis=-3)
    img_hue_adj = _hsv2rgb(img)

    if orig_dtype == paddle.uint8:
        img_hue_adj = paddle.cast(img_hue_adj * 255.0, dtype=orig_dtype)

    return img_hue_adj

def adjust_saturation(img, saturation_factor):
    if saturation_factor < 0:
        raise ValueError('saturation_factor ({}) is not non-negative.'.format(saturation_factor))

    return _blend(img, rgb_to_grayscale(img), saturation_factor)
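
Brightness, contrast and saturation all reduce to the same blend: the image is mixed with black, with its mean grey level, or with its grayscale version. A plain-Python sketch for a single pixel, assuming float values in [0, 1] (the helper names blend and luma and the sample pixel are hypothetical stand-ins for the tensor functions above):

```python
import math


def blend(a, b, ratio, bound=1.0):
    """ratio * a + (1 - ratio) * b per channel, clipped to [0, bound]."""
    return [min(max(ratio * x + (1.0 - ratio) * y, 0.0), bound) for x, y in zip(a, b)]


def luma(pixel):
    """Same channel weights as rgb_to_grayscale."""
    r, g, b = pixel
    return 0.2989 * r + 0.587 * g + 0.114 * b


pixel = [0.8, 0.4, 0.2]   # hypothetical RGB pixel
black = [0.0, 0.0, 0.0]
grey = [luma(pixel)] * 3

darker = blend(pixel, black, 0.5)      # brightness 0.5 halves every channel
brighter = blend(pixel, black, 2.0)    # factors above 1 extrapolate; the clip caps values at 1
desaturated = blend(pixel, grey, 0.0)  # saturation 0 gives the grayscale pixel
unchanged = blend(pixel, grey, 1.0)    # a factor of 1 returns the input

print(darker, brighter, desaturated, unchanged)
```

A factor of 1 always returns the input, a factor of 0 returns the second image, and the clip only matters when the factor is above 1.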