import numpy as np

def softmax(x):

if x.ndim == 1:

return np.exp(x-np.max(x))/np.sum(np.exp(x-np.max(x)))

elif x.ndim == 2:

val_num = np.zeros_like(x)

for i in range(len(x)):

part_x = x[i]

val_num[i] = np.exp(part_x-np.max(part_x))/np.sum(np.exp(part_x-np.max(part_x)))

return val_num

def cross_entropy_error(y, t):

if y.ndim == 1:

t = t.reshape(1, t.size)

y = y.reshape(1, y.size)

batch_size = y.shape[0]

return -np.sum(t * np.log(y[np.arange(batch_size)] + 1e-7))/ batch_size

def numerical_gradient(f, x):

h = 1e-4

x_shape = x.shape

grap = np.zeros_like(x.reshape(-1))

if x.ndim == 2:

x = x.reshape(-1)

for idx in range(x.size):

tmp_val = x[idx]

x[idx] = tmp_val + h

fxh1 = f(x)

x[idx] = tmp_val - h

fxh2 = f(x)

grap[idx] = (fxh1 - fxh2) / (h * 2)

x[idx] = tmp_val

grap = grap.reshape(x_shape)

return grap

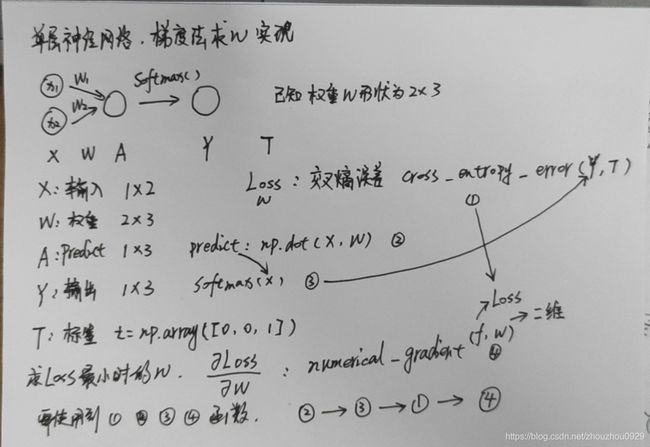

class simpleNet:

def __init__(self):

self.W = np.random.randn(2,3)

def predict(self, x):

return np.dot(x, self.W)

def loss(self, x, t):

z = self.predict(x)

y = softmax(z)

loss = cross_entropy_error(y ,t)

return loss

net = simpleNet()

net.W

array([[-0.86904767, 0.33483853, -1.22539511],

[-0.66378371, 0.09628129, -1.07447355]])

x = np.array([0.3, 0.9])

net.predict(x)

array([-0.85811964, 0.18710472, -1.33464473])

t = np.array([0,0,1])

net.loss(x, t)

1.9727877342731845

def f(W):

return net.loss(x, t)

numerical_gradient(f, net.W)

array([[ 0.06718959, 0.19108966, -0.25827926],

[ 0.20156878, 0.57326898, -0.77483777]])