Cloud Native: kubectl Commands Explained

Contents

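1. View version information: kubectl version

2. View resource short names (abbreviations): kubectl api-resources

3. View cluster information: kubectl cluster-info

4. View help information: kubectl --help

5. View node logs: journalctl -u kubelet -f

6. Get information on one or more resources: kubectl get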

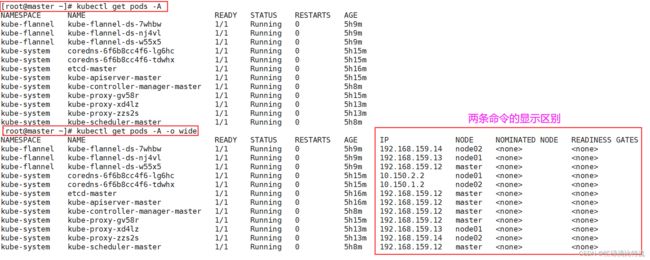

6.1. View pods running in all namespaces: kubectl get pods -A

6.2. View detailed pod information in all namespaces: kubectl get pods -A -o wide

6.3. View all resource objects: kubectl get all -A

6.4. View labels on nodes: kubectl get nodes --show-labels

6.5. View labels on pods: kubectl get pods --show-labels -A

6.6. View control-plane component status: kubectl get cs

6.7. View namespaces: kubectl get namespaces

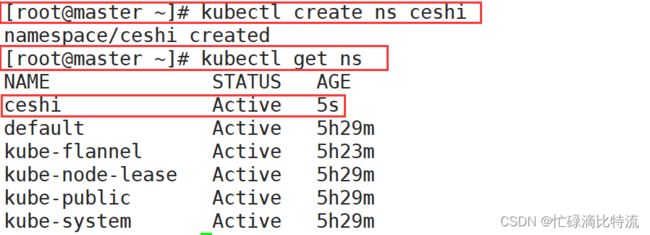

7、创建命名空间 :kubectl create ns app

8、删除命名空间:kubectl delete ns ceshi

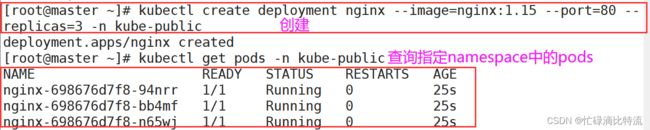

9、在命名空间kube-public创建无状态控制器deployment来启动pod,暴露80端口,副本集为3

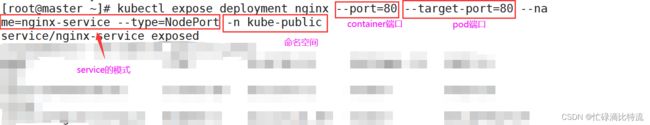

11、暴露发布pod中的服务供用户访问

12、删除pod中的nginx服务及service

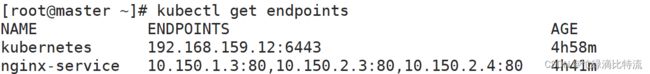

13、查看endpoint的信息

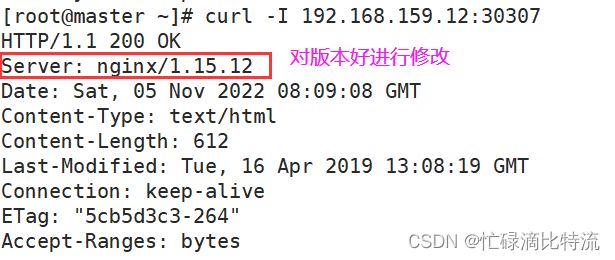

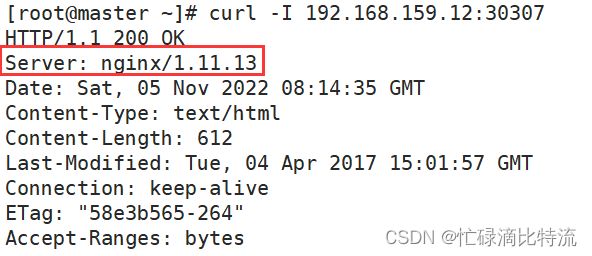

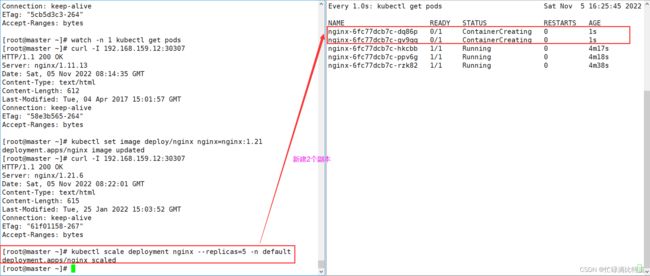

14. Modify/update (image, parameters, ...): kubectl set

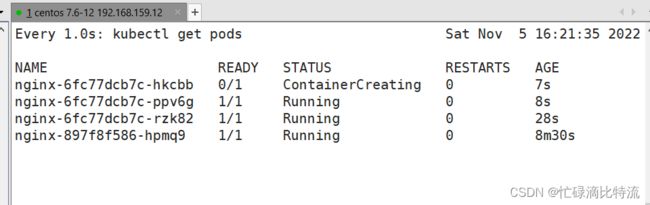

15. Adjust the number of replicas: kubectl scale

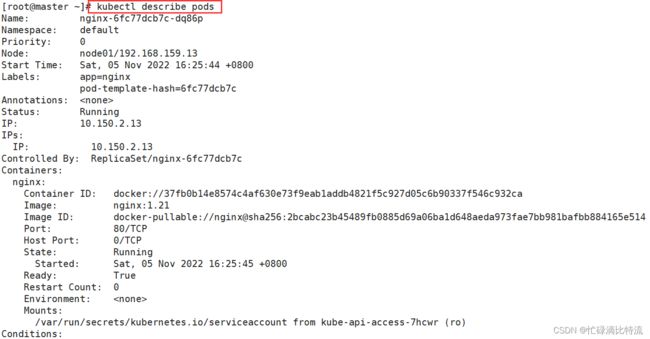

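16. View detailed information: kubectl describe

16.1. Show details of all nodes: kubectl describe nodes

16.2. Show details of a specific node: kubectl describe nodes node01

16.3. Show details of all pods: kubectl describe pods

16.4. Show details of a Deployment: kubectl describe deploy/nginx

16.5. Show details of all Services: kubectl describe svc

16.6. Show details of a specific Service: kubectl describe svc nginx-service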

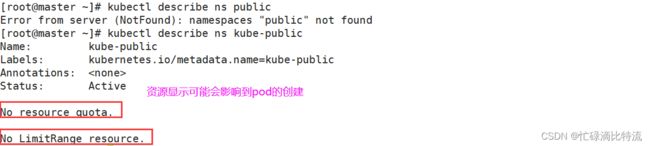

16.7. Show details of all namespaces: kubectl describe ns

16.8. Show details of a specific namespace: kubectl describe ns kube-public

1. View version information: kubectl version

[root@master ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.3", GitCommit:"ca643a4d1f7bfe34773c74f79527be4afd95bf39", GitTreeState:"clean", BuildDate:"2021-07-15T21:04:39Z", GoVersion:"go1.16.6", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"21", GitVersion:"v1.21.3", GitCommit:"ca643a4d1f7bfe34773c74f79527be4afd95bf39", GitTreeState:"clean", BuildDate:"2021-07-15T20:59:07Z", GoVersion:"go1.16.6", Compiler:"gc", Platform:"linux/amd64"}
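This is a minimal sketch that is not part of the original walkthrough. It defines two shell helpers for the version checks people run most often. The function names are hypothetical, but the flags are standard kubectl version options. --client prints only the local kubectl version and does not contact the API server. -o yaml prints both versions in a more readable form than the one-line Go struct above.

```shell
# Hypothetical helper: print only the kubectl client version.
# --client does not contact the API server, so it also works when the cluster is down.
kube_client_version() {
  kubectl version --client
}

# Hypothetical helper: print client and server versions as YAML
# (kubectl version also accepts -o json).
kube_versions_yaml() {
  kubectl version -o yaml
}
```

Kubernetes' version-skew policy supports kubectl within one minor version of the API server. Comparing the two gitVersion fields is therefore a quick sanity check; here both are v1.21.3.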

2. View resource short names (abbreviations): kubectl api-resources

[root@master ~]# kubectl api-resources

NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

componentstatuses cs v1 false ComponentStatus

configmaps cm v1 true ConfigMap

endpoints ep v1 true Endpoints

events ev v1 true Event

limitranges limits v1 true LimitRange

namespaces ns v1 false Namespace

nodes no v1 false Node

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

pods po v1 true Pod

podtemplates v1 true PodTemplate

replicationcontrollers rc v1 true ReplicationController

resourcequotas quota v1 true ResourceQuota

secrets v1 true Secret

serviceaccounts sa v1 true ServiceAccount

services svc v1 true Service

mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration

validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration

customresourcedefinitions crd,crds apiextensions.k8s.io/v1 false CustomResourceDefinition

apiservices apiregistration.k8s.io/v1 false APIService

controllerrevisions apps/v1 true ControllerRevision

daemonsets ds apps/v1 true DaemonSet

deployments deploy apps/v1 true Deployment

replicasets rs apps/v1 true ReplicaSet

statefulsets sts apps/v1 true StatefulSet

tokenreviews authentication.k8s.io/v1 false TokenReview

localsubjectaccessreviews authorization.k8s.io/v1 true LocalSubjectAccessReview

selfsubjectaccessreviews authorization.k8s.io/v1 false SelfSubjectAccessReview

selfsubjectrulesreviews authorization.k8s.io/v1 false SelfSubjectRulesReview

subjectaccessreviews authorization.k8s.io/v1 false SubjectAccessReview

horizontalpodautoscalers hpa autoscaling/v1 true HorizontalPodAutoscaler

cronjobs cj batch/v1 true CronJob

jobs batch/v1 true Job

certificatesigningrequests csr certificates.k8s.io/v1 false CertificateSigningRequest

leases coordination.k8s.io/v1 true Lease

endpointslices discovery.k8s.io/v1 true EndpointSlice

events ev events.k8s.io/v1 true Event

ingresses ing extensions/v1beta1 true Ingress

flowschemas flowcontrol.apiserver.k8s.io/v1beta1 false FlowSchema

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1beta1 false PriorityLevelConfiguration

ingressclasses networking.k8s.io/v1 false IngressClass

ingresses ing networking.k8s.io/v1 true Ingress

networkpolicies netpol networking.k8s.io/v1 true NetworkPolicy

runtimeclasses node.k8s.io/v1 false RuntimeClass

poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget

podsecuritypolicies psp policy/v1beta1 false PodSecurityPolicy

clusterrolebindings rbac.authorization.k8s.io/v1 false ClusterRoleBinding

clusterroles rbac.authorization.k8s.io/v1 false ClusterRole

rolebindings rbac.authorization.k8s.io/v1 true RoleBinding

roles rbac.authorization.k8s.io/v1 true Role

priorityclasses pc scheduling.k8s.io/v1 false PriorityClass

csidrivers storage.k8s.io/v1 false CSIDriver

csinodes storage.k8s.io/v1 false CSINode

csistoragecapacities storage.k8s.io/v1beta1 true CSIStorageCapacity

storageclasses sc storage.k8s.io/v1 false StorageClass

volumeattachments storage.k8s.io/v1 false VolumeAttachment

3. View cluster information: kubectl cluster-info

[root@master ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.159.10:6443

CoreDNS is running at https://192.168.159.10:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
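The output above suggests kubectl cluster-info dump, which can also write its results to files. The sketch below uses a hypothetical helper name and an assumed default directory (/tmp/cluster-state).

```shell
# Hypothetical helper: dump cluster state for offline debugging.
# By default the dump covers only the current namespace and kube-system;
# --all-namespaces widens it to every namespace.
# --output-directory writes a tree of files instead of printing to stdout.
dump_cluster_state() {
  dir="${1:-/tmp/cluster-state}"   # assumed default path, change as needed
  kubectl cluster-info dump --all-namespaces --output-directory="$dir"
}
```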

4. View help information: kubectl --help

[root@master ~]# kubectl --help

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Documentation of resources

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a Deployment, ReplicaSet or Replication Controller

autoscale Auto-scale a Deployment, ReplicaSet, StatefulSet, or ReplicationController

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster info

top Display Resource (CPU/Memory) usage.

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers.

auth Inspect authorization

debug Create debugging sessions for troubleshooting workloads and nodes

Advanced Commands:

diff Diff live version against would-be applied version

apply Apply a configuration to a resource by filename or stdin

patch Update field(s) of a resource

replace Replace a resource by filename or stdin

wait Experimental: Wait for a specific condition on one or many resources.

kustomize Build a kustomization target from a directory or URL.

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash or zsh)

Other Commands:

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins.

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

Official help reference: Kubernetes kubectl command table, Kubernetes (K8S) Chinese documentation, Kubernetes Chinese community: http://docs.kubernetes.org.cn/683.html
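The help output ends by pointing to per-command help, and field-level API documentation can be looked up in a similar way. The wrapper names below are hypothetical. kubectl <command> --help, kubectl explain and kubectl options are standard kubectl usage.

```shell
# Hypothetical helper: help for one subcommand, e.g. kubectl_help expose
kubectl_help() {
  kubectl "$1" --help
}

# Hypothetical helper: field-level docs from the API schema,
# e.g. kubectl_explain deployment.spec.replicas
kubectl_explain() {
  kubectl explain "$1"
}

# Hypothetical helper: global flags shared by every command
kubectl_global_options() {
  kubectl options
}
```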

5. View node logs: journalctl -u kubelet -f

-- Logs begin at Wed 2022-11-02 02:24:50 CST. --

Nov 01 19:51:04 master kubelet[13641]: I1101 19:51:04.415651 13641 pod_container_deletor.go:79] "Container not found in pod's containers" containerID="041b38b5bb2ff0161170bea161fd70e9175cc27fdc98877944d899ebe7b90d2f"

Nov 01 19:51:06 master kubelet[13641]: I1101 19:51:06.428325 13641 reconciler.go:196] "operationExecutor.UnmountVolume started for volume \"kubeconfig\" (UniqueName: \"kubernetes.io/host-path/771ef2517500c43b40e7df4c76198cac-kubeconfig\") pod \"771ef2517500c43b40e7df4c76198cac\" (UID: \"771ef2517500c43b40e7df4c76198cac\") "

Nov 01 19:51:06 master kubelet[13641]: I1101 19:51:06.428370 13641 operation_generator.go:829] UnmountVolume.TearDown succeeded for volume "kubernetes.io/host-path/771ef2517500c43b40e7df4c76198cac-kubeconfig" (OuterVolumeSpecName: "kubeconfig") pod "771ef2517500c43b40e7df4c76198cac" (UID: "771ef2517500c43b40e7df4c76198cac"). InnerVolumeSpecName "kubeconfig". PluginName "kubernetes.io/host-path", VolumeGidValue ""

Nov 01 19:51:06 master kubelet[13641]: I1101 19:51:06.529163 13641 reconciler.go:319] "Volume detached for volume \"kubeconfig\" (UniqueName: \"kubernetes.io/host-path/771ef2517500c43b40e7df4c76198cac-kubeconfig\") on node \"master\" DevicePath \"\""

Nov 01 19:51:07 master kubelet[13641]: I1101 19:51:07.282148 13641 kubelet_getters.go:300] "Path does not exist" path="/var/lib/kubelet/pods/771ef2517500c43b40e7df4c76198cac/volumes"

Nov 01 19:51:10 master kubelet[13641]: I1101 19:51:10.913108 13641 topology_manager.go:187] "Topology Admit Handler"

Nov 01 19:51:11 master kubelet[13641]: I1101 19:51:11.079185 13641 reconciler.go:224] "operationExecutor.VerifyControllerAttachedVolume started for volume \"kubeconfig\" (UniqueName: \"kubernetes.io/host-path/5e72c0f5a18f84d50f027106c98ab6b1-kubeconfig\") pod \"kube-scheduler-master\" (UID: \"5e72c0f5a18f84d50f027106c98ab6b1\") "

Nov 01 19:51:15 master kubelet[13641]: E1101 19:51:15.849398 13641 cadvisor_stats_provider.go:151] "Unable to fetch pod etc hosts stats" err="failed to get stats failed command 'du' ($ nice -n 19 du -x -s -B 1) on path /var/lib/kubelet/pods/771ef2517500c43b40e7df4c76198cac/etc-hosts with error exit status 1" pod="kube-system/kube-scheduler-master"

Nov 01 19:51:25 master kubelet[13641]: E1101 19:51:25.874999 13641 cadvisor_stats_provider.go:151] "Unable to fetch pod etc hosts stats" err="failed to get stats failed command 'du' ($ nice -n 19 du -x -s -B 1) on path /var/lib/kubelet/pods/771ef2517500c43b40e7df4c76198cac/etc-hosts with error exit status 1" pod="kube-system/kube-scheduler-master"

Nov 01 19:51:31 master kubelet[13641]: I1101 19:51:31.

How to view the logs of core components in k8s:

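① Clusters deployed with kubeadm: kubectl logs -f <component-pod-name> -n namespace

or, for the kubelet itself (which runs as a systemd service): journalctl -u kubelet -f

② Clusters deployed from binaries: each component usually runs as its own systemd service, so use journalctl -u <service-name> -f, e.g. journalctl -u kubelet -f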
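Both approaches can be sketched as shell helpers (the names are hypothetical). The sketch assumes kubeadm's naming of static control-plane pods as <component>-<node-name> in kube-system, which matches kube-scheduler-master in this cluster. It also assumes the systemd unit name is passed in: kubelet here, while unit names in binary deployments depend on how they were installed.

```shell
# Hypothetical helper (kubeadm clusters): last 100 log lines of a control-plane
# static pod, e.g. component_logs kube-scheduler master -> pod kube-scheduler-master
component_logs() {
  kubectl logs -n kube-system "$1-$2" --tail=100
}

# Hypothetical helper (any systemd unit): error-level messages from the last hour,
# printed without the pager, e.g. unit_errors kubelet
unit_errors() {
  journalctl -u "$1" -p err --since "1 hour ago" --no-pager
}
```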

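6. Get information on one or more resources: kubectl get

Syntax:

kubectl get <resource> [-o <format>] [-n <namespace>]

Notes:

resource: the resource type; it can also be followed by a specific resource name

-o: output format (e.g. wide, yaml, json)

-n: namespace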
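The sketch below shows two common -o formats as hypothetical helpers. The arguments (for example deploy nginx default) are placeholders.

```shell
# Hypothetical helper: the full object as YAML, e.g. get_yaml deploy nginx default
get_yaml() {
  kubectl get "$1" "$2" -n "$3" -o yaml
}

# Hypothetical helper: only the pod names in a namespace, space-separated, via JSONPath
pod_names() {
  kubectl get pods -n "$1" -o jsonpath='{.items[*].metadata.name}'
}
```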

6.1. View pods running in all namespaces: kubectl get pods -A

[root@master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-7clld 1/1 Running 0 5h35m

kube-flannel kube-flannel-ds-psgvb 1/1 Running 0 5h35m

kube-flannel kube-flannel-ds-xxncr 1/1 Running 0 5h35m

kube-system coredns-6f6b8cc4f6-lbvl5 1/1 Running 0 5h45m

kube-system coredns-6f6b8cc4f6-m6brz 1/1 Running 0 5h45m

kube-system etcd-master 1/1 Running 0 5h45m

kube-system kube-apiserver-master 1/1 Running 0 5h45m

kube-system kube-controller-manager-master 1/1 Running 0 5h11m

kube-system kube-proxy-jwpnz 1/1 Running 0 5h40m

kube-system kube-proxy-xqcqm 1/1 Running 0 5h41m

kube-system kube-proxy-z6rhl 1/1 Running 0 5h45m

kube-system kube-scheduler-master 1/1 Running 0 5h11m
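The output above is easy to post-process. The sketch below (helper name hypothetical) prints only the pods whose STATUS column is not Running, which is usually the first thing to check in a large cluster. It relies on STATUS being the fourth column when -A is used.

```shell
# Hypothetical helper: pods in all namespaces whose STATUS column is not "Running".
# With -A the columns are NAMESPACE NAME READY STATUS RESTARTS AGE, so STATUS is $4.
# Reading the STATUS column (instead of --field-selector=status.phase!=Running) also
# catches pods whose phase is Running but whose STATUS shows e.g. CrashLoopBackOff.
# Completed Job pods are reported as well.
not_running_pods() {
  kubectl get pods -A --no-headers | awk '$4 != "Running" { print $1 "/" $2 " " $4 }'
}
```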

6.2. View detailed pod information in all namespaces: kubectl get pods -A -o wide

[root@master ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-flannel kube-flannel-ds-7clld 1/1 Running 0 5h39m 192.168.159.13 node02

kube-flannel kube-flannel-ds-psgvb 1/1 Running 0 5h39m 192.168.159.11 node01

kube-flannel kube-flannel-ds-xxncr 1/1 Running 0 5h39m 192.168.159.10 master

kube-system coredns-6f6b8cc4f6-lbvl5 1/1 Running 0 5h49m 10.150.2.2 node02

kube-system coredns-6f6b8cc4f6-m6brz 1/1 Running 0 5h49m 10.150.1.2 node01

kube-system etcd-master 1/1 Running 0 5h49m 192.168.159.10 master

kube-system kube-apiserver-master 1/1 Running 0 5h49m 192.168.159.10 master

kube-system kube-controller-manager-master 1/1 Running 0 5h16m 192.168.159.10 master

kube-system kube-proxy-jwpnz 1/1 Running 0 5h45m 192.168.159.13 node02

kube-system kube-proxy-xqcqm 1/1 Running 0 5h45m 192.168.159.11 node01

kube-system kube-proxy-z6rhl 1/1 Running 0 5h49m 192.168.159.10 master

kube-system kube-scheduler-master 1/1 Running 0 5h15m 192.168.159.10 master
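To narrow the wide output to a single node, you can use a field selector on spec.nodeName. A custom-columns view keeps only the columns of interest. The helper names below are hypothetical.

```shell
# Hypothetical helper: pods (all namespaces) scheduled on one node, e.g. pods_on_node node01.
# spec.nodeName is one of the fields the API server supports in pod field selectors.
pods_on_node() {
  kubectl get pods -A -o wide --field-selector "spec.nodeName=$1"
}

# Hypothetical helper: a compact namespace/name/node/IP table built from JSONPath columns
pods_ip_table() {
  kubectl get pods -A -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,NODE:.spec.nodeName,IP:.status.podIP
}
```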

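6.3. View all resource objects: kubectl get all -A (note that "all" only covers common types such as pods, services, daemonsets, deployments, replicasets, statefulsets, jobs and cronjobs; ConfigMaps and Secrets are not included)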

[root@master ~]# kubectl get all -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel pod/kube-flannel-ds-7whbw 1/1 Running 0 5h14m

kube-flannel pod/kube-flannel-ds-nj4vl 1/1 Running 0 5h14m

kube-flannel pod/kube-flannel-ds-w55x5 1/1 Running 0 5h14m

kube-system pod/coredns-6f6b8cc4f6-lg6hc 1/1 Running 0 5h20m

kube-system pod/coredns-6f6b8cc4f6-tdwhx 1/1 Running 0 5h20m

kube-system pod/etcd-master 1/1 Running 0 5h20m

kube-system pod/kube-apiserver-master 1/1 Running 0 5h20m

kube-system pod/kube-controller-manager-master 1/1 Running 0 5h13m

kube-system pod/kube-proxy-gv58r 1/1 Running 0 5h20m

kube-system pod/kube-proxy-xd4lz 1/1 Running 0 5h18m

kube-system pod/kube-proxy-zzs2s 1/1 Running 0 5h18m

kube-system pod/kube-scheduler-master 1/1 Running 0 5h12m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.125.0.1 443/TCP 5h20m

default service/nginx-service NodePort 10.125.18.84 80:32476/TCP 5h3m

kube-system service/kube-dns ClusterIP 10.125.0.10 53/UDP,53/TCP,9153/TCP 5h20m

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-flannel daemonset.apps/kube-flannel-ds 3 3 3 3 3 5h14m

kube-system daemonset.apps/kube-proxy 3 3 3 3 3 kubernetes.io/os=linux 5h20m

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/coredns 2/2 2 2 5h20m

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/coredns-6f6b8cc4f6 2 2 2 5h20m

6.4. View labels on nodes: kubectl get nodes --show-labels

[root@master ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

master Ready control-plane,master 5h58m v1.21.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=

node01 Ready node 5h53m v1.21.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node01,kubernetes.io/os=linux,node-role.kubernetes.io/node=node

node02 Ready node 5h53m v1.21.3 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node02,kubernetes.io/os=linux,node-role.kubernetes.io/node=node
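Node selection is built on these labels. The sketch below shows how to add, display and remove a node label. disktype=ssd is an example label that does not exist in this cluster, and the helper names are hypothetical.

```shell
# Hypothetical helper: add a label (or change it, thanks to --overwrite),
# e.g. label_node node01 disktype=ssd
label_node() {
  kubectl label nodes "$1" "$2" --overwrite
}

# Hypothetical helper: a trailing "-" after the key removes the label,
# e.g. unlabel_node node01 disktype
unlabel_node() {
  kubectl label nodes "$1" "$2-"
}

# Hypothetical helper: show one label as its own column instead of the long LABELS list
nodes_with_label_column() {
  kubectl get nodes -L "$1"
}
```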

6.5. View labels on pods: kubectl get pods --show-labels -A

[root@master ~]# kubectl get pods --show-labels -A

NAMESPACE NAME READY STATUS RESTARTS AGE LABELS

kube-flannel kube-flannel-ds-7clld 1/1 Running 0 5h51m app=flannel,controller-revision-hash=5b775b5b5c,pod-template-generation=1,tier=node

kube-flannel kube-flannel-ds-psgvb 1/1 Running 0 5h51m app=flannel,controller-revision-hash=5b775b5b5c,pod-template-generation=1,tier=node

kube-flannel kube-flannel-ds-xxncr 1/1 Running 0 5h51m app=flannel,controller-revision-hash=5b775b5b5c,pod-template-generation=1,tier=node

kube-system coredns-6f6b8cc4f6-lbvl5 1/1 Running 0 6h1m k8s-app=kube-dns,pod-template-hash=6f6b8cc4f6

kube-system coredns-6f6b8cc4f6-m6brz 1/1 Running 0 6h1m k8s-app=kube-dns,pod-template-hash=6f6b8cc4f6

kube-system etcd-master 1/1 Running 0 6h1m component=etcd,tier=control-plane

kube-system kube-apiserver-master 1/1 Running 0 6h1m component=kube-apiserver,tier=control-plane

kube-system kube-controller-manager-master 1/1 Running 0 5h27m component=kube-controller-manager,tier=control-plane

kube-system kube-proxy-jwpnz 1/1 Running 0 5h56m controller-revision-hash=6b87fcb57c,k8s-app=kube-proxy,pod-template-generation=1

kube-system kube-proxy-xqcqm 1/1 Running 0 5h56m controller-revision-hash=6b87fcb57c,k8s-app=kube-proxy,pod-template-generation=1

kube-system kube-proxy-z6rhl 1/1 Running 0 6h1m controller-revision-hash=6b87fcb57c,k8s-app=kube-proxy,pod-template-generation=1

kube-system kube-scheduler-master 1/1 Running 0 5h26m component=kube-scheduler,tier=control-plane
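You can use the labels shown above directly in selectors. This sketch takes its label values from this output (tier=control-plane and component=...). The helper names are hypothetical.

```shell
# Hypothetical helper: equality-based selector; every control-plane static pod
# above carries tier=control-plane.
control_plane_pods() {
  kubectl get pods -n kube-system -l tier=control-plane
}

# Hypothetical helper: set-based selector; pods whose component label is etcd or kube-apiserver.
etcd_and_apiserver_pods() {
  kubectl get pods -n kube-system -l 'component in (etcd,kube-apiserver)'
}
```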

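6.6. View control-plane component status: kubectl get cs (ComponentStatus is deprecated since v1.19, as the warning below shows)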

[root@master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

6.7. View namespaces: kubectl get namespaces

Or use the short name: [root@master ~]# kubectl get ns

[root@master ~]# kubectl get namespace

NAME STATUS AGE

default Active 6h8m

kube-flannel Active 5h58m

kube-node-lease Active 6h8m

kube-public Active 6h8m

kube-system Active 6h8m

7. Create a namespace: kubectl create ns app

[root@master ~]# kubectl create ns ceshi

namespace/ceshi created
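Instead of creating a namespace imperatively, kubectl can generate the manifest. You can then review it, keep it under version control and apply it later. The sketch uses a hypothetical helper name; --dry-run=client is the form accepted by kubectl 1.18 and later.

```shell
# Hypothetical helper: print the Namespace manifest without creating anything,
# e.g. namespace_manifest app > app-ns.yaml
namespace_manifest() {
  kubectl create namespace "$1" --dry-run=client -o yaml
}
```

The saved file can then be applied with kubectl apply -f app-ns.yaml. Re-running apply on an unchanged file leaves the namespace as it is.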

8. Delete a namespace: kubectl delete ns ceshi (deleting a namespace also deletes every resource inside it)

[root@master ~]# kubectl delete ns ceshi

namespace "ceshi" deleted

9. In the kube-public namespace, create a Deployment (a stateless controller) that starts pods exposing port 80, with 3 replicas

Create an nginx Deployment in the kube-public namespace:

[root@master ~]# kubectl create deployment nginx --image=nginx:1.15 --port=80 --replicas=3 -n kube-public

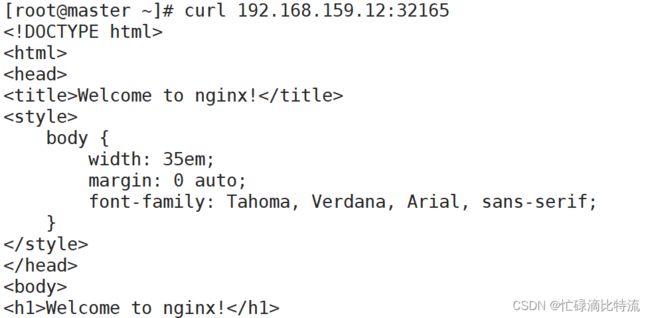

deployment.apps/nginx created

11. Expose the service running in the pods for user access

[root@master ~]# kubectl expose deployment nginx --port=80 --target-port=80 --name=nginx-service --type=NodePort -n kube-public

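Access: open http://<node-IP>:<NodePort> in a browser. The NodePort appears in the PORT(S) column of kubectl get svc -n kube-public; for example, 80:32476/TCP means NodePort 32476.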
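The sketch below looks up the assigned NodePort and requests the service through a node. The helper names are hypothetical. With the default externalTrafficPolicy: Cluster any node IP works; 192.168.159.10 from the cluster-info output is one example. curl is assumed to be installed.

```shell
# Hypothetical helper: print the NodePort of a Service's first port,
# e.g. node_port nginx-service kube-public
node_port() {
  kubectl get svc "$1" -n "$2" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Hypothetical helper: fetch the page through a node IP,
# e.g. curl_nodeport nginx-service kube-public 192.168.159.10
curl_nodeport() {
  port="$(node_port "$1" "$2")"
  curl -s "http://$3:$port/"
}
```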

12. Delete the nginx Deployment and its Service

[root@master ~]# kubectl delete deployment nginx -n kube-public

[root@master ~]# kubectl delete svc -n kube-public nginx-service

13. View Endpoints information

[root@master ~]# kubectl get endpoints
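Without -n, kubectl get endpoints only covers the default namespace. The sketch below inspects the endpoints of a single Service, using hypothetical helper names. The EndpointSlice variant relies on the kubernetes.io/service-name label that the EndpointSlice controller sets on each slice.

```shell
# Hypothetical helper: Endpoints object of one Service,
# e.g. service_endpoints nginx-service kube-public
service_endpoints() {
  kubectl get endpoints "$1" -n "$2"
}

# Hypothetical helper: EndpointSlices (discovery.k8s.io/v1) belonging to the same Service
service_endpointslices() {
  kubectl get endpointslices -n "$2" -l "kubernetes.io/service-name=$1"
}
```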

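14. Modify/update (image, parameters, ...): kubectl set

For example, check the current nginx version:

Goal: change the version of the running nginx

[root@master ~]# kubectl set image deployment/nginx nginx=nginx:1.11

Kubernetes performs this as a rolling update. It pulls the new image and starts new pods, and removes the old-version pods as the new ones become available.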
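You can follow the progress and history of such an image change with kubectl rollout. This sketch uses hypothetical helper names. The deployment and namespace are passed in: nginx and default in this example.

```shell
# Hypothetical helper: block until the rollout finishes or fails, e.g. rollout_wait nginx default
rollout_wait() {
  kubectl rollout status "deployment/$1" -n "$2"
}

# Hypothetical helper: list the recorded revisions
rollout_history() {
  kubectl rollout history "deployment/$1" -n "$2"
}

# Hypothetical helper: roll back to the previous revision,
# e.g. when the new image tag cannot be pulled
rollout_back() {
  kubectl rollout undo "deployment/$1" -n "$2"
}
```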

15. Adjust the number of replicas: kubectl scale

[root@master ~]# kubectl scale deployment nginx --replicas=5 -n default

deployment.apps/nginx scaled

Here -n specifies the namespace; this Deployment was created in the default namespace.
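The sketch below (helper names hypothetical) scales a Deployment and then reads back its ready count. It also shows the autoscaling alternative. Keep in mind that readyReplicas can lag behind until new pods pass their readiness checks. kubectl autoscale also only has an effect when metrics-server is installed and the containers set CPU requests; both of these are assumptions about the cluster.

```shell
# Hypothetical helper: scale, then print how many replicas are ready so far,
# e.g. scale_and_check nginx 5 default
scale_and_check() {
  kubectl scale deployment "$1" --replicas="$2" -n "$3" &&
    kubectl get deployment "$1" -n "$3" -o jsonpath='{.status.readyReplicas}'
}

# Hypothetical helper: create a HorizontalPodAutoscaler keeping 2..5 replicas,
# targeting 80% average CPU utilization relative to the containers' CPU requests
autoscale_cpu() {
  kubectl autoscale deployment "$1" -n "$2" --min=2 --max=5 --cpu-percent=80
}
```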

16. View detailed information: kubectl describe

16.1. Show details of all nodes: kubectl describe nodes

[root@master ~]# kubectl describe nodes

16.2. Show details of a specific node: kubectl describe nodes node01

[root@master ~]# kubectl describe nodes node01

Name: node01

Roles: node

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/arch=amd64

kubernetes.io/hostname=node01

kubernetes.io/os=linux

node-role.kubernetes.io/node=node

Annotations: flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"c2:b4:d2:1b:fa:c2"}

flannel.alpha.coreos.com/backend-type: vxlan

flannel.alpha.coreos.com/kube-subnet-manager: true

flannel.alpha.coreos.com/public-ip: 192.168.159.13

kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Sat, 05 Nov 2022 09:26:33 +0800

Taints:

Unschedulable: false

Lease:

HolderIdentity: node01

AcquireTime:

RenewTime: Sat, 05 Nov 2022 17:10:49 +0800

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

NetworkUnavailable False Sat, 05 Nov 2022 09:31:06 +0800 Sat, 05 Nov 2022 09:31:06 +0800 FlannelIsUp Flannel is running on this node

MemoryPressure False Sat, 05 Nov 2022 17:07:16 +0800 Sat, 05 Nov 2022 09:26:33 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Sat, 05 Nov 2022 17:07:16 +0800 Sat, 05 Nov 2022 09:26:33 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Sat, 05 Nov 2022 17:07:16 +0800 Sat, 05 Nov 2022 09:26:33 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready True Sat, 05 Nov 2022 17:07:16 +0800 Sat, 05 Nov 2022 09:31:14 +0800 KubeletReady kubelet is posting ready status

Addresses:

InternalIP: 192.168.159.13

Hostname: node01

Capacity:

cpu: 2

ephemeral-storage: 15349Mi

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3861508Ki

pods: 110

Allocatable:

cpu: 2

ephemeral-storage: 14485133698

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3759108Ki

pods: 110

System Info:

Machine ID: 737b63dadf104cdaa76db981253e1baa

System UUID: F0114D56-06E7-3FC5-4619-BAD443CE9F72

Boot ID: ca48a336-8e26-4d35-bd9b-2f403926c2b5

Kernel Version: 3.10.0-957.el7.x86_64

OS Image: CentOS Linux 7 (Core)

Operating System: linux

Architecture: amd64

Container Runtime Version: docker://20.10.21

Kubelet Version: v1.21.3

Kube-Proxy Version: v1.21.3

PodCIDR: 10.150.2.0/24

PodCIDRs: 10.150.2.0/24

Non-terminated Pods: (6 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

default nginx-6fc77dcb7c-dq86p 0 (0%) 0 (0%) 0 (0%) 0 (0%) 45m

default nginx-6fc77dcb7c-gv9qq 0 (0%) 0 (0%) 0 (0%) 0 (0%) 45m

default nginx-6fc77dcb7c-hkcbb 0 (0%) 0 (0%) 0 (0%) 0 (0%) 49m

kube-flannel kube-flannel-ds-nj4vl 100m (5%) 100m (5%) 50Mi (1%) 50Mi (1%) 7h40m

kube-system coredns-6f6b8cc4f6-lg6hc 100m (5%) 0 (0%) 70Mi (1%) 170Mi (4%) 7h46m

kube-system kube-proxy-xd4lz 0 (0%) 0 (0%) 0 (0%) 0 (0%) 7h44m

Allocated resources:

(Total limits may be over 100 percent, i.e., overcommitted.)

Resource Requests Limits

-------- -------- ------

cpu 200m (10%) 100m (5%)

memory 120Mi (3%) 220Mi (5%)

ephemeral-storage 0 (0%) 0 (0%)

hugepages-1Gi 0 (0%) 0 (0%)

hugepages-2Mi 0 (0%) 0 (0%)

Events:

16.3. Show details of all pods (in the current namespace): kubectl describe pods

[root@master ~]# kubectl describe pods

16.4. Show details of a Deployment: kubectl describe deploy/nginx

[root@master ~]# kubectl describe deploy/nginx

Name: nginx

Namespace: default

CreationTimestamp: Sat, 05 Nov 2022 16:06:47 +0800

Labels: app=nginx

Annotations: deployment.kubernetes.io/revision: 3

Selector: app=nginx

Replicas: 5 desired | 5 updated | 5 total | 5 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx:1.21

Port: 80/TCP

Host Port: 0/TCP

Environment:

Mounts:

Volumes:

Conditions:

Type Status Reason

---- ------ ------

Progressing True NewReplicaSetAvailable

Available True MinimumReplicasAvailable

OldReplicaSets:

NewReplicaSet: nginx-6fc77dcb7c (5/5 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 56m deployment-controller Scaled up replica set nginx-6fc77dcb7c to 1

Normal ScalingReplicaSet 56m deployment-controller Scaled down replica set nginx-897f8f586 to 2

Normal ScalingReplicaSet 52m (x5 over 56m) deployment-controller (combined from similar events): Scaled up replica set nginx-6fc77dcb7c to 5

16.5. Show details of all Services: kubectl describe svc

[root@master ~]# kubectl describe svc

Name: kubernetes

Namespace: default

Labels: component=apiserver

provider=kubernetes

Annotations:

Selector:

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.125.0.1

IPs: 10.125.0.1

Port: https 443/TCP

TargetPort: 6443/TCP

Endpoints: 192.168.159.12:6443

Session Affinity: None

Events:

Name: nginx-service

Namespace: default

Labels: app=nginx

Annotations:

Selector: app=nginx

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.125.204.159

IPs: 10.125.204.159

Port: 80/TCP

TargetPort: 80/TCP

NodePort: 30307/TCP

Endpoints: 10.150.1.10:80,10.150.1.11:80,10.150.2.11:80 + 2 more...

Session Affinity: None

External Traffic Policy: Cluster

Events:

16.6. Show details of a specific Service: kubectl describe svc nginx-service

[root@master ~]# kubectl describe svc nginx-service

Name: nginx-service

Namespace: default

Labels: app=nginx

Annotations:

Selector: app=nginx

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.125.204.159

IPs: 10.125.204.159

Port: 80/TCP

TargetPort: 80/TCP

NodePort: 30307/TCP

Endpoints: 10.150.1.10:80,10.150.1.11:80,10.150.2.11:80 + 2 more...

Session Affinity: None

External Traffic Policy: Cluster

Events:

16.7. Show details of all namespaces: kubectl describe ns

[root@master ~]# kubectl describe ns

Name: ceshi

Labels: kubernetes.io/metadata.name=ceshi

Annotations:

Status: Active

No resource quota.

No LimitRange resource.

Name: default

Labels: kubernetes.io/metadata.name=default

Annotations:

Status: Active

No resource quota.

No LimitRange resource.

Name: kube-flannel

Labels: kubernetes.io/metadata.name=kube-flannel

pod-security.kubernetes.io/enforce=privileged

Annotations:

Status: Active

No resource quota.

No LimitRange resource.

Name: kube-node-lease

Labels: kubernetes.io/metadata.name=kube-node-lease

Annotations:

Status: Active

No resource quota.

No LimitRange resource.

Name: kube-public

Labels: kubernetes.io/metadata.name=kube-public

Annotations:

Status: Active

No resource quota.

No LimitRange resource.

Name: kube-system

Labels: kubernetes.io/metadata.name=kube-system

Annotations:

Status: Active

No resource quota.

No LimitRange resource.

16.8. Show details of a specific namespace: kubectl describe ns kube-public

[root@master ~]# kubectl describe ns public

Error from server (NotFound): namespaces "public" not found

[root@master ~]# kubectl describe ns kube-public

Name: kube-public

Labels: kubernetes.io/metadata.name=kube-public

Annotations:

Status: Active

No resource quota.

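No LimitRange resource.

Troubleshooting example. Symptom: the cluster has 3 worker nodes and 1 master. 1. A pod is created, but the pod fails to be created.

Check the pod's events:

kubectl describe po pod_name -n namespace

Events:

The scheduler completed its work and exited normally, yet the pod failed to be created. One possible cause is a resource-limit problem.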
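To confirm a resource shortage, two checks can be sketched as below (helper names hypothetical). The first shows the pod's own events, oldest first. The second shows the Allocated resources section of each node, the same block shown in section 16.2. A pod whose requests fit on no node typically stays Pending, with a FailedScheduling event that mentions Insufficient cpu or Insufficient memory.

```shell
# Hypothetical helper: events for one pod, oldest first, e.g. pod_events web-1 default
pod_events() {
  kubectl get events -n "$2" --field-selector "involvedObject.name=$1" --sort-by=.metadata.creationTimestamp
}

# Hypothetical helper: requests/limits already allocated on every node
# (the header line plus the 8 lines after it)
node_allocations() {
  kubectl describe nodes | grep -A 8 "Allocated resources"
}
```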