Python深度学习06——Keras循环神经网络实现文本分类

参考书目:陈允杰.TensorFlow与Keras——Python深度学习应用实战.北京:中国水利水电出版社,2021

本系列基本不讲数学原理,只从代码角度去让读者们利用最简洁的Python代码实现深度学习方法。

接着上一节用循环神经网络做回归,本次使用循环神经网络处理文本数据,自然语言。实现分类问题,使用路透社数据集,做文本的情感分类。由于Keras自带该数据集,处理一下可以直接使用(如果想学怎么把纯文本变为数据矩阵,关注下一章的内容)

载入路透社数据集

from keras.datasets import reuters

# 载入 Reuters 数据集, 如果是第一次载入会自行下载数据集

top_words = 10000

(X_train, Y_train), (X_test, Y_test) = reuters.load_data(num_words=top_words)

# 形状

print("X_train.shape: ", X_train.shape)

print("Y_train.shape: ", Y_train.shape)

print("X_test.shape: ", X_test.shape)

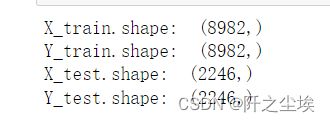

print("Y_test.shape: ", Y_test.shape)可以看到形状不是矩阵,因为还没处理。训练集8982条,测试集2246条。

打印其中一条看看

#显示 Numpy 阵列内容

print(X_train[0])

print(Y_train[0]) # 标签数据很多数字,每个数字都对应一个单词,这是单词索引,查看有多少单词

# 最大的单字索引值

max_index = max(max(sequence) for sequence in X_train)

print("Max Index: ", max_index)![]() 因为刚刚开始是设定的top_words = 10000,所以不超过1w个词。

因为刚刚开始是设定的top_words = 10000,所以不超过1w个词。

建立解码字典,把索引变为单词

# 建立新闻的解码字典

word_index = reuters.get_word_index()

we_index = word_index["social"]

print("'social' index:", we_index)social这个词对应2300.

还可以翻转索引变回单词:

decode_word_map = dict([(value, key) for (key, value)in word_index.items()])

print(decode_word_map[we_index])解码显示第一条新闻是什么

# 解码显示新闻内容

decoded_indices = [decode_word_map.get(i-3, "?")for i in X_train[0]]

print(decoded_indices)

decoded_news = " ".join(decoded_indices)

print(decoded_news)下面将每条新闻预处理,变为张量

import numpy as np

from keras.preprocessing import sequence

from tensorflow.keras.utils import to_categorical

seed = 10

np.random.seed(seed) # 指定乱数种子

# 数据预处理

max_words = 200

X_train = sequence.pad_sequences(X_train, maxlen=max_words)

X_test = sequence.pad_sequences(X_test, maxlen=max_words)

print("X_train.shape: ", X_train.shape)

print("X_test.shape: ", X_test.shape)

# One-hot编码

Y_train = to_categorical(Y_train, 46)

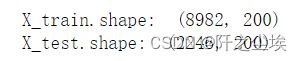

Y_test = to_categorical(Y_test, 46)将X和y都变味数据矩阵,X为200维,y是46维(46分类问题)

MLP实现路透社新闻主题分类

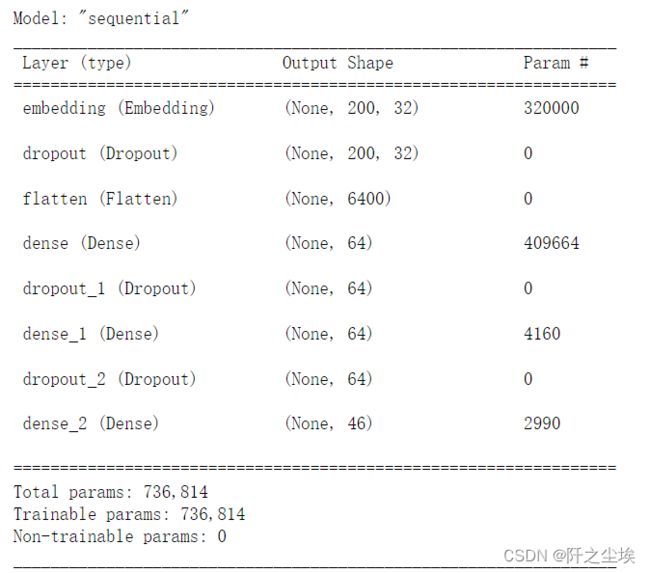

定义模型

from keras.models import Sequential

from keras.layers import Dense, Embedding, Dropout, Flatten

model = Sequential()

model.add(Embedding(top_words, 32, input_length=max_words))

model.add(Dropout(0.75))

model.add(Flatten())

model.add(Dense(64, activation="relu"))

model.add(Dropout(0.25))

model.add(Dense(64, activation="relu"))

model.add(Dropout(0.25))

model.add(Dense(46, activation="softmax"))

model.summary() # 显示模型摘要资讯 编译和训练:

# 编译模型

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

#训练模型

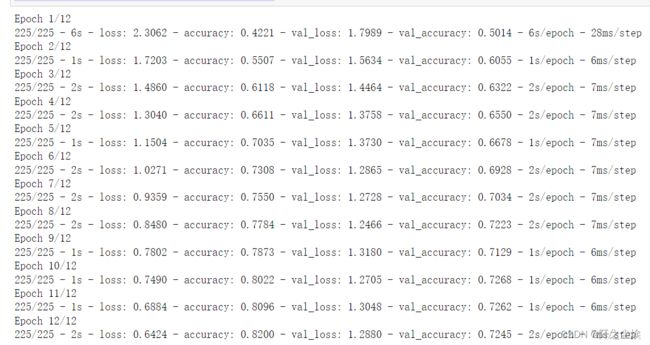

history = model.fit(X_train, Y_train, validation_split=0.2,epochs=12, batch_size=32, verbose=2)评估模型准确率

# 评估模型

loss, accuracy = model.evaluate(X_test, Y_test)

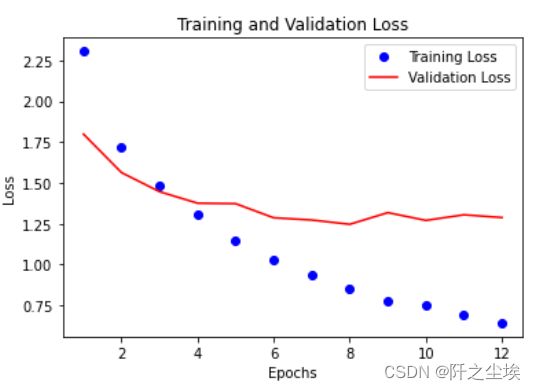

print("测试数据集的准确度 = {:.2f}".format(accuracy))画出损失图

# 显示训练和验证损失图表

import matplotlib.pyplot as plt

loss = history.history["loss"]

epochs = range(1, len(loss)+1)

val_loss = history.history["val_loss"]

plt.plot(epochs, loss, "bo", label="Training Loss")

plt.plot(epochs, val_loss, "r", label="Validation Loss")

plt.title("Training and Validation Loss")

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.legend()

plt.show()

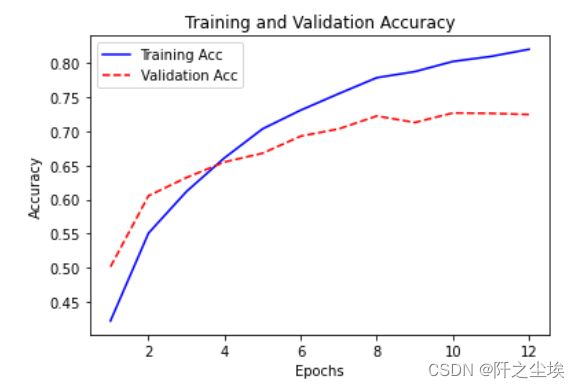

画出准确率图

# 显示训练和验证准确度

acc = history.history["accuracy"]

epochs = range(1, len(acc)+1)

val_acc = history.history["val_accuracy"]

plt.plot(epochs, acc, "b-", label="Training Acc")

plt.plot(epochs, val_acc, "r--", label="Validation Acc")

plt.title("Training and Validation Accuracy")

plt.xlabel("Epochs")

plt.ylabel("Accuracy")

plt.legend()

plt.show()

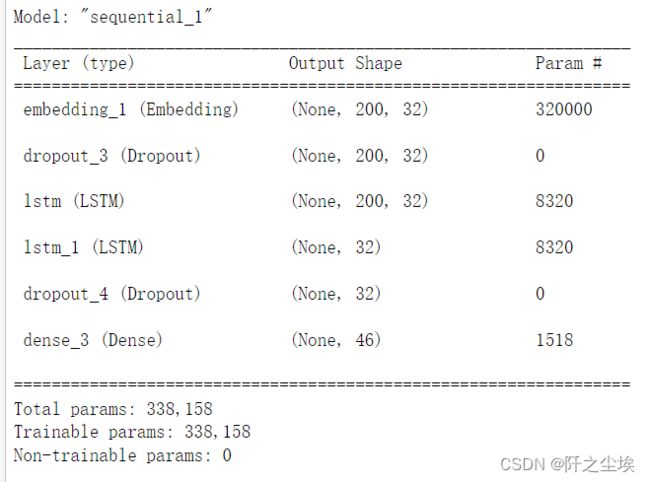

LSTM实现路透社新闻主题分类

定义模型,(若是想用其他循环神经网络,RNN,GRU也是一样的,LSTM改成GRU就行)

from keras.layers import Embedding, Dropout, LSTM, Dense

# 定义模型

model = Sequential()

model.add(Embedding(top_words, 32, input_length=max_words))

model.add(Dropout(0.75))

model.add(LSTM(32, return_sequences=True))

model.add(LSTM(32))

model.add(Dropout(0.5))

model.add(Dense(46, activation="softmax"))

# 编译模型

model.compile(loss="categorical_crossentropy", optimizer="rmsprop",

metrics=["accuracy"])

model.summary() # 显示模型摘要信息同样也是训练,评估

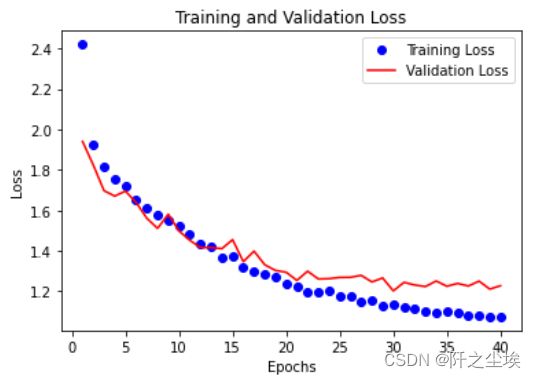

# 训练模型

history = model.fit(X_train, Y_train, validation_split=0.2, epochs=40, batch_size=32, verbose=2)

#评估模型

loss, accuracy = model.evaluate(X_test, Y_test)

print("测试数据集的准确度 = {:.2f}".format(accuracy))画损失和准确率图

# 显示训练和验证损失图表

import matplotlib.pyplot as plt

loss = history.history["loss"]

epochs = range(1, len(loss)+1)

val_loss = history.history["val_loss"]

plt.plot(epochs, loss, "bo", label="Training Loss")

plt.plot(epochs, val_loss, "r", label="Validation Loss")

plt.title("Training and Validation Loss")

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.legend()

plt.show()

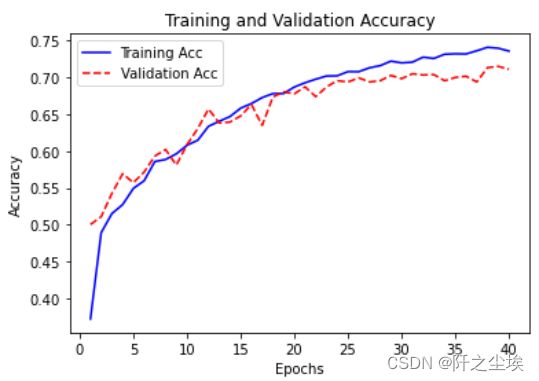

#显示训练和验证准确度

acc = history.history["accuracy"]

epochs = range(1, len(acc)+1)

val_acc = history.history["val_accuracy"]

plt.plot(epochs, acc, "b-", label="Training Acc")

plt.plot(epochs, val_acc, "r--", label="Validation Acc")

plt.title("Training and Validation Accuracy")

plt.xlabel("Epochs")

plt.ylabel("Accuracy")

plt.legend()

plt.show()也可以使用MLP、CNN、LSTM堆叠等网络,不停调参试试,说不定准确率会更高。下一章会仔细介绍纯文本,就是文字,怎么变成可以运算的张量,然后将网络都组合起来复习一下。