Hudi Series 9: Working with Hudi Tables in Flink SQL

Table of Contents

- 1. Preparation

- 2. Inserting Data

- 3. Querying Data

- 4. Updating Data

- 5. Streaming Query

- 6. Deleting Data

- References

1. Preparation

# Start a YARN session in detached mode (-d)

/home/flink-1.15.2/bin/yarn-session.sh -d

# Start the Flink SQL client in yarn-session mode

/home/flink-1.15.2/bin/sql-client.sh embedded -s yarn-session

2. Inserting Data

Code:

-- sets up the result mode to tableau to show the results directly in the CLI

set sql-client.execution.result-mode = tableau;

CREATE TABLE t2(

uuid VARCHAR(20) PRIMARY KEY NOT ENFORCED,

name VARCHAR(10),

age INT,

ts TIMESTAMP(3),

`partition` VARCHAR(20)

)

PARTITIONED BY (`partition`)

WITH (

'connector' = 'hudi',

'path' = 'hdfs://hp5:8020/user/hudi_data/t2',

'table.type' = 'MERGE_ON_READ' -- creates a MERGE_ON_READ table; the default is COPY_ON_WRITE

);
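The DDL above leaves two behaviors implicit: the PRIMARY KEY column (uuid) becomes the Hudi record key, and when two writes carry the same key, Hudi keeps the one with the larger precombine value, which defaults to a column named ts. A minimal sketch that spells these defaults out, assuming Hudi 0.12's Flink options precombine.field (default ts) and write.operation (default upsert); the table name t2_explicit and its path are hypothetical.

```sql
-- Hypothetical table: same schema as t2, with the usual defaults written out
CREATE TABLE t2_explicit(
  uuid VARCHAR(20) PRIMARY KEY NOT ENFORCED, -- used as the Hudi record key
  name VARCHAR(10),
  age INT,
  ts TIMESTAMP(3),                           -- used as the precombine (ordering) field
  `partition` VARCHAR(20)
)
PARTITIONED BY (`partition`)
WITH (
  'connector' = 'hudi',
  'path' = 'hdfs://hp5:8020/user/hudi_data/t2_explicit', -- hypothetical path
  'table.type' = 'MERGE_ON_READ',
  'precombine.field' = 'ts',    -- default value, shown explicitly
  'write.operation' = 'upsert'  -- default value, shown explicitly
);
```

Because upsert is the default write operation, running INSERT INTO again with an existing uuid updates that record instead of adding a duplicate, which is the behavior section 4 relies on.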

-- insert data using values

INSERT INTO t2 VALUES

('id1','Danny',23,TIMESTAMP '1970-01-01 00:00:01','par1'),

('id2','Stephen',33,TIMESTAMP '1970-01-01 00:00:02','par1'),

('id3','Julian',53,TIMESTAMP '1970-01-01 00:00:03','par2'),

('id4','Fabian',31,TIMESTAMP '1970-01-01 00:00:04','par2'),

('id5','Sophia',18,TIMESTAMP '1970-01-01 00:00:05','par3'),

('id6','Emma',20,TIMESTAMP '1970-01-01 00:00:06','par3'),

('id7','Bob',44,TIMESTAMP '1970-01-01 00:00:07','par4'),

('id8','Han',56,TIMESTAMP '1970-01-01 00:00:08','par4');

3. Querying Data

select * from t2;

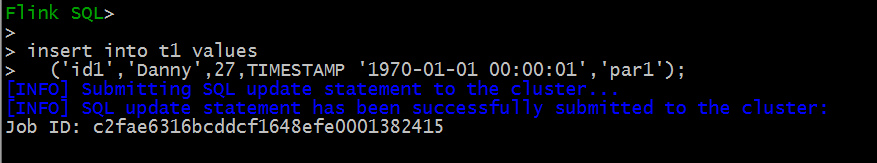
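Because t2 is partitioned by `partition`, a filter on that column should let the Hudi source read only the matching partition instead of the whole table. A couple of follow-up queries against the eight rows inserted above:

```sql
-- Only the records in partition par1 (id1 and id2)
SELECT * FROM t2 WHERE `partition` = 'par1';

-- Row count per partition; each of par1..par4 received two rows in the insert above
SELECT `partition`, COUNT(*) AS cnt FROM t2 GROUP BY `partition`;
```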

4. Updating Data

Updating data works like inserting it: inserting a row whose primary key already exists updates that record.

-- this would update the record with key 'id1'

insert into t2 values

('id1','Danny',27,TIMESTAMP '1970-01-01 00:00:01','par1');

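Note that the save mode is now append. In general, always use append mode unless you are creating the table for the first time. Querying the data again will now show the updated record. Each write operation generates a new commit, identified by a timestamp. For the same _hoodie_record_key values, look for changes in the _hoodie_commit_time and age fields compared with the previous commit.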
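A quick way to check the update, assuming the upsert above has committed, is to look the key up directly. The second statement is a hypothetical extra write that mixes an update of an existing key (id2) with a brand-new key (id9), to illustrate that a single INSERT INTO can do both.

```sql
-- id1 should now show age 27, and still appear only once
SELECT * FROM t2 WHERE uuid = 'id1';

-- Hypothetical rows: id2 already exists (update, kept in its original partition par1),
-- id9 does not exist yet (insert)
INSERT INTO t2 VALUES
  ('id2','Stephen',34,TIMESTAMP '1970-01-01 00:00:10','par1'),
  ('id9','Alice',25,TIMESTAMP '1970-01-01 00:00:11','par1');
```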

5. Streaming Query

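Hudi Flink can also provide a stream of the records that changed since a given commit timestamp. This is done with Hudi's streaming query by supplying the start time from which changes should be streamed. If we want all changes after the given commit (the common case), there is no need to specify an end commit (read.end-commit). In the DDL below, ${path} is a placeholder for the storage path of the Hudi table to read.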

CREATE TABLE t1(

uuid VARCHAR(20) PRIMARY KEY NOT ENFORCED,

name VARCHAR(10),

age INT,

ts TIMESTAMP(3),

`partition` VARCHAR(20)

)

PARTITIONED BY (`partition`)

WITH (

'connector' = 'hudi',

'path' = '${path}',

'table.type' = 'MERGE_ON_READ',

'read.streaming.enabled' = 'true', -- this option enables streaming read

'read.start-commit' = '20210316134557', -- specifies the start commit instant time

'read.streaming.check-interval' = '4' -- interval in seconds for checking for new source commits, default 60

);

-- Then query the table in stream mode

select * from t1;

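This returns all changes that happened after the read.start-commit commit. What makes this feature unique is that it lets you author streaming pipelines on top of streaming or batch data sources.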
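Two variations on the t1 DDL, sketched under the assumption of Hudi 0.12's Flink read options: 'read.start-commit' = 'earliest' streams from the first commit in the timeline rather than a fixed instant, and setting 'read.end-commit' without streaming turns the read into a bounded (batch) incremental query over a commit range, which the Hudi docs describe as inclusive at both ends. The table names t1_from_earliest and t1_range and the end instant are hypothetical; ${path} is the same placeholder as above.

```sql
-- Hypothetical table: stream every change from the start of the timeline
CREATE TABLE t1_from_earliest(
  uuid VARCHAR(20) PRIMARY KEY NOT ENFORCED,
  name VARCHAR(10),
  age INT,
  ts TIMESTAMP(3),
  `partition` VARCHAR(20)
)
PARTITIONED BY (`partition`)
WITH (
  'connector' = 'hudi',
  'path' = '${path}',
  'table.type' = 'MERGE_ON_READ',
  'read.streaming.enabled' = 'true',
  'read.start-commit' = 'earliest'  -- instead of a fixed instant time
  -- check interval not set, so the 60 s default applies
);

-- Hypothetical table: bounded read of the commits between two instants
CREATE TABLE t1_range(
  uuid VARCHAR(20) PRIMARY KEY NOT ENFORCED,
  name VARCHAR(10),
  age INT,
  ts TIMESTAMP(3),
  `partition` VARCHAR(20)
)
PARTITIONED BY (`partition`)
WITH (
  'connector' = 'hudi',
  'path' = '${path}',
  'table.type' = 'MERGE_ON_READ',
  'read.start-commit' = '20210316134557', -- start instant from the example above
  'read.end-commit' = '20210316140000'    -- hypothetical end instant
);

SELECT * FROM t1_from_earliest; -- keeps running and prints new changes as they arrive
SELECT * FROM t1_range;         -- finishes once the commit range has been read
```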

6. Deleting Data

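When consuming data in a streaming query, the Hudi Flink source can also accept changelogs from the underlying data source and then apply UPDATE and DELETE at the row level. You can then sync a near-real-time snapshot of all kinds of RDBMS tables onto Hudi.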
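As far as I know, Flink 1.15 SQL has no DELETE FROM statement, so deletes reach a Hudi table as changelog rows from upstream. A minimal sketch, assuming a Kafka topic that carries Debezium JSON change events for a source table with the same columns as t2, and the Flink Kafka connector jar on the classpath; the table name cdc_source, the topic, the broker address and the consumer group are all hypothetical. Hudi's streaming writer commits on Flink checkpoints, so checkpointing is enabled first.

```sql
-- Streaming Hudi writes commit on checkpoints; 30 s is an arbitrary choice
SET 'execution.checkpointing.interval' = '30s';

-- Hypothetical CDC source: Debezium change events stored in Kafka
CREATE TABLE cdc_source(
  uuid VARCHAR(20),
  name VARCHAR(10),
  age INT,
  ts TIMESTAMP(3),
  `partition` VARCHAR(20),
  PRIMARY KEY (uuid) NOT ENFORCED
) WITH (
  'connector' = 'kafka',
  'topic' = 'db.t2_changes',                     -- hypothetical topic
  'properties.bootstrap.servers' = 'kafka:9092', -- hypothetical broker
  'properties.group.id' = 'hudi-cdc-demo',       -- hypothetical consumer group
  'scan.startup.mode' = 'earliest-offset',
  'format' = 'debezium-json'
);

-- Inserts and updates become upserts in t2; Debezium delete events become row deletes
INSERT INTO t2 SELECT * FROM cdc_source;
```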

References:

- https://hudi.apache.org/docs/0.12.0/flink-quick-start-guide