K8S Cluster Deployment: Study Notes on the Bilibili Videos by linux超哥

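These notes were taken while working through the videos of Bilibili uploader linux超哥. If you spot mistakes or omissions and are in a good mood, feel free to point them out.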

https://www.bilibili.com/video/BV1yP4y1x7Vj?spm_id_from=333.999.0.0

Machine preparation:

Virtual machines with 2 CPU cores and 4 GB of RAM (2C4G) each

| Hostname | Node IP | Role | Components |
|---|---|---|---|
| k8s-master01 | 192.168.131.128 | master01 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubectl, kubeadm, kubelet, kube-proxy, flannel |
| k8s-node01 | 192.168.131.129 | node01 | kubectl, kubeadm, kubelet, kube-proxy, flannel |
| k8s-node02 | 192.168.131.130 | node02 | kubectl, kubeadm, kubelet, kube-proxy, flannel |

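Create the VMs and complete the basic configuration (all nodes)

Set each machine's hostname, for example `hostnamectl set-hostname k8s-master01`

Configure hosts resolution:

```bash
cat >> /etc/hosts << EOF
192.168.131.128 k8s-master01
192.168.131.129 k8s-node01
192.168.131.130 k8s-node02
EOF
```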
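If you re-run `cat >> /etc/hosts`, it appends the same three lines again. Below is a minimal sketch of an idempotent alternative. It assumes bash and GNU grep. `add_host_entry` is a hypothetical helper name, not part of any tool.

```bash
# Hypothetical helper: append "IP hostname" to a hosts-style file only when that
# exact line is missing, so running the setup twice leaves no duplicates.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  local line="${ip} ${name}"
  # -x matches the whole line, -F treats dots in the IP literally
  if ! grep -qxF "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Usage on every node (as root):
#   add_host_entry /etc/hosts 192.168.131.128 k8s-master01
#   add_host_entry /etc/hosts 192.168.131.129 k8s-node01
#   add_host_entry /etc/hosts 192.168.131.130 k8s-node02
```

Passing the file path as a parameter lets you try the function on a copy before touching the real `/etc/hosts`.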

Adjust the firewall rule. If the FORWARD chain's policy rejects traffic, the cluster nodes cannot reach each other.

```bash
iptables -P FORWARD ACCEPT
```

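Disable swap. This step is important: kubeadm refuses to run while swap is enabled.

```bash
swapoff -a
```

Keep the swap partition from being mounted again at boot:

```bash
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
```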
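The `sed` one-liner above only matches `swap` surrounded by single spaces. An fstab entry whose fields are separated by tabs would slip through. Below is a sketch of a slightly more defensive version, plus a check against the kernel's swap table. It assumes GNU sed, and both function names are hypothetical.

```bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# An entry qualifies when it is not already a comment and its third field
# (the filesystem type) is exactly "swap"; spaces or tabs may separate fields.
comment_swap_entries() {
  local fstab=${1:-/etc/fstab}
  sed -ri '/^[[:space:]]*[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/s/^/#/' "$fstab"
}

# Hypothetical helper: succeed (exit 0) if the swaps table lists any device.
# /proc/swaps has one header line plus one line per active swap area.
swap_is_active() {
  local swaps=${1:-/proc/swaps}
  [ "$(awk 'NR > 1' "$swaps" | grep -c .)" -gt 0 ]
}

# Usage on every node:
#   swapoff -a && comment_swap_entries && ! swap_is_active && echo "swap is off"
```

Running this check on all three nodes up front would have caught the swap error that shows up later during `kubeadm init` and `kubeadm join`.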

Disable SELinux and firewalld:

```bash
sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config
setenforce 0
systemctl disable firewalld && systemctl stop firewalld
```

Enable kernel forwarding of (bridged) traffic:

```bash
cat << EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward=1
vm.max_map_count=262144
EOF
```

Load the br_netfilter module and apply the kernel parameters:

```bash
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf
```
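To confirm that the parameters actually took effect, you can read each key back from `/proc/sys`, where the dots in a key become slashes. Below is a hedged sketch with hypothetical helper names. It checks only the three networking keys and leaves out `vm.max_map_count`.

```bash
# Hypothetical helper: map a sysctl key to its /proc/sys file
# (net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward).
sysctl_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr . /)"
}

# Hypothetical helper: report any of the three networking keys that is not 1.
# The net.bridge.* files only exist after "modprobe br_netfilter".
check_k8s_sysctls() {
  local key have rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    have=$(cat "$(sysctl_path "$key")" 2>/dev/null || echo missing)
    if [ "$have" != 1 ]; then
      echo "$key: expected 1, got $have" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage on every node:
#   check_k8s_sysctls && echo "sysctl settings are active"
```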

Download Aliyun's base repo:

```bash
curl -o /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo
```

Download Aliyun's docker repo:

```bash
curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

Configure the K8S repo:

```bash
cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

Clear the yum cache and rebuild it:

```bash
yum clean all && yum makecache
```

Install Docker (all nodes)

List the available docker-ce versions:

```bash
yum list docker-ce --showduplicates | sort -r
```

Install it:

```bash
yum install docker-ce -y
```

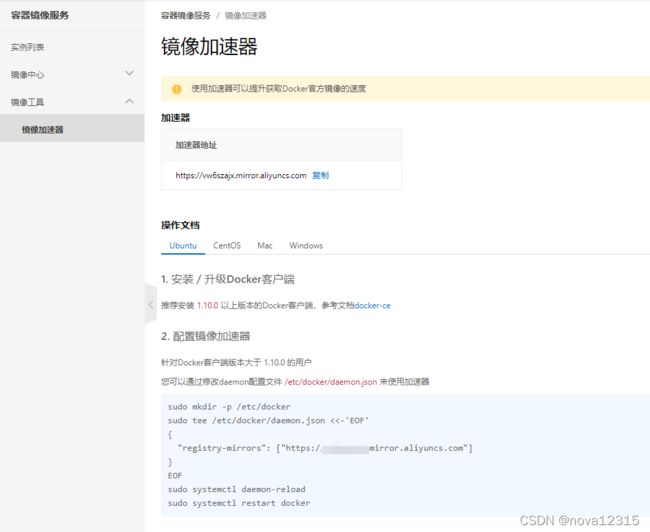

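Configure a Docker registry mirror. The mirror address below was generated in the author's own Aliyun console, so replace it with your own. JSON does not allow comments, so keep the file free of them.

```bash
mkdir -p /etc/docker
tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://vw6szajx.mirror.aliyuncs.com"]
}
EOF
```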

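Background on registry mirrors:

- What is a registry mirror?

Docker images are pulled from a registry, and downloading from Docker Hub is very slow in mainland China. Aliyun therefore offers a mirror service that effectively keeps a copy of Docker Hub inside China, so users there can download quickly.

In other words, the mirror is a relay address that can be used in place of Docker Hub for pulls.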

Start Docker:

```bash
systemctl enable docker && systemctl start docker
```

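Install k8s-master01

Install kubeadm, kubelet and kubectl:

- kubeadm: the command that initializes (installs) the cluster
- kubelet: runs on every node in the cluster, starts Pods and containers, and manages Docker
- kubectl: the command-line tool for talking to the cluster

Install them on all nodes:

```bash
yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
```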

Check the kubeadm version:

[root@k8s-master01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.0", GitCommit:"c2b5237ccd9c0f1d600d3072634ca66cefdf272f", GitTreeState:"clean", BuildDate:"2021-08-04T18:02:08Z", GoVersion:"go1.16.6", Compiler:"gc", Platform:"linux/amd64"}

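- Enable kubelet at boot. kubelet manages containers: it pulls images and creates, starts and stops containers.
- This way, as soon as the machine boots and the kubelet service is up, kubelet automatically manages the pods (containers).

[root@k8s-master01 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.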

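Initialize the configuration file (master node only)

Generate the configuration file.

Create a directory for the yaml files:

```bash
mkdir ~/k8s-install && cd ~/k8s-install
```

Generate a default yaml file:

```bash
kubeadm config print init-defaults > kubeadm.yaml
```

The file content is as follows. The comments mark the lines that need changing.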

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.131.128  # default is 1.2.3.4; set it to k8s-master01's internal IP
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master01  # the node name; the default is "node"
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers  # default is k8s.gcr.io; use this mirror reachable from China
kind: ClusterConfiguration
kubernetesVersion: 1.22.0  # must match your installed version; adjust it if they differ
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16  # added line: the pod network CIDR (matches flannel's default network)
  serviceSubnet: 10.96.0.0/12
scheduler: {}
```

Note that in YAML a comment must be preceded by a space. Writing `1.2.3.4#comment` would make the comment part of the value.
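Instead of editing the file by hand in vim, you can script the same changes. The sketch below assumes GNU sed and the default layout printed by `kubeadm config print init-defaults` for v1beta3. `patch_kubeadm_yaml` is a hypothetical name, and the IP, node name, mirror and pod subnet are this article's values.

```bash
# Hypothetical helper: apply this article's edits to the output of
# "kubeadm config print init-defaults" (v1beta3 layout). Requires GNU sed:
# \s and \n in the replacement are GNU extensions.
patch_kubeadm_yaml() {
  local file=$1 master_ip=$2 node_name=$3
  local repo=registry.aliyuncs.com/google_containers
  local pod_subnet=10.244.0.0/16
  sed -i \
    -e "s#^\(\s*advertiseAddress:\).*#\1 ${master_ip}#" \
    -e "s#^\(\s*name:\) node\$#\1 ${node_name}#" \
    -e "s#^imageRepository:.*#imageRepository: ${repo}#" \
    "$file"
  # Insert podSubnet just above serviceSubnet with the same indentation,
  # but only if it is not there yet, so a second run changes nothing.
  if ! grep -q 'podSubnet:' "$file"; then
    sed -i "s#^\(\s*\)serviceSubnet:#\1podSubnet: ${pod_subnet}\n&#" "$file"
  fi
}

# Usage on k8s-master01, inside ~/k8s-install:
#   kubeadm config print init-defaults > kubeadm.yaml
#   patch_kubeadm_yaml kubeadm.yaml 192.168.131.128 k8s-master01
```

The function leaves `kubernetesVersion` alone. Compare that field with `kubeadm version` yourself.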

Pull the images in advance:

```bash
kubeadm config images pull --config kubeadm.yaml
```

It failed:

[root@k8s-master01 k8s-install]# kubeadm config print init-defaults> kubeadm.yaml

[root@k8s-master01 k8s-install]# vim kubeadm.yaml

[root@k8s-master01 k8s-install]# kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.22.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.5

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.0-0

failed to pull image "registry.aliyuncs.com/google_containers/coredns:v1.8.4": output: Error response from daemon: manifest for registry.aliyuncs.com/google_containers/coredns:v1.8.4 not found: manifest unknown: manifest unknown   # this line says the coredns image could not be pulled

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

Take a roundabout route instead:

1. Pull the image directly from Docker Hub with docker

[root@k8s-master01 k8s-install]# docker pull coredns/coredns:1.8.4

1.8.4: Pulling from coredns/coredns

c6568d217a00: Pull complete

bc38a22c706b: Pull complete

Digest: sha256:6e5a02c21641597998b4be7cb5eb1e7b02c0d8d23cce4dd09f4682d463798890

Status: Downloaded newer image for coredns/coredns:1.8.4

docker.io/coredns/coredns:1.8.4

2. Retag the image with the name kubeadm expects

[root@k8s-master01 k8s-install]# docker tag coredns/coredns:1.8.4 registry.aliyuncs.com/google_containers/coredns:v1.8.4

[root@k8s-master01 k8s-install]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.22.0 838d692cbe28 8 days ago 128MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.22.0 5344f96781f4 8 days ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.22.0 3db3d153007f 8 days ago 52.7MB

registry.aliyuncs.com/google_containers/kube-proxy v1.22.0 bbad1636b30d 8 days ago 104MB

registry.aliyuncs.com/google_containers/etcd 3.5.0-0 004811815584 8 weeks ago 295MB

coredns/coredns 1.8.4 8d147537fb7d 2 months ago 47.6MB

registry.aliyuncs.com/google_containers/coredns v1.8.4 8d147537fb7d 2 months ago 47.6MB

registry.aliyuncs.com/google_containers/pause 3.5 ed210e3e4a5b 4 months ago 683kB
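You can wrap the two steps above in a small function. The sketch below assumes that the Docker Hub repository `coredns/coredns` tags the same version without the leading `v`, which holds for the 1.8.4 case here. The helper names are hypothetical.

```bash
# Hypothetical helper: given the coredns image name kubeadm wants, print the
# Docker Hub name. Assumes Docker Hub's coredns/coredns tags are the same version
# without the leading "v" (v1.8.4 -> 1.8.4), as in the case above.
dockerhub_coredns_ref() {
  local target=$1
  local tag=${target##*:}          # text after the last ":" is the tag
  printf 'coredns/coredns:%s\n' "${tag#v}"
}

# Hypothetical helper: pull from Docker Hub, then retag to the expected name.
pull_coredns_via_dockerhub() {
  local target=$1 src
  [ -n "$target" ] || return 1
  src=$(dockerhub_coredns_ref "$target")
  docker pull "$src" && docker tag "$src" "$target"
}

# Usage on k8s-master01, inside ~/k8s-install:
#   pull_coredns_via_dockerhub "$(kubeadm config images list --config kubeadm.yaml | grep coredns)"
```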

Initialize the cluster:

```bash
kubeadm init --config kubeadm.yaml
```

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap   # swap is still enabled, which kubeadm does not allow

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

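This error occurred because swap had not actually been turned off during the earlier base configuration.

Troubleshooting

Run this again:

```bash
swapoff -a
```

Then it failed again: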

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@k8s-master01 k8s-install]# swapoff -a

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local node] and IPs [10.96.0.1 192.168.131.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

- I searched for a long time, but neither `systemctl status kubelet` nor `journalctl -xeu kubelet` showed the specific cause.

- Watching the system log with `tailf /var/log/messages | grep kube` revealed:

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842063 13772 container_manager_linux.go:320] "Creating device plugin manager" devicePluginEnabled=true

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842120 13772 state_mem.go:36] "Initialized new in-memory state store"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842196 13772 kubelet.go:314] "Using dockershim is deprecated, please consider using a full-fledged CRI implementation"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842230 13772 client.go:78] "Connecting to docker on the dockerEndpoint" endpoint="unix:///var/run/docker.sock"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.842248 13772 client.go:97] "Start docker client with request timeout" timeout="2m0s"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.861829 13772 docker_service.go:566] "Hairpin mode is set but kubenet is not enabled, falling back to HairpinVeth" hairpinMode=promiscuous-bridge

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.861865 13772 docker_service.go:242] "Hairpin mode is set" hairpinMode=hairpin-veth

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.861964 13772 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.866488 13772 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.866689 13772 docker_service.go:257] "Docker cri networking managed by the network plugin" networkPluginName="cni"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.866915 13772 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 13 00:08:12 localhost kubelet: I0813 00:08:12.882848 13772 docker_service.go:264] "Docker Info" dockerInfo=&{ID:TSG7:3K3U:2XNU:OXWS:7CUM:VUO4:EM23:4NLT:GAGI:XIE2:KIQ7:JOZJ Containers:0 ContainersRunning:0 ContainersPaused:0 ContainersStopped:0 Images:7 Driver:overlay2 DriverStatus:[[Backing Filesystem xfs] [Supports d_type true] [Native Overlay Diff true] [userxattr false]] SystemStatus:[] Plugins:{Volume:[local] Network:[bridge host ipvlan macvlan null overlay] Authorization:[] Log:[awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog]} MemoryLimit:true SwapLimit:true KernelMemory:true KernelMemoryTCP:true CPUCfsPeriod:true CPUCfsQuota:true CPUShares:true CPUSet:true PidsLimit:true IPv4Forwarding:true BridgeNfIptables:true BridgeNfIP6tables:true Debug:false NFd:25 OomKillDisable:true NGoroutines:34 SystemTime:2021-08-13T00:08:12.86797053-04:00 LoggingDriver:json-file CgroupDriver:cgroupfs CgroupVersion:1 NEventsListener:0 KernelVersion:3.10.0-1160.36.2.el7.x86_64 OperatingSystem:CentOS Linux 7 (Core) OSVersion:7 OSType:linux Architecture:x86_64 IndexServerAddress:https://index.docker.io/v1/ RegistryConfig:0xc00075dea0 NCPU:2 MemTotal:3953950720 GenericResources:[] DockerRootDir:/var/lib/docker HTTPProxy: HTTPSProxy: NoProxy: Name:k8s-master01 Labels:[] ExperimentalBuild:false ServerVersion:20.10.8 ClusterStore: ClusterAdvertise: Runtimes:map[io.containerd.runc.v2:{Path:runc Args:[] Shim:} io.containerd.runtime.v1.linux:{Path:runc Args:[] Shim:} runc:{Path:runc Args:[] Shim:}] DefaultRuntime:runc Swarm:{NodeID: NodeAddr: LocalNodeState:inactive ControlAvailable:false Error: RemoteManagers:[] Nodes:0 Managers:0 Cluster: Warnings:[]} LiveRestoreEnabled:false Isolation: InitBinary:docker-init ContainerdCommit:{ID:e25210fe30a0a703442421b0f60afac609f950a3 Expected:e25210fe30a0a703442421b0f60afac609f950a3} RuncCommit:{ID:v1.0.1-0-g4144b63 Expected:v1.0.1-0-g4144b63} InitCommit:{ID:de40ad0 Expected:de40ad0} SecurityOptions:[name=seccomp,profile=default] ProductLicense: DefaultAddressPools:[] Warnings:[]}

Aug 13 00:08:12 localhost kubelet: E0813 00:08:12.882894 13772 server.go:294] "Failed to run kubelet" err="failed to run Kubelet: misconfiguration: kubelet cgroup driver: \"systemd\" is different from docker cgroup driver: \"cgroupfs\""   # kubelet uses the systemd cgroup driver while Docker uses cgroupfs: they do not match

Aug 13 00:08:12 localhost systemd: kubelet.service: main process exited, code=exited, status=1/FAILURE

Aug 13 00:08:12 localhost systemd: Unit kubelet.service entered failed state.

Aug 13 00:08:12 localhost systemd: kubelet.service failed.

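Fixing the error

- I looked through a blog post:

https://blog.csdn.net/sinat_28371057/article/details/109895176

It says:

The Kubernetes documentation points out a problem with mixing drivers. If cgroupfs manages the Docker and Kubernetes resources while systemd manages the node's other processes, the node can become unstable under resource pressure. It is therefore recommended to have both Docker and Kubernetes use systemd.

To check which cgroup driver Docker is using:

```bash
docker info | grep "Cgroup Driver"
```

So Docker's cgroup driver needs to be changed here.

How to change it:

Add an entry that sets the driver to the daemon.json created earlier: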

[root@k8s-master01 k8s-install]# cat !$

cat /etc/docker/daemon.json

```json
{
  "registry-mirrors": ["https://vw6szajx.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```

The `exec-opts` line is the only new entry. Then reload systemd and restart Docker:

```bash
systemctl daemon-reload && systemctl restart docker
```
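To catch this mismatch before running `kubeadm init` again, you can compare the two drivers directly. This is a hedged sketch. It reads the kubelet config that kubeadm writes to `/var/lib/kubelet/config.yaml`, which exists once `kubeadm init` or `join` has run at least once. For Docker it uses `docker info --format '{{.CgroupDriver}}'`. The function names are hypothetical.

```bash
# Hypothetical helper: print the cgroupDriver from a kubelet config file
# (kubeadm writes it to /var/lib/kubelet/config.yaml during init/join).
kubelet_cgroup_driver() {
  local cfg=${1:-/var/lib/kubelet/config.yaml}
  awk '$1 == "cgroupDriver:" {print $2; exit}' "$cfg"
}

# Docker's cgroup driver, via a Go template on "docker info".
docker_cgroup_driver() {
  docker info --format '{{.CgroupDriver}}'
}

# Hypothetical helper: fail when the two drivers differ.
check_cgroup_drivers() {
  local k d
  k=$(kubelet_cgroup_driver "$1")
  d=$(docker_cgroup_driver)
  if [ -n "$k" ] && [ "$k" = "$d" ]; then
    echo "ok: kubelet and docker both use $k"
  else
    echo "mismatch: kubelet=${k:-unknown} docker=${d:-unknown}" >&2
    return 1
  fi
}

# Usage on each node, after restarting docker:
#   check_cgroup_drivers
```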

The failed attempt left data behind, so reset the cluster first:

```bash
echo y | kubeadm reset
```

Then initialize the k8s cluster once again:

[root@k8s-master01 k8s-install]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.22.0

[preflight] Running pre-flight checks

[WARNING Hostname]: hostname "node" could not be reached

[WARNING Hostname]: hostname "node": lookup node on 114.114.114.114:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local node] and IPs [10.96.0.1 192.168.131.128]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost node] and IPs [192.168.131.128 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 9.003862 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.22" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node node as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node node as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS   # from this point on, the block below means the cluster has been initialized successfully

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

# Following the hint, run the steps below to create the kubeconfig file

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

# Hint: a pod network must be deployed before your pods can work properly. This is the K8S cluster network, and it requires an additional plugin

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:   # the worker nodes join the cluster with the command below

kubeadm join 192.168.131.128:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:95373e9880f7a6fed65093e8ee081d25e739c4ea45feac7c3aa8728f7a93f237

If you see this final block, the cluster has been initialized successfully. That really was not easy!

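By default the token is valid for 24 hours. Once it expires, create a new one. The following command prints a complete join command:

```bash
kubeadm token create --print-join-command
```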
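`--print-join-command` prints the CA hash along with the new token. If you only need the `--discovery-token-ca-cert-hash` value, you can recompute it on the master from the cluster CA. The sketch below uses the openssl pipeline given in the kubeadm documentation. It assumes an RSA CA key, which is kubeadm's default. `ca_cert_hash` is a hypothetical name.

```bash
# Hypothetical helper: recompute the --discovery-token-ca-cert-hash value
# (SHA-256 of the CA's public key) with the pipeline from the kubeadm docs.
# Assumes an RSA CA key, which is what kubeadm generates by default.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on k8s-master01:
#   echo "sha256:$(ca_cert_hash)"
```

On this cluster, the output should match the `sha256:...` value in the join command above.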

Check the pods with kubectl:

```bash
kubectl get pods
```

[root@k8s-master01 k8s-install]# kubectl get pods

No resources found in default namespace.

Check the nodes:

```bash
kubectl get nodes
```

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane,master 2m8s v1.22.0

So far the only node is the master, and it is NotReady because no pod network has been installed yet.

Add the worker nodes (node01 and node02)

Run the join command that was printed when the master node was initialized:

[root@k8s-node01 ~]# kubeadm join 192.168.131.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:95373e9880f7a6fed65093e8ee081d25e739c4ea45feac7c3aa8728f7a93f237

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-node02 ~]# kubeadm join 192.168.131.128:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:95373e9880f7a6fed65093e8ee081d25e739c4ea45feac7c3aa8728f7a93f237

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

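A small problem came up here earlier. All three hosts got their base configuration at the same time, so swap had not been disabled on the worker nodes either, and they hit the same error as before.

Likewise, Docker's default cgroup driver (cgroupfs) had to be switched to systemd on the workers, as was done on the master above.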

- Back on the master node, check again:

The nodes are listed, but their status is NotReady:

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane,master 20m v1.22.0

k8s-node01 NotReady 11m v1.22.0

k8s-node02 NotReady 7m56s v1.22.0

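Install the flannel network plugin (run on master01)

Get the yml file

As the output from the master initialization pointed out, the k8s cluster needs a pod network.

First download the kube-flannel.yml file from the project's GitHub repository. From mainland China this may require a proxy.

- Because GitHub has to be reached, the page may open in a browser while wget on the machine still fails.
- In that case, copy the content from the page, paste it into a text editor, and save it as kube-flannel.yml.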

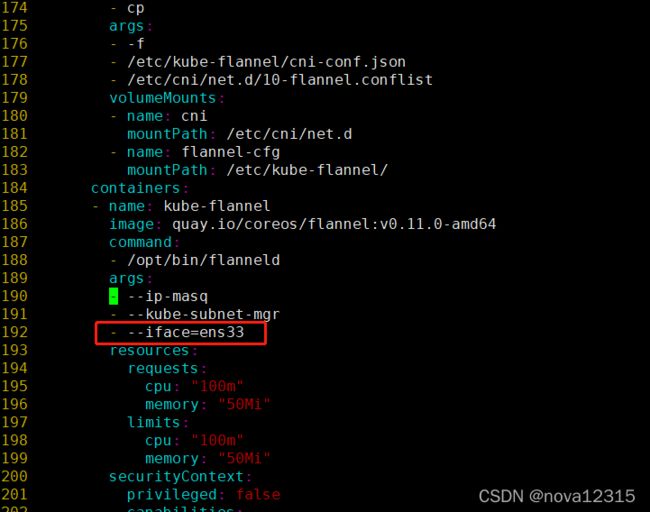

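Once the file is downloaded, adjust the configuration slightly:

At around line 190, under `--kube-subnet-mgr`, add an argument that names the machine's network interface (flannel's `--iface=<interface name>` option).

Check what your machine's interface is actually called before writing it in.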
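To find the name to put after `--iface`, look up which interface carries the node's IP. Below is a sketch that parses `ip -o -4 addr show` output from stdin. The function name is hypothetical.

```bash
# Hypothetical helper: print the interface that holds a given IPv4 address.
# Reads "ip -o -4 addr show" output (one address per line) from stdin,
# where field 2 is the interface name and field 4 is "address/prefix".
iface_for_ip() {
  local ip=$1
  awk -v ip="$ip" 'index($4, ip "/") == 1 {print $2; exit}'
}

# Usage on a node:
#   ip -o -4 addr show | iface_for_ip 192.168.131.128
# The printed name then goes into the flanneld container args in
# kube-flannel.yml, as an extra "- --iface=<name>" line under "- --kube-subnet-mgr".
```

Using `index` rather than a regex avoids treating the dots in the IP as wildcards. The trailing `/` avoids a prefix match, so 192.168.131.12 cannot match 192.168.131.128.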

Create the network

Before creating the network, pull the flannel image:

[root@k8s-master01 k8s-install]# docker pull quay.io/coreos/flannel:v0.11.0-amd64

v0.11.0-amd64: Pulling from coreos/flannel

cd784148e348: Pull complete

04ac94e9255c: Pull complete

e10b013543eb: Pull complete

005e31e443b1: Pull complete

74f794f05817: Pull complete

Digest: sha256:7806805c93b20a168d0bbbd25c6a213f00ac58a511c47e8fa6409543528a204e

Status: Downloaded newer image for quay.io/coreos/flannel:v0.11.0-amd64

quay.io/coreos/flannel:v0.11.0-amd64

Check the node status:

[root@k8s-master01 ~]# kubectl get nodes -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master01 NotReady control-plane,master 4d19h v1.22.0 192.168.131.128 CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8

k8s-node01 NotReady 4d19h v1.22.0 192.168.131.129 CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8

k8s-node02 NotReady 4d19h v1.22.0 192.168.131.130 CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8

The network plugin is needed because the nodes show NotReady until the pod network is configured.

Install the flannel network plugin from kube-flannel.yml:

```bash
kubectl create -f kube-flannel.yml
```

[root@k8s-master01 k8s-install]# kubectl create -f kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/psp.flannel.unprivileged created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

Warning: spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[0].key: beta.kubernetes.io/os is deprecated since v1.14; use "kubernetes.io/os" instead

Warning: spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[0].matchExpressions[1].key: beta.kubernetes.io/arch is deprecated since v1.14; use "kubernetes.io/arch" instead

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

unable to recognize "kube-flannel.yml": no matches for kind "ClusterRole" in version "rbac.authorization.k8s.io/v1beta1"

unable to recognize "kube-flannel.yml": no matches for kind "ClusterRoleBinding" in version "rbac.authorization.k8s.io/v1beta1"

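The yml file used at first was the one from the video, which is fairly old. It declares ClusterRole and ClusterRoleBinding under `rbac.authorization.k8s.io/v1beta1`. Kubernetes 1.22 removed that API version, so these objects could not be recognized.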
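An alternative to fetching a newer manifest would have been to bump the RBAC apiVersion in the old file. This is a hedged sketch. It assumes the ClusterRole/ClusterRoleBinding bodies are otherwise valid under `rbac.authorization.k8s.io/v1`, whose schema matches v1beta1 for plain rules and subjects. `upgrade_rbac_api_version` is a hypothetical name.

```bash
# Hypothetical helper: rewrite the removed RBAC API version in a manifest.
# Only the apiVersion string changes; other v1beta1 APIs (e.g. policy/v1beta1)
# are left untouched.
upgrade_rbac_api_version() {
  local manifest=$1
  sed -i 's#rbac\.authorization\.k8s\.io/v1beta1#rbac.authorization.k8s.io/v1#g' "$manifest"
}

# Usage on k8s-master01 (keep a backup of the original file):
#   cp kube-flannel.yml kube-flannel.yml.bak && upgrade_rbac_api_version kube-flannel.yml
```

Fetching the current upstream manifest, as done below, is still the better choice. It also brings newer flannel images and settings.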

Re-create

- Find the flannel project on GitHub and get the latest manifest. Update the yaml file with it, then create it again:

[root@k8s-master01 k8s-install]# kubectl create -f kube-flannel.yml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

daemonset.apps/kube-flannel-ds created

Error from server (AlreadyExists): error when creating "kube-flannel.yml": podsecuritypolicies.policy "psp.flannel.unprivileged" already exists

Error from server (AlreadyExists): error when creating "kube-flannel.yml": serviceaccounts "flannel" already exists

Error from server (AlreadyExists): error when creating "kube-flannel.yml": configmaps "kube-flannel-cfg" already exists

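The objects that could not be created before are now created. The AlreadyExists errors only concern objects left over from the first run and can be ignored.

The DaemonSets from the old manifest (such as kube-flannel-ds-amd64) were not removed either. That is why each node runs two flannel pods in the listing below.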

Verification

- Check the detailed node status again:

[root@k8s-master01 k8s-install]# kubectl get nodes -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master01 Ready control-plane,master 4d19h v1.22.0 192.168.131.128 CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8

k8s-node01 Ready 4d19h v1.22.0 192.168.131.129 CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8

k8s-node02 Ready 4d19h v1.22.0 192.168.131.130 CentOS Linux 7 (Core) 3.10.0-1160.36.2.el7.x86_64 docker://20.10.8

All nodes are now Ready, which means the pod network has been created successfully.

- Run kubectl get pods -A to check the pods in all namespaces, and make sure all of them are Running:

[root@k8s-master01 k8s-install]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f6cbbb7b8-9w8j5 1/1 Running 0 4d19h

kube-system coredns-7f6cbbb7b8-rg56b 1/1 Running 0 4d19h

kube-system etcd-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h

kube-system kube-apiserver-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h

kube-system kube-controller-manager-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h

kube-system kube-flannel-ds-amd64-btsdx 1/1 Running 7 (9m41s ago) 15m

kube-system kube-flannel-ds-amd64-c64z6 1/1 Running 6 (9m47s ago) 15m

kube-system kube-flannel-ds-amd64-x5g44 1/1 Running 7 (8m49s ago) 15m

kube-system kube-flannel-ds-d27bs 1/1 Running 0 7m20s

kube-system kube-flannel-ds-f5t9v 1/1 Running 0 7m20s

kube-system kube-flannel-ds-vjdtg 1/1 Running 0 7m20s

kube-system kube-proxy-7tfk9 1/1 Running 1 (4d18h ago) 4d19h

kube-system kube-proxy-cf4qs 1/1 Running 1 (4d18h ago) 4d19h

kube-system kube-proxy-dncdt 1/1 Running 1 (4d18h ago) 4d19h

kube-system kube-scheduler-k8s-master01 1/1 Running 1 (4d18h ago) 4d19h

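K8s has a great many configuration objects and components, and namespaces are used to organize them. The system components above all live in the kube-system namespace.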

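- PS: `kubectl get pods` without `-A` only queries the `default` namespace. Until an application is deployed it shows nothing there, whatever state coredns (in `kube-system`) is in: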

[root@k8s-master01 ~]# kubectl get pods

No resources found in default namespace.

- At this point, check the cluster status with kubectl get nodes:

[root@k8s-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane,master 4d19h v1.22.0

k8s-node01 Ready 4d19h v1.22.0

k8s-node02 Ready 4d19h v1.22.0

This is what a healthy cluster looks like. Make sure every node is Ready.
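Rather than eyeballing the STATUS column, you can script the check. Below is a sketch that reads `kubectl get nodes --no-headers` from stdin. `not_ready_nodes` is a hypothetical name.

```bash
# Hypothetical helper: print the names of nodes whose STATUS is not exactly "Ready".
# Reads "kubectl get nodes --no-headers" output from stdin (column 2 is STATUS).
# A cordoned node shows "Ready,SchedulingDisabled" and is listed as well.
not_ready_nodes() {
  awk '$2 != "Ready" {print $1}'
}

# Usage on k8s-master01:
#   kubectl get nodes --no-headers | not_ready_nodes
```

kubectl also has a built-in way to wait for this state. `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until every node reports Ready, or fails after the timeout.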

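Test deploying an application with K8S

Quickly use k8s to create a pod that runs nginx, then check that the nginx page can be reached from inside the cluster.

Install the service

Deploy an nginx web service with k8s: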

[root@k8s-master01 k8s-install]# kubectl run test-nginx --image=nginx:alpine

pod/test-nginx created

Check the deployment:

[root@k8s-master01 k8s-install]# kubectl get pods -o wide --watch

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nginx 1/1 Running 0 2m32s 10.244.2.2 k8s-node02

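- STATUS shows the current state of the container at a glance;

- IP is the pod's address on the flannel network, i.e. the cluster-internal network;

- NODE is the node the pod was scheduled on.

- To reach nginx, request the pod's in-cluster IP directly, i.e. the address taken from the flannel-managed subnet.

- Verify that the application responds: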

[root@k8s-master01 k8s-install]# curl 10.244.2.2

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

The page content comes back, so the pod is reachable from the master node.

Check which node the application is running on
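Instead of copying the pod IP by hand, it can be read from the API. A minimal sketch, assuming the pod name test-nginx used above; is_nginx_welcome and test_pod_http are hypothetical helper names. The jsonpath {.status.podIP} is the same address shown in the IP column of kubectl get pods -o wide.

```shell
#!/usr/bin/env bash
# Sketch: look up a pod's IP and check that nginx answers on it.

# Succeeds if the HTML read from stdin is the default nginx welcome page.
is_nginx_welcome() {
  grep -q 'Welcome to nginx!'
}

# Waits for the pod to be Ready, reads its IP from the API, and curls it.
test_pod_http() {
  local pod=${1:-test-nginx} ip
  kubectl wait --for=condition=Ready "pod/$pod" --timeout=120s || return 1
  ip=$(kubectl get pod "$pod" -o jsonpath='{.status.podIP}')
  curl -s "http://$ip/" | is_nginx_welcome
}
```

Usage: test_pod_http test-nginx && echo ok. The exit status, not the page text, tells you whether nginx answered.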

node01

[root@k8s-node01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d7ff94443f74 8522d622299c "/opt/bin/flanneld -…" 23 minutes ago Up 23 minutes k8s_kube-flannel_kube-flannel-ds-f5t9v_kube-system_1db5e255-936d-4c7f-a920-a01845675bb2_0

c53005a05dfe ff281650a721 "/opt/bin/flanneld -…" 24 minutes ago Up 24 minutes k8s_kube-flannel_kube-flannel-ds-amd64-c64z6_kube-system_3ed24627-e4f0-48ae-bb31-458039ddfe11_6

adba14b324bf registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 24 minutes ago Up 24 minutes k8s_POD_kube-flannel-ds-f5t9v_kube-system_1db5e255-936d-4c7f-a920-a01845675bb2_0

1b765df0d569 registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 32 minutes ago Up 32 minutes k8s_POD_kube-flannel-ds-amd64-c64z6_kube-system_3ed24627-e4f0-48ae-bb31-458039ddfe11_0

92975ce5b0d8 bbad1636b30d "/usr/local/bin/kube…" About an hour ago Up About an hour k8s_kube-proxy_kube-proxy-cf4qs_kube-system_e554677b-2723-4d96-8021-dd8b0b5961ce_1

89c7e9a3119b registry.aliyuncs.com/google_containers/pause:3.5 "/pause" About an hour ago Up About an hour k8s_POD_kube-proxy-cf4qs_kube-system_e554677b-2723-4d96-8021-dd8b0b5961ce_1

[root@k8s-node01 ~]#

[root@k8s-node01 ~]# docker ps |grep test

[root@k8s-node01 ~]#

No test-related containers are found on node01.

node02

[root@k8s-node02 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3644313f47fa nginx "/docker-entrypoint.…" 6 minutes ago Up 6 minutes k8s_test-nginx_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0

e5937fa65f8d registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 7 minutes ago Up 7 minutes k8s_POD_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0

ed982452d79e ff281650a721 "/opt/bin/flanneld -…" 20 minutes ago Up 20 minutes k8s_kube-flannel_kube-flannel-ds-amd64-x5g44_kube-system_007ea640-6b4e-47f2-9eb9-bad78e0d0df0_7

b01ac01ed2c9 8522d622299c "/opt/bin/flanneld -…" 23 minutes ago Up 23 minutes k8s_kube-flannel_kube-flannel-ds-d27bs_kube-system_6b2736d9-f316-4693-b7a1-1980efafe7a6_0

8d6066c5fe7d registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 24 minutes ago Up 24 minutes k8s_POD_kube-flannel-ds-d27bs_kube-system_6b2736d9-f316-4693-b7a1-1980efafe7a6_0

98daec830156 registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 32 minutes ago Up 32 minutes k8s_POD_kube-flannel-ds-amd64-x5g44_kube-system_007ea640-6b4e-47f2-9eb9-bad78e0d0df0_0

023b31545099 bbad1636b30d "/usr/local/bin/kube…" About an hour ago Up About an hour k8s_kube-proxy_kube-proxy-dncdt_kube-system_d1053902-b372-4add-be59-a6a1dd9ac651_1

4f9883a37b2b registry.aliyuncs.com/google_containers/pause:3.5 "/pause" About an hour ago Up About an hour k8s_POD_kube-proxy-dncdt_kube-system_d1053902-b372-4add-be59-a6a1dd9ac651_1

[root@k8s-node02 ~]# docker ps |grep test

3644313f47fa nginx "/docker-entrypoint.…" 7 minutes ago Up 7 minutes k8s_test-nginx_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0

e5937fa65f8d registry.aliyuncs.com/google_containers/pause:3.5 "/pause" 8 minutes ago Up 8 minutes k8s_POD_test-nginx_default_925e1358-2845-455e-8079-101c8a823c77_0

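node02 clearly has two extra test-related containers: the nginx container itself and the pause container (name prefix k8s_POD_) that holds the pod's network namespace.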
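The NAMES column follows a fixed pattern that the kubelet (through dockershim, which v1.22 still uses with Docker) gives every container: k8s_<container>_<pod>_<namespace>_<pod UID>_<attempt>, where the container name POD marks the pause container. A small sketch that splits such a name; parse_k8s_container_name is a hypothetical helper. It relies on pod, container and namespace names never containing underscores, which holds because Kubernetes restricts them to DNS-style names.

```shell
#!/usr/bin/env bash
# Sketch: decode a Docker container name created by the kubelet
# (dockershim naming: k8s_<container>_<pod>_<namespace>_<uid>_<attempt>).
parse_k8s_container_name() {
  local IFS=_
  local -a parts
  read -r -a parts <<< "$1"
  # Expect exactly six underscore-separated fields starting with "k8s".
  [ "${#parts[@]}" -eq 6 ] && [ "${parts[0]}" = k8s ] || return 1
  printf 'container=%s pod=%s namespace=%s uid=%s attempt=%s\n' \
    "${parts[1]}" "${parts[2]}" "${parts[3]}" "${parts[4]}" "${parts[5]}"
}
```

On a node, docker ps --format '{{.Names}}' | while read -r n; do parse_k8s_container_name "$n"; done lists which pod each container belongs to. From the master, kubectl get pod test-nginx -o wide already shows the node without logging in to each machine.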

PS:

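1. Docker is the container runtime on every node.

2. kubelet (not kubectl, which is only the command-line client) starts and stops the containers on each node and keeps the node working properly.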

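The pod, the smallest schedulable unit, in detail

- Docker schedules containers;

- in a k8s cluster, the smallest schedulable unit is the pod (the word means a pea pod).

k8s creates pods, and the container instances run inside them.

How pods work in the cluster

Suppose a machine named k8s-node01 has many pods created on it. Each pod can run several containers, and each pod is assigned its own IP address.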

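- A pod manages resources shared by a group of containers:

  - storage: volumes;

  - network: each pod has a unique IP within the k8s cluster;

  - containers: basic container information such as the image version and port mappings.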
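To make the shared resources concrete, here is a hypothetical Pod (the name shared-demo and the busybox writer are not part of the original notes) with two containers that mount the same emptyDir volume and share the pod's single IP. A minimal sketch; the manifest is printed by a shell function so it can be piped into kubectl apply -f -.

```shell
#!/usr/bin/env bash
# Sketch: a two-container pod sharing one volume and one network namespace.
# The writer refreshes index.html every 5 s; nginx serves that same file.
shared_pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-demo
spec:
  volumes:
    - name: html
      emptyDir: {}
  containers:
    - name: nginx
      image: nginx:alpine
      ports:
        - containerPort: 80
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html
    - name: writer
      image: busybox
      command: ["sh", "-c", "while true; do date > /html/index.html; sleep 5; done"]
      volumeMounts:
        - name: html
          mountPath: /html
EOF
}
```

Usage: shared_pod_manifest | kubectl apply -f -, then kubectl exec shared-demo -c writer -- wget -qO- http://localhost/ prints the date. The writer container reaches nginx through localhost because both share the pod's network, and nginx serves the file the writer put on the shared volume.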

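When a K8s application is created, the cluster first creates the pod that contains the containers; the pod is then bound to a node, where it is accessed until it is deleted.

Every k8s node must run at least:

- kubelet, which handles communication between the master and the node and manages the pods and the containers running inside them;

- kube-proxy, which forwards traffic;

- a container runtime, which pulls images and creates and runs container instances.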

pod

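- k8s provides high availability for containers:

  - if an application on node01 crashes, it can be restarted automatically;

  - pods can be scaled out and in;

  - load balancing is provided.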

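Deploy the nginx application with a Deployment

- First create a Deployment resource; Deployments are central to how k8s deploys applications.

- Once the Deployment exists, it tells k8s how to create the application instances, and the master schedules the application onto specific nodes, i.e. creates the pods and the container instances inside them.

- After the application is created, the Deployment keeps monitoring these pods. If a node fails, the Deployment controller automatically recreates the instances on another suitable node. This self-healing capability protects the service against server failures.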

There are two ways to create k8s resources:

- YAML manifests, used in production;

- the command line, used for debugging.
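The two approaches meet in kubectl's client-side dry run: a command line can print the equivalent YAML without creating anything, which is a convenient starting point for a manifest. A sketch; gen_deployment_yaml is a hypothetical helper. Note that kubectl create deployment labels the pods app=<deployment name>, not the app: nginx label used in test02-nginx.yaml.

```shell
#!/usr/bin/env bash
# Sketch: generate a Deployment manifest from the command line without
# creating anything (works with the v1.22 kubectl used in these notes).
gen_deployment_yaml() {
  local name=${1:-test02-nginx} image=${2:-nginx:alpine} replicas=${3:-2}
  kubectl create deployment "$name" --image="$image" \
    --replicas="$replicas" --dry-run=client -o yaml
}
```

Usage: gen_deployment_yaml > test02-nginx.yaml, edit the labels as needed, then kubectl apply --dry-run=server -f test02-nginx.yaml lets the API server validate the file without persisting it.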

Create the YAML file test02-nginx.yaml and apply it:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test02-nginx
  labels:
    app: nginx
spec:
  # run 2 nginx pods (replicas)
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:alpine
          ports:
            - containerPort: 80

Create it:

[root@k8s-master01 k8s-install]# kubectl apply -f test02-nginx.yaml

deployment.apps/test02-nginx created

[root@k8s-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test-nginx 1/1 Running 0 5h5m

test02-nginx-7fb7fd49b4-dxj5c 0/1 ContainerCreating 0 10s

test02-nginx-7fb7fd49b4-r28gc 0/1 ContainerCreating 0 10s

[root@k8s-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test-nginx 1/1 Running 0 5h6m

test02-nginx-7fb7fd49b4-dxj5c 1/1 Running 0 31s

test02-nginx-7fb7fd49b4-r28gc 0/1 ContainerCreating 0 31s

[root@k8s-master01 k8s-install]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nginx 1/1 Running 0 5h9m 10.244.2.2 k8s-node02

test02-nginx-7fb7fd49b4-dxj5c 1/1 Running 0 3m25s 10.244.1.2 k8s-node01

test02-nginx-7fb7fd49b4-r28gc 1/1 Running 0 3m25s 10.244.1.3 k8s-node01

# The pod list shows that 2 nginx pods have been created.

Curl the two pod IPs to check that the application is running properly:

[root@k8s-master01 k8s-install]# curl 10.244.1.2

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

[root@k8s-master01 k8s-install]# curl 10.244.1.3

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.
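The self-healing and scaling described for Deployments can be observed directly. A minimal sketch, assuming the test02-nginx Deployment with its app=nginx label; count_running and demo_self_healing are hypothetical helper names. Deleting one pod makes the ReplicaSet create a replacement with a new name and, usually, a new IP.

```shell
#!/usr/bin/env bash
# Sketch: watch a Deployment replace a deleted pod.

# Counts lines whose STATUS (3rd column of "kubectl get pods --no-headers")
# is Running.
count_running() {
  awk '$3 == "Running" { n++ } END { print n + 0 }'
}

demo_self_healing() {
  local deploy=${1:-test02-nginx} victim
  # Scale out to 3 replicas and wait until the rollout is complete.
  kubectl scale deployment "$deploy" --replicas=3
  kubectl rollout status deployment "$deploy" --timeout=120s || return 1
  # Delete one pod without waiting; the ReplicaSet creates a replacement.
  victim=$(kubectl get pods -l app=nginx -o jsonpath='{.items[0].metadata.name}')
  kubectl delete pod "$victim" --wait=false
  sleep 10
  kubectl get pods -l app=nginx --no-headers | count_running
}
```

Expect the count to return to 3 once the replacement is Running; kubectl scale deployment test02-nginx --replicas=2 scales back in afterwards.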

service

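A Service is an important k8s concept; it mainly provides load balancing and automatic service discovery.

This brings us back to the original question: the nginx created by the Deployment is running, but how can it be reached from outside the cluster?

- A pod IP is a virtual IP visible only inside the cluster; it cannot be reached from outside.

- A pod IP disappears when its pod is destroyed. When the ReplicaSet scales pods up or down, pod IPs can change at any time, which makes the service harder to reach.

Accessing a service through pod IPs is therefore impractical. The solution is a new kind of resource, the Service, which acts as a load balancer.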

kubectl expose deployment test02-nginx --port=80 --type=LoadBalancer

This exposes the Deployment test02-nginx on port 80 as a Service of type LoadBalancer (--type sets the Service type, not a label).

[root@k8s-master01 k8s-install]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

test02-nginx 2/2 2 2 17m

# the command above lists all Deployments

[root@k8s-master01 k8s-install]# kubectl expose deployment test02-nginx --port=80 --type=LoadBalancer

service/test02-nginx exposed

[root@k8s-master01 k8s-install]# kubectl get services -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 443/TCP 5d1h

test02-nginx LoadBalancer 10.98.122.29 80:30172/TCP 19s app=nginx

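# The application in the pods can now be reached through node port 30172 on any node's IP. Without a cloud load balancer, as in this setup, the EXTERNAL-IP of a LoadBalancer Service is never assigned.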
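Kubernetes allocates that node port (30172 above) from the default 30000-32767 range and opens it on every node. A sketch for reading it without copying it from the PORT(S) column; node_port_from_ports and service_url are hypothetical helpers, and the default node IP is the master's address from these notes.

```shell
#!/usr/bin/env bash
# Sketch: find the NodePort of a Service and build a URL for it.

# Extracts the node port from a PORT(S) value such as "80:30172/TCP".
node_port_from_ports() {
  local p=${1#*:}          # drop "80:"  -> "30172/TCP"
  printf '%s\n' "${p%%/*}" # drop "/TCP" -> "30172"
}

# Asks the API server directly and prints http://<node-ip>:<node-port>.
service_url() {
  local svc=${1:-test02-nginx} node_ip=${2:-192.168.131.128} port
  port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')
  printf 'http://%s:%s\n' "$node_ip" "$port"
}
```

Usage: curl "$(service_url)" should return the nginx page; kubectl get endpoints test02-nginx lists the pod IPs the Service balances across.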

Configure kubectl command completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo 'source <(kubectl completion bash)' >> ~/.bashrc
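Running the echo line twice appends a duplicate entry to ~/.bashrc. A minimal sketch that appends each line only once; append_once and setup_kubectl_completion are hypothetical helpers, and the alias k with its complete line follows the pattern in the kubectl bash-completion documentation.

```shell
#!/usr/bin/env bash
# Sketch: add shell-startup lines idempotently.

# Appends LINE to FILE unless FILE already contains exactly that line.
append_once() {
  local file=$1 line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

setup_kubectl_completion() {
  local rc=${1:-$HOME/.bashrc}
  append_once "$rc" 'source <(kubectl completion bash)'
  # Optional short alias that keeps completion working.
  append_once "$rc" 'alias k=kubectl'
  append_once "$rc" 'complete -o default -F __start_kubectl k'
}
```

Run setup_kubectl_completion once (or as often as you like), then open a new shell.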

Deploy the dashboard

https://github.com/kubernetes/dashboard

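Kubernetes Dashboard is a general-purpose, web-based UI for Kubernetes clusters. It lets users manage and troubleshoot the applications running in the cluster, as well as manage the cluster itself.

Download the application's YAML manifest (recommended.yaml) from GitHub.

Around line 44 of the file, in the spec of the kubernetes-dashboard Service, add the line type: NodePort: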

spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort # add this so the dashboard can be reached directly through the host (node) IP
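Instead of editing recommended.yaml, the type can also be changed on the live Service after it has been created. A sketch using kubectl patch with a merge-style JSON patch; dashboard_to_nodeport is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: switch the dashboard Service to NodePort in place.
dashboard_to_nodeport() {
  kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
    -p '{"spec":{"type":"NodePort"}}'
  # Print the node port that was allocated for port 443.
  kubectl -n kubernetes-dashboard get svc kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}{"\n"}'
}
```

The port printed is the one to use in the https URL on any node IP.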

Create the resources:

[root@k8s-master01 k8s-install]# kubectl create -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

Warning: spec.template.metadata.annotations[seccomp.security.alpha.kubernetes.io/pod]: deprecated since v1.19; use the "seccompProfile" field instead

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master01 k8s-install]# kubectl get po -o wide -A # -A lists pods in all namespaces; add --watch to keep watching for changes

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default test-nginx 1/1 Running 0 5h57m 10.244.2.2 k8s-node02

default test02-nginx-7fb7fd49b4-dxj5c 1/1 Running 0 52m 10.244.1.2 k8s-node01

default test02-nginx-7fb7fd49b4-r28gc 1/1 Running 0 52m 10.244.1.3 k8s-node01

kube-system coredns-7f6cbbb7b8-9w8j5 1/1 Running 0 5d2h 10.244.0.2 k8s-master01

kube-system coredns-7f6cbbb7b8-rg56b 1/1 Running 0 5d2h 10.244.0.3 k8s-master01

kube-system etcd-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01

kube-system kube-apiserver-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01

kube-system kube-controller-manager-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01

kube-system kube-flannel-ds-amd64-btsdx 1/1 Running 7 (6h17m ago) 6h23m 192.168.131.128 k8s-master01

kube-system kube-flannel-ds-amd64-c64z6 1/1 Running 6 (6h17m ago) 6h23m 192.168.131.129 k8s-node01

kube-system kube-flannel-ds-amd64-x5g44 1/1 Running 7 (6h16m ago) 6h23m 192.168.131.130 k8s-node02

kube-system kube-flannel-ds-d27bs 1/1 Running 0 6h15m 192.168.131.130 k8s-node02

kube-system kube-flannel-ds-f5t9v 1/1 Running 0 6h15m 192.168.131.129 k8s-node01

kube-system kube-flannel-ds-vjdtg 1/1 Running 0 6h15m 192.168.131.128 k8s-master01

kube-system kube-proxy-7tfk9 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01

kube-system kube-proxy-cf4qs 1/1 Running 1 (5d ago) 5d1h 192.168.131.129 k8s-node01

kube-system kube-proxy-dncdt 1/1 Running 1 (5d ago) 5d1h 192.168.131.130 k8s-node02

kube-system kube-scheduler-k8s-master01 1/1 Running 1 (5d ago) 5d2h 192.168.131.128 k8s-master01

kubernetes-dashboard dashboard-metrics-scraper-856586f554-6n48q 1/1 Running 0 98s 10.244.1.4 k8s-node01 # the dashboard's metrics scraper

kubernetes-dashboard kubernetes-dashboard-67484c44f6-fch2q 1/1 Running 0 98s 10.244.2.3 k8s-node02 # the dashboard itself

Once the containers are running, check the Service resources to test access:

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.104.11.88 8000/TCP 19m

kubernetes-dashboard NodePort 10.109.201.42 443:31016/TCP 19m

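- service can be abbreviated as svc, and pod as po;

- what the command does: -n kubernetes-dashboard selects the kubernetes-dashboard namespace, and get services lists the Services in it;

- the output shows that the kubernetes-dashboard Service (in the namespace of the same name) is of type NodePort, so the web UI can be reached directly on port 31016 of any node.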

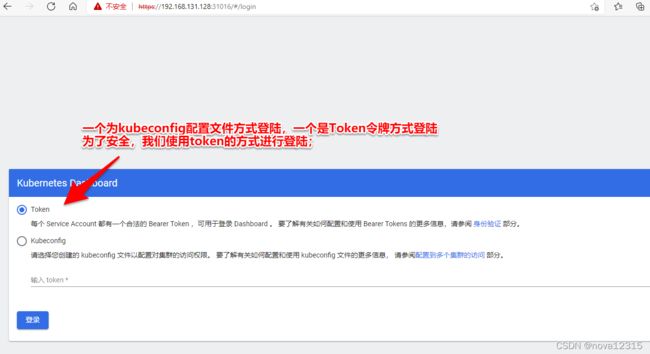

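Open the host IP plus the kubernetes-dashboard NodePort (31016 above) directly in a browser.

The prompt above appears because we sent a plain http request to a port that only serves https.

Switch to https and the page loads correctly.

Requests to K8s components such as the API server go over HTTPS with mutual (two-way) certificate authentication to keep them as secure as possible, and the dashboard likewise serves only HTTPS.

How to obtain a token

First create an account to log in with: a ServiceAccount bound to the cluster-admin ClusterRole.

Create the YAML file admin-user.yaml: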

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

Then create it:

[root@k8s-master01 k8s-install]# kubectl create -f admin-user.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

serviceaccount/admin-user created

Get the token used to log in to the dashboard

# find the token Secret of admin-user

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get secret |grep admin-user

admin-user-token-kq6vm kubernetes.io/service-account-token 3 3m16s

# decode the token (it is base64-encoded, not encrypted)

[root@k8s-master01 k8s-install]# kubectl -n kubernetes-dashboard get secret admin-user-token-kq6vm -o jsonpath={.data.token}|base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6ImNZeVdGNDdGMFRFY0lEWWRnTUhwcllfdzNrMG1vTkswYlphYzJIWUlZQ3cifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWtxNnZtIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwNmU4ODhiNS0xMjk5LTRjOTktOGI0NS0xNTFmOWI5MjMzMTkiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.qPdCZzydj9jKIjoSxBf7rPFjiDkpzyIoSgKz1hWpSRpx-xSvSjJ5rwuEYtcmeRE4CKw17783Y-DEDH-jPeyEajFZ1XkSCR19zXgfp9uAU1_vKVOxSK77zBxqXDzQY0bzq8hjhcYUlHcAtFkWS_9aGjyL94r2cyjdgbTDxHJpFUCByuIOeVtGDtIa2YbqFvLRMaSoSOOdJnN_EYBZJW5LoeD3p1NN1gkbqXT56rvKzPtElQFv4tBU1uo501_Iz_MKPxH9lslwReORjE5ttWRbUAd4XguXOmTs0BWeRIG6I9sF0X4JEvS2TSbnCREslJKTBYHU6wkh1ssoMqWbFlD-eg

Paste the decoded token into the Token field of the dashboard login page to sign in.
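The token is a JWT: three base64url parts separated by dots, the middle one being JSON claims that name the service account. A minimal sketch to confirm a token belongs to admin-user before pasting it; jwt_payload is a hypothetical helper and does not verify the signature. On Kubernetes v1.24 and later, token Secrets are no longer created automatically for ServiceAccounts, so the secret lookup above finds nothing there; kubectl -n kubernetes-dashboard create token admin-user issues a short-lived token instead.

```shell
#!/usr/bin/env bash
# Sketch: print the JSON payload (claims) of a JWT such as a
# service-account token. Does NOT verify the signature.
jwt_payload() {
  local p
  # Take the middle part and map base64url to standard base64.
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the "=" padding that base64url leaves out.
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}
```

For the admin-user token, the output should contain a sub claim of the form system:serviceaccount:kubernetes-dashboard:admin-user.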