【实时数仓】热度关键词接口、项目整体部署流程

文章目录

- 一 热度关键词接口

-

- 1 Sugar配置

-

- (1)图表配置

- (2)接口地址

- (3)数据格式

- (4)执行SQL

- 2 数据接口实现

-

- (1)创建关键词统计实体类

- (2)Mapper层:创建KeywordStatsMapper

- (3)Service层:创建KeywordStatsService接口

- (4)Service层:创建KeywordStatsServiceImpl

- (5)Controller层:在SugarController中增加方法

- (6)测试

- 3 整体效果

- 二 项目部署流程

一 热度关键词接口

1 Sugar配置

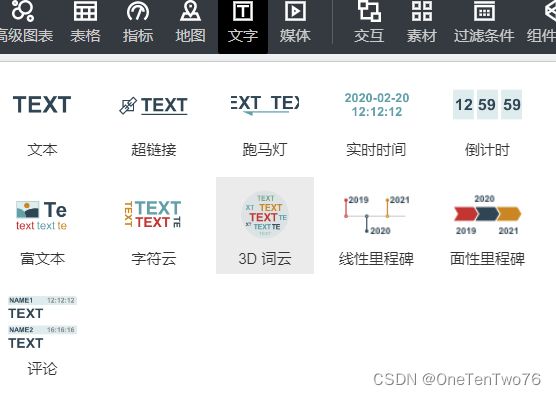

(1)图表配置

(2)接口地址

https://m23o108551.zicp.fun/api/sugar/keyword?date=20221213

(3)数据格式

{

"status": 0,

"data": [

{

"name": "海门",

"value": 1

},

{

"name": "鄂尔多斯",

"value": 1

}

]

}

(4)执行SQL

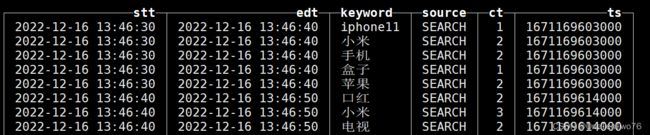

表中数据格式:

根据关键词的出现类型分配不同的热度分数

- 搜索关键词=10分

- 下单商品=5分

- 加入购物车=2分

- 点击商品=1分

- 其他=0分

其中ClickHouse函数multiIf类似于case when

select keyword,sum(keyword_stats_2022.ct *

multiIf(

source='SEARCH',10,

source='ORDER',5,

source='CART',2,

source='CLICK',1,

0

)) ct

from keyword_stats_2022 where toYYYYMMDD(stt)=20221216

group by keyword order by ct desc limit 5;

2 数据接口实现

(1)创建关键词统计实体类

package com.hzy.gmall.publisher.beans;

/**

* Desc: 关键词统计实体类

*/

@Data

@AllArgsConstructor

@NoArgsConstructor

public class KeywordStats {

private String stt;

private String edt;

private String keyword;

private Long ct;

private String ts;

}

(2)Mapper层:创建KeywordStatsMapper

package com.hzy.gmall.publisher.mapper;

public interface KeywordStatsMapper {

@Select("select keyword,sum(keyword_stats_2022.ct * " +

"multiIf(" +

" source='SEARCH',10," +

" source='ORDER',5," +

" source='CART',2," +

" source='CLICK',1," +

" 0" +

")) ct " +

" from keyword_stats_2022 where toYYYYMMDD(stt)=#{date}" +

" group by keyword order by ct desc limit #{limit};")

List<KeywordStats> selectKeywordStats(@Param("date") Integer date,@Param("limit") Integer limit);

}

(3)Service层:创建KeywordStatsService接口

package com.hzy.gmall.publisher.service;

public interface KeywordStatsService {

List<KeywordStats> getKeywordStats(Integer date,Integer limit);

}

(4)Service层:创建KeywordStatsServiceImpl

package com.hzy.gmall.publisher.service.impl;

@Service

public class KeywordStatsServiceImpl implements KeywordStatsService {

@Autowired

private KeywordStatsMapper keywordStatsMapper;

@Override

public List<KeywordStats> getKeywordStats(Integer date, Integer limit) {

return keywordStatsMapper.selectKeywordStats(date,limit);

}

}

(5)Controller层:在SugarController中增加方法

@Autowired

private KeywordStatsService keywordStatsService;

@RequestMapping("/keyword")

public String getKeywordStats(

@RequestParam(value = "date",defaultValue = "0") Integer date,

@RequestParam(value = "limit",defaultValue = "20") Integer limit) {

if (date == 0) {

date = now();

}

List<KeywordStats> keywordStatsList = keywordStatsService.getKeywordStats(date, limit);

StringBuilder jsonBuilder = new StringBuilder("{\"status\": 0,\"data\": [");

for (int i = 0; i < keywordStatsList.size(); i++) {

KeywordStats keywordStats = keywordStatsList.get(i);

jsonBuilder.append("{\"name\": \""+keywordStats.getKeyword()+"\",\"value\": "+keywordStats.getCt()+"}");

if (i < keywordStatsList.size() - 1){

jsonBuilder.append(",");

}

}

jsonBuilder.append("]}");

return jsonBuilder.toString();

}

(6)测试

$API_HOST/api/sugar/keyword?date=20221216

3 整体效果

二 项目部署流程

---项目打包 、部署到服务器

1.修改realtime项目中的并行度(资源足够则不用修改),并打jar包

-BaseLogApp

-KeywordStatsApp

2.修改flink-conf.yml(注意冒号后面有一个“空格”)

taskmanager.memory.process.size: 2000m

taskmanager.numberOfTaskSlots: 8

3.启动zk、kf、clickhouse、flink本地集群(bin/start-cluster.sh)、logger.sh

4.启动BaseLog、KeywordStatsApp

-独立分窗口启动

bin/flink run -m hadoop101:8081 -c com.hzy.gmall.realtime.app.dwd.BaseLogApp ./gmall2022-realtime-1.O-SNAPSHOT-jar-with-dependencies.jar

bin/flink run -m hadoop101:8081 -c com.hzy.gmall.realtime.app.dws.KeywordStatsApp ./gmall2022-realtime-1.O-SNAPSHOT-jar-with-dependencies.jar

-编写realtime.sh脚本

echo "========BaseLogApp==============="

/opt/module/flink-local/bin/flink run -m hadoop101:8081 -c com.hzy.gmall.realtime.app.dwd.BaseLogApp /opt/module/flink-local/gmall2022-realtime-1.O-SNAPSHOT-jar-with-dependencies.jar

>/dev/null 2>&1 &

echo "========KeywordStatsApp==============="

/opt/module/flink-local/bin/flink run -m hadoop101:8081 -c com.hzy.gmall.realtime.app.dws.KeywordStatsApp /opt/module/flink-local/gmall2022-realtime-1.O-SNAPSHOT-jar-with-dependencies.jar

>/dev/null 2>&1 &

5.打包publisher并上传运行

6.花生壳添加hadoop上的publisher地址映射

hadoop101:8070/api/sugar/keyword/

aliyun服务器直接访问公网地址即可

7.sugar修改空间映射

8.运行模拟生成日志的jar包,查看效果

9.常见问题排查

-启动flink集群,不能访问webUI(logger使用的8081,flink同样使用8081,造成冲突)

查看日志,端口冲突 lsof -i:8081

-集群启动之后,应用不能启动

bin/flink run -m hadoop101:8081 -c com.hzy.gmall.realtime.app.dwd.BaseLogApp ./gmall2022-realtime-1.O-SNAPSHOT-jar-with-dependencies.jar

*phoenix驱动不识别,需要加Class.forName指定

*找不到hadoop和hbase等相关的jar

原因:NoClassDefoundError:这个错误编译期间不会报,运行期间才会包。原因是运行期间找不到这个类或无法加载,这个比较复杂。我的做法是把类所在jar包放在flink lib下重启集群就不会出现这个问题。

解决:

>在my.env环境变量中添加

export HADOOP_CLASSPATH=`hadoop classpath`

>在flink的lib目录下创建执行hbase的lib的软连接

ln -s /opt/module/hbase/lib/ ./

*和官方jar包冲突

Caused by: java.lang.ClassCastException: org.codehaus.janino.CompilerFactory cannot be cast to org.codehaus.commons.compiler.ICompilerFactory

将程序中flink、hadoop相关以及三个日志包的scope调整为provided,<scope>provided</scope>

注意:不包含connector相关的