关于OpenResty+doujiang24/lua-resty-kafka写入kafka故障转移模拟测试

关于OpenResty+doujiang24/lua-resty-kafka写入kafka故障转移模拟测试

PS:文章中用到的ip和代码已脱敏

1. 环境

请查看这篇文章https://editor.csdn.net/md/?articleId=122735525

2. 配置

kafka地址:

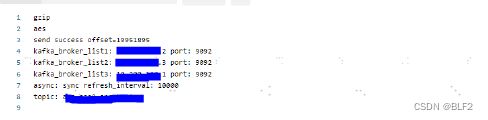

kafka_broker_list={

{host="193.168.1.2",port=9092},

{host="193.168.1.3",port=9092},

{host="193.168.1.1",port=9092}

}

发送脚本:

local kafka_broker_list={

{host="193.168.1.2",port=9092},

{host="193.168.1.3",port=9092},

{host="193.168.1.1",port=9092}

}

local kafka_topic_app = "topic_app"

local p = producer:new(kafka_broker_list, {producer_type = "sync",refresh_interval=10000})

local offset, err = p:send(kafka_topic_app, nil, body)

将我的服务打包成docker image,使用的基础镜像是debian10

测试是在某个服务器上安装的docker中进行的

3. 过程

3.1 服务器上启动容器脚本

docker run -d -it -p 0.0.0.0:9000:8080 --name nginx-kafka --privileged=true nginx-kafka:0.0.1 /bin/bash

说明:

-p 0.0.0.0:9000:8080开放ECS(10.11.12.13)的9000端口访问,流量会转发到容器的8080端口--privileged=true开放特权,否则root账户不可操作iptables

3.2 启动容器后安装iptables

apt-get install iptables -y

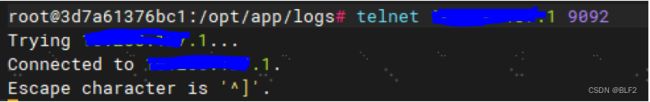

3.3 测试与kafka连通性

telnet 192.168.1.1 9092

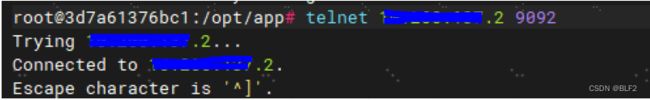

telnet 192.168.1.2 9092

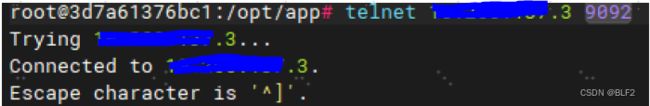

telnet 192.168.1.3 9092

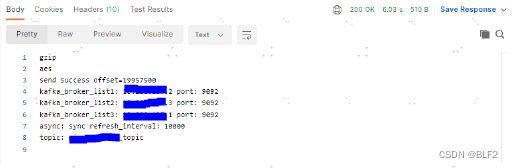

3.4 postman调用测试

3.5 封禁容器对 192.168.1.1 的访问

iptables -A OUTPUT -d 192.168.1.1 -j DROP

一直在尝试连接

此时nginx的error.log无任何输出。

使用postman调用报送接口:

可以看到发送kafka消息超时了。

3.6 封禁容器对 192.168.1.1,192.168.1.2 的访问

iptables -A OUTPUT -d 192.168.1.2 -j DROP

发现已经连接不上了。

此时使用postman测试报送,结果和章节3.5一致。

3.7 封禁容器对 192.168.1.1,192.168.1.2,192.168.1.3 的访问

iptables -A OUTPUT -d 192.168.1.3 -j DROP

日志中出现了连接192.168.1.1:9092,192.168.1.2:9092,192.168.1.3:9092的报错,也有fetch_metadata的报错

2023/03/22 14:57:56 [error] 51#0: *1450 lua tcp socket connect timed out, when connecting to 192.168.1.1:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:57:56 [error] 51#0: *1450 [lua] client.lua:151: _fetch_metadata(): all brokers failed in fetch topic metadata, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:57:56 [error] 51#0: *1452 lua tcp socket connect timed out, when connecting to 192.168.1.1:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:57:56 [error] 51#0: *1452 [lua] client.lua:151: _fetch_metadata(): all brokers failed in fetch topic metadata, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:57:58 [error] 51#0: *1466 lua tcp socket connect timed out, when connecting to 192.168.1.2:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:58:00 [error] 51#0: *1469 lua tcp socket connect timed out, when connecting to 192.168.1.2:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:58:01 [error] 51#0: *1466 lua tcp socket connect timed out, when connecting to 192.168.1.3:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:58:03 [error] 51#0: *1469 lua tcp socket connect timed out, when connecting to 192.168.1.3:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:58:04 [error] 51#0: *1466 lua tcp socket connect timed out, when connecting to 192.168.1.1:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 14:58:04 [error] 51#0: *1466 [lua] client.lua:151: _fetch_metadata(): all brokers failed in fetch topic metadata, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

此时使用postman测试报送,结果和章节3.5一致。

3.8 删除封禁 192.168.1.1

iptables -t filter -D OUTPUT -d 192.168.1.1 -j DROP

测试连通性:

2023/03/22 15:02:22 [error] 51#0: *1912 lua tcp socket connect timed out, when connecting to 192.168.1.2:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 15:02:23 [error] 51#0: *1915 lua tcp socket connect timed out, when connecting to 192.168.1.2:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 15:02:23 [error] 51#0: *1917 lua tcp socket connect timed out, when connecting to 192.168.1.2:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 15:02:25 [error] 51#0: *1912 lua tcp socket connect timed out, when connecting to 192.168.1.3:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 15:02:26 [error] 51#0: *1915 lua tcp socket connect timed out, when connecting to 192.168.1.3:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

2023/03/22 15:02:26 [error] 51#0: *1917 lua tcp socket connect timed out, when connecting to 192.168.1.3:9092, context: ngx.timer, client: 192.168.1.4, server: 0.0.0.0:8080

奇迹般的可以访问了。

结论

-

封禁192.168.1.1,开发其它两个ip访问时埋点报送无法发送至kafka,没能故障转移

-

解除192.168.1.1封禁时可以正确的将埋点发送到kafka,可以认为当此ip恢复访问时,kafka可以正确的恢复。

以上结论可以类推某个ip如果一直无法访问就没法将请求消息正确发送到kafka,此ip恢复时即可正确发送到kafka

-------------------------->20230327分界线<-------------------------------

写完这篇文章后,收集了一下资料,也请教了Kafka大佬,上面的测试存在一定的问题,Kafka是一主(leader)多从(follower)架构,当leader节点正常时,metadata数据会一直显示leader节点正常,网络不通不代表leader节点挂了。当leader节点确实挂了后,zk会重新选举新的leader节点,此时client端接收到这个信息后会自动故障转移。

我把上面的测试在作者的github上提了个issue,很开心得到了作者的耐心解答:

所以综上,当leader网络从不通变为通畅时,client端还是可以重新连接到leader发消息的。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-T2ZS4CYe-1679469875060)(C:\Users\E104239\AppData\Roaming\Typora\typora-user-images\image-20230322142817103.png)]](http://img.e-com-net.com/image/info8/39757483531445ce993e45eb4bb0601b.jpg)