Building log, PV (page view) and visit statistics at the gateway with gateway + Kafka + Spark + MongoDB

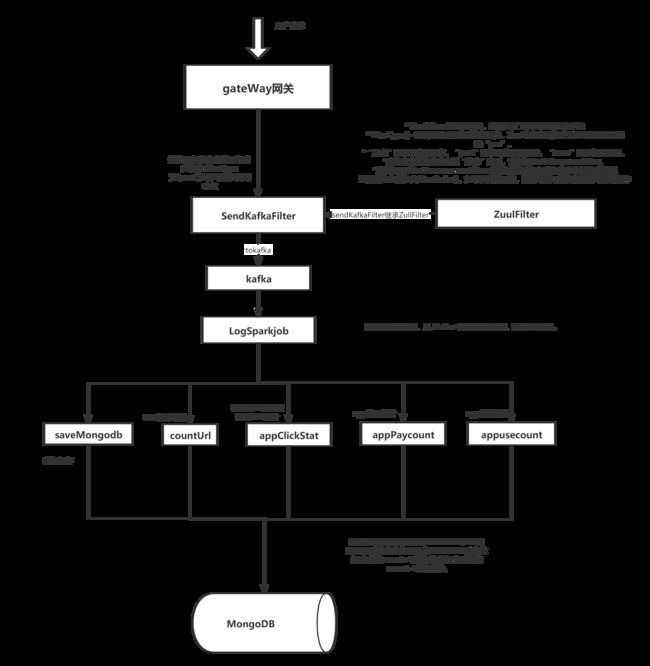

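- 1. Flow diagram

- 2. Gateway configuration

  - 2.1 Kafka configuration

  - Create SendKafkaFilter extending ZuulFilter

- 3. Building the Spark consumer service

  - 3.1 Add the pom dependencies

  - 3.2 yml configuration

  - 3.3 Reading the configuration

  - 3.4 Create a @PostConstruct method to receive data

  - 3.5 Reading data directly from Kafka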

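1. Flow diagram

Because the project separates front end and back end, and coordinating with the front-end team was inconvenient, no tracking code was added on the front end. Instead, the gateway sends the request and response information to Kafka.

2. Gateway configuration

2.1 Kafka configuration

Add the pom dependency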

<dependency>

    <groupId>org.springframework.kafka</groupId>

    <artifactId>spring-kafka</artifactId>

</dependency>

Configure Kafka

package com.aeotrade.gateway.kafka;

import org.springframework.boot.autoconfigure.kafka.KafkaProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.kafka.core.DefaultKafkaProducerFactory;

import org.springframework.kafka.core.KafkaTemplate;

import javax.annotation.Resource;

import java.util.Map;

@Configuration

public class KafkaProducerConfig {

@Resource

private KafkaProperties kafkaProperties = null;

@Bean

public KafkaTemplate<String, String> kafkaTemplate() {

Map<String, Object> params = kafkaProperties.buildProducerProperties();

DefaultKafkaProducerFactory<String, String> producerFactory = new DefaultKafkaProducerFactory<>(params);

// autoFlush = true: flush after every send (simple, at the cost of throughput)

return new KafkaTemplate<>(producerFactory, true);

}

}

Inject DocumentSender, which sends the messages and records failed sends

package com.aeotrade.gateway.kafka;

import org.apache.commons.lang3.time.DateFormatUtils;

import org.bson.Document;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.mongodb.core.MongoTemplate;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.kafka.support.SendResult;

import org.springframework.stereotype.Component;

import org.springframework.util.concurrent.ListenableFuture;

import org.springframework.util.concurrent.ListenableFutureCallback;

import javax.annotation.Resource;

import java.io.PrintWriter;

import java.io.StringWriter;

@Component

public class DocumentSender {

private static final Logger logger = LoggerFactory.getLogger(DocumentSender.class.getName());

private String sendFailCollection = "kafka_send_fail";

@Resource

private KafkaTemplate<String,String> kafkaTemplate = null;

@Autowired

private MongoTemplate mongoTemplate;

/**

* Sends a MongoDB Document (serialized as a JSON string) to Kafka

* @param topic

* @param doc

*/

public void sendDocment(String topic, String doc) {

logger.info("Sending message to Kafka topic {}", topic);

ListenableFuture<SendResult<String, String>> future = kafkaTemplate.send(topic, doc);

future.addCallback(new ListenableFutureCallback<SendResult<String, String>>() {

@Override

public void onFailure(Throwable throwable) {

saveFailure(topic, doc, throwable);

}

@Override

public void onSuccess(SendResult<String, String> sendResult) {

logger.info("Kafka send succeeded: {}", sendResult);

}

});

}

/**

* Records a failed send in MongoDB

* @param topic

* @param doc

* @param throwable

*/

private void saveFailure(String topic, String doc, Throwable throwable) {

logger.error("Kafka send failed, topic={}, doc={}", topic, doc, throwable);

Document resultDoc = new Document();

resultDoc.put("topic", topic);

resultDoc.put("doc", doc);

resultDoc.put("error", getStackTraceAsString(throwable));

// HH is the 24-hour clock; hh would make 01:00 and 13:00 indistinguishable

resultDoc.put("error_time", DateFormatUtils.format(System.currentTimeMillis(), "yyyy-MM-dd HH:mm:ss"));

mongoTemplate.save(resultDoc, sendFailCollection);

}

public static String getStackTraceAsString(Throwable throwable) {

StringWriter stringWriter = new StringWriter();

throwable.printStackTrace(new PrintWriter(stringWriter));

return stringWriter.toString();

}

}

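Create SendKafkaFilter extending ZuulFilter

The Zuul gateway defines four types of filters:

pre: invoked before the request is routed (forwarded)

route: invoked while the request is being routed

error: invoked when an error occurs in the gateway

post: invoked after the request has been routed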
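To make the ordering of the four filter types concrete, here is a minimal sketch that simulates the lifecycle as I understand it from Zuul 1: a failure in pre or route triggers the error filters, and post still runs afterwards. The `Stage` interface and the `handle` method are hypothetical names for this illustration only, not part of Zuul's API.

```java
import java.util.ArrayList;
import java.util.List;

public class FilterLifecycleSketch {

    /** Hypothetical stand-in for one group of filters that may fail. */
    public interface Stage {
        void run() throws Exception;
    }

    /** Runs the stages in pre -> route -> post order and returns the filter types that fired. */
    public static List<String> handle(Stage pre, Stage route, Stage post) {
        List<String> fired = new ArrayList<>();
        try {
            fired.add("pre");
            pre.run();
            fired.add("route");
            route.run();
        } catch (Exception e) {
            // A failure in pre or route hands over to the error filters.
            fired.add("error");
        }
        try {
            // post runs for successful and failed requests alike.
            fired.add("post");
            post.run();
        } catch (Exception e) {
            fired.add("error");
        }
        return fired;
    }

    public static void main(String[] args) {
        Stage ok = () -> { };
        Stage failing = () -> { throw new IllegalStateException("backend down"); };
        System.out.println(handle(ok, ok, ok));      // [pre, route, post]
        System.out.println(handle(ok, failing, ok)); // [pre, route, error, post]
    }
}
```

This is why a post filter is a convenient place for access logging: it sees the final response status whether or not routing succeeded.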

final RequestContext currentContext = RequestContext.getCurrentContext();

final SecurityContext context = SecurityContextHolder.getContext();

final Authentication authentication = context.getAuthentication();

final HttpServletRequest request = currentContext.getRequest();

HttpServletResponse response = currentContext.getResponse();

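From the objects above you can obtain the request method, URL, IP, calling system and parameters, as well as the response status code, the security context, and so on.

Filter these according to your own needs. Requests for static resources such as .js, .css and .ico must be filtered out. The code follows.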
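The static-resource check could be sketched as a small helper like the one below. It is only an illustration: the class name, the method name and the suffix list are assumptions, and unlike a plain `endsWith` chain it also ignores the query string and upper-case extensions.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

public class StaticResourceMatcher {

    // Hypothetical suffix list; extend it to match what the gateway actually serves.
    private static final Set<String> STATIC_SUFFIXES = new HashSet<>(
            Arrays.asList(".js", ".css", ".ico", ".jpg", ".png"));

    /** Returns true when the URI should be logged, false for static resources. */
    public static boolean shouldLog(String uri) {
        if (uri == null || uri.isEmpty()) {
            return false;
        }
        // Drop any query string, then compare the extension case-insensitively.
        int q = uri.indexOf('?');
        String path = (q >= 0 ? uri.substring(0, q) : uri).toLowerCase(Locale.ROOT);
        int dot = path.lastIndexOf('.');
        int slash = path.lastIndexOf('/');
        if (dot < 0 || dot < slash) {
            return true; // the last path segment has no extension
        }
        return !STATIC_SUFFIXES.contains(path.substring(dot));
    }

    public static void main(String[] args) {
        System.out.println(shouldLog("/cloud/app/12"));     // true
        System.out.println(shouldLog("/static/main.JS"));   // false
        System.out.println(shouldLog("/img/logo.png?v=3")); // false
    }
}
```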

Create the log entity

package com.aeotrade.gateway.dto;

import lombok.Data;

import org.springframework.data.mongodb.core.mapping.Document;

import java.io.Serializable;

import java.util.Date;

import java.util.Map;

/**

* @Author: yewei

* @Date: 17:18 2020/11/26

* @Description:

*/

@Document(collection = "hmm_log")

@Data

public class MgLogEntity implements Serializable {

private String id =null;

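/** User agent (client host / access method) */

private String userAgent=null;

/** User agent details (all request headers) */

private Map<String, String> userAgentDetils=null;

/** Requested path */

private String url=null;

/** Host the front end was accessed through */

private String host=null;

/** IP address of the user */

private String ip=null;

/** Details of the user making the request */

private Map<String, Object> userDetils=null;

/** Whether the user is logged in */

private Integer isLogin=null;

/** Time of the request */

private Date time=null;

/** HTTP request method */

private String wayRequest=null;

/** Staff name */

private String staffName=null;

/** Whether the request was intercepted */

private Integer isTercept=0;

/** Request parameters */

private String param =null;

/** Login type */

private String Logintype =null;

/** Response status code */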

private Integer status =200;

}

Filter configuration

package com.aeotrade.gateway.filter;

import com.aeotrade.exception.AeotradeException;

import com.aeotrade.gateway.dto.MgLogEntity;

import com.aeotrade.gateway.kafka.DocumentSender;

import com.aeotrade.gateway.mq.RabbitMQTopic;

import com.aeotrade.gateway.mq.RabbitSender;

import com.aeotrade.util.JacksonUtil;

import com.alibaba.fastjson.JSONObject;

import com.google.common.collect.Lists;

import com.netflix.zuul.ZuulFilter;

import com.netflix.zuul.context.RequestContext;

import com.netflix.zuul.exception.ZuulException;

import com.netflix.zuul.http.ServletInputStreamWrapper;

import io.micrometer.core.instrument.util.IOUtils;

import lombok.SneakyThrows;

import org.apache.commons.lang3.StringUtils;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.security.authentication.UsernamePasswordAuthenticationToken;

import org.springframework.security.core.Authentication;

import org.springframework.security.core.GrantedAuthority;

import org.springframework.security.core.context.SecurityContext;

import org.springframework.security.core.context.SecurityContextHolder;

import org.springframework.security.oauth2.provider.OAuth2Authentication;

import org.springframework.security.oauth2.provider.OAuth2Request;

import org.springframework.security.oauth2.provider.authentication.OAuth2AuthenticationDetails;

import org.springframework.util.StreamUtils;

import javax.servlet.ServletInputStream;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletRequestWrapper;

import javax.servlet.http.HttpServletResponse;

import javax.xml.ws.ResponseWrapper;

import java.io.*;

import java.nio.charset.Charset;

import java.util.*;

/**

* @Author: yewei

* @Date: 13:47 2020/12/2

* @Description: post filter that sends access logs to Kafka (and HTTP 500 responses to RabbitMQ)

*/

public class SendKafkaFilter extends ZuulFilter {

@Autowired

private RabbitSender rabbitSender;

@Autowired

private DocumentSender documentSender;

@Override

public String filterType() {

return "post";

}

@Override

public int filterOrder() {

return 0;

}

@Override

public boolean shouldFilter() {

return true;

}

@SneakyThrows

@Override

public Object run() throws ZuulException {

final RequestContext currentContext = RequestContext.getCurrentContext();

final SecurityContext context = SecurityContextHolder.getContext();

final Authentication authentication = context.getAuthentication();

final HttpServletRequest request = currentContext.getRequest();

HttpServletResponse response = currentContext.getResponse();

Boolean u= isURL(request.getRequestURI());

if(!u){

return null;

}

MgLogEntity log = new MgLogEntity();

Enumeration<String> names = request.getHeaderNames();

Map<String, String> enumenrt = new HashMap<>();

// Header names are case-insensitive, so store them lower-cased for the lookups below

while (names.hasMoreElements()) {

String name = names.nextElement();

enumenrt.put(name.toLowerCase(Locale.ROOT), request.getHeader(name));

}

// Mask the token so the real credential is never printed or sent to Kafka

if(enumenrt.containsKey("authorization")){

enumenrt.put("authorization","bearer");

}

// Request method

String method = request.getMethod();

// Read the request body from the input stream

InputStream in = request.getInputStream();

String body = StreamUtils.copyToString(in, Charset.forName("UTF-8"));

log.setIsLogin(0);

log.setUserAgent(enumenrt.get("user-agent"));

log.setUserAgentDetils(enumenrt);

log.setUrl(request.getRequestURI());

log.setHost(enumenrt.get("host"));

log.setIp(enumenrt.get("x-real-ip"));

log.setTime(new Date(System.currentTimeMillis()));

log.setWayRequest(method);

log.setParam(StringUtils.isEmpty(body)?"":body);

if(method.equals("GET")) {

String queryString = request.getQueryString(); // query-string parameters

log.setParam(StringUtils.isEmpty(queryString)?"":queryString);

}

if(request.getRequestURI().equals("/wx/wxcat/image")){

log.setParam(null);

}

if (!(authentication instanceof OAuth2Authentication)){

log.setIsLogin(0);

int status = response.getStatus();

System.out.println("Response status: " + status);

log.setStatus(status);

// Send to Kafka

sendKafka(log);

if(status==500) {

sendMq(log);

}

return null;

}

final OAuth2Authentication oAuth2Authentication = (OAuth2Authentication) authentication;

final UsernamePasswordAuthenticationToken userAuthentication = (UsernamePasswordAuthenticationToken) oAuth2Authentication.getUserAuthentication();

final String principal = (String) userAuthentication.getPrincipal();

if (!StringUtils.containsIgnoreCase(principal,"{")){

return null;

}

List<String> authorities=new ArrayList<>();

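try {

// If the body is empty, initialize it as an empty JSON object

if (StringUtils.isBlank(body)) {

body = "{}";

}

// The principal is a JSON string holding the user details

Map<String, Object> map= JacksonUtil.parseJson(principal,Map.class);

log.setIsLogin(1);

// getvalue() returns an empty string instead of throwing when a key is missing

log.setLogintype(getvalue(map.get("loginType")));

log.setStaffName(getvalue(map.get("staffName")));

log.setUserDetils(map);

// Record the real response status for logged-in requests too (otherwise it stays at the default 200)

log.setStatus(response.getStatus());

// Send to Kafka

sendKafka(log);

//sendMq(log);

} catch (Exception e) {

e.printStackTrace();

}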

return null;

}

private Boolean isURL(String url) {

if(url.endsWith(".js")){

return false;

}else if (url.endsWith(".css")){

return false;

}else if (url.endsWith(".ico")){

return false;

}else if (url.endsWith(".jpg")){

return false;

}else if (url.endsWith(".png")){

return false;

}

return true;

}

private void sendKafka(MgLogEntity log) {

String s = JSONObject.toJSONString(log);

documentSender.sendDocment("log_message",s);

}

private void sendMq(MgLogEntity log) throws Exception {

Map<String, Object> maps = new HashMap<>();

String s = JSONObject.toJSONString(log);

rabbitSender.send(RabbitMQTopic.Log,s,maps);

}

private String getvalue(Object o){

return o!=null?o.toString(): StringUtils.EMPTY;

}

}

3. Building the Spark consumer service

3.1 Add the pom dependencies

<dependency>

    <groupId>org.apache.spark</groupId>

    <artifactId>spark-core_2.12</artifactId>

    <version>2.4.4</version>

    <exclusions>

        <exclusion>

            <artifactId>slf4j-log4j12</artifactId>

            <groupId>org.slf4j</groupId>

        </exclusion>

    </exclusions>

</dependency>

<dependency>

    <groupId>org.apache.spark</groupId>

    <artifactId>spark-sql_2.12</artifactId>

    <version>2.4.4</version>

    <exclusions>

        <exclusion>

            <artifactId>janino</artifactId>

            <groupId>org.codehaus.janino</groupId>

        </exclusion>

    </exclusions>

</dependency>

<dependency>

    <groupId>org.apache.spark</groupId>

    <artifactId>spark-streaming_2.12</artifactId>

    <version>2.4.0</version>

</dependency>

<dependency>

    <groupId>org.apache.spark</groupId>

    <artifactId>spark-streaming-kafka-0-10_2.12</artifactId>

    <version>2.4.5</version>

</dependency>

<dependency>

    <groupId>org.apache.spark</groupId>

    <artifactId>spark-sql-kafka-0-10_2.12</artifactId>

    <version>2.4.5</version>

</dependency>

<dependency>

    <groupId>org.mongodb.spark</groupId>

    <artifactId>mongo-spark-connector_2.12</artifactId>

    <version>2.4.1</version>

</dependency>

3.2 yml配置

kafka:

bootstrap-servers: 192.168.0.201:9092

spark:

mongodb:

out:

collection: mongodb://aeotrade:*******@192.168.0.201:27017/aeotrade.company_dec_message

aeotrade:

topic:

name: log_message

group-id: log_message_group

mongodb:

uri: mongodb://aeotrade:*******@192.168.0.201:27017/aeotrade

db: aeotrade

username: aeotrade

password: *******

data:

mongodb:

host: 192.168.1.201

port: 27017

uri: mongodb://aeotrade:*******@192.168.0.201:27017/aeotrade

username: aeotrade

password: *******

database: aeotrade

3.3 Reading the configuration

SparkConfiguration

package com.aeotrade.provider.config;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.spark.SparkConf;

import org.apache.spark.sql.SparkSession;

import java.util.Arrays;

public class SparkConfiguration {

private TopicProperties topicProperties = null;

public SparkConfiguration(TopicProperties properties){

this.topicProperties = properties;

}

public SparkSession sparkSession() {

return SparkSession.builder().config(getSparkConf(topicProperties))

.getOrCreate();

}

public SparkConf getSparkConf(TopicProperties properties) {

SparkConf sparkConf = new SparkConf()

.setAppName("local_spark_statistics")

.setMaster("local")

.set("spark.serializer","org.apache.spark.serializer.KryoSerializer")

.set("spark.mongodb.output.uri",properties.getSparkMongodbOutCollection());

// Build the Class<?>[] directly; casting the Object[] from toArray() fails with ClassCastException on Java 9+

sparkConf.registerKryoClasses(new Class<?>[]{ConsumerRecord.class});

return sparkConf;

}

}

TopicProperties

package com.aeotrade.provider.config;

import lombok.Data;

import lombok.ToString;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Configuration;

import java.io.Serializable;

import java.util.HashMap;

import java.util.Map;

//@ConfigurationProperties(

// prefix = "aeotrade"

//)

@Configuration

@Data

@ToString

public class TopicProperties implements Serializable {

@Value("${aeotrade.topic.name}")

private String name = null;

@Value("${aeotrade.topic.group-id}")

private String groupId = "dec_msg_group";

// MongoDB server address, mongodb://host:port/

@Value("${aeotrade.mongodb.uri}")

private String mongodburi = null;

@Value("${aeotrade.mongodb.db}")

private String mongoDatabase = null;

@Value("${aeotrade.mongodb.username}")

private String mongoUsername = null;

@Value("${aeotrade.mongodb.password}")

private String mongoPassword = null;

@Value("${spark.mongodb.out.collection}")

private String sparkMongodbOutCollection;

private HashMap<String, String> mongodbConfig = null;

public HashMap<String, String> toMongoConfig() {

//HashMap mongodbConfig = null;

if (mongodbConfig == null) {

mongodbConfig = new HashMap<>();

mongodbConfig.put("uri", mongodburi);

mongodbConfig.put("database", mongoDatabase);

mongodbConfig.put("userName",mongoUsername);

mongodbConfig.put("password",mongoPassword);

}

return mongodbConfig;

}

public Map<String, String> getMongodbConfig() {

return toMongoConfig();

}

}

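3.4 Create a @PostConstruct method to receive data

Many people assume the @PostConstruct annotation is provided by Spring. It is actually a standard Java annotation (javax.annotation.PostConstruct).

According to its Java documentation, @PostConstruct marks a non-static void method. In a servlet container, a method annotated with @PostConstruct runs once when the server loads the Servlet: after the constructor and before the init() method.

We usually meet @PostConstruct in the Spring framework, where the annotated method runs at this point of bean initialization:

Constructor -> @Autowired (dependency injection) -> @PostConstruct (annotated method)

Typical use: making the methods of an injected bean callable from a static method, for example by storing the bean in a static field inside the @PostConstruct method.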

package com.aeotrade.provider.consumer;

import com.aeotrade.provider.config.TopicProperties;

import com.aeotrade.provider.dto.MgLogEntity;

import com.aeotrade.provider.kafka.DocumentSender;

import com.aeotrade.provider.model.entity.LogConfiguration;

import com.aeotrade.provider.service.LogConfigurationService;

import com.alibaba.fastjson.JSONObject;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.springframework.beans.BeansException;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.ApplicationContext;

import org.springframework.context.ApplicationContextAware;

import org.springframework.data.domain.PageRequest;

import org.springframework.data.mongodb.core.MongoTemplate;

import org.springframework.data.mongodb.core.query.Query;

import org.springframework.stereotype.Component;

import javax.annotation.PostConstruct;

import javax.annotation.PreDestroy;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

/**

* @Author: yewei

* @Date: 14:17 2020/11/30

* @Description:

*/

@Component

public class LogConsumer implements ApplicationContextAware {

@Value("${spring.kafka.bootstrap-servers}")

private String bootstrapServers = null;

@Autowired

private DocumentSender documentSender;

@Autowired

private TopicProperties topicProperties = null;

@Autowired

private MongoTemplate mongoTemplate;

@PostConstruct

public void start() throws InterruptedException {

System.out.println("=============== data streaming start ========== ");

Map<String, Object> kafkaParams = new HashMap<>();

kafkaParams.put("bootstrap.servers", bootstrapServers);

kafkaParams.put("key.deserializer", StringDeserializer.class);

kafkaParams.put("value.deserializer", StringDeserializer.class);

kafkaParams.put("group.id", topicProperties.getGroupId());

kafkaParams.put("auto.offset.reset", "earliest");//latest , earliest

kafkaParams.put("enable.auto.commit", true);

//List rules = labelService.rules();

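// The job blocks in awaitTermination(), so run it on its own thread and let Spring finish starting up

new Thread(() -> {

try {

new LogSparkjob().start(kafkaParams, topicProperties);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

}

}, "log-spark-job").start();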

}

@Override

public void setApplicationContext(ApplicationContext applicationContext) throws BeansException {

try{

// Load your own business logic here

LogConfigurationService logConfigurationService = applicationContext.getBean(LogConfigurationService.class);

List<LogConfiguration> list = logConfigurationService.findAll();

list.forEach(log->{

System.out.println("Rule details ____________________________________");

System.out.println(log);

});

}catch (Exception ex) {

ex.printStackTrace(System.err);

}

}

@PreDestroy

public void shutdown() {

System.out.println("=============== data streaming shutdown ");

}

}

3.5 Reading data directly from Kafka

1. Create the JavaStreamingContext object

Collection<String> topics = Arrays.asList(topicProps.getName());

SparkConfiguration configuration = new SparkConfiguration(topicProps);

final SparkSession session = configuration.sparkSession();

final JavaSparkContext sparkContext = new JavaSparkContext(session.sparkContext());

// Set up the stream, reading one batch every 5 seconds

// Create the JavaStreamingContext object

JavaStreamingContext streamingContext = new JavaStreamingContext(sparkContext, Durations.seconds(5));

// Set up a direct stream that reads from Kafka without a receiver

// Create the DStream

JavaInputDStream<ConsumerRecord<String, String>> stream = KafkaUtils.createDirectStream(

streamingContext,

LocationStrategies.PreferConsistent(),

ConsumerStrategies.<String, String>Subscribe(topics, params)

);

System.out.println("==================== stream ============== ");

// Transform the records with Spark's flatMap operation

JavaDStream<MgLogEntity> docStream = stream.flatMap(new LogTransformerFunction(topicProperties.toMongoConfig()));

Processing happens in docStream.foreachRDD. MongoTemplate or MyBatis cannot be injected directly there, so the code uses MongoConnector to create a connection itself.
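One common reason injected clients are awkward inside Spark closures is that the objects a closure captures may be serialized and shipped to another JVM, and a client such as MongoTemplate is not serializable. The sketch below illustrates the usual workaround in plain Java, without Spark: carry only serializable configuration and create the client lazily where the code runs. `Client`, `EagerWriter` and `LazyWriter` are hypothetical names for this illustration.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.NotSerializableException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

public class ClosureSerializationSketch {

    /** Hypothetical stand-in for a non-serializable client such as MongoTemplate. */
    public static class Client {
        public String save(String doc) { return "saved:" + doc; }
    }

    /** Holds the client directly, so it cannot be shipped to another JVM. */
    public static class EagerWriter implements Serializable {
        private final Client client = new Client();
        public String write(String doc) { return client.save(doc); }
    }

    /** Carries only serializable config and opens the client lazily where it runs. */
    public static class LazyWriter implements Serializable {
        private final String uri;        // plain config travels with the task
        private transient Client client; // skipped during serialization
        public LazyWriter(String uri) { this.uri = uri; }
        public String write(String doc) {
            if (client == null) {
                client = new Client();   // a real job would connect to `uri` here
            }
            return client.save(doc);
        }
    }

    /** Returns true when Java serialization accepts the object. */
    public static boolean isSerializable(Object o) {
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            out.writeObject(o);
            return true;
        } catch (NotSerializableException e) {
            return false;
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(isSerializable(new EagerWriter()));                   // false
        System.out.println(isSerializable(new LazyWriter("mongodb://host/db"))); // true
    }
}
```

Passing a plain config map and building the connection on demand follows the same idea.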

2. Multi-dimensional statistics

private void countUrl(MgLogEntity logEntity) throws IOException {

String format = new SimpleDateFormat("yyyyMMdd").format(logEntity.getTime());

int countTime = Integer.parseInt(format);

Document query = new Document();

query.put("countTime",countTime);

query.put("url",logEntity.getUrl());

Document document = MongodbService.findDocument(LogDomentName.urlPv, query, topicProperties.toMongoConfig());

if(document==null){

MgLogUrlStat logUrlStat = new MgLogUrlStat();

BeanUtils.copyProperties(logEntity,logUrlStat);

logUrlStat.setCountTime(countTime);

logUrlStat.setCount(1);

MongodbService.saveMongodb(LogDomentName.urlPv, JacksonUtil.parseJson(JacksonUtil.

toJson(logUrlStat), Document.class),topicProperties.toMongoConfig());

}else {

Integer count = document.getInteger("count");

Integer integer = count+1;

document.put("count",integer);

MongodbService.saveMongodb(LogDomentName.urlPv, document,topicProperties.toMongoConfig());

}

}

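Before each insert, the request time is converted to an int in yyyyMMdd form and used to check whether a row already exists for that day. If it does, count is incremented by 1; if not, a new row is inserted with count = 1. This guarantees that each URL has only one row per day.

The same scheme extends to other dimensions such as day, week, month, quarter and year, and the stored statistics stay small.

That is also why I did not simply add a column to the raw log table. This approach leaves room for a lot of custom business logic,

and records can also be matched against rules to filter them.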
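As a rough sketch of how the bucketing could extend beyond days, the example below derives an int key per granularity and keeps one counter per (bucket, URL). The `Granularity` enum, `bucketKey` and the in-memory map are assumptions for illustration; the map only stands in for the statistics collection.

```java
import java.time.LocalDate;
import java.time.temporal.IsoFields;
import java.util.HashMap;
import java.util.Map;

public class BucketCounterSketch {

    public enum Granularity { DAY, WEEK, MONTH, QUARTER, YEAR }

    /** Builds an int key such as 20201203 (day) or 202012 (month). */
    public static int bucketKey(LocalDate date, Granularity g) {
        switch (g) {
            case DAY:
                return date.getYear() * 10000 + date.getMonthValue() * 100 + date.getDayOfMonth();
            case WEEK:
                // ISO week-based year followed by the two-digit week number
                return date.get(IsoFields.WEEK_BASED_YEAR) * 100
                        + date.get(IsoFields.WEEK_OF_WEEK_BASED_YEAR);
            case MONTH:
                return date.getYear() * 100 + date.getMonthValue();
            case QUARTER:
                return date.getYear() * 10 + date.get(IsoFields.QUARTER_OF_YEAR);
            default:
                return date.getYear();
        }
    }

    // In-memory stand-in for the statistics collection: "bucket|url" -> count.
    private final Map<String, Integer> counts = new HashMap<>();

    /** One row per (bucket, url): start at 1, otherwise increment. Returns the new count. */
    public int record(String url, LocalDate date, Granularity g) {
        return counts.merge(bucketKey(date, g) + "|" + url, 1, Integer::sum);
    }

    public static void main(String[] args) {
        BucketCounterSketch stats = new BucketCounterSketch();
        LocalDate day = LocalDate.of(2020, 12, 3);
        stats.record("/cloud/app/12", day, Granularity.DAY);
        System.out.println(stats.record("/cloud/app/12", day, Granularity.DAY)); // 2
        System.out.println(bucketKey(day, Granularity.DAY));     // 20201203
        System.out.println(bucketKey(day, Granularity.QUARTER)); // 20204
    }
}
```

Note that a separate find followed by a save is not atomic, so concurrent writers could lose increments. With the MongoDB Java driver the same upsert can be expressed as a single `updateOne` using `Updates.inc("count", 1)` and `new UpdateOptions().upsert(true)`; whether that fits the MongoConnector setup used here is untested.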

The full source code follows.

Code that consumes the Kafka data, filters it, and saves each kind of statistic:

package com.aeotrade.provider.consumer;

import com.aeotrade.provider.config.SparkConfiguration;

import com.aeotrade.provider.config.TopicProperties;

import com.aeotrade.provider.dto.MgLogEntity;

import com.aeotrade.provider.model.entity.MgAppClickStat;

import com.aeotrade.provider.model.entity.MgAppPayStat;

import com.aeotrade.provider.model.entity.MgLogUrlStat;

import com.aeotrade.provider.service.LogDomentName;

import com.aeotrade.provider.service.MongodbService;

import com.aeotrade.provider.utils.JacksonUtil;

import com.alibaba.fastjson.JSONObject;

import com.mongodb.client.FindIterable;

import com.mongodb.client.MongoCollection;

import com.mongodb.spark.MongoConnector;

import com.mongodb.spark.config.ReadConfig;

import lombok.extern.slf4j.Slf4j;

import org.apache.commons.lang3.StringUtils;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.sql.SparkSession;

import org.apache.spark.streaming.Durations;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaInputDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka010.ConsumerStrategies;

import org.apache.spark.streaming.kafka010.KafkaUtils;

import org.apache.spark.streaming.kafka010.LocationStrategies;

import org.bson.Document;

import org.springframework.beans.BeanUtils;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.mongodb.core.query.Criteria;

import org.springframework.data.mongodb.core.query.Query;

import java.io.IOException;

import java.io.Serializable;

import java.text.SimpleDateFormat;

import java.util.*;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

/**

* SparkJob for the Huimaomao (慧贸贸) logs

*/

@Slf4j

public class LogSparkjob implements Serializable {

public static final String UNIQUE_KEY_FIELD = "_unique_key";

public static final String BUS_KEY_FIELD = "bid";

private TopicProperties topicProperties = null;

public void start(Map<String, Object> params, TopicProperties topicProps) throws InterruptedException {

this.topicProperties = topicProps;

Collection<String> topics = Arrays.asList(topicProps.getName());

SparkConfiguration configuration = new SparkConfiguration(topicProps);

final SparkSession session = configuration.sparkSession();

final JavaSparkContext sparkContext = new JavaSparkContext(session.sparkContext());

// Set up the stream, reading one batch every 5 seconds

// Create the JavaStreamingContext object

JavaStreamingContext streamingContext = new JavaStreamingContext(sparkContext, Durations.seconds(5));

// Set up a direct stream that reads from Kafka without a receiver

// Create the DStream

JavaInputDStream<ConsumerRecord<String, String>> stream = KafkaUtils.createDirectStream(

streamingContext,

LocationStrategies.PreferConsistent(),

ConsumerStrategies.<String, String>Subscribe(topics, params)

);

System.out.println("==================== stream ============== ");

// Transform the records with Spark's flatMap operation

JavaDStream<MgLogEntity> docStream = stream.flatMap(new LogTransformerFunction(topicProperties.toMongoConfig()));

docStream.print(10);

docStream.foreachRDD(rdd->{

List<MgLogEntity> collect = rdd.collect();

collect.forEach(logEntity->{

if(logEntity!=null) {

try {

/** Save the raw log record */

MongodbService.saveMongodb("hmm_log", JacksonUtil.parseJson(JacksonUtil.

toJson(logEntity), Document.class),topicProperties.toMongoConfig());

/** Count visits per URL and day */

countUrl(logEntity);

/** Count app clicks: URLs under /cloud/app/ whose last path segment is a numeric app id */

Pattern pattern = Pattern.compile("^/cloud/app/(?:.*/)?\\d+$");

Matcher matcher = pattern.matcher(logEntity.getUrl());

if( matcher.find()) {

appClickStat(logEntity);

}

/** Count app purchases; appId and memberid are read from the parameters, so they must be present */

if(logEntity.getUrl().equals("/cloud/order/confirmpayment") && StringUtils.isNotEmpty(logEntity.getParam())

&& logEntity.getStatus()==200){

appPaycount(logEntity);

}

/** Count app usage; appId and id are read from the parameters, so they must be present */

if(logEntity.getUrl().equals("/cloud/app/oauth") && StringUtils.isNotEmpty(logEntity.getParam())

&& logEntity.getStatus()==200){

appusecount(logEntity);

}

} catch (IOException e) {

e.printStackTrace();

}

}

});

});

streamingContext.start();

try {

streamingContext.awaitTermination();

} catch (InterruptedException e) {

e.printStackTrace(System.err);

}

streamingContext.close();

}

private void appusecount(MgLogEntity logEntity) throws IOException {

String format = new SimpleDateFormat("yyyyMMdd").format(logEntity.getTime());

int countTime = Integer.parseInt(format);

Map<String, String> split = Split(logEntity.getParam());

Long appId = Long.valueOf(split.get("appId"));

Long memberid = Long.valueOf(split.get("id"));

Document query = new Document();

query.put("countTime",countTime);

query.put("url",logEntity.getUrl());

query.put("memberId",memberid);

query.put("appId",appId);

query.put("type",2);

Document document = MongodbService.findDocument(LogDomentName.appPay, query, topicProperties.toMongoConfig());

if(document==null){

MgAppPayStat app= new MgAppPayStat();

BeanUtils.copyProperties(logEntity,app);

app.setCountTime(countTime);

app.setAppId(appId);

app.setMemberId(memberid);

app.setCount(1);

app.setType(2);

// Save to the same collection that is queried above (appPay); otherwise the row is never found again

MongodbService.saveMongodb(LogDomentName.appPay, JacksonUtil.parseJson(JacksonUtil.

toJson(app), Document.class),topicProperties.toMongoConfig());

}else {

Integer count = document.getInteger("count");

Integer integer = count+1;

document.put("count",integer);

MongodbService.saveMongodb(LogDomentName.appPay, document,topicProperties.toMongoConfig());

}

}

private void appPaycount(MgLogEntity logEntity) throws IOException {

String format = new SimpleDateFormat("yyyyMMdd").format(logEntity.getTime());

int countTime = Integer.parseInt(format);

Map<String, String> split = Split(logEntity.getParam());

Long appId = Long.valueOf(split.get("appId"));

Long memberid = Long.valueOf(split.get("memberid"));

Document query = new Document();

query.put("countTime",countTime);

query.put("url",logEntity.getUrl());

query.put("appId",appId);

query.put("type",1);

Document document = MongodbService.findDocument(LogDomentName.appPay, query, topicProperties.toMongoConfig());

if(document==null){

MgAppPayStat app= new MgAppPayStat();

BeanUtils.copyProperties(logEntity,app);

app.setCountTime(countTime);

app.setAppId(appId);

app.setMemberId(memberid);

app.setCount(1);

app.setType(1);

MongodbService.saveMongodb(LogDomentName.appPay, JacksonUtil.parseJson(JacksonUtil.

toJson(app), Document.class),topicProperties.toMongoConfig());

}else {

Integer count = document.getInteger("count");

Integer integer = count+1;

document.put("count",integer);

MongodbService.saveMongodb(LogDomentName.appPay, document,topicProperties.toMongoConfig());

}

}

private void appClickStat(MgLogEntity logEntity) throws IOException {

boolean isClickUrl = logEntity.getUrl().startsWith("/cloud/app/");

if(isClickUrl) {

String format = new SimpleDateFormat("yyyyMMdd").format(logEntity.getTime());

int countTime = Integer.parseInt(format);

Document query = new Document();

query.put("countTime", countTime);

query.put("url", logEntity.getUrl());

Document document = MongodbService.findDocument(LogDomentName.appPv, query, topicProperties.toMongoConfig());

if (document == null) {

MgAppClickStat app = new MgAppClickStat();

BeanUtils.copyProperties(logEntity, app);

app.setCountTime(countTime);

String[] split = logEntity.getUrl().split("/");

Long aLong = Long.valueOf(split[split.length - 1]);

app.setAppId(aLong);

app.setCount(1);

MongodbService.saveMongodb(LogDomentName.appPv, JacksonUtil.parseJson(JacksonUtil.

toJson(app), Document.class), topicProperties.toMongoConfig());

} else {

Integer count = document.getInteger("count");

Integer integer = count + 1;

document.put("count", integer);

MongodbService.saveMongodb(LogDomentName.appPv, document, topicProperties.toMongoConfig());

}

}

}

private void countUrl(MgLogEntity logEntity) throws IOException {

String format = new SimpleDateFormat("yyyyMMdd").format(logEntity.getTime());

int countTime = Integer.parseInt(format);

Document query = new Document();

query.put("countTime",countTime);

query.put("url",logEntity.getUrl());

Document document = MongodbService.findDocument(LogDomentName.urlPv, query, topicProperties.toMongoConfig());

if(document==null){

MgLogUrlStat logUrlStat = new MgLogUrlStat();

BeanUtils.copyProperties(logEntity,logUrlStat);

logUrlStat.setCountTime(countTime);

logUrlStat.setCount(1);

MongodbService.saveMongodb(LogDomentName.urlPv, JacksonUtil.parseJson(JacksonUtil.

toJson(logUrlStat), Document.class),topicProperties.toMongoConfig());

}else {

Integer count = document.getInteger("count");

Integer integer = count+1;

document.put("count",integer);

MongodbService.saveMongodb(LogDomentName.urlPv, document,topicProperties.toMongoConfig());

}

}

public Map<String,String> Split(String urlparam){

Map<String,String> map = new HashMap<String,String>();

String[] param = urlparam.split("&");

for(String keyvalue:param){

String[] pair = keyvalue.split("=");

if(pair.length==2){

map.put(pair[0], pair[1]);

}

}

return map;

}

}
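The Split helper above drops pairs that do not split into exactly two parts and does not URL-decode values, so a missing appId later surfaces as a NumberFormatException. A slightly more defensive variant might look like the sketch below; `QueryStringSketch`, `parse` and `longParam` are hypothetical names, not part of the original project.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;
import java.util.LinkedHashMap;
import java.util.Map;

public class QueryStringSketch {

    /** Parses "a=1&b=x%20y" into a map; keys without a value map to an empty string. */
    public static Map<String, String> parse(String query) {
        Map<String, String> result = new LinkedHashMap<>();
        if (query == null || query.isEmpty()) {
            return result;
        }
        for (String pair : query.split("&")) {
            if (pair.isEmpty()) {
                continue;
            }
            // Split on the first '=' only, so values may themselves contain '='.
            int eq = pair.indexOf('=');
            String key = eq >= 0 ? pair.substring(0, eq) : pair;
            String value = eq >= 0 ? pair.substring(eq + 1) : "";
            result.put(decode(key), decode(value));
        }
        return result;
    }

    private static String decode(String s) {
        try {
            return URLDecoder.decode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    /** Returns the parameter as a Long, or null when it is absent or not numeric. */
    public static Long longParam(Map<String, String> params, String name) {
        String raw = params.get(name);
        if (raw == null) {
            return null;
        }
        try {
            return Long.valueOf(raw);
        } catch (NumberFormatException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        Map<String, String> p = parse("appId=12&name=x%20y&token=a=b");
        System.out.println(longParam(p, "appId"));    // 12
        System.out.println(p.get("name"));            // x y
        System.out.println(longParam(p, "memberid")); // null
    }
}
```

Callers can then skip a record when `longParam` returns null instead of letting the exception escape the batch.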

MongodbService, used mainly to query MongoDB and save to it

package com.aeotrade.provider.service;

import com.aeotrade.provider.dto.MgLogEntity;

import com.mongodb.client.FindIterable;

import com.mongodb.client.MongoCollection;

import com.mongodb.client.result.UpdateResult;

import com.mongodb.spark.MongoConnector;

import com.mongodb.spark.config.ReadConfig;

import com.mongodb.spark.config.WriteConfig;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.sql.execution.datasources.jdbc.JDBCRDD;

import org.bson.Document;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.mongodb.core.MongoTemplate;

import org.springframework.stereotype.Component;

import org.springframework.stereotype.Service;

import java.util.Date;

import java.util.Map;

/**

* @Author: yewei

* @Date: 9:16 2020/12/3

* @Description:

*/

@Component

public class MongodbService {

public static void saveMongodb(String collectionName, Document document, Map<String, String> mongoConfig) {

try {

Long time = document.getLong("time");

Date date = new Date(time);

document.put("time",date) ;

}catch (Exception e){

// "time" is missing or is not an epoch-millis Long: leave the document unchanged

}

mongoConfig.put("collection",collectionName);

mongoConfig.put("replaceDocument","false");

WriteConfig writeConfig = WriteConfig.create(mongoConfig);

MongoConnector connector = MongoConnector.create(writeConfig.asJavaOptions());

connector.withCollectionDo(writeConfig, Document.class, new Function<MongoCollection<Document>, Document>() {

@Override

public Document call(MongoCollection<Document> collection) throws Exception {

if (document.containsKey("_id")) {

Document searchDoc = new Document();

searchDoc.put("_id", document.get("_id"));

UpdateResult ur = collection.replaceOne(searchDoc, document);

if (ur.getMatchedCount() == 0) {

collection.insertOne(document);

}

} else {

collection.insertOne(document);

}

return document;

}

});

}

public static Document findDocument(String table, Document query, Map<String, String> mongoConfig) {

mongoConfig.put("collection",table);

ReadConfig readConfig = ReadConfig.create(mongoConfig);

MongoConnector connector = MongoConnector.create(readConfig.asJavaOptions());

MongoCollection<Document> collection = connector.withCollectionDo(readConfig, Document.class, new Function<MongoCollection<Document>, MongoCollection>() {

@Override

public MongoCollection call(MongoCollection<Document> collection) throws Exception {

return collection;

}

});

// Return the first matching document, or null when nothing matches

return collection.find(query).first();

}

}

Some parts have not been refactored or polished yet. The above is only my own understanding, and corrections are welcome.