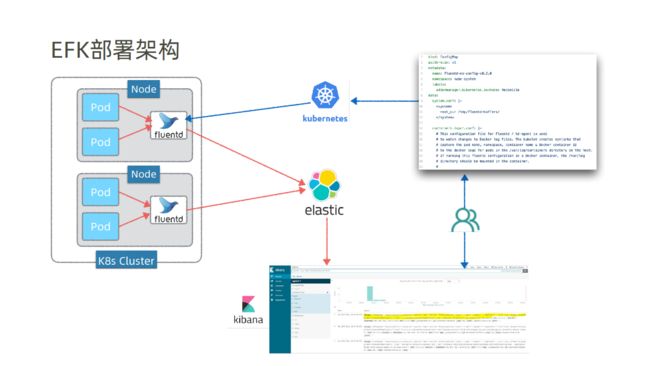

Microservices in Practice: Building an EFK Log Monitoring Platform

- Based on Elasticsearch + Fluentd + Kibana

Deployment process

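Fluentd runs as a DaemonSet, so one Fluentd pod is scheduled on every host node. Fluentd collects the logs on all nodes, mainly those of the container control components, the containers themselves, and the operating system, and periodically ships them to Elasticsearch (ES). (Learn more about DaemonSets.)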

Resource manifests

The Kubernetes resources for the deployment are defined in the YAML files below.

Kibana 7.5.1

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: kibana-kibana
  namespace: default
  labels:
    app: kibana
    app.kubernetes.io/managed-by: Helm
    heritage: Helm
    release: kibana
  annotations:
    deployment.kubernetes.io/revision: '1'
    meta.helm.sh/release-name: kibana
    meta.helm.sh/release-namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
      release: kibana
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: kibana
        release: kibana
    spec:
      containers:
        - name: kibana
          image: 'docker.elastic.co/kibana/kibana:7.5.1'
          ports:
            - name: http-kibana
              containerPort: 5601
              protocol: TCP
          env:
            - name: ELASTICSEARCH_HOSTS
              value: 'http://elasticsearch-master:9200'
            - name: SERVER_HOST
              value: 0.0.0.0
            - name: NODE_OPTIONS
              value: '--max-old-space-size=1800'
          resources: {}
          readinessProbe:
            exec:
              command:
                - sh
                - '-c'
                # Literal block (|) keeps every line break of the script intact
                - |
                  #!/usr/bin/env bash -e

                  # Disable nss cache to avoid filling dentry cache when calling curl
                  # This is required with Kibana Docker using nss < 3.52
                  export NSS_SDB_USE_CACHE=no

                  http () {
                    local path="${1}"
                    set -- -XGET -s --fail -L

                    if [ -n "${ELASTICSEARCH_USERNAME}" ] && [ -n "${ELASTICSEARCH_PASSWORD}" ]; then
                      set -- "$@" -u "${ELASTICSEARCH_USERNAME}:${ELASTICSEARCH_PASSWORD}"
                    fi

                    STATUS=$(curl --output /dev/null --write-out "%{http_code}" -k "$@" "http://localhost:5601${path}")
                    if [[ "${STATUS}" -eq 200 ]]; then
                      exit 0
                    fi

                    echo "Error: Got HTTP code ${STATUS} but expected a 200"
                    exit 1
                  }

                  http "/app/kibana"
            initialDelaySeconds: 10
            timeoutSeconds: 5
            periodSeconds: 10
            successThreshold: 3
            failureThreshold: 3
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
          securityContext:
            capabilities:
              drop:
                - ALL
            runAsUser: 1000
            runAsNonRoot: true
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext:
        fsGroup: 1000
      schedulerName: default-scheduler
  strategy:
    type: Recreate
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600
```
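The listing shows only the Kibana Deployment. If no Service exists for it yet, for example because the manifest is applied with kubectl instead of through the Kibana Helm chart (which creates its own `kibana-kibana` Service, ClusterIP by default), Kibana cannot be reached from a browser outside the cluster. The sketch below shows a NodePort Service that selects the same `app: kibana` / `release: kibana` labels. The NodePort type and the commented-out port number are assumptions; adjust them to your environment.

```yaml
# Sketch (assumption): expose the Kibana pods outside the cluster via NodePort.
apiVersion: v1
kind: Service
metadata:
  name: kibana-kibana
  namespace: default
  labels:
    app: kibana
    release: kibana
spec:
  type: NodePort
  selector:
    # Must match the pod template labels of the Deployment
    app: kibana
    release: kibana
  ports:
    - name: http
      port: 5601
      targetPort: 5601
      protocol: TCP
      # nodePort: 30601   # optional fixed port; must fall in the cluster's NodePort range (30000-32767 by default)
```

After applying it, `kubectl get svc kibana-kibana` shows the assigned node port. Kibana is then served at `http://<node-ip>:<node-port>`.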

Fluentd latest

DockerHub - Fluentd official page

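- FLUENT_ELASTICSEARCH_HOST: the Elasticsearch host address
- FLUENT_ELASTICSEARCH_PORT: the Elasticsearch port
- FLUENT_ELASTICSEARCH_SSL_VERIFY: whether to verify the SSL certificate (optional, can be left unset)
- FLUENT_ELASTICSEARCH_SSL_VERSION: the TLS version (optional, can be left unset)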
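The DaemonSet below talks to Elasticsearch over plain HTTP, so the two optional SSL variables are not set there. If your Elasticsearch endpoint is served over HTTPS instead (an assumption, not the setup in this article), the Fluentd container's `env` list would switch the scheme and add the SSL settings. The values shown are examples. `"true"` and `"TLSv1_2"` match the defaults used by the fluentd-kubernetes-daemonset image's configuration.

```yaml
# Sketch (assumption): env entries for the fluentd container when
# Elasticsearch is reachable over HTTPS rather than plain HTTP.
env:
  - name: FLUENT_ELASTICSEARCH_SCHEME
    value: "https"
  - name: FLUENT_ELASTICSEARCH_SSL_VERIFY
    value: "true"      # "false" skips certificate verification, e.g. for self-signed certs
  - name: FLUENT_ELASTICSEARCH_SSL_VERSION
    value: "TLSv1_2"
```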

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
    version: v1
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-logging
      version: v1
  template:
    metadata:
      labels:
        k8s-app: fluentd-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      tolerations:
        # Also schedule onto master nodes so their logs are collected too
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      containers:
        - name: fluentd
          image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch-master-headless.default" # format: <headless Service name>.<namespace>
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: "http"
            - name: FLUENT_UID
              value: "0"
            - name: FLUENTD_SYSTEMD_CONF
              value: disable
          resources:
            limits:
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
```

fluentd-elasticsearch-rbac

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
rules:
  - apiGroups:
      - ""
    resources:
      - pods
      - namespaces
    verbs:
      - get
      - list
      - watch
```
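The RBAC manifest defines the `fluentd` ServiceAccount and the `fluentd` ClusterRole but nothing that binds them together. Without a binding, Fluentd's requests to the API server for pod and namespace metadata would be denied. The sketch below assumes you have not already created a binding elsewhere. It mirrors the ClusterRoleBinding in the upstream fluentd-kubernetes-daemonset RBAC example.

```yaml
# Sketch (assumption): grant the fluentd ClusterRole to the fluentd
# ServiceAccount in kube-system.
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluentd
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluentd
subjects:
  - kind: ServiceAccount
    name: fluentd
    namespace: kube-system
```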

Elasticsearch 7.5.1

For Elasticsearch I reused the configuration from my SkyWalking deployment as-is. If your needs differ, adjust the YAML accordingly.
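Because the StatefulSet below was exported from a SkyWalking Helm release, these fields are the ones most likely to need adjusting:

- the replica count, which must agree with the pod names in `cluster.initial_master_nodes`
- the JVM heap in `ES_JAVA_OPTS`
- the storage settings

The `release: skywalking` labels and `meta.helm.sh/*` annotations only matter if the object is managed by that Helm release. The fragment below uses example values only. Replacing the deprecated `volume.beta.kubernetes.io/storage-class` annotation with `spec.storageClassName` is a suggested change, not part of the original setup.

```yaml
# Sketch: fields to revisit when reusing the Elasticsearch StatefulSet.
# Values are examples; adapt them to your cluster.
spec:
  replicas: 3   # must match the pod names listed in cluster.initial_master_nodes
  template:
    spec:
      containers:
        - name: elasticsearch
          env:
            - name: cluster.initial_master_nodes
              value: elasticsearch-master-0,elasticsearch-master-1,elasticsearch-master-2
            - name: ES_JAVA_OPTS
              value: '-Xmx1g -Xms1g'   # keep the heap at or below ~50% of the memory limit
  volumeClaimTemplates:
    - metadata:
        name: datadir
      spec:
        storageClassName: managed-nfs-storage   # your cluster's StorageClass
        accessModes: ["ReadWriteMany"]
        resources:
          requests:
            storage: 10Gi
```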

```yaml
kind: StatefulSet
apiVersion: apps/v1
metadata:
  name: elasticsearch-master
  namespace: default
  labels:
    app: elasticsearch-master
    app.kubernetes.io/managed-by: Helm
    chart: elasticsearch
    heritage: Helm
    release: skywalking
  annotations:
    esMajorVersion: '7'
    meta.helm.sh/release-name: skywalking
    meta.helm.sh/release-namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch-master
  template:
    metadata:
      name: elasticsearch-master
      creationTimestamp: null
      labels:
        app: elasticsearch-master
        chart: elasticsearch
        heritage: Helm
        release: skywalking
    spec:
      initContainers:
        # Raise vm.max_map_count on the host, as Elasticsearch requires
        - name: configure-sysctl
          image: 'docker.elastic.co/elasticsearch/elasticsearch:7.5.1'
          command:
            - sysctl
            - '-w'
            - vm.max_map_count=262144
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
          securityContext:
            privileged: true
            runAsUser: 0
      containers:
        - name: elasticsearch
          image: 'docker.elastic.co/elasticsearch/elasticsearch:7.5.1'
          ports:
            - name: http
              containerPort: 9200
              protocol: TCP
            - name: transport
              containerPort: 9300
              protocol: TCP
          volumeMounts:
            - name: datadir
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: node.name
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.name
            - name: cluster.initial_master_nodes
              value: >-
                elasticsearch-master-0,elasticsearch-master-1,elasticsearch-master-2,
            - name: discovery.seed_hosts
              value: elasticsearch-master-headless
            - name: cluster.name
              value: elasticsearch
            - name: network.host
              value: 0.0.0.0
            - name: ES_JAVA_OPTS
              value: '-Xmx1g -Xms1g'
            - name: node.data
              value: 'true'
            - name: node.ingest
              value: 'true'
            - name: node.master
              value: 'true'
          resources:
            limits:
              cpu: '1'
              memory: 2Gi
            requests:
              cpu: 100m
              memory: 2Gi
          readinessProbe:
            exec:
              command:
                - sh
                - '-c'
                # Literal block (|) keeps every line break of the script intact
                - |
                  #!/usr/bin/env bash -e
                  # If the node is starting up wait for the cluster to be ready (request params: 'wait_for_status=green&timeout=1s' )
                  # Once it has started only check that the node itself is responding
                  START_FILE=/tmp/.es_start_file

                  http () {
                    local path="${1}"
                    if [ -n "${ELASTIC_USERNAME}" ] && [ -n "${ELASTIC_PASSWORD}" ]; then
                      BASIC_AUTH="-u ${ELASTIC_USERNAME}:${ELASTIC_PASSWORD}"
                    else
                      BASIC_AUTH=''
                    fi
                    curl -XGET -s -k --fail ${BASIC_AUTH} http://127.0.0.1:9200${path}
                  }

                  if [ -f "${START_FILE}" ]; then
                    echo 'Elasticsearch is already running, lets check the node is healthy and there are master nodes available'
                    http "/_cluster/health?timeout=0s"
                  else
                    echo 'Waiting for elasticsearch cluster to become ready (request params: "wait_for_status=green&timeout=1s" )'
                    if http "/_cluster/health?wait_for_status=green&timeout=1s" ; then
                      touch ${START_FILE}
                      exit 0
                    else
                      echo 'Cluster is not yet ready (request params: "wait_for_status=green&timeout=1s" )'
                      exit 1
                    fi
                  fi
            initialDelaySeconds: 10
            timeoutSeconds: 5
            periodSeconds: 10
            successThreshold: 3
            failureThreshold: 3
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
          securityContext:
            capabilities:
              drop:
                - ALL
            runAsUser: 1000
            runAsNonRoot: true
      restartPolicy: Always
      terminationGracePeriodSeconds: 120
      dnsPolicy: ClusterFirst
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
      affinity:
        # Never place two Elasticsearch pods on the same node
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - elasticsearch-master
              topologyKey: kubernetes.io/hostname
      schedulerName: default-scheduler
  volumeClaimTemplates:
    - metadata:
        name: datadir
        annotations:
          volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
      spec:
        accessModes: ["ReadWriteMany"]
        resources:
          requests:
            storage: 10Gi
  serviceName: elasticsearch-master-headless
  podManagementPolicy: Parallel
  updateStrategy:
    type: RollingUpdate
  revisionHistoryLimit: 10
```
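The manifests refer to two Services that are not part of the listing:

- `elasticsearch-master-headless`, used by the StatefulSet's `serviceName`, by `discovery.seed_hosts`, and by Fluentd's `FLUENT_ELASTICSEARCH_HOST`
- `elasticsearch-master`, used by Kibana's `ELASTICSEARCH_HOSTS`

The Elastic Helm chart creates both. If you apply the StatefulSet with kubectl instead, you need to create them yourself. The sketch below is modeled on the chart's Services, with the labels, selectors, and ports taken from the StatefulSet above.

```yaml
# Sketch (assumption): the two Services the manifests above depend on.
# Headless Service: stable per-pod DNS names for the StatefulSet and node discovery.
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-master-headless
  namespace: default
  labels:
    app: elasticsearch-master
spec:
  clusterIP: None
  # Publish pod addresses before they are Ready, so the nodes can find
  # each other while the cluster is still forming.
  publishNotReadyAddresses: true
  selector:
    app: elasticsearch-master
  ports:
    - name: http
      port: 9200
    - name: transport
      port: 9300
---
# Regular ClusterIP Service used by Kibana (http://elasticsearch-master:9200)
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-master
  namespace: default
  labels:
    app: elasticsearch-master
spec:
  type: ClusterIP
  selector:
    app: elasticsearch-master
  ports:
    - name: http
      port: 9200
      protocol: TCP
    - name: transport
      port: 9300
      protocol: TCP
```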