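Contents

- 1. Deploying ZooKeeper + Kafka + EFK

- 1.1 Environment setup

- 1.2 Deploying ZooKeeper

- 1.3 Deploying the Kafka cluster

- 1.4 Deploying EFK

- 1.4.1 Environment setup

- 1.4.2 Deploying Elasticsearch

- 1.4.3 Deploying the elasticsearch-head plugin

- 1.4.4 Deploying Kibana

- 1.4.5 Deploying Logstash

- 1.4.6 Deploying Filebeat

- 1.5 Verification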

1. Deploying ZooKeeper + Kafka + EFK

1.1 Environment setup

192.168.152.130: zookeeper kafka

192.168.152.129: zookeeper kafka

192.168.152.128: zookeeper kafka

1.2 Deploying ZooKeeper

Note: the following steps are performed on all three servers.

[root@localhost ~]

[root@localhost ~]

[root@localhost ~]

[root@localhost ~]

[root@localhost ~]

[root@localhost opt]

[root@localhost opt]

[root@localhost opt]

[root@localhost opt]

[root@localhost conf]

[root@localhost conf]

tickTime=2000

initLimit=10

syncLimit=5

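dataDir=/usr/local/zookeeper-3.5.7/data ● Modified: the directory where ZooKeeper stores its data; it must be created separately

dataLogDir=/usr/local/zookeeper-3.5.7/logs ● Added: the directory that holds the logs; it must be created separately

clientPort=2181

Append the cluster information at the end: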

server.1=192.168.152.130:3188:3288

server.2=192.168.152.129:3188:3288

server.3=192.168.152.128:3188:3288
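Each `server.N` entry needs a matching `myid` file in `dataDir` on the corresponding host, containing only the number N. The following is a minimal sketch of deriving that number from the configuration, assuming it is stored in the standard `conf/zoo.cfg` file; the helper name `myid_for_ip` is hypothetical and not part of ZooKeeper.

```shell
# Sketch only: myid_for_ip is a hypothetical helper, not part of ZooKeeper.
# It prints N for the "server.N=<ip>:<port>:<port>" entry matching an IP.
myid_for_ip() {
    # $1 = path to zoo.cfg, $2 = IP address of the current host
    awk -F= -v ip="$2" '
        /^server\.[0-9]+=/ {
            split($2, addr, ":")          # addr[1] is the host part
            if (addr[1] == ip) {
                sub(/^server\./, "", $1)  # keep only the number N
                print $1
                exit
            }
        }' "$1"
}

# Example (run once per node before the first start; paths as in this guide):
#   myid_for_ip /usr/local/zookeeper-3.5.7/conf/zoo.cfg 192.168.152.129 \
#       > /usr/local/zookeeper-3.5.7/data/myid
```

Deriving the ID from the same file that defines the ensemble keeps the two from drifting apart when a server entry is changed.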

[root@localhost conf]

[root@localhost conf]

[root@localhost conf]

[root@localhost zookeeper-3.5.7]

[root@localhost zookeeper-3.5.7]

[root@localhost zookeeper-3.5.7]

[root@localhost zookeeper-3.5.7]

#!/bin/bash

ZK_HOME='/usr/local/zookeeper-3.5.7'

case $1 in

start)

echo "---------- starting zookeeper ------------"

$ZK_HOME/bin/zkServer.sh start

;;

stop)

echo "---------- stopping zookeeper ------------"

$ZK_HOME/bin/zkServer.sh stop

;;

restart)

echo "---------- restarting zookeeper ------------"

$ZK_HOME/bin/zkServer.sh restart

;;

status)

echo "---------- zookeeper status ------------"

$ZK_HOME/bin/zkServer.sh status

;;

*)

echo "Usage: $0 {start|stop|restart|status}"

esac

[root@server zookeeper-3.5.7]

[root@server zookeeper-3.5.7]

[root@server zookeeper-3.5.7]

[root@server zookeeper-3.5.7]
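Once the service has been started on all three servers, a healthy ensemble reports one leader and two followers. As a rough sketch, the mode can be extracted from the status output like this; `zk_mode` is a hypothetical helper name, and the path follows the installation directory used in this guide.

```shell
# Sketch only: zk_mode is a hypothetical helper that reads the output of
# "zkServer.sh status" on stdin and prints the reported mode
# (for example "leader" or "follower").
zk_mode() {
    sed -n 's/^Mode:[[:space:]]*//p'
}

# Example:
#   /usr/local/zookeeper-3.5.7/bin/zkServer.sh status 2>&1 | zk_mode
```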

1.3 Deploying the Kafka cluster

Note: Kafka is deployed on the same three servers on which ZooKeeper was installed.

[root@localhost zookeeper-3.5.7]

[root@localhost opt]

[root@localhost opt]

[root@localhost opt]

[root@localhost opt]

[root@localhost config]

[root@localhost config]

Line 21: broker.id=0

Line 31: listeners=PLAINTEXT://192.168.152.130:9092

Line 42: num.network.threads=3

Line 45: num.io.threads=8

Line 48: socket.send.buffer.bytes=102400

Line 51: socket.receive.buffer.bytes=102400

Line 54: socket.request.max.bytes=104857600

Line 60: log.dirs=/usr/local/kafka/logs

Line 65: num.partitions=1

Line 69: num.recovery.threads.per.data.dir=1

Line 103: log.retention.hours=168

Line 110: log.segment.bytes=1073741824

Line 123: zookeeper.connect=192.168.152.130:2181,192.168.152.129:2181,192.168.152.128:2181
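The values shown apply to the first broker. `broker.id` must be unique on every broker, and `listeners` should carry that broker's own IP address. Below is a minimal sketch of producing the per-broker variants from one `server.properties`; the helper name `render_broker_conf` and the IDs 1 and 2 for the other two servers are assumptions.

```shell
# Sketch only: render_broker_conf is a hypothetical helper. It reads a
# server.properties file on stdin and writes a copy on stdout with
# broker.id and listeners set for one specific broker.
render_broker_conf() {
    # $1 = broker id (must be unique per broker), $2 = this broker's IP
    sed -e "s|^broker\.id=.*|broker.id=$1|" \
        -e "s|^#\{0,1\}listeners=.*|listeners=PLAINTEXT://$2:9092|"
}

# Example (IDs 1 and 2 for the other servers are an assumption):
#   render_broker_conf 1 192.168.152.129 < server.properties > server.properties.129
#   render_broker_conf 2 192.168.152.128 < server.properties > server.properties.128
```

The second expression also matches a commented-out `#listeners=` line, but not `advertised.listeners`, because the pattern is anchored at the start of the line.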

[root@localhost config]

export KAFKA_HOME=/usr/local/kafka

export PATH=$PATH:$KAFKA_HOME/bin

[root@localhost config]

[root@localhost config]

#!/bin/bash

KAFKA_HOME='/usr/local/kafka'

case $1 in

start)

echo "---------- starting Kafka ------------"

${KAFKA_HOME}/bin/kafka-server-start.sh -daemon ${KAFKA_HOME}/config/server.properties

;;

stop)

echo "---------- stopping Kafka ------------"

${KAFKA_HOME}/bin/kafka-server-stop.sh

;;

restart)

$0 stop

$0 start

;;

status)

echo "---------- Kafka status ------------"

count=$(ps -ef | grep kafka | egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

echo "kafka is not running"

else

echo "kafka is running"

fi

;;

*)

echo "Usage: $0 {start|stop|restart|status}"

esac

[root@localhost config]

[root@localhost config]

[root@localhost config]

[root@localhost config]

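Note: if the port does not show up, the service did not start successfully. Delete the files in the logs directory under the Kafka directory, then restart.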
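The port check can also be scripted. The sketch below assumes the broker listens on port 9092, as configured in `listeners`, and that `ss` or `netstat` is available; `is_listening` is a hypothetical helper name.

```shell
# Sketch only: is_listening is a hypothetical helper. It reads the output of
# "ss -lnt" or "netstat -lnt" on stdin and succeeds if a socket in LISTEN
# state is bound to the given port. In both tools the local address is the
# fourth column.
is_listening() {
    # $1 = TCP port number
    awk -v port="$1" '
        /LISTEN/ {
            n = split($4, parts, ":")   # the port is the last ":"-separated part
            if (parts[n] == port) found = 1
        }
        END { exit found ? 0 : 1 }'
}

# Example:
#   ss -lnt | is_listening 9092 && echo "Kafka port is open"
```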

1.3.1 Kafka command-line operations

[root@localhost logs]

[root@localhost logs]

[root@localhost logs]

[root@localhost logs]

[root@localhost logs]

[root@localhost logs]

WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected

Adding partitions succeeded!

[root@localhost logs]
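For reference, typical topic operations can be sketched as thin wrappers around `kafka-topics.sh`. This is not necessarily the exact set of commands that was run here. It assumes a Kafka release where `kafka-topics.sh` accepts `--bootstrap-server` (2.2 or later; older releases take `--zookeeper` with the ZooKeeper address instead), and the function names are hypothetical.

```shell
# Sketch only: hypothetical wrappers around kafka-topics.sh. The broker list
# matches this guide; topic names and counts are supplied by the caller.
BOOTSTRAP="192.168.152.130:9092,192.168.152.129:9092,192.168.152.128:9092"
# Set TOPICS_CMD=echo to print the arguments instead of contacting the cluster.
TOPICS_CMD="${TOPICS_CMD:-kafka-topics.sh}"

create_topic() {    # $1 = topic, $2 = partitions, $3 = replication factor
    "$TOPICS_CMD" --bootstrap-server "$BOOTSTRAP" --create \
        --topic "$1" --partitions "$2" --replication-factor "$3"
}

list_topics() {
    "$TOPICS_CMD" --bootstrap-server "$BOOTSTRAP" --list
}

describe_topic() {  # $1 = topic
    "$TOPICS_CMD" --bootstrap-server "$BOOTSTRAP" --describe --topic "$1"
}

add_partitions() {  # $1 = topic, $2 = new total number of partitions
    "$TOPICS_CMD" --bootstrap-server "$BOOTSTRAP" --alter \
        --topic "$1" --partitions "$2"
}

# Example:
#   create_topic test 3 2 && describe_topic test
```

The partition count of a topic can only be increased, never decreased, which is why the alter step takes the new total rather than a delta.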

1.4 Deploying EFK

1.4.1 Environment setup

node1: 192.168.152.11 Elasticsearch kibana

node2: 192.168.152.12 Elasticsearch

apache: 192.168.152.127 Apache logstash

1.4.2 Deploying Elasticsearch

Perform the same steps on node1 and node2.

[root@localhost ~]

[root@node1 ~]

[root@node1 ~]

192.168.152.11 node1

192.168.152.12 node2

[root@node1 ~]

[root@node1 ~]

[root@node1 opt]

[root@node1 opt]

[root@node1 opt]

[root@node1 opt]

[root@node1 opt]

Line 17: cluster.name: my-elk-cluster

Line 23: node.name: node1 (use node2 on the second node)

Line 33: path.data: /data/elk_data

Line 37: path.logs: /var/log/elasticsearch/

Line 43: bootstrap.memory_lock: false

Line 55: network.host: 0.0.0.0

Line 59: http.port: 9200

Line 68: discovery.zen.ping.unicast.hosts: ["node1", "node2"]

[root@node1 opt]

[root@node1 opt]

[root@node1 opt]

[root@node1 opt]

To verify, open http://192.168.152.11:9200 in a browser on the host machine.
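The same check can be done from a shell with the cluster health API, which also shows whether both nodes have joined. A small sketch, assuming `curl` is installed; `health_status` is a hypothetical helper name.

```shell
# Sketch only: health_status is a hypothetical helper. It reads the JSON body
# returned by GET /_cluster/health on stdin and prints the value of its
# "status" field (green, yellow or red).
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Example:
#   curl -s http://192.168.152.11:9200/_cluster/health | health_status
```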

1.4.3 Deploying the elasticsearch-head plugin

Perform the same steps on node1 and node2.

[root@node1 opt]

[root@node1 opt]

[root@node1 node-v8.2.1]

[root@node1 node-v8.2.1]

[root@node1 src]

[root@node2 bin]

[root@node1 bin]

[root@localhost src]

[root@node1 src]

[root@node1 elasticsearch-head]

[root@localhost ~]

[root@localhost ~]

http.cors.enabled: true

http.cors.allow-origin: "*"

[root@localhost ~]

[root@localhost ~]

[root@localhost elasticsearch-head]

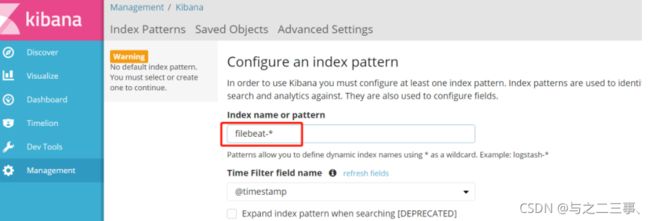

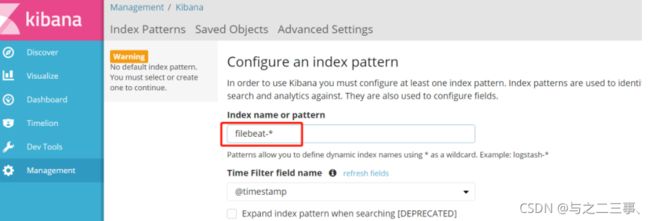

1.4.4 Deploying Kibana

Install Kibana on the node1 host.

[root@node1 elasticsearch-head]

[root@node1 src]

[root@node1 src]

[root@node1 kibana]

[root@node1 kibana]

Line 2: server.port: 5601

Line 7: server.host: "0.0.0.0"

Line 21: elasticsearch.url: "http://192.168.152.11:9200"

Line 30: kibana.index: ".kibana"

[root@node1 kibana]

[root@node1 kibana]

1.4.5 Deploying Logstash

Logstash collects the data delivered through the Kafka queue.

Perform the following on 192.168.152.127.

[root@localhost ~]

[root@localhost ~]

[root@apache ~]

[root@apache ~]

[root@apache opt]

[root@apache opt]

[root@apache opt]

[root@apache opt]

[root@apache opt]

[root@apache conf.d]

input {

kafka {

bootstrap_servers => "192.168.152.130:9092,192.168.152.129:9092,192.168.152.128:9092"

topics => "filebeat_test"

group_id => "test123"

auto_offset_reset => "earliest"

}

}

output {

elasticsearch {

hosts => ["192.168.152.11:9200"]

index => "filebeat-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}
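The `index` setting creates one index per day, named after the event timestamp, which Logstash formats in UTC. As a rough illustration of the resulting names, the sketch below builds the index name for a given day; `index_for_day` is a hypothetical helper name.

```shell
# Sketch only: index_for_day is a hypothetical helper that prints the index
# name which the pattern "filebeat-%{+YYYY.MM.dd}" yields for a given day.
index_for_day() {
    # $1 = day in YYYY-MM-DD form
    printf 'filebeat-%s\n' "$(printf '%s\n' "$1" | sed 's/-/./g')"
}

# Example: count the documents indexed today (UTC):
#   curl -s "http://192.168.152.11:9200/$(index_for_day "$(date -u +%F)")/_count"
```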

[root@apache conf.d]

1.4.6 Deploying Filebeat

Prepare another virtual machine for Filebeat, with the address 192.168.152.13.

[root@localhost opt]

[root@localhost local]

[root@localhost local]

[root@localhost local]

[root@localhost local]

[root@localhost filebeat]

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /var/log/messages

    - /var/log/*.log

......

output.kafka:

  enabled: true

  hosts: ["192.168.152.130:9092","192.168.152.129:9092","192.168.152.128:9092"]

  topic: "filebeat_test"

P.S. Comment out the following part of filebeat.yml; otherwise Filebeat will not start.

[root@localhost filebeat]
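Filebeat accepts only one enabled output. A frequent cause of a failed start, though not necessarily the one meant here, is that the default `output.elasticsearch` section is still active next to `output.kafka`. The sketch below lists the output sections that are not commented out; `enabled_outputs` is a hypothetical helper name, and the path in the example is an assumption based on the prompts above.

```shell
# Sketch only: enabled_outputs is a hypothetical helper. It prints every
# top-level "output.<name>" key in a filebeat.yml that is not commented out.
enabled_outputs() {
    # $1 = path to filebeat.yml
    sed -n 's/^\(output\.[a-z_]*\):.*/\1/p' "$1"
}

# Example (the path is an assumption):
#   enabled_outputs /usr/local/filebeat/filebeat.yml
# If more than one line is printed, comment out all but output.kafka.
```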

1.5 Verification