Python Matrix Class & Extreme Learning Machine

Extreme Learning Machine Lab

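I. Lab Requirements

1. Master several common activation functions used in neural networks.

2. Master common matrix operations and implement them in code.

3. Master the least squares method and implement it in code.

4. Use activation functions, matrix operations and least squares to implement an extreme learning machine that can be trained on a dataset with any number of variables, determines the parameters of the network structure, and uses the resulting network to make predictions.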
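Requirement 1 mentions common activation functions. As a minimal sketch (an illustration, not part of the lab handout), here are three widely used ones in plain Python. The sigmoid is split by the sign of x so that `math.exp` never receives a large positive argument and overflows.

```python
import math

def sigmoid(x):
    # logistic function 1 / (1 + e^-x); split by sign to avoid overflow in math.exp
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def tanh(x):
    # hyperbolic tangent, output in (-1, 1)
    return math.tanh(x)

def relu(x):
    # rectified linear unit: max(0, x)
    return max(0.0, x)
```

For example, `sigmoid(0.0)` is exactly 0.5, and `sigmoid(-1000.0)` returns 0.0 instead of raising `OverflowError`.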

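II. Matrix Class

I wrote my own matrix class following this Python matrix class implementation.

The class in that link stores the matrix as a 2D array, but it cannot assign the whole matrix at once, only one row at a time.

So I use a 1D array instead: at initialization the whole matrix can be assigned directly from a list, tuple, etc.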
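Storing the matrix in one flat list works because element (i, j) of a matrix with `ncols` columns, using 1-based indices as in the class below, sits at position `ncols * (i - 1) + (j - 1)` (row-major order). A small standalone sketch of that mapping; `flat_index` and `row_slice` are hypothetical helper names for illustration:

```python
def flat_index(i, j, ncols):
    # position of element (i, j) (1-based) in a row-major flat list
    return ncols * (i - 1) + (j - 1)

def row_slice(flat, i, ncols):
    # row i (1-based) as a new list
    start = ncols * (i - 1)
    return flat[start:start + ncols]

# the 2 x 3 matrix [[1, 2, 3], [4, 5, 6]] stored as one list
flat = [1, 2, 3, 4, 5, 6]
print(flat[flat_index(2, 3, 3)])  # 6
print(row_slice(flat, 2, 3))      # [4, 5, 6]
```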

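```python
class MyMatrix(object):
    # elements are stored row by row in one flat list; indices are 1-based
    def __init__(self, row, column, value=None):
        self.row = row
        self.column = column
        if value is None:
            # no initial values given: fill with zeros
            self._matrix = [0.0 for i in range(row * column)]
        else:
            assert len(value) == row * column, 'number of values does not match the matrix size'
            self._matrix = list(value)
```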

Addition, multiplication, element access, assignment and printing are implemented with Python's special (dunder) methods.

```python
    # m[i]    -> row i of the matrix as a new list
    # m[i, j] -> element in row i, column j (1-based)
    def __getitem__(self, index):
        if isinstance(index, int):
            assert 1 <= index <= self.row, 'row index {} out of range 1..{}'.format(index, self.row)
            start = self.column * (index - 1)
            return self._matrix[start:start + self.column]
        elif isinstance(index, tuple):
            i, j = index
            assert 1 <= i <= self.row and 1 <= j <= self.column, \
                'index ({}, {}) out of range for a {}x{} matrix'.format(i, j, self.row, self.column)
            return self._matrix[self.column * (i - 1) + j - 1]

    # m[i] = values -> assign the whole row i from a list or tuple
    # m[i, j] = value
    def __setitem__(self, index, value):
        if isinstance(index, int):
            assert 1 <= index <= self.row, 'row index {} out of range 1..{}'.format(index, self.row)
            assert len(value) == self.column, 'row length does not match the column count'
            start = self.column * (index - 1)
            self._matrix[start:start + self.column] = list(value)
        elif isinstance(index, tuple):
            i, j = index
            assert 1 <= i <= self.row and 1 <= j <= self.column, \
                'index ({}, {}) out of range for a {}x{} matrix'.format(i, j, self.row, self.column)
            self._matrix[self.column * (i - 1) + j - 1] = value

    # A * B (matrix product) or A * 2.0 (scalar product)
    def __mul__(self, other):
        if isinstance(other, (int, float)):
            # matrix times a number
            temp = MyMatrix(self.row, self.column)
            for r in range(1, self.row + 1):
                for c in range(1, self.column + 1):
                    temp[r, c] = self[r, c] * other
        else:
            # matrix times matrix: (row x column) * (column x other.column)
            assert self.column == other.row, 'shape mismatch, cannot multiply {}x{} by {}x{}'.format(
                self.row, self.column, other.row, other.column)
            temp = MyMatrix(self.row, other.column)
            for r in range(1, self.row + 1):
                for c in range(1, other.column + 1):
                    total = 0.0
                    for k in range(1, self.column + 1):
                        total += self[r, k] * other[k, c]
                    temp[r, c] = total
        return temp

    # A + B (element-wise, same shape)
    def __add__(self, other):
        assert self.row == other.row and self.column == other.column, \
            'shape mismatch, cannot add {}x{} and {}x{}'.format(self.row, self.column, other.row, other.column)
        temp = MyMatrix(self.row, self.column)
        for r in range(1, self.row + 1):
            for c in range(1, self.column + 1):
                temp[r, c] = self[r, c] + other[r, c]
        return temp

    # print(M): one row per line, elements separated by spaces
    def __str__(self):
        out = ""
        for r in range(1, self.row + 1):
            out += ' '.join(str(self[r, c]) for c in range(1, self.column + 1))
            out += "\n"
        return out
```
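To double-check the triple loop in `__mul__` independently of the class, here is the same definition, C[r][c] = sum over k of A[r][k] * B[k][c], on plain nested lists (0-based here, unlike the class). `matmul` is a hypothetical helper for illustration; the 2 x 2 result can be verified by hand (1*5 + 2*7 = 19, and so on).

```python
def matmul(A, B):
    # A is n x m, B is m x p (nested lists); the result is n x p
    n, m, p = len(A), len(B), len(B[0])
    assert all(len(row) == m for row in A), "inner dimensions must match"
    C = [[0.0] * p for _ in range(n)]
    for r in range(n):
        for c in range(p):
            C[r][c] = sum(A[r][k] * B[k][c] for k in range(m))
    return C

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul(A, B))  # [[19, 22], [43, 50]]
```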

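Matrix transpose and inverse

For the inverse I used the third of the three matrix inversion methods from the article I followed: elementary row operations (Gauss-Jordan elimination).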

```python
    # A^T: transpose of the matrix
    def transpose(self):
        trans = MyMatrix(self.column, self.row)
        for r in range(1, 1 + self.column):
            for c in range(1, 1 + self.row):
                trans[r, c] = self[c, r]
        return trans

    # A^(-1): inverse via elementary row operations (Gauss-Jordan elimination)
    def invert(self):
        assert self.row == self.column, 'not a square matrix'
        n = self.row
        # build the n x 2n augmented matrix [A | I]
        inv2 = MyMatrix(n, 2 * n)
        for r in range(1, 1 + n):
            doub = self[r]  # a copy of row r
            for i in range(1, 1 + n):
                doub.append(1.0 if i == r else 0.0)
            inv2[r] = doub
        # elementary row operations
        for r in range(1, n + 1):
            # if the pivot is 0, swap in a lower row with a nonzero entry in column r
            if inv2[r, r] == 0:
                for rr in range(r + 1, n + 1):
                    if inv2[rr, r] != 0:
                        inv2[r], inv2[rr] = inv2[rr], inv2[r]
                        break
            # still 0 after searching: the matrix is singular
            assert inv2[r, r] != 0, 'matrix is not invertible'
            # scale row r so that inv2[r, r] == 1
            pivot = inv2[r, r]
            for c in range(r, inv2.column + 1):
                inv2[r, c] /= pivot
            # make every other entry in column r equal to 0
            for rr in range(1, n + 1):
                if rr == r:
                    continue
                factor = inv2[rr, r]
                for c in range(r, inv2.column + 1):
                    inv2[rr, c] -= factor * inv2[r, c]
        # the augmented matrix is now [I | A^(-1)]; copy out the right half
        inv = MyMatrix(n, n)
        for i in range(1, 1 + n):
            inv[i] = inv2[i][n:]
        return inv
```

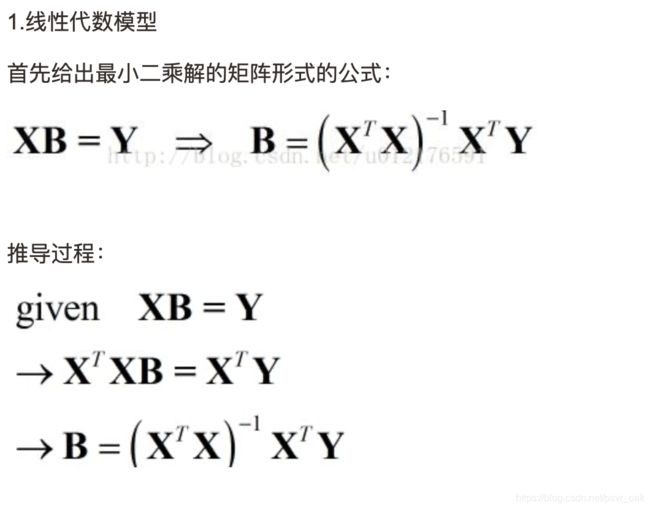
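The class only swaps rows when a diagonal entry is exactly 0, so a tiny but nonzero pivot is accepted and can cost accuracy. A common refinement is partial pivoting: always swap in the row with the largest absolute value in the current column, and treat near-zero pivots as singular. This is a swapped-in technique, not what the code above does; the sketch below works on nested lists, and the function name `invert` and the `eps` tolerance are illustrative choices.

```python
def invert(M, eps=1e-12):
    # Gauss-Jordan elimination with partial pivoting on an n x n nested list
    n = len(M)
    # augmented matrix [M | I]
    aug = [[float(v) for v in M[i]] + [1.0 if i == j else 0.0 for j in range(n)]
           for i in range(n)]
    for col in range(n):
        # pick the row (at or below col) with the largest |entry| in this column
        pivot_row = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot_row][col]) < eps:
            raise ValueError("matrix is singular (or nearly so)")
        aug[col], aug[pivot_row] = aug[pivot_row], aug[col]
        # scale the pivot row so the pivot becomes 1
        pivot = aug[col][col]
        aug[col] = [v / pivot for v in aug[col]]
        # eliminate this column from every other row
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    # the right half now holds the inverse
    return [row[n:] for row in aug]

print(invert([[4, 7], [2, 6]]))  # approximately [[0.6, -0.7], [-0.2, 0.4]]
```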

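III. Least Squares

The article "How to understand least squares" isn't about the matrix form, but I like it a lot, so I'm noting it here.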
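The matrix formula used in the code comes from the normal equations. With H the hidden-layer output matrix, Q the target column and w the unknown output weights (the names used in the code), minimizing the squared error gives the derivation below; the last step assumes H^T H is invertible, i.e. H has full column rank.

$$
\begin{aligned}
&\min_{w}\ \lVert Hw - Q\rVert_2^2 \\
&\nabla_w \lVert Hw - Q\rVert_2^2 = 2H^{\mathsf T}(Hw - Q) = 0 \\
&H^{\mathsf T}H\,w = H^{\mathsf T}Q \\
&w = (H^{\mathsf T}H)^{-1}H^{\mathsf T}Q
\end{aligned}
$$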

The code itself is easy to write:

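```python
from MyMatrix import MyMatrix

def least_square_method(H, Q):
    # H is inputSize x hiddenSize (inputSize = number of samples), Q is inputSize x 1
    # the result w = (H^T H)^(-1) H^T Q is hiddenSize x 1
    Ht = H.transpose()
    # computing H^T Q first keeps the last product small (hiddenSize x 1)
    return (Ht * H).invert() * (Ht * Q)
```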
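A tiny self-contained check of the same formula without the matrix class: fitting a straight line y = a + b*x, where H has a column of ones and a column of x values, so H^T H is only 2 x 2 and can be inverted by hand. The data are made up for illustration and lie exactly on y = 1 + 2x, so the fit should recover a = 1 and b = 2. `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    # H = [[1, x_1], ..., [1, x_n]], Q = [y_1, ..., y_n]^T
    n = len(xs)
    sx, sxx = sum(xs), sum(x * x for x in xs)
    sy, sxy = sum(ys), sum(x * y for x, y in zip(xs, ys))
    # H^T H = [[n, sx], [sx, sxx]],  H^T Q = [sy, sxy]
    det = n * sxx - sx * sx
    assert det != 0, "H^T H is singular (all x values equal?)"
    # (H^T H)^(-1) = 1/det * [[sxx, -sx], [-sx, n]], then multiply by H^T Q
    a = (sxx * sy - sx * sxy) / det
    b = (-sx * sy + n * sxy) / det
    return a, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
print(fit_line(xs, ys))  # (1.0, 2.0)
```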

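IV. Extreme Learning Machine

Honestly, I didn't really understand the various articles. It's the matrix part; I couldn't figure out what it meant.

So my write-up here isn't very clear...

For background I read "Extreme learning machine: from principle to implementation", although I didn't look at the code at the end of it.

For the code I followed "An extreme learning machine for simple classification (Python implementation)".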
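Since the matrix part is the confusing bit, here is a compact summary of the usual ELM formulation, written with the names used in the code below: X is the n x bit input matrix, W is `weight` (bit x hiddenSize), b is `bias`, g is the sigmoid and Q holds the targets. W and b stay random; only the output weights w are solved for, by the least squares formula from the previous section. For prediction, H_new is computed from the new inputs with the same W and b.

$$
\begin{aligned}
H_{ic} &= g\Big(\sum_{k=1}^{\text{bit}} X_{ik} W_{kc} + b_c\Big), \qquad H \in \mathbb{R}^{n \times \text{hiddenSize}} \\
w &= \arg\min_{w} \lVert Hw - Q\rVert_2^2 = (H^{\mathsf T}H)^{-1}H^{\mathsf T}Q \\
\hat{y} &= H_{\text{new}}\, w
\end{aligned}
$$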

```python
from MyMatrix import MyMatrix
from least_square_method import least_square_method
import math
import random

class ELM(object):
    def __init__(self, inputSize, outputSize, bit):
        # inputSize: number of training samples, outputSize: number of outputs (1 here)
        # bit: number of input variables (features)
        self.inputSize = inputSize
        self.outputSize = outputSize
        # my teacher said hiddenSize should be 2~3 times the number of variables (my bit)
        self.hiddenSize = bit * 2
        self.bit = bit
        self.H = None
        self.w = None
        # random input weights, bit x hiddenSize (never trained)
        ran = [random.uniform(-0.2, 0.2) for i in range(self.bit * self.hiddenSize)]
        self.weight = MyMatrix(self.bit, self.hiddenSize, ran)
        # random biases, hiddenSize x 1 (never trained)
        ran = [random.uniform(0, 1) for i in range(self.hiddenSize)]
        self.bias = MyMatrix(self.hiddenSize, 1, ran)

    def sigmoid(self, x):
        return 1 / (1 + math.exp(-x))

    def hidden_output(self, X):
        # X is n x bit and weight is bit x hiddenSize, so H is n x hiddenSize
        H = X * self.weight
        # add the bias of hidden node c to every entry of column c, then apply the activation
        for r in range(1, 1 + H.row):
            for c in range(1, 1 + H.column):
                H[r, c] = self.sigmoid(H[r, c] + self.bias[c, 1])
        return H

    def train(self, X, Q):
        self.H = self.hidden_output(X)
        # least squares for the output weights w, a hiddenSize x 1 matrix
        self.w = least_square_method(self.H, Q)
        # fitted values on the training set, inputSize x 1
        return self.H * self.w

    def predict(self, X):
        # same hidden layer (same weight and bias), then the learned output weights
        self.H = self.hidden_output(X)
        return self.H * self.w
```
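For comparison, the same algorithm is only a few lines with numpy. This is a sketch, not the lab solution: the class name `NumpyELM`, the weight ranges, the toy data and the use of `np.linalg.pinv` (the Moore-Penrose pseudoinverse, which gives the same w as (H^T H)^(-1) H^T Q when H^T H is invertible but also copes with rank-deficient H) are all assumptions.

```python
import numpy as np

class NumpyELM:
    def __init__(self, n_features, n_hidden, seed=None):
        rng = np.random.default_rng(seed)
        # random input weights (n_features x n_hidden) and biases (n_hidden,), never trained
        self.W = rng.uniform(-1.0, 1.0, size=(n_features, n_hidden))
        self.b = rng.uniform(0.0, 1.0, size=n_hidden)
        self.w = None

    def _hidden(self, X):
        # sigmoid(X W + b); b is broadcast across the rows
        return 1.0 / (1.0 + np.exp(-(X @ self.W + self.b)))

    def train(self, X, y):
        H = self._hidden(X)
        # least-squares output weights via the pseudoinverse
        self.w = np.linalg.pinv(H) @ y
        return H @ self.w

    def predict(self, X):
        return self._hidden(X) @ self.w

# toy data: label 1 when x0 + x1 > 0
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)
model = NumpyELM(n_features=2, n_hidden=20, seed=1)
model.train(X, y)
acc = np.mean((model.predict(X) > 0.5) == (y > 0.5))
print(acc)
```

The design mirrors the class above: random W and b, one sigmoid hidden layer, and a single least-squares solve for the output weights.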

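Finally, following that "extreme learning machine for simple classification" article, I tested it with sklearn.

Accuracy: 0.96. Oh my goodness!

I thought the bias was useless, but after removing it the accuracy dropped to 0.8-something. Amazing.

Here is the final test code. Getting my own matrix class and numpy arrays to work together was such a pain (sob).

It's basically reshape after reshape, but luckily the result turned out well.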

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from ELM import ELM
from MyMatrix import MyMatrix

# 2D numpy array -> MyMatrix (flattened row by row)
def reshapeX(row, column, ij):
    li = []
    for i in ij:
        for j in i:
            li.append(float(j))
    return MyMatrix(int(row), int(column), li)

# 1D numpy label array -> MyMatrix column vector (row x 1)
def reshapey(row, ij):
    li = [float(i) for i in ij]
    return MyMatrix(int(row), 1, li)

# make_classification returns a tuple (X, y)
data = datasets.make_classification(1000)
X_train, X_test, y_train, y_test = train_test_split(data[0], data[1], test_size=0.2)
n_features = data[0].shape[1]

# ELM(inputSize, outputSize, bit): bit is the number of features
elm = ELM(X_train.shape[0], 1, n_features)

# use the actual split sizes rather than 1000 * 0.8 (a float)
X_train = reshapeX(X_train.shape[0], n_features, X_train)
X_test = reshapeX(X_test.shape[0], n_features, X_test)
y_train = reshapey(y_train.shape[0], y_train)

elm.train(X_train, y_train)
y_pred = elm.predict(X_test)

# MyMatrix (n x 1) -> flat numpy array, then threshold at 0.5 to get class labels
li = []
for i in range(1, y_pred.row + 1):
    for j in range(1, y_pred.column + 1):
        li.append(y_pred[i, j])
y_pred = (np.array(li) > 0.5).astype(int)

print(accuracy_score(y_test, y_pred))
```
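Most of the reshaping above converts numpy arrays into the flat row-major list that `MyMatrix` stores. numpy can do that directly: `ndarray.ravel()` flattens in row-major (C) order by default, and `.tolist()` turns the result into plain Python floats. A sketch with hypothetical helper names `to_flat` and `from_flat`; with it, something like `MyMatrix(rows, cols, to_flat(X_train))` could replace the `reshapeX` loops (an untested assumption).

```python
import numpy as np

def to_flat(arr):
    # 2D (or 1D) array -> flat row-major list of Python floats
    return np.asarray(arr, dtype=float).ravel().tolist()

def from_flat(flat, rows, cols):
    # flat row-major list -> rows x cols numpy array
    return np.array(flat, dtype=float).reshape(rows, cols)

X = np.array([[1, 2, 3], [4, 5, 6]])
flat = to_flat(X)
print(flat)  # [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(from_flat(flat, 2, 3))
```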

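And so useless me finally finished Lab 2, after at least 13 hours and 25 minutes!

I only started two days before the deadline; now I understand why the teacher gave us an extra week!

I got it working, but I still don't really understand the theory behind extreme learning machines. It clearly needs more time, not a rushed job like this.

Off to do the Linux lab! And there's a compiler principles assignment due tomorrow too... plus the undergraduate innovation project materials are due on the 13th. Sigh...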