Springboot整合ShardingSphere-JDBC-5.1.1版本实现分库分表的标准自定义策略定制

一、背景

业务中每隔5s推送一条数据而且最高并发量可达2w,经过计算数据量轻松过亿。一般数据库单表超过1000W性能就会急剧下降,这时候就需要做相应的分库分表处理

二、技术选型

Shardingjdbc以jar的形式分库分表,只需要引入相关依赖并做相应配置即可完成分库分表性能优于mycat而且ShardingSphere整个生态已入驻Apache,社区也很活跃是目前主流使用的分库分表解决方式;

*使用Shardingjdbc需要注意的地方:

Shardingjdbc对业务代码的入侵性比较高,在做整合的时候有些地方需要按照Shardingjdbc官方规范改写底层的查询方式

shardingjdbc-version-4点几的版有一些SQL是不支持或者存在一些关于sql解析的问题相对较多,所以这里选择最新的5.1.1版本解决了很多不支持项和一些关于sql解析的问题

关于ShardingSphere版本4和版本5的官方说明文档已放到网盘里了有兴趣的可以研究比对一下:

https://pan.baidu.com/s/1nlQoY6Ye5wKdwek94svEKQ

提取码:hibs

三、ShardingSphere-JDBC分库分表

1、建表准备

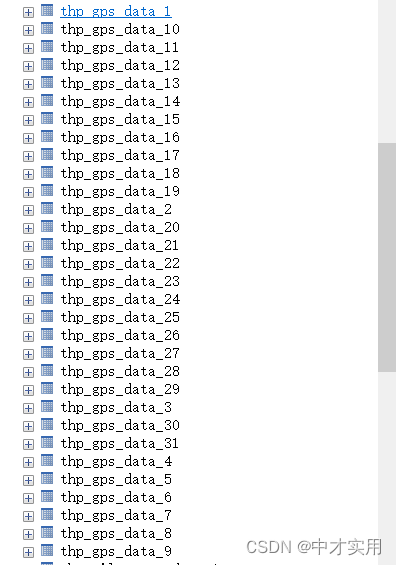

这里打算分三个库,每个库31张表,以thp_gps_data表为例

bhdcm-obd-00--

|--thp_gps_data_1

|--thp_gps_data_2

|--thp_gps_data_...31

bhdcm-obd-01--

|--thp_gps_data_1

|--thp_gps_data_2

|--thp_gps_data_...31

bhdcm-obd-02--

|--thp_gps_data_1

|--thp_gps_data_2

|--thp_gps_data_...31

thp_gps_data_{1…31}依次建表,建表sql:

CREATE TABLE `thp_gps_data_1` (

`id` VARCHAR(64) NOT NULL COMMENT '主键',

`equip_no` VARCHAR(500) DEFAULT NULL COMMENT '设备号',

`instruction_value` VARCHAR(500) DEFAULT NULL COMMENT '指令值',

`timestamp` BIGINT(20) DEFAULT NULL COMMENT '时间戳',

`longitude` VARCHAR(500) DEFAULT NULL COMMENT '经度',

`latitude` VARCHAR(500) DEFAULT NULL COMMENT '纬度',

`altitude` VARCHAR(500) DEFAULT NULL COMMENT '海拔高度',

`speed` VARCHAR(500) DEFAULT NULL COMMENT '车速(km)',

`angle` VARCHAR(500) DEFAULT NULL COMMENT '方向角度',

`signal_power` VARCHAR(500) DEFAULT NULL COMMENT '信号强度(0-31)',

`create_time` DATETIME DEFAULT NULL COMMENT '创建时间',

`update_time` DATETIME DEFAULT NULL COMMENT '修改时间',

PRIMARY KEY (`id`)

) ENGINE=INNODB DEFAULT CHARSET=utf8mb4 COMMENT='obd-gps数据表';

2、引入依赖

<!--注意不要用这个依赖,他会创建数据源,跟上面ShardingJDBC的SpringBoot集成依赖有冲突 -->

<!-- <dependency>-->

<!-- <groupId>com.alibaba</groupId>-->

<!-- <artifactId>druid-spring-boot-starter</artifactId>-->

<!-- <version>1.1.20</version>-->

<!-- </dependency>-->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.2.13-SNSAPSHOT</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.11</version>

</dependency>

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>shardingsphere-jdbc-core-spring-boot-starter</artifactId>

<version>5.1.1</version>

</dependency>

3、配置文件

#**************************************shardingjdbc-version-5.1.1***************************************#

# 数据源参数配置

initialSize=5

minIdle=5

maxIdle=100

maxActive=20

maxWait=60000

timeBetweenEvictionRunsMillis=60000

minEvictableIdleTimeMillis=300000

# Sharding Jdbc配置

# 分库的数量

dataBaseSize=3

# 分表的数量

tableSize=31

# ds0,ds1,ds2

spring.shardingsphere.datasource.names=ds0,ds1,ds2

# 配置数据源ds0 shardingjdbc逻辑源ds0映射实际源bhdcm-obd-00

spring.shardingsphere.datasource.ds0.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.ds0.driver-class-name=com.mysql.cj.jdbc.Driver

spring.shardingsphere.datasource.ds0.url=jdbc:mysql://10.254.250.178:3306/bhdcm-obd-00?useUnicode=true&characterEncoding=utf8&tinyInt1isBit=false&useSSL=false&allowMultiQueries=true&serverTimezone=Asia/Shanghai

spring.shardingsphere.datasource.ds0.username=root

spring.shardingsphere.datasource.ds0.password=root

spring.shardingsphere.datasource.ds0.initialSize=${initialSize}

spring.shardingsphere.datasource.ds0.minIdle=${minIdle}

spring.shardingsphere.datasource.ds0.maxActive=${maxActive}

spring.shardingsphere.datasource.ds0.maxWait=${maxWait}

spring.shardingsphere.datasource.ds0.validationQuery=SELECT 1 FROM DUAL

spring.shardingsphere.datasource.ds0.timeBetweenEvictionRunsMillis=${timeBetweenEvictionRunsMillis}

spring.shardingsphere.datasource.ds0.minEvictableIdleTimeMillis=${minEvictableIdleTimeMillis}

# 配置数据源ds1 shardingjdbc逻辑源ds1映射实际源bhdcm-obd-01

spring.shardingsphere.datasource.ds1.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.ds1.driver-class-name=com.mysql.cj.jdbc.Driver

spring.shardingsphere.datasource.ds1.url=jdbc:mysql://10.254.250.178:3306/bhdcm-obd-01?useUnicode=true&characterEncoding=utf8&tinyInt1isBit=false&useSSL=false&allowMultiQueries=true&serverTimezone=Asia/Shanghai

spring.shardingsphere.datasource.ds1.username=root

spring.shardingsphere.datasource.ds1.password=root

spring.shardingsphere.datasource.ds1.initialSize=${initialSize}

spring.shardingsphere.datasource.ds1.minIdle=${minIdle}

spring.shardingsphere.datasource.ds1.maxActive=${maxActive}

spring.shardingsphere.datasource.ds1.maxWait=${maxWait}

spring.shardingsphere.datasource.ds1.validationQuery=SELECT 1 FROM DUAL

spring.shardingsphere.datasource.ds1.timeBetweenEvictionRunsMillis=${timeBetweenEvictionRunsMillis}

spring.shardingsphere.datasource.ds1.minEvictableIdleTimeMillis=${minEvictableIdleTimeMillis}

# 配置数据源ds2 shardingjdbc逻辑源ds2映射实际源bhdcm-obd-02

spring.shardingsphere.datasource.ds2.type=com.alibaba.druid.pool.DruidDataSource

spring.shardingsphere.datasource.ds2.driver-class-name=com.mysql.cj.jdbc.Driver

spring.shardingsphere.datasource.ds2.url=jdbc:mysql://10.254.250.178:3306/bhdcm-obd-02?useUnicode=true&characterEncoding=utf8&tinyInt1isBit=false&useSSL=false&allowMultiQueries=true&serverTimezone=Asia/Shanghai

spring.shardingsphere.datasource.ds2.username=root

spring.shardingsphere.datasource.ds2.password=root

spring.shardingsphere.datasource.ds2.initialSize=${initialSize}

spring.shardingsphere.datasource.ds2.minIdle=${minIdle}

spring.shardingsphere.datasource.ds2.maxActive=${maxActive}

spring.shardingsphere.datasource.ds2.maxWait=${maxWait}

spring.shardingsphere.datasource.ds2.validationQuery=SELECT 1 FROM DUAL

spring.shardingsphere.datasource.ds2.timeBetweenEvictionRunsMillis=${timeBetweenEvictionRunsMillis}

spring.shardingsphere.datasource.ds2.minEvictableIdleTimeMillis=${minEvictableIdleTimeMillis}

# 分库配置 分片键按时间戳timestamp进行分片;具体的分片策略在指定的standardDatabaseShardingAlgorithm类中实现

spring.shardingsphere.rules.sharding.default-database-strategy.standard.sharding-column=timestamp

spring.shardingsphere.rules.sharding.default-database-strategy.standard.sharding-algorithm-name=standardDatabaseShardingAlgorithm

# 分表配置 shardingjdbc逻辑表thp_gps_data映射实际表thp_gps_data_$->{1..31};分片键按时间戳timestamp进行分片;具体的分片策略在指定的gpsStandardTableShardingAlgorithm类中实现

spring.shardingsphere.rules.sharding.tables.thp_gps_data.actual-data-nodes=ds$->{0..2}.thp_gps_data_$->{1..31}

spring.shardingsphere.rules.sharding.tables.thp_gps_data.table-strategy.standard.sharding-column=timestamp

spring.shardingsphere.rules.sharding.tables.thp_gps_data.table-strategy.standard.sharding-algorithm-name=gpsStandardTableShardingAlgorithm

# 打印分库分表日志

spring.shardingsphere.props.sql-show=true

4、自定义分库策略实现

package com.zdft.bhdcm.config.shardingsphere;

import groovy.util.logging.Slf4j;

import org.apache.commons.lang3.StringUtils;

import org.springframework.beans.factory.annotation.Value;

import org.apache.shardingsphere.sharding.api.sharding.standard.PreciseShardingValue;

import org.apache.shardingsphere.sharding.api.sharding.standard.RangeShardingValue;

import org.apache.shardingsphere.sharding.api.sharding.standard.StandardShardingAlgorithm;

import org.springframework.stereotype.Component;

import java.util.ArrayList;

import java.util.Collection;

/**

*

* 分库策略

* standard标准分片策略

*

*

* @author lhy

* @since 2023/2/2

*/

@Slf4j

@Component(value = "standardDatabaseShardingAlgorithm")

public class StandardDatabaseShardingAlgorithm implements StandardShardingAlgorithm<Long> {

//分库数量

private static int dataBaseSize;

@Value("${dataBaseSize}")

public void setDataBaseSize(int size) {

dataBaseSize = size;

}

/**

* 精确分片

* @param availableTargetNames 有效的数据源或表的名字。这里就对应配置文件中配置的数据源信息

* @param shardingValue 包含 逻辑表名、分片列和分片列的值。

* @return 返回目标结果

*/

@Override

public String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Long> shardingValue) {

//分库的规则:时间戳取中间六位数对数据库数量取模 例:1688811155000 --> 811155 % 3

String timestamp = StringUtils.substring(String.valueOf(shardingValue.getValue()), -9, -3);

Integer mod = Integer.parseInt(timestamp) % dataBaseSize;

for (String targetName : availableTargetNames) {

if (targetName.endsWith(String.valueOf(mod))) {

return targetName;

}

}

throw new UnsupportedOperationException(" route ds"+mod+" is not supported. please check your config");

}

/**

* 范围分片

* @param availableTargetNames

* @param rangeShardingValue包含逻辑表名、分片列和分片列的条件范围。

* @return 返回目标结果。可以是多个。

*/

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames, RangeShardingValue<Long> rangeShardingValue) {

//时间戳范围查询,设定所有库都查

Collection<String> collect = new ArrayList<>();

collect.add("ds0");

collect.add("ds1");

collect.add("ds2");

return collect;

}

@Override

public void init() {

}

@Override

public String getType() {

return null;

}

}

5、自定义分表策略实现

package com.zdft.bhdcm.config.shardingsphere;

import com.google.common.collect.Range;

import com.zdft.bhdcm.utils.CommonUtils;

import groovy.util.logging.Slf4j;

import org.springframework.beans.factory.annotation.Value;

import org.apache.shardingsphere.sharding.api.sharding.standard.PreciseShardingValue;

import org.apache.shardingsphere.sharding.api.sharding.standard.RangeShardingValue;

import org.apache.shardingsphere.sharding.api.sharding.standard.StandardShardingAlgorithm;

import org.springframework.stereotype.Component;

import java.util.ArrayList;

import java.util.Collection;

import java.util.Date;

/**

*

* 分表策略

* standard标准分片策略

*

*

* @author lhy

* @since 2023/2/2

*/

@Slf4j

@Component(value = "gpsStandardTableShardingAlgorithm")

public class GpsStandardTableShardingAlgorithm implements StandardShardingAlgorithm<Long> {

//分表数量

private static int tableSize;

@Value("${tableSize}")

public void setTableSize(int size) {

tableSize = size;

}

/**

* 精确分片

* @param availableTargetNames 有效的数据源或表的名字。这里就对应配置文件中配置的数据源信息

* @param shardingValue 包含 逻辑表名、分片列和分片列的值。

* @return 返回目标结果

*/

@Override

public String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Long> shardingValue) {

//分表的规则:从时间戳中获取天,哪一天落到哪个表 例:1688811155000 --> day(8)对应落到的库为thp_gps_data_8

//实现按照 = 或 IN 进行精确分片。

//例如 select * from t_order where order_id = 1 or order_id in (1,3,5)

Long timestamp = Long.valueOf(Long.parseLong(String.valueOf(shardingValue.getValue())));

Integer day = CommonUtils.getDay(new Date(timestamp));

String key = shardingValue.getLogicTableName()+"_"+day;

if(availableTargetNames.contains(key)){

return key;

}

throw new UnsupportedOperationException(" route "+key+" is not supported. please check your config");

}

/**

* 范围分片

* @param availableTargetNames

* @param rangeShardingValue 包含逻辑表名、分片列和分片列的条件范围。

* @return 返回目标结果。可以是多个。

*/

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames, RangeShardingValue<Long> rangeShardingValue) {

Collection<String> collect = new ArrayList<>();

//实现按照 Between 进行范围分片。

Range<Long> valueRange = rangeShardingValue.getValueRange();//获取查询条件中范围值

String slowerEndpoint = String.valueOf(valueRange.hasLowerBound()?valueRange.lowerEndpoint():"");//查询条件下限

String supperEndpoint = String.valueOf(valueRange.hasUpperBound()?valueRange.upperEndpoint():"");//查询条件上限

if(!slowerEndpoint.isEmpty()&&!supperEndpoint.isEmpty()) {

Long lowerEndpoint = Math.abs(Long.parseLong(slowerEndpoint));

Long upperEndpoint = Math.abs(Long.parseLong(supperEndpoint));

Integer lowerMonth = CommonUtils.getMonth(new Date(lowerEndpoint));

Integer upperMonth = CommonUtils.getMonth(new Date(upperEndpoint));

if(lowerMonth.intValue()!=upperMonth.intValue()){

throw new BusinessException(ResultCodeEnum.SYS_ERROR.getCode(), ResultCodeEnum.SYS_ERROR.getDesc()+"时间范围不允许跨月,请核实!");

}

Integer lowerDay = CommonUtils.getDay(new Date(lowerEndpoint));

Integer upperDay = CommonUtils.getDay(new Date(upperEndpoint));

for(;lowerDay <= upperDay; lowerDay ++){

collect.add(rangeShardingValue.getLogicTableName()+"_"+lowerDay);

}

} else if(!slowerEndpoint.isEmpty()&&supperEndpoint.isEmpty()) {

Long lowerEndpoint = Math.abs(Long.parseLong(slowerEndpoint));

Integer lowerDay = CommonUtils.getDay(new Date(lowerEndpoint));

for (int day = lowerDay; day <= tableSize; day++) {

collect.add(rangeShardingValue.getLogicTableName()+"_"+day);

}

}else if(slowerEndpoint.isEmpty()&&!supperEndpoint.isEmpty()) {

Long upperEndpoint = Math.abs(Long.parseLong(supperEndpoint));

Integer upperDay = CommonUtils.getDay(new Date(upperEndpoint));

for (int day = 1; day <= upperDay; day++) {

collect.add(rangeShardingValue.getLogicTableName()+"_"+day);

}

}

return collect;

}

@Override

public void init() {

}

@Override

public String getType() {

return null;

}

}

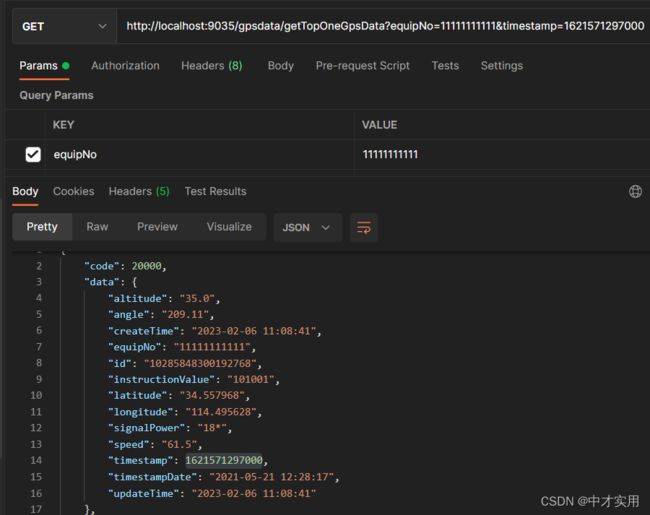

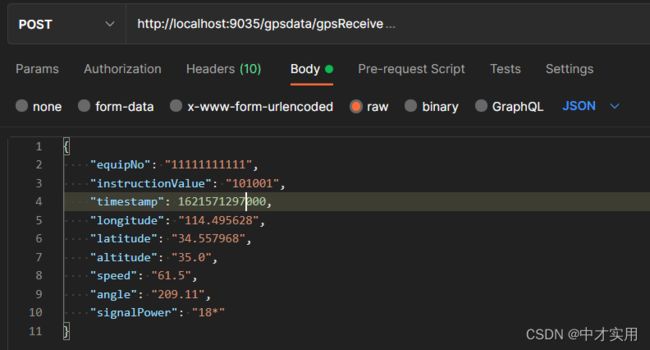

6、分库分表测试

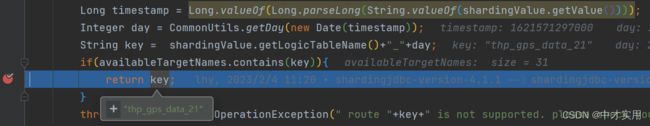

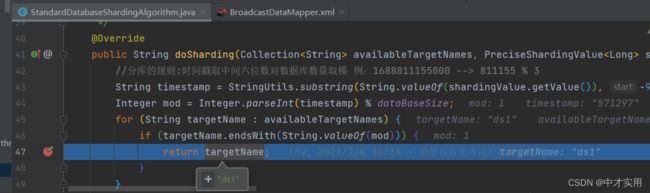

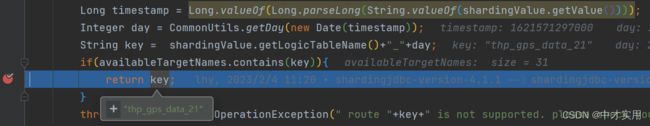

插入一条时间戳为1621571297000的数据

指定落到哪个库:

指定落到哪张表:

通过断点可以看到该条数据落到了ds1库中的thp_gps_data_21表中

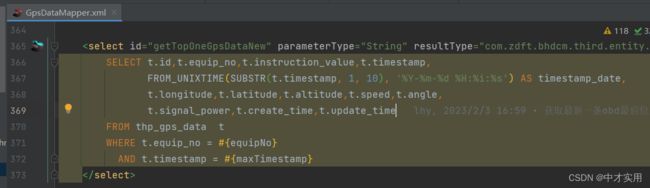

指定查询哪个库:

指定查询哪张表:

ShardingSphere-JDBC打印的日志:

Logic SQL: SELECT t.id, t.equip_no, t.instruction_value, t.timestamp, FROM_UNIXTIME(SUBSTR(t.timestamp, 1, 10), '%Y-%m-%d %H:%i:%s') AS timestamp_date, t.longitude, t.latitude, t.altitude, t.speed, t.angle, t.signal_power, t.create_time, t.update_time FROM thp_gps_data t WHERE t.equip_no = ? AND t.timestamp = ?

SQLStatement: MySQLSelectStatement(table=Optional.empty, limit=Optional.empty, lock=Optional.empty, window=Optional.empty)

Actual SQL: ds1 ::: SELECT t.id, t.equip_no, t.instruction_value, t.timestamp, FROM_UNIXTIME(SUBSTR(t.timestamp, 1, 10), '%Y-%m-%d %H:%i:%s') AS timestamp_date, t.longitude, t.latitude, t.altitude, t.speed, t.angle, t.signal_power, t.create_time, t.update_time FROM thp_gps_data_21 t WHERE t.equip_no = ? AND t.timestamp = ? ::: [11111111111, 1621571297000]

Logic SQL:逻辑sql,这里的逻辑sql其实就是写在mapper.xml中的业务sql

Actual SQL:实际sql,ShardingSphere-JDBC实际执行的分库分表的sql

通过日志可以看到ShardingSphere-JDBC通过分片键timestamp直接帮我们查询了ds1库中的thp_gps_data_21表。