Getting Started with PyTorch

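Contents

- pytorch
  - Dataset
- tensorboard
  - SummaryWriter
- transforms
  - Usage
  - Common transforms
- torchvision
  - datasets
  - DataLoader
- nn.Module
  - Convolutional layers
  - Pooling layers
  - Non-linear activations
  - Linear layers (fully connected layers)
  - Sequential
  - Loss functions
  - Optimizers
  - Modifying official models
  - Saving and loading models
- Model training workflow
  - CPU
  - GPU
- Model validation workflow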
pytorch


Dataset

from torch.utils.data import Dataset
from PIL import Image
import os


class MyData(Dataset):
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        # The label folder holds all images of one class; its name doubles as the label.
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path = os.listdir(self.path)

    def __getitem__(self, index):
        img_name = self.img_path[index]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.img_path)


ants_dataset = MyData(r"F:\ZNV\笔记图片\pytorch\练手数据集1\train", "ants_image")
img, label = ants_dataset[0]
img.show()

tensorboard

SummaryWriter

SummaryWriter makes it easy to visualize curves and images in TensorBoard.

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter("logs")

for i in range(100):
    # writer.add_image()
    writer.add_scalar("y=x", i, i)

writer.close()

Displaying an image

import numpy as np
from torch.utils.tensorboard import SummaryWriter
from PIL import Image

writer = SummaryWriter("logs")
image_path = r"F:\ZNV\笔记图片\pytorch\练手数据集1\train\ants_image\0013035.jpg"
img = Image.open(image_path)
img_array = np.array(img)

# dataformats="HWC" tells add_image that the array is laid out as (height, width, channels);
# see the add_image documentation for the accepted layouts.
writer.add_image("test", img_array, 1, dataformats="HWC")

for i in range(100):
    writer.add_scalar("y=x", i, i)

writer.close()

transforms

Usage

transforms is a toolbox: it applies a series of operations to an image and outputs the result in the form you want.

- Data source: an image, for example
- Create a concrete tool from transforms, e.g. transforms.ToTensor(), which converts a PIL Image or numpy.ndarray to a tensor
- Output the result in the desired format

from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms
from PIL import Image

img_path = r"F:\ZNV\笔记图片\pytorch\练手数据集1\train\ants_image\0013035.jpg"
img = Image.open(img_path)

writer = SummaryWriter("logs")

# ToTensor converts a PIL Image or numpy.ndarray into a tensor
tensor_trans = transforms.ToTensor()
tensor_img = tensor_trans(img)

writer.add_image("Tensor_img", tensor_img)
writer.close()

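Common transforms

- ToTensor: type conversion, PIL Image or numpy.ndarray to tensor

- Compose: chains several transforms together

- Normalize: normalization, output[channel] = (input[channel] - mean[channel]) / std[channel]

- Resize: changes the image size

- RandomCrop: crops at a random position; the crop size can be specified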
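To make the Normalize formula concrete, here is a small worked sketch in plain Python (no PyTorch required). The helper name `normalize_channel` is made up for illustration and only mirrors the formula above. With a mean of 0.5 and a std of 0.5, values in [0, 1], which is the range ToTensor produces for ordinary 8-bit images, end up in [-1, 1].

```python
def normalize_channel(values, mean, std):
    """Apply output = (input - mean) / std to every value of one channel."""
    return [(v - mean) / std for v in values]


# Example pixel intensities in the [0, 1] range.
pixels = [0.0, 0.25, 0.5, 1.0]
normalized = normalize_channel(pixels, mean=0.5, std=0.5)
print(normalized)  # [-1.0, -0.5, 0.0, 1.0]
```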

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

writer = SummaryWriter("logs")
img = Image.open("../images/swiss-army-ant.jpg")

# Convert the image to a tensor
trans_totensor = transforms.ToTensor()
img_tensor = trans_totensor(img)
writer.add_image("Tensor_img", img_tensor, 1)

# Normalize: output[channel] = (input[channel] - mean[channel]) / std[channel]
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])  # per-channel mean, per-channel std
img_norm = trans_norm(img_tensor)
writer.add_image("Normalize", img_norm, 2)

# Resize changes the image size (it resamples pixels, so it is not a plain reshape)
trans_resize = transforms.Resize((512, 512))
img_resize = trans_resize(img)
img_resize = trans_totensor(img_resize)
writer.add_image("Resize", img_resize, 3)

# Compose chains transforms. Resize(512) with a single int scales the shorter
# side to 512 and keeps the aspect ratio.
trans_resize_2 = transforms.Resize(512)
trans_compose = transforms.Compose([trans_resize_2, trans_totensor])
img_resize_2 = trans_compose(img)
writer.add_image("Compose", img_resize_2, 4)

# RandomCrop: random cropping (the image must be at least as large as the crop)
trans_random = transforms.RandomCrop((500, 1000))
trans_compose_2 = transforms.Compose([trans_random, trans_totensor])
for i in range(10):
    img_crop = trans_compose_2(img)
    writer.add_image("RandomCrop", img_crop, i)

writer.close()

torchvision

datasets

torchvision ships ready-to-use datasets; the full list is at https://pytorch.org/vision/stable/datasets.html

import torchvision.datasets
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

dataset_transform = transforms.Compose([
    transforms.ToTensor()
])

train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transform, download=True)

# print(test_set[0])
# img, target = test_set[0]
# print(img)
# # class label of the image
# print(target)
# print(test_set.classes[target])
# img.show()

# Show the first ten test images in TensorBoard
writer = SummaryWriter("logs")
for i in range(10):
    img, target = test_set[i]
    writer.add_image("test_set", img, i)

writer.close()

DataLoader

DataLoader wraps a dataset in an iterable that yields it batch by batch: https://pytorch.org/docs/stable/data.html#torch.utils.data.DataLoader

import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the test dataset
test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

# First image of the test dataset and its target
img, target = test_data[0]
print(img.shape)
print(target)

writer = SummaryWriter("./dataloader")
step = 0
for data in test_loader:
    imgs, targets = data
    # print(imgs.shape)
    # print(targets)
    writer.add_images("test_data", imgs, step)
    step = step + 1

writer.close()
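As a rough illustration of what `batch_size` and `drop_last` mean for the loop above, the following plain-Python sketch counts the batches for one pass over the 10000-image CIFAR10 test split. The helper `num_batches` is hypothetical and is not part of PyTorch; it only reproduces the arithmetic.

```python
import math


def num_batches(dataset_size, batch_size, drop_last):
    """Number of batches yielded for one pass over the dataset."""
    if drop_last:
        # An incomplete final batch is discarded.
        return dataset_size // batch_size
    # An incomplete final batch is kept.
    return math.ceil(dataset_size / batch_size)


test_size = 10000  # number of images in the CIFAR10 test split
print(num_batches(test_size, 64, drop_last=False))  # 157
print(num_batches(test_size, 64, drop_last=True))   # 156
print(test_size % 64)                               # 16 images in the final, smaller batch
```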

nn.Module

https://pytorch.org/docs/stable/generated/torch.nn.Module.html#torch.nn.Module

import torch.nn as nn
import torch.nn.functional as F


class Model(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 20, 5)
        self.conv2 = nn.Conv2d(20, 20, 5)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        return F.relu(self.conv2(x))

torch.nn.functional provides the underlying functions (the "wheels"); torch.nn wraps them into ready-made modules.

Convolutional layers

A test of the convolutional layer; see https://pytorch.org/docs/stable/generated/torch.nn.Conv2d.html#torch.nn.Conv2d

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)


class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        # Color images have 3 input channels; 6 output channels, 3x3 kernel, stride 1, padding 0
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x


xyp = XYP()
print(xyp)

step = 0
writer = SummaryWriter("./logs")
for data in dataloader:
    imgs, targets = data
    output = xyp(imgs)
    # print(output.shape)
    writer.add_images("input", imgs, step)
    # add_images cannot display 6 channels, so reshape (N, 6, 30, 30) into (2N, 3, 30, 30)
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step = step + 1

writer.close()

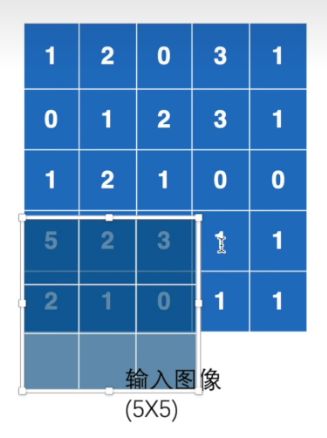
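The 30 x 30 size used in the reshape above follows from the output-shape formula given in the Conv2d documentation. The sketch below evaluates that formula in plain Python; the function name `conv2d_out_size` is an illustrative helper, not a PyTorch API.

```python
import math


def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of a 2D convolution along one dimension."""
    return math.floor((size + 2 * padding - dilation * (kernel_size - 1) - 1) / stride + 1)


# CIFAR10 images are 32 x 32; the layer above uses a 3x3 kernel, stride 1, padding 0.
h_out = conv2d_out_size(32, kernel_size=3, stride=1, padding=0)
print(h_out)  # 30

# Reshaping (64, 6, 30, 30) to (-1, 3, 30, 30) keeps the element count,
# so the inferred batch dimension doubles.
total = 64 * 6 * h_out * h_out
merged_batch = total // (3 * h_out * h_out)
print(merged_batch)  # 128
```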

Pooling layers

When ceil_mode is False, windows that do not fit completely inside the input are discarded. For the 5x5 input below with a 3x3 kernel, the output is therefore the single value 2, the maximum of the first 3x3 window. The stride defaults to the kernel size.

import torch
from torch import nn
from torch.nn import MaxPool2d

input_data = torch.tensor([[1, 2, 0, 3, 1],
                           [0, 1, 2, 3, 1],
                           [1, 2, 1, 0, 0],
                           [5, 2, 3, 1, 1],
                           [2, 1, 0, 1, 1]],
                          dtype=torch.float32)

# -1 lets PyTorch infer the batch size
input_data = torch.reshape(input_data, (-1, 1, 5, 5))
print(input_data.shape)


class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        # With ceil_mode=False, incomplete windows are dropped
        self.maxpool = MaxPool2d(kernel_size=3,
                                 ceil_mode=False)

    def forward(self, input_data1):
        output_data = self.maxpool(input_data1)
        return output_data


xyp = XYP()
output = xyp(input_data)
print(output)
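To see the effect of ceil_mode without running PyTorch, here is a minimal pure-Python sketch of max pooling on the same 5x5 matrix. It is an illustration under simplifying assumptions (no padding, no dilation, stride equal to the kernel size); the helpers `pooled_size` and `max_pool2d` are hypothetical and are not the library implementation.

```python
import math


def pooled_size(size, kernel_size, stride, ceil_mode):
    """Number of pooling windows along one dimension (no padding, no dilation)."""
    rounding = math.ceil if ceil_mode else math.floor
    out = rounding((size - kernel_size) / stride) + 1
    # In ceil mode the last window must still start inside the input.
    if ceil_mode and (out - 1) * stride >= size:
        out -= 1
    return out


def max_pool2d(matrix, kernel_size, ceil_mode=False):
    stride = kernel_size  # the default stride equals the kernel size
    rows, cols = len(matrix), len(matrix[0])
    out_rows = pooled_size(rows, kernel_size, stride, ceil_mode)
    out_cols = pooled_size(cols, kernel_size, stride, ceil_mode)
    result = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            # Partial windows are clipped at the border of the input.
            window = [matrix[r][c]
                      for r in range(i * stride, min(i * stride + kernel_size, rows))
                      for c in range(j * stride, min(j * stride + kernel_size, cols))]
            row.append(max(window))
        result.append(row)
    return result


data = [[1, 2, 0, 3, 1],
        [0, 1, 2, 3, 1],
        [1, 2, 1, 0, 0],
        [5, 2, 3, 1, 1],
        [2, 1, 0, 1, 1]]

print(max_pool2d(data, 3, ceil_mode=False))  # [[2]]
print(max_pool2d(data, 3, ceil_mode=True))   # [[2, 3], [5, 1]]
```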

Non-linear activations

from torch import nn
from torch.nn import ReLU, Sigmoid
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_data, batch_size=64)


class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)
        return output


xyp = XYP()

writer = SummaryWriter("./dataloader")
step = 0
for data in test_loader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = xyp(imgs)
    writer.add_images("output", output, step)
    step = step + 1

writer.close()

Linear layers (fully connected layers)

import torch
from torch import nn
import torchvision
from torch.nn import Linear
from torch.utils.data import DataLoader

test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
# drop_last=True discards the incomplete final batch, so every batch flattens to the same length
test_loader = DataLoader(dataset=test_data, batch_size=64, drop_last=True)


class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        # 196608 = 64 * 3 * 32 * 32, the length of one whole flattened batch
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output


xyp = XYP()

for data in test_loader:
    imgs, targets = data
    print(imgs.shape)
    # Flatten the whole batch (including the batch dimension) into one vector
    output = torch.flatten(imgs)
    output = xyp(output)
    print(output)

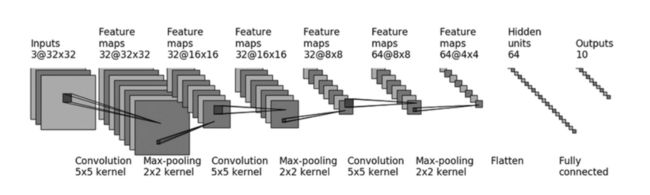
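The in_features value 196608 in the example above is simply the number of elements in one full batch. The following sketch spells out the arithmetic in plain Python, along with the per-image length you would get if each image were flattened separately, and the length a final incomplete batch would produce (which is why the loader uses drop_last=True).

```python
batch_size, channels, height, width = 64, 3, 32, 32

# torch.flatten(imgs) merges every dimension, including the batch dimension.
flat_whole_batch = batch_size * channels * height * width
print(flat_whole_batch)  # 196608

# Flattening each image on its own would give one row of this length per image.
flat_per_image = channels * height * width
print(flat_per_image)  # 3072

# Without drop_last, the final CIFAR10 test batch would hold only 10000 % 64 images
# and would no longer match a layer that expects 196608 inputs.
last_batch_images = 10000 % batch_size
flat_last_batch = last_batch_images * flat_per_image
print(flat_last_batch)  # 49152
```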

Sequential

import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_data, batch_size=64, drop_last=True)


class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool1 = MaxPool2d(kernel_size=2)
        self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool2 = MaxPool2d(kernel_size=2)
        self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)
        self.maxpool3 = MaxPool2d(kernel_size=2)
        self.flatten1 = Flatten()
        self.linear1 = Linear(in_features=1024, out_features=64)
        self.linear2 = Linear(in_features=64, out_features=10)

        self.mode1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        # x = self.conv1(x)
        # x = self.maxpool1(x)
        # x = self.conv2(x)
        # x = self.maxpool2(x)
        # x = self.conv3(x)
        # x = self.maxpool3(x)
        # x = self.flatten1(x)
        # x = self.linear1(x)
        # x = self.linear2(x)
        x = self.mode1(x)
        return x


xyp = XYP()
input = torch.ones((64, 3, 32, 32))
output = xyp(input)
print(output.shape)

writer = SummaryWriter("./dataloader")
writer.add_graph(xyp, input)
writer.close()
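The in_features=1024 of the first Linear layer can be checked by tracing the feature-map size through the three Conv2d/MaxPool2d pairs. The sketch below does this in plain Python; `conv_out` and `pool_out` are illustrative helpers that assume no dilation and, for pooling, a stride equal to the kernel size with ceil_mode off.

```python
def conv_out(size, kernel_size, stride=1, padding=0):
    """Output size of a convolution along one dimension (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def pool_out(size, kernel_size):
    """Output size of max pooling with stride == kernel_size and ceil_mode=False."""
    return (size - kernel_size) // kernel_size + 1


channels, size = 3, 32  # a CIFAR10 image is 3 x 32 x 32
for out_channels in (32, 32, 64):
    size = conv_out(size, kernel_size=5, stride=1, padding=2)  # padding=2 keeps the size
    channels = out_channels
    size = pool_out(size, kernel_size=2)                       # pooling halves the size
    print(channels, size, size)

flattened = channels * size * size
print(flattened)  # 1024
```

The loop prints `32 16 16`, `32 8 8`, and `64 4 4`, so Flatten produces 64 * 4 * 4 = 1024 features per image.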

Loss functions

import torch
from torch.nn import L1Loss, MSELoss, CrossEntropyLoss

inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)

inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

loss = L1Loss()
result = loss(inputs, targets)

loss_mse = MSELoss()
result_mse = loss_mse(inputs, targets)

print(result)
print(result_mse)

# Cross-entropy loss: x holds the raw scores for 3 classes, y is the index of the true class
x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])
x = torch.reshape(x, (1, 3))
loss_cross = CrossEntropyLoss()
result_loss = loss_cross(x, y)
print(result_loss)
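The three values printed above can be reproduced by hand. The sketch below recomputes them in plain Python, assuming the default "mean" reduction for L1Loss and MSELoss and the standard form of cross-entropy on raw scores, loss = -x[class] + log(sum_j exp(x[j])).

```python
import math

inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# L1 loss: mean absolute error = (0 + 0 + 2) / 3
l1 = sum(abs(x - y) for x, y in zip(inputs, targets)) / len(inputs)
# MSE loss: mean squared error = (0 + 0 + 4) / 3
mse = sum((x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)
print(round(l1, 4), round(mse, 4))  # 0.6667 1.3333

# Cross-entropy for raw scores [0.1, 0.2, 0.3] and true class 1
logits = [0.1, 0.2, 0.3]
target_class = 1
cross_entropy = -logits[target_class] + math.log(sum(math.exp(v) for v in logits))
print(round(cross_entropy, 4))  # 1.1019
```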

import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_data, batch_size=64, drop_last=True)


class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool1 = MaxPool2d(kernel_size=2)
        self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool2 = MaxPool2d(kernel_size=2)
        self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)
        self.maxpool3 = MaxPool2d(kernel_size=2)
        self.flatten1 = Flatten()
        self.linear1 = Linear(in_features=1024, out_features=64)
        self.linear2 = Linear(in_features=64, out_features=10)

        self.mode1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.mode1(x)
        return x


xyp = XYP()
loss = nn.CrossEntropyLoss()

for data in test_loader:
    imgs, targets = data
    outputs = xyp(imgs)
    result_loss = loss(outputs, targets)
    # Backpropagation: computes the gradient of the loss for every parameter
    result_loss.backward()

Optimizers

import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential
from torch.utils.data import DataLoader

test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())
test_loader = DataLoader(dataset=test_data, batch_size=64, drop_last=True)


class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool1 = MaxPool2d(kernel_size=2)
        self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2)
        self.maxpool2 = MaxPool2d(kernel_size=2)
        self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2)
        self.maxpool3 = MaxPool2d(kernel_size=2)
        self.flatten1 = Flatten()
        self.linear1 = Linear(in_features=1024, out_features=64)
        self.linear2 = Linear(in_features=64, out_features=10)

        self.mode1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.mode1(x)
        return x


xyp = XYP()

# Loss function
loss = nn.CrossEntropyLoss()
# Parameter optimization with (stochastic) gradient descent
optim = torch.optim.SGD(xyp.parameters(), lr=0.01)

# Loop over the whole dataset 20 times
for epoch in range(20):
    running_loss = 0.0
    for data in test_loader:
        imgs, targets = data
        outputs = xyp(imgs)
        result_loss = loss(outputs, targets)
        # Reset the gradients to zero
        optim.zero_grad()
        # Backpropagation
        result_loss.backward()
        # Update the parameters
        optim.step()
        # Sum the loss of every batch; .item() keeps a plain float instead of the autograd graph
        running_loss = running_loss + result_loss.item()
    print(running_loss)
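As a rough picture of what the zero_grad / backward / step cycle does, the following plain-Python sketch applies the basic gradient descent update, param = param - lr * grad, to a single parameter. It is a toy stand-in that assumes plain SGD without momentum or weight decay; `sgd_step` and the quadratic objective are made up for illustration.

```python
def sgd_step(param, grad, lr):
    """One plain gradient descent update (no momentum, no weight decay)."""
    return param - lr * grad


# Minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w = 0.0
lr = 0.1
for step in range(50):
    grad = 2 * (w - 3.0)       # plays the role of backward(): compute the gradient
    w = sgd_step(w, grad, lr)  # plays the role of optim.step(): update the parameter

print(round(w, 3))  # 3.0
```

Because the gradient is recomputed from scratch in every iteration, this toy loop needs no equivalent of zero_grad(); in PyTorch, gradients accumulate across backward() calls, which is why the real loop resets them first.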

Modifying official models

import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential
from torch.utils.data import DataLoader

# Randomly initialized parameters
vgg16_false = torchvision.models.vgg16(pretrained=False)
# Pretrained parameters
vgg16_true = torchvision.models.vgg16(pretrained=True)

train_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor())

# Append a linear layer at the end of the model
vgg16_true.add_module('add_linear', nn.Linear(1000, 10))
# Append a linear layer inside the classifier
vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))
print(vgg16_true)

# Replace one of the existing layers
vgg16_false.classifier[6] = nn.Linear(4096, 10)

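Saving and loading models

import torch
import torchvision

vgg16_false = torchvision.models.vgg16(pretrained=False)

# Saving, option 1: save the whole model (structure and parameters)
torch.save(vgg16_false, "./model/vgg16_method1.pth")
# Saving, option 2: save only the parameters (the state_dict)
torch.save(vgg16_false.state_dict(), "./model/vgg16_method2.pth")

# Loading, option 1 (on recent PyTorch releases, loading a whole pickled model
# may require passing weights_only=False to torch.load)
model = torch.load("./model/vgg16_method1.pth")

# Loading, option 2: rebuild the model first, then load the parameters into it
state_dict = torch.load("./model/vgg16_method2.pth")
vgg16_false.load_state_dict(state_dict)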
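The state_dict approach can be tried without downloading VGG16 or writing files. This minimal sketch assumes a tiny nn.Linear model as a stand-in and an in-memory io.BytesIO buffer in place of a path on disk; it checks that the restored parameters match the saved ones.

```python
import io

import torch
from torch import nn

# A tiny stand-in model (not the VGG16 from the example above).
model = nn.Linear(4, 2)

# Save only the parameters into an in-memory buffer.
buffer = io.BytesIO()
torch.save(model.state_dict(), buffer)
buffer.seek(0)

# Build a fresh model with the same structure, then load the saved parameters.
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(buffer))

print(torch.equal(model.weight, restored.weight))  # True
print(torch.equal(model.bias, restored.bias))      # True
```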

Model training workflow

CPU

import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)


# Build the neural network
class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.model = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Create the network
xyp = XYP()

# Loss function: cross-entropy
loss_fn = nn.CrossEntropyLoss()

# Optimizer: gradient descent, 1e-2 = 0.01
optimizer = torch.optim.SGD(xyp.parameters(), lr=1e-2)

# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

# Add TensorBoard logging
writer = SummaryWriter("./dataloader")

for i in range(epoch):
    print("----- Epoch {} training started -----".format(i + 1))

    # Training phase
    xyp.train()
    for data in train_dataloader:
        images, targets = data
        outputs = xyp(images)
        loss = loss_fn(outputs, targets)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # One more training step
        total_train_step = total_train_step + 1
        writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Test phase
    xyp.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            images, targets = data
            outputs = xyp(images)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # Number of correct predictions in this batch
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    total_test_step = total_test_step + 1

writer.close()
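The accuracy line in the test phase, `(outputs.argmax(1) == targets).sum()`, counts how many rows have their largest score at the target index. The sketch below reproduces that logic with plain Python lists; the scores and targets are made-up values for illustration only.

```python
def argmax(row):
    """Index of the largest value in a list."""
    return max(range(len(row)), key=lambda i: row[i])


# Made-up scores for 4 samples and 3 classes, plus the true class of each sample.
outputs = [[0.1, 0.7, 0.2],
           [0.8, 0.1, 0.1],
           [0.2, 0.3, 0.5],
           [0.4, 0.5, 0.1]]
targets = [1, 0, 1, 1]

predictions = [argmax(row) for row in outputs]               # like outputs.argmax(1)
correct = sum(int(p == t) for p, t in zip(predictions, targets))
accuracy = correct / len(targets)

print(predictions)  # [1, 0, 2, 1]
print(accuracy)     # 0.75
```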

GPU

The model, the loss function, and the data can be moved to the GPU with cuda() to speed up training.

- Call cuda() directly. Check whether a GPU is available with torch.cuda.is_available(); if it returns True, the GPU can be used.

import time
import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)


# Build the neural network
class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.model = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Create the network and move it to the GPU if one is available
xyp = XYP()
if torch.cuda.is_available():
    xyp = xyp.cuda()

# Loss function: cross-entropy
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()

# Optimizer: gradient descent, 1e-2 = 0.01
optimizer = torch.optim.SGD(xyp.parameters(), lr=1e-2)

# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

start_time = time.time()
for i in range(epoch):
    print("----- Epoch {} training started -----".format(i + 1))

    # Training phase
    xyp.train()
    for data in train_dataloader:
        images, targets = data
        if torch.cuda.is_available():
            images = images.cuda()
            targets = targets.cuda()
        outputs = xyp(images)
        loss = loss_fn(outputs, targets)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # One more training step
        total_train_step = total_train_step + 1

    # Time elapsed since training started
    end_time = time.time()
    print("Elapsed time: {}".format(end_time - start_time))

    # Test phase
    xyp.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            images, targets = data
            if torch.cuda.is_available():
                images = images.cuda()
                targets = targets.cuda()
            outputs = xyp(images)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # Number of correct predictions in this batch
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_data_size))
    total_test_step = total_test_step + 1

In comparison with the CPU run, training on the GPU is noticeably faster.

- Use a device object: device = torch.device("cuda")

import time
import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# Either "cpu" or "cuda"
device = torch.device("cuda")

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset sizes
train_data_size = len(train_data)
test_data_size = len(test_data)

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)


# Build the neural network
class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.model = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Create the network and move it to the device
xyp = XYP()
xyp = xyp.to(device)

# Loss function: cross-entropy
loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.to(device)

# Optimizer: gradient descent, 1e-2 = 0.01
optimizer = torch.optim.SGD(xyp.parameters(), lr=1e-2)

# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

# # Add TensorBoard logging
# writer = SummaryWriter("./dataloader")

start_time = time.time()
for i in range(epoch):
    print("----- Epoch {} training started -----".format(i + 1))

    # Training phase
    xyp.train()
    for data in train_dataloader:
        images, targets = data
        images = images.to(device)
        targets = targets.to(device)
        outputs = xyp(images)
        loss = loss_fn(outputs, targets)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # One more training step
        total_train_step = total_train_step + 1
        # writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Time elapsed since training started
    end_time = time.time()
    print("Elapsed time: {}".format(end_time - start_time))

    # Test phase
    xyp.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            images, targets = data
            images = images.to(device)
            targets = targets.to(device)
            outputs = xyp(images)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # Number of correct predictions in this batch
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_data_size))
    # writer.add_scalar("test_loss", total_test_loss, total_test_step)
    total_test_step = total_test_step + 1

# writer.close()

- A compromise that works on machines with or without a GPU: device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

Model validation workflow

from PIL import Image
import torch
from torch import nn
import torchvision
from torch.nn import Linear, Conv2d, MaxPool2d, Flatten, Sequential

image = Image.open("../images/img_2.png")
# Besides the three color channels, a PNG may carry an alpha (transparency) channel, so convert to RGB
image = image.convert('RGB')

transform = torchvision.transforms.Compose([
    torchvision.transforms.Resize((32, 32)),
    torchvision.transforms.ToTensor()
])
image = transform(image)


# The class definition must be available when a whole saved model is loaded
class XYP(nn.Module):
    def __init__(self):
        super(XYP, self).__init__()
        self.model = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, stride=1, padding=2),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Load the model; map_location moves a model saved on the GPU onto the CPU
model = torch.load("./model/xyp_model", map_location=torch.device('cpu'))

# The network expects a batch dimension: (1, 3, 32, 32)
image = torch.reshape(image, (1, 3, 32, 32))

model.eval()
with torch.no_grad():
    output = model(image)

print(output)
print(output.argmax())
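The index printed by `output.argmax()` can be turned into a readable label by looking it up in the CIFAR10 class list (the same list that `test_set.classes` exposes in torchvision). The sketch below does this in plain Python; the ten scores are made-up values standing in for a real model output, and the softmax step is only there to show the scores as probabilities.

```python
import math

# CIFAR10 class names in label order.
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for one image (a real run would take them from the model output).
logits = [0.2, -1.0, 0.5, 0.1, 0.0, 3.1, -0.4, 0.3, -2.0, 0.6]
probs = softmax(logits)
best = max(range(len(probs)), key=lambda i: probs[i])

print(best, CIFAR10_CLASSES[best])  # 5 dog
```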