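Scraping product listings from Taobao! Taobao is notoriously hard to scrape! Did you go on a shopping spree this Double 11 (Singles' Day)?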

I. Approach

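First, read the search keywords from the command-line arguments and build a search URL from each one. Then request that URL, extract the product information from the response page with XPath, and finally store it in the database.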

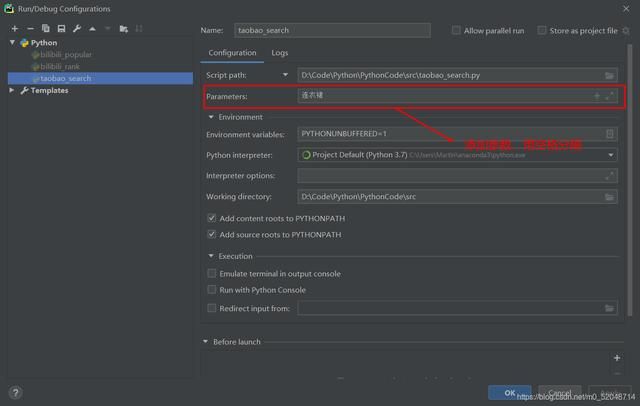

1. Search by keyword

https://re.taobao.com/search?keyword=

Each keyword is passed in as a command-line argument and appended to this URL together with the page number:
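Plain string concatenation works for simple keywords, but a keyword containing a space, `&` or `#` would corrupt the query string. A minimal sketch of building one page URL with the standard library's `urllib.parse.urlencode`, which percent-encodes each value (Chinese text is encoded as UTF-8). The helper name `build_search_url` is hypothetical; the `keyword` and `page` parameter names are taken from the URL above.

```python
from urllib.parse import urlencode

BASE_URL = "https://re.taobao.com/search"


def build_search_url(key_word, page):
    """Return the search URL for one results page (hypothetical helper)."""
    # urlencode percent-encodes every value, so spaces, '&' and non-ASCII text are safe
    return BASE_URL + "?" + urlencode({"keyword": key_word, "page": page})


print(build_search_url("a&b c", 2))  # https://re.taobao.com/search?keyword=a%26b+c&page=2
print(build_search_url("机械键盘", 0))
```

`requests` does the same encoding for you if you pass the query as a `params` dict instead of building the string yourself.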

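```python
def spider(key_word):
    # Fetch every results page for this keyword and parse it
    for i in range(totalPages):
        request_url = url + key_word + "&page=" + str(i)
        # headers must be passed by keyword: the second positional argument of requests.get is params;
        # timeout keeps a stalled request from hanging the crawl
        response = requests.get(request_url, headers=headers, timeout=10)
        parse(response.text)


def main():
    # sys.argv[0] is the script name, so at least one keyword means len(sys.argv) >= 2
    if len(sys.argv) < 2:
        print("Missing argument: please give at least one keyword!")
        sys.exit(1)
    # Crawl each keyword given on the command line (skipping the script name)
    for key in sys.argv[1:]:
        spider(key)
    connect.close()
```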

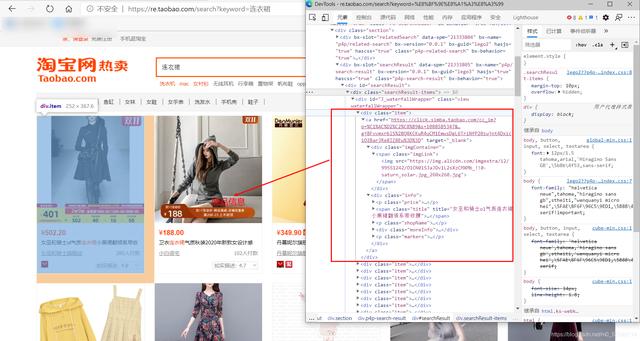
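`main()` walks `sys.argv` by hand. As an alternative sketch (not the author's code), the standard library's `argparse` can require at least one keyword via `nargs="+"` and print a usage message automatically when none is given. The function name `parse_keywords` is hypothetical.

```python
import argparse


def parse_keywords(argv=None):
    """Parse one or more search keywords from the command line (hypothetical helper)."""
    parser = argparse.ArgumentParser(description="Crawl Taobao search results for each keyword")
    # nargs="+" makes argparse report an error and exit when no keyword is supplied
    parser.add_argument("keywords", nargs="+", help="search keyword(s)")
    # argv=None means "read sys.argv[1:]"; passing a list is handy for testing
    return parser.parse_args(argv).keywords


print(parse_keywords(["phone", "laptop"]))  # ['phone', 'laptop']
```

Called with no keywords, argparse prints a usage message and exits with status 2, which replaces the manual length check.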

2. Data extraction

XPath is used here to pull the product fields out of the page; regular expressions or the BeautifulSoup library would work too.

```python
def parse(text):
    html = etree.HTML(text)
    if html is None:  # empty response body
        return
    # Each product card is a div.item inside the waterfall container
    div_list = html.xpath('//div[@id="J_waterfallWrapper"]/div[@class="item"]')
    result = []
    for div in div_list:
        # xpath() returns a list; ''.join() turns it into a string ('' if nothing matched)
        commodity_url = ''.join(div.xpath('./a/@href'))
        commodity_title = ''.join(div.xpath('./a/div[@class="info"]/span/@title'))
        price = ''.join(div.xpath('./a/div[@class="info"]/p[@class="price"]/span/strong/text()'))
        shop_name = ''.join(div.xpath('./a/div[@class="info"]/p[@class="shopName"]/span[1]/text()'))
        # The shop's three ratings: description match, service, delivery speed
        li_list = div.xpath('./a/div[@class="info"]/div[@class="moreInfo"]/div[@class="dsr-info"]/ul/li')
        ratings = [''.join(li.xpath('./span[@class="morethan"]/b/text()')) for li in li_list]
        # Pad with '' so a card without ratings does not raise IndexError
        ratings += [''] * (3 - len(ratings))
        truth, service, speed = ratings[:3]
        commodity = {
            'commodity_url': commodity_url,
            'commodity_title': commodity_title,
            'price': price,
            'shop_name': shop_name,
            'truth': truth,
            'service': service,
            'speed': speed,
        }
        result.append(commodity)
    save(result)
```

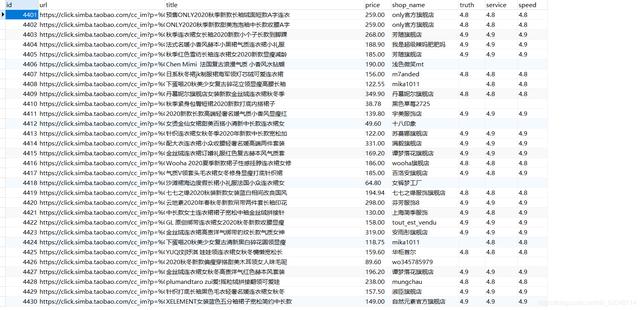
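Regular expressions are mentioned above as an alternative to XPath. A minimal sketch of that approach, run against a small hypothetical HTML fragment modeled on the XPaths above (real Taobao markup will differ and changes often). The pattern and `parse_with_regex` are illustrative only: a regex silently stops matching if attribute order or spacing changes, and a card missing a field can make the non-greedy `.*?` run on into the next card, which is why an HTML parser with XPath is usually the sturdier choice.

```python
import re

# Hypothetical fragment shaped like the XPaths in parse(); not real Taobao output
SAMPLE = '''
<div class="item"><a href="//item.example.com/1">
  <div class="info"><span title="Wireless mouse"></span>
  <p class="price"><span><strong>59.00</strong></span></p>
  <p class="shopName"><span>Shop A</span></p></div></a></div>
<div class="item"><a href="//item.example.com/2">
  <div class="info"><span title="Keyboard"></span>
  <p class="price"><span><strong>129.00</strong></span></p>
  <p class="shopName"><span>Shop B</span></p></div></a></div>
'''

ITEM_RE = re.compile(
    r'<div class="item"><a href="(?P<url>[^"]*)">'                   # product link
    r'.*?<span title="(?P<title>[^"]*)"'                               # title attribute
    r'.*?<p class="price"><span><strong>(?P<price>[^<]*)</strong>'    # price text
    r'.*?<p class="shopName"><span>(?P<shop>[^<]*)</span>',           # shop name
    re.S,  # let '.' match newlines inside a card
)


def parse_with_regex(text):
    """Return one dict per product card found in text."""
    return [m.groupdict() for m in ITEM_RE.finditer(text)]


for item in parse_with_regex(SAMPLE):
    print(item)
```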

3. Data storage

The data is saved to MySQL:

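```python
def save(items):
    # id is presumably AUTO_INCREMENT, so inserting NULL lets MySQL assign it;
    # %s are pymysql placeholders, filled in (and escaped) by cursor.execute
    sql = "insert into commodity(id,url,title,price,shop_name,truth,service,speed) values(null,%s,%s,%s,%s,%s,%s,%s)"
    for item in items:
        cursor.execute(sql, (
            item['commodity_url'],
            item['commodity_title'],
            item['price'],
            item['shop_name'],
            item['truth'],
            item['service'],
            item['speed']
        ))
        print(item)
    # Commit once per page of results
    connect.commit()
```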
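`save()` assumes a `commodity` table already exists, but its schema is not shown. A sketch that can be tried without a MySQL server, swapping in Python's built-in `sqlite3`: the column types are guesses inferred from the INSERT statement, the sample row is made up, and `save_sqlite` is a hypothetical name. Note that sqlite3 uses `?` placeholders where pymysql uses `%s`. Passing values as parameters, rather than formatting them into the SQL string, lets the driver handle quoting, so a title containing quotes cannot break the statement.

```python
import sqlite3

# In-memory database standing in for the MySQL "taobao" database
conn = sqlite3.connect(":memory:")
# Hypothetical schema inferred from the INSERT in save(); id auto-increments
conn.execute(
    """CREATE TABLE commodity (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        url TEXT, title TEXT, price TEXT, shop_name TEXT,
        truth TEXT, service TEXT, speed TEXT
    )"""
)


def save_sqlite(items):
    """Insert parsed items in one batch; NULL for id lets SQLite assign it."""
    sql = ("insert into commodity(id,url,title,price,shop_name,truth,service,speed) "
           "values(null,?,?,?,?,?,?,?)")
    rows = [(it['commodity_url'], it['commodity_title'], it['price'], it['shop_name'],
             it['truth'], it['service'], it['speed']) for it in items]
    # executemany binds each tuple to the placeholders in turn
    conn.executemany(sql, rows)
    conn.commit()


save_sqlite([{'commodity_url': '//item.example.com/1', 'commodity_title': 'Mouse "Pro"',
              'price': '59.00', 'shop_name': 'Shop A',
              'truth': '4.8', 'service': '4.9', 'speed': '4.8'}])
print(conn.execute("select id, title, price from commodity").fetchall())
```

pymysql cursors also provide `executemany`, so the same batching applies to the MySQL version.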

II. Results

III. Source code

```python
import sys

import pymysql
import requests
from lxml import etree

# Search endpoint; the keyword and page number are appended in spider()
url = "https://re.taobao.com/search?keyword="

headers = {
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                  "Chrome/86.0.4240.111 Safari/537.36 Edg/86.0.622.58"
}

# Number of result pages to fetch per keyword
totalPages = 100

# Local MySQL connection; adjust the credentials and database name to your setup
connect = pymysql.connect(host="127.0.0.1", user="root", password="root", database="taobao", charset="utf8mb4")
cursor = connect.cursor()


def save(items):
    # id is presumably AUTO_INCREMENT, so inserting NULL lets MySQL assign it
    sql = "insert into commodity(id,url,title,price,shop_name,truth,service,speed) values(null,%s,%s,%s,%s,%s,%s,%s)"
    for item in items:
        cursor.execute(sql, (
            item['commodity_url'],
            item['commodity_title'],
            item['price'],
            item['shop_name'],
            item['truth'],
            item['service'],
            item['speed']
        ))
        print(item)
    connect.commit()


def parse(text):
    html = etree.HTML(text)
    if html is None:  # empty response body
        return
    div_list = html.xpath('//div[@id="J_waterfallWrapper"]/div[@class="item"]')
    result = []
    for div in div_list:
        commodity_url = ''.join(div.xpath('./a/@href'))
        commodity_title = ''.join(div.xpath('./a/div[@class="info"]/span/@title'))
        price = ''.join(div.xpath('./a/div[@class="info"]/p[@class="price"]/span/strong/text()'))
        shop_name = ''.join(div.xpath('./a/div[@class="info"]/p[@class="shopName"]/span[1]/text()'))
        li_list = div.xpath('./a/div[@class="info"]/div[@class="moreInfo"]/div[@class="dsr-info"]/ul/li')
        ratings = [''.join(li.xpath('./span[@class="morethan"]/b/text()')) for li in li_list]
        # Pad with '' so a card without ratings does not raise IndexError
        ratings += [''] * (3 - len(ratings))
        truth, service, speed = ratings[:3]
        commodity = {
            'commodity_url': commodity_url,
            'commodity_title': commodity_title,
            'price': price,
            'shop_name': shop_name,
            'truth': truth,
            'service': service,
            'speed': speed,
        }
        result.append(commodity)
    save(result)


def spider(key_word):
    for i in range(totalPages):
        request_url = url + key_word + "&page=" + str(i)
        # headers must be passed by keyword (the second positional argument is params)
        response = requests.get(request_url, headers=headers, timeout=10)
        parse(response.text)


def main():
    # sys.argv[0] is the script name, so at least one keyword means len(sys.argv) >= 2
    if len(sys.argv) < 2:
        print("Missing argument: please give at least one keyword!")
        sys.exit(1)
    for key in sys.argv[1:]:
        spider(key)
    connect.close()


if __name__ == '__main__':
    main()
```

Did you get all that?
