PyTorch: Image Style Transfer

First implementation

from PIL import Image
import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
from torchvision import models, transforms

# Use the GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)

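def load_image(image_path, transform=None, max_size=None, shape=None):
    """Load an image, optionally resize it, and return a batched tensor on `device`."""
    # Force three channels so the ImageNet normalization below always applies
    image = Image.open(image_path).convert("RGB")
    if max_size:
        # Scale the image so that its longer side equals max_size
        scale = max_size / max(image.size)
        size = (np.array(image.size) * scale).astype(int)
        # Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the same filter
        image = image.resize(tuple(int(s) for s in size), Image.LANCZOS)
    if shape:
        # PIL expects the size as (width, height)
        image = image.resize(tuple(shape), Image.LANCZOS)
    if transform:
        image = transform(image).unsqueeze(0)  # add the batch dimension
    return image.to(device)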

# Normalize with the ImageNet per-channel mean and standard deviation
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])

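content = load_image("D:/电脑壁纸/v2-8c48ebffbc108fb45f04e5ff0bff4015_r.jpg", transform, max_size=400)
# The tensor layout is (N, C, H, W) while PIL's resize takes (width, height),
# so pass size(3) (width) first to give the style image the same shape as the content image
style = load_image("D:/转移事项/桌面/R.jpg", transform, shape=[content.size(3), content.size(2)])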

unloader = transforms.ToPILImage()  # converts a tensor back into a PIL image

def imshow(tensor, title=None):
    image = tensor.detach().cpu().clone()  # work on a copy, detached from the graph
    image = image.squeeze(0)  # remove the batch dimension if present
    image = unloader(image)
    plt.imshow(image)
    if title is not None:
        plt.title(title)
    plt.pause(0.001)  # pause briefly so that the plot is updated

class VGGNet(nn.Module):
    def __init__(self):
        super(VGGNet, self).__init__()
        # Indices of conv1_1, conv2_1, conv3_1, conv4_1 and conv5_1 in vgg19.features
        self.select = ['0', '5', '10', '19', '28']
        self.vgg = models.vgg19(pretrained=True).features

    def forward(self, x):
        """Return the feature maps produced by the selected layers."""
        features = []
        for name, layer in self.vgg._modules.items():
            x = layer(x)
            if name in self.select:
                features.append(x)
        return features

# The image being optimized starts as a copy of the content image
target = content.clone().requires_grad_(True)
# Only the target image is optimized; the VGG weights stay fixed
optimizer = torch.optim.Adam([target], lr=0.003, betas=[0.5, 0.999])
vgg = VGGNet().to(device).eval()

total_step = 2000
style_weight = 100.

for step in range(total_step):
    # Extract multi-layer features for the target, content and style images
    target_features = vgg(target)
    content_features = vgg(content)
    style_features = vgg(style)

    style_loss = 0
    content_loss = 0
    for f1, f2, f3 in zip(target_features, content_features, style_features):
        # Content loss: mean squared error between target and content features
        content_loss += torch.mean((f1 - f2) ** 2)

        # Flatten each feature map to (channels, height * width)
        _, c, h, w = f1.size()
        f1 = f1.view(c, h * w)
        f3 = f3.view(c, h * w)

        # Compute the Gram matrices
        f1 = torch.mm(f1, f1.t())
        f3 = torch.mm(f3, f3.t())

        # Style loss: mean squared error between the Gram matrices
        style_loss += torch.mean((f1 - f3) ** 2) / (c * h * w)

    loss = content_loss + style_weight * style_loss

    # Update the target image
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    if step % 10 == 0:
        print("Step [{}/{}], Content Loss: {:.4f}, Style Loss: {:.4f}"
              .format(step, total_step, content_loss.item(), style_loss.item()))

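# Undo the ImageNet normalization: mean' = -mean / std, std' = 1 / std
denorm = transforms.Normalize((-2.12, -2.04, -1.80), (4.37, 4.46, 4.44))
img = target.detach().clone().squeeze()
img = denorm(img).clamp_(0, 1)  # keep pixel values inside [0, 1]
imshow(img)
plt.show()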
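The constants passed to `denorm` look arbitrary, but they appear to be the inverse of the ImageNet normalization used in `transform`: since `Normalize` computes `(x - mean) / std`, undoing it with another `Normalize` needs `mean' = -mean / std` and `std' = 1 / std`. The sketch below is a plain-Python check that is not part of the original code; the names `normalize`, `inv_mean`, `inv_std` and the sample `pixel` are illustrative assumptions.

```python
# ImageNet statistics used by transforms.Normalize in the listing
mean = [0.485, 0.456, 0.406]
std = [0.229, 0.224, 0.225]


def normalize(values, mean, std):
    """Per-channel (v - mean) / std, the formula transforms.Normalize applies."""
    return [(v - m) / s for v, m, s in zip(values, mean, std)]


# Parameters of the inverse transform, expressed as another Normalize
inv_mean = [-m / s for m, s in zip(mean, std)]
inv_std = [1.0 / s for s in std]

print([round(v, 2) for v in inv_mean])
print([round(v, 2) for v in inv_std])

# Round trip: normalizing and then de-normalizing recovers the original pixel
pixel = [0.25, 0.50, 0.75]
restored = normalize(normalize(pixel, mean, std), inv_mean, inv_std)
print(restored)
```

Rounded to two decimals, the computed values match the hard-coded tuples, so the tuples could also be derived from `mean` and `std` instead of being typed by hand.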

Second implementation

import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
from PIL import Image
from copy import deepcopy
import matplotlib.pyplot as plt
import numpy as np

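def gram_matrix(input):
    """Return the Gram matrix of a feature tensor of shape (a, b, c, d)."""
    a, b, c, d = input.size()  # batch size, channels, height, width
    features = input.view(a * b, c * d)  # one row per feature map
    G = torch.mm(features, features.t())  # inner products between feature maps
    return G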

class ContentLoss(nn.Module):
    """Pass-through layer that records the content loss of its input."""

    def __init__(self, target):
        super(ContentLoss, self).__init__()
        self.target = target.detach()  # fixed target, excluded from the graph

    def forward(self, input):
        self.loss = torch.sum((input - self.target) ** 2) / 2.0
        return input


class StyleLoss(nn.Module):
    """Pass-through layer that records the style loss of its input."""

    def __init__(self, target_feature):
        super(StyleLoss, self).__init__()
        self.target = gram_matrix(target_feature).detach()

    def forward(self, input):
        a, b, c, d = input.size()
        G = gram_matrix(input)
        # Squared Gram-matrix difference, scaled by the channel count and map size
        self.loss = torch.sum((G - self.target) ** 2) / (4.0 * b * b * c * d)
        return input

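def load_image(image_path, transform=None, max_size=None, shape=None):
    """Load an image, optionally resize it, and return a batched tensor on `device`."""
    # Force three channels so the ImageNet normalization below always applies
    image = Image.open(image_path).convert("RGB")
    if max_size:
        # Scale the image so that its longer side equals max_size
        scale = max_size / max(image.size)
        size = (np.array(image.size) * scale).astype(int)
        # Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the same filter
        image = image.resize(tuple(int(s) for s in size), Image.LANCZOS)
    if shape:
        # PIL expects the size as (width, height)
        image = image.resize(tuple(shape), Image.LANCZOS)
    if transform:
        image = transform(image).unsqueeze(0)  # add the batch dimension
    return image.to(device)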

# Normalize with the ImageNet per-channel mean and standard deviation
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225])
])

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
content = load_image("D:/电脑壁纸/v2-8c48ebffbc108fb45f04e5ff0bff4015_r.jpg", transform, max_size=400)
# The tensor layout is (N, C, H, W) while PIL's resize takes (width, height)
style = load_image("D:/转移事项/桌面/R.jpg", transform, shape=[content.size(3), content.size(2)])

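# Layer names must match the 'conv_{block}_{index}' pattern generated in Model._build
content_layers = ['conv_4_2']  # convolution layers used for the content loss
style_layers = ['conv_1_1', 'conv_2_1', 'conv_3_1', 'conv_4_1', 'conv_5_1']  # convolution layers used for the style loss
content_weights = [1]  # weights of the content losses
style_weights = [1e3, 1e3, 1e3, 1e3, 1e3]  # weights of the style losses
num_steps = 1000  # number of optimization steps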

class Model:
    def __init__(self, device):
        cnn = torchvision.models.vgg19(pretrained=True).features.to(device).eval()
        self.cnn = deepcopy(cnn)  # pretrained VGG19 convolutional network
        self.device = device
        self.content_losses = []
        self.style_losses = []

    def run(self):
        content_image = load_image("D:/电脑壁纸/v2-8c48ebffbc108fb45f04e5ff0bff4015_r.jpg", transform, max_size=400)
        # Resize the style image to the content image's (width, height)
        style_image = load_image("D:/转移事项/桌面/R.jpg", transform,
                                 shape=[content_image.size(3), content_image.size(2)])
        self._build(content_image, style_image)  # build the loss layers
        output_image = self._transfer(content_image)  # run the optimization
        return output_image

    def _build(self, content_image, style_image):
        self.model = nn.Sequential()
        block_idx = 1
        conv_idx = 1
        # Walk through VGG19 layer by layer and keep the layers we need
        for layer in self.cnn.children():
            # Identify the layer type and give it a numbered name
            if isinstance(layer, nn.Conv2d):
                name = 'conv_{}_{}'.format(block_idx, conv_idx)
                conv_idx += 1
            elif isinstance(layer, nn.ReLU):
                name = 'relu_{}_{}'.format(block_idx, conv_idx)
                # The in-place ReLU would overwrite the activations the losses read
                layer = nn.ReLU(inplace=False)
            elif isinstance(layer, nn.MaxPool2d):
                name = 'pool_{}'.format(block_idx)
                block_idx += 1
                conv_idx = 1
            elif isinstance(layer, nn.BatchNorm2d):
                name = 'bn_{}'.format(block_idx)
            else:
                raise Exception("invalid layer")
            self.model.add_module(name, layer)

            # Add a content loss layer
            if name in content_layers:
                target = self.model(content_image).detach()
                content_loss = ContentLoss(target)
                self.model.add_module("content_loss_{}_{}".format(block_idx, conv_idx), content_loss)
                self.content_losses.append(content_loss)

            # Add a style loss layer
            if name in style_layers:
                target_feature = self.model(style_image).detach()
                style_loss = StyleLoss(target_feature)
                self.model.add_module("style_loss_{}_{}".format(block_idx, conv_idx), style_loss)
                self.style_losses.append(style_loss)

        # Keep only the part of the network up to the last loss layer
        i = 0
        for i in range(len(self.model) - 1, -1, -1):
            if isinstance(self.model[i], ContentLoss) or isinstance(self.model[i], StyleLoss):
                break
        self.model = self.model[:(i + 1)]

    def _transfer(self, content_image):
        # Start from a blend of the content image and Gaussian noise
        output_image = content_image.clone()
        random_image = torch.randn(content_image.data.size(), device=self.device)
        output_image = 0.4 * output_image + 0.6 * random_image
        optimizer = torch.optim.LBFGS([output_image.requires_grad_()])

        print('Optimizing..')
        run = [0]  # mutable counter shared with the closure
        while run[0] <= num_steps:

            def closure():
                # L-BFGS may evaluate the loss several times per step, so it takes a closure
                optimizer.zero_grad()
                self.model(output_image)  # the forward pass fills in each loss layer's .loss
                style_score = 0
                content_score = 0
                for sl, sw in zip(self.style_losses, style_weights):
                    style_score += sl.loss * sw
                for cl, cw in zip(self.content_losses, content_weights):
                    content_score += cl.loss * cw
                loss = style_score + content_score
                loss.backward()

                run[0] += 1
                if run[0] % 50 == 0:
                    print("iteration {}: Loss: {:.4f} Style Loss: {:.4f} Content Loss: {:.4f}"
                          .format(run[0], loss.item(), style_score.item(), content_score.item()))
                return loss

            optimizer.step(closure)
        return output_image

model = Model(device)
out_image = model.run()

# Detach from the graph, undo the ImageNet normalization, and clamp to [0, 1]
out_image = out_image.detach().cpu().clone()
denorm = transforms.Normalize((-2.12, -2.04, -1.80), (4.37, 4.46, 4.44))
out_image = out_image.squeeze()
out_image = denorm(out_image)
out_image = out_image.clamp(0, 1)

unloader = transforms.ToPILImage()  # converts a tensor back into a PIL image
out_image = unloader(out_image)
plt.imshow(out_image)
plt.show()
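Both implementations measure style by comparing Gram matrices of feature maps, that is, the matrix of inner products between every pair of flattened channels. The following dependency-free sketch is an illustration in plain Python rather than the code used above: `gram_matrix` here works on nested lists, and `style_loss` mirrors the scaling of the first implementation (mean squared difference divided by `c * h * w`). The tiny feature maps are made up for the example.

```python
def gram_matrix(features):
    """features: C rows (channels), each holding H*W values. Returns a C x C matrix."""
    return [[sum(a * b for a, b in zip(row_i, row_j)) for row_j in features]
            for row_i in features]


def style_loss(target_feats, style_feats):
    """Mean squared Gram difference divided by C * H * W, as in the first listing."""
    c = len(target_feats)
    n = len(target_feats[0])  # H * W
    g_t = gram_matrix(target_feats)
    g_s = gram_matrix(style_feats)
    mse = sum((g_t[i][j] - g_s[i][j]) ** 2
              for i in range(c) for j in range(c)) / (c * c)
    return mse / (c * n)


# Two channels, four spatial positions each (hypothetical values)
feats = [[1, 2, 3, 4],
         [0, 1, 0, 1]]
# The same activations with the spatial positions reversed in every channel
shuffled = [[4, 3, 2, 1],
            [1, 0, 1, 0]]
# Different activation statistics
other = [[1, 1, 1, 1],
         [2, 0, 2, 0]]

print(gram_matrix(feats))
print(style_loss(feats, shuffled))
print(style_loss(feats, other))
```

Reordering the spatial positions identically in every channel leaves the Gram matrix unchanged, so the loss between `feats` and `shuffled` is zero. This is why the Gram matrix works as a style descriptor: it keeps which features co-occur and discards where they occur.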

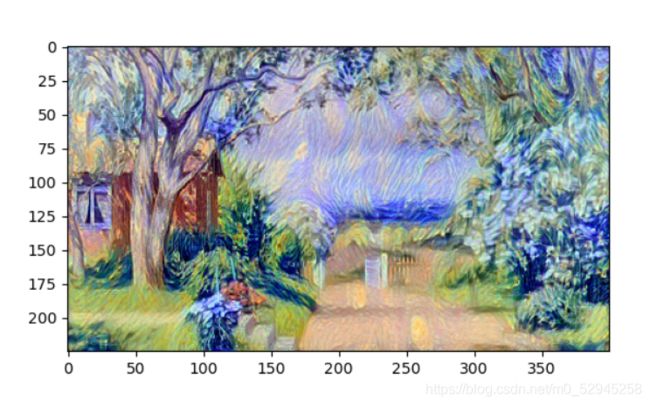

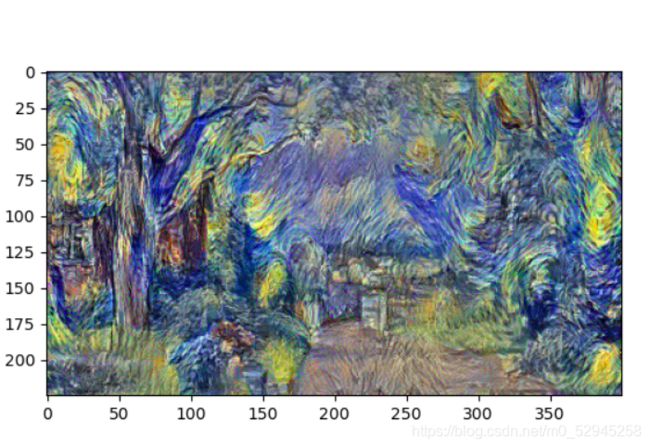

两种运行结果分别为:

References: https://blog.csdn.net/wuzhongqiang/article/details/107643484

《Pytorch深度学习实战》