1. Use a Flink SQL user-defined function (UDF) to send data ingested in real time to an HTTP interface.

2. Environment

| Flink | MySQL | Interface |
| --- | --- | --- |
| Flink 1.14.5 | 5.20 | Spring Boot interface |

3. Environment setup

3.1 MySQL statements

CREATE TABLE `Flink_cdc` (

`id` bigint(64) NOT NULL AUTO_INCREMENT,

`name` varchar(64) DEFAULT NULL,

`age` int(20) DEFAULT NULL,

`birthday` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

`ts` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (`id`)

) ENGINE=InnoDB AUTO_INCREMENT=73 DEFAULT CHARSET=utf8mb4;

/*Data for the table `Flink_cdc` */

insert into `Flink_cdc`(`id`,`name`,`age`,`birthday`,`ts`) values

(69,'flink',21,'2022-12-09 22:57:15','2022-12-09 22:57:17'),

(70,'flink sql',22,'2022-12-09 23:01:43','2022-12-09 23:01:46'),

(71,'flk sql',23,'2022-12-09 23:43:04','2022-12-09 23:43:07'),

(72,'hbase',55,'2023-04-05 16:40:38','2023-04-05 16:40:40');

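4. Flink SQL user-defined function

4.1 Implement the user-defined function and upload the JAR to Flink's lib directory. Flink must be restarted afterwards; then register the function in Flink SQL.

4.2 Implementation of the user-defined function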

package com.wudl.flink.fun;

import com.alibaba.fastjson.JSON;

import com.wudl.flink.util.HttpClientUtils;

import org.apache.flink.table.functions.FunctionContext;

import org.apache.flink.table.functions.ScalarFunction;

import java.util.Map;

/**

* @Author: wudl

* @Description:

* @ClassName: MyHttpFunction

* @Date: 2023/4/5 12:41

*/

public class MyHttpFunction extends ScalarFunction {

public String eval(Map<String, String> map) {

String str = JSON.toJSONString(map);

String s = HttpClientUtils.doPostJson("http://192.168.244.1:8081/tUser/savedta", str);

return s;

}

}

4.2.1 Required utility class

package com.wudl.flink.util;

import com.alibaba.fastjson.JSON;

import com.wudl.flink.bean.User;

import org.apache.http.NameValuePair;

import org.apache.http.client.entity.UrlEncodedFormEntity;

import org.apache.http.client.methods.CloseableHttpResponse;

import org.apache.http.client.methods.HttpGet;

import org.apache.http.client.methods.HttpPost;

import org.apache.http.client.utils.URIBuilder;

import org.apache.http.entity.StringEntity;

import org.apache.http.impl.client.CloseableHttpClient;

import org.apache.http.impl.client.HttpClients;

import org.apache.http.message.BasicNameValuePair;

import org.apache.http.util.EntityUtils;

import java.io.IOException;

import java.net.URI;

import java.util.ArrayList;

import java.util.List;

import java.util.Map;

/**

* @Author: wudl

* @Description:

* @ClassName: HttpClientUtils

* @Date: 2023/4/5 12:54

*/

public class HttpClientUtils {

public static void main(String[] args) {

// String s = HttpClientUtils.doGet("http://localhost:8081/tUser/get/getList");

// System.out.println(s);

User u = new User();

u.setId(12L);

u.setName("flinksql");

String s = JSON.toJSONString(u);

System.out.println(s);

String s1 = HttpClientUtils.doPostJson("http://192.168.1.109:8081/tUser/savedta", s);

System.out.println(s1);

}

public static String doGet(String url) {

return doGet(url, null);

}

public static String doGet(String url, Map<String, String> params) {

// Create the HttpClient object

CloseableHttpClient httpclient = HttpClients.createDefault();

String result = "";

CloseableHttpResponse response = null;

try {

// Build the URI

URIBuilder builder = new URIBuilder(url);

if (params != null) {

for (String key : params.keySet()) {

builder.addParameter(key, params.get(key));

}

}

URI uri = builder.build();

// Create the HTTP GET request

HttpGet httpGet = new HttpGet(uri);

// Execute the request

response = httpclient.execute(httpGet);

// Check whether the response status is 200

if (response.getStatusLine().getStatusCode() == 200) {

result = EntityUtils.toString(response.getEntity(), "UTF-8");

}

} catch (Exception e) {

e.printStackTrace();

} finally {

try {

if (response != null) {

response.close();

}

httpclient.close();

} catch (IOException e) {

e.printStackTrace();

}

}

return result;

}

public static String doPost(String url) {

return doPost(url, null);

}

public static String doPost(String url, Map<String, String> params) {

// Create the HttpClient object

CloseableHttpClient httpClient = HttpClients.createDefault();

CloseableHttpResponse response = null;

String result = "";

try {

// Create the HTTP POST request

HttpPost httpPost = new HttpPost(url);

// Build the parameter list

if (params != null) {

List<NameValuePair> paramList = new ArrayList<>();

for (String key : params.keySet()) {

paramList.add(new BasicNameValuePair(key, params.get(key)));

}

// Simulate a form submission

UrlEncodedFormEntity entity = new UrlEncodedFormEntity(paramList,"utf-8");

httpPost.setEntity(entity);

}

// Execute the HTTP request

response = httpClient.execute(httpPost);

result = EntityUtils.toString(response.getEntity(), "utf-8");

} catch (Exception e) {

e.printStackTrace();

} finally {

try {

// response stays null if execute() threw, so guard against a NullPointerException

if (response != null) {

response.close();

}

httpClient.close();

} catch (IOException e) {

e.printStackTrace();

}

}

return result;

}

public static String doPostJson(String url, String json) {

// Create the HttpClient object

CloseableHttpClient httpClient = HttpClients.createDefault();

CloseableHttpResponse response = null;

String result = "";

try {

// Create the HTTP POST request

HttpPost httpPost = new HttpPost(url);

// Build the request body

StringEntity entity = new StringEntity(json, "UTF-8"); // UTF-8 avoids garbled Chinese characters

entity.setContentEncoding("UTF-8");

entity.setContentType("application/json");

httpPost.setEntity(entity);

// Execute the HTTP request

response = httpClient.execute(httpPost);

result = EntityUtils.toString(response.getEntity(), "utf-8");

} catch (Exception e) {

e.printStackTrace();

} finally {

try {

// response stays null if execute() threw, so guard against a NullPointerException

if (response != null) {

response.close();

}

httpClient.close();

} catch (IOException e) {

e.printStackTrace();

}

}

return result;

}

}
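The utility class above sets no timeouts, so a slow or unreachable interface could block the calling function. As a hedged illustration only, the sketch below posts JSON using just the JDK's `HttpURLConnection`, with connect and read timeouts and an empty-string result on failure. `SimpleJsonPoster`, `postJson`, and the `timeoutMillis` parameter are hypothetical names that do not appear in the original project.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

/** Hypothetical JDK-only alternative to doPostJson; not part of the original project. */
public class SimpleJsonPoster {

    /** Posts a JSON string and returns the response body, or "" on any I/O failure. */
    public static String postJson(String url, String json, int timeoutMillis) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setRequestMethod("POST");
            conn.setConnectTimeout(timeoutMillis); // fail fast if the host is unreachable
            conn.setReadTimeout(timeoutMillis);    // fail fast if the server stalls
            conn.setDoOutput(true);
            conn.setRequestProperty("Content-Type", "application/json; charset=UTF-8");
            try (OutputStream out = conn.getOutputStream()) {
                out.write(json.getBytes(StandardCharsets.UTF_8));
            }
            int status = conn.getResponseCode();
            // Error responses (4xx/5xx) expose their body through the error stream.
            InputStream stream = status < 400 ? conn.getInputStream() : conn.getErrorStream();
            if (stream == null) {
                return "";
            }
            try (InputStream in = stream) {
                return readAll(in);
            }
        } catch (IOException e) {
            return "";
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }

    /** Reads the whole stream as UTF-8 text (Java 8 compatible). */
    private static String readAll(InputStream in) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        byte[] chunk = new byte[4096];
        int n;
        while ((n = in.read(chunk)) != -1) {
            buf.write(chunk, 0, n);
        }
        return new String(buf.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

A call such as `SimpleJsonPoster.postJson("http://192.168.244.1:8081/tUser/savedta", str, 3000)` could stand in for `HttpClientUtils.doPostJson` inside `eval`; the 3000 ms value is only an example.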

4.2.2 Required Maven configuration

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>com.wudl.flink</groupId>

<artifactId>Flink-learning</artifactId>

<version>1.0-SNAPSHOT</version>

</parent>

<artifactId>wudl-flink-14.5</artifactId>

<!-- Repository locations, in order: aliyun, apache, and cloudera -->

<repositories>

<repository>

<id>aliyun</id>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

</repository>

<repository>

<id>apache</id>

<url>https://repository.apache.org/content/repositories/snapshots/</url>

</repository>

<repository>

<id>cloudera</id>

<url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>

</repository>

<repository>

<id>spring-plugin</id>

<url>https://repo.spring.io/plugins-release/</url>

</repository>

</repositories>

<properties>

<encoding>UTF-8</encoding>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<java.version>1.8</java.version>

<scala.version>2.12</scala.version>

<flink.version>1.14.5</flink.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-core</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>commons-httpclient</groupId>

<artifactId>commons-httpclient</artifactId>

<version>3.1</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.5.5</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.83</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-jdbc_2.12</artifactId>

<version>1.10.3</version>

</dependency>

<!-- Depends on Scala -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.12</artifactId>

<version>${flink.version}</version>

<exclusions>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-scala-bridge_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- Blink planner, the default since 1.11 -->

<!-- <dependency>-->

<!-- <groupId>org.apache.flink</groupId>-->

<!-- <artifactId>flink-table-planner-blink_2.12</artifactId>-->

<!-- <version>${flink.version}</version>-->

<!-- <exclusions>-->

<!-- <exclusion>-->

<!-- <artifactId>slf4j-api</artifactId>-->

<!-- <groupId>org.slf4j</groupId>-->

<!-- </exclusion>-->

<!-- </exclusions>-->

<!-- </dependency>-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-common</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-cep_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- Flink connectors -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-sql-connector-kafka_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-jdbc_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-csv</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-json</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-hive_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>2.1.0</version>

<exclusions>

<exclusion>

<artifactId>hadoop-hdfs</artifactId>

<groupId>org.apache.hadoop</groupId>

</exclusion>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>2.1.0</version>

<exclusions>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-shaded-hadoop-2-uber</artifactId>

<version>2.7.5-10.0</version>

<exclusions>

<exclusion>

<artifactId>slf4j-log4j12</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>2.1.0</version>

<exclusions>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

<exclusion>

<artifactId>slf4j-log4j12</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.38</version>

<!--<version>8.0.20</version>-->

</dependency>

<!-- High-performance asynchronous component: Vert.x -->

<dependency>

<groupId>io.vertx</groupId>

<artifactId>vertx-core</artifactId>

<version>3.9.0</version>

</dependency>

<dependency>

<groupId>io.vertx</groupId>

<artifactId>vertx-jdbc-client</artifactId>

<version>3.9.0</version>

</dependency>

<dependency>

<groupId>io.vertx</groupId>

<artifactId>vertx-redis-client</artifactId>

<version>3.9.0</version>

</dependency>

<!-- Logging -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.7</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.2</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>3.1.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>3.1.3</version>

<exclusions>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

<exclusion>

<artifactId>slf4j-log4j12</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-hbase_2.11</artifactId>

<version>1.10.1</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.0.0</version>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

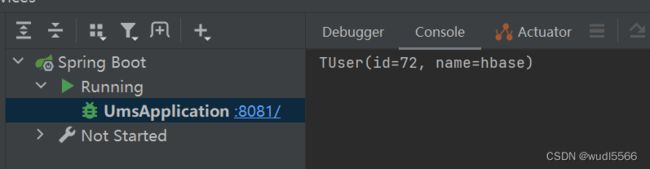

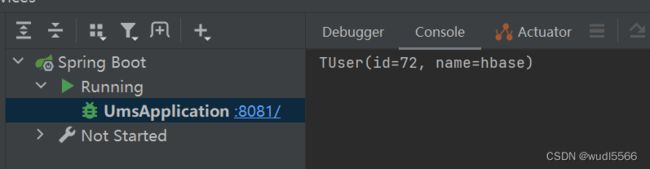

4.3 Interface implementation

@Api

@RestController

@RequestMapping("/tUser")

public class TUserController {

@Autowired

private TUserService tUserService;

@PostMapping("/savedta")

public String saveData(@RequestBody TUser user)

{

System.out.println(user.toString());

return user.toString();

}

}

4.4 Register the user-defined function in the Flink SQL client

CREATE FUNCTION myhttp AS 'com.wudl.flink.fun.MyHttpFunction';

4.5 Execution

CREATE TABLE source_mysql (

id BIGINT PRIMARY KEY NOT ENFORCED,

name STRING,

age INT,

birthday TIMESTAMP(3),

ts TIMESTAMP(3)

) WITH (

'connector' = 'mysql-cdc',

'hostname' = '192.168.1.180',

'port' = '3306',

'username' = 'root',

'password' = '<password>',

'server-time-zone' = 'Asia/Shanghai',

'debezium.snapshot.mode' = 'initial',

'database-name' = 'test',

'table-name' = 'Flink_cdc'

);

CREATE TABLE output (

t string

) WITH (

'connector' = 'print'

);

insert into output select myhttp(MAP['id',cast(id as string),'name',name]) from source_mysql;
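To make the query above more concrete, the following plain-Java sketch mimics what happens for each row: the `MAP['id', CAST(id AS STRING), 'name', name]` expression yields a string-to-string map, which the function serializes to JSON before posting it. `RowPayload`, `toMap`, and `toJson` are hypothetical names used only for illustration. The hand-written serializer merely approximates what `JSON.toJSONString` produces; with fastjson the key order and the handling of null values may differ.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical illustration of the per-row payload; not part of the original project. */
public class RowPayload {

    /** Mirrors MAP['id', CAST(id AS STRING), 'name', name] for a single row. */
    public static Map<String, String> toMap(long id, String name) {
        Map<String, String> map = new LinkedHashMap<>();
        map.put("id", String.valueOf(id)); // CAST(id AS STRING)
        map.put("name", name);             // may be null, since the column allows NULL
        return map;
    }

    /** Minimal JSON object serializer for string maps; null values become JSON null. */
    public static String toJson(Map<String, String> map) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : map.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append(quote(e.getKey())).append(':');
            sb.append(e.getValue() == null ? "null" : quote(e.getValue()));
        }
        return sb.append('}').toString();
    }

    /** Escapes only backslashes and double quotes; control characters are not handled. */
    private static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    public static void main(String[] args) {
        // Two of the sample rows inserted in section 3.1.
        System.out.println(toJson(toMap(69L, "flink")));     // {"id":"69","name":"flink"}
        System.out.println(toJson(toMap(70L, "flink sql"))); // {"id":"70","name":"flink sql"}
    }
}
```

Because `id` is cast to a string in the query, it arrives at the interface as a quoted JSON string rather than a number.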

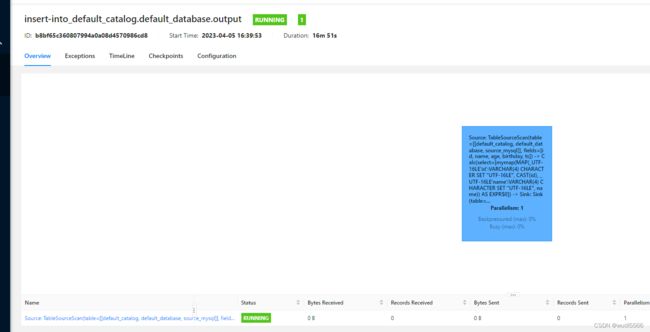

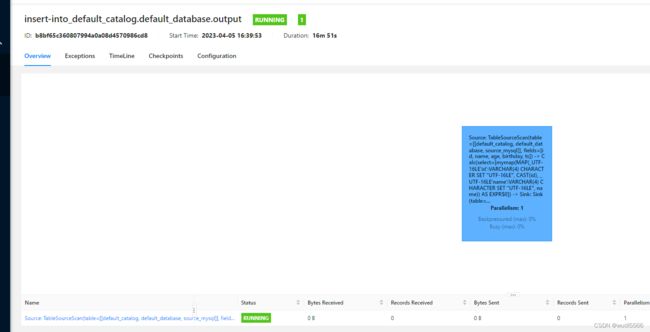

6. View the results

With this in place, Flink SQL calls the HTTP interface through the user-defined function in real time.