Thoughts on CUDA multi-stream programming

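1. The final `cudaStreamSynchronize` calls must be issued in the order in which the streams' pipelines finish, so the stream that finishes first is synchronized first. Otherwise the overlap is destroyed.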

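2. Be careful when inserting timers or other operations in the middle of the code. Stream 0 (the default stream) forcibly synchronizes with streams 1 and 2, which indirectly creates a synchronization relationship between streams 1 and 2 as well. `cudaGetLastError` also breaks concurrency.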

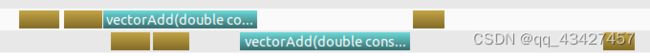

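3. CUDA streams run in parallel with each other, so does the order in which the code for two streams is issued matter? Following Section 10 of 《CUDA高性能编程》 and taking `vectorAdd` as the example:

This ordering is the best: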

HANDLE_ERROR(cudaMemcpyAsync(d_A, h_A, chunk, cudaMemcpyHostToDevice, stream1));

HANDLE_ERROR(cudaMemcpyAsync(d_B, h_B, chunk, cudaMemcpyHostToDevice, stream1));

HANDLE_ERROR(cudaMemcpyAsync(d_A2, (char*)h_A + chunk, chunk + tail, cudaMemcpyHostToDevice, stream2));

vectorAdd<<<blocksPerGrid, threadsPerBlock, 0, stream1>>>(d_A, d_B, d_C, (chunk) / sizeof(REAL));

HANDLE_ERROR(cudaMemcpyAsync(d_B2, (char*)h_B + chunk, chunk + tail, cudaMemcpyHostToDevice, stream2));

vectorAdd<<<blocksPerGrid, threadsPerBlock, 0, stream2>>>(d_A2, d_B2, d_C2, (chunk + tail) / sizeof(REAL));

printf("Copy output data from the CUDA device to the host memory\n");

HANDLE_ERROR(cudaMemcpyAsync(h_C, d_C, chunk, cudaMemcpyDeviceToHost, stream1));

HANDLE_ERROR(cudaMemcpyAsync((char*)h_C + chunk, d_C2, chunk + tail, cudaMemcpyDeviceToHost, stream2));

cudaStreamSynchronize(stream1);

cudaStreamSynchronize(stream2);

cudaStreamDestroy(stream2);

cudaStreamDestroy(stream1);

This ordering gives weaker overlap:

HANDLE_ERROR(cudaMemcpyAsync(d_A, h_A, chunk, cudaMemcpyHostToDevice, stream1));

HANDLE_ERROR(cudaMemcpyAsync(d_B, h_B, chunk, cudaMemcpyHostToDevice, stream1));

HANDLE_ERROR(cudaMemcpyAsync(d_A2, (char*)h_A + chunk, chunk + tail, cudaMemcpyHostToDevice, stream2));

HANDLE_ERROR(cudaMemcpyAsync(d_B2, (char*)h_B + chunk, chunk + tail, cudaMemcpyHostToDevice, stream2));

vectorAdd<<<blocksPerGrid, threadsPerBlock, 0, stream2>>>(d_A2, d_B2, d_C2, (chunk + tail) / sizeof(REAL));

vectorAdd<<<blocksPerGrid, threadsPerBlock, 0, stream1>>>(d_A, d_B, d_C, (chunk) / sizeof(REAL));

printf("Copy output data from the CUDA device to the host memory\n");

HANDLE_ERROR(cudaMemcpyAsync(h_C, d_C, chunk, cudaMemcpyDeviceToHost, stream1));

HANDLE_ERROR(cudaMemcpyAsync((char*)h_C + chunk, d_C2, chunk + tail, cudaMemcpyDeviceToHost, stream2));

From this we can see:

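1. The mem engine is semi-serial internally: transfers can overlap only when they go in different directions, and at most one transfer per direction is in flight at any time.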

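2. The kernel engine runs out of order and in parallel internally. It follows dependencies rather than a strict issue order, so a kernel issued later can run first once its memory is ready.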

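3. In the first case, the stream1 kernel (ks1 for short) is issued before the stream2 copy (ms2), which signals that all of its dependencies are already complete. CUDA picks up on this and schedules the kernel directly. In the second case, CUDA knows that ks1's dependencies will certainly be complete after ms2, but can ks1 be scheduled before that? CUDA has to work that out by itself. There is still some overlap, but the gap in the middle is much larger. My analysis is that the gap appears because the mem2 call comes first, so the mem engine is handled first and only afterwards are the kernel's dependencies checked. In the first case the kernel arrives first, so the kernel is checked first and runs as soon as it can. So depth-first issue order may be the more reliable choice?!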

4. Point 3 is only my own analysis. None of the slides or books mention it.
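The points above can be pulled together in a small sketch. It is only an illustration under assumptions, not the code from the book: `CHECK` is a stand-in for `HANDLE_ERROR`, `pipelinedAdd` and `kStreams` are hypothetical names, `float` replaces `REAL`, and the host buffers are assumed to be pinned (for example allocated with `cudaMallocHost`), since pageable memory prevents the copies from overlapping. The streams are created with `cudaStreamNonBlocking` so that they do not implicitly synchronize with stream 0, timing uses events recorded into each stream instead of a host timer in the middle of the pipeline, work is issued depth-first per stream, and the streams are synchronized in issue order. Whether depth-first really beats other orderings depends on the GPU generation, so it is worth measuring both.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical stand-in for the HANDLE_ERROR macro used in the notes.
#define CHECK(call)                                                  \
    do {                                                             \
        cudaError_t err_ = (call);                                   \
        if (err_ != cudaSuccess) {                                   \
            fprintf(stderr, "%s:%d: %s\n", __FILE__, __LINE__,       \
                    cudaGetErrorString(err_));                       \
            exit(EXIT_FAILURE);                                      \
        }                                                            \
    } while (0)

constexpr int kStreams = 2;  // assumption: two streams, as in the notes

__global__ void vectorAdd(const float* a, const float* b, float* c, size_t n) {
    size_t i = blockIdx.x * (size_t)blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Computes h_C = h_A + h_B for n floats, split across kStreams streams.
// Host pointers should be pinned. ms_out receives kStreams elapsed times (ms).
void pipelinedAdd(const float* h_A, const float* h_B, float* h_C,
                  size_t n, float* ms_out) {
    assert(n >= (size_t)kStreams);  // every stream must get at least one element
    const int threads = 256;
    cudaStream_t stream[kStreams];
    cudaEvent_t start[kStreams], stop[kStreams];
    float *d_A[kStreams], *d_B[kStreams], *d_C[kStreams];
    size_t offset[kStreams], count[kStreams];

    const size_t base = n / kStreams;
    for (int s = 0; s < kStreams; ++s) {
        offset[s] = s * base;
        count[s] = (s == kStreams - 1) ? n - offset[s] : base;  // last chunk takes the tail
        // Non-blocking streams do not implicitly synchronize with stream 0.
        CHECK(cudaStreamCreateWithFlags(&stream[s], cudaStreamNonBlocking));
        CHECK(cudaEventCreate(&start[s]));
        CHECK(cudaEventCreate(&stop[s]));
        CHECK(cudaMalloc(&d_A[s], count[s] * sizeof(float)));
        CHECK(cudaMalloc(&d_B[s], count[s] * sizeof(float)));
        CHECK(cudaMalloc(&d_C[s], count[s] * sizeof(float)));
    }

    // Depth-first issue: all work for stream s is queued before stream s+1.
    for (int s = 0; s < kStreams; ++s) {
        const size_t bytes = count[s] * sizeof(float);
        const unsigned int blocks = (unsigned int)((count[s] + threads - 1) / threads);
        CHECK(cudaEventRecord(start[s], stream[s]));  // timer lives inside the stream
        CHECK(cudaMemcpyAsync(d_A[s], h_A + offset[s], bytes, cudaMemcpyHostToDevice, stream[s]));
        CHECK(cudaMemcpyAsync(d_B[s], h_B + offset[s], bytes, cudaMemcpyHostToDevice, stream[s]));
        vectorAdd<<<blocks, threads, 0, stream[s]>>>(d_A[s], d_B[s], d_C[s], count[s]);
        CHECK(cudaMemcpyAsync(h_C + offset[s], d_C[s], bytes, cudaMemcpyDeviceToHost, stream[s]));
        CHECK(cudaEventRecord(stop[s], stream[s]));
    }

    // Synchronize in issue order, which is the expected completion order here.
    for (int s = 0; s < kStreams; ++s) {
        CHECK(cudaStreamSynchronize(stream[s]));
        CHECK(cudaEventElapsedTime(&ms_out[s], start[s], stop[s]));
    }
    CHECK(cudaGetLastError());  // checked only after all streams have drained

    for (int s = 0; s < kStreams; ++s) {
        CHECK(cudaFree(d_A[s]));
        CHECK(cudaFree(d_B[s]));
        CHECK(cudaFree(d_C[s]));
        CHECK(cudaEventDestroy(start[s]));
        CHECK(cudaEventDestroy(stop[s]));
        CHECK(cudaStreamDestroy(stream[s]));
    }
}
```

To compare against the weaker ordering, the single issue loop can be split into three loops (all host-to-device copies, then all kernels, then all device-to-host copies), and the two timelines inspected in a profiler such as Nsight Systems.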

Official documentation on the scheduling behavior:

https://developer.download.nvidia.cn/CUDA/training/StreamsAndConcurrencyWebinar.pdf