MySQL MHA High Availability (Part 1)

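Contents

Synchronization concepts

How it works

Environment topology

Environment preparation

manager

master1

master2

slave

Configure semi-synchronous replication

master1

master2

slave

Check status

Create users and set up master-slave replication

master1

master2

slave

Configure mysql-mha

Configure MHA

Verification

SSH connectivity check

Replication check of the cluster

Start the manager

Failover verification

Routine operations on the MHA Manager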

Blog homepage: 大虾好吃吗's blog

MySQL column: MySQL column link

MHA (Master High Availability) is a relatively mature solution for MySQL high availability: a set of tools for failover and slave promotion in highly available MySQL environments.

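During a MySQL failure, MHA can complete the database failover automatically within 0 to 30 seconds, and while doing so it preserves data consistency as far as possible, achieving high availability in the true sense.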

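The software consists of two parts: MHA Manager (the management node) and MHA Node (the data node). MHA Manager can be deployed on a separate machine that manages several master-slave clusters, or on one of the slave nodes. MHA Node runs on every MySQL server. MHA Manager periodically probes the master node of the cluster; when the master fails, it automatically promotes the slave with the most recent data to be the new master and then points all other slaves to the new master. The whole failover process is transparent to applications.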

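During automatic failover, MHA tries to save the binary logs from the crashed master to minimize data loss, but this is not always possible. For example, if the master has a hardware failure or cannot be reached over SSH, MHA cannot save the binary logs; it performs the failover anyway and the most recent data is lost. Semi-synchronous replication, available since MySQL 5.5, greatly reduces this risk, and MHA can be combined with it: as long as at least one slave has received the latest binary log events, MHA can apply them to all the other slaves, keeping the data consistent on every node.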


Note: starting with MySQL 5.5, MySQL supports semi-synchronous replication in the form of plugins.

Synchronization concepts

What does semi-synchronous mean? First, let's look at asynchronous and fully synchronous replication:

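Asynchronous replication: MySQL replication is asynchronous by default. After executing a transaction committed by a client, the master returns the result to the client immediately and does not care whether the slaves have received and processed it. The problem is that if the master crashes, transactions already committed on it may not yet have reached the slaves; if a slave is then forcibly promoted to master, the data on the new master may be incomplete.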

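Fully synchronous replication: after the master executes a transaction, it returns to the client only once all slaves have executed that transaction. Because it has to wait for every slave, the performance of fully synchronous replication inevitably suffers severely.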

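Semi-synchronous replication: sits between asynchronous and fully synchronous replication. After executing a transaction committed by a client, the master does not return to the client immediately; it waits until at least one slave has received the transaction and written it to its relay log. Compared with asynchronous replication, semi-synchronous replication improves data safety, but it also adds some latency, at least one TCP/IP round trip. Semi-synchronous replication is therefore best used on low-latency networks.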

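Summary, asynchronous vs. semi-synchronous: by default MySQL replication is asynchronous; once updates on the master are written to the binlog, there is no guarantee that they have been replicated to the slaves. Asynchronous replication is efficient, but when the master or a slave fails there is a high risk of the data getting out of sync, and data may even be lost. MySQL 5.5 introduced semi-synchronous replication to guarantee that at least one slave has complete data when the master fails. On timeout the master can temporarily fall back to asynchronous replication so that the business keeps running, and it switches back to semi-synchronous mode once a slave has caught up.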

How it works

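Compared with other HA software, MHA aims to keep the master of a MySQL replication setup highly available. Its most important feature is that it can reconcile the differing logs of several slaves so that all slaves end up with consistent data, then choose one of them as the new master and point the other slaves to it: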

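- Save the binary log events (binlog events) from the crashed master.
- Identify the slave with the most recent updates.
- Apply the differential relay logs to the other slaves.
- Apply the binary log events saved from the master.
- Promote one slave to be the new master.
- Make the other slaves replicate from the new master.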
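The step "identify the slave with the most recent updates" essentially compares how far each slave's I/O thread has read from the master's binlog (the Master_Log_File and Read_Master_Log_Pos fields of SHOW SLAVE STATUS). The following is a simplified sketch of that comparison only, not MHA's actual Perl implementation; the function name latest_slave and its input format are assumptions made for illustration.

```shell
# latest_slave: hypothetical helper, a simplified illustration of how the
# "latest" slave can be identified. It is NOT MHA's actual implementation.
# Input (stdin), one slave per line:
#   <host> <Master_Log_File> <Read_Master_Log_Pos>
#   e.g. "192.168.8.40 mysql-bin.000005 742"
# Output: the host with the highest (binlog sequence number, position).
latest_slave() {
    awk '
    {
        # "mysql-bin.000005" -> sequence number 5 (text after the last dot)
        n = split($2, parts, "[.]")
        seq = parts[n] + 0
        pos = $3 + 0
        # Compare the file sequence number first, then the position.
        if (NR == 1 || seq > best_seq || (seq == best_seq && pos > best_pos)) {
            best_seq = seq; best_pos = pos; best_host = $1
        }
    }
    END { if (NR > 0) print best_host }
    '
}
```

Comparing the file sequence number before the position matters because positions start over at the beginning of every new binlog file, so a small position in a newer file is still "later" than a large position in an older one.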

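MHA currently mainly supports a one-master, multiple-slave architecture. To build MHA, a replication cluster needs at least three database servers: one master and two slaves, that is, one acting as master, one as the standby master, and one as a plain slave. Because at least three servers are required, Taobao modified MHA to save on machine costs, and Taobao's TMHA now supports one master with a single slave.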

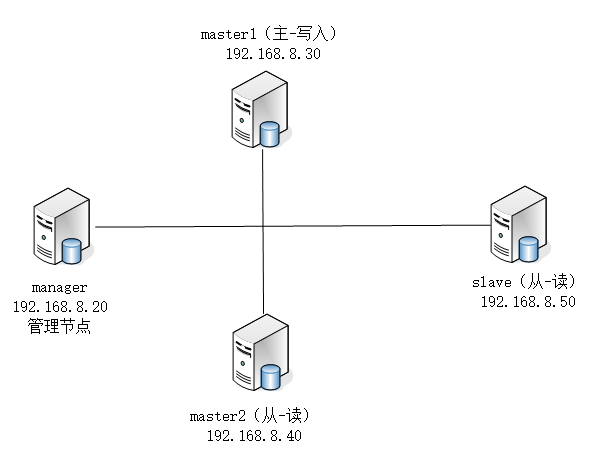

Environment topology

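master1 serves writes; the candidate master (actually a slave, hostname master2) serves reads, and slave also serves reads. If master1 goes down, the candidate master is promoted to be the new master, slave is pointed to the new master, and manager acts as the management server. In production there are usually many slaves, all configured the same way; this example uses two slaves, with the candidate master also acting as a slave.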

Environment preparation

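1. After configuring the IP addresses, check the SELinux and iptables settings and disable SELinux and the iptables service so that master-slave replication does not run into errors later. Note: the clocks of all hosts must be synchronized.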

2. Configure the EPEL repository on all four machines

[root@manager ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

3. Set up passwordless SSH login

manager

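All four hosts below need mutual SSH trust: generate a key pair on each host and send the public key to every host with a for loop. (Press Enter at each ssh-keygen prompt; when copying the key out, answer yes to accept the remote host and then enter the remote host's password.)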
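Because every host must reach every other host without a password, it helps to check all ordered pairs rather than only manager to the others. A minimal sketch, assuming the 192.168.8.x addresses used in this article: the hypothetical helper ssh_mesh_cmds only prints the check commands, so they can be reviewed before being piped to sh.

```shell
# ssh_mesh_cmds: hypothetical helper that prints one check command per
# ordered (source, target) pair of hosts. BatchMode=yes makes ssh fail
# instead of prompting, so any pair that still needs a password (or an
# unaccepted host key) shows up as an error when the commands are run.
# Usage: ssh_mesh_cmds 192.168.8 20 30 40 50 | sh
ssh_mesh_cmds() {
    prefix=$1
    shift
    for src in "$@"; do
        for dst in "$@"; do
            # Skip connecting a host to itself.
            [ "$src" = "$dst" ] && continue
            echo "ssh -o BatchMode=yes root@$prefix.$src ssh -o BatchMode=yes root@$prefix.$dst hostname"
        done
    done
}
```

With four hosts this prints 12 commands; each successful one prints the target's hostname.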

[root@manager ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:8zzNm0tUTQ9bw/E9GQ6+1BbJIPOn5iWPsms6suwmFUU root@manager

The key's randomart image is:

+---[RSA 2048]----+

| .E o o===|

| . = +XO|

| . +oB=|

| . ..= .|

| S .= . |

| . + +o = |

| . +.+o . |

| ..o . +oo |

| ++o.+o=. |

+----[SHA256]-----+

[root@manager ~]# for i in 20 30 40 50;do ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.8.$i;done

master1

[root@master1 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:cBhASzDVEouISwajm3/j3L3XjLvwYzQzEvkTi2800+k root@master1

The key's randomart image is:

+---[RSA 2048]----+

|o o+*+. |

|+o +.o.o |

|+o. o.o .. |

|o+ oo . |

|+ S+ + . |

| . o % o |

| . o .= % |

| + o . oB E |

| o . o=++ |

+----[SHA256]-----+

[root@master1 ~]# for i in 20 30 40 50;do ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.8.$i;done

master2

[root@master2 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:MHKw5P1AEBshLUAPUnIKQ/wPtXYAxfMOU/cNErvjZLo root@master2

The key's randomart image is:

+---[RSA 2048]----+

|X*+o@=. .. |

|o*++.% . o.. |

|. .o* % ..o o |

| o B B .. . |

| + = S= |

| . .= . |

| . . |

| . |

| E |

+----[SHA256]-----+

[root@master2 ~]# for i in 20 30 40 50;do ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.8.$i;done

slave

[root@slave ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:3RuS1syMJTLHKzqrHLph3xRDuCh7xDU05i6zqfqnFQM root@slave

The key's randomart image is:

+---[RSA 2048]----+

| + |

| + o . |

| E = . o + . |

| . = + = % |

|. * = o S B B |

| + = o + o . o |

|. * o + . |

| + *.+ o |

|+.=++.o |

+----[SHA256]-----+

[root@slave ~]# for i in 20 30 40 50;do ssh-copy-id -i ~/.ssh/id_rsa.pub root@192.168.8.$i;done

Test the SSH setup by querying each host's name remotely

[root@manager ~]# for i in 20 30 40 50 ;do ssh 192.168.8.$i hostname;done

manager

master1

master2

slave

4. Configure the hosts file

[root@manager ~]# vim /etc/hosts

# add the following four lines

192.168.8.20 manager

192.168.8.30 master1

192.168.8.40 master2

192.168.8.50 slave
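Editing /etc/hosts by hand with vim is fine once, but re-running a setup script that appends these lines would create duplicates. A minimal sketch of an idempotent alternative, assuming the same IP/name pairs; add_host_entry is a hypothetical helper, not part of any tool used in this article.

```shell
# add_host_entry: hypothetical helper that appends "IP NAME" to a hosts
# file only if that pair is not already present.
# Usage: add_host_entry /etc/hosts 192.168.8.20 manager
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    # Escape the dots so they match literally in the regular expression.
    ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
    if ! grep -Eq "^${ip_re}[[:space:]]+${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

Running `add_host_entry /etc/hosts 192.168.8.30 master1` twice leaves exactly one such line in the file.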

[root@manager ~]# for i in 20 30 40 50;do scp /etc/hosts root@192.168.8.$i:/etc/;done

hosts 100% 240 201.6KB/s 00:00

hosts 100% 240 151.9KB/s 00:00

hosts 100% 240 160.9KB/s 00:00

hosts 100% 240 105.1KB/s 00:00

Configure semi-synchronous replication

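To minimize data loss when the master goes down because of a hardware failure, it is recommended to configure MySQL semi-synchronous replication together with MHA. Note: the MySQL semi-synchronous plugins were contributed by Google and are located in /usr/local/mysql/lib/plugin/: semisync_master.so is used by the master and semisync_slave.so by the slave. If you are not sure where the plugin directory is, find it with the following command: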

mysql> show variables like '%plugin_dir%';

+---------------+------------------------------+

| Variable_name | Value |

+---------------+------------------------------+

| plugin_dir | /usr/local/mysql/lib/plugin/ |

+---------------+------------------------------+

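1 row in set (0.00 sec)

1. Install the plugins on the master and slave nodes (master, candidate master, slave). Installing plugins requires the server to support dynamic loading. Check whether it does with the following command: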

mysql> show variables like '%have_dynamic%';

+----------------------+-------+

| Variable_name | Value |

+----------------------+-------+

| have_dynamic_loading | YES |

+----------------------+-------+

1 row in set (0.00 sec)

Note: install both semi-synchronous plugins (semisync_master.so, semisync_slave.so) on every MySQL database server.

mysql> install plugin rpl_semi_sync_master soname 'semisync_master.so';

Query OK, 0 rows affected (0.01 sec)

mysql> install plugin rpl_semi_sync_slave soname 'semisync_slave.so';

Query OK, 0 rows affected (0.01 sec)

Check whether the installation succeeded with the following command:

mysql> show plugins;

or

mysql> select * from information_schema.plugins;

View the semi-synchronous settings

mysql> show variables like '%rpl_semi_sync%';

+-------------------------------------------+------------+

| Variable_name | Value |

+-------------------------------------------+------------+

| rpl_semi_sync_master_enabled | OFF |

| rpl_semi_sync_master_timeout | 10000 |

| rpl_semi_sync_master_trace_level | 32 |

| rpl_semi_sync_master_wait_for_slave_count | 1 |

| rpl_semi_sync_master_wait_no_slave | ON |

| rpl_semi_sync_master_wait_point | AFTER_SYNC |

| rpl_semi_sync_slave_enabled | OFF |

| rpl_semi_sync_slave_trace_level | 32 |

+-------------------------------------------+------------+

8 rows in set (0.01 sec)

The output shows that the semi-synchronous replication plugins are installed but not yet enabled, which is why the values are OFF.
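To check the enabled flags from a script instead of reading the table by eye, the batch output of the mysql client (tab-separated, with -N suppressing the column header) can be filtered. A minimal sketch; semi_sync_disabled_flags is a hypothetical helper name.

```shell
# semi_sync_disabled_flags: hypothetical helper. Reads the tab-separated
# output of
#   mysql -N -e "SHOW VARIABLES LIKE 'rpl_semi_sync%enabled'"
# from stdin and prints the name of every *_enabled variable that is OFF.
# Empty output means both the master side and the slave side are enabled.
semi_sync_disabled_flags() {
    awk -F '\t' '$1 ~ /_enabled$/ && $2 == "OFF" { print $1 }'
}
```

Right after installing the plugins, piping that query (with your usual login options) into the helper should list both rpl_semi_sync_master_enabled and rpl_semi_sync_slave_enabled, matching the OFF values above.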

2. Edit my.cnf to configure master-slave replication

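If the master MySQL server already exists and the slave servers are added later, copy the databases to be replicated from the master to the slaves before configuring replication (for example, back up the databases on the master and restore the backup on the slaves).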

master1

[root@master1 ~]# vim /etc/my.cnf

server-id = 1

log-bin=mysql-bin

binlog_format=mixed

log-bin-index=mysql-bin.index

rpl_semi_sync_master_enabled=1

rpl_semi_sync_master_timeout=1000

rpl_semi_sync_slave_enabled=1

relay_log_purge=0

relay-log = relay-bin

relay-log-index = slave-relay-bin.index

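[root@master1 ~]# systemctl restart mysqld

Note: rpl_semi_sync_master_enabled=1 turns semi-sync on (1 means enabled, 0 means disabled). rpl_semi_sync_master_timeout is in milliseconds: with 10000, the master waits 10 seconds for an acknowledgment, then stops waiting and falls back to asynchronous replication (master1 above uses 1000, i.e. 1 second).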

master2

[root@master2 ~]# vim /etc/my.cnf

server-id = 2

log-bin=mysql-bin

binlog_format=mixed

log-bin-index=mysql-bin.index

relay_log_purge=0

relay-log = relay-bin

relay-log-index = slave-relay-bin.index

rpl_semi_sync_master_enabled=1

rpl_semi_sync_master_timeout=10000

rpl_semi_sync_slave_enabled=1

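[root@master2 ~]# systemctl restart mysqld

Note: relay_log_purge=0 prevents the SQL thread from automatically deleting each relay log after it has been applied. In an MHA setup, recovering a lagging slave may depend on the relay logs of other slaves, so automatic deletion is disabled.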
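The three my.cnf files above differ, and the slave's file does not set relay_log_purge=0 (masterha_check_repl later warns about exactly this for 192.168.8.50). A minimal sketch for checking a my.cnf for required settings before restarting MySQL; cnf_missing is a hypothetical helper, and it ignores which [section] a setting appears in.

```shell
# cnf_missing: hypothetical helper that checks a my.cnf-style file for
# required "key=value" settings and prints each one that is absent.
# MySQL treats "-" and "_" in option names as equivalent and allows spaces
# around "=", so both are normalised. Pass required names with "_".
# Usage: cnf_missing /etc/my.cnf relay_log_purge=0 log_bin=mysql-bin
cnf_missing() {
    file=$1
    shift
    for want in "$@"; do
        awk -v want="$want" '
        {
            line = $0
            sub(/#.*/, "", line)          # drop comments
            gsub(/[ \t]/, "", line)       # drop spaces and tabs
            split(line, kv, "=")
            key = kv[1]
            gsub(/-/, "_", key)           # log-bin -> log_bin
            if (key "=" kv[2] == want) found = 1
        }
        END { exit !found }
        ' "$file" || echo "$want"
    done
}
```

On slave, `cnf_missing /etc/my.cnf relay_log_purge=0 log_bin=mysql-bin` would print relay_log_purge=0, since log-bin = mysql-bin is present but relay_log_purge is not.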

slave

[root@slave ~]# vim /etc/my.cnf

server-id = 3

log-bin = mysql-bin

relay-log = relay-bin

relay-log-index = slave-relay-bin.index

read_only = 1

rpl_semi_sync_slave_enabled=1

[root@slave ~]# systemctl restart mysqld

Check status

Check the semi-synchronous settings on all three hosts

mysql> show variables like '%rpl_semi_sync%';

+-------------------------------------------+------------+

| Variable_name | Value |

+-------------------------------------------+------------+

| rpl_semi_sync_master_enabled | ON |

| rpl_semi_sync_master_timeout | 1000 |

| rpl_semi_sync_master_trace_level | 32 |

| rpl_semi_sync_master_wait_for_slave_count | 1 |

| rpl_semi_sync_master_wait_no_slave | ON |

| rpl_semi_sync_master_wait_point | AFTER_SYNC |

| rpl_semi_sync_slave_enabled | ON |

| rpl_semi_sync_slave_trace_level | 32 |

+-------------------------------------------+------------+

8 rows in set (0.01 sec)

Check the semi-synchronous status on all three hosts

mysql> show status like '%rpl_semi_sync%';

+--------------------------------------------+-------+

| Variable_name | Value |

+--------------------------------------------+-------+

| Rpl_semi_sync_master_clients | 0 |

| Rpl_semi_sync_master_net_avg_wait_time | 0 |

| Rpl_semi_sync_master_net_wait_time | 0 |

| Rpl_semi_sync_master_net_waits | 0 |

| Rpl_semi_sync_master_no_times | 0 |

| Rpl_semi_sync_master_no_tx | 0 |

| Rpl_semi_sync_master_status | ON |

| Rpl_semi_sync_master_timefunc_failures | 0 |

| Rpl_semi_sync_master_tx_avg_wait_time | 0 |

| Rpl_semi_sync_master_tx_wait_time | 0 |

| Rpl_semi_sync_master_tx_waits | 0 |

| Rpl_semi_sync_master_wait_pos_backtraverse | 0 |

| Rpl_semi_sync_master_wait_sessions | 0 |

| Rpl_semi_sync_master_yes_tx | 0 |

| Rpl_semi_sync_slave_status | OFF |

+--------------------------------------------+-------+

15 rows in set (0.01 sec)

Several status variables are worth watching:

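rpl_semi_sync_master_status: whether the master is currently in asynchronous or semi-synchronous mode

rpl_semi_sync_master_clients: how many slaves are connected in semi-synchronous mode

rpl_semi_sync_master_yes_tx: number of commits successfully acknowledged by a slave

rpl_semi_sync_master_no_tx: number of commits not acknowledged by a slave

rpl_semi_sync_master_tx_avg_wait_time: average extra time a transaction waits because semi-sync is enabled

rpl_semi_sync_master_net_avg_wait_time: average time spent waiting on the network for slave replies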
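The two most important of these can be checked together: the master is really running semi-synchronously only if Rpl_semi_sync_master_status is ON and at least one semi-sync slave is connected. A minimal sketch; semi_sync_master_ok is a hypothetical helper that reads the tab-separated output of the mysql client in batch mode.

```shell
# semi_sync_master_ok: hypothetical helper. Reads the tab-separated output of
#   mysql -N -e "SHOW STATUS LIKE 'Rpl_semi_sync_master_%'"
# from stdin and exits 0 only when the master is in semi-synchronous mode
# AND at least one semi-sync slave is connected.
semi_sync_master_ok() {
    awk -F '\t' '
        $1 == "Rpl_semi_sync_master_status"  { status = $2 }
        $1 == "Rpl_semi_sync_master_clients" { clients = $2 + 0 }
        END { exit !(status == "ON" && clients >= 1) }
    '
}
```

On master1 right after the restart, the status is ON but clients is 0, so this check fails until the slaves are attached; after CHANGE MASTER and START SLAVE on master2 and slave, clients becomes 2 and the check succeeds.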

Create users and set up master-slave replication

master1

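The first grant command creates an account for master-slave replication; it only needs to be created on master1 and master2. The second grant command creates the MHA management account and must be run on every MySQL server. MHA logs in to the databases remotely with the account given in its configuration file, so the necessary privileges have to be granted.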

mysql> grant replication slave on *.* to mharep@'192.168.8.%' identified by '123';

Query OK, 0 rows affected, 1 warning (1.00 sec)

mysql> grant all privileges on *.* to manager@'192.168.8.%' identified by '123';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> show master status;

+------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+------------------+----------+--------------+------------------+-------------------+

| mysql-bin.000005 | 742 | | | |

+------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

master2

mysql> grant replication slave on *.* to mharep@'192.168.8.%' identified by '123';

Query OK, 0 rows affected, 1 warning (10.01 sec)

mysql> grant all privileges on *.* to manager@'192.168.8.%' identified by '123';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> change master to

-> master_host='192.168.8.30',

-> master_port=3306,

-> master_user='mharep',

-> master_password='123',

-> master_log_file='mysql-bin.000005',

-> master_log_pos=742;

Query OK, 0 rows affected, 2 warnings (0.01 sec)

mysql> start slave;

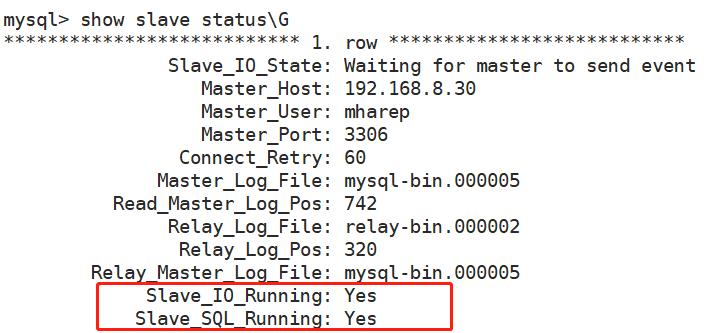

Query OK, 0 rows affected (0.00 sec)查看从(master2)的状态(切记不要搞混,master2也是从),以下两个值必须为yes,代表从服务器能正常连接主服务器 Slave_IO_Running:Yes,Slave_SQL_Running:Yes

slave

mysql> grant all privileges on *.* to manager@'192.168.8.%' identified by '123';

Query OK, 0 rows affected, 1 warning (0.04 sec)

mysql> change master to

-> master_host='192.168.8.30',

-> master_port=3306,

-> master_user='mharep',

-> master_password='123',

-> master_log_file='mysql-bin.000005',

-> master_log_pos=742;

Query OK, 0 rows affected, 2 warnings (0.01 sec)

mysql> start slave;

Query OK, 0 rows affected (0.01 sec)

Check the slave status. Both of the following values must be Yes, which means the slave can connect to the master normally: Slave_IO_Running: Yes, Slave_SQL_Running: Yes
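The Yes/Yes check can also be scripted, which is handy after every START SLAVE in this article. A minimal sketch; slave_threads_ok is a hypothetical helper that parses the vertical (\G) output of SHOW SLAVE STATUS.

```shell
# slave_threads_ok: hypothetical helper. Reads the output of
#   mysql -e 'SHOW SLAVE STATUS\G'
# from stdin and exits 0 only if both Slave_IO_Running and
# Slave_SQL_Running are "Yes".
slave_threads_ok() {
    awk -F ': ' '
        { gsub(/^[ \t]+/, "", $1) }       # strip the alignment padding
        $1 == "Slave_IO_Running"  { io  = $2 }
        $1 == "Slave_SQL_Running" { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}
```

For example: `mysql -e 'SHOW SLAVE STATUS\G' | slave_threads_ok && echo "replication OK"`.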

Check the semi-synchronous status on master1

mysql> show status like '%rpl_semi_sync%';

+--------------------------------------------+-------+

| Variable_name | Value |

+--------------------------------------------+-------+

| Rpl_semi_sync_master_clients | 2 |

| Rpl_semi_sync_master_net_avg_wait_time | 0 |

| Rpl_semi_sync_master_net_wait_time | 0 |

| Rpl_semi_sync_master_net_waits | 0 |

| Rpl_semi_sync_master_no_times | 1 |

| Rpl_semi_sync_master_no_tx | 2 |

| Rpl_semi_sync_master_status | ON |

| Rpl_semi_sync_master_timefunc_failures | 0 |

| Rpl_semi_sync_master_tx_avg_wait_time | 0 |

| Rpl_semi_sync_master_tx_wait_time | 0 |

| Rpl_semi_sync_master_tx_waits | 0 |

| Rpl_semi_sync_master_wait_pos_backtraverse | 0 |

| Rpl_semi_sync_master_wait_sessions | 0 |

| Rpl_semi_sync_master_yes_tx | 0 |

| Rpl_semi_sync_slave_status | OFF |

+--------------------------------------------+-------+

15 rows in set (0.01 sec)

Configure mysql-mha

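The manager needs both the node package and the manager package. The data nodes are the hosts of the existing MySQL replication topology; there must be at least three of them (one master and two slaves) so that a master-slave structure still exists after a master failover, and they only need the node package. The manager server runs the monitoring scripts and is responsible for monitoring and automatic failover.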

1. Install the packages MHA depends on, on all hosts.

[root@manager ~]# yum -y install perl-DBD-MySQL perl-Config-Tiny perl-Log-Dispatch perl-Parallel-ForkManager perl-Config-IniFiles ncftp perl-Params-Validate perl-CPAN perl-Test-Mock-LWP.noarch perl-LWP-Authen-Negotiate.noarch perl-devel perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker

2. The management node (manager) needs both mha4mysql-node-0.58.tar.gz and mha4mysql-manager-0.58.tar.gz; the other three database nodes only need the MHA node package.

Software download: yoshinorim (Yoshinori Matsunobu) · GitHub

Install mha4mysql-manager-0.58.tar.gz on the management node

[root@manager ~]# tar zxf mha4mysql-manager-0.58.tar.gz

[root@manager ~]# cd mha4mysql-manager-0.58/

[root@manager mha4mysql-manager-0.58]# perl Makefile.PL

[root@manager mha4mysql-manager-0.58]# make && make install

[root@manager mha4mysql-manager-0.58]# mkdir /etc/masterha

[root@manager mha4mysql-manager-0.58]# mkdir -p /masterha/app1

[root@manager mha4mysql-manager-0.58]# mkdir /scripts

[root@manager mha4mysql-manager-0.58]# cp samples/conf/* /etc/masterha/

[root@manager mha4mysql-manager-0.58]# cp samples/scripts/* /scripts

Note: install mha4mysql-node-0.58.tar.gz on all four servers (the three database servers and the management node).

[root@manager ~]# tar zxf mha4mysql-node-0.58.tar.gz

[root@manager ~]# cd mha4mysql-node-0.58/

[root@manager mha4mysql-node-0.58]# perl Makefile.PL

[root@manager mha4mysql-node-0.58]# make && make install

Configure MHA

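Like most Linux applications, MHA depends on a correct configuration file. MHA's configuration file is similar to MySQL's my.cnf and uses param=value pairs. It lives on the management node and usually contains each MySQL server's hostname, the MySQL user name and password, the working directory, and so on. Edit /etc/masterha/app1.cnf with the following content: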

[root@manager ~]# vim /etc/masterha/app1.cnf

[server default]

manager_workdir=/masterha/app1

manager_log=/masterha/app1/manager.log

user=manager

password=123

ssh_user=root

repl_user=mharep

repl_password=123

ping_interval=1

[server1]

hostname=192.168.8.30

port=3306

master_binlog_dir=/usr/local/mysql/data

candidate_master=1

[server2]

hostname=192.168.8.40

port=3306

master_binlog_dir=/usr/local/mysql/data

candidate_master=1

[server3]

hostname=192.168.8.50

port=3306

master_binlog_dir=/usr/local/mysql/data

no_master=1

The relevant configuration items are explained below:

manager_workdir=/masterha/app1 #working directory of the manager

manager_log=/masterha/app1/manager.log #log file of the manager

user=manager #monitoring user manager

password=123 #password of the monitoring user manager

ssh_user=root #SSH login user

repl_user=mharep #replication user

repl_password=123 #password of the replication user

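ping_interval=1 #interval in seconds between ping checks sent to the master (default 3); after three consecutive attempts without a response, MHA performs failover automatically

master_binlog_dir=/usr/local/mysql/data #where the master stores its binlogs, so that MHA can find them; here it is the MySQL data directory

candidate_master=1 #marks the host as a candidate master; when a failover happens, this slave is preferred for promotion to master

no_master=1 #this host is never promoted to master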
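The same app1.cnf can also be written non-interactively, which makes it easier to regenerate or keep under version control. A minimal sketch that reproduces the values used in this article; write_app1_cnf is a hypothetical helper, and the host list and credentials are hard-coded from the configuration above.

```shell
# write_app1_cnf: hypothetical helper that writes the app1.cnf shown above.
# Hosts, users and passwords are the values used in this article.
# Usage: write_app1_cnf /etc/masterha/app1.cnf
write_app1_cnf() {
    out=$1
    cat > "$out" <<'EOF'
[server default]
manager_workdir=/masterha/app1
manager_log=/masterha/app1/manager.log
user=manager
password=123
ssh_user=root
repl_user=mharep
repl_password=123
ping_interval=1
EOF
    n=1
    for host in 192.168.8.30 192.168.8.40 192.168.8.50; do
        printf '\n[server%d]\nhostname=%s\nport=3306\nmaster_binlog_dir=/usr/local/mysql/data\n' "$n" "$host" >> "$out"
        # The plain slave must never be promoted; the other two are candidates.
        if [ "$host" = 192.168.8.50 ]; then
            echo "no_master=1" >> "$out"
        else
            echo "candidate_master=1" >> "$out"
        fi
        n=$((n + 1))
    done
}
```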

Verification

SSH connectivity check

[root@manager ~]# masterha_check_ssh --global_conf=/etc/masterha/masterha_default.cnf --conf=/etc/masterha/app1.cnf

Tue Apr 4 13:44:58 2023 - [info] Reading default configuration from /etc/masterha/masterha_default.cnf..

Tue Apr 4 13:44:58 2023 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Tue Apr 4 13:44:58 2023 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Tue Apr 4 13:44:58 2023 - [info] Starting SSH connection tests..

Tue Apr 4 13:45:01 2023 - [debug]

Tue Apr 4 13:44:59 2023 - [debug] Connecting via SSH from root@192.168.8.50(192.168.8.50:22) to root@192.168.8.30(192.168.8.30:22)..

Tue Apr 4 13:45:00 2023 - [debug] ok.

Tue Apr 4 13:45:00 2023 - [debug] Connecting via SSH from root@192.168.8.50(192.168.8.50:22) to root@192.168.8.40(192.168.8.40:22)..

Tue Apr 4 13:45:00 2023 - [debug] ok.

Tue Apr 4 13:45:01 2023 - [debug]

Tue Apr 4 13:44:59 2023 - [debug] Connecting via SSH from root@192.168.8.40(192.168.8.40:22) to root@192.168.8.30(192.168.8.30:22)..

Tue Apr 4 13:44:59 2023 - [debug] ok.

Tue Apr 4 13:44:59 2023 - [debug] Connecting via SSH from root@192.168.8.40(192.168.8.40:22) to root@192.168.8.50(192.168.8.50:22)..

Tue Apr 4 13:45:00 2023 - [debug] ok.

Tue Apr 4 13:45:01 2023 - [debug]

Tue Apr 4 13:44:58 2023 - [debug] Connecting via SSH from root@192.168.8.30(192.168.8.30:22) to root@192.168.8.40(192.168.8.40:22)..

Tue Apr 4 13:44:59 2023 - [debug] ok.

Tue Apr 4 13:44:59 2023 - [debug] Connecting via SSH from root@192.168.8.30(192.168.8.30:22) to root@192.168.8.50(192.168.8.50:22)..

Tue Apr 4 13:45:00 2023 - [debug] ok.

Tue Apr 4 13:45:01 2023 - [info] All SSH connection tests passed successfully.

Replication check of the cluster

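All MySQL instances must be running. If the check succeeds, it automatically identifies all servers and the replication topology. If the check fails with the error Can't exec "mysqlbinlog" ......, the fix is to run the following on all servers: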

ln -s /usr/local/mysql/bin/* /usr/local/bin/

[root@manager ~]# masterha_check_repl --global_conf=/etc/masterha/masterha_default.cnf --conf=/etc/masterha/app1.cnf

Tue Apr 4 13:48:03 2023 - [info] Reading default configuration from /etc/masterha/masterha_default.cnf..

Tue Apr 4 13:48:03 2023 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Tue Apr 4 13:48:03 2023 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Tue Apr 4 13:48:03 2023 - [info] MHA::MasterMonitor version 0.58.

Tue Apr 4 13:48:04 2023 - [info] GTID failover mode = 0

Tue Apr 4 13:48:04 2023 - [info] Dead Servers:

Tue Apr 4 13:48:04 2023 - [info] Alive Servers:

Tue Apr 4 13:48:04 2023 - [info] 192.168.8.30(192.168.8.30:3306)

Tue Apr 4 13:48:04 2023 - [info] 192.168.8.40(192.168.8.40:3306)

Tue Apr 4 13:48:04 2023 - [info] 192.168.8.50(192.168.8.50:3306)

Tue Apr 4 13:48:04 2023 - [info] Alive Slaves:

Tue Apr 4 13:48:04 2023 - [info] 192.168.8.40(192.168.8.40:3306) Version=5.7.40-log (oldest major version between slaves) log-bin:enabled

Tue Apr 4 13:48:04 2023 - [info] Replicating from 192.168.8.30(192.168.8.30:3306)

Tue Apr 4 13:48:04 2023 - [info] Primary candidate for the new Master (candidate_master is set)

Tue Apr 4 13:48:04 2023 - [info] 192.168.8.50(192.168.8.50:3306) Version=5.7.40-log (oldest major version between slaves) log-bin:enabled

Tue Apr 4 13:48:04 2023 - [info] Replicating from 192.168.8.30(192.168.8.30:3306)

Tue Apr 4 13:48:04 2023 - [info] Not candidate for the new Master (no_master is set)

Tue Apr 4 13:48:04 2023 - [info] Current Alive Master: 192.168.8.30(192.168.8.30:3306)

Tue Apr 4 13:48:04 2023 - [info] Checking slave configurations..

Tue Apr 4 13:48:04 2023 - [info] read_only=1 is not set on slave 192.168.8.40(192.168.8.40:3306).

Tue Apr 4 13:48:04 2023 - [warning] relay_log_purge=0 is not set on slave 192.168.8.50(192.168.8.50:3306).

Tue Apr 4 13:48:04 2023 - [info] Checking replication filtering settings..

Tue Apr 4 13:48:04 2023 - [info] binlog_do_db= , binlog_ignore_db=

Tue Apr 4 13:48:04 2023 - [info] Replication filtering check ok.

Tue Apr 4 13:48:04 2023 - [info] GTID (with auto-pos) is not supported

Tue Apr 4 13:48:04 2023 - [info] Starting SSH connection tests..

Tue Apr 4 13:48:07 2023 - [info] All SSH connection tests passed successfully.

Tue Apr 4 13:48:07 2023 - [info] Checking MHA Node version..

Tue Apr 4 13:48:07 2023 - [info] Version check ok.

Tue Apr 4 13:48:07 2023 - [info] Checking SSH publickey authentication settings on the current master..

Tue Apr 4 13:48:08 2023 - [info] HealthCheck: SSH to 192.168.8.30 is reachable.

Tue Apr 4 13:48:08 2023 - [info] Master MHA Node version is 0.58.

Tue Apr 4 13:48:08 2023 - [info] Checking recovery script configurations on 192.168.8.30(192.168.8.30:3306)..

Tue Apr 4 13:48:08 2023 - [info] Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/usr/local/mysql/data --output_file=/data/log/masterha/save_binary_logs_test --manager_version=0.58 --start_file=mysql-bin.000005

Tue Apr 4 13:48:08 2023 - [info] Connecting to root@192.168.8.30(192.168.8.30:22)..

Creating /data/log/masterha if not exists.. ok.

Checking output directory is accessible or not..

ok.

Binlog found at /usr/local/mysql/data, up to mysql-bin.000005

Tue Apr 4 13:48:08 2023 - [info] Binlog setting check done.

Tue Apr 4 13:48:08 2023 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..

Tue Apr 4 13:48:08 2023 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='manager' --slave_host=192.168.8.40 --slave_ip=192.168.8.40 --slave_port=3306 --workdir=/data/log/masterha --target_version=5.7.40-log --manager_version=0.58 --relay_log_info=/usr/local/mysql/data/relay-log.info --relay_dir=/usr/local/mysql/data/ --slave_pass=xxx

Tue Apr 4 13:48:08 2023 - [info] Connecting to root@192.168.8.40(192.168.8.40:22)..

Creating directory /data/log/masterha.. done.

Checking slave recovery environment settings..

Opening /usr/local/mysql/data/relay-log.info ... ok.

Relay log found at /usr/local/mysql/data, up to relay-bin.000002

Temporary relay log file is /usr/local/mysql/data/relay-bin.000002

Checking if super_read_only is defined and turned on.. not present or turned off, ignoring.

Testing mysql connection and privileges..

mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Tue Apr 4 13:48:09 2023 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='manager' --slave_host=192.168.8.50 --slave_ip=192.168.8.50 --slave_port=3306 --workdir=/data/log/masterha --target_version=5.7.40-log --manager_version=0.58 --relay_log_info=/usr/local/mysql/data/relay-log.info --relay_dir=/usr/local/mysql/data/ --slave_pass=xxx

Tue Apr 4 13:48:09 2023 - [info] Connecting to root@192.168.8.50(192.168.8.50:22)..

Creating directory /data/log/masterha.. done.

Checking slave recovery environment settings..

Opening /usr/local/mysql/data/relay-log.info ... ok.

Relay log found at /usr/local/mysql/data, up to relay-bin.000002

Temporary relay log file is /usr/local/mysql/data/relay-bin.000002

Checking if super_read_only is defined and turned on.. not present or turned off, ignoring.

Testing mysql connection and privileges..

mysql: [Warning] Using a password on the command line interface can be insecure.

done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Tue Apr 4 13:48:09 2023 - [info] Slaves settings check done.

Tue Apr 4 13:48:09 2023 - [info]

192.168.8.30(192.168.8.30:3306) (current master)

+--192.168.8.40(192.168.8.40:3306)

+--192.168.8.50(192.168.8.50:3306)

Tue Apr 4 13:48:09 2023 - [info] Checking replication health on 192.168.8.40..

Tue Apr 4 13:48:09 2023 - [info] ok.

Tue Apr 4 13:48:09 2023 - [info] Checking replication health on 192.168.8.50..

Tue Apr 4 13:48:09 2023 - [info] ok.

Tue Apr 4 13:48:09 2023 - [warning] master_ip_failover_script is not defined.

Tue Apr 4 13:48:09 2023 - [warning] shutdown_script is not defined.

Tue Apr 4 13:48:09 2023 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

Start the manager

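Note: on Unix/Linux, when we want a program to keep running in the background, we usually append & to the command. For example, to run MySQL in the background: /usr/local/mysql/bin/mysqld_safe --user=mysql &. However, many programs are not like mysqld in this respect, and a plain & job may be terminated when the login session ends, so we use the nohup command.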
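The nohup pattern can be wrapped so that the manager's PID is recorded as well, which makes it easy to check or stop the process later. A minimal sketch; start_detached is a hypothetical helper, and the PID file location is an assumption of this example.

```shell
# start_detached: hypothetical helper wrapping the nohup pattern.
# nohup makes the process ignore SIGHUP, so it keeps running after the
# login shell exits. Output goes to LOGFILE, the PID is saved to PIDFILE.
# Usage: start_detached /tmp/mha_manager.log /tmp/mha_manager.pid \
#            masterha_manager --conf=/etc/masterha/app1.cnf
start_detached() {
    logfile=$1
    pidfile=$2
    shift 2
    nohup "$@" > "$logfile" 2>&1 &
    # $! is the PID of the background job just started.
    echo $! > "$pidfile"
}
```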

[root@manager mha4mysql-manager-0.58]# cd /masterha/

[root@manager masterha]# nohup masterha_manager --conf=/etc/masterha/app1.cnf &> /tmp/mha_manager.log &

[2] 99442

Check the status

[root@manager masterha]# masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:99277) is running(0:PING_OK), master:192.168.8.30

Failover verification

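(Automatic failover) If the master dies while MHA is running, master2 (a slave) is automatically promoted to master. To verify this, stop master1 first. Because the configuration file above made master2 a candidate, check on slave whether its master IP has changed to master2's IP.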

1. Stop the master: stop MySQL on master1

[root@master1 ~]# systemctl stop mysqld

2. Check the MHA log. The configuration file above sets the log location to /masterha/app1/manager.log

[root@manager ~]# cat /masterha/app1/manager.log

----- Failover Report -----

app1: MySQL Master failover 192.168.8.30(192.168.8.30:3306) to 192.168.8.40(192.168.8.40:3306) succeeded

Master 192.168.8.30(192.168.8.30:3306) is down!

Check MHA Manager logs at manager:/masterha/app1/manager.log for details.

Started automated(non-interactive) failover.

The latest slave 192.168.8.40(192.168.8.40:3306) has all relay logs for recovery.

Selected 192.168.8.40(192.168.8.40:3306) as a new master.

192.168.8.40(192.168.8.40:3306): OK: Applying all logs succeeded.

192.168.8.50(192.168.8.50:3306): This host has the latest relay log events.

Generating relay diff files from the latest slave succeeded.

192.168.8.50(192.168.8.50:3306): OK: Applying all logs succeeded. Slave started, replicating from 192.168.8.40(192.168.8.40:3306)

192.168.8.40(192.168.8.40:3306): Resetting slave info succeeded.

Master failover to 192.168.8.40(192.168.8.40:3306) completed successfully.

The log shows that the master failover succeeded and gives an outline of the failover process.
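When failover is monitored by a script, the new master can be extracted from the manager log instead of being read by eye. A minimal sketch; failover_new_master is a hypothetical helper that relies on the format of the "Master failover to ... completed successfully." line shown in the report above.

```shell
# failover_new_master: hypothetical helper that scans an MHA manager log
# (stdin) for "Master failover to <ip>(...) completed successfully." and
# prints the new master's IP from the last such line. Prints nothing if
# no successful failover is recorded.
failover_new_master() {
    sed -n 's/^Master failover to \([0-9.]*\)(.*completed successfully.*/\1/p' | tail -n 1
}
```

For the report above, `failover_new_master < /masterha/app1/manager.log` would print 192.168.8.40.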

3. Check replication on slave: log in to MySQL on slave (192.168.8.50) and check the slave status

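The master IP is now 192.168.8.40: slave, which previously replicated from 192.168.8.30, now replicates from 192.168.8.40. This shows that MHA has promoted master2 to be the new master, and the I/O and SQL threads are running correctly, so the MHA setup works.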
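To confirm the switch from a script, read Master_Host from the slave's status output and compare it with the expected new master. A minimal sketch; slave_master_host is a hypothetical helper.

```shell
# slave_master_host: hypothetical helper that prints the Master_Host field
# from the output of  mysql -e 'SHOW SLAVE STATUS\G'  read on stdin.
slave_master_host() {
    awk -F ': ' '
        { gsub(/^[ \t]+/, "", $1) }       # strip the alignment padding
        $1 == "Master_Host" { print $2 }
    '
}
```

On slave (192.168.8.50), `mysql -e 'SHOW SLAVE STATUS\G' | slave_master_host` should print 192.168.8.40 after the failover described above.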

Routine operations on the MHA Manager

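1. Check whether the following files exist, and delete them if they do. After a master switchover, the MHA manager service stops automatically and creates the file app1.failover.complete in manager_workdir (/masterha/app1). Before MHA can be started again, this file must be removed.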
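A minimal sketch of this check-and-delete step, assuming the two marker file names listed in this step (app1.failover.complete and app1.failover.error) and the workdir /masterha/app1; clear_failover_flags is a hypothetical helper that deletes only those two files.

```shell
# clear_failover_flags: hypothetical helper that removes the failover
# marker files from the manager workdir so masterha_manager can start
# again. It prints each file it removes.
# Usage: clear_failover_flags /masterha/app1 app1
clear_failover_flags() {
    workdir=$1
    app=$2
    for f in "$workdir/$app.failover.complete" "$workdir/$app.failover.error"; do
        if [ -e "$f" ]; then
            rm -f "$f" && echo "removed $f"
        fi
    done
}
```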

After a master switchover, delete the following two files.

ll /masterha/app1/app1.failover.complete

ll /masterha/app1/app1.failover.error

2. Check MHA replication (the old master must first be configured as a slave of the candidate master, which is now the master). On master1:

mysql> change master to

-> master_host='192.168.8.40',

-> master_port=3306,

-> master_user='mharep',

-> master_password='123',

-> master_log_file='mysql-bin.000007',

-> master_log_pos=154;

Query OK, 0 rows affected, 2 warnings (0.03 sec)

mysql> start slave;

Query OK, 0 rows affected (0.01 sec)

3. Log in to the manager

[root@manager ~]# masterha_check_repl --conf=/etc/masterha/app1.cnf

# some output omitted

Thu Apr 6 19:58:27 2023 - [info] Checking replication health on 192.168.8.30..

Thu Apr 6 19:58:27 2023 - [info] ok.

Thu Apr 6 19:58:27 2023 - [info] Checking replication health on 192.168.8.50..

Thu Apr 6 19:58:27 2023 - [info] ok.

Thu Apr 6 19:58:27 2023 - [warning] master_ip_failover_script is not defined.

Thu Apr 6 19:58:27 2023 - [warning] shutdown_script is not defined.

Thu Apr 6 19:58:27 2023 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

4. Stop MHA:

[root@manager ~]# masterha_stop --conf=/etc/masterha/app1.cnf

Stopped app1 successfully.

5. Start MHA:

[root@manager ~]# nohup masterha_manager --conf=/etc/masterha/app1.cnf &>/tmp/mha_manager.log &

[1] 101196

When a slave node is down, MHA cannot be started by default. Adding --ignore_fail_on_start lets MHA start even if a node is down:

nohup masterha_manager --conf=/etc/masterha/app1.cnf --ignore_fail_on_start &>/tmp/mha_manager.log &

6. Check the status:

[root@manager ~]# masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:101303) is running(0:PING_OK), master:192.168.8.40

7. Check the log:

[root@manager ~]# tail -f /masterha/app1/manager.log

8. Follow-up work after a master switchover

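Rebuilding: when the master fails, MHA switches over to the candidate master, which becomes the new master. One way to rebuild is therefore to repair the original master and turn it into a new slave of the new master, then repeat steps 1 to 5 above.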

Log in to master1

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.8.40

Master_User: mharep

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000007

Read_Master_Log_Pos: 154

Relay_Log_File: relay-bin.000002

Relay_Log_Pos: 320

Relay_Master_Log_File: mysql-bin.000007

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Start the manager

[root@manager ~]# nohup masterha_manager --conf=/etc/masterha/app1.cnf &> /tmp/mha_manager.log &

[root@manager ~]# masterha_check_status --conf=/etc/masterha/app1.cnf

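app1 (pid:101303) is running(0:PING_OK), master:192.168.8.40

Note: if everything is normal, the output shows "PING_OK"; otherwise it shows "NOT_RUNNING", which means MHA monitoring is not running.

Purge relay logs periodically

Because relay_log_purge=0 is set in the replication configuration, relay logs are no longer deleted automatically, so each slave node has to purge them periodically. It is advisable to stagger the purge times of the slave nodes. (In this article relay_log_purge=0 is set in the my.cnf of master1 and master2 but not of slave, which is why masterha_check_repl warned about 192.168.8.50.)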

[root@slave ~]# crontab -e

0 5 * * * /usr/local/bin/purge_relay_logs --user=root --password=123 --port=3306 --disable_relay_log_purge >> /var/log/purge_relay.log 2>&1
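Since the purge jobs should not run at the same moment on every slave, their start times can be offset per host. A minimal sketch; purge_cron_lines is a hypothetical helper that only prints one crontab line per host, reusing the options of the entry above. Each line is meant to be installed in that host's own crontab, because purge_relay_logs works on the relay logs of the local MySQL instance.

```shell
# purge_cron_lines: hypothetical helper that prints one crontab entry per
# host, starting at 05:00 and staggering each host by STEP minutes.
# Each output line is prefixed with "host: "; drop the prefix when pasting
# the entry into that host's crontab.
# Usage: purge_cron_lines 10 master2 slave
purge_cron_lines() {
    step=$1
    shift
    i=0
    for host in "$@"; do
        total=$((i * step))            # minutes after 05:00
        hour=$((5 + total / 60))
        min=$((total % 60))
        printf '%s: %d %d * * * /usr/local/bin/purge_relay_logs --user=root --password=123 --port=3306 --disable_relay_log_purge >> /var/log/purge_relay.log 2>&1\n' "$host" "$min" "$hour"
        i=$((i + 1))
    done
}
```

For example, `purge_cron_lines 10 master2 slave` prints a 05:00 entry for master2 and a 05:10 entry for slave.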