How to deploy your scikit-learn model to AWS

This post shows how to deploy a scikit-learn machine learning model using serverless services. Unlike a dedicated server, a serverless setup lets us keep the model deployed while paying only for the time it is actually used.

Requirements

Before going further, you will need the following:

- An AWS account
- AWS CLI
- A GitHub account
- Python 3.7 or greater
- A scikit-learn model trained and serialized together with its preprocessing step (using pickle)

了解解决方案的架构 (Understanding the solution’s architecture)

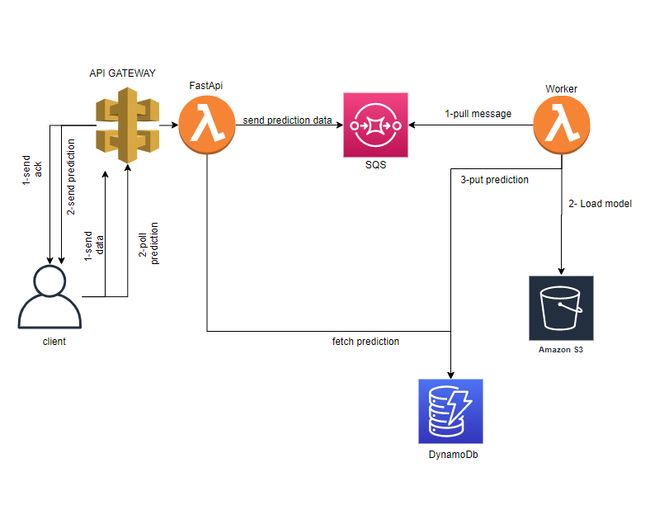

First, let’s see from a global point of view the application we are going to deploy.

首先,让我们从全局的角度来看待部署的应用程序。

The architecture to be deployed source: author 待部署的体系结构源:作者Lots of tutorials on how to deploy a model in production directly integrates the serialized model into the API. This way of proceeding has the disadvantage of making the API coupled to the model. Another way to do this is to delegate the prediction load to workers. This is what the schema above shows.

有关如何在生产中部署模型的大量教程将序列化模型直接集成到API中。 这种进行方式的缺点是使API与模型耦合。 做到这一点的另一种方法是将预测负荷委托给工人。 这就是上面的架构所显示的。

The machine learning model is stored in an S3 bucket. The worker, a Lambda function, loads it when the client puts a message containing prediction data in the SQS queue through the API Gateway/Lambda REST endpoint.

When the worker has finished the prediction job, it writes the result to a DynamoDB table. The client then requests the prediction result through an API endpoint that reads the DynamoDB table.

As you can see, we delegate loading and prediction to a worker instead of integrating the model into the REST API, because a model can take a long time to load and predict. The SQS queue and the DynamoDB table let us handle these jobs asynchronously.
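To make the asynchronous flow concrete, here is a minimal in-memory sketch. It does not talk to AWS: a queue.Queue stands in for the SQS queue, a plain dict stands in for the DynamoDB table, and fake_model_predict is a hypothetical placeholder for the model loaded from S3. All function names are assumptions made for illustration.

```python
import queue
import uuid

# In-memory stand-ins (assumptions for illustration only):
# - jobs plays the role of the SQS queue
# - results plays the role of the DynamoDB table
jobs = queue.Queue()
results = {}


def submit_prediction(payload):
    """API side: enqueue the job and return an ID right away."""
    prediction_id = str(uuid.uuid4())
    jobs.put({"prediction_id": prediction_id, "payload": payload})
    return prediction_id


def fake_model_predict(payload):
    """Hypothetical placeholder for the (slow) model loaded from S3."""
    return 10.0 * payload["guests"]


def run_worker_once():
    """Worker side: take one job, predict, store the result."""
    job = jobs.get()
    results[job["prediction_id"]] = fake_model_predict(job["payload"])


def fetch_result(prediction_id):
    """API side: return the stored result, or None if it is not ready."""
    return results.get(prediction_id)


prediction_id = submit_prediction({"guests": 2})
print(fetch_result(prediction_id))  # None: the worker has not run yet
run_worker_once()
print(fetch_result(prediction_id))  # 20.0
```

The point of the design is visible in the two prints: the API can answer immediately with an ID, and the client polls for the result once the worker has done the slow part.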

How is the infrastructure deployed?

It is possible to configure the infrastructure via the AWS console, but here we will use a GitHub action that I wrote. It lets us deploy a model without having to manage the deployment of the infrastructure, except for the S3 bucket.

The GitHub action uses the serverless framework under the hood to package the FastAPI REST API and the prediction worker, and it takes care of deploying the underlying infrastructure.

We will not discuss here how to write GitHub actions or how to use the serverless framework.

Setting up the project

I will use poetry to set up the project structure and manage dependencies. Feel free to adapt the steps if you prefer pip with a virtual environment.

1. Create a new project with poetry new, followed by the name of your project.
2. cd to the created folder.
3. Run the following command to install the project's dependencies:

       poetry add pandas scikit-learn pydantic typer boto3

4. Create an app.py file in the root directory.

Now that the project is set up, let's do the same for the S3 bucket that will store our model.

Building a small CLI to set up s3

For the sake of reproducibility, we will write a simple CLI with Typer, which is built on top of Click. If you are not familiar with CLIs, I've written a post that covers some basic concepts for creating one.

Let's see the code to build the CLI.

    import os

    import boto3
    import typer

    app = typer.Typer()


    @app.command()
    def create_bucket(name: str):
        """Create an S3 bucket with versioning enabled."""
        try:
            s3 = boto3.resource("s3")
            s3.Bucket(name).create()
            versioning = s3.BucketVersioning(name)
            versioning.enable()
            typer.echo(f"bucket {name} created")
        except Exception as e:
            typer.echo(f"error : {e}")


    @app.command()
    def delete_bucket(name: str):
        """Delete an S3 bucket."""
        try:
            s3 = boto3.resource("s3")
            s3.Bucket(name).delete()
            typer.echo(f"bucket {name} deleted")
        except Exception as e:
            typer.echo(f"error : {e}")


    @app.command()
    def upload(name: str, model: str, tag: str = typer.Option(...)):
        """Upload a model file to the bucket and tag the new version."""
        try:
            key = os.path.basename(model)
            s3 = boto3.client("s3")
            s3.upload_file(model, name, key)
            typer.echo(f"{model} uploaded")
            # Restrict the lookup to this key so that the latest version of
            # another object in the bucket cannot be picked by mistake.
            objects = s3.list_object_versions(Bucket=name, Prefix=key)
            latest = [
                obj
                for obj in objects["Versions"]
                if obj["Key"] == key and obj["IsLatest"]
            ].pop()
            version_id = latest["VersionId"]
            s3.put_object_tagging(
                Bucket=name,
                Key=key,
                Tagging={
                    "TagSet": [
                        {
                            "Key": "Version",
                            "Value": tag,
                        }
                    ],
                },
                VersionId=version_id,
            )
        except Exception as e:
            typer.echo(f"error : {e}")


    @app.command()
    def list_objects(name: str):
        """List every object version in the bucket with its tags."""
        try:
            client = boto3.client("s3")
            objects = client.list_object_versions(Bucket=name)
            for version in objects["Versions"]:
                key = version["Key"]
                version_id = version["VersionId"]
                response = client.get_object_tagging(
                    Bucket=name, Key=key, VersionId=version_id
                )
                typer.echo(
                    f"Key: {key} VersionId: {version_id} IsLatest: {version['IsLatest']} LastModified: {version['LastModified']} Tag: {response['TagSet']}"
                )
        except Exception as e:
            typer.echo(f"error : {e}")


    @app.command()
    def update_lambda(func_name: str, version_id: str):
        """Point the Lambda worker at another version of the model."""
        try:
            client = boto3.client("lambda")
            response = client.get_function_configuration(
                FunctionName=func_name,
            )
            # Start from the existing variables so that only MODEL_VERSION changes.
            old_env = response["Environment"]["Variables"]
            old_env["MODEL_VERSION"] = version_id
            response = client.update_function_configuration(
                FunctionName=func_name,
                Environment={"Variables": old_env},
            )
            if response["ResponseMetadata"]["HTTPStatusCode"] == 200:
                typer.echo("Updated")
            else:
                raise ValueError(response)
        except Exception as e:
            typer.echo(f"error : {e}")

The first two commands create and delete an S3 bucket. Note that the bucket is created with versioning enabled. Versioning lets us upload different versions of the same model and then update the worker to serve the version of our choice.

The third command uploads our model to the newly created bucket. The fourth command lists all the files in the bucket along with their version IDs, which we need in order to update the worker.

Finally, the last command updates the version ID of the model served by the API.
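With versioning enabled, every upload under the same key creates a new version, and one version per key is flagged as the latest. The sketch below shows how a version ID can be selected from a response shaped like the one returned by list_object_versions. The helper name latest_version_id and the sample keys and IDs are hypothetical; the sample is hand-written, not real AWS output.

```python
def latest_version_id(response, key):
    """Return the VersionId flagged IsLatest for `key`, or None."""
    for version in response.get("Versions", []):
        if version["Key"] == key and version["IsLatest"]:
            return version["VersionId"]
    return None


# Hand-written sample shaped like a list_object_versions response
# (hypothetical keys and IDs, for illustration only).
sample = {
    "Versions": [
        {"Key": "model.pickle", "VersionId": "v2", "IsLatest": True},
        {"Key": "model.pickle", "VersionId": "v1", "IsLatest": False},
        {"Key": "other.pickle", "VersionId": "x9", "IsLatest": True},
    ]
}

print(latest_version_id(sample, "model.pickle"))  # v2
```

Because a bucket can hold several keys, filtering on both the key and the IsLatest flag avoids tagging or serving the wrong object.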

Now, let's add the CLI to our poetry scripts. Open the pyproject.toml file and add the following lines at the bottom:

    [tool.poetry.scripts]
    cli = ".app:app"

Then test that everything works with poetry run cli. You should see something like:

    Usage: cli [OPTIONS] COMMAND [ARGS]...

    Options:
      --install-completion [bash|zsh|fish|powershell|pwsh]
                                      Install completion for the specified shell.
      --show-completion [bash|zsh|fish|powershell|pwsh]
                                      Show completion for the specified shell, to
                                      copy it or customize the installation.
      --help                          Show this message and exit.

    Commands:
      create-bucket
      delete-bucket
      list-objects
      upload
      update-lambda

Finally, let's use the CLI to create our bucket, upload our model, and get its version ID. To do so, type the following commands:

1. poetry run cli create-bucket NAME
2. poetry run cli upload NAME MODEL --tag 1.0
3. poetry run cli list-objects NAME

Here NAME is the bucket name and MODEL is the path to the serialized model, matching the arguments of the commands defined in app.py. The last command should print something like the following output:

    Key: .pickle
    VersionId: 11j3aXER32C7exuanDNKvOea0FxJwXg
    IsLatest: True
    LastModified: 2020-09-11 09:38:46+00:00
    Tag: [{'Key': 'Version', 'Value': '1.0'}]

Now that our model is stored on S3, we need to define a class that maps our data schema.

创建验证架构 (Creating the validation schema)

As the GitHub action uses FastApi under the hood, we need to provide a class for the post method that will handle the data the client will submit to prediction. Thanks to the definition of that class, FastApi will handle the validation of the submitted data for us.

由于GitHub动作在后台使用FastApi,因此我们需要为post方法提供一个类,该类将处理客户端将提交给预测的数据。 由于该类的定义,FastApi将为我们处理提交的数据的验证。

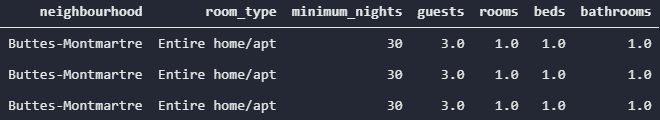

Create a validation.py in the root folder, your class must respect the order, names as well as the data type of the raw data you used to train your model. For instance, my raw data look like this :

在根文件夹中创建一个validate.py ,您的类必须遵守用于训练模型的原始数据的顺序,名称和数据类型。 例如,我的原始数据如下所示:

Example of raw data source: author 原始数据源示例:作者And here is my corresponding validation.py file with the PredictionData class:

这是我与PredictionData类对应的validation.py文件:

    from pydantic import BaseModel


    class PredictionData(BaseModel):
        """Validation class for FastApi

        Attributes must match:
        1- names of the source data
        2- order of the source data
        3- type of the source data
        """

        neighbourhood: str
        room_type: str
        minimum_nights: float
        guests: float
        rooms: float
        beds: float
        bathrooms: float

Note: The class must be named PredictionData.

Next, we will write some tests to ensure that our validation class and our model can handle data in the shape in which it will be posted through the endpoint.

Testing the model

Our test suite will be written with pytest. We need to install it as a dev dependency with the following command: poetry add pytest --dev

In the tests folder, create a test_model.py file and add the following tests:

    import pickle
    from typing import Any, Dict

    import numpy as np  # handy if your prediction type is a numpy type
    import pandas as pd
    import pytest
    from sklearn.pipeline import Pipeline

    from sklearn_deploy import __version__
    from validation import PredictionData


    def test_version():
        assert __version__ == "0.1.0"


    @pytest.fixture
    def model() -> Any:
        """Load the model to be injected in tests"""
        with open(".pickle", "rb") as f:
            model = pickle.load(f)
        return model


    @pytest.fixture()
    def raw_data() -> Dict:
        """Data as it will be posted through the API endpoint for prediction"""
        return {
            "neighbourhood": "Buttes-Montmartre",
            "room_type": "Entire home/apt",
            "minimum_nights": 1.555,
            "guests": 2.5,
            "rooms": 1,
            "beds": 1,
            "bathrooms": 1,
        }


    def test_init_prediction_data(raw_data):
        """Assert that the PredictionData declared can init with the raw_data"""
        prediction_data = PredictionData(**raw_data)
        assert prediction_data


    def test_is_pipeline(model):
        """Assert that the loaded model is a sklearn Pipeline"""
        assert isinstance(model, Pipeline)


    def test_prediction(model, raw_data):
        """Assert that the model can predict the raw_data"""
        data = PredictionData(**raw_data)
        # Wrap each value in a list to build a one-row DataFrame.
        formatted_data = {key: [value] for key, value in data.dict().items()}
        df = pd.DataFrame(formatted_data)
        res = model.predict(df)[0]
        # Complete this assertion with your model's prediction type (see the note below).
        assert type(res) ==

The test suite first ensures that the validation class we wrote can be initialized with the raw data. Then it tests that the model is an instance of the scikit-learn Pipeline class. Finally, it makes sure that our model can predict the raw data.

Note: Don't forget to change the path of your model in the model fixture, as well as your raw data and the prediction data type at the bottom of the prediction test.

Now, launch the test suite with poetry run pytest -v. If everything went fine, you should see the following output:

    tests/test_model.py::test_version PASSED                 [ 25%]
    tests/test_model.py::test_init_prediction_data PASSED    [ 50%]
    tests/test_model.py::test_is_pipeline PASSED             [ 75%]
    tests/test_model.py::test_prediction PASSED              [100%]

Next, we will write a configuration file in order to build a simple web application with a form to post the data to be predicted.

Writing the web app form

Users need to interact with our model. To make that possible, we will build a simple form that lets them post data, without building a web application from scratch.

We're going to use a GitHub action I wrote that uses vue-form-generator under the hood to produce a form from a config file. Create a form.yml file in the root directory of the project.

    # make use of vue form generator for the schema property
    # https://vue-generators.gitbook.io/vue-generators/
    title: "'AIRBNBMLV1'"
    units: "'$/night'"
    home:
      - title: About
        subtitle: Informations
        content: Side project to deploy machine learning model to AWS lambda
    schema:
      fields:
        - type: select
          label: Neighbourhood
          model: neighbourhood
          required: "true"
          validator:
            - required
          values:
            - Entrepôt
            - Hôtel-de-Ville
            - Opéra
            - Ménilmontant
            - Louvre
            - Popincourt
            - Buttes-Montmartre
            - Élysée
            - Panthéon
            - Gobelins
            - Luxembourg
            - Buttes-Chaumont
            - Palais-Bourbon
            - Reuilly
            - Bourse
            - Vaugirard
            - Batignolles-Monceau
            - Observatoire
            - Temple
            - Passy
        - type: select
          label: Room type
          model: room_type
          required: "true"
          validator:
            - required
          values:
            - Entire home/apt
            - Private room
            - Shared room
            - Hotel room
        - type: input
          inputType: number
          label: Minimum night
          model: minimum_nights
          required: "true"
          validator:
            - required
        - type: input
          inputType: number
          label: Guests
          required: "true"
          validator:
            - required
          model: guests
        - type: input
          inputType: number
          label: Bedrooms
          required: "true"
          validator:
            - required
          model: rooms
        - type: input
          inputType: number
          required: "true"
          label: Beds
          validator:
            - required
          model: beds
        - type: input
          inputType: number
          required: "true"
          label: Bath rooms
          validator:
            - required
          model: bathrooms

At the beginning of the file, we have a first block that contains 3 keys:

- title: a string representing the title of the web app
- units: a string representing the unit of the prediction result
- home: a list of objects whose content will be displayed inside a box on the home page

Note: For string variables, you need to add single quotes around the string.

Next, we have the fields property under the schema key, which is a list of objects. For each object, we define a type. For instance, in my form.yml I used a select with a set of provided values for the user to choose from. Check the documentation of vue-form-generator to see all the options available for the input you want, in order to map your data fields.

Notes: Make sure to define the fields in the same order as you defined them in your validation.py. The model key maps the form input to the data field, so make sure that both match.
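Since the form fields and the validation class have to agree on names and order, a small check can catch mismatches before deployment. The sketch below is a hypothetical helper, not part of the GitHub action: it assumes the fields list from form.yml has already been loaded into Python dictionaries and that the attribute names of PredictionData are available as a list.

```python
def form_mismatches(form_fields, schema_names):
    """Return a list of readable mismatches (empty when everything matches)."""
    form_names = [field["model"] for field in form_fields]
    problems = []
    if len(form_names) != len(schema_names):
        problems.append(
            f"{len(form_names)} form fields vs {len(schema_names)} schema attributes"
        )
    for position, (form_name, schema_name) in enumerate(zip(form_names, schema_names)):
        if form_name != schema_name:
            problems.append(
                f"position {position}: form has {form_name!r}, schema has {schema_name!r}"
            )
    return problems


# Field names taken from the form.yml and validation.py shown in this post.
form_fields = [
    {"model": "neighbourhood"},
    {"model": "room_type"},
    {"model": "minimum_nights"},
    {"model": "guests"},
    {"model": "rooms"},
    {"model": "beds"},
    {"model": "bathrooms"},
]
schema_names = [
    "neighbourhood", "room_type", "minimum_nights",
    "guests", "rooms", "beds", "bathrooms",
]

print(form_mismatches(form_fields, schema_names))  # []
```

Running such a check in the test suite would be one way to keep form.yml and validation.py in sync as the model evolves.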

Writing the GitHub Workflow

In order to use the GitHub action, you will have to create a new GitHub repository and configure some secrets to enable AWS access and grant permission for the GitHub Pages deployment.

Secrets are made to store sensitive pieces of information such as passwords or API keys. The secret names must match those referenced in the workflow file below, so in our case the following secrets are needed:

- AWS_ACCESS_KEY_ID
- AWS_SECRET_ACCESS_KEY
- ACCESS_TOKEN

The first two are the AWS credentials needed to connect to AWS from the CLI (the workflow also reads AWS_SESSION_TOKEN, which applies only when you use temporary credentials). For the last one, you will need to create a GitHub personal access token; see the GitHub documentation on how to generate one.

When selecting the scopes to grant this token, make sure to tick the repo checkbox so that the form can be deployed to GitHub Pages.

Now you need to create the secrets inside your GitHub repository so that actions can use them; check the official documentation to see how to proceed.

Once you have configured all the secrets, create a .github/workflows directory tree inside the root folder of your project and add the following main.yml file to it.

    on:
      push:
        branches:
          - master
    env:
      AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
      AWS_DEFAULT_REGION: us-east-1
      AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
      AWS_SESSION_TOKEN: ${{ secrets.AWS_SESSION_TOKEN }}
    jobs:
      deploy:
        runs-on: ubuntu-latest
        name: deploy sklearn model to aws lambda
        steps:
          - name: cloning repo
            uses: actions/checkout@v2
          - name: api rest deployment
            id: api
            uses: Mg30/action-deploy-sklearn@v1
            with:
              service_name:
              stage:
              aws_default_region: ${{env.AWS_DEFAULT_REGION}}
              aws_s3_bucket:
              model_key: .pickle
              model_version:
          - name: building vue form
            uses: Mg30/vue-form-sklearn@v1
            with:
              endpoint: ${{steps.api.outputs.endpoint}}
          - name: Deploy frontend to github page
            uses: JamesIves/github-pages-deploy-action@releases/v3
            with:
              ACCESS_TOKEN: ${{ secrets.ACCESS_TOKEN }}
              BASE_BRANCH: master
              BRANCH: gh-pages
              FOLDER: dist

Note: Pay attention to the indentation. A YAML validator can help you spot errors.

The on key defines when to trigger the deployment. In my case, the action runs every time a push is made to the master branch. Then, in the env key, we define the AWS configuration as environment variables.

Finally, we define one job with 4 steps:

1. The first step uses actions/checkout to clone our repo inside the runner, here an Ubuntu virtual machine.

2. Then we call the action that deploys the scikit-learn model. With the with key we specify:

- The bucket name
- The model key
- The version ID of the model to be exposed in the REST API
- The name of the service
- The stage of deployment

Notes: You can add worker_timeout as an input of the api step to set a different timeout for the Lambda worker in charge of loading the model and making predictions; it defaults to 10 seconds. You can find the model's version ID using the CLI we wrote in the previous section.

3. Then we use another GitHub action to build our web app from form.yml. It uses the output of the previous step to get the API endpoint.

4. Finally, we use the last GitHub action to deploy our built web app to GitHub Pages.

部署解决方案 (Deploying the solution)

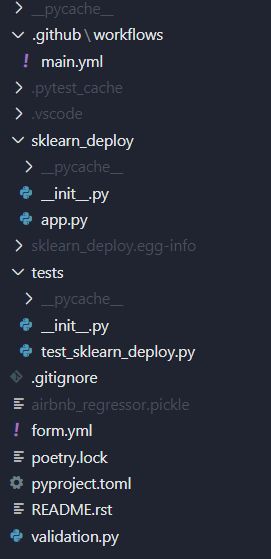

Before triggering the action, let’s check that the folder structure is ok if you have used poetry you should have something like that:

在触发操作之前,让我们检查一下文件夹结构是否正常(如果您使用过诗歌),您应该具有以下内容:

Example of folder structure source: author 文件夹结构来源示例:作者Note: Shaded names are not pushed to git

注意:阴影名称不会推送到git

Now that our workflow is set up, we need to trigger the action with git push

现在我们的工作流程已经设置好了,我们需要使用git push 触发动作。

Then, go to your GitHub repository and go to Actions in the selection ribbon below the name of your directory. Click on the name of your commit to see the deployment progress.

然后,转到您的GitHub存储库,然后转到目录名称下方选择区域中的“操作”。 单击提交的名称以查看部署进度。

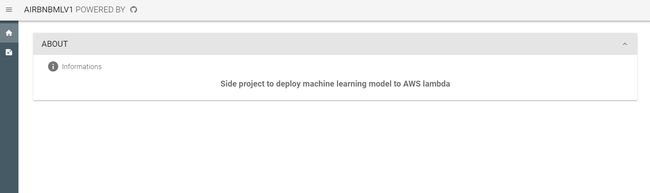

If everything went fine: go to http:// you should see a home page.

如果一切顺利:转到http://您应该会看到一个主页。

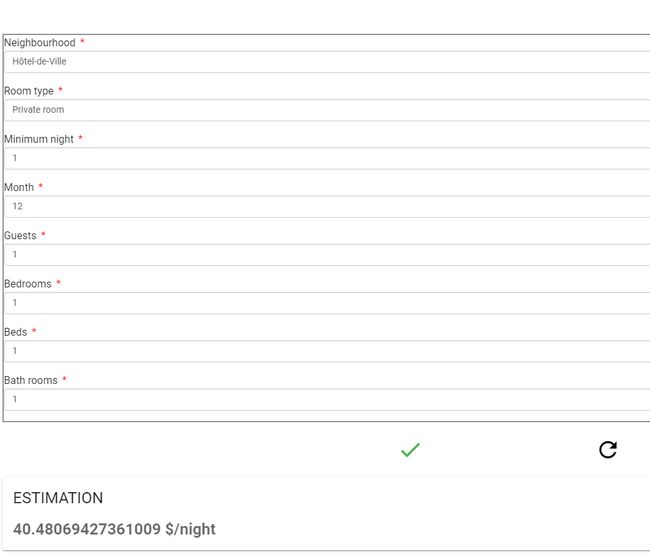

Now, click on the second button in the navigation drawer to access the form that we defined. Fill it and click the green check, after a while, you should see the result of the prediction.

现在,单击导航抽屉中的第二个按钮以访问我们定义的表单。 填充并单击绿色对勾,过一会儿,您应该会看到预测结果。

Web app form source: author Web应用程序表单来源:作者通过API端点进行交互(Interacting through the API Endpoint)

You can find the API endpoint address in the log of the GitHub action, by clicking on the step "api rest deployment", or in the AWS console under the API Gateway service.

The endpoint provides two services:

- POST /api/predict accepts the data as defined in raw_data in our test suite and returns a prediction_id
- GET /api/predict/ returns the prediction result
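As a rough sketch of how a client could call these two services with only the standard library, the helpers below build the HTTP requests without sending them. Several things here are assumptions rather than facts from the action: the base URL is a placeholder, the payload is assumed to be sent as a JSON body, and the prediction ID is assumed to be appended to the GET path.

```python
import json
import urllib.request


def build_predict_request(base_url, payload):
    """Build the POST request that submits data for prediction."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/predict",
        data=json.dumps(payload).encode("utf-8"),  # assumed JSON body
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_result_request(base_url, prediction_id):
    """Build the GET request that fetches a prediction result.

    Appending the ID to the path is an assumption for illustration.
    """
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/predict/{prediction_id}",
        method="GET",
    )


# Placeholder address: replace it with the endpoint from your deployment log.
BASE_URL = "https://example.invalid/dev"

payload = {
    "neighbourhood": "Buttes-Montmartre",
    "room_type": "Entire home/apt",
    "minimum_nights": 1.555,
    "guests": 2.5,
    "rooms": 1,
    "beds": 1,
    "bathrooms": 1,
}

request = build_predict_request(BASE_URL, payload)
print(request.get_method(), request.full_url)
# POST https://example.invalid/dev/api/predict
```

To actually send a request, pass it to urllib.request.urlopen and decode the JSON response. Since the prediction is computed asynchronously, the GET request may need to be repeated until the result is available.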

Updating the model

Now let's imagine you want to deploy a second version of the same model because you have improved it. This is how to proceed:

1. Run the test suite against the new version with poetry run pytest
2. Upload the new model to S3 using poetry run cli upload NAME MODEL --tag 2.0, where NAME is the bucket name and MODEL is the path to the new model
3. Get the new version ID of the uploaded model with poetry run cli list-objects NAME
4. Update the Lambda function with poetry run cli update-lambda FUNC_NAME VERSION_ID

The Lambda worker function is now serving the updated model through the same API endpoint, so we do not have to redeploy the entire stack.
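The update-lambda command reads the function's current environment variables before writing them back, because the Environment value passed to update_function_configuration replaces the existing set of variables rather than merging into it. The merge step can be sketched on its own as below. The helper name and the BUCKET variable are hypothetical; MODEL_VERSION is the variable used by the CLI in this post.

```python
def with_model_version(current_variables, version_id):
    """Return a copy of the variables with MODEL_VERSION set to version_id."""
    updated = dict(current_variables)  # copy so the input is left untouched
    updated["MODEL_VERSION"] = version_id
    return updated


# Hypothetical variables as they might be read from the function configuration.
current = {"BUCKET": "my-bucket", "MODEL_VERSION": "old-id"}

print(with_model_version(current, "new-id"))
# {'BUCKET': 'my-bucket', 'MODEL_VERSION': 'new-id'}
```

Sending only MODEL_VERSION would drop every other variable the worker relies on, which is why the whole dictionary is sent back.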

Deleting the stack

If you want to delete all the deployed services, go to the AWS console and select the CloudFormation service. In the list of stacks, you will see the service_name you defined in the workflow file. Select it and delete it.

Conclusion

In this post, we saw how AWS serverless services can be used to deploy a model. Thanks to GitHub actions, the model deployment is automated, and it is possible to interact with the model programmatically or through a simple web application. It is also possible to update the model without having to redeploy the stack.

Thank you for reading. Any constructive feedback, suggestions, or code improvements will be appreciated.

Disclaimer: This post makes use of AWS services, which may be chargeable depending on your situation. Be sure to take precautionary measures, such as creating a budget, to avoid unpleasant surprises.

Translated from: https://towardsdatascience.com/how-to-deploy-your-scikit-learn-model-to-aws-44aabb0efcb4
