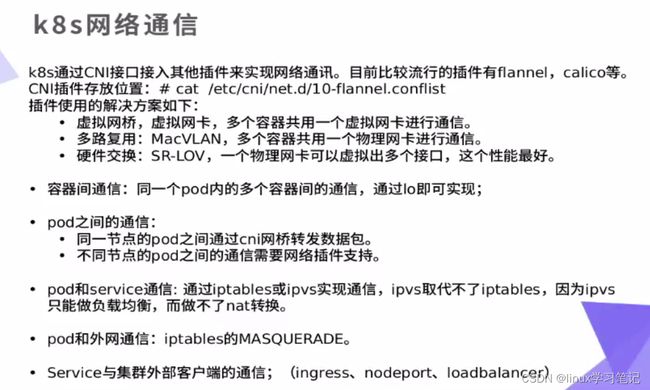

k8s (4): Services (exposure types: ClusterIP, NodePort, LoadBalancer, ExternalName, Ingress)

1. Introduction to Services

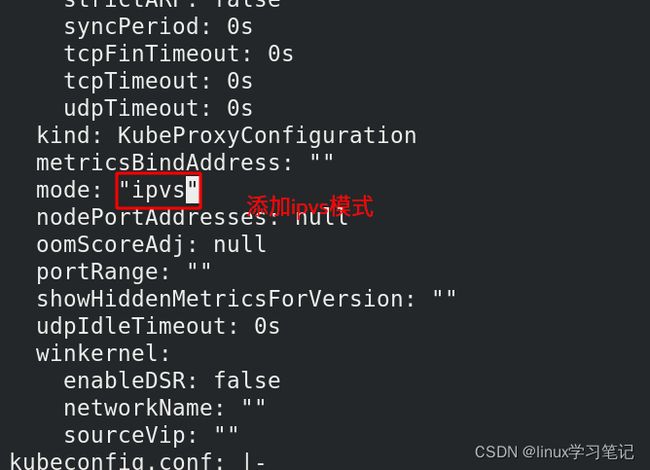

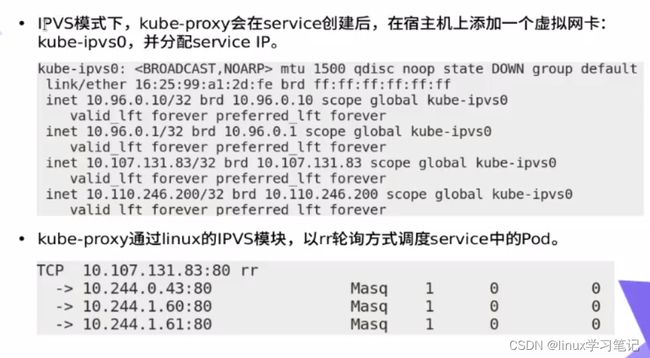

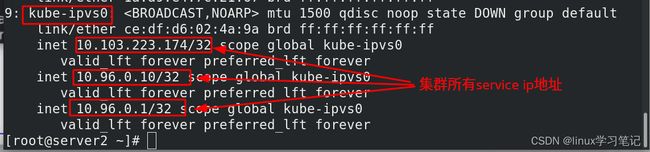

2. Enabling IPVS mode in kube-proxy

[root@server2 ~]# kubectl -n kube-system get pod | grep proxy   # every node runs a kube-proxy pod

kube-proxy-d6cp2 1/1 Running 6 (121m ago) 5d12h

kube-proxy-pqn5q 1/1 Running 6 (121m ago) 5d12h

kube-proxy-xt2m9 1/1 Running 11 (121m ago) 5d20h

[root@server2 ~]# yum install -y ipvsadm

[root@server3 ~]# yum install -y ipvsadm

[root@server4 ~]# yum install -y ipvsadm

[root@server2 ~]# kubectl get cm -n kube-system   # list the ConfigMaps that hold the components' configuration

NAME DATA AGE

coredns 1 6d

extension-apiserver-authentication 6 6d

kube-flannel-cfg 2 5d16h

kube-proxy 2 6d

kube-root-ca.crt 1 6d

kubeadm-config 1 6d

kubelet-config-1.23 1 6d

[root@server2 ~]# kubectl -n kube-system edit cm kube-proxy   # edit the kube-proxy configuration and set mode: "ipvs" (a ConfigMap, cm, stores a component's configuration)

[root@server2 ~]# kubectl -n kube-system get pod | grep proxy |awk '{system("kubectl delete pod "$1" -n kube-system")}'   # delete the existing kube-proxy pods; the recreated pods pick up the new configuration

pod "kube-proxy-d6cp2" deleted

pod "kube-proxy-pqn5q" deleted

pod "kube-proxy-xt2m9" deleted

[root@server2 ~]# ipvsadm -ln   # list the IPVS rules: they are now in place

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 172.25.50.2:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.0.18:53 Masq 1 0 0

-> 10.244.0.19:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.18:9153 Masq 1 0 0

-> 10.244.0.19:9153 Masq 1 0 0

TCP 10.103.223.174:80 rr

UDP 10.96.0.10:53 rr

-> 10.244.0.18:53 Masq 1 0 0

-> 10.244.0.19:53 Masq 1 0 0
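To read a table like this programmatically, the sketch below parses `ipvsadm -Ln` text into a map from each virtual service to its real servers. It is a minimal Python illustration. It assumes the layout shown above: one `TCP`/`UDP` line per virtual service, followed by `->` lines for its real servers. `parse_ipvsadm` is a hypothetical helper and not part of any tool. Note that `TCP 10.103.223.174:80` has no real servers listed, because that Service had no endpoints at this point.

```python
import re

SERVICE_LINE = re.compile(r"^(TCP|UDP)\s+(\S+)\s+(\S+)")
REAL_SERVER_LINE = re.compile(r"^->\s+(\d{1,3}(?:\.\d{1,3}){3}:\d+)\s")


def parse_ipvsadm(text: str) -> dict:
    """Map 'PROTO VIP:PORT' to the list of real servers listed under it."""
    table: dict = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        service = SERVICE_LINE.match(line)
        if service:
            current = f"{service.group(1)} {service.group(2)}"
            table.setdefault(current, [])  # keep services even when they have no backends
            continue
        real = REAL_SERVER_LINE.match(line)
        if real and current is not None:  # the "-> RemoteAddress:Port" header does not match
            table[current].append(real.group(1))
    return table


SAMPLE = """
TCP 10.96.0.10:53 rr
-> 10.244.0.18:53 Masq 1 0 0
-> 10.244.0.19:53 Masq 1 0 0
TCP 10.103.223.174:80 rr
UDP 10.96.0.10:53 rr
-> 10.244.0.18:53 Masq 1 0 0
"""

if __name__ == "__main__":
    for vip, backends in parse_ipvsadm(SAMPLE).items():
        print(vip, "->", backends or "no endpoints")
```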

[root@server2 ~]# ip addr

3. ClusterIP mode

[root@server2 ~]# kubectl get svc   # list the Services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 6d1h

myservice ClusterIP 10.103.223.174 80/TCP 2d6h

[root@server2 ~]# kubectl delete svc myservice   # delete the Service

service "myservice" deleted

[root@server2 ~]# kubectl apply -f rs.yaml   # apply

deployment.apps/deployment created

[root@server2 ~]# kubectl get pod   # list the pods: three were created

NAME READY STATUS RESTARTS AGE

deployment-57c78c68df-2t9l7 1/1 Running 0 25s

deployment-57c78c68df-6qw4c 1/1 Running 0 25s

deployment-57c78c68df-n28h6 1/1 Running 0 25s

[root@server2 ~]# kubectl get pod --show-labels   # show the labels: app=myapp

NAME READY STATUS RESTARTS AGE LABELS

deployment-57c78c68df-2t9l7 1/1 Running 0 84s app=myapp,pod-template-hash=57c78c68df

deployment-57c78c68df-6qw4c 1/1 Running 0 84s app=myapp,pod-template-hash=57c78c68df

deployment-57c78c68df-n28h6 1/1 Running 0 84s app=myapp,pod-template-hash=57c78c68df

[root@server2 ~]# vim myservice.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:          # pods carrying the label app: myapp become the backends
    app: myapp
  type: ClusterIP

[root@server2 ~]# kubectl apply -f myservice.yaml   # apply

service/myservice created

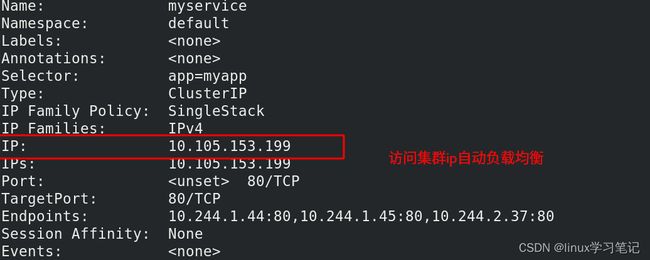

[root@server2 ~]# kubectl describe svc myservice   # show the details of the myservice Service

Name: myservice

Namespace: default

Labels:

Annotations:

Selector: app=myapp

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.105.153.199

IPs: 10.105.153.199

Port: 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.42:80,10.244.2.35:80,10.244.2.36:80   # IP addresses of the three backend pods

Session Affinity: None

Events:

[root@server2 ~]# kubectl get pod -o wide   # show the pod IPs

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-57c78c68df-2t9l7 1/1 Running 0 13m 10.244.2.35 server4

deployment-57c78c68df-6qw4c 1/1 Running 0 13m 10.244.2.36 server4

deployment-57c78c68df-n28h6 1/1 Running 0 13m 10.244.1.42 server3

[root@server2 ~]# kubectl label pods deployment-57c78c68df-2t9l7 app=nginx --overwrite   # change the label of one pod

pod/deployment-57c78c68df-2t9l7 labeled

[root@server2 ~]# kubectl describe svc myservice   # show the details again

Name: myservice

Namespace: default

Labels:

Annotations:

Selector: app=myapp

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.105.153.199

IPs: 10.105.153.199

Port: 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.1.42:80,10.244.1.43:80,10.244.2.36:80

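The endpoint 10.244.2.35 has been replaced by 10.244.1.43. After its label changed, that pod no longer matched the selector app=myapp, so it dropped out of the backends. The Deployment's ReplicaSet stopped counting it as well and started a new pod to keep three replicas.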
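A Service's endpoint list is simply the set of pods whose labels satisfy its selector. Below is a minimal Python model of equality-based matching that reuses the IPs seen above. The mapping of 10.244.1.43 to the replacement pod is inferred from the `describe` output. `selector_matches` and `endpoints` are illustrative names. The real endpoints controller also checks pod readiness, which this sketch ignores.

```python
def selector_matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every key/value pair must be present on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: dict) -> list:
    """Return the sorted IPs of the pods whose labels satisfy the selector."""
    return sorted(ip for ip, labels in pods.items() if selector_matches(selector, labels))


# Pod IPs and labels after the relabelling step above (pod-template-hash omitted).
PODS = {
    "10.244.2.35": {"app": "nginx"},   # deployment-57c78c68df-2t9l7, relabelled
    "10.244.2.36": {"app": "myapp"},
    "10.244.1.42": {"app": "myapp"},
    "10.244.1.43": {"app": "myapp"},   # replacement pod started by the ReplicaSet
}

if __name__ == "__main__":
    print(endpoints({"app": "myapp"}, PODS))
```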

[root@server2 ~]# kubectl get pod --show-labels   # show the labels

NAME READY STATUS RESTARTS AGE LABELS

deployment-57c78c68df-2t9l7 1/1 Running 0 25m app=nginx,pod-template-hash=57c78c68df   # wrong label: this pod is no longer a backend

deployment-57c78c68df-6q2gc 1/1 Running 0 9m1s app=myapp,pod-template-hash=57c78c68df

deployment-57c78c68df-6qw4c 1/1 Running 0 25m app=myapp,pod-template-hash=57c78c68df

deployment-57c78c68df-n28h6 1/1 Running 0 25m app=myapp,pod-template-hash=57c78c68df

[root@server2 ~]# kubectl label pods deployment-57c78c68df-2t9l7 app=myapp --overwrite   # restore the correct label

pod/deployment-57c78c68df-2t9l7 labeled

[root@server2 ~]# kubectl get pod --show-labels   # 3 replicas are desired, so the ReplicaSet removes the surplus pod

NAME READY STATUS RESTARTS AGE LABELS

deployment-57c78c68df-2t9l7 1/1 Running 1 (7m59s ago) 5h15m app=myapp,pod-template-hash=57c78c68df

deployment-57c78c68df-6q2gc 1/1 Running 1 (8m6s ago) 4h59m app=myapp,pod-template-hash=57c78c68df

deployment-57c78c68df-n28h6 1/1 Running 1 5h15m app=myapp,pod-template-hash=57c78c68df

[root@server2 ~]# kubectl describe svc myservice   # show the Service details

[root@server2 ~]# curl 10.105.153.199   # access the Service; requests are load-balanced

Hello MyApp | Version: v2 | Pod Name

[root@server2 ~]# curl 10.105.153.199/hostname.html

deployment-57c78c68df-6q2gc

[root@server2 ~]# curl 10.105.153.199/hostname.html

deployment-57c78c68df-n28h6

[root@server2 ~]# curl 10.105.153.199/hostname.html

deployment-57c78c68df-2t9l7

[root@server2 ~]# curl 10.105.153.199/hostname.html

deployment-57c78c68df-6q2gc

[root@server2 ~]# curl 10.105.153.199/hostname.html

deployment-57c78c68df-n28h6

[root@server2 ~]# curl 10.105.153.199/hostname.html

deployment-57c78c68df-2t9l7
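The hostnames above repeat in a fixed order because the IPVS virtual service uses the `rr` (round-robin) scheduler shown in the `ipvsadm -ln` output. Below is a minimal Python model of that rotation. It ignores the weights and connection state that the kernel's IPVS scheduler tracks, and the order of the backend list is an assumption.

```python
from itertools import cycle, islice


def round_robin(backends: list, requests: int) -> list:
    """Return which backend serves each of `requests` consecutive connections."""
    return list(islice(cycle(backends), requests))


if __name__ == "__main__":
    pods = ["deployment-57c78c68df-6q2gc", "deployment-57c78c68df-n28h6", "deployment-57c78c68df-2t9l7"]
    for name in round_robin(pods, 6):
        print(name)
```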

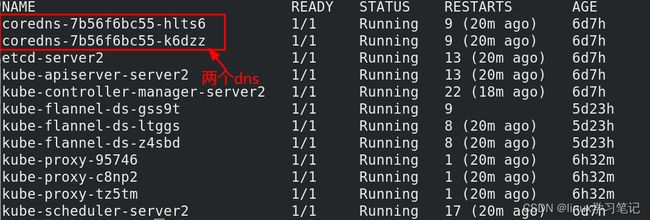

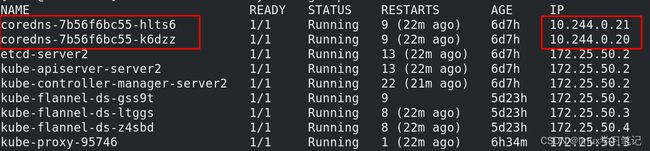

[root@server2 ~]# kubectl -n kube-system get pod

[root@server2 ~]# kubectl -n kube-system get pod -o wide   # show the IPs

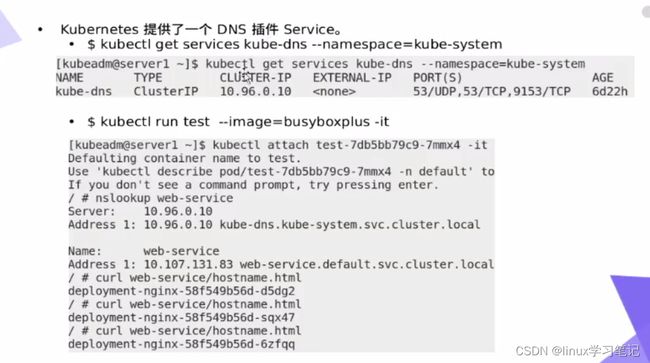

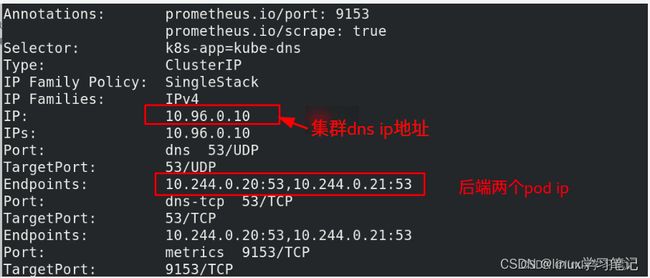

[root@server2 ~]# kubectl -n kube-system get svc   # list the Services: there is a kube-dns Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 53/UDP,53/TCP,9153/TCP 6d7h   # the kube-dns IP is 10.96.0.10

[root@server2 ~]# kubectl -n kube-system describe svc kube-dns   # show the kube-dns details

[root@server2 ~]# kubectl run demo --image=busyboxplus -it --restart=Never   # start a test pod

/ # cat /etc/resolv.conf   # show the cluster DNS settings

nameserver 10.96.0.10

search default.svc.cluster.local svc.cluster.local cluster.local

options ndots:5

/ # curl myservice   # access by Service name: it works

Hello MyApp | Version: v2 | Pod Name

/ # curl myservice/hostname.html

deployment-57c78c68df-6q2gc

/ # curl myservice/hostname.html

deployment-57c78c68df-n28h6

/ # curl myservice/hostname.html

deployment-57c78c68df-2t9l7

/ # nslookup myservice   # check the resolved address

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: myservice

Address 1: 10.105.153.199 myservice.default.svc.cluster.local   # 10.105.153.199 is the cluster IP of myservice
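The short name `myservice` resolves because of the `search` and `ndots:5` lines in `/etc/resolv.conf`. A name with fewer than five dots is first tried with each search domain appended. The Python sketch below models that ordering in simplified form. Real resolvers such as glibc and musl differ in details, for example in how they handle failures. `lookup_candidates` is a hypothetical helper.

```python
def lookup_candidates(name: str, search: list, ndots: int = 5) -> list:
    """Order in which a stub resolver tries names, given resolv.conf search/ndots."""
    if name.endswith("."):          # already fully qualified: the search list is not used
        return [name]
    expanded = [f"{name}.{domain}." for domain in search]
    absolute = f"{name}."
    if name.count(".") >= ndots:    # enough dots: try the name as-is first
        return [absolute] + expanded
    return expanded + [absolute]    # otherwise walk the search list first


SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]

if __name__ == "__main__":
    for candidate in lookup_candidates("myservice", SEARCH):
        print(candidate)
```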

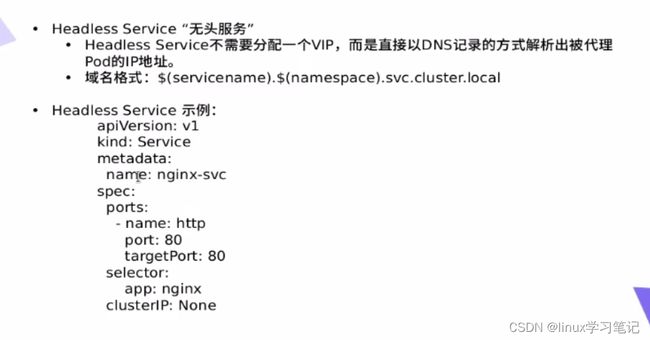

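Headless Service (clusterIP: None)

A headless Service is not given a virtual IP. Cluster DNS resolves the Service name directly to the backend pod addresses.

This works around the fact that pod IPs are not fixed. Clients still reach the backends by Service name, and because DNS returns every backend pod, requests are spread across them at the DNS level rather than by kube-proxy.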
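The difference shows up in DNS. For a normal Service the name resolves to a single virtual IP. For a headless Service it resolves to the pod IPs themselves, which is what the `dig` query below checks. This is a simplified Python model: real CoreDNS answers may rotate the record order, and the record set follows pod readiness. The pod IPs reuse the ones seen earlier.

```python
def service_fqdn(name: str, namespace: str = "default", zone: str = "cluster.local") -> str:
    """DNS name of a Service: <name>.<namespace>.svc.<zone>."""
    return f"{name}.{namespace}.svc.{zone}"


def a_records(cluster_ip: str, ready_pod_ips: list) -> list:
    """A records that cluster DNS returns for a Service name.

    Normal Service: one record, the virtual cluster IP (kube-proxy balances behind it).
    Headless Service (clusterIP: None): one record per ready backend pod.
    """
    if cluster_ip == "None":
        return sorted(ready_pod_ips)
    return [cluster_ip]


if __name__ == "__main__":
    pods = ["10.244.1.42", "10.244.2.36", "10.244.1.43"]
    print(service_fqdn("myservice"), a_records("10.105.153.199", pods))
    print(service_fqdn("myservice"), a_records("None", pods))
```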

[root@server2 ~]# kubectl delete pod demo   # delete

pod "demo" deleted

[root@server2 ~]# kubectl delete -f myservice.yaml   # delete

service "myservice" deleted

[root@server2 ~]# vim myservice.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: myapp
  clusterIP: None    # do not allocate a virtual IP

[root@server2 ~]# kubectl apply -f myservice.yaml   # apply

service/myservice created

[root@server2 ~]# kubectl get svc   # list the Services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 6d7h

myservice ClusterIP None 80/TCP 25s   # myservice has no cluster IP

[root@server2 ~]# yum install bind-utils -y   # install the package that provides the dig command

[root@server2 ~]# dig -t A myservice.default.svc.cluster.local. @10.96.0.10   # 10.96.0.10 is the cluster DNS IP

[root@server2 ~]# vim rs.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  replicas: 6          # raised from 3 to 6 replicas
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v2

[root@server2 ~]# kubectl apply -f rs.yaml   # apply

deployment.apps/deployment configured

[root@server2 ~]# kubectl describe svc myservice

4. NodePort mode: reaching in-cluster services from outside

[root@server2 ~]# kubectl delete -f myservice.yaml

service "myservice" deleted

[root@server2 ~]# vim myservice.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: myapp
  type: NodePort     # switched to NodePort mode

[root@server2 ~]# kubectl apply -f myservice.yaml   # apply

service/myservice created

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 7d

myservice NodePort 10.109.24.72 80:31776/TCP 40s   # on top of the ClusterIP, port 31776 is opened on every node; the Service is exposed through that host port
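The `PORT(S)` column has the form `port:nodePort/protocol`. Here 80 is the Service (cluster IP) port, and 31776 is the port opened on every node, which kube-proxy forwards to targetPort 80 on the pods. The small Python sketch below decodes that column. The helper names are hypothetical. 172.25.50.3 is assumed to be another node alongside 172.25.50.2; it appears as server3 later in this post. The range constant reflects the API server's default `--service-node-port-range`.

```python
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)  # API server default --service-node-port-range: 30000-32767


def parse_ports(column: str) -> list:
    """Parse a PORT(S) value such as '80:30569/TCP,443:31698/TCP'.

    Returns (port, node_port, protocol) tuples; node_port is None when there is no node port.
    """
    result = []
    for entry in column.split(","):
        spec, protocol = entry.split("/")
        if ":" in spec:
            port, node_port = spec.split(":")
            result.append((int(port), int(node_port), protocol))
        else:
            result.append((int(spec), None, protocol))
    return result


def node_addresses(node_ips: list, column: str) -> list:
    """Every node opens every node port, so each node IP is a valid entry point."""
    return [f"{ip}:{node_port}"
            for ip in node_ips
            for _port, node_port, _proto in parse_ports(column)
            if node_port is not None]


if __name__ == "__main__":
    print(parse_ports("80:31776/TCP"))
    print(node_addresses(["172.25.50.2", "172.25.50.3"], "80:31776/TCP"))
```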

[root@foundation50 network-scripts]# curl 172.25.50.2:31776   # an external host can reach the in-cluster Service

Hello MyApp | Version: v2 | Pod Name

[root@foundation50 network-scripts]# curl 172.25.50.2:31776   # any node's IP works, and requests are load-balanced

Hello MyApp | Version: v2 | Pod Name

[root@foundation50 network-scripts]# curl 172.25.50.2:31776/hostname.html

deployment-57c78c68df-h27nh

[root@foundation50 network-scripts]# curl 172.25.50.2:31776/hostname.html

deployment-57c78c68df-n28h6

[root@foundation50 network-scripts]# curl 172.25.50.2:31776/hostname.html

deployment-57c78c68df-njgvt

[root@foundation50 network-scripts]# curl 172.25.50.2:31776/hostname.html

deployment-57c78c68df-h27nh

[root@foundation50 network-scripts]# curl 172.25.50.2:31776/hostname.html

deployment-57c78c68df-n28h6

[root@foundation50 network-scripts]# curl 172.25.50.2:31776/hostname.html

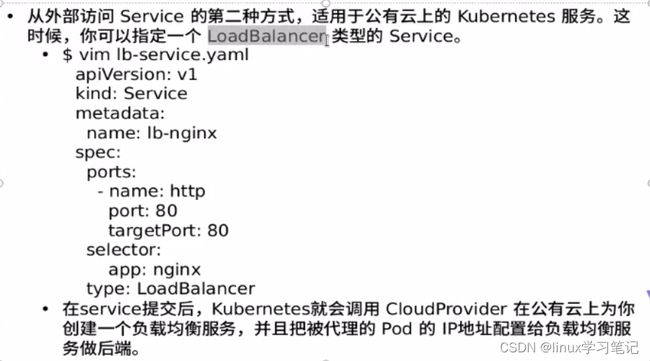

5. LoadBalancer mode: the second way to reach in-cluster services from outside

[root@server2 ~]# vim lb-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: lb-svc
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
  selector:
    app: nginx
  type: LoadBalancer

[root@server2 ~]# kubectl apply -f lb-svc.yaml

service/lb-svc created

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 7d

lb-svc LoadBalancer 10.102.177.166 <pending> 80:31535/TCP 31s   # the Service was created

myservice NodePort 10.109.24.72 80:31776/TCP 37m

[root@server2 ~]# kubectl get svc lb-svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

lb-svc LoadBalancer 10.102.177.166 <pending> 80:31535/TCP 104s

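A cluster IP has been allocated and, as with NodePort, a node port as well, but the external IP is still pending. A LoadBalancer Service is essentially a NodePort Service plus an external IP.

There is no public cloud here to supply that external IP: this is a bare-metal environment.

How can external IPs be provided on bare metal without a public cloud? With MetalLB, set up below.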

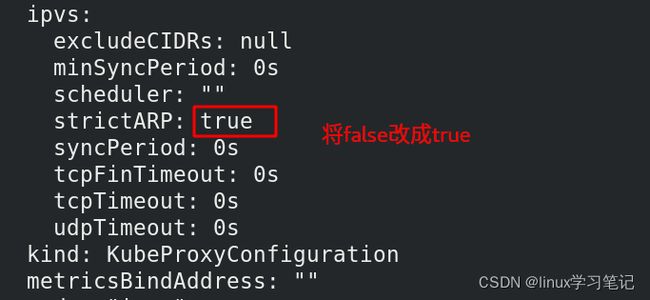

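[root@server2 ~]# kubectl edit configmap -n kube-system kube-proxy   # edit the kube-proxy configuration (MetalLB requires strictARP: true when kube-proxy runs in IPVS mode)

Editing the ConfigMap does not make the running kube-proxy containers reload it, so the pods have to be recreated: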

[root@server2 ~]# kubectl -n kube-system get pod | grep proxy |awk '{system("kubectl delete pod "$1" -n kube-system")}'   # delete the old pods

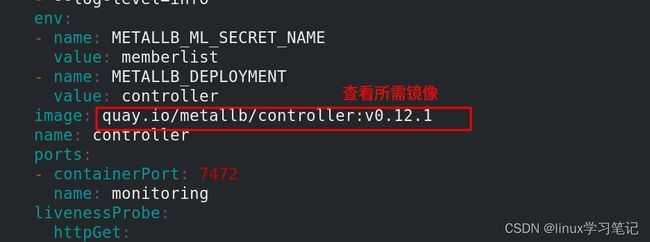

[root@server2 ~]# mkdir metallb

[root@server2 ~]# cd metallb/

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml

[root@server2 metallb]# wget https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

[root@server2 metallb]# ls   # both YAML files were downloaded

metallb.yaml namespace.yaml

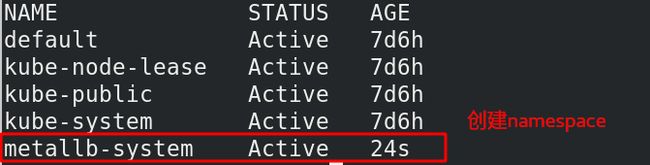

[root@server2 metallb]# kubectl apply -f namespace.yaml   # apply

namespace/metallb-system created

[root@server2 metallb]# kubectl get ns

[root@server2 metallb]# vim metallb.yaml

[root@server1 ~]# docker pull quay.io/metallb/controller:v0.12.1   # pull the required image

[root@server1 ~]# docker pull quay.io/metallb/speaker:v0.12.1   # pull the required image

To keep the image paths consistent, create a metallb project in Harbor.

[root@server1 harbor]# docker tag quay.io/metallb/controller:v0.12.1 reg.westos.org/metallb/controller:v0.12.1   # retag

[root@server1 harbor]# docker tag quay.io/metallb/speaker:v0.12.1 reg.westos.org/metallb/speaker:v0.12.1   # retag

[root@server1 harbor]# docker push reg.westos.org/metallb/controller:v0.12.1   # push the image

[root@server1 harbor]# docker push reg.westos.org/metallb/speaker:v0.12.1   # push the image

[root@server2 metallb]# kubectl apply -f metallb.yaml   # apply

[root@server2 metallb]# kubectl -n metallb-system get secrets   # list the Secrets

NAME TYPE DATA AGE

controller-token-2vhdh kubernetes.io/service-account-token 3 13h

default-token-rwmtk kubernetes.io/service-account-token 3 13h

memberlist Opaque 1 12h   # the memberlist secret is present

speaker-token-9s5qx kubernetes.io/service-account-token 3 13h

[root@server2 metallb]# kubectl -n metallb-system get pod   # list the pods: all running

NAME READY STATUS RESTARTS AGE

controller-57fd9c5bb-bvcmd 1/1 Running 1 (26m ago) 11h

speaker-5nr9p 1/1 Running 1 (26m ago) 11h

speaker-9flpf 1/1 Running 1 (26m ago) 11h

speaker-vcf7h 1/1 Running 1 11h

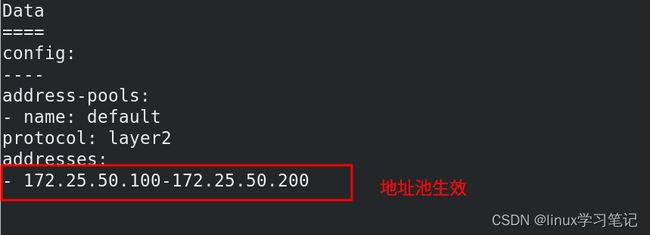

[root@server2 metallb]# vim config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.25.50.100-172.25.50.200   # pool of IP addresses MetalLB may assign

[root@server2 metallb]# kubectl apply -f config.yaml   # apply

configmap/config created

[root@server2 metallb]# kubectl -n metallb-system get cm   # list the ConfigMaps

NAME DATA AGE

config 1 2m11s   # the ConfigMap was created

kube-root-ca.crt 1 13h
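The `addresses` entry is an inclusive range, so the `default` pool holds 101 addresses. The Python sketch below expands the range and hands out the lowest free address. The lowest-free policy is an assumption made for illustration; MetalLB's actual allocator has more rules, for example for IP sharing and explicitly requested IPs. It is nonetheless consistent with lb-svc receiving 172.25.50.100 in the test below.

```python
import ipaddress


def expand_range(spec: str) -> list:
    """'172.25.50.100-172.25.50.200' -> every IPv4 address in the range, inclusive."""
    first, last = (ipaddress.IPv4Address(part.strip()) for part in spec.split("-"))
    return [ipaddress.IPv4Address(n) for n in range(int(first), int(last) + 1)]


def allocate(spec: str, in_use: set) -> str:
    """Hand out the lowest address in the pool that is not already assigned."""
    for address in expand_range(spec):
        if str(address) not in in_use:
            return str(address)
    raise RuntimeError(f"address pool {spec} is exhausted")


if __name__ == "__main__":
    pool = "172.25.50.100-172.25.50.200"
    print(len(expand_range(pool)), allocate(pool, set()))
```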

[root@server2 metallb]# k

Test:

First, run a Deployment of the myapp image and put a Service in front of it for the tests that follow.

[root@server2 ~]# vim rs.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: myapp:v1

[root@server2 ~]# vim lb-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: lb-svc
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
  selector:
    app: myapp       # changed to app: myapp, the label the current pods carry
  type: LoadBalancer

[root@server2 ~]# kubectl delete svc myservice   # delete the myservice Service, which is no longer needed

service "myservice" deleted

[root@server2 ~]# kubectl apply -f lb-svc.yaml   # apply

[root@server2 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 8d

lb-svc LoadBalancer 10.110.254.104 172.25.50.100 80:30644/TCP 39m   # an external IP has been assigned

[root@foundation50 isos]# curl 172.25.50.100   # reachable from outside

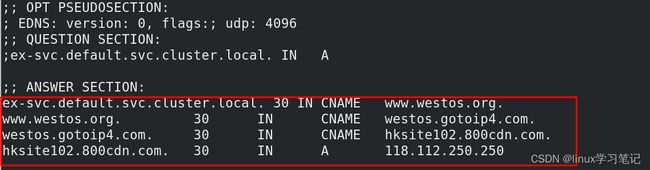

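6. ExternalName mode: mapping a Service to an external domain name

An ExternalName Service has no cluster IP and no pods behind it. Cluster DNS answers queries for the Service name with a CNAME pointing at the configured externalName. Workloads inside the cluster can therefore use a stable Service name, while the external domain and its IP can change independently.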
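For an ExternalName Service, cluster DNS returns a CNAME to `externalName` instead of an A record for a cluster IP; the `dig` query below checks exactly that. Below is a toy Python model of that answer using a hard-coded, hypothetical service table. The myservice IP is reused from the ClusterIP section.

```python
SERVICES = {
    "ex-svc.default.svc.cluster.local": {"type": "ExternalName", "externalName": "www.westos.org"},
    "myservice.default.svc.cluster.local": {"type": "ClusterIP", "clusterIP": "10.105.153.199"},
}


def answer(fqdn: str, services: dict = SERVICES) -> tuple:
    """Record type and data that cluster DNS would return for a Service name."""
    svc = services.get(fqdn.rstrip("."))
    if svc is None:
        return ("NXDOMAIN", None)
    if svc["type"] == "ExternalName":
        return ("CNAME", svc["externalName"])   # no cluster IP and no kube-proxy rules involved
    return ("A", svc["clusterIP"])


if __name__ == "__main__":
    print(answer("ex-svc.default.svc.cluster.local."))
```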

[root@server2 ~]# kubectl delete -f lb-svc.yaml

service "lb-svc" deleted

[root@server2 ~]# vim ex-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: ex-svc
spec:
  type: ExternalName
  externalName: www.westos.org

[root@server2 ~]# kubectl apply -f ex-svc.yaml   # created successfully

service/ex-svc created

[root@server2 ~]# kubectl get svc ex-svc   # show the ex-svc Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ex-svc ExternalName <none> www.westos.org <none> 88s   # no cluster IP

[root@server2 ~]# dig -t A ex-svc.default.svc.cluster.local. @10.96.0.10

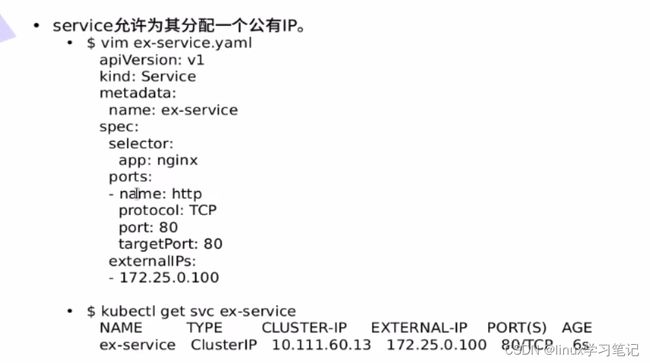

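7. The fourth way to reach in-cluster services from outside: a fixed external IP

You can also bind an IP to the Service directly with externalIPs. After the Service is created, that IP must be added to a node by hand before clients outside the cluster can use it. This is generally not recommended, because it makes later maintenance costly.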

[root@server2 ~]# vim exip-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: exip-svc
spec:
  selector:
    app: myapp
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  externalIPs:       # external IP addresses for the Service
  - 172.25.50.100

[root@server2 ~]# kubectl apply -f exip-svc.yaml   # apply

service/exip-svc created

[root@server2 ~]# kubectl get svc exip-svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

exip-svc ClusterIP 10.101.33.192 172.25.50.100 80/TCP 89s   # created successfully

[root@foundation50 ~]# curl 172.25.50.100   # reaches the pods inside the cluster

Hello MyApp | Version: v2 | Pod Name

[root@foundation50 ~]# curl 172.25.50.100/hostname.html   # requests are load-balanced

deployment-57c78c68df-h27nh

[root@foundation50 ~]# curl 172.25.50.100/hostname.html

deployment-57c78c68df-n28h6

[root@foundation50 ~]# curl 172.25.50.100/hostname.html

deployment-57c78c68df-njgvt

[root@foundation50 ~]# curl 172.25.50.100/hostname.html

deployment-57c78c68df-h27nh

[root@foundation50 ~]# curl 172.25.50.100/hostname.html

deployment-57c78c68df-n28h6

[root@foundation50 ~]# curl 172.25.50.100/hostname.html

deployment-57c78c68df-njgvt

[root@server2 ~]# kubectl delete -f exip-svc.yaml   # clean up

service "exip-svc" deleted

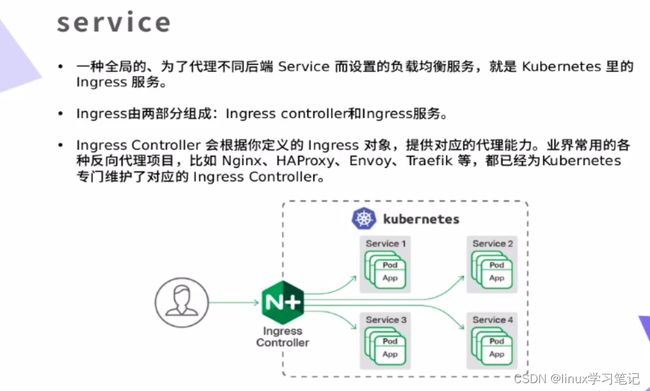

8. Ingress: reaching in-cluster pods from outside

[root@server2 ingress]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.2/deploy/static/provider/baremetal/deploy.yaml

[root@server2 ingress]# ls

deploy.yaml   # download succeeded

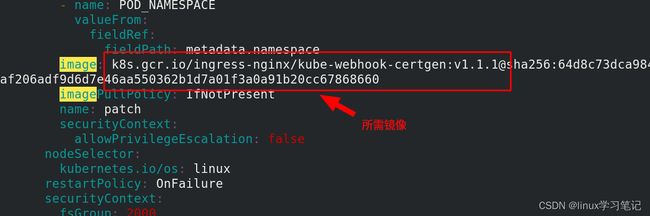

[root@server2 ingress]# vim deploy.yaml   # check which images it needs

[root@server2 ingress]# docker search ingress-nginx   # search for the required images

[root@server2 ingress]# docker pull willdockerhub/ingress-nginx-controller:v1.1.2   # pull the required image

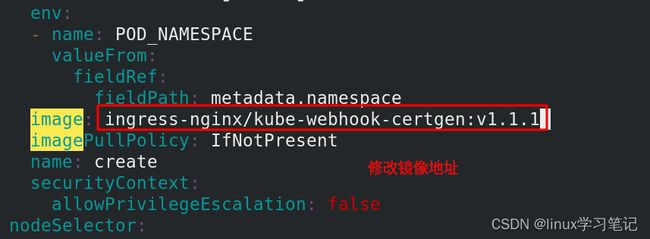

[root@server1 ~]# docker pull cangyin/ingress-nginx-kube-webhook-certgen:v1.1.1   # pull the required image

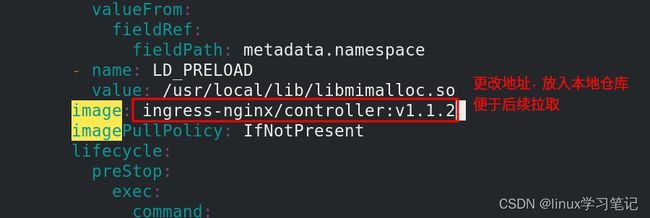

[root@server2 ingress]# vim deploy.yaml   # point the image addresses at the local registry for later pulls

[root@server1 ~]# docker tag cangyin/ingress-nginx-kube-webhook-certgen:v1.1.1 reg.westos.org/ingress-nginx/kube-webhook-certgen:v1.1.1   # retag

[root@server1 ~]# docker tag willdockerhub/ingress-nginx-controller:v1.1.2 reg.westos.org/ingress-nginx/controller:v1.1.2   # retag

[root@server1 ~]# docker push reg.westos.org/ingress-nginx/kube-webhook-certgen:v1.1.1   # push the image to the registry

[root@server1 ~]# docker push reg.westos.org/ingress-nginx/controller:v1.1.2   # push the image to the registry

[root@server2 ingress]# kubectl apply -f deploy.yaml   # apply

[root@server2 ingress]# kubectl -n ingress-nginx get pod   # list the pods: everything succeeded

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-g2trs 0/1 Completed 0 3h55m   # completed

ingress-nginx-admission-patch-gh8z8 0/1 Completed 1 3h55m

ingress-nginx-controller-678d797d77-sl6xc 1/1 Running 1 (6m7s ago) 3h55m   # running

[root@server2 ingress]# kubectl -n ingress-nginx get svc   # list the Services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.100.130.86 80:30569/TCP,443:31698/TCP 4h   # external hosts reach in-cluster pods through these node ports (30569 for HTTP, 31698 for HTTPS)

ingress-nginx-controller-admission ClusterIP 10.106.242.217 443/TCP 4h

[root@server2 ~]# kubectl delete svc lb-svc   # delete the Service created earlier

service "lb-svc" deleted

[root@server2 ~]# vim myservice.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: myservice
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: myapp
  type: ClusterIP    # back to ClusterIP: reachable inside the cluster only

[root@server2 ~]# kubectl apply -f myservice.yaml   # create the myservice Service

service/myservice created

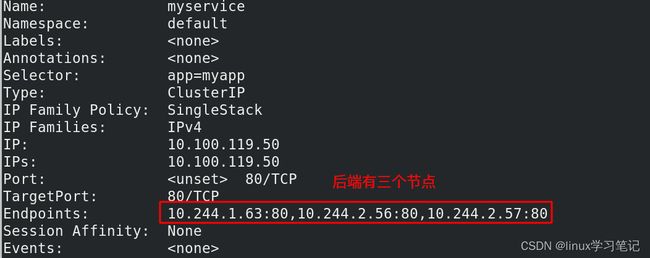

[root@server2 ~]# kubectl describe svc myservice   # show the details of the myservice Service

[root@server2 ~]# vim ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  ingressClassName: nginx
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /              # serve from the root path
        pathType: Prefix
        backend:             # the backend Service
          service:
            name: myservice  # name of the backend Service; must match exactly
            port:
              number: 80

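The basic flow of an Ingress is client -> Ingress -> Service -> pod.

The Ingress routes each request to the Service named in its backend, and that Service's endpoints are the pods that serve it.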
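For `pathType: Prefix`, Kubernetes matches the request path segment by segment on `/`. So `/` matches everything, and `/foo` matches `/foo/bar` but not `/foobar`. Below is a minimal Python illustration of that rule. The `/foo` paths are hypothetical examples. The sketch ignores the query string and does not model `Exact` or `ImplementationSpecific`.

```python
def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Ingress pathType: Prefix compares '/'-separated segments, not raw characters."""
    rule = [segment for segment in rule_path.split("/") if segment]
    request = [segment for segment in request_path.split("/") if segment]
    return request[: len(rule)] == rule


if __name__ == "__main__":
    for path in ["/hostname.html", "/foo/bar", "/foobar"]:
        print(path, prefix_matches("/foo", path))
```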

[root@server2 ~]# kubectl apply -f ingress.yaml   # apply

[root@server2 ~]# kubectl describe ingress ingress-demo

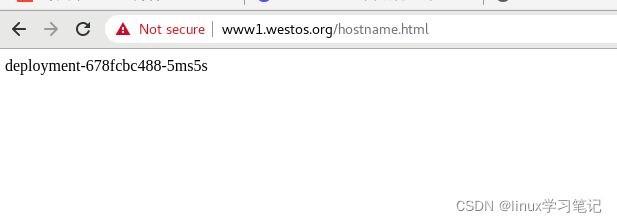

The red box shows that when an external client requests www1.westos.org, the request is routed to the three pods behind myservice:80.

[root@server2 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-demo nginx www1.westos.org 172.25.50.3 80 11h   # the controller is running on server3

[root@server2 ~]# kubectl -n ingress-nginx get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.100.130.86 80:30569/TCP,443:30998/TCP 5h25m   # check the node ports

ingress-nginx-controller-admission ClusterIP 10.106.242.217 443/TCP 5h25m

[root@server3 ~]# vim /etc/hosts

172.25.50.3 server3 www1.westos.org   # hosts entry: www1.westos.org resolves to server3

[root@foundation50 Desktop]# curl www1.westos.org:30998   # access it; requests are load-balanced

Hello MyApp | Version: v1 | Pod Name

[root@foundation50 Desktop]# curl www1.westos.org:30998/hostname.html

deployment-678fcbc488-sgcd6

[root@foundation50 Desktop]# curl www1.westos.org:30998/hostname.html

deployment-678fcbc488-sgcd6

[root@foundation50 Desktop]# curl www1.westos.org:30998/hostname.html

deployment-678fcbc488-2lf22

[root@foundation50 Desktop]# curl www1.westos.org:30998/hostname.html

deployment-678fcbc488-2lf22

[root@foundation50 Desktop]# curl www1.westos.org:30998/hostname.html

deployment-678fcbc488-5ms5s

[root@foundation50 Desktop]# curl www1.westos.org:30998/hostname.html

deployment-678fcbc488-5ms5s

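How does the Ingress load-balance?

The ingress controller watches the Ingress rules and rewrites the nginx configuration file inside its pod accordingly.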

[root@server2 ~]# kubectl -n ingress-nginx get pod

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-kbdwc 0/1 Completed 0 3h6m

ingress-nginx-admission-patch-tzdss 0/1 Completed 0 3h6m

ingress-nginx-controller-678d797d77-ndfmj 1/1 Running 0 3h6m   # this is the controller container in the ingress-nginx namespace

[root@server2 ~]# kubectl -n ingress-nginx exec -it ingress-nginx-controller-678d797d77-ndfmj -- bash

bash-5.1$ cd /etc/nginx/

bash-5.1$ cat nginx.conf | less

[root@server2 ~]# cp rs.yaml ingress/   # copy the original rs.yaml into the ingress directory

[root@server2 ~]# cd ingress/

[root@server2 ingress]# vim rs.yaml   # edit the copy: change the label to app: nginx and the image to myapp:v2

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-2   # must not clash with the existing Deployment's name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: myapp:v2

[root@server2 ingress]# kubectl apply -f rs.yaml   # apply

deployment.apps/deployment-2 created

[root@server2 ingress]# kubectl get deployments.apps   # list the Deployments

NAME READY UP-TO-DATE AVAILABLE AGE

deployment 3/3 3 3 4h11m

deployment-2 3/3 3 3 52s   # two Deployments now

[root@server2 ingress]# kubectl get pod --show-labels   # show the pod labels: three with app=nginx, three with app=myapp

NAME READY STATUS RESTARTS AGE LABELS

deployment-2-77cd76f9c5-2zrkd 1/1 Running 0 4m53s app=nginx,pod-template-hash=77cd76f9c5

deployment-2-77cd76f9c5-96cwp 1/1 Running 0 4m53s app=nginx,pod-template-hash=77cd76f9c5

deployment-2-77cd76f9c5-stppk 1/1 Running 0 4m53s app=nginx,pod-template-hash=77cd76f9c5

deployment-678fcbc488-2lf22 1/1 Running 0 4h15m app=myapp,pod-template-hash=678fcbc488

deployment-678fcbc488-5ms5s 1/1 Running 0 4h15m app=myapp,pod-template-hash=678fcbc488

deployment-678fcbc488-sgcd6 1/1 Running 0 4h15m app=myapp,pod-template-hash=678fcbc488

[root@server2 ingress]# cp ../myservice.yaml .   # copy the original myservice.yaml into the ingress directory

[root@server2 ingress]# vim myservice.yaml   # edit the YAML file

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc    # Service renamed to nginx-svc
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: nginx       # selector changed to app: nginx
  type: ClusterIP

[root@server2 ingress]# kubectl apply -f myservice.yaml

service/nginx-svc created

[root@server2 ingress]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 443/TCP 9d

myservice ClusterIP 10.100.178.184 80/TCP 3h48m

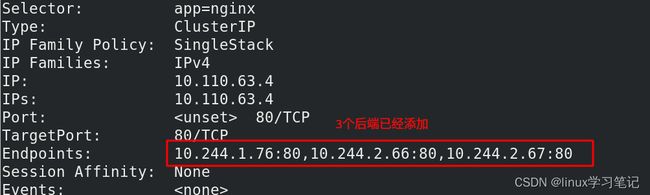

nginx-svc ClusterIP 10.110.63.4 80/TCP 104s 生成了nginx-svc 服务

[root@server2 ingress]# kubectl describe svc nginx-svc

[root@server2 ~]# vim ingress.yaml   # edit ingress.yaml and add a second Ingress

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  ingressClassName: nginx
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myservice
            port:
              number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-www2
spec:
  ingressClassName: nginx
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

[root@server2 ~]# kubectl apply -f ingress.yaml   # apply

ingress.networking.k8s.io/ingress-demo unchanged

ingress.networking.k8s.io/ingress-www2 created

[root@server2 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-demo nginx www1.westos.org 172.25.50.3 80 15h

ingress-www2 nginx www2.westos.org 80 51s

[root@server2 ~]# kubectl -n ingress-nginx exec -it ingress-nginx-controller-678d797d77-ndfmj -- bash   # open a shell in the controller container

bash-5.1$ less nginx.conf

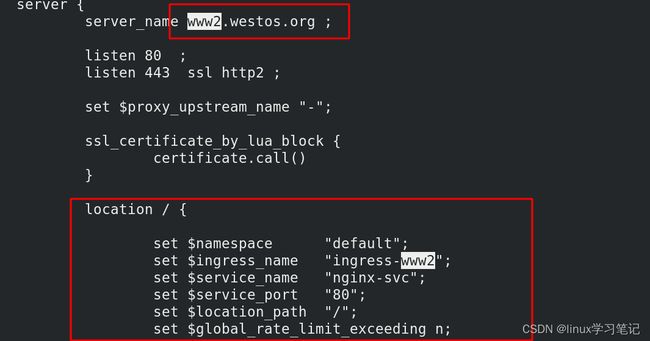

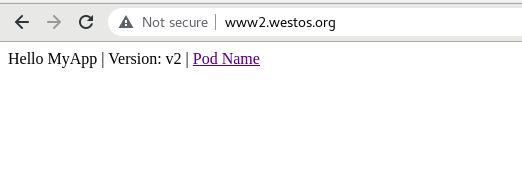

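The ingress controller watches the Ingress rules and rewrites the nginx configuration, so a virtual host (server block) for www2.westos.org appears automatically.

In effect, the controller configures nginx to load-balance across the backend Services.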
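Each `host` becomes its own nginx `server` block, and within it the longest matching path wins. The Python sketch below mimics that dispatch for the two Ingresses above. `route` is a hypothetical helper that simplifies nginx's matching: no regex paths and no TLS. A host with no rule falls through to the controller's default backend, which answers 404.

```python
RULES = [
    # (host, path prefix, backend Service:port), as declared in ingress.yaml
    ("www1.westos.org", "/", "myservice:80"),
    ("www2.westos.org", "/", "nginx-svc:80"),
]


def route(host_header: str, path: str, rules=RULES):
    """Pick the backend for a request the way a name-based virtual host would."""
    host = host_header.split(":")[0].lower()   # drop a port such as :30998
    best = None
    for rule_host, prefix, backend in rules:
        base = prefix.rstrip("/")
        if rule_host == host and (path == prefix or path == base or path.startswith(base + "/")):
            if best is None or len(prefix) > len(best[0]):   # the longest prefix wins
                best = (prefix, backend)
    return best[1] if best else None   # None: the default backend would answer 404


if __name__ == "__main__":
    print(route("www1.westos.org:30998", "/hostname.html"))
    print(route("www2.westos.org:30998", "/"))
```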

Test: from an external host, request each of the two domains; the Ingress routes them to different Services.

[root@foundation50 Desktop]# vim /etc/hosts   # add the hosts entries

172.25.50.3 server3 www1.westos.org www2.westos.org

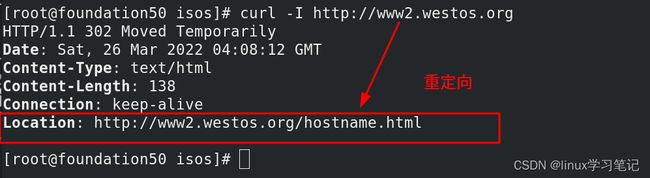

[root@foundation50 Desktop]# curl www2.westos.org:30998

Hello MyApp | Version: v2 | Pod Name

[root@foundation50 Desktop]# curl www2.westos.org:30998/hostname.html

deployment-2-77cd76f9c5-2zrkd

[root@foundation50 Desktop]# curl www2.westos.org:30998/hostname.html

deployment-2-77cd76f9c5-stppk

[root@foundation50 Desktop]# curl www1.westos.org:30998

Hello MyApp | Version: v1 | Pod Name

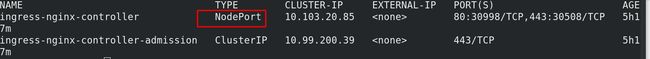

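Accessing the Ingress this way is inconvenient. The controller is exposed as a NodePort Service, so every request needs the node port appended (curl www2.westos.org:30998). It can be combined with a LoadBalancer Service instead.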

[root@server2 ~]# kubectl -n ingress-nginx get svc

[root@server2 ~]# kubectl -n ingress-nginx edit svc ingress-nginx-controller   # edit the controller Service: change type: NodePort to type: LoadBalancer

service/ingress-nginx-controller edited

[root@server2 ~]# kubectl -n ingress-nginx get svc

[root@foundation50 Desktop]# vim /etc/hosts   # add the hosts entries

172.25.50.100 www1.westos.org www2.westos.org   # point both domains at the VIP

[root@foundation50 Desktop]# curl www1.westos.org   # the domain now works without a port number

Hello MyApp | Version: v1 | Pod Name

[root@foundation50 Desktop]# curl www2.westos.org

Hello MyApp | Version: v2 | Pod Name

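The complete path:

client -> vip -> (ingress svc -> nginx pod) -> service -> pod

The client reaches the ingress Service through the VIP. The controller's nginx, configured from the Ingress rules, load-balances at layer 7 across the backend Services, and each Service fronts ordinary pods.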

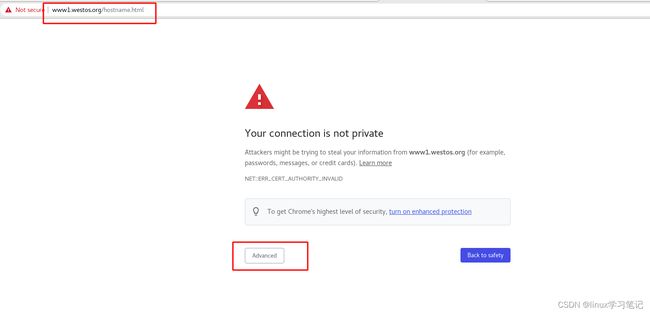

Ingress TLS

Documentation: generating TLS certificates

Generate the certificate:

[root@server2 ~]# openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"   # generate a self-signed certificate and key

Generating a 2048 bit RSA private key

................+++

.........................................+++

writing new private key to 'tls.key'   # tls.key was written

[root@server2 ~]# kubectl create secret tls tls-secret --key tls.key --cert tls.crt

secret/tls-secret created   # the certificate and key are now stored in a Secret

[root@server2 ~]# kubectl get secrets   # list the stored certificate

NAME TYPE DATA AGE

default-token-cvr9d kubernetes.io/service-account-token 3 9d

tls-secret kubernetes.io/tls 2 69s   # this is the uploaded certificate

Choose which virtual host uses the certificate:

[root@server2 ~]# vim ingress.yaml   # enable TLS on the www1.westos.org virtual host

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  tls:                       # enable TLS
  - hosts:
    - www1.westos.org        # virtual host to protect
    secretName: tls-secret   # Secret holding the certificate
  ingressClassName: nginx
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myservice
            port:
              number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-www2
spec:
  ingressClassName: nginx
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

[root@server2 ~]# kubectl apply -f ingress.yaml   # apply

ingress.networking.k8s.io/ingress-demo configured

ingress.networking.k8s.io/ingress-www2 unchanged

[root@server2 ~]# kubectl describe ingress ingress-demo   # show the details of ingress-demo

[root@server2 ~]# kubectl -n ingress-nginx get pod   # list the pods

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-kbdwc 0/1 Completed 0 23h

ingress-nginx-admission-patch-tzdss 0/1 Completed 0 23h

ingress-nginx-controller-678d797d77-ndfmj 1/1 Running 1 (80m ago) 23h   # the running controller

[root@foundation50 ~]# curl -I www.westos.org   # HTTP on port 80 is redirected to 443

HTTP/1.1 301 Moved Permanently

Server: wts/1.6.4

Date: Sat, 26 Mar 2022 03:12:20 GMT

Content-Type: text/html; charset=iso-8859-1

Connection: keep-alive

Location: https://www.westos.org/

[root@foundation50 ~]# curl -k https://www1.westos.org   # HTTPS access; -k accepts the self-signed certificate

Hello MyApp | Version: v1 | Pod Name
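For hosts listed under `tls`, ingress-nginx redirects plain HTTP to HTTPS by default, because `ssl-redirect` defaults to true. As far as we know, the default redirect status is 308. Below is a minimal Python model of that decision. `http_response` is hypothetical, and the status code is an assumption worth checking against the controller's ConfigMap documentation.

```python
def http_response(scheme: str, host: str, path: str, tls_hosts: set, ssl_redirect: bool = True):
    """Status and Location the controller sends, modelled on ingress-nginx defaults."""
    if scheme == "http" and ssl_redirect and host in tls_hosts:
        return 308, f"https://{host}{path}"    # assumed default http-redirect-code: 308
    return 200, None                           # proxied to the backend as usual


if __name__ == "__main__":
    print(http_response("http", "www1.westos.org", "/", {"www1.westos.org"}))
    print(http_response("http", "www2.westos.org", "/", {"www1.westos.org"}))
```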

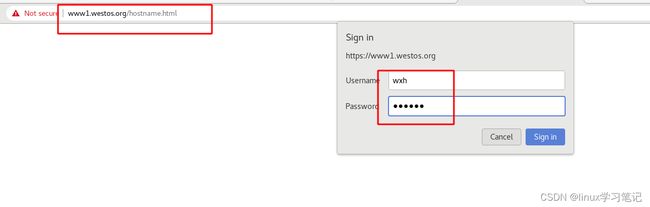

Enabling authentication on the Ingress

[root@server2 ingress]# yum install -y httpd-tools   # provides htpasswd

[root@server2 ingress]# htpasswd -c auth wxh   # create the auth file and add user wxh

New password:

Re-type new password:

Adding password for user wxh

[root@server2 ingress]# cat auth   # the generated auth file

wxh:$apr1$GyWsJ//a$b8VuFMFvVKJ7ZEw595IbY1
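The `auth` line has the form `user:$apr1$salt$hash`. `$apr1$` marks Apache's variant of MD5-crypt, `GyWsJ//a` is the salt, and the remaining 22 characters encode the digest. To show what is being checked, here is a Python re-implementation of that algorithm, for illustration only. Use `htpasswd` in practice; MD5-crypt is weak by modern standards. The password `secret` used below is hypothetical, since the real password for `wxh` is not shown in this post.

```python
import hashlib

ITOA64 = "./0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"


def _to64(value: int, count: int) -> str:
    """Emit `count` crypt-base64 characters, least significant 6 bits first."""
    chars = []
    for _ in range(count):
        chars.append(ITOA64[value & 0x3F])
        value >>= 6
    return "".join(chars)


def apr1(password: str, salt: str) -> str:
    """Apache $apr1$ hash: MD5-crypt with the magic string '$apr1$'."""
    pw = password.encode()
    salt_b = salt.encode()[:8]          # at most 8 salt characters are used
    magic = b"$apr1$"

    alternate = hashlib.md5(pw + salt_b + pw).digest()
    data = pw + magic + salt_b
    remaining = len(pw)
    while remaining > 0:                # as many bytes of `alternate` as the password is long
        data += alternate[:min(16, remaining)]
        remaining -= 16
    bits = len(pw)
    while bits:                         # one byte per bit of the password length
        data += b"\x00" if bits & 1 else pw[:1]
        bits >>= 1
    digest = hashlib.md5(data).digest()

    for i in range(1000):               # 1000 extra rounds to slow down brute force
        buf = pw if i & 1 else digest
        if i % 3:
            buf += salt_b
        if i % 7:
            buf += pw
        buf += digest if i & 1 else pw
        digest = hashlib.md5(buf).digest()

    groups = [(0, 6, 12), (1, 7, 13), (2, 8, 14), (3, 9, 15), (4, 10, 5)]
    encoded = "".join(_to64((digest[a] << 16) | (digest[b] << 8) | digest[c], 4) for a, b, c in groups)
    encoded += _to64(digest[11], 2)
    return f"$apr1${salt_b.decode()}${encoded}"


def verify(htpasswd_line: str, password: str) -> bool:
    """Check a 'user:$apr1$salt$hash' line against a candidate password."""
    _user, stored = htpasswd_line.split(":", 1)
    salt = stored.split("$")[2]
    return apr1(password, salt) == stored
```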

[root@server2 ingress]# kubectl create secret generic basic-auth --from-file=auth   # load the auth file into a Secret

secret/basic-auth created

[root@server2 ingress]# kubectl get secrets

NAME TYPE DATA AGE

basic-auth Opaque 1 3m22s   # the user credentials have been added

default-token-cvr9d kubernetes.io/service-account-token 3 9d

tls-secret kubernetes.io/tls 2 76m

[root@server2 ~]# vim ingress.yaml   # edit ingress.yaml and add the annotations that enable authentication

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
  annotations:               # enable basic authentication
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - wxh'   # message shown in the login prompt
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret
  ingressClassName: nginx
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myservice
            port:
              number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-www2
spec:
  ingressClassName: nginx
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

[root@server2 ~]# kubectl apply -f ingress.yaml   # applied successfully

ingress.networking.k8s.io/ingress-demo configured

ingress.networking.k8s.io/ingress-www2 unchanged

Visit www1.westos.org/hostname.html: the browser now asks for the wxh user's credentials before showing the page.
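With `auth-type: basic`, nginx answers an unauthenticated request with 401 and a `WWW-Authenticate` header that carries the realm. The client then resends the request with an `Authorization: Basic ...` header. Below is a short Python sketch of that header; the password `secret` is hypothetical. The credentials are only base64-encoded, not encrypted, which is why it matters that this host also has TLS enabled.

```python
import base64


def basic_auth_header(user: str, password: str) -> dict:
    """HTTP Basic credentials: base64("user:password"), an encoding and not encryption."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return {"Authorization": f"Basic {token}"}


if __name__ == "__main__":
    # Hypothetical password; roughly what `curl -k -u wxh:secret https://www1.westos.org/hostname.html` sends.
    print(basic_auth_header("wxh", "secret"))
```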

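Ingress rewrite

Example 1:

Ingress redirect documentation

By default, HTTP on port 80 is redirected to 443 for hosts with TLS enabled.

Goal: requests for www2.westos.org are redirected straight to www2.westos.org/hostname.html.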

[root@server2 ~]# vim ingress.yaml   # edit the file and add the redirect

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - wxh'
spec:
  tls:
  - hosts:
    - www1.westos.org
    secretName: tls-secret
  ingressClassName: nginx
  rules:
  - host: www1.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: myservice
            port:
              number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-www2
  annotations:
    nginx.ingress.kubernetes.io/app-root: /hostname.html   # redirect requests for / to this page
spec:
  ingressClassName: nginx
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

[root@server2 ~]# kubectl apply -f ingress.yaml   # applied successfully

ingress.networking.k8s.io/ingress-demo unchanged

ingress.networking.k8s.io/ingress-www2 configured
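`app-root` affects only requests for `/`. The controller redirects them to the configured path (we believe with a 302), and every other path is proxied unchanged, so `www2.westos.org/` lands on `/hostname.html`. Below is a minimal Python model; the function name is hypothetical.

```python
def app_root_redirect(path: str, app_root: str = "/hostname.html"):
    """Only the bare '/' is redirected; every other path is proxied untouched (None)."""
    if path == "/":
        return 302, app_root   # assumed status code for the app-root redirect
    return None


if __name__ == "__main__":
    print(app_root_redirect("/"), app_root_redirect("/hostname.html"))
```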

[root@server2 ~]# vim ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-demo

annotations:

nginx.ingress.kubernetes.io/auth-type: basic

nginx.ingress.kubernetes.io/auth-secret: basic-auth

nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - wxh'

spec:

tls:

- hosts:

- www1.westos.org

secretName: tls-secret

ingressClassName: nginx

rules:

- host: www1.westos.org

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: myservice

port:

number: 80

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-www2

annotations:

#nginx.ingress.kubernetes.io/app-root: /hostname.html

nginx.ingress.kubernetes.io/rewrite-target: /$2 $2变量就表示.*

spec:

ingressClassName: nginx

rules:

- host: www2.westos.org

http:

paths:

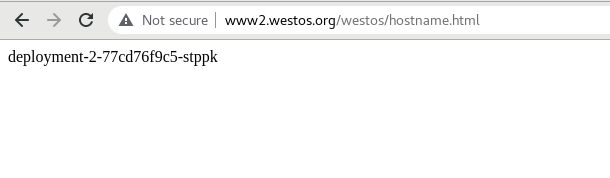

- path: /westos(/|$)(.*) /|$表示/或者直接结尾 , .* 表示后面跟的网站url ,westos关键字是自己指定的

pathType: Prefix

backend:

service:

name: nginx-svc

port:

number: 80

# Note: without the Ingress below, www2.westos.org itself would no longer be reachable
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-www3         # the name must not repeat an existing one
  annotations:
    #nginx.ingress.kubernetes.io/app-root: /hostname.html
    # No rewrite-target here: path / has no capture groups, so /$2 would rewrite every request to /
    #nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
  - host: www2.westos.org
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

[root@server2 ~]# kubectl apply -f ingress.yaml   # applied successfully

ingress.networking.k8s.io/ingress-demo unchanged

ingress.networking.k8s.io/ingress-www2 configured

ingress.networking.k8s.io/ingress-www3 configured

Test:

Normal access: www2.westos.org

With the keyword: www2.westos.org/westos/ (the trailing / is optional)

With the keyword followed by a page or URL: http://www2.westos.org/westos/hostname.html is reachable
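The rewrite can be checked offline. The path regex captures `/` or end-of-string as `$1` and the rest of the URL as `$2`, and `rewrite-target: /$2` keeps only `$2`. Below is a Python sketch using `re`. It assumes, as ingress-nginx does, a case-insensitive match anchored at the start of the URI.

```python
import re

# The path from ingress-www2; ingress-nginx treats it as a case-insensitive regex.
WESTOS_PATH = re.compile(r"^/westos(/|$)(.*)", re.IGNORECASE)


def rewrite(uri: str):
    """Apply rewrite-target '/$2'; return None when the path does not match."""
    match = WESTOS_PATH.match(uri)
    if match is None:
        return None
    return "/" + match.group(2)


if __name__ == "__main__":
    for uri in ["/westos", "/westos/", "/westos/hostname.html", "/westosx"]:
        print(uri, "->", rewrite(uri))
```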

Note: an Ingress backend is always a Service, and that Service in turn selects the backend pods.