Autoware感知瞎学笔记(一)lidar_kf_contour_track

Autoware感知瞎学笔记(一)lidar_kf_contour_track

- 目录

-

- 代码分析:

- 一、雷达目标Kalman滤波器

-

- 1. lidar_kf_contour_track.cpp

- 2. lidar_kf_contour_tracker_core.h

- 3. lidar_kf_contour_tracker_core.cpp

-

- 3.1 构造函数ContourTracker()

- 3.2 析构函数 ~ContourTracker()

- 3.3 点云聚类回调函数

-

- 3.3.1 ImportCloudClusters函数

- 3.3.2 DoOneStep函数

- 3.3.3 LogAndSend函数

- 3.3.3 VisualizeLocalTracking函数

- 3.3.4 CalculateTTC函数

- 二、SimpleTracker分析

-

- 1.构造函数:

- 2.初始化方法:

- 3. DoOneStep方法

- 三、KF滤波器(ToDo)

- 总结

目录

想要学习L4的无人驾驶,入门教科书autoware堪称经典,这里分享个人的学习笔记以及个人理解(如果错了,请轻点指正哈)

代码分析:

一、雷达目标Kalman滤波器

三维激光雷达数据从传感器出来后,经过传统的聚类+高精地图object filter,或者learning-based的方法之后,得到一个目标列表,此目标列表含有二维位置信息和角度信息,没有速度信息;同时基于每一帧的检测方法,可能会出现“目标消失”的问题,因此需要滤波器来track住这些目标,下面看Autoware是怎么处理这个问题的。

代码位置:autoware/core_perception/lidar_kf_contour_track

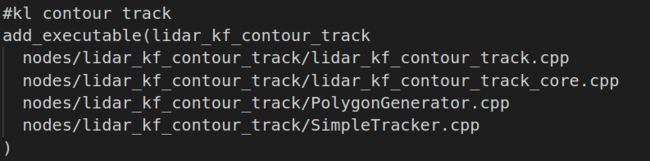

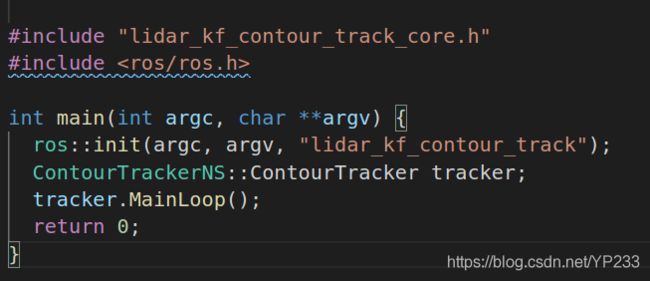

1. lidar_kf_contour_track.cpp

拿到ros的package,直接打开CMakeList文件

可以看到构成lidar_kf_contour_track这个节点的文件,有四个。我们直奔主题打开每个cpp,可以看到lidar_kf_contour_track.cpp是这个节点的入口,在main函数内实例化在ContourTrackerNS命名空间内的一个类ContourTracker,调用MainLoop() 方法。因此打开定义ContourTracker类的头文件:lidar_kf_contour_tracker_core.h

2. lidar_kf_contour_tracker_core.h

我们在这里首先分析这个入口类ContourTracker的头文件,并且从类内变量逐行分析

1. std::vector< PlannerHNS::DetectedObject > m_OriginalClusters;

打开DetectedObject这一类型定义:该文件存放在common/op_planner/RoadNetwork.h中

里面存放这描述检测目标的一些基本属性,标签,类别,尺寸,速度,角度,等等,,。同时在构造函数的时候,初始化这些变量

2.autoware_msgs::DetectedObjectArray m_OutPutResults;

记录发布数据的ros消息,消息格式如下:

std_msgs/Header header

DetectedObject[] objects

DetectedObject消息格式:

可以看到是将3D BoundingBox和目标在2D图像中的大小都汇总在此消息数据里,便于目标消息的复用。

std_msgs/Header header

uint32 id

string label

float32 score #Score as defined by the detection, Optional

std_msgs/ColorRGBA color # Define this object specific color

bool valid # Defines if this object is valid, or invalid as defined by the filtering

################ 3D BB

string space_frame #3D Space coordinate frame of the object, required if pose and dimensions are defines

geometry_msgs/Pose pose

geometry_msgs/Vector3 dimensions

geometry_msgs/Vector3 variance

geometry_msgs/Twist velocity

geometry_msgs/Twist acceleration

sensor_msgs/PointCloud2 pointcloud

geometry_msgs/PolygonStamped convex_hull

autoware_msgs/LaneArray candidate_trajectories

bool pose_reliable

bool velocity_reliable

bool acceleration_reliable

############### 2D Rect

string image_frame # Image coordinate Frame, Required if x,y,w,h defined

int32 x # X coord in image space(pixel) of the initial point of the Rect

int32 y # Y coord in image space(pixel) of the initial point of the Rect

int32 width # Width of the Rect in pixels

int32 height # Height of the Rect in pixels

float32 angle # Angle [0 to 2*PI), allow rotated rects

sensor_msgs/Image roi_image

############### Indicator information

uint8 indicator_state # INDICATOR_LEFT = 0, INDICATOR_RIGHT = 1, INDICATOR_BOTH = 2, INDICATOR_NONE = 3

############### Behavior State of the Detected Object

uint8 behavior_state # FORWARD_STATE = 0, STOPPING_STATE = 1, BRANCH_LEFT_STATE = 2, BRANCH_RIGHT_STATE = 3, YIELDING_STATE = 4, ACCELERATING_STATE = 5, SLOWDOWN_STATE = 6

#

string[] user_defined_info

3.bool bNewClusters;

bool类型变量, 表达是否是新的cluster

4.PlannerHNS::WayPoint m_CurrentPos; 使用WayPoint类型来表示当前的位姿

其中waypoint记录了当前所属的lane ID,id,Left ID, Right ID,前面的和后面的waypoints。这里因为作者还没有阅读整个autoware的代码,所以在这大胆猜测一下,这里记录的是当前lane内,在此waypoint的前面和后面所有的waypoints,和carla里面导航的方式是一样的。同时记录了关于occupancy grid map 的参数。关于其他的参数含义,我们在后续的文章中再去解读。

5.bool bNewCurrentPos;

5.bool bNewCurrentPos;

这里表示的应该是是否收到了新的位姿信息,表示位置更新

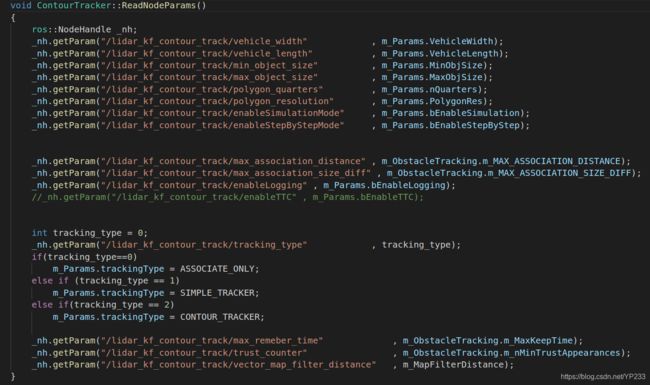

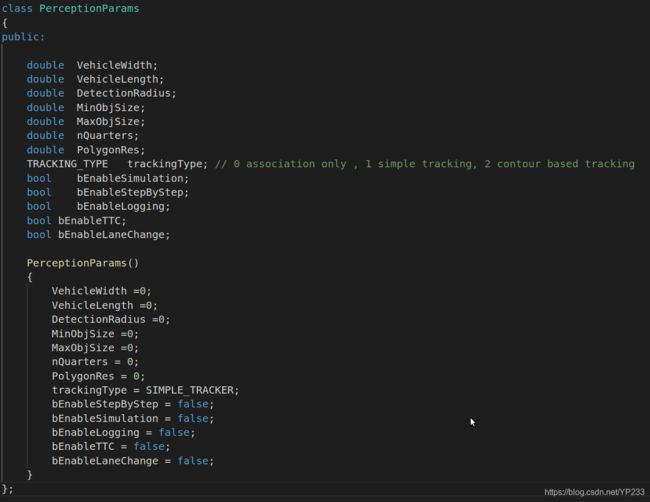

6.PerceptionParams m_Params;

看到变量的名字感觉是感知相关的参数,但是看内容其实这里的参数感觉是滤波相关的参数,车辆的size,检测半径,最大的objectsize,最小的objectsize,目标boundingbox相关参数,其他的应该是滤波器的相关设置

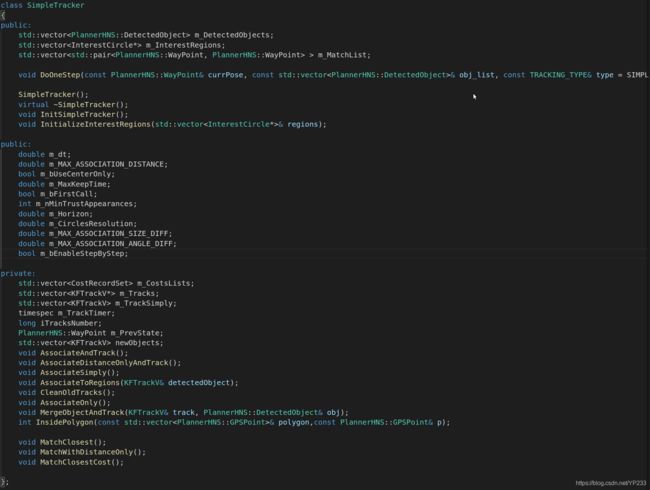

7.SimpleTracker m_ObstacleTracking;

7.SimpleTracker m_ObstacleTracking;

这里便是SimpleTracker实际的调用了,这个类其实是将kF又封装了一层,用于管理检测到的对象。关于SimpleTracker我们在第二节分析。

8.这部分的成员变量是用来表示可视化的

8.这部分的成员变量是用来表示可视化的

9. std::vector < std::string > m_LogData; 记录log数据

10.PlannerHNS::MAP_SOURCE_TYPE m_MapType; 地图的类型

11.std::string m_MapPath;存放地图的路径

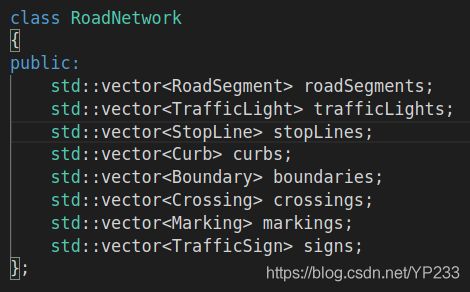

12.PlannerHNS::RoadNetwork m_Map;

这里存放的是便是autoware的高精地图,vector_map,我们打开RoadNetWork的类定义看下:

可以看到autoware使用vector来存放同一类型的道路元素,关于底层基类比如RoadSegament,TrafficLight,StopLine的分析,会在后续的文章中讲解。同时我们也会去学习autoware中的高精地图是怎样做的,它是怎么提供我们所需要的信息的。

13.bool bMap;

14.double m_MapFilterDistance;

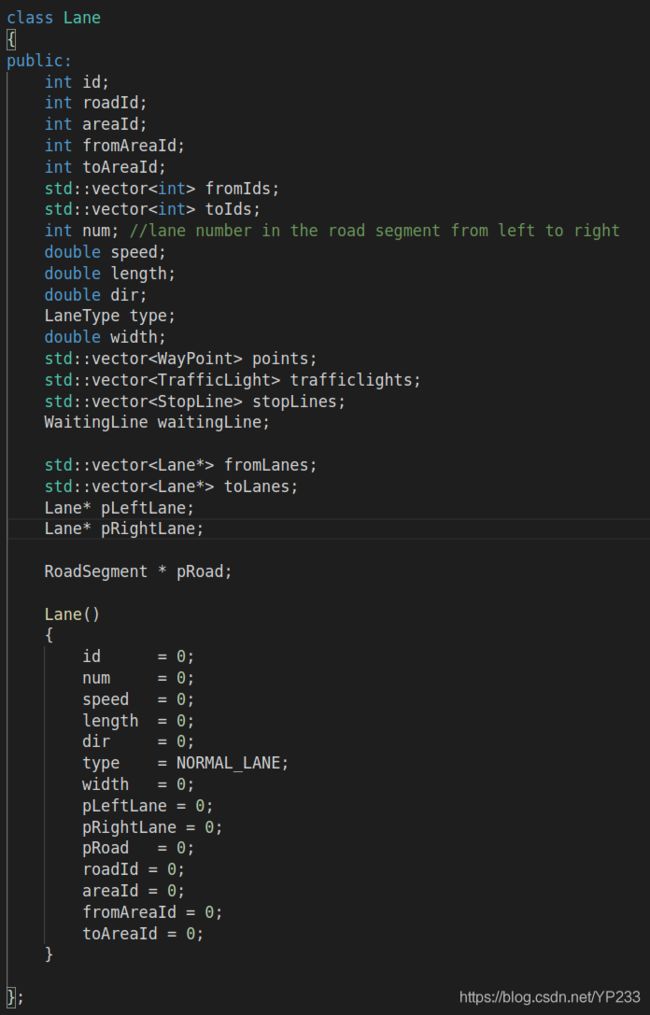

15.std::vector < PlannerHNS::Lane*> m_ClosestLanesList;

在这里我们打开PlannerHNS::Lane这个数据类型:

这里分别有如下属性:

id,roadId当前lane所在road的id,areaId,fromAreaId,toAreaId,

fromIds表示当前lane的父lane Id,

toIds表示当前lane的子lane Id,

num表示一个road内有多少条lane

speed,dir,type,

points这个车道内所有的路点,trafficlights 交通灯,stoplines停止线,

fromLanes, toLanes 则是刚刚ID对应的道路集合

pLeftLane, pRightLane 这是左右两边的道路

pRoad则是当前lane所在的road

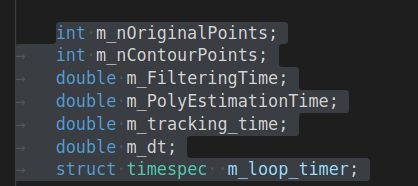

16.这里是类内关于kf滤波器的一些参数了

17. ros层变量以及回调函数声明

18.最后公有变量里面都是关于map的,通过ros topic的方式接收地图各种元素消息。

18.最后公有变量里面都是关于map的,通过ros topic的方式接收地图各种元素消息。

3. lidar_kf_contour_tracker_core.cpp

3.1 构造函数ContourTracker()

-

ReadCommonParams():这里bEnableLaneChange参数需要记住,还有上面的m_MapFilterDistance 参数,下面要考。

-

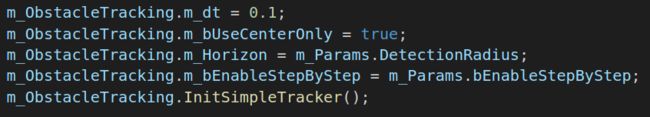

设置并且初始化kf滤波器

最后的初始化部分,会在第二节SimpleTracker部分分析,根据函数名字可以猜到,这是初始化滤波器。 -

订阅和发布topic

这里让人注意的应该是发布ttc_direct_path这个topic,单学习过感知但是没有无人驾驶知识的,应该不知道ttc这个知识点,ttc的全称是time to crash,就是剩余到达要碰撞的时间。因此读到这里,感觉下面是要计算出一个到达前面目标的路径,然后可视化出来。

这里让人注意的应该是发布ttc_direct_path这个topic,单学习过感知但是没有无人驾驶知识的,应该不知道ttc这个知识点,ttc的全称是time to crash,就是剩余到达要碰撞的时间。因此读到这里,感觉下面是要计算出一个到达前面目标的路径,然后可视化出来。 -

下面便是关于可视化的一些操作

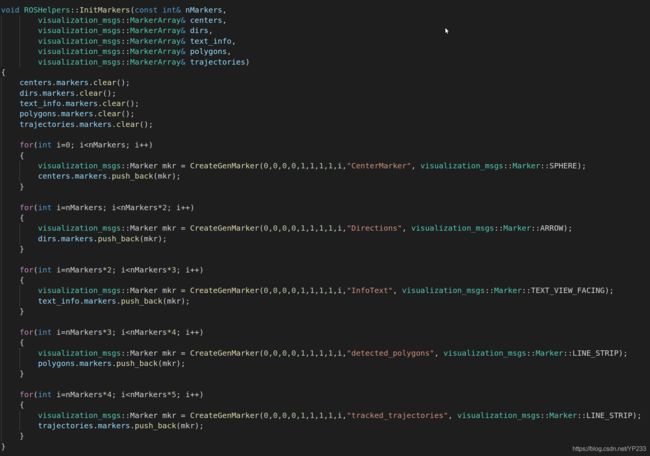

打开InitMarkers()函数:

打开InitMarkers()函数:

其实这里就是通过调用CreateGenMarker的方法生成五种类型的显示Marker,分别用来显示目标的中心,方向,文本标记,外围的polygon,还有track出来的轨迹。打开CreateGenMarker方法:

其实这里就是通过调用CreateGenMarker的方法生成五种类型的显示Marker,分别用来显示目标的中心,方向,文本标记,外围的polygon,还有track出来的轨迹。打开CreateGenMarker方法:

这里传入相应marker的属性标签,返回对应的marker。

这里传入相应marker的属性标签,返回对应的marker。

因此我们可以得知,类内全局变量m_DetectedPolygonsDummy 和 m_DetectedPolygonsActual 存放的是五种关于目标属性的Marker。

最后调用InitMatchingMarkers() 方法:

其实这里也是换汤不换药,调用CreateMarker方法生成用于显示匹配关系的Marker,然后类型是LINE_STRIP

其实这里也是换汤不换药,调用CreateMarker方法生成用于显示匹配关系的Marker,然后类型是LINE_STRIP

3.2 析构函数 ~ContourTracker()

在析构的时候,我们需要在某个位置写入log数据,记录跟踪对象的详细数据

在析构的时候,我们需要在某个位置写入log数据,记录跟踪对象的详细数据

3.3 点云聚类回调函数

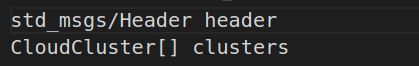

- 首先看下消息格式类型:autoware_msgs::CloudClusterArrayConstPtr, 里面存放的是标准的消息头,还有CloudCluster类型的数组

打开CloudCluster消息类型,查看里面的格式:

分别是标准消息头,id,标签,得分,点云消息,估计角度,点云的最小值,最大值,平均值,中心值, 三个尺度量(这里暂时不清楚存放的是什么变量),点云fpfh描述子,boundingbox,描述凸边界的convex_hull,目标转向信息。

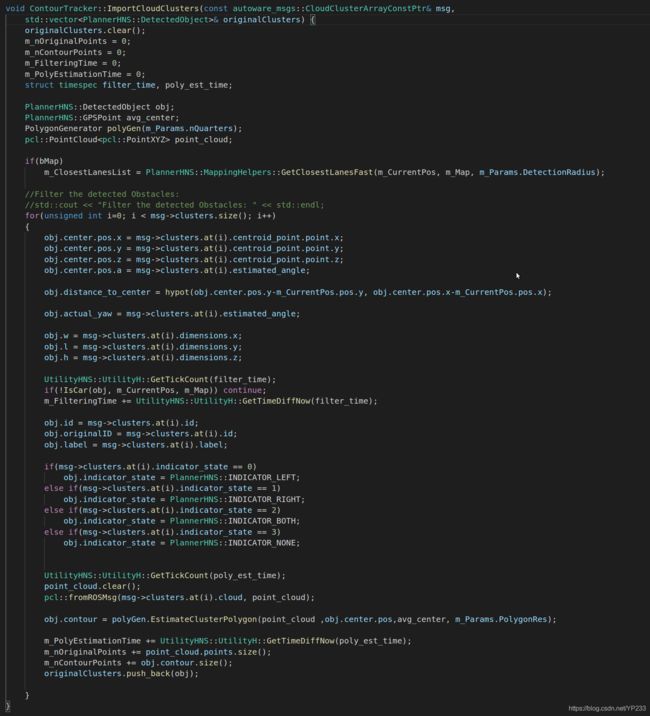

3.3.1 ImportCloudClusters函数

- 如果有定位数据 || 使用仿真的方式

调用ImportCloudClusters(msg, m_OtiginalClusters) 方法, 将聚类的数据转到类内全局变量m_OriginalClusters中

先是初始化一些变量,然后是实例化一个PolygonGenrator类,打开PolygonGenrator类定义:

class PolygonGenerator

{

public:

PlannerHNS::GPSPoint m_Centroid;

std::vector<QuarterView> m_Quarters;

std::vector<PlannerHNS::GPSPoint> m_Polygon;

PolygonGenerator(int nQuarters);

virtual ~PolygonGenerator();

std::vector<QuarterView> CreateQuarterViews(const int& nResolution);

std::vector<PlannerHNS::GPSPoint> EstimateClusterPolygon(const pcl::PointCloud<pcl::PointXYZ>& cluster,

const PlannerHNS::GPSPoint& original_centroid, PlannerHNS::GPSPoint& new_centroid, const double& polygon_resolution = 1.0);

};

打开类定义CPP文件PolygonGenerator.cpp,首先看到构造函数部分:

std::vector<QuarterView> PolygonGenerator::CreateQuarterViews(const int& nResolution)

{

std::vector<QuarterView> quarters;

if(nResolution <= 0)

return quarters;

double range = 360.0 / nResolution;

double angle = 0;

for(int i = 0; i < nResolution; i++)

{

QuarterView q(angle, angle+range, i);

quarters.push_back(q);

angle+=range;

}

return quarters;

}

这里面构造函数返回值是:std::vector< QuaterView >,我们就先看下QuarterView类定义:

- 构造函数和Init方法都可以传入参数

- Reset方法重置标志位

- UpdateQuarterView()方法,如果传入的路点的角度超出角度范围限制,则不更新。如果bFirst为真,即第一次更新数据,则直接将点赋值给类内全局变量max_from_center, 如果更新过了那么只有传入点的cost大于类内max_from_center的cost,才覆盖max_from_center。

- GetMaxPoint() 方法,如果没有更新过UpdateQuarterView方法,则返回false,即拿不到最大点。否则取得类内全局变量max_from_center。

这里再回到CreateQuarterViews方法,先定义一个QuarterView类型的vector,如果传入nResolution小于0,则直接返回。将360度分成nResolution等份,实例化nResolution个QuarterView,每个QuarterView平分360度,并存入到quarters,返回。

std::vector<QuarterView> PolygonGenerator::CreateQuarterViews(const int& nResolution)

{

std::vector<QuarterView> quarters;

if(nResolution <= 0)

return quarters;

double range = 360.0 / nResolution;

double angle = 0;

for(int i = 0; i < nResolution; i++)

{

QuarterView q(angle, angle+range, i);

quarters.push_back(q);

angle+=range;

}

return quarters;

}

继续打开PolygonGenerator.cpp分析,下面是主要的一个函数EstimateClusterPolygon:

std::vector<PlannerHNS::GPSPoint> PolygonGenerator::EstimateClusterPolygon(const pcl::PointCloud<pcl::PointXYZ>& cluster, const PlannerHNS::GPSPoint& original_centroid, PlannerHNS::GPSPoint& new_centroid, const double& polygon_resolution)

{

for(unsigned int i=0; i < m_Quarters.size(); i++)

m_Quarters.at(i).ResetQuarterView();

PlannerHNS::WayPoint p;

for(unsigned int i=0; i< cluster.points.size(); i++)

{

p.pos.x = cluster.points.at(i).x;

p.pos.y = cluster.points.at(i).y;

p.pos.z = original_centroid.z;

PlannerHNS::GPSPoint v(p.pos.x - original_centroid.x , p.pos.y - original_centroid.y, 0, 0);

p.cost = pointNorm(v);

p.pos.a = UtilityHNS::UtilityH::FixNegativeAngle(atan2(v.y, v.x))*(180. / M_PI);

for(unsigned int j = 0 ; j < m_Quarters.size(); j++)

{

if(m_Quarters.at(j).UpdateQuarterView(p))

break;

}

}

m_Polygon.clear();

PlannerHNS::WayPoint wp;

for(unsigned int j = 0 ; j < m_Quarters.size(); j++)

{

if(m_Quarters.at(j).GetMaxPoint(wp))

m_Polygon.push_back(wp.pos);

}

// //Fix Resolution:

bool bChange = true;

while (bChange && m_Polygon.size()>1)

{

bChange = false;

PlannerHNS::GPSPoint p1 = m_Polygon.at(m_Polygon.size()-1);

for(unsigned int i=0; i< m_Polygon.size(); i++)

{

PlannerHNS::GPSPoint p2 = m_Polygon.at(i);

double d = hypot(p2.y- p1.y, p2.x - p1.x);

if(d > polygon_resolution)

{

PlannerHNS::GPSPoint center_p = p1;

center_p.x = (p2.x + p1.x)/2.0;

center_p.y = (p2.y + p1.y)/2.0;

m_Polygon.insert(m_Polygon.begin()+i, center_p);

bChange = true;

break;

}

p1 = p2;

}

}

PlannerHNS::GPSPoint sum_p;

for(unsigned int i = 0 ; i< m_Polygon.size(); i++)

{

sum_p.x += m_Polygon.at(i).x;

sum_p.y += m_Polygon.at(i).y;

}

new_centroid = original_centroid;

if(m_Polygon.size() > 0)

{

new_centroid.x = sum_p.x / (double)m_Polygon.size();

new_centroid.y = sum_p.y / (double)m_Polygon.size();

}

return m_Polygon;

}

- 遍历m_Quaters,重置QuarterView

- 遍历聚类之后一簇点云中的所有点,算出每一个点到中心点的距离和相对中心的角度,更新到m_Quarters数据中

- 遍历m_Quaters,得到每一个Quater的最大点,即最外面的边界点,并且传入到m_polygon中

- 当m_polygon中有数据:

- bchange循环开关变量置为false,取出m_polygon中最后一个点

- 遍历m_polygon

- 计算每一个点和最后一个点的距离

- 如果这个距离大于一定的阈值:在m_Polygon中插入一个中间点,退出for循环,继续while循环,直到不满足两点之间的距离大于某个阈值

- 重新计算新的m_polygon所有点的中心值

以上便是PolygonGenrator类的方法,我们重新回到主线程ImportCloudClusters方法中:

- 实例化一个m_Params.nQuaters数量的Polygen

- 使用GetClosestLaneFast方法来快速获取附近的所有车道

- 遍历所有的聚类点云

- 计算出这些点的一些属性

- 通过IsCar方法来判断是否是车辆

- 更新时间

- 调用上述的EsitimateClusterPolygon方法来重新插值计算新的聚类中心

- 继续更新时间,将obj传入到originalCluster中,返回出去

3.3.2 DoOneStep函数

此部分是kf滤波中的方法,会在第二节分析

3.3.3 LogAndSend函数

void ContourTracker::LogAndSend()

{

timespec log_t;

UtilityHNS::UtilityH::GetTickCount(log_t);

std::ostringstream dataLine;

std::ostringstream dataLineToOut;

dataLine << UtilityHNS::UtilityH::GetLongTime(log_t) <<"," << m_dt << "," <<

m_ObstacleTracking.m_DetectedObjects.size() << "," <<

m_OriginalClusters.size() << "," <<

m_ObstacleTracking.m_DetectedObjects.size() - m_OriginalClusters.size() << "," <<

m_nOriginalPoints << "," <<

m_nContourPoints<< "," <<

m_FilteringTime<< "," <<

m_PolyEstimationTime<< "," <<

m_tracking_time<< "," <<

m_tracking_time+m_FilteringTime+m_PolyEstimationTime<< ",";

m_LogData.push_back(dataLine.str());

m_OutPutResults.objects.clear();

autoware_msgs::DetectedObject obj;

for(unsigned int i = 0 ; i <m_ObstacleTracking.m_DetectedObjects.size(); i++)

{

PlannerHNS::ROSHelpers::ConvertFromOpenPlannerDetectedObjectToAutowareDetectedObject(m_ObstacleTracking.m_DetectedObjects.at(i), m_Params.bEnableSimulation, obj);

m_OutPutResults.objects.push_back(obj);

}

m_OutPutResults.header.frame_id = "map";

m_OutPutResults.header.stamp = ros::Time();

pub_AllTrackedObjects.publish(m_OutPutResults);

}

这里面就是简单的记录log数据,然后调用ConvertFromOpenPlannerDetectedObjectToAutowareDetectedObject方法将检测到的目标转换到autoware_msgs::DetectedObject 类型变量,压入到m_OutPutResults变量中,然后通过ros发布出去。

3.3.3 VisualizeLocalTracking函数

void ContourTracker::VisualizeLocalTracking()

{

PlannerHNS::ROSHelpers::ConvertTrackedObjectsMarkers(m_CurrentPos, m_ObstacleTracking.m_DetectedObjects,

m_DetectedPolygonsDummy.at(0),

m_DetectedPolygonsDummy.at(1),

m_DetectedPolygonsDummy.at(2),

m_DetectedPolygonsDummy.at(3),

m_DetectedPolygonsDummy.at(4),

m_DetectedPolygonsActual.at(0),

m_DetectedPolygonsActual.at(1),

m_DetectedPolygonsActual.at(2),

m_DetectedPolygonsActual.at(3),

m_DetectedPolygonsActual.at(4));

PlannerHNS::ROSHelpers::ConvertMatchingMarkers(m_ObstacleTracking.m_MatchList, m_MatchingInfoDummy.at(0), m_MatchingInfoActual.at(0), 0);

m_DetectedPolygonsAllMarkers.markers.clear();

m_DetectedPolygonsAllMarkers.markers.insert(m_DetectedPolygonsAllMarkers.markers.end(), m_DetectedPolygonsActual.at(0).markers.begin(), m_DetectedPolygonsActual.at(0).markers.end());

m_DetectedPolygonsAllMarkers.markers.insert(m_DetectedPolygonsAllMarkers.markers.end(), m_DetectedPolygonsActual.at(1).markers.begin(), m_DetectedPolygonsActual.at(1).markers.end());

m_DetectedPolygonsAllMarkers.markers.insert(m_DetectedPolygonsAllMarkers.markers.end(), m_DetectedPolygonsActual.at(2).markers.begin(), m_DetectedPolygonsActual.at(2).markers.end());

m_DetectedPolygonsAllMarkers.markers.insert(m_DetectedPolygonsAllMarkers.markers.end(), m_DetectedPolygonsActual.at(3).markers.begin(), m_DetectedPolygonsActual.at(3).markers.end());

m_DetectedPolygonsAllMarkers.markers.insert(m_DetectedPolygonsAllMarkers.markers.end(), m_DetectedPolygonsActual.at(4).markers.begin(), m_DetectedPolygonsActual.at(4).markers.end());

visualization_msgs::MarkerArray all_circles;

for(unsigned int i = 0; i < m_ObstacleTracking.m_InterestRegions.size(); i++)

{

visualization_msgs::Marker circle_mkrs;

PlannerHNS::ROSHelpers::CreateCircleMarker(m_CurrentPos, m_ObstacleTracking.m_InterestRegions.at(i)->radius, i ,circle_mkrs );

all_circles.markers.push_back(circle_mkrs);

}

m_DetectedPolygonsAllMarkers.markers.insert(m_DetectedPolygonsAllMarkers.markers.end(), all_circles.markers.begin(), all_circles.markers.end());

m_DetectedPolygonsAllMarkers.markers.insert(m_DetectedPolygonsAllMarkers.markers.end(), m_MatchingInfoActual.at(0).markers.begin(), m_MatchingInfoActual.at(0).markers.end());

pub_DetectedPolygonsRviz.publish(m_DetectedPolygonsAllMarkers);

jsk_recognition_msgs::BoundingBoxArray boxes_array;

boxes_array.header.frame_id = "map";

boxes_array.header.stamp = ros::Time();

for(unsigned int i = 0 ; i < m_ObstacleTracking.m_DetectedObjects.size(); i++)

{

jsk_recognition_msgs::BoundingBox box;

box.header.frame_id = "map";

box.header.stamp = ros::Time().now();

box.pose.position.x = m_ObstacleTracking.m_DetectedObjects.at(i).center.pos.x;

box.pose.position.y = m_ObstacleTracking.m_DetectedObjects.at(i).center.pos.y;

box.pose.position.z = m_ObstacleTracking.m_DetectedObjects.at(i).center.pos.z;

box.value = 0.9;

box.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(0, 0, m_ObstacleTracking.m_DetectedObjects.at(i).center.pos.a);

box.dimensions.x = m_ObstacleTracking.m_DetectedObjects.at(i).l;

box.dimensions.y = m_ObstacleTracking.m_DetectedObjects.at(i).w;

box.dimensions.z = m_ObstacleTracking.m_DetectedObjects.at(i).h;

boxes_array.boxes.push_back(box);

}

pub_TrackedObstaclesRviz.publish(boxes_array);

}

这里是可视化的方法,将数据传入到对应的变量里,通过ros发布出去

3.3.4 CalculateTTC函数

void ContourTracker::CalculateTTC(const std::vector<PlannerHNS::DetectedObject>& objs, const PlannerHNS::WayPoint& currState, PlannerHNS::RoadNetwork& map)

{

std::vector<std::vector<PlannerHNS::WayPoint> > paths;

GetFrontTrajectories(m_ClosestLanesList, currState, m_Params.DetectionRadius, paths);

double min_d = DBL_MAX;

int closest_obj_id = -1;

int closest_path_id = -1;

int i_start = -1;

int i_end = -1;

for(unsigned int i_obj = 0; i_obj < objs.size(); i_obj++)

{

for(unsigned int i =0 ; i < paths.size(); i++)

{

PlannerHNS::RelativeInfo obj_info, car_info;

PlannerHNS::PlanningHelpers::GetRelativeInfoLimited(paths.at(i), objs.at(i_obj).center , obj_info);

if(!obj_info.bAfter && !obj_info.bBefore && fabs(obj_info.perp_distance) < m_MapFilterDistance)

{

PlannerHNS::PlanningHelpers::GetRelativeInfoLimited(paths.at(i), currState , car_info);

double longitudinalDist = PlannerHNS::PlanningHelpers::GetExactDistanceOnTrajectory(paths.at(i), car_info, obj_info);

if(longitudinalDist < min_d)

{

min_d = longitudinalDist;

closest_obj_id = i_obj;

closest_path_id = i;

i_start = car_info.iFront;

i_end = obj_info.iBack;

}

}

}

}

std::vector<PlannerHNS::WayPoint> direct_paths;

if(closest_path_id >= 0 && closest_obj_id >= 0)

{

for(unsigned int i=i_start; i<=i_end; i++)

{

direct_paths.push_back(paths.at(closest_path_id).at(i));

}

}

//Visualize Direct Path

m_TTC_Path.markers.clear();

if(direct_paths.size() == 0)

direct_paths.push_back(currState);

PlannerHNS::ROSHelpers::TTC_PathRviz(direct_paths, m_TTC_Path);

pub_TTC_PathRviz.publish(m_TTC_Path);

//calculate TTC

if(direct_paths.size() > 2 && closest_obj_id >= 0)

{

double dd = min_d;

double dv = objs.at(closest_obj_id).center.v - currState.v;

if(fabs(dv) > 0.1)

{

double ttc = (dd - 4) / dv;

cout << "TTC: " << ttc << ", dv: << " << dv <<", dd:" << dd << endl;

}

else

cout << "TTC: Inf" << endl;

}

}

这里是计算TTC的函数,我们暂时先跳过此部分,等到后面作者有时间再叙述一下,这里面到底是如何操作的。

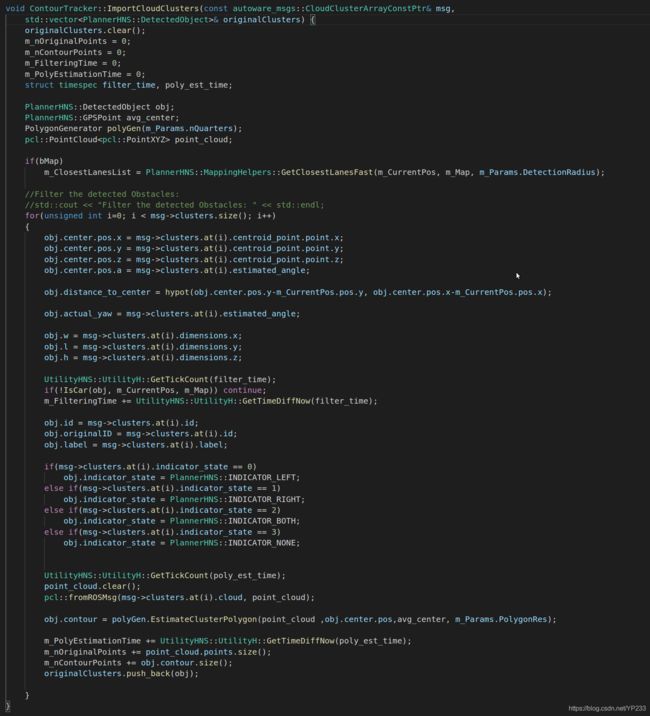

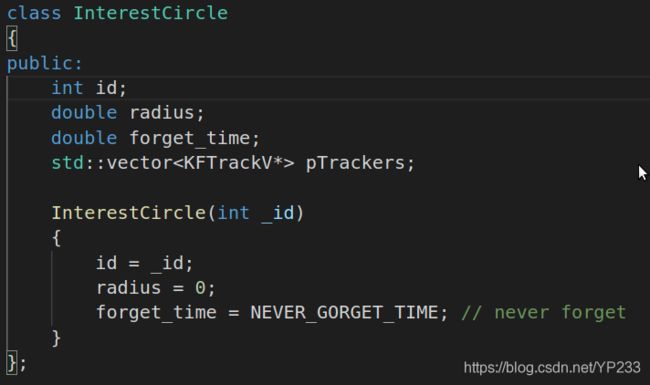

二、SimpleTracker分析

1.构造函数:

构造函数内初始化变量,可以看到:

m_MAX_ASSOCIATION_DISTANCE:最大关联距离,即前后两帧数据的同一个目标距离相差不能超过2m

m_MAX_ASSOCIATION_SIZE_DIFF:最大关联尺寸变化

m_MAX_ASSOCIATION_ANGLE_DIFF:最大关联角度变化

m_MaxKeepTime:最大保留时间

m_nMinTrustAppearances:最少出现次数

UtilityHNS::UtilityH::GetTickCount(m_TrackTimer);这个是封装的一个用来计时的方法:

void UtilityH::GetTickCount(struct timespec& t)

{

while(clock_gettime(0, & t) == -1);

}

timespec结构体存放了两种数据类型:秒 tv_sec 和纳秒 tv_nsec

然后clock_gettime 是time.h中的API,用于查询到当前的系统时刻,并且赋值给timespec

/* Get current value of clock CLOCK_ID and store it in TP. */

extern int clock_gettime (clockid_t __clock_id, struct timespec *__tp) __THROW;

因此此方法就是获得当前时间,并且存放在类内成员方法中m_TrackTimer。

2.初始化方法:

在初始化的时候,又一次更新了m_TrackTimer的时间,同时初始化目标区域。

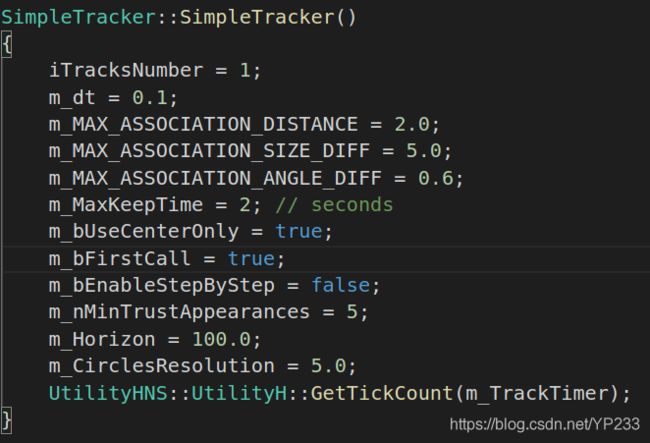

m_InterestRegions是一个存放InterestCircle类型vector的类内公有成员变量。

std::vector

其中这个类型里面记录了id,区域半径,还有遗忘时间,加上KFTrackV类型的vector。

这个KFTrackV类型便是最底层的kf实现,这里面是调用的CV::KalmanFilter接口,这个会在第三节做介绍

继续InitializeInterestRegions() 方法:

1.m_CirclesResolution 初始值为5, m_MaxKeepTime为2

1.m_CirclesResolution 初始值为5, m_MaxKeepTime为2

2.进入while循环,可以看到退出循环的唯一条件为:next_raduis半径大于初始值m_Horizon:100

3.初始regions的数量为0,因此会new一个InterestCircle类型的指针,id为1,半径为0。

4.进入if判断,新生成的指针半径即为5, forget_time为2,压入到vector里面。

5.继续函数进程,next_raduis半径增加为1.8倍,但是不能大于m_Horizon:100

6.同时遗忘时间减小为0.75倍,但是不能小于0.1s

因此,此方法会生成

{id, radius, forget_time}

{1, 5, 2}

{2, 9,1.5}

{3, 16.2, 1.125}

{4, 29.16, 0.84375}

{5, 52.488, 0.5339}

{6, 94.4784, 0.4}

{7, 100, 0.3}

这七个interestregion区域

3. DoOneStep方法

这里为调用cv kf tracker的接口

这里为调用cv kf tracker的接口

1.!m_bEnableStepByStep, 这里launch文件中加载的是false,因此直接进入判断

2. m_bFirstCall为true,即第一次进入滤波器中,需要初始化滤波器,并将m_bFirstCall置为false

3. 更新m_TrackTimer计时器

4. 根据传入的type判断,是什么样的track方式,这里依次分析:

5. AssociateOnly();数据关联

第一步做前后帧的数据关联:

第一步做前后帧的数据关联:

void SimpleTracker::MatchWithDistanceOnly()

{

newObjects.clear();

m_MatchList.clear();

double d_y = 0, d_x = 0, d = 0;

//std::cout << std::endl << std::endl << std::endl;

while(m_DetectedObjects.size() > 0)

{

double iClosest_track = -1;

double iClosest_obj = -1;

double dClosest = m_MAX_ASSOCIATION_DISTANCE;

double size_diff = 0;

//std::cout << std::endl;

for(unsigned int jj = 0; jj < m_DetectedObjects.size(); jj++)

{

double object_size = sqrt(m_DetectedObjects.at(jj).w*m_DetectedObjects.at(jj).w + m_DetectedObjects.at(jj).l*m_DetectedObjects.at(jj).l + m_DetectedObjects.at(jj).h*m_DetectedObjects.at(jj).h);

for(unsigned int i = 0; i < m_TrackSimply.size(); i++)

{

double old_size = sqrt(m_TrackSimply.at(i).obj.w*m_TrackSimply.at(i).obj.w + m_TrackSimply.at(i).obj.l*m_TrackSimply.at(i).obj.l + m_TrackSimply.at(i).obj.h*m_TrackSimply.at(i).obj.h);

d_y = m_DetectedObjects.at(jj).center.pos.y-m_TrackSimply.at(i).obj.center.pos.y;

d_x = m_DetectedObjects.at(jj).center.pos.x-m_TrackSimply.at(i).obj.center.pos.x;

d = hypot(d_y, d_x);

if(d < dClosest)

{

dClosest = d;

iClosest_track = i;

iClosest_obj = jj;

size_diff = fabs(old_size - object_size);

}

}

}

if(iClosest_obj != -1 && iClosest_track != -1 && dClosest < m_MAX_ASSOCIATION_DISTANCE)

{

std::cout << "MatchObj: " << m_TrackSimply.at(iClosest_track).obj.id << ", MinD: " << dClosest << ", SizeDiff: (" << size_diff << ")" << ", ObjI" << iClosest_obj <<", TrackI: " << iClosest_track << std::endl;

m_MatchList.push_back(std::make_pair(m_TrackSimply.at(iClosest_track).obj.center, m_DetectedObjects.at(iClosest_obj).center));

m_DetectedObjects.at(iClosest_obj).id = m_TrackSimply.at(iClosest_track).obj.id;

MergeObjectAndTrack(m_TrackSimply.at(iClosest_track), m_DetectedObjects.at(iClosest_obj));

newObjects.push_back(m_TrackSimply.at(iClosest_track));

m_TrackSimply.erase(m_TrackSimply.begin()+iClosest_track);

m_DetectedObjects.erase(m_DetectedObjects.begin()+iClosest_obj);

}

else

{

iTracksNumber = iTracksNumber + 1;

m_DetectedObjects.at(0).id = iTracksNumber;

KFTrackV track(m_DetectedObjects.at(0).center.pos.x, m_DetectedObjects.at(0).center.pos.y,m_DetectedObjects.at(0).actual_yaw, m_DetectedObjects.at(0).id, m_dt, m_nMinTrustAppearances);

track.obj = m_DetectedObjects.at(0);

newObjects.push_back(track);

//std::cout << "NewObj: " << iTracksNumber << ", MinD: " << dCloseset << ", MinS: " << min_size << ", ObjI:" << 0 <<", TrackI: " << iCloseset_track << ", ContMatch: " << bFoundMatch << std::endl;

m_DetectedObjects.erase(m_DetectedObjects.begin()+0);

}

}

m_TrackSimply = newObjects;

}

将全局变量新目标newObjects清空,将匹配列表清空,dy,dx,d清空为0

当有检测到目标时:

声明四个局部变量iClosest_track, iClosest_obj, dClosest, size_diff, 并且初始化

遍历所有的检测到的目标:

- 计算目标的尺寸大小object size

- 第一步初始化的时候,m_TrackSimply数量为0, 因此跳过此循环

- if条件不满足,因此进入else部分:

- iTracksNumber = 2,将m_DetectedObjects第一个目标的id置为2,将第一个目标的参数属性传给KFTrackV对象, 并将track对象传到newobjects中,m_DetectedObjects对象删除第一个

- 因此一直遍历,直到m_DetectedObjects的数量为0时,退出循环,因此此方法就是将所有检测的目标赋给每一个滤波器,最后传入到m_TrackSimply中。

如果不是第一次进入此滤波器时,即m_TrackSimply记录了上一帧的检测目标时:

将全局变量新目标newObjects清空,将匹配列表清空,dy,dx,d清空为0

当前帧有目标时:

声明四个局部变量iClosest_track, iClosest_obj, dClosest, size_diff, 并且初始化

遍历所有当前帧的目标:

- 计算目标的尺寸大小object size

- 遍历上一帧的目标:

- 计算上一帧目标的尺寸大小

- 计算出和当前帧目标距离最小的上一帧 size_diff

- 此时if判断会进入前面一项,把匹配的两项压入到m_MatchList 中,同时更新当前检测到的目标id,将上一帧关联的id赋给当前。同时互相更新tracker和obj的参数,并将标志位m_bUpdated更新

6. 删除两个容器中更新的数据,继续循环,直到m_DetectedObjects清空。

6. 删除两个容器中更新的数据,继续循环,直到m_DetectedObjects清空。

第二步更新数据关联:

这里对每一个跟踪对象都做UpdateAssociateOnly()操作

这里对每一个跟踪对象都做UpdateAssociateOnly()操作

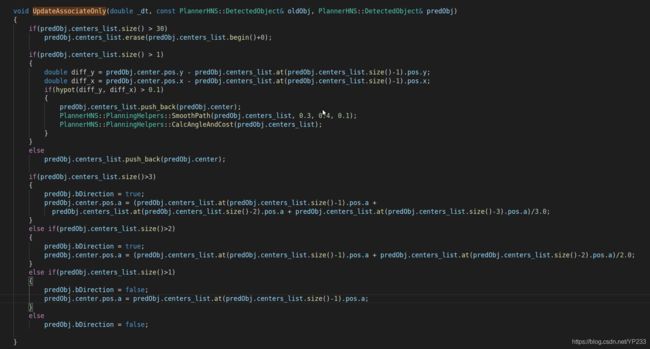

- 如果目标的center_list的数量大于30,则删除第一个,这里好奇为什么没有用while循环,而是用的if,只算一次

- 如果目标的center_list的数量大于1,即存在多个数据时,算出当前中心的x,y和center_list最后一个的x,y的距离,如果距离大于0.1,把center存入center_list里面,然后调用SmoothPath(), CalcAngleAndCost()函数。否则将中心数据压入到center_list中

看函数的名字是猜测其功能是平滑曲线:

如果输入的path点数少于等于2,直接返回。

然后声明一些局部变量,并初始化赋值,进入while循环。

遍历path的点,循环内会使用path内的点左右数据更新中间点的值,直到相对于原始线变化范围大于容忍值时,退出循环。

因此本函数就是利用两边的点均衡中的点,并且这个均衡程度不能太大,使得变形超过设定的值。

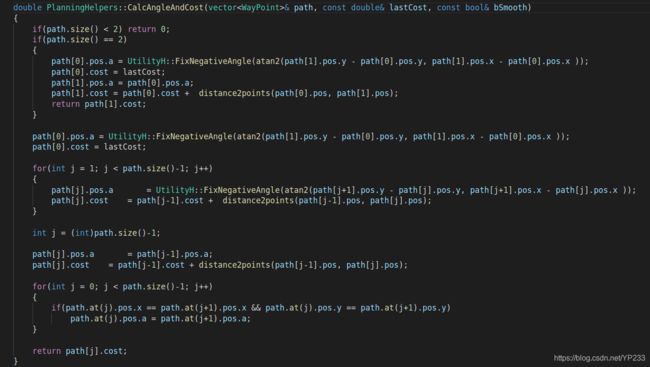

看函数的名字是计算角度和cost:

看函数的名字是计算角度和cost:

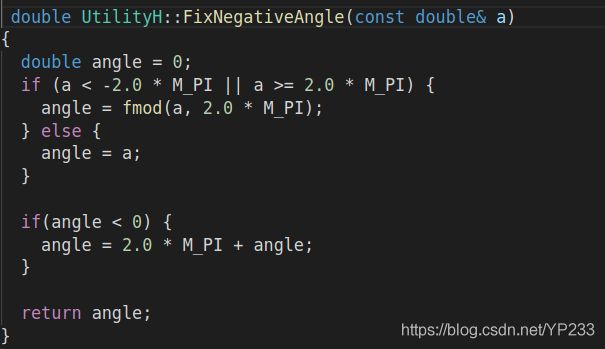

如果输入的path点数少于等于2,直接返回。

如果输入的path只有两个点的时候:使用方法下面的函数将path的角度归一到0 - 2pi内,第一个点的角度和第二个点的角度一致,都为直线的角度,但是1号点的cost是0号点的cost加上两点之间的直线距离。

如果path的点数大于2个时,则cost采用累加的方式,角度等于前一个和当前的点计算出的角度。同时如果两个点重叠的话,为避免计算问题,直接将前一个点的角度赋值给后一个。

因此本函数的功能是计算出所有点累加出来的距离cost,同时算出角度。

- 如果center_list的数据大于3时,bDirection标志位改为true,中心位置的角度改成倒数三个角度的平均值

- 如果center_list的数据大于2时,bDirection标志位改为true,中心位置的角度改成倒数两个角度的平均值

- 如果center_list的数据大于1时,bDirection标志位改为false,中心位置的角度即为最后一个

- 如果center_list的数据为0时,bDirection标志位改为false,即没有方向

因此此处部分是更新了每一个track对象内的center_list存放的数据。

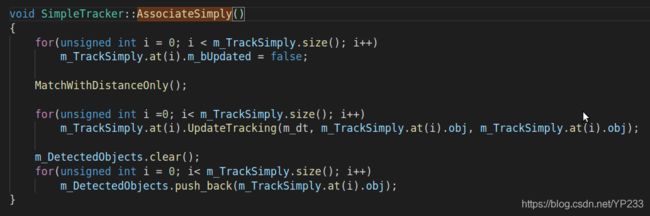

6. AssociateSimply();简单关联

如果type方式为SimpleTracker的时候,则简单关联。

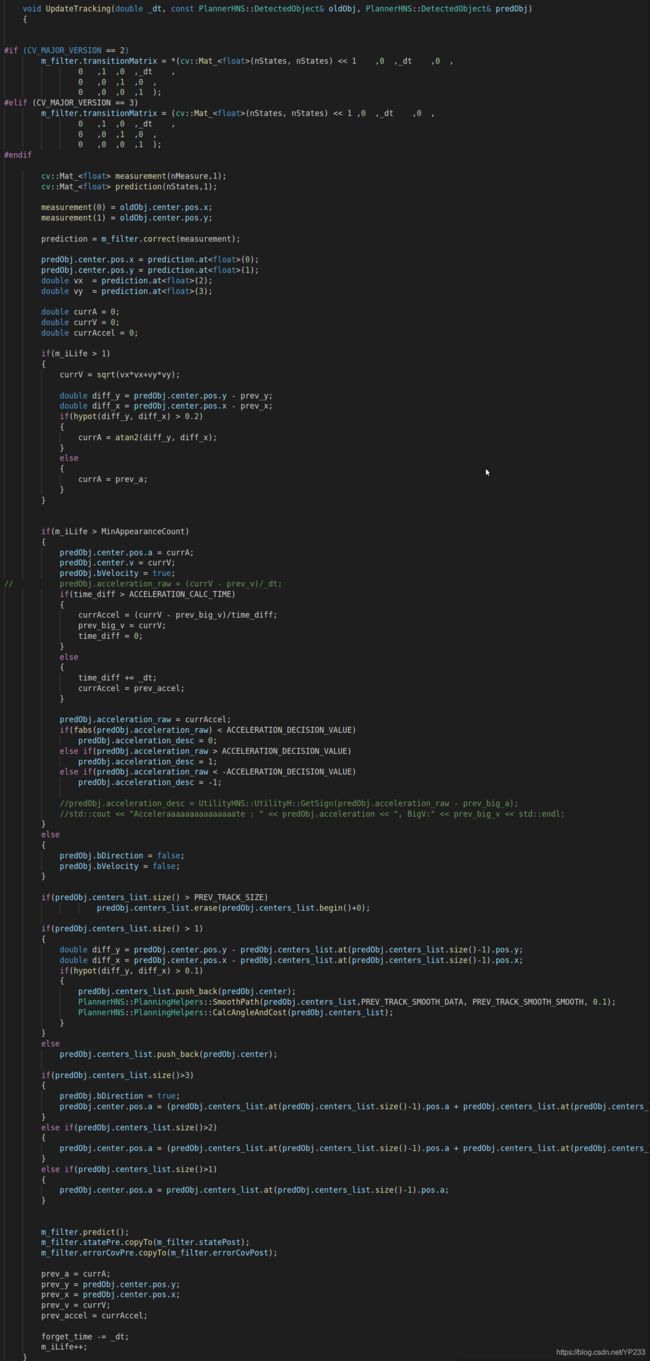

- 根据CV版本的不同,设置kf滤波器的转移矩阵

- 将oldObj的位置传递给kf滤波器的修正值

- 将预测的位置传递给predObj对象

- 得到vx,vy预测数据

- 声明局部变量并且初始化

- 如果m_iLife>1,即如果目标生命周期大于1

- 计算出合速度

- 比较前后帧的位置差

- 如果位置变化较大时:

- 角度等于atan2(diff_y,diff_x)

- 否则角度等于之前帧的角度

- 如果位置变化较大时:

- 如果m_iLife > MinAppearanceCount

- 将角度传给预测Obj的角度

- 将速度传给预测Obj的速度

- 预测速度标志为true

- 如果前后帧时间差大于加速度最小计算时间

- 此时的加速度等于前后速度差除以时间 currAccel = (currV - prev_big_v)/time_diff;

- 现在的速度赋值给历史帧速度

- 时间差变为0

- 否则时间差增加一帧

- 加速度保持不变

- 最后对加速度进行一个限制,使得加速度处于-1和1之间

- 否则速度和方向标志为都是为false

- 如果预测的目标中center_list 的尺寸大于PREV_TRACK_SIZE的时候:

- 剔除掉第一个目标(这里仍然没有搞明白为什么不是while循环,保证数目等于设定的数量)

- 下面执行的内容其实就是UpdateAssociateOnly函数的内容

- 滤波器预测m_filter.predict();

- 复制此时的状态量到下一刻

- 将当前时刻的角度,位置,速度,加速度传给前一帧,遗忘时间减去一帧时间,生命周期加一

回到AssociateSimply( ) 主函数进程内,最后也是将跟踪对象传给全局对象m_DetectedObjects内。

- AssociateDistanceOnlyAndTrack();

CleanOldTracks();

如果type等于contour track时,则使用如下的方式进行数据关联更新

void SimpleTracker::AssociateDistanceOnlyAndTrack() {

for(unsigned int i = 0; i < m_TrackSimply.size(); i++)

m_TrackSimply.at(i).m_bUpdated = false;

double d_y = 0, d_x = 0, d = 0;

//std::cout << std::endl;

while(m_DetectedObjects.size() > 0)

{

double iClosest_track = -1;

double iClosest_obj = -1;

double dClosest = m_MAX_ASSOCIATION_DISTANCE;

double size_diff = -1;

double angle_diff = 0;

//std::cout << "DetObjSize: " << m_DetectedObjects.size() << ", TracksSize: " << m_TrackSimply.size() << std::endl;

for(unsigned int jj = 0; jj < m_DetectedObjects.size(); jj++)

{

double object_size = sqrt(m_DetectedObjects.at(jj).w*m_DetectedObjects.at(jj).w + m_DetectedObjects.at(jj).l*m_DetectedObjects.at(jj).l + m_DetectedObjects.at(jj).h*m_DetectedObjects.at(jj).h);

for(unsigned int i = 0; i < m_TrackSimply.size(); i++)

{

d_y = m_DetectedObjects.at(jj).center.pos.y-m_TrackSimply.at(i).obj.center.pos.y;

d_x = m_DetectedObjects.at(jj).center.pos.x-m_TrackSimply.at(i).obj.center.pos.x;

d = hypot(d_y, d_x);

double old_size = sqrt(m_TrackSimply.at(i).obj.w*m_TrackSimply.at(i).obj.w + m_TrackSimply.at(i).obj.l*m_TrackSimply.at(i).obj.l + m_TrackSimply.at(i).obj.h*m_TrackSimply.at(i).obj.h);

size_diff = fabs(old_size - object_size);

// if(m_TrackSimply.at(i).obj.bDirection && m_TrackSimply.at(i).obj.bVelocity && m_TrackSimply.at(i).obj.center.v*3.6 > 3)

// {

// double a_check = UtilityHNS::UtilityH::FixNegativeAngle(atan2(d_y, d_x));

// double a_old = UtilityHNS::UtilityH::FixNegativeAngle(m_TrackSimply.at(i).obj.center.pos.a);

// angle_diff = UtilityHNS::UtilityH::AngleBetweenTwoAnglesPositive(a_check, a_old);

// }

// else

// angle_diff = 0;

if(d < dClosest && size_diff < m_MAX_ASSOCIATION_SIZE_DIFF)

{

dClosest = d;

iClosest_track = i;

iClosest_obj = jj;

}

//std::cout << "Test: " << m_TrackSimply.at(i).obj.id << ", MinD: " << d << ", ObjS: " << object_size << ", ObjI: " << jj << ", TrackS: " << old_size << ", TrackI: " << i << std::endl;

}

}

if(iClosest_obj != -1 && iClosest_track != -1 && dClosest < m_MAX_ASSOCIATION_DISTANCE)

{

//std::cout << "MatchObj: " << m_TrackSimply.at(iClosest_track).obj.id << ", MinD: " << dClosest << ", Sdiff: " << size_diff << ", ObjI: " << iClosest_obj <<", TrackI: " << iClosest_track << std::endl;

m_DetectedObjects.at(iClosest_obj).id = m_TrackSimply.at(iClosest_track).obj.id;

MergeObjectAndTrack(m_TrackSimply.at(iClosest_track), m_DetectedObjects.at(iClosest_obj));

AssociateToRegions(m_TrackSimply.at(iClosest_track));

m_DetectedObjects.erase(m_DetectedObjects.begin()+iClosest_obj);

}

else

{

iTracksNumber = iTracksNumber + 1;

if(iClosest_obj != -1)

{

m_DetectedObjects.at(iClosest_obj).id = iTracksNumber;

KFTrackV track(m_DetectedObjects.at(iClosest_obj).center.pos.x, m_DetectedObjects.at(iClosest_obj).center.pos.y,m_DetectedObjects.at(iClosest_obj).actual_yaw, m_DetectedObjects.at(iClosest_obj).id, m_dt, m_nMinTrustAppearances);

track.obj = m_DetectedObjects.at(iClosest_obj);

AssociateToRegions(track);

m_TrackSimply.push_back(track);

//std::cout << "UnMachedObj: " << iTracksNumber << ", MinD: " << dClosest << ", ObjI:" << iClosest_obj <<", TrackI: " << iClosest_track << std::endl;

m_DetectedObjects.erase(m_DetectedObjects.begin()+iClosest_obj);

}

else

{

m_DetectedObjects.at(0).id = iTracksNumber;

KFTrackV track(m_DetectedObjects.at(0).center.pos.x, m_DetectedObjects.at(0).center.pos.y,m_DetectedObjects.at(0).actual_yaw, m_DetectedObjects.at(0).id, m_dt, m_nMinTrustAppearances);

track.obj = m_DetectedObjects.at(0);

AssociateToRegions(track);

m_TrackSimply.push_back(track);

//std::cout << "NewObj: " << iTracksNumber << ", MinD: " << dClosest << ", ObjI:" << 0 <<", TrackI: " << iClosest_track << std::endl;

m_DetectedObjects.erase(m_DetectedObjects.begin()+0);

}

}

}

for(unsigned int i =0; i< m_TrackSimply.size(); i++)

{

m_TrackSimply.at(i).UpdateTracking(m_dt, m_TrackSimply.at(i).obj, m_TrackSimply.at(i).obj);

}

}

相比于前文的MatchWithDistanceOnly()方法,大致算法流程是一样的,只是增加了个别方法。我们从遍历完前后帧目标后说起:

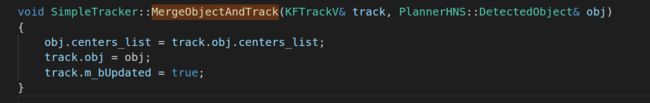

- 如果当前帧和上一帧存在匹配的目标时,

- 更新m_DetectedObjects

- MergeObjectAndTrack,即将track里面的center_list赋给Obj,obj赋值给track对象,其实就是将obj属性进一步赋值给track对象

- 下面多了一步AssociateToRegions()

在前文比较前面的位置,我们生成了7个半径,id,遗忘时间不一致的兴趣区域。

在前文比较前面的位置,我们生成了7个半径,id,遗忘时间不一致的兴趣区域。

- 遍历所有m_InterestRegions

- 清空区域内的所有pTrackers

- 如果检测的目标在某个区域内,则将区域内的id赋值给检测目标,还有遗忘时间,并且直接返回

- 如果发现检测目标没有在某个区域内,则将最大的那个区域id和遗忘时间赋值给目标。

因此这个函数就是将检测到的目标分配对应的区域id和遗忘时间。

最后遍历所有的track对象,调用UpdateTracking方法,更新卡尔曼滤波器。

- 遍历所有m_InterestRegions

- 反之如果不存在匹配的目标时,但是iClosest_Obj不等于-1时,显然是不会进此循环的,因此这里代码属于BUG

- 在进入else判断时,也会增加一个关联到兴趣区域的过程

- 最后也是遍历所有track对象,调用UpdateTracking方法,更新卡尔曼滤波器。

- 清空m_DetectedObjects

- 遍历m_TrackSimply对象,如果忘记时间小于0,并且时间不等于-1000,删除对象

- 否则如果跟踪对象的生命周期大于最小信任出现次数,则将跟踪对象obj传给m_DetectedObjects。

以上就是SimpleTracker.cpp的主要几个函数,剩下的

MatchClosest()

MatchClosestCost()

AssociateAndTrack()

InsidePolygon

都是没有用到的,因此不在这里讲述了,主要流程是一样的,只是增加了几个tricks。

三、KF滤波器(ToDo)

总结

上面便是Autoware里面的lidar_kf_contour_track部分,比较绕,需要慢慢细致理。里面第三节关于滤波器的部分,作者还没有写,等到后续有时间的时候,会继续补充此文档。