QT接收ROS视频信息,并将其显示在界面上(含配置过程以及报错解决方案)

QT接收ROS视频信息,并将其显示在界面上

- 一、项目背景

- 二、环境安装

-

- 1、安装ROS对qt pkg的支持

- 2、安装ROS qtc pluging版本QtCreator

- 3、创建ros_qt_gui包

- 4、编译功能包(这里面ROS和QT都有)

- 三、打开QTCreator

- 五、程序细节

-

- main_window.hpp

- main_window.cpp

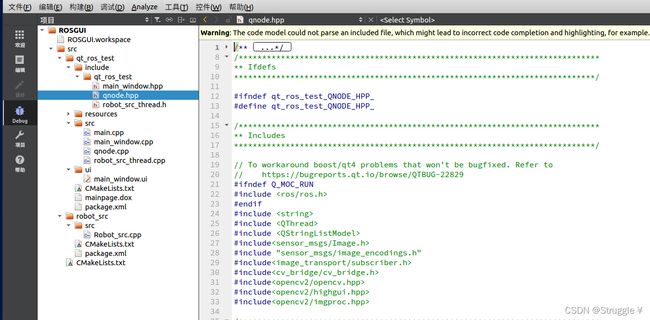

- qnode.hpp

- qnode.cpp

- 将相机话题中的图像消息转为opencv图像格式,再转为ROS消息发送个QT

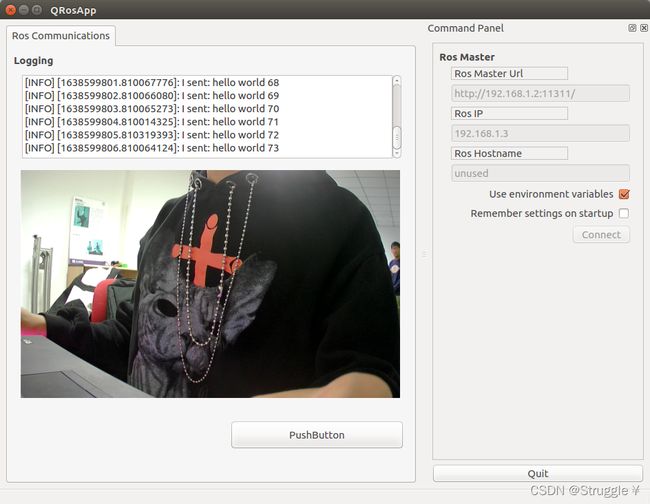

- 六、运行结果

- 七、错误总结

-

- 1、Bug-one

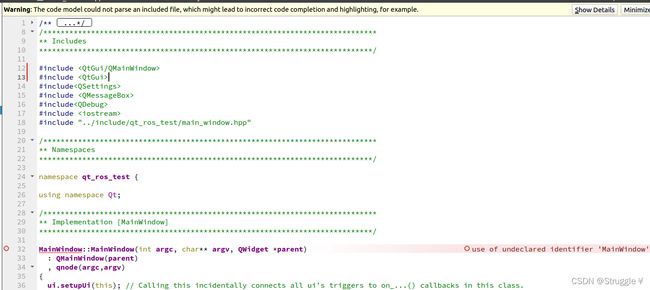

- 2、Bug-two

- 3、Bug-three

- 4、Bug-four

- 5、Bug-five

- 6、Bug-six

- 七、QT5和melodic配置时,CMakelist文件的编写

- 八、参考文章

一、项目背景

本项目为室内场景理解,需要机器人开启摄像头检测识别室内物体,进而完成一系列智能操作。而我负责的部分为将机器人所携带的摄像头画面接收,将其显示在QT界面上。因为机器人采用ROS驱动,所以摄像头的视频信息通过ROS的话题传输,因此,QT需要与ROS搭配起来。

二、环境安装

1、安装ROS对qt pkg的支持

我这里的ros版本为kinetic,需要改为自己对应的版本(ubuntu16.04对应kinetic)

sudo apt-get install ros-kinetic-qt-create

sudo apt-get install ros-kinetic-qt-build

如果你的ros版本为melodic,需要改为自己对应的版本(ubuntu18.04对应melodic)

sudo apt-get install ros-melodic-qt-create

sudo apt-get install ros-melodic-qt-build

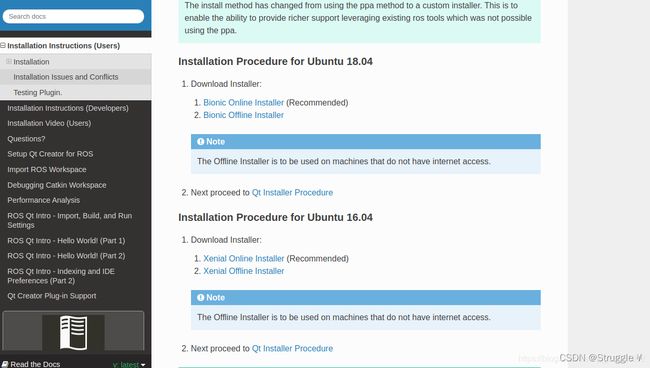

2、安装ROS qtc pluging版本QtCreator

这个带有ROS插件的QT可以在QtCreator中创建ROS工作空间

下载地址:ros-qtc-pluging-kinetic

也可以去官网下载对应的版本(melodic和kinetic不同,我的是kinetic的)

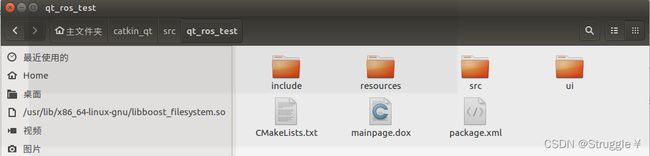

3、创建ros_qt_gui包

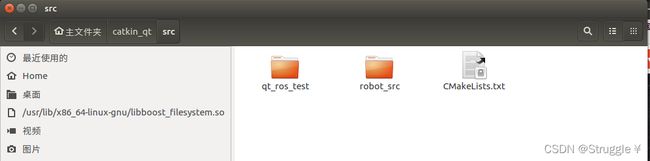

- 创建ROS的工作空间catkin_qt(此时与Qt还没有关系,只是单纯的ROS空间ws)

mkdir -p ~/catkin_qt/src

- 进入src文件夹:

cd ~/catkin_qt/src

- 初始化ROS工作空间:

catkin_init_workspace

这样就在src文件中创建了一个 CMakeLists.txt 的文件,目的是告诉系统,这个是ROS的工作空间。

- 创建完ROS的工作空间后,就能通过catkin_create_qt_pkg命令创建ros_qt_gui包了,在src目录下创建包,本例程将ros_gui包命名为qt_ros_test:

(此时与Qt与ROS已经有关系了)

catkin_create_qt_pkg qt_ros_test

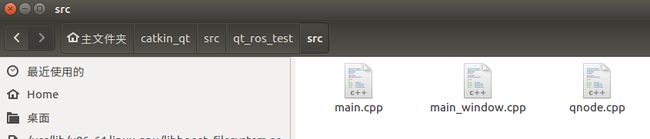

这时候ros_qt_gui的src下自动生成三个.cpp文件

- main.cpp是主函数文件

- main_window.cpp是Qt的ui管理文件

- qnode.cpp是ROS管理节点的文件

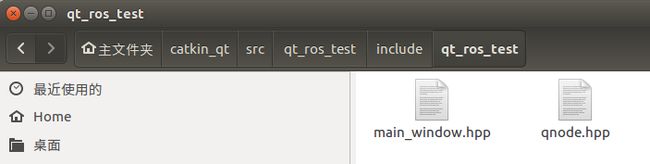

同时,include下会有两个hpp文件,分别对应cpp文件的头文件

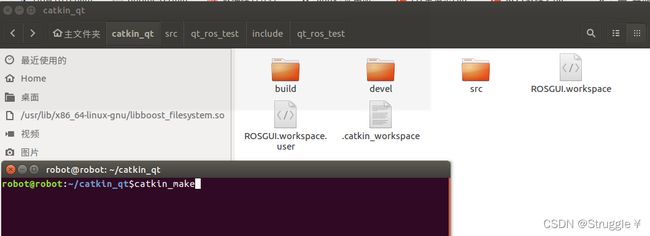

4、编译功能包(这里面ROS和QT都有)

- 进入工作空间catkin_qt编译

catkin_make

- 更改功能包的Cmakelist.txt,进入这个功能包qt_ros_test之后,看到Cmakelist.txt

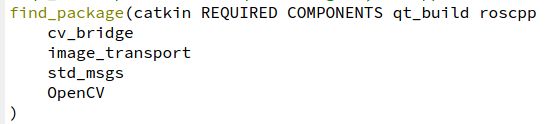

打开Cmakelist.txt文件,注意find_package的改动,和*.h的添加

##############################################################################

# CMake

##############################################################################

cmake_minimum_required(VERSION 2.8.0)

project(qt_ros_test)

##############################################################################

# Catkin

##############################################################################

# qt_build provides the qt cmake glue, roscpp the comms for a default talker

find_package(catkin REQUIRED COMPONENTS qt_build roscpp

cv_bridge

image_transport

std_msgs

OpenCV

)

include_directories(${catkin_INCLUDE_DIRS})

# Use this to define what the package will export (e.g. libs, headers).

# Since the default here is to produce only a binary, we don't worry about

# exporting anything.

catkin_package()

##############################################################################

# Qt Environment

##############################################################################

# this comes from qt_build's qt-ros.cmake which is automatically

# included via the dependency call in package.xml

rosbuild_prepare_qt4(QtCore QtGui) # Add the appropriate components to the component list here

##############################################################################

# Sections

##############################################################################

file(GLOB QT_FORMS RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} ui/*.ui)

file(GLOB QT_RESOURCES RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} resources/*.qrc)

file(GLOB_RECURSE QT_MOC RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} FOLLOW_SYMLINKS include/qt_ros_test/*.hpp *.h) #加*.h可以找到自己额外添加的.h文件

QT4_ADD_RESOURCES(QT_RESOURCES_CPP ${QT_RESOURCES})

QT4_WRAP_UI(QT_FORMS_HPP ${QT_FORMS})

QT4_WRAP_CPP(QT_MOC_HPP ${QT_MOC})

##############################################################################

# Sources

##############################################################################

file(GLOB_RECURSE QT_SOURCES RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} FOLLOW_SYMLINKS src/*.cpp)

##############################################################################

# Binaries

##############################################################################

add_executable(qt_ros_test ${QT_SOURCES} ${QT_RESOURCES_CPP} ${QT_FORMS_HPP} ${QT_MOC_HPP})

target_link_libraries(qt_ros_test ${QT_LIBRARIES} ${catkin_LIBRARIES})

install(TARGETS qt_ros_test RUNTIME DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION})

打开package.xml文件,注意opencv的添加

<?xml version="1.0"?>

<package>

<name>qt_ros_test</name>

<version>0.1.0</version>

<description>

qt_ros_test

</description>

<maintainer email="[email protected]">robot</maintainer>

<author>robot</author>

<license>BSD</license>

<!-- <url type="bugtracker">https://github.com/stonier/qt_ros/issues</url> -->

<!-- <url type="repository">https://github.com/stonier/qt_ros</url> -->

<buildtool_depend>catkin</buildtool_depend>

<build_depend>opencv2</build_depend>

<run_depend>opencv2</run_depend>

<build_depend>qt_build</build_depend>

<build_depend>roscpp</build_depend>

<build_depend>libqt4-dev</build_depend>

<run_depend>qt_build</run_depend>

<run_depend>roscpp</run_depend>

<run_depend>libqt4-dev</run_depend>

<build_depend>cv_bridge</build_depend>

<run_depend>cv_bridge</run_depend>

<build_depend>std_msgs</build_depend>

<run_depend>std_msgs</run_depend>

<build_depend>image_transport</build_depend>

<run_depend>image_transport</run_depend>

</package>

三、打开QTCreator

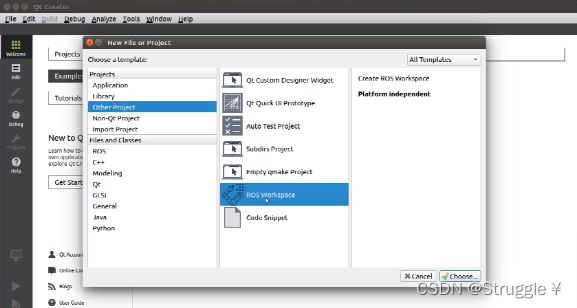

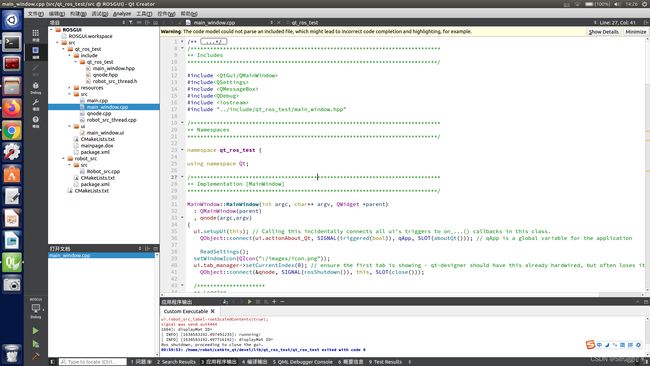

- 创建完rou_qt的包之后,打开QtCreator4.9.2,如下界面,然后选择ROS workspace

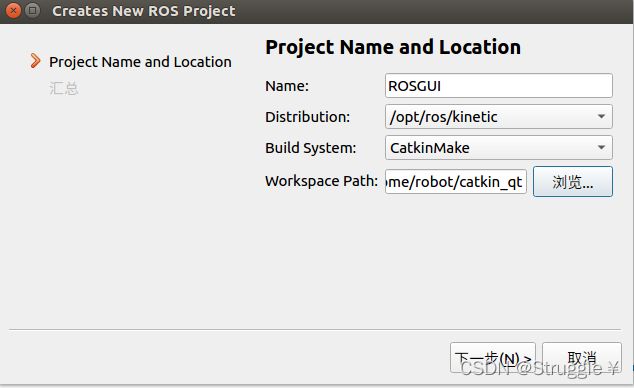

- Name可以随意命名;Build System:改为Catkin_make编译模式;Workspace Path选择刚才建立的总ROS工作空间catkin_qt。

- 打开界面如下图所示:

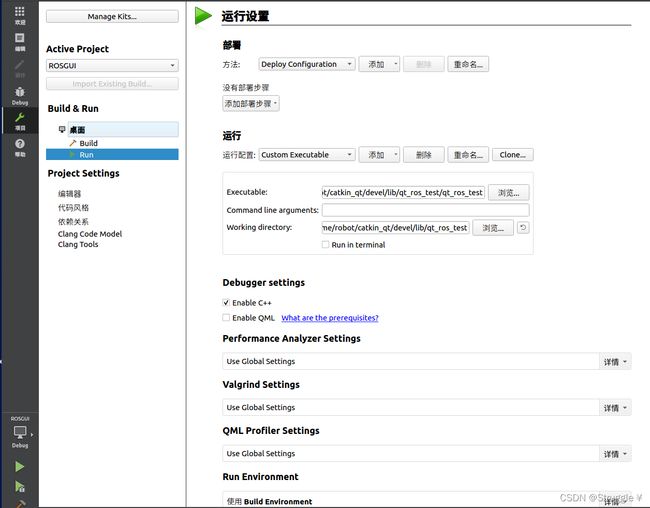

由于已经编译过ROS工作空间,所以节点已经存在,Qt想要运行,必须选择要运行的节点 - 项目->RUN->运行,配置运行配置为Custom Executable,可执行文件Executable选择编译好的节点,工作,目录Working directory就选择节点的上一级目录即可

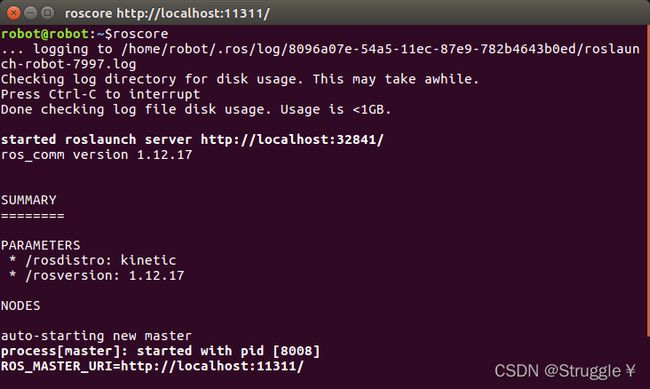

- 在终端运行节点,初始化话题,然后qnode.cpp里接收话题

五、程序细节

main_window.hpp

这个文件是main_window.cpp的头文件,里面编写关于界面显示的一切声明。下面的代码是部分代码,主要都是我添加的一些代码,创建项目自动生成的我没有呈现出来。

//******************头文件部分**********************//

#include main_window.cpp

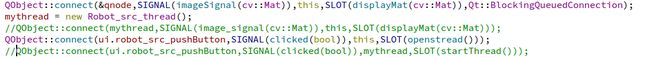

界面的实现函数,也是信号与槽的绑定之处。

信号与槽函数的绑定

/*********************

** 鼠标事件

**********************/

QObject::connect(this,SIGNAL(sendMessageSignal(QString)),this,SLOT(receiveMessageSLOT(QString)));

this->setMouseTracking(true);

ui.Grasp_Interaction_label->setAttribute(Qt::WA_TransparentForMouseEvents,true);

QObject::connect(this,SIGNAL(sendMessageSignalX(qint16)),&qnode,SLOT(receiveX(qint16)));

QObject::connect(this,SIGNAL(sendMessageSignalY(qint16)),&qnode,SLOT(receiveY(qint16)));

/*********************

**实时画面的显示

**********************/

QObject::connect(&qnode,SIGNAL(imageSignal(cv::Mat)),this,SLOT(displayMat(cv::Mat)),Qt::BlockingQueuedConnection);

QObject::connect(ui.RobotCamera_pushButton,SIGNAL(clicked(bool)),this,SLOT(openstread()));

QObject::connect(&qnode,SIGNAL(imageSignal2(cv::Mat)),this,SLOT(displayMat2(cv::Mat)),Qt::BlockingQueuedConnection);

QObject::connect(ui.Semantic_Segmentation_pushButton,SIGNAL(clicked(bool)),this,SLOT(openstread2()));

QObject::connect(&qnode,SIGNAL(imageSignal_seg_results(cv::Mat)),this,SLOT(displayMat_seg_results(cv::Mat)),Qt::BlockingQueuedConnection);

QObject::connect(ui.Segmentation_result_pushButton,SIGNAL(clicked(bool)),this,SLOT(openstread2()));

/*********************

** 按钮按下,This is an offic room 显示

**********************/

QObject::connect(ui.Scene_classication_pushButton,SIGNAL(clicked(bool)),this,SLOT(scene_classication()));//scene classication

/*********************

** 摄像头检测到的抓取结果显示,

**********************/

QObject::connect(&qnode,SIGNAL(imageSignal_grasp_results(cv::Mat)),this,SLOT(displayMat_grasp_results(cv::Mat)),Qt::BlockingQueuedConnection);

这里要说一个知识点,在connect的过程中,如果要显示的图像是一帧一帧的接收,就需要Qt::BlockingQueuedConnection放置信号和槽堵塞。

槽函数的编写

/*****************************************************************************

** 点击按钮,显示This is an Office Room在LineEdit上,这里编写了一个延时三秒的延时函数

*****************************************************************************/

void MainWindow::scene_classication(){

//延时部分

QDateTime old_time = QDateTime::currentDateTime();

QDateTime new_time;

do {

new_time = QDateTime::currentDateTime();

}while(old_time.secsTo(new_time) <= 3);

//延时部分

ui.Scene_classification_lineEdit->setText("This is an Office Room");

ui.Scene_classification_lineEdit->setStyleSheet("color:green");//文字颜色

ui.Scene_classification_lineEdit->setFont(QFont("Timers" , 25 ,QFont::Bold));//字体大小,加粗样式

}

/*****************************************************************************

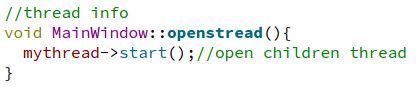

** 通过按钮控制子线程开启,但qnode在ROS Master启动之后,就自动启动了子线程,因此这个槽函数无用

*****************************************************************************/

void MainWindow::openstread(){

qnode.start();

qDebug()<<"qnode.start()";

}

void MainWindow::openstread2(){

qnode.start();//open children thread

}

/*****************************************************************************

** 图像显示槽函数,这是在Qt中标准显示opencv图像的函数,需要与信号绑定

*****************************************************************************/

void MainWindow::displayMat(cv::Mat image)

{

cv::Mat rgb;

qDebug() << "displayMat ID=" << QThread::currentThread();

QImage img;

if(image.channels()==3)

{

//cvt Mat BGR 2 QImage RGB

cv::cvtColor(image,rgb,CV_BGR2RGB);

img =QImage((const unsigned char*)(rgb.data),

rgb.cols,rgb.rows,

rgb.cols*rgb.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

else

{

img =QImage((const unsigned char*)(image.data),

image.cols,image.rows,

image.cols*image.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

ui.Robot_camera_label->setPixmap(QPixmap::fromImage(img));//image_label就是你添加的label的对象名

ui.Robot_camera_label->setScaledContents(true);

qDebug()<<"ui.robot_src_label->setScaledContents(true);";

}

void MainWindow::displayMat2(cv::Mat image)

{

cv::Mat rgb;

qDebug() << "displayMat2 ID=" << QThread::currentThread();

ROS_INFO( "displayMat2 ID=");

QImage img;

qDebug()<<"receive out success!!";

if(image.channels()==3)

{

//cvt Mat BGR 2 QImage RGB

cv::cvtColor(image,rgb,CV_BGR2RGB);

img =QImage((const unsigned char*)(rgb.data),

rgb.cols,rgb.rows,

rgb.cols*rgb.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

else

{

img =QImage((const unsigned char*)(image.data),

image.cols,image.rows,

image.cols*image.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

ui.Semantic_segmentation_label->setPixmap(QPixmap::fromImage(img));//image_label就是你添加的label的对象名

ui.Semantic_segmentation_label->setScaledContents(true);

}

void MainWindow::displayMat_seg_results(cv::Mat image)

{

cv::Mat rgb;

qDebug() << "displayMat_seg_results ID=" << QThread::currentThread();

QImage img;

if(image.channels()==3)

{

//cvt Mat BGR 2 QImage RGB

cv::cvtColor(image,rgb,CV_BGR2RGB);

img =QImage((const unsigned char*)(rgb.data),

rgb.cols,rgb.rows,

rgb.cols*rgb.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

else

{

img =QImage((const unsigned char*)(image.data),

image.cols,image.rows,

image.cols*image.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

ui.Segmentation_result_label->setPixmap(QPixmap::fromImage(img));//image_label就是你添加的label的对象名

ui.Segmentation_result_label->setScaledContents(true);

qDebug()<<"ui.robot_src_label->setScaledContents(true);";

}

void MainWindow::displayMat_grasp_results(cv::Mat image)

{

cv::Mat rgb;

qDebug() << "displayMat_seg_results ID=" << QThread::currentThread();

QImage img;

if(image.channels()==3)

{

//cvt Mat BGR 2 QImage RGB

cv::cvtColor(image,rgb,CV_BGR2RGB);

img =QImage((const unsigned char*)(rgb.data),

rgb.cols,rgb.rows,

rgb.cols*rgb.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

else

{

img =QImage((const unsigned char*)(image.data),

image.cols,image.rows,

image.cols*image.channels(),

QImage::Format_RGB888);

qDebug()<<"cvt Mat BGR 2 QImage RGB";

}

ui.Grasp_Interaction_label->setPixmap(QPixmap::fromImage(img));//image_label就是你添加的label的对象名

ui.Grasp_Interaction_label->setScaledContents(true);

qDebug()<<"ui.robot_src_label->setScaledContents(true);";

}

/*****************************************************************************

** 接收Q_EMIT sendMessageSignal(sendMsg),将坐标以字符串的形式显示在label上

*****************************************************************************/

void MainWindow::receiveMessageSLOT(QString str1){

qDebug()<<"receiveMessageSLOT";

ui.pixel_label->setText(str1);

}

/*****************************************************************************

** 鼠标移动事件,this->setMouseTracking(true)是设置鼠标移动时也可以出发mouseMoveEvent函数

*****************************************************************************/

void MainWindow::mouseMoveEvent(QMouseEvent *event){

//event->x()就是得到鼠标移动时的x坐标

QString sendMsg = "(" + QString::number(1*((event->x())-490)) + "," + QString::number(1*((event->y())-510)) + ")";

if(!sendMsg.isEmpty())

{

qDebug()<<"sendMsg is not Empty";

Q_EMIT sendMessageSignal(sendMsg);

}

else

{

qDebug()<<"sendMsg.isEmpty";

}

}

/*****************************************************************************

** 鼠标按下事件,注意是右键按下!

*****************************************************************************/

void MainWindow::mousePressEvent(QMouseEvent *event){

int x = int(1.33*((event->x())-490-13)); //-490-13就是根据显示label在Qt界面中的相对位置计算了

int y = int(1.65*((event->y())-510+48));

//1.33 is width scale 代表的是显示图像的label和真实图像之间的宽度比例,因为我要映射到真实图像中

//1.65 is height scale 代表的是显示图像的label和真实图像之间的高度比例,因为我要映射到真实图像中

QString sendMsg = "(" + QString::number(x) + "," + QString::number(y) + ")";

qDebug()<<"x = "<<x;

qDebug()<<"y = "<<y;

if(!sendMsg.isEmpty())

{

qDebug()<<"sendMsg is not Empty";

Q_EMIT sendMessageSignal(sendMsg);

Q_EMIT sendMessageSignalX(x);//将鼠标按下得到的X坐标,发出去,qt中发送int类型需要是qint

Q_EMIT sendMessageSignalY(y);//将鼠标按下得到的X坐标,发出去,qt中发送int类型需要是qint

}

else

{

qDebug()<<"sendMsg.isEmpty";

}

}

/*****************************************************************************

** 鼠标释放事件

*****************************************************************************/

void MainWindow::mouseReleaseEvent(QMouseEvent *event){

qDebug()<<"event->pos() = "<<event->pos();

}

== 鼠标事件不需要链接槽函数,只需要声明+实现就可以。==

qnode.hpp

这个文件是,QT与ROS结合开发时,在QT中管理ROS节点的声明、发布、订阅的声明文件。与之配对的是qnode.cpp文件。

//******************头文件部分**********************//

#ifndef Q_MOC_RUN

#include qnode.cpp

这个文件是QT与ROS之间的桥梁,管理着ROS中节点的初始化,订阅发布等一系列操作。而且QNode继承自QThread,所以可以重写run函数,实现多线程的编程。

//******************头文件部分**********************//

#include 回调函数的编写

void QNode::myCallback_img(const sensor_msgs::ImageConstPtr &msg)

{

cv::Mat grasp_result_image = cv::imread("/home/robot/catkin_ws/src/grasp/scripts/2.jpg");

qDebug()<<"cv_bridge::CvImagePtr cv_ptr";

cv_bridge::CvImagePtr cv_ptr;

try

{ /*change to CVImage*/

cv_ptr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::BGR8);//将ros::sensor_msgs转为opencv格式

img = cv_ptr->image;//转为opencv图像格式

if(img.empty())

{

qDebug()<<"img is empty";

}

Q_EMIT imageSignal(img) ; //将图像发出去,由于是循环执行回调函数,所以会一直发送图像

if(!grasp_result_image.empty()){

Q_EMIT imageSignal_grasp_results(grasp_result_image);

qDebug()<<"grasp_result_image is not empty";

}else {

qDebug()<<"grasp_result_image is empty";

}

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());

}

}

void QNode::myCallback_img2(const sensor_msgs::ImageConstPtr &msg)

{

cv::Mat grasp_result_image = cv::imread("/home/robot/catkin_ws/src/grasp/scripts/1.png");

cv_bridge::CvImagePtr cv_ptr;

try

{ /*change to CVImage*/

cv_ptr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::BGR8);

img2 = cv_ptr->image;//呼应public中的img 啊!

if(img2.empty()){

qDebug()<<"img is empty";

}

Q_EMIT imageSignal2(img2) ; //将信号发出去

if(!grasp_result_image.empty()){

Q_EMIT imageSignal_grasp_results(grasp_result_image);

qDebug()<<"grasp_result_image is not empty";

}else {

qDebug()<<"grasp_result_image is empty";

}

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());

}

}

//从QT主界面接收到的X坐标值

void QNode::receiveX(qint16 x){

int xx = x;

qDebug()<<"xx = "<<xx;

std_msgs::Int16 pixel_x_msgs;

pixel_x_msgs.data = xx;//将int类型的数据赋值给std_msgs::Int16类型的消息数据

pixel_x_publisher.publish(pixel_x_msgs);//将消息发送出去

qDebug()<<"x is sending";

}

void QNode::receiveY(qint16 y){

int yy = y;

qDebug()<<"yy = "<<yy;

std_msgs::Int16 pixel_y_msgs;

pixel_y_msgs.data = yy;

pixel_y_publisher.publish(pixel_y_msgs);

qDebug()<<"y is sending";

}

void QNode::myCallback_img_seg_results(const sensor_msgs::ImageConstPtr &msg)

{

qDebug()<<"signal was send out1111";

cv_bridge::CvImagePtr cv_ptr;

try

{ /*change to CVImage*/

cv_ptr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::BGR8);

img_seg_results = cv_ptr->image;//呼应public中的img 啊!

if(img_seg_results.empty()){

qDebug()<<"img is empty";

}

Q_EMIT imageSignal_seg_results(img_seg_results) ; //将信号发出去

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());

}

}

void QNode::myCallback_img_grasp_results(const sensor_msgs::ImageConstPtr &msg)

{

qDebug()<<"signal was send out1111";

cv_bridge::CvImagePtr cv_ptr;

try

{ /*change to CVImage*/

cv_ptr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::BGR8);

img_grasp_results = cv_ptr->image;//呼应public中的img 啊!

if(img_seg_results.empty()){

qDebug()<<"img is empty";

}

Q_EMIT imageSignal_grasp_results(img_grasp_results) ; //将信号发出去

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());

}

}

将相机话题中的图像消息转为opencv图像格式,再转为ROS消息发送个QT

先说明一下,我为什么要做这件事!由于本项目所用的相机为RGBD相机,该相机的SDK内部集成了ROS通信部分。相机会将图像画面发送到某个话题 /camera/color/image_raw 上,本来以为QT可以直接接收这个话题的消息,然后在显示在QT界面上。但是,QT收不到这个话题。于是,我自己写了一个订阅者节点订阅 /camera/color/image_raw 上的数据,然后我通过QT间接的订阅我自己写的这个节点,但是依然订阅不到。我怀疑是消息类型的问题,所以编写了一个订阅发布同时存在的节点,即先订阅==/camera/color/image_raw==消息,然后转为解码为opencv格式的Mat图像,然后将Mat格式的图像编码为ROS中的消息,通过发布者发送到某个话题 /XUHONGBO 上,这时候QT就可以订阅这个话题 /XUHONGBO 了,就可以正常显示了。这个节点的代码如下:

#include 这是其中一种opencv-img转ros-msg的编码方式,还有很多种方式,但这个相机的消息只适用于这种方式。这也是试验了好久才成功的方式。因此记录下来!!!

sensor_msgs::ImagePtr img2msg = cv_bridge::CvImage(std_msgs::Header(),"bgr8",img).toImageMsg();

六、运行结果

七、错误总结

1、Bug-one

- Bug描述

QTCreator 编译错误:has_binary_operator.hpp:51: Parse error at “BOOST_JOIN”

#ifndef Q_MOC_RUN

#include qnode.cpp文件中,#include

- 解决方案

找到has_binary_operator.hpp这个文件,本系统的路径为:/usr/include/boost/type_traits/has_binary_operator.hpp。然后修改51行和224行,分别加上条件编译即可。

51行

#ifndef Q_MOC_RUN

namespace BOOST_JOIN(BOOST_TT_TRAIT_NAME,_impl) {

#endif

224行

#ifndef Q_MOC_RUN

} // namespace boost

#endif

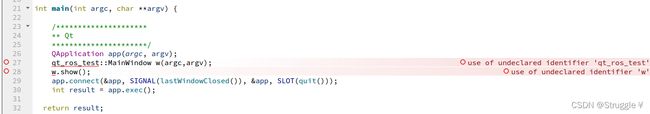

2、Bug-two

- Bug描述

use of undeclared identifier “MainWindow” 同时 use of undeclared identifier “qt_ros_test”

- 原因

采用自动创建的文件,在main.cpp和main_window.cpp文件中,头文件是#include < QtGui> ,所以会报错。

- 解决方案

3、Bug-three

- Bug描述

ubuntu调用usb摄像头出错

- 原因

没有安装驱动

- 解决方案

安装驱动

一、让系统可以识别到usb摄像头设备

打开终端,输入命令:ls /dev/v*,则可看到"/dev/video0",表示成功驱动摄像头。

如果没有的话,拔掉摄像头,重新插上。然后再输入命令:ls /dev/v*,则可看到。

输入命令:lsusb ,可以查看usb摄像头的型号。

二、安装应用程序显示摄像头捕捉到的视频

1)使用应用程序camorama

输入命令:sudo apt-get install camorama

安装完成后,在终端中输入命令:camorama,即可显示出视频信息;或在菜单“应用程序”中可以打开程序。

4、Bug-four

- Bug描述

QtVIDEOIO ERROR: V4L2: Pixel format of incoming image is unsupported by OpenCV

Unable to stop the stream: 设备或资源忙

- 原因

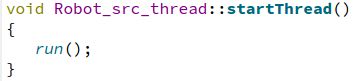

网上很多博客都说缺少v4l1compat.so,然后需要把v4l1compat.so添加到bashrc里,但是不适合我的项目。找了很久,发现我的子线程写错了

我这里采用的子线程方法是通过按钮,调用子线程下的槽函数startThread,然后调用run函数

- 解决方案

子线程的进入必须要start开启,否则子线程无法开启,于是我改动了线程方法,问题解决。

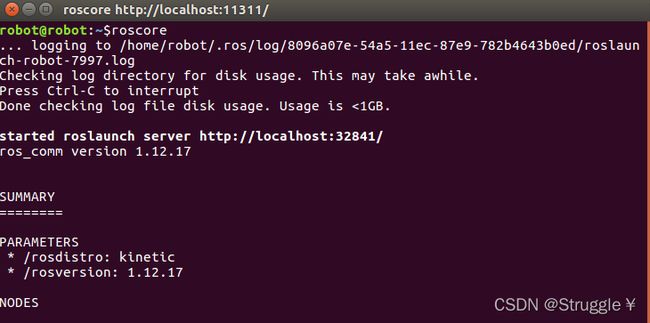

5、Bug-five

- Bug描述

启动roscore时,报错:ImportError: No module named

defusedxml

- 原因

ROS依赖于python2,linux并且通过路径/usr/bin/python来指定默认的python版本,一旦该路径没有被设置,或者被设置为python3,就会出现以上错误。本例程是因为师姐换了python的路径,导致无法链接到python2。

- 解决方案

(1)删除原有链接

sudo rm -rf /usr/bin/python

(2)重新指定python2

sudo ln -s /usr/bin/python2 /usr/bin/python

(3)再次执行查看目前的python版本

ls -n /usr/bin/python

(4)修改之后的结果,可以看到-> /usr/bin/python2,指向的python2

lrwxrwxrwx 1 0 0 16 11月 13 23:46 /usr/bin/python -> /usr/bin/python2

(5)再次运行roscore

6、Bug-six

- Bug描述

‘cv::VideoCapture::isOpened() const’未定义的引用,这是在测试阶段,我通过OpenCV下的VideoCapture读取摄像头数据,结果已知报错,说找不到引用。

- 原因

这是因为ros_qt_test功能包的Cmakelist.txt里缺少OpenCV的库

- 解决方案

在ros_qt_test功能包的Cmakelist.txt中的find_package下添加OpenCV库即可

七、QT5和melodic配置时,CMakelist文件的编写

##############################################################################

# CMake

##############################################################################

cmake_minimum_required(VERSION 2.8.0)

project(qt_ros_gui_pkg)

set(CMAKE_INCLUDE_CURRENT_DIR ON)

##############################################################################

# Catkin

##############################################################################

# qt_build provides the qt cmake glue, roscpp the comms for a default talker

set(OpenCV_DIR /usr/share/OpenCV/)

find_package(catkin REQUIRED COMPONENTS

OpenCV

sensor_msgs

image_transport

std_msgs

cv_bridge

)

find_package(Qt5 REQUIRED Core Widgets)

set(QT_LIBRARIES Qt5::Widgets)

include_directories(${catkin_INCLUDE_DIRS})

# Use this to define what the package will export (e.g. libs, headers).

# Since the default here is to produce only a binary, we don't worry about

# exporting anything.

catkin_package()

##############################################################################

# Qt Environment

##############################################################################

#this comes from qt_build's qt-ros.cmake which is automatically

#included via the dependency call in package.xml

#rosbuild_prepare_qt4(QtCore QtGui) # Add the appropriate components to the component list here

##############################################################################

# Sections

##############################################################################

file(GLOB QT_FORMS RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} ui/*.ui)

file(GLOB QT_RESOURCES RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} resources/*.qrc)

file(GLOB_RECURSE QT_MOC RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} FOLLOW_SYMLINKS include/qt_ros_gui_pkg/*.hpp *.h)

QT5_ADD_RESOURCES(QT_RESOURCES_CPP ${QT_RESOURCES})

QT5_WRAP_UI(QT_FORMS_HPP ${QT_FORMS})

QT5_WRAP_CPP(QT_MOC_HPP ${QT_MOC})

##############################################################################

# Sources

##############################################################################

file(GLOB_RECURSE QT_SOURCES RELATIVE ${CMAKE_CURRENT_SOURCE_DIR} FOLLOW_SYMLINKS src/*.cpp)

##############################################################################

# Binaries

##############################################################################

add_executable(qt_ros_gui_pkg ${QT_SOURCES} ${QT_RESOURCES_CPP} ${QT_FORMS_HPP} ${QT_MOC_HPP})

target_link_libraries(qt_ros_gui_pkg ${QT_LIBRARIES} ${catkin_LIBRARIES} )

install(TARGETS qt_ros_gui_pkg RUNTIME DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION})

target_link_libraries(qt_ros_gui_pkg

/usr/lib/x86_64-linux-gnu/libtiff.so.5

/usr/lib/x86_64-linux-gnu/libgdal.so.20

/usr/lib/x86_64-linux-gnu/libsqlite3.so.0

)

message(STATUS "OpenCV_INCLUDE_DIRS ?" : ${OpenCV_INCLUDE_DIRS})

message(STATUS "OpenCV_VERSION ?" : ${OpenCV_VERSION})

message(STATUS "OpenCV_LIB ?" : ${OpenCV_LIBS})

message(STATUS "OpenCV_CONGIG_PATH ?" : ${OpenCV_CONFIG_PATH})

其中,让我困扰了很久的地方:

target_link_libraries(qt_ros_gui_pkg

/usr/lib/x86_64-linux-gnu/libtiff.so.5

/usr/lib/x86_64-linux-gnu/libgdal.so.20

/usr/lib/x86_64-linux-gnu/libsqlite3.so.0

)

先是缺tiff库,导入进来之后,缺libgdal的库,再导入进来之后,对sqlite3中的东西未定义,所以把sqlite3的库导入进来,结果就成了!!所以遇到这种问题,只需要一步一步的看报错点,然后一点一点的试验就可以了。

八、参考文章

ROS:两个节点同时具有发布和订阅图像信息的功能

QT中的多线程编程

ROS学习记录3——创建一个节点

ROS Qt5 librviz人机交互界面开发一(配置QT环境)

ROS学习–第3篇:ROS基础—创建工作空间

QTCreator 编译错误:has_binary_operator.hpp:51: Parse error at “BOOST_JOIN”

ubuntu 使用USB摄像头

QT信号槽连接之不同线程之间的信号槽连接方式

ImportError: No module named defusedxml