深度学习论文分享(一)ByteTrackV2: 2D and 3D Multi-Object T racking by Associating Every Detection Box

深度学习论文分享(一)ByteTrackV2: 2D and 3D Multi-Object T racking by Associating Every Detection Box

- 前言

-

- Abstract

- 1 INTRODUCTION

- 2 RELATED WORK

-

- 2.1 2D Object Detection

- 2.2 3D Object Detection

- 2.3 2D Multi-Object Tracking

- 2.4 3D Multi-Object Tracking

- 3 BYTETRACK V2

-

- 3.1 Problem Formulation(问题表述)

- 3.2 Preliminary

- 3.3 Complementary 3D Motion Prediction(互补的 3D 运动预测)

- 3.4 Unified 2D and 3D Data Association

- 4 DATASETS AND METRICS

-

- 4.1 Datasets

- 4.2 Metrics

- 5 EXPERIMENTS

-

- 5.1 Implementation Details

- 6 CONCLUSION

- REFERENCES

前言

论文原文:https://arxiv.org/pdf/2303.15334.pdf

论文代码:https://github.com/ifzhang/ByteTrack-V2

Title:ByteTrackV2: 2D and 3D Multi-Object Tracking by Associating Every Detection Box

Authors:Yifu Zhang, Xinggang Wang, Xiaoqing Y e, Wei Zhang, Jincheng Lu, Xiao T an, Errui Ding, Peize Sun, Jingdong Wang

在此仅做翻译(经过个人调整,有基础的话应该不难理解),有时间会有详细精读笔记。

Abstract

多目标跟踪(MOT)旨在估计视频帧内物体的边界框和身份。检测框是二维和三维MOT的基础。检测分数不可避免的变化会导致跟踪后的目标缺失。我们提出了一种分层的数据关联策略来挖掘低分检测框中的真实目标,缓解了目标缺失和轨迹碎片化的问题。简单而通用的数据关联策略在2D和3D设置下都显示了有效性。在3D场景中,跟踪器更容易预测世界坐标中的物体速度。我们提出了一种补充的运动预测策略,将检测到的速度与卡尔曼滤波器结合起来,以解决突然运动和短期消失的问题。ByteT rackV2在nuScenes 3D MOT排行榜上领先于相机(56.4% AMOT A)和激光雷达(70.1% AMOT A)模式。此外,它是非参数的,可以与各种检测器集成,使其在实际应用中具有吸引力。源代码发布于https://github.com/ifzhang/ByteTrack-V2。

1 INTRODUCTION

这项工作解决了2D和3D多目标跟踪(MOT)问题。无论是2D还是3D多目标跟踪都是计算机视觉领域长期存在的任务。MOT的目标是在2D图像平面或3D世界坐标中判断感兴趣的物体的轨迹。成功解决这一问题将有利于自动驾驶和智能交通等许多应用。

2D多目标跟踪和3D多目标跟踪本质上是交织在一起的。这两项任务都必须定位对象并获得跨不同帧的对象对应关系。然而,由于输入数据来自不同的模态,来自不同领域的研究人员已经独立地解决了这些问题。2D-MOT是在图像平面上进行的,图像信息是物体对应关系的重要线索。基于外观的跟踪器从图像中提取物体外观特征,然后计算特征距离作为对应关系。3D-MOT通常在包含深度信息的世界系统中进行。通过空间相似性(如3D Intersection over Union (IoU),或点距离)更容易区分不同的对象。二维MOT和三维MOT可视化如图1所示。

我们通过三个模块解决 2D 和 3D MOT 的任务,即检测、运动预测和数据关联。首先,目标检测器生成 2D/3D 检测框和分数。在起始帧中,检测到的对象被初始化为轨迹。然后,诸如卡尔曼滤波器之类的运动预测器预测下一帧中轨迹的位置。运动预测很容易在图像平面和 3D 世界空间上实现。最后,检测框根据一些空间相似性与轨迹的预测位置相关联。

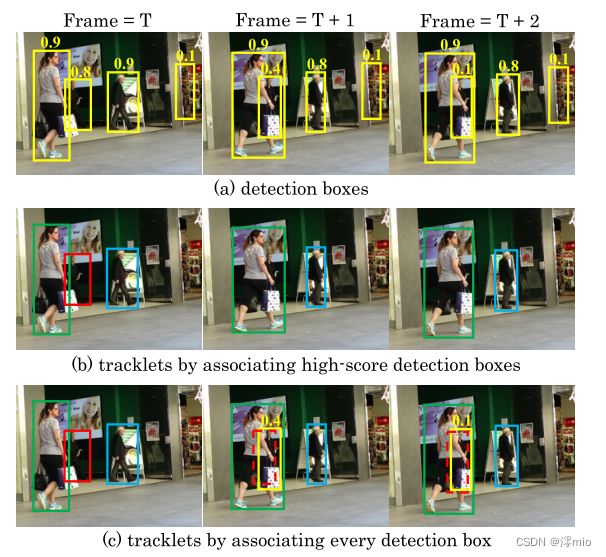

检测是整个MOT框架的基础。由于视频中的场景复杂,检测器容易做出不完美的预测。高分检测框通常比低分检测框包含更多的真阳性。然而,简单地消除所有低分框是次优的,因为低分检测框有时表明对象的存在,例如。被遮挡的物体。过滤掉这些对象会对 MOT 造成不可逆的错误,并带来不可忽略的检测缺失和轨迹碎片,如图 2 的(b)行所示。

PS:这个图2和ByteTrackV1里面的图一模一样

为了解决因消除低分框而导致的漏检和轨迹碎片化问题,我们提出了一种由检测驱动的分层数据关联策略。它充分利用了从高分到低分的检测框。我们发现检测框和轨迹之间的运动相似性提供了一个强有力的线索来区分低分检测框中的对象和背景。我们首先根据运动相似性将高分检测框与轨迹关联起来。与SORT类似,我们采用卡尔曼滤波器来预测新帧中轨迹的位置。相似度可以通过预测框和检测框的 2D 或 3D IoU 来计算。然后,我们使用相同的运动相似性在未匹配的轨迹和低分检测框之间执行第二次关联,以恢复真实对象并去除背景。关联结果如图 2 的行 (c) 所示。

在 3D MOT 中,尤其是驾驶场景中,物体的突然运动和遮挡或模糊导致的短暂消失会带来身份切换。与 2D MOT 不同,跟踪器更容易预测世界坐标中的准确速度。我们提出了一种互补的 3D 运动预测策略来解决对象突然运动和短期消失的问题。更精确的运动预测往往会获得更可靠的关联结果,并为跟踪性能带来收益。以前的工作使用检测到的速度或卡尔曼滤波器进行运动预测。然而,检测到的速度由于缺乏历史运动信息而难以进行长期关联。另一方面,卡尔曼滤波器利用历史信息产生更平滑的运动预测。但是当遇到突然和不可预测的运动或低帧率视频时,它无法预测准确的位置。我们通过将检测到的物体速度与卡尔曼滤波器相结合,提出了一种互补的运动预测方法。具体来说,我们利用检测到的速度来执行短期关联,这对突然的运动更加稳健。我们采用卡尔曼滤波器来预测每帧中每个轨迹的更平滑位置。当发生短期消失时,卡尔曼滤波器可以保持目标位置并在目标再次出现时进行长期关联。

总之,我们提出了 ByteTrackV2 来解决 2D 和 3D MOT 问题。这建立在我们最初的工作 ByteTrack 的基础上,以每个检测框命名是 tracklet 的一个基本单元,作为计算机程序中的一个byte。 ByteTrack 专注于如何利用低分检测框来减少数据关联策略中的真实对象丢失和碎片化轨迹。下面展示了 ByteTrackV2 的主要贡献和扩展结果。

统一的 2D 和 3D 数据关联。我们提出了统一的数据关联策略来解决 2D 和 3D MOT 问题。它在低分检测框中挖掘真实物体,缓解物体丢失和轨迹碎片化的问题。此外,它是非参数的,可以与各种检测器结合使用,使其在实际应用中具有吸引力。

互补的 3D 运动预测。我们提出了一种互补的 3D 运动预测策略来应对突然运动和物体短期消失的挑战。具体来说,我们利用检测器预测的物体速度来执行短期关联,这对突然运动更加稳健。我们还采用卡尔曼滤波器为每个轨迹预测更平滑的位置,并在对象丢失和重新出现时执行长期关联。

在不同模式下对 3D MOT 基准进行彻底的实验。我们对大规模 nuScenes 数据集进行了详细的实验。检测驱动的分层数据关联和集成 3D 运动预测策略在 3D 场景中得到验证。 ByteTrackV2 在相机和 LiDAR 设置下的 nuScenes 跟踪任务上实现了最先进的性能。

2 RELATED WORK

在本节中,我们简要回顾了与我们的主题相关的现有工作,包括 2D 目标检测、3D 目标检测、2D 多目标跟踪和 3D 多目标跟踪。我们还讨论了这些任务之间的关系。

2.1 2D Object Detection

2D目标检测旨在从图像输入中预测边界框。它是计算机视觉中最活跃的课题之一,是多目标跟踪的基础。随着目标检测的快速发展,越来越多的多目标跟踪方法开始利用更强大的检测器以获得更高的跟踪性能。单阶段目标检测器 RetinaNet 开始被多种方法采用,例如 Retinatrack、Chained-tracker。anchor-free检测器 CenterNet 是大多数方法Fairmot、CenterTrack、TraDeS、SOTMOT、GSDT、Learning to track with

object permanence、CorrTracker 最常用的检测器,因为它简单高效. YOLO系列检测器YOLOV3、YOLOV4、YOLOX也被大量方法采用,如Towards real-time

multi-object tracking、CSTracker、CSTrackerV2、Transmot、Unicorn、Robust multi-object tracking by marginal inference,来实现准确性和速度的平衡。最近,基于transformer的检测器DETR、Deformable detr、Conditional detr for fast training convergence 被一些跟踪器Transtrack、TrackFormer、MOTR 用于其优雅的端到端框架。我们采用 YOLOX 作为我们的高效二维物体检测器。

MOT17 数据集提供了 DPM、Faster R-CNN 和 SDP 等流行检测器获得的检测结果。大量的多目标跟踪方法[49]、[50]、[51]、[52]、[53]、[54]、[55]侧重于基于这些给定的检测结果来提高跟踪性能。我们还在这个公共检测设置下评估我们的跟踪算法。

[49] J. Xu, Y . Cao, Z. Zhang, and H. Hu, “Spatial-temporal relation networks for multi-object tracking,” in ICCV, 2019, pp. 3988–3998.

[50] P . Chu and H. Ling, “Famnet: Joint learning of feature, affinity and multi-dimensional assignment for online multiple object tracking,” in ICCV, 2019, pp. 6172–6181.

[51] P . Bergmann, T. Meinhardt, and L. Leal-Taixe, “Tracking without bells and whistles,” in ICCV, 2019, pp. 941–951.

[52] L. Chen, H. Ai, Z. Zhuang, and C. Shang, “Real-time multiple people tracking with deeply learned candidate selection and person reidentification,” in ICME. IEEE, 2018, pp. 1–6.

[53] J. Zhu, H. Yang, N. Liu, M. Kim, W. Zhang, and M.-H. Yang, “Online multi-object tracking with dual matching attention networks,” in Proceedings of the ECCV (ECCV), 2018, pp. 366–382.

[54] G. Brasó and L. Leal-Taixé, “Learning a neural solver for multiple object tracking,” in CVPR, 2020, pp. 6247–6257.

[55] A. Hornakova, R. Henschel, B. Rosenhahn, and P . Swoboda, “Lifted disjoint paths with application in multiple object tracking,” in International Conference on Machine Learning. PMLR, 2020, pp. 4364–4375.

2.2 3D Object Detection

3D 目标检测旨在从图像或 LiDAR 输入中预测三维旋转边界框。它是 3D 多目标跟踪不可或缺的组成部分,因为预测的 3D 边界框的质量在跟踪性能中起着重要作用。

基于 LiDAR 的 3D 目标检测方法 [11]、[56]、[57]、[58]、[59]、[60]、[61] 取得了令人印象深刻的性能,因为从 LiDAR 传感器检索的点云包含准确的 3D 结构信息.然而,激光雷达的高成本限制了其广泛应用。

[11] T. Yin, X. Zhou, and P . Krahenbuhl, “Center-based 3d object detection and tracking,” in CVPR, 2021, pp. 11 784–11 793.

[56] Y . Zhou and O. Tuzel, “V oxelnet: End-to-end learning for point cloud based 3d object detection,” in CVPR, 2018, pp. 4490–4499.

[57] Y . Yan, Y . Mao, and B. Li, “Second: Sparsely embedded convolutional detection,” Sensors, vol. 18, no. 10, p. 3337, 2018.

[58] A. H. Lang, S. V ora, H. Caesar, L. Zhou, J. Yang, and O. Beijbom, “Pointpillars: Fast encoders for object detection from point clouds,” in CVPR, 2019, pp. 12 697–12 705.

[59] S. Shi, X. Wang, and H. Li, “Pointrcnn: 3d object proposal generation and detection from point cloud,” in CVPR, 2019, pp. 770–779.

[60] L. Du, X. Ye, X. Tan, E. Johns, B. Chen, E. Ding, X. Xue, and J. Feng, “Ago-net: Association-guided 3d point cloud object detection network,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

[61] J. Liu, Y . Chen, X. Ye, Z. Tian, X. Tan, and X. Qi, “Spatial pruned sparse convolution for efficient 3d object detection,” arXiv preprint arXiv:2209.14201, 2022.

或者,基于相机的方法的最新进展使其低成本且移动性广泛可用,因此基于相机的方法由于其低成本和丰富的上下文信息而受到越来越多的关注。由于缺乏准确的深度,单目 3D 对象检测方法 [62]、[63]、[64]、[65]、[66]、[67] 直接通过深度神经网络推断几何知识或逐像素研究深度估计分布将图像转换为伪 LiDAR 点 [68]、[69]、[70]、[71]、[72]、[73]。

[62] X. Chen, K. Kundu, Y . Zhu, A. G. Berneshawi, H. Ma, S. Fidler, and R. Urtasun, “3d object proposals for accurate object class detection,” Advances in neural information processing systems, vol. 28, 2015.

[63] X. Chen, K. Kundu, Z. Zhang, H. Ma, S. Fidler, and R. Urtasun, “Monocular 3d object detection for autonomous driving,” in CVPR, 2016, pp. 2147–2156.

[64] T. Wang, X. Zhu, J. Pang, and D. Lin, “Fcos3d: Fully convolutional one-stage monocular 3d object detection,” in ICCV, 2021, pp. 913–922.

[65] Z. Zou, X. Ye, L. Du, X. Cheng, X. Tan, L. Zhang, J. Feng, X. Xue, and E. Ding, “The devil is in the task: Exploiting reciprocal appearancelocalization features for monocular 3d object detection,” in ICCV, 2021, pp. 2713–2722.

[66] Y . Zhang, J. Lu, and J. Zhou, “Objects are different: Flexible monocular 3d object detection,” in CVPR, 2021, pp. 3289–3298.

[67] X. Ye, M. Shu, H. Li, Y . Shi, Y . Li, G. Wang, X. Tan, and E. Ding, “Rope3d: The roadside perception dataset for autonomous driving and monocular 3d object detection task,” in CVPR, 2022, pp. 21 341–21 350.

[68] C. Reading, A. Harakeh, J. Chae, and S. L. Waslander, “Categorical depth distribution network for monocular 3d object detection,” in CVPR, 2021, pp. 8555–8564.

[69] X. Ye, L. Du, Y . Shi, Y . Li, X. Tan, J. Feng, E. Ding, and S. Wen, “Monocular 3d object detection via feature domain adaptation,” in ECCV. Springer, 2020, pp. 17–34.

[70] Y . Wang, W.-L. Chao, D. Garg, B. Hariharan, M. Campbell, and K. Q. Weinberger, “Pseudo-lidar from visual depth estimation: Bridging the gap in 3d object detection for autonomous driving,” in CVPR, 2019, pp.8445–8453.

[71] X. Weng and K. Kitani, “Monocular 3d object detection with pseudolidar point cloud,” in CVPRW, 2019, pp. 0–0.

[72] Y . Y ou, Y . Wang, W.-L. Chao, D. Garg, G. Pleiss, B. Hariharan, M. Campbell, and K. Q. Weinberger, “Pseudo-lidar++: Accurate depth for 3d object detection in autonomous driving,” arXiv preprint arXiv:1906.06310, 2019.

[73] J. M. U. Vianney, S. Aich, and B. Liu, “Refinedmpl: Refined monocular pseudolidar for 3d object detection in autonomous driving,” arXiv preprint arXiv:1911.09712, 2019.

多摄像头 3D 对象检测 [74]、[75]、[76]、[77]、[78]、[79] 通过学习鸟瞰图 (BEV) 中的强大表示,正在成为趋势并引起广泛关注,这由于其统一表示和对未来预测和规划等下游任务的轻松适应,因此非常简单。因此,以视觉为中心的多视图 BEV 感知方法显着缩小了基于相机和基于 LiDAR 的方法之间的性能差距。

[74] J. Philion and S. Fidler, “Lift, splat, shoot: Encoding images from arbitrary camera rigs by implicitly unprojecting to 3d,” in ECCV.Springer, 2020, pp. 194–210.

[75] Z. Li, W. Wang, H. Li, E. Xie, C. Sima, T. Lu, Q. Y u, and J. Dai, “Bevformer: Learning bird’s-eye-view representation from multi-camera images via spatiotemporal transformers,” arXiv preprint arXiv:2203.17270, 2022.

[76] Y . Wang, V . C. Guizilini, T. Zhang, Y . Wang, H. Zhao, and J. Solomon, “Detr3d: 3d object detection from multi-view images via 3d-to-2d queries,” in Conference on Robot Learning. PMLR, 2022, pp. 180–191.

[77] Y . Liu, T. Wang, X. Zhang, and J. Sun, “Petr: Position embedding transformation for multi-view 3d object detection,” arXiv preprint arXiv:2203.05625, 2022.

[78] Y . Li, Z. Ge, G. Y u, J. Yang, Z. Wang, Y . Shi, J. Sun, and Z. Li, “Bevdepth: Acquisition of reliable depth for multi-view 3d object detection,” arXiv preprint arXiv:2206.10092, 2022.

[79] K. Xiong, S. Gong, X. Ye, X. Tan, J. Wan, E. Ding, J. Wang, and X. Bai, “Cape: Camera view position embedding for multi-view 3d object detection,” arXiv preprint arXiv:2303.10209, 2023.

基于 LiDAR 的检测器是 3D MOT 的热门选择。PointRCNN和 CenterPoint 因其简单性和有效性而被许多 3D MOT 方法 [7]、[11]、[80]、[81]、[82] 采用。最近,基于图像的 3D 对象检测器 [76]、[78]、[83] 开始被一些 3D 跟踪器采用,例如 [84]、[85]、[86],因为图像信息可以为跟踪提供外观线索。我们的跟踪框架与模式无关,因此可以轻松地与各种 3D 对象检测器结合使用。

[7] X. Weng, J. Wang, D. Held, and K. Kitani, “3d multi-object tracking: A baseline and new evaluation metrics,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 10 359–10 366.

[11] T. Yin, X. Zhou, and P . Krahenbuhl, “Center-based 3d object detection and tracking,” in CVPR, 2021, pp. 11 784–11 793.

[80] N. Benbarka, J. Schröder, and A. Zell, “Score refinement for confidencebased 3d multi-object tracking,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021, pp.8083–8090.

[81] Z. Pang, Z. Li, and N. Wang, “Simpletrack: Understanding and rethinking 3d multi-object tracking,” arXiv preprint arXiv:2111.09621, 2021.

[82] Q. Wang, Y . Chen, Z. Pang, N. Wang, and Z. Zhang, “Immortal tracker: Tracklet never dies,” arXiv preprint arXiv:2111.13672, 2021.

[83] A. Kundu, Y . Li, and J. M. Rehg, “3d-rcnn: Instance-level 3d object reconstruction via render-and-compare,” in CVPR, 2018, pp. 3559– 3568.

[84] H.-N. Hu, Y .-H. Yang, T. Fischer, T. Darrell, F. Y u, and M. Sun, “Monocular quasi-dense 3d object tracking,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

[85] T. Zhang, X. Chen, Y . Wang, Y . Wang, and H. Zhao, “Mutr3d: A multicamera tracking framework via 3d-to-2d queries,” in CVPR, 2022, pp.4537–4546.

[86] J. Yang, E. Y u, Z. Li, X. Li, and W. Tao, “Quality matters: Embracing quality clues for robust 3d multi-object tracking,” arXiv preprint arXiv:2208.10976, 2022.

2.3 2D Multi-Object Tracking

数据关联是多目标跟踪的核心,它首先计算tracklets和检测框之间的相似度,并根据相似度利用不同的策略来匹配它们。

在 2D MOT 中,图像信息起着计算相似性的基本作用。位置、运动和外观是数据关联的有用线索。 SORT以非常简单的方式结合了位置和运动提示。它首先采用卡尔曼滤波器来预测新帧中轨迹的位置,然后计算检测框与预测框之间的 IoU 作为相似度。其他方法 [26]、[27]、[43]、[87] 设计网络来学习对象运动,并在大相机运动或低帧率的情况下获得更稳健的结果。位置和运动相似性在短期关联中都是准确的,而外观相似性有助于长期关联。长时间遮挡后,可以使用外观相似度重新识别对象。外观相似度可以通过 Re-ID 特征的余弦相似度来衡量。 DeepSORT 采用独立的 Re-ID 模型从检测框中提取外观特征。最近,联合检测和 Re-ID 模型 [8]、[9]、[23]、[34]、[88]、[89]、[90] 因其简单和高效而变得越来越流行。

[8] Y . Zhang, C. Wang, X. Wang, W. Zeng, and W. Liu, “Fairmot: On the fairness of detection and re-identification in multiple object tracking,” International Journal of Computer Vision, vol. 129, no. 11, pp. 3069– 3087, 2021.

[9] J. Pang, L. Qiu, X. Li, H. Chen, Q. Li, T. Darrell, and F. Y u, “Quasidense similarity learning for multiple object tracking,” in CVPR, 2021, pp. 164–173.

[23] Z. Lu, V . Rathod, R. V otel, and J. Huang, “Retinatrack: Online single stage joint detection and tracking,” in CVPR, 2020, pp. 14 668–14 678.

[34] Z. Wang, L. Zheng, Y . Liu, Y . Li, and S. Wang, “Towards real-time multi-object tracking,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XI 16. Springer, 2020, pp. 107–122.

[26] X. Zhou, V . Koltun, and P . Krähenbühl, “Tracking objects as points,” in ECCV. Springer, 2020, pp. 474–490.

[27] J. Wu, J. Cao, L. Song, Y . Wang, M. Yang, and J. Y uan, “Track to detect and segment: An online multi-object tracker,” in CVPR, 2021, pp. 12 352–12 361.

[43] P . Sun, Y . Jiang, R. Zhang, E. Xie, J. Cao, X. Hu, T. Kong, Z. Y uan, C. Wang, and P . Luo, “Transtrack: Multiple-object tracking with transformer,” arXiv preprint arXiv:2012.15460, 2020.

[87] B. Shuai, A. Berneshawi, X. Li, D. Modolo, and J. Tighe, “Siammot: Siamese multi-object tracking,” in CVPR, 2021, pp. 12 372–12 382.

[88] Y . Zhang, C. Wang, X. Wang, W. Liu, and W. Zeng, “V oxeltrack: Multi-person 3d human pose estimation and tracking in the wild,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

[89] X. Zhou, T. Yin, V . Koltun, and P . Krähenbühl, “Global tracking transformers,” in CVPR, 2022, pp. 8771–8780.

[90] Z. Xu, W. Yang, W. Zhang, X. Tan, H. Huang, and L. Huang, “Segment as points for efficient and effective online multi-object tracking and segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 10, pp. 6424–6437, 2021.

在相似度计算之后,匹配策略为对象分配身份。这可以通过匈牙利算法或贪心分配来完成。 SORT 通过匹配一次将检测框与轨迹匹配。 DeepSORT 提出了一种级联匹配策略,首先将检测框与最近的轨迹匹配,然后再与丢失的轨迹匹配。MOTDT 首先利用外观相似性进行匹配,然后利用 IoU 相似性来匹配未匹配的轨迹。QDTrack 通过双向 softmax 操作将外观相似度转化为概率,并采用最近邻搜索来完成匹配。注意力机制可以直接在帧之间传播框并隐式执行关联。最近的方法,如 [44]、[45]、[93] 提出跟踪查询来预测跟踪对象在后续帧中的位置。在不使用匈牙利算法的情况下,匹配是在注意交互过程中隐式执行的。

[44] T. Meinhardt, A. Kirillov, L. Leal-Taixe, and C. Feichtenhofer, “Trackformer: Multi-object tracking with transformers,” in CVPR, 2022, pp.8844–8854.

[45] F. Zeng, B. Dong, T. Wang, C. Chen, X. Zhang, and Y . Wei, “Motr: End-to-end multiple-object tracking with transformer,” arXiv preprint arXiv:2105.03247, 2021.

[93] Z. Zhao, Z. Wu, Y . Zhuang, B. Li, and J. Jia, “Tracking objects as pixel-wise distributions,” in ECCV. Springer, 2022, pp. 76–94.

大多数 2D MOT 方法侧重于如何设计更好的关联策略。然而,我们认为检测框的使用方式决定了数据关联的上限。在通过各种检测器获得检测框后,大多数方法[8]、[9]、[23]、[34]只将高分框保持一个阈值,即0.5,并将这些框作为数据关联的输入。这是因为低分框包含许多损害跟踪性能的背景。然而,我们观察到许多被遮挡的物体可以被正确检测到但得分较低。为了减少缺失检测并保持轨迹的持久性,我们保留所有检测框并关联它们中的每一个。我们专注于如何在关联过程中充分利用从高分到低分的检测框。

2.4 3D Multi-Object Tracking

3D MOT 与 2D MOT 有许多共同点,即数据关联。大多数 3D MOT 方法遵循检测跟踪范例,该范例首先检测对象,然后跨时间关联它们。与 2D MOT 相比,3D MOT 中使用的位置和运动线索更加准确可靠,因为它们包含深度信息。例如,当两个行人相遇时,从图像平面获得的 2D IoU 很大,很难通过 2D 位置区分他们。在 3D 场景中,可以根据深度的不同轻松将两个行人分开。

基于 LiDAR 的 3D 跟踪器倾向于利用位置和运动线索来计算相似性。与 SORT 类似,AB3DMOT 为 3D MOT 提供了一个简单的基线和一个新的评估指标,它采用卡尔曼滤波器作为运动模型,并使用检测和轨迹之间的 3D IoU 进行关联。CenterPoint 将 CenterTrack 中基于中心的跟踪范例扩展到 3D。它利用预测的物体速度作为恒速运动模型,并在突然运动下显示出有效性。以下工作 [80]、[81]、[82]、[94] 侧重于关联度量和生命周期管理的改进。 QTrack 估计预测对象属性的质量,并提出质量感知关联策略以获得更稳健的关联。

[80] N. Benbarka, J. Schröder, and A. Zell, “Score refinement for confidencebased 3d multi-object tracking,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021, pp.8083–8090.

[81] Z. Pang, Z. Li, and N. Wang, “Simpletrack: Understanding and rethinking 3d multi-object tracking,” arXiv preprint arXiv:2111.09621, 2021.

[82] Q. Wang, Y . Chen, Z. Pang, N. Wang, and Z. Zhang, “Immortal tracker: Tracklet never dies,” arXiv preprint arXiv:2111.13672, 2021.

[94] H.-K. Chiu, J. Li, R. Ambrus ¸, and J. Bohg, “Probabilistic 3d multi-

modal, multi-object tracking for autonomous driving,” in ICRA, 2021, pp. 14 227–14 233.

从图像中提取的视觉外观特征可以进一步增强 3D MOT 中的长期关联。 QD3DT 在 2D MOT 中提出了一种遵循 QDTrack 的外观特征的准密集相似性学习来处理对象重现问题。 GNN3DMOT 集成了运动和外观特征,并通过图神经网络学习它们之间的交互。 TripletTrack 提出局部对象特征嵌入来编码有关视觉外观和单眼 3D 对象特征的信息,以在遮挡和缺失检测的情况下实现更稳健的性能。 Transformer 的最新进展使其在 3D MOT 中具有吸引力。继 2D MOT 中的 MOTR 之后,基于相机的 3D 跟踪器 MUTR3D 利用 Transformer 学习具有 2D 视觉信息的 3D 表示,并以端到端的方式跨时间传播 3D 边界框。

我们只利用运动线索来执行数据关联,以更简单地统一 2D 和 3D MOT。我们提出了一种基于检测速度和卡尔曼滤波器的综合运动预测策略。采用卡尔曼滤波预测的平滑位置进行长期关联,起到类似外观特征处理重现问题的作用。

3 BYTETRACK V2

我们在一个简单统一的框架中解决了 2D 和 3D MOT 的问题。它包含三个部分:对象检测、运动预测和数据关联。我们首先在第3.1节中介绍了如何表述2D MOT和3D MOT的问题。然后,我们在第3.2节中列出了一些2D和3D检测器以及基本的卡尔曼滤波器运动模型。在第3.3节中,我们介绍了专门为3D MOT提出的互补3D运动预测策略。最后,我们在第3.4节中详细阐述了所提出的检测驱动的分层数据关联策略的核心步骤,以及它如何缓解对象丢失和轨迹碎片的问题。跟踪框架的概述如图3所示。

3.1 Problem Formulation(问题表述)

多目标跟踪。多目标跟踪的目标是估计视频中的目标轨迹。假设我们要在视频中获得 L L L 个轨迹 S = s 1 , s 2 , . . . , s L \mathbb{S} = {s^1, s^2, ..., s^L} S=s1,s2,...,sL。每条轨迹 s i = b t 1 i , b t 1 + 1 i , . . . , b t 2 i s^i = {b^i_{t1}, b^i_{t1+1}, ..., b^i_{t2}} si=bt1i,bt1+1i,...,bt2i包含一个对象在一个时间段内的位置信息,即从 t 1 t_1 t1帧到 t 2 t_2 t2帧,该对象出现的位置。在 2D MOT 中,对象 i i i 在帧 t t t 的位置可以表示为 b t 2 d i = [ x 1 , y 1 , x 2 , y 2 ] ∈ R 4 b^i_{t2d} = [x_1, y_1, x_2, y_2] ∈ \mathbb{R}^4 bt2di=[x1,y1,x2,y2]∈R4,其中 ( x 1 , y 1 ) , ( x 2 , y 2 ) (x_1, y_1), (x_2, y_2) (x1,y1),(x2,y2)是图像平面中二维对象边界框的左上和右下坐标。在 3D MOT 中,跟踪过程通常在 3D 世界坐标中执行。对象 i i i 在帧 t t t 的 3D 位置可以表示为 b t 3 d i = [ x , y , z , θ , l , w , h ] ∈ R 7 b^i_{t3d} = [x, y, z, θ, l, w, h] ∈ \mathbb{R}^7 bt3di=[x,y,z,θ,l,w,h]∈R7,其中 ( x , y , z ) (x, y, z) (x,y,z) 是对象中心的 3D 世界位置, θ θ θ 是物体方向, ( l , w , h ) (l, w, h) (l,w,h) 是物体尺寸。

数据关联。我们遵循多目标跟踪中流行的tracking-by-detection范例,该范例首先检测单个视频帧中的对象,然后将它们关联到帧之间并随时间形成轨迹。假设我们在第 t t t 帧有 M M M 个检测和 N N N 个历史轨迹,我们的目标是将每个检测分配给其中一个轨迹,该轨迹在整个视频中具有相同的身份。令 A \mathbb{A} A 表示由所有可能的关联(或匹配)组成的空间。在多目标跟踪的设置下,每个检测最多匹配一个轨迹,每个轨迹最多匹配一个检测。我们定义空间 A \mathbb{A} A 如下:

其中 M = { 1 , 2 , . . . , M } , N = { 1 , 2 , . . . , N } \mathbb{M} = \left\{ 1, 2, ..., M \right\} ,\mathbb{N} = \left\{ 1, 2, ..., N \right\} M={1,2,...,M},N={1,2,...,N}, A A A 是整个 M M M 个检测和 N N N 个轨迹的一个可能匹配。当第 i i i 个检测与第 $j $个轨迹匹配时,则 m i j = 1 m_{ij} = 1 mij=1。设 d t 1 , . . . , d t M d^1_t , ..., d^M_t dt1,...,dtM 和 h t 1 , . . . , h t N h^1_t , ..., h^N_t ht1,...,htN 分别是帧 t t t 处所有 M M M 个检测和 N N N 个轨迹的位置。我们计算所有检测和轨迹之间的相似度矩阵 S t ∈ R M × N S_t∈\mathbb{R}^{M×N} St∈RM×N,如下所示:

其中相似性可以通过检测和轨迹之间的一些空间距离来计算,例如 IoU 或 L2 距离。我们的目标是获得最佳匹配 A ∗ A* A∗,其中匹配检测和轨迹之间的总相似度(或得分)最高:

3.2 Preliminary

二维物体检测器。我们采用 YOLOX 作为我们的 2D 物体检测器。 YOLOX 是一种无锚检测器,配备了先进的检测技术,即解耦头,以及源自 OTA 的领先标签分配策略 SimOTA。它还采用强大的数据增强,如mosaic[32]和mixup[98],以进一步提高检测性能。与其他现代检测器 [99]、[100] 相比,YOLOX 在速度和精度之间取得了极好的平衡,并且在实际应用中很有吸引力。

[99] H. Zhang, F. Li, S. Liu, L. Zhang, H. Su, J. Zhu, L. M. Ni, and H.-Y .Shum, “Dino: Detr with improved denoising anchor boxes for end-toend object detection,” arXiv preprint arXiv:2203.03605, 2022.

[100] Z. Liu, Y . Lin, Y . Cao, H. Hu, Y . Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” arXiv preprint arXiv:2103.14030, 2021.

基于相机的 3D 对象检测器。我们遵循多摄像头 3D 对象检测设置,通过学习鸟瞰图 (BEV) 中强大且统一的表示,显示出优于单目方法的优势。我们利用 PETRv2 [101] 作为我们基于相机的 3D 物体检测器。它建立在 PETR [77] 之上,通过将 3D 坐标的位置信息编码为图像特征,将基于变换器的 2D 对象检测器 DETR [40] 扩展到多视图 3D 设置。 PETRv2 利用先前帧的时间信息来提高检测性能。

基于 LiDAR 的 3D 物体检测器。我们采用 CenterPoint [11] 和 TransFusion-L [102] 作为我们基于 LiDAR 的 3D 物体检测器。 CenterPoint 利用关键点检测器找到对象的中心,并简单地回归到其他 3D 属性。它还在第二阶段使用对象上的附加点特征来优化这些 3D 属性。 TransFusion-L 由卷积主干和基于变压器解码器的检测头组成。 它使用一组稀疏的对象查询从 LiDAR 点云预测 3D 边界框。

基本运动模型。我们利用卡尔曼滤波器 [12] 作为 2D 和 3D MOT 的基本运动模型。与[4]类似,我们在二维跟踪场景中定义了一个八维状态空间 ( u , v , a , b , u ˙ , v ˙ , a ˙ , b ˙ ) (u, v, a, b, \dot{u}, \dot{v}, \dot{a}, \dot{b}) (u,v,a,b,u˙,v˙,a˙,b˙),其中 P 2 d = ( u , v , a , b ) P^{2d} = (u, v, a, b) P2d=(u,v,a,b)是 2D 边界框中心位置、宽高比(宽度/高度)和边界框高度。 V 2 d = ( u ˙ , v ˙ , a ˙ , b ˙ ) V^{2d} = (\dot{u}, \dot{v}, \dot{a}, \dot{b}) V2d=(u˙,v˙,a˙,b˙) 是图像平面中各自的速度。在 3D 跟踪场景中,我们按照 [7] 定义一个十维状态空间 ( x , y , z , θ , l , w , h , x ˙ , y ˙ , z ˙ ) (x, y, z, θ, l, w, h, \dot{x}, \dot{y}, \dot{z}) (x,y,z,θ,l,w,h,x˙,y˙,z˙),其中 P 3 d = ( x , y , z ) P^{3d} = (x, y, z) P3d=(x,y,z)是 3D 边界框中心位置, ( l , w , h ) (l, w, h) (l,w,h)是物体的大小, θ θ θ是物体方向, V 3 d = ( x ˙ , y ˙ , z ˙ ) V^{3d} = (\dot{x}, \dot{y}, \dot{z}) V3d=(x˙,y˙,z˙) 是 3D 空间中的相应速度。与 [7] 不同,我们在 3D 世界坐标中定义状态空间以消除自我运动的影响。我们直接采用标准的卡尔曼滤波器,具有等速运动和线性观测模型。 2D和3D跟踪场景中第 t + 1 t+1 t+1帧的运动预测过程可以表示如下:

P t + 1 2 d = P t 2 d + V t 2 d P t + 1 3 d = P t 3 d + V t 3 d P^{2d}_{t+1}=P^{2d}_{t}+V^{2d}_{t} \\ P^{3d}_{t+1}=P^{3d}_{t}+V^{3d}_{t} Pt+12d=Pt2d+Vt2dPt+13d=Pt3d+Vt3d

[7] X. Weng, J. Wang, D. Held, and K. Kitani, “3d multi-object tracking: A baseline and new evaluation metrics,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 10 359–10 366.

每个轨迹的更新状态是轨迹和匹配检测(或观察)的加权平均值。权重由轨迹和匹配检测遵循贝叶斯规则的不确定性决定。

3.3 Complementary 3D Motion Prediction(互补的 3D 运动预测)

我们提出了一种互补的 3D 运动预测策略来解决驾驶场景中的突然运动和短期物体消失问题。具体来说,我们采用短期关联的检测速度和长期关联的卡尔曼滤波器。

在 3D 场景中,现代检测器 [11]、[75]、[101] 能够通过时间建模预测准确的短期速度。卡尔曼滤波器通过基于历史信息的状态更新对平滑的长期速度进行建模。我们通过双边预测策略最大化两种运动模型的优势。我们采用卡尔曼滤波器进行前向预测,采用检测到的物体速度进行后向预测。后向预测负责活动轨迹的短期关联,而前向预测用于丢失轨迹的长期关联。图 4 说明了互补运动预测策略。

[11] T. Yin, X. Zhou, and P . Krahenbuhl, “Center-based 3d object detection and tracking,” in CVPR, 2021, pp. 11 784–11 793.

[75] Z. Li, W. Wang, H. Li, E. Xie, C. Sima, T. Lu, Q. Y u, and J. Dai, “Bevformer: Learning bird’s-eye-view representation from multi-camera images via spatiotemporal transformers,” arXiv preprint arXiv:2203.17270, 2022.

[101] Y . Liu, J. Yan, F. Jia, S. Li, Q. Gao, T. Wang, X. Zhang, and J. Sun, “Petrv2: A unified framework for 3d perception from multi-camera images,” arXiv preprint arXiv:2206.01256, 2022.

在双边预测之后,我们使用了第3.4节中引入的统一2D和3D数据关联策略。在第一个关联中,在来自反向预测的检测结果 D t − 1 D^{t−1} Dt−1和轨迹 T t − 1 T^{t−1} Tt−1之间计算相似度 S t ∈ R M × N S^t∈\mathbb{R}^{M×N} St∈RM×N,如下所示:

S t ( i , j ) = G I o U ( D i t − 1 , T j t − 1 ) ( 9 ) S_t(i,j)=GIoU(D_i^{t-1},T_j^{t-1})\qquad(9) St(i,j)=GIoU(Dit−1,Tjt−1)(9)

我们采用 3D GIoU [103] 作为相似性度量来解决检测框和轨迹框之间偶尔出现的非重叠问题。我们使用匈牙利算法[91]完成基于 S t S_t St的身份分配。关联后,匹配的检测 D m a t c h t D^t_{match} Dmatcht用于按照标准卡尔曼滤波器更新规则更新匹配的tracklets T m a t c h t T^t_{match} Tmatcht。在式8中的前向预测策略,当轨迹丢失时起着重要作用,即没有匹配的检测。当丢失的对象在后续帧中再次出现时,可以通过与预测位置的相似度重新关联,也称为轨迹重生。算法 1 中的第二个关联遵循与第一个关联相同的过程。

我们采用检测分数通过自适应更新帧 t t t 轨迹 j j j 的卡尔曼滤波器中的测量不确定性矩阵 R ^ t j \hat{R}^j_t R^tj 来进一步增强运动预测,如下所示:

R ^ t j = α ( 1 − s t j ) 2 R t j ( 10 ) \hat{R}^j_t=\alpha(1-s_t^j)^2R_t^j\qquad(10) R^tj=α(1−stj)2Rtj(10)

其中 s t j s^j_t stj 是轨迹 j j j 在帧 t t t 的检测分数, α α α 是控制不确定性大小的超参数。通过将检测分数插入不确定性矩阵,我们使卡尔曼滤波器对不同质量的检测更加稳健。

3.4 Unified 2D and 3D Data Association

我们为 2D 和 3D MOT 提出了一种简单、有效且统一的数据关联方法。与以往的方法[7]、[8]、[9]、[11]、[34]只保留高分检测框不同,我们保留每个检测框并将它们分为高分和低分检测框。得分。我们检测驱动的分层数据关联策略的整个流程如图 3 所示。

概述。在视频的第一帧中,我们将所有检测框初始化为 tracklet。在接下来的帧中,我们首先将高分检测框与轨迹关联起来。一些轨迹不匹配是因为它们不匹配适当的高分检测框,这通常发生在发生遮挡、运动模糊或大小变化时。然后,我们将低分检测框与这些不匹配的轨迹关联起来,以恢复低分检测框中的对象并同时过滤掉背景。 ByteTrackV2 的伪代码如算法 1 所示。

输入。 ByteTrackV2 的输入是一个视频序列 V V V,以及一个对象检测器 Det。我们还设置了检测分数阈值 τ τ τ。输出是视频的轨道 T T T,每个轨道包含每个帧中对象的边界框和标识。

检测箱。对于视频中的每一帧,我们使用检测器 Det 预测检测框和分数。我们根据检测分数阈值 τ τ τ 将所有检测框分为 D h i g h D_{high} Dhigh 和 D l o w D_{low} Dlow 两部分。对于分数高于 τ τ τ的检测框,我们将它们放入高分检测框 D h i g h D_{high} Dhigh中。对于分数低于 τ τ τ 的检测框,我们将它们放入低分检测框 D l o w D_{low} Dlow(算法 1 中的第 3 至 13 行)。

运动预测。在分离低分检测框和高分检测框后,我们预测 T T T中每个轨道在当前帧中的新位置(算法1中的第14行至第16行)。对于2D MOT,我们直接采用卡尔曼滤波器进行运动预测。对于3D MOT,我们使用第3.3节中介绍的互补运动预测策略。

高分框关联。在高分检测框 D h i g h D_{high} Dhigh和所有轨道 T T T(包括丢失轨道 T l o s t T_{lost} Tlost)之间执行第一关联。Similarity #1可以通过检测框 D h i g h D_{high} Dhigh和轨道 T T T的预测框之间的空间距离(例如IoU)来计算。然后,我们采用匈牙利算法[91]来完成基于相似度的匹配。我们保留了 D r e m a i n D_{remain} Dremain中的不匹配检测和 T r e m a i n T_{remain} Tremain中的非匹配轨道(算法1中的第17行至第19行)。整个管道非常灵活,可以兼容其他不同的关联方法。例如,当它与FairMOT[8]相结合时,Re-ID特征被添加到算法1中的*first association*中,其他都是一样的。在2D MOT的实验中,我们将关联方法应用于9种不同的最先进的跟踪器,并在几乎所有的指标上都取得了显著的改进。

低分框关联。在低得分检测框 D l o w D_{low} Dlow和第一次关联之后的剩余轨道 T r e m a i n T_{remain} Tremain之间执行第二关联。我们保留 T r e − r e m a i n T_{re−remain} Tre−remain中不匹配的轨道,并删除所有不匹配的低分检测框,因为我们将它们视为背景。(算法1中的第20至21行)。我们发现单独使用IoU作为第二关联中的Similarity#2很重要,因为低分数检测框通常包含严重的遮挡或运动模糊,并且外观特征不可靠。因此,当应用于其他基于Re-ID的跟踪器[8]、[9]、[34]时,我们在第二次关联中不采用外观相似性。

追踪重生。关联后,未匹配的轨迹将从轨迹集中删除。为了简单起见,我们没有在算法1中列出轨道再生[4]、[26]、[52]的过程。事实上,对于长期的关联来说,保留轨迹的身份是必要的。对于第二次关联后保留的不匹配轨道 T r e − r e m a i n T_{re−remain} Tre−remain,我们将它们放入 T l o s t T_{lost} Tlost。对于 T l o s t T_{lost} Tlost中的每个轨道,只有当它存在超过一定数量的帧(即30帧)时,我们才会将其从轨道 T T T中删除。否则,我们保留 T T T中丢失的轨迹 T l o s t T_{lost} Tlost(算法1中的第22行)。最后,我们在第一次关联之后从不匹配的高分检测框 D r e m a i n D_{remain} Dremain中初始化新的轨迹(算法1中的第23到27行)。每个单独帧的输出是当前帧中轨道 T T T的边界框和标识。

讨论。我们根据经验发现,当遮挡率增加时,检测分数下降。当发生遮挡情况时,分数先降低后增加,因为行人先被遮挡,然后再次出现。这激发了我们首先将高分框与tracklets联系起来。如果轨迹集与任何高分框都不匹配,则很有可能被遮挡,检测分数下降。然后,我们将其与低分数框相关联,以跟踪被遮挡的目标。对于那些低分框中的假阳性,没有任何tracklet能够与之匹配。因此,我们将它们扔掉。这是我们的数据关联算法工作的关键点。

4 DATASETS AND METRICS

4.1 Datasets

MOT17数据集[46]具有由移动和固定摄像机以不同帧速率从不同视点拍摄的视频。它包含7个训练视频和7个测试视频。我们使用MOT17训练集中每个视频的前半部分进行训练,后半部分进行验证[26]。MOT17同时提供“公共检测”和“私人检测”协议。公共探测器包括DPM[47]、Faster R-CNN[15]和SDP[48]。对于私人检测设置,我们在消融研究中遵循[26]、[27]、[43]、[45],在CrowdHuman数据集[104]和MOT17半训练集的组合上进行训练。在MOT17的测试集上进行测试时,我们添加了Cityperson[105]和ETHZ[106]用于[8]、[34]、[35]之后的培训。

MOT20数据集[107]在非常拥挤的场景中捕捉视频,因此会发生很多遮挡。车架内的平均行人比MOT17大得多(139人对33人)。MOT20包含4个训练视频和4个较长视频长度的测试视频。它还提供了FasterRCNN的公共检测结果。我们只使用CrowdHuman数据集和MOT20的训练集在私人检测设置下进行训练。

HiEve数据集[108]是一个以人为中心的大规模数据集,专注于拥挤和复杂的事件。它包含更长的平均轨迹长度,给人类跟踪任务带来了更大的难度。HiEve拍摄了30多种不同场景的视频,包括地铁站、街道和餐厅,这使得跟踪问题成为一项更具挑战性的任务。它包含19个培训视频和13个测试视频。我们将CrowdHuman和HiEve的训练集结合起来进行训练。

BDD100K数据集[109]是最大的2D驾驶视频数据集,2D MOT任务的数据集拆分为1400个用于训练的视频、200个用于验证的视频和400个用于测试的视频。它需要跟踪8类对象,并包含大型相机运动的情况。我们将检测任务和2D MOT任务的训练集结合起来进行训练。

nuScenes数据集[110]是一个大规模的3D对象检测和跟踪数据集。这是第一个搭载全自动驾驶汽车传感器套件的数据集:6个摄像头、5个雷达和1个激光雷达,均具有全360度视场。跟踪任务包含具有7个对象类的三维标注。nuScenes包括1000个场景,包括700个训练视频、150个验证视频和150个测试视频。对于每个序列,仅对以2 FPS采样的关键帧进行注释。在一个序列中,每个相机大约有40个关键帧。我们只使用每个序列中的关键帧进行跟踪。

4.2 Metrics

2D MOT。我们使用CLEAR度量[111],包括MOTA、FP、FN、ID等,IDF1[112]和HOTA[113]来评估跟踪性能的不同方面。MOTA是根据FP、FN和ID计算的,如下所示:

其中GT代表地面实况对象的数量。考虑到FP和FN的数量大于ID,MOTA更关注检测性能。IDF1评估身份保存能力,并更多地关注关联性能。HOTA是最近提出的一种度量,它明确地平衡了执行精确检测、关联和定位的效果。对于BDD100K数据集,有一些多类度量,如mMOTA和mIDF1。mMOTA/mIDF1是通过对所有类别的MOTA/IDF1进行平均来计算的。

3D MOT. nuScenes跟踪基准采用平均多目标跟踪精度(AMOTA)[7]作为主要指标,对不同召回阈值上的MOTA指标进行平均,以减少检测置信阈值的影响。sMOTAr[7]将MOTA增加一个术语,以针对相应的召回进行调整,并保证sMOTAr值的范围从0.0到1.0:

然而,我们在实验中发现,它仍然需要选择一个合适的检测分数阈值,因为假阳性可能会误导关联结果

5 EXPERIMENTS

5.1 Implementation Details

2D MOT。检测器是YOLOX[33],YOLOX-X作为主干,COCO预训练模型[114]作为初始化权重。对于MOT17,训练计划是MOT17、CrowdHuman、Cityperson和ETHZ组合的80个epochs。对于MOT20和HiEve,我们只添加CrowdHuman作为额外的训练数据。对于BDD100K,我们不使用额外的训练数据,只训练50个epochs。在多尺度训练过程中,输入图像大小为1440×800,最短边的范围为576到1024。数据扩充包括Mosaic[32]和Mixup[98]。该模型在8个NVIDIA特斯拉V100 GPU上进行训练,批量大小为48。优化器是SGD,重量衰减为5×10−4,动量为0.9。初始学习率为10−3,有1个epochs预热和余弦退火计划。总训练时间约为12小时。根据[33],在单个GPU上以FP16精度[115]和1的批量大小测量FPS。

对于跟踪部分,除非另有规定,否则默认检测分数阈值τ为0.6。对于MOT17、MOT20和HiEve的基准评估,我们只使用IoU作为相似性度量。在线性分配步骤中,如果检测框和轨迹框之间的IoU小于0.2,则匹配将被拒绝。对于丢失的轨迹,我们将其保留30帧,以防再次出现。对于BDD100K,我们使用UniTrack[116]作为Re-ID模型。在消融研究中,我们使用FastReID[117]来提取MOT17的Re-ID特征。

3D MOT. 对于相机模态设置,我们采用PETRv2[101],VoVNetV2主干[118]作为检测器。输入图像大小为1600×640。检测查询编号设置为1500。它在验证数据集上使用预训练模型FCOS3D[64]进行训练,在测试数据集上用DD3D[119]预训练模型进行初始化。优化器是AdamW[120],其重量衰减为0.01。学习率用2.0×10−4初始化,并用余弦退火策略衰减。该模型在8个特斯拉A100 GPU上进行了24个时期的批量8个GPU的训练。对于激光雷达模态设置,我们使用具有VoxelNet[56]3D主干的TransFusion-L作为nuScenes测试集的检测器。该模型以激光雷达点云作为输入,训练了20个时期。对于nuScenes验证集的结果,我们采用了以V oxelNet主干作为检测器的CenterPoint[11]。相同的检测结果用于与其他3D MOT方法[7]、[11]、[80]、[94]进行公平比较。

对于跟踪部分,PETRv2和CenterPoint的检测得分阈值τ为0.2。TransFusionL的检测分数低于其他检测器,因为它被计算为热图分数和分类分数的几何平均值,所以我们将TransFusionL.的阈值τ设置为0.01。我们为不同的对象类别设置不同的3D GIoU阈值,因为它们具有不同的大小和速度。具体地说,我们为自行车设置了-0.7,为公共汽车设置了-0.2,为汽车设置了-0.1,为摩托车设置了-0.5,为行人设置了-0.0,为拖车设置了-0.4,为卡车设置了-0.1。与2D MOT类似,我们将丢失的轨迹保持30帧,以防再次出现。用于更新方程中测量不确定度矩阵的超参数α。10对于基于相机的方法为100,对于基于激光雷达的方法为10。

实验部分就略过了,详细的可以去原论文看,在此不多赘述

6 CONCLUSION

我们介绍了ByteTrackV2,这是一个简单而统一的跟踪框架,旨在解决2D和3D MOT的问题。ByteTrackV2融合了对象检测、运动预测和检测驱动的分层数据关联,使其成为MOT的全面解决方案。分层数据关联策略利用检测分数作为强大的先验,在低分数检测中识别正确的对象,减少了遗漏检测和碎片轨迹的问题。此外,我们针对3D MOT的集成运动预测策略有效地解决了突然运动和物体丢失的问题。ByteTrackV2在2D和3D MOT基准测试上都实现了最先进的性能。此外,它具有强大的泛化能力,可以很容易地与不同的2D和3D检测器组合,而无需任何可学习的参数。我们相信,这个简单统一的跟踪框架将在现实世界的应用中发挥作用。

REFERENCES

[1] A. Milan, S. Roth, and K. Schindler, “Continuous energy minimization for multitarget tracking,” IEEE transactions on pattern analysis and machine intelligence, vol. 36, no. 1, pp. 58–72, 2013.

[2] S.-H. Bae and K.-J. Y oon, “Robust online multi-object tracking based on tracklet confidence and online discriminative appearance learning,” in CVPR, 2014, pp. 1218–1225.

[3] A. Bewley, Z. Ge, L. Ott, F. Ramos, and B. Upcroft, “Simple online and realtime tracking,” in ICIP. IEEE, 2016, pp. 3464–3468.

[4] N. Wojke, A. Bewley, and D. Paulus, “Simple online and realtime tracking with a deep association metric,” in ICIP. IEEE, 2017, pp.3645–3649.

[5] W. Luo, B. Yang, and R. Urtasun, “Fast and furious: Real time endto-end 3d detection, tracking and motion forecasting with a single convolutional net,” in CVPR, 2018, pp. 3569–3577.

[6] E. Baser, V . Balasubramanian, P . Bhattacharyya, and K. Czarnecki, “Fantrack: 3d multi-object tracking with feature association network,” in 2019 IEEE Intelligent V ehicles Symposium (IV). IEEE, 2019, pp.1426–1433.

[7] X. Weng, J. Wang, D. Held, and K. Kitani, “3d multi-object tracking: A baseline and new evaluation metrics,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 10 359–10 366.

[8] Y . Zhang, C. Wang, X. Wang, W. Zeng, and W. Liu, “Fairmot: On the fairness of detection and re-identification in multiple object tracking,” International Journal of Computer Vision, vol. 129, no. 11, pp. 3069– 3087, 2021.

[9] J. Pang, L. Qiu, X. Li, H. Chen, Q. Li, T. Darrell, and F. Y u, “Quasidense similarity learning for multiple object tracking,” in CVPR, 2021, pp. 164–173.

[10] X. Weng, J. Wang, D. Held, and K. Kitani, “Ab3dmot: A baseline for 3d multi-object tracking and new evaluation metrics,” arXiv preprint arXiv:2008.08063, 2020.

[11] T. Yin, X. Zhou, and P . Krahenbuhl, “Center-based 3d object detection and tracking,” in CVPR, 2021, pp. 11 784–11 793.

[12] R. E. Kalman, “A new approach to linear filtering and prediction problems,” J. Fluids Eng., vol. 82, no. 1, pp. 35–45, 1960.

[13] S. Chen, X. Wang, T. Cheng, Q. Zhang, C. Huang, and W. Liu, “Polar parametrization for vision-based surround-view 3d detection,” arXiv preprint arXiv:2206.10965, 2022.

[14] Y . Zhang, P . Sun, Y . Jiang, D. Y u, F. Weng, Z. Y uan, P . Luo, W. Liu, and X. Wang, “Bytetrack: Multi-object tracking by associating every detection box,” in ECCV. Springer, 2022, pp. 1–21.

[15] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” in Advances in neural information processing systems, 2015, pp. 91–99.

[16] K. He, G. Gkioxari, P . Dollár, and R. Girshick, “Mask r-cnn,” in ICCV, 2017, pp. 2961–2969.

[17] J. Redmon and A. Farhadi, “Y olov3: An incremental improvement,” arXiv preprint arXiv:1804.02767, 2018.

[18] T.-Y . Lin, P . Goyal, R. Girshick, K. He, and P . Dollár, “Focal loss for dense object detection,” in ICCV, 2017, pp. 2980–2988.

[19] Z. Cai and N. V asconcelos, “Cascade r-cnn: Delving into high quality object detection,” in CVPR, 2018, pp. 6154–6162.

[20] P . Sun, R. Zhang, Y . Jiang, T. Kong, C. Xu, W. Zhan, M. Tomizuka, L. Li, Z. Y uan, C. Wang et al, “Sparse r-cnn: End-to-end object detection with learnable proposals,” in CVPR, 2021, pp. 14 454–14 463.

[21] P . Sun, Y . Jiang, E. Xie, W. Shao, Z. Y uan, C. Wang, and P . Luo, “What makes for end-to-end object detection?” in Proceedings of the 38th International Conference on Machine Learning, ser. Proceedings of Machine Learning Research, vol. 139. PMLR, 2021, pp. 9934–9944.

[22] C.-Y . Wang, A. Bochkovskiy, and H.-Y . M. Liao, “Y olov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors,” arXiv preprint arXiv:2207.02696, 2022.

[23] Z. Lu, V . Rathod, R. V otel, and J. Huang, “Retinatrack: Online single stage joint detection and tracking,” in CVPR, 2020, pp. 14 668–14 678.

[24] J. Peng, C. Wang, F. Wan, Y . Wu, Y . Wang, Y . Tai, C. Wang, J. Li, F. Huang, and Y . Fu, “Chained-tracker: Chaining paired attentive regression results for end-to-end joint multiple-object detection and tracking,” in ECCV. Springer, 2020, pp. 145–161.

[25] X. Zhou, D. Wang, and P . Krähenbühl, “Objects as points,” arXiv preprint arXiv:1904.07850, 2019.

[26] X. Zhou, V . Koltun, and P . Krähenbühl, “Tracking objects as points,” in ECCV. Springer, 2020, pp. 474–490.

[27] J. Wu, J. Cao, L. Song, Y . Wang, M. Yang, and J. Y uan, “Track to detect and segment: An online multi-object tracker,” in CVPR, 2021, pp. 12 352–12 361.

[28] L. Zheng, M. Tang, Y . Chen, G. Zhu, J. Wang, and H. Lu, “Improving multiple object tracking with single object tracking,” in CVPR, 2021, pp. 2453–2462.

[29] Y . Wang, K. Kitani, and X. Weng, “Joint object detection and multi-object tracking with graph neural networks,” arXiv preprint arXiv:2006.13164, 2020.

[30] P . Tokmakov, J. Li, W. Burgard, and A. Gaidon, “Learning to track with object permanence,” arXiv preprint arXiv:2103.14258, 2021.

[31] Q. Wang, Y . Zheng, P . Pan, and Y . Xu, “Multiple object tracking with correlation learning,” in CVPR, 2021, pp. 3876–3886.

[32] A. Bochkovskiy, C.-Y . Wang, and H.-Y . M. Liao, “Y olov4: Optimal speed and accuracy of object detection,” arXiv preprint arXiv:2004.10934, 2020.

[33] Z. Ge, S. Liu, F. Wang, Z. Li, and J. Sun, “Y olox: Exceeding yolo series in 2021,” arXiv preprint arXiv:2107.08430, 2021.

[34] Z. Wang, L. Zheng, Y . Liu, Y . Li, and S. Wang, “Towards real-time multi-object tracking,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XI 16. Springer, 2020, pp. 107–122.

[35] C. Liang, Z. Zhang, Y . Lu, X. Zhou, B. Li, X. Ye, and J. Zou, “Rethinking the competition between detection and reid in multi-object tracking,” arXiv preprint arXiv:2010.12138, 2020.

[36] C. Liang, Z. Zhang, X. Zhou, B. Li, Y . Lu, and W. Hu, “One more check: Making” fake background” be tracked again,” arXiv preprint arXiv:2104.09441, 2021.

[37] P . Chu, J. Wang, Q. Y ou, H. Ling, and Z. Liu, “Transmot: Spatialtemporal graph transformer for multiple object tracking,” arXiv preprint arXiv:2104.00194, 2021.

[38] B. Yan, Y . Jiang, P . Sun, D. Wang, Z. Y uan, P . Luo, and H. Lu, “Towards grand unification of object tracking,” arXiv preprint arXiv:2207.07078, 2022.

[39] Y . Zhang, C. Wang, X. Wang, W. Zeng, and W. Liu, “Robust multiobject tracking by marginal inference,” in ECCV. Springer, 2022, pp.22–40.

[40] N. Carion, F. Massa, G. Synnaeve, N. Usunier, A. Kirillov, and S. Zagoruyko, “End-to-end object detection with transformers,” in ECCV. Springer, 2020, pp. 213–229.

[41] X. Zhu, W. Su, L. Lu, B. Li, X. Wang, and J. Dai, “Deformable detr: Deformable transformers for end-to-end object detection,” arXiv preprint arXiv:2010.04159, 2020.

[42] D. Meng, X. Chen, Z. Fan, G. Zeng, H. Li, Y . Y uan, L. Sun, and J. Wang, “Conditional detr for fast training convergence,” in ICCV, 2021, pp.3651–3660.

[43] P . Sun, Y . Jiang, R. Zhang, E. Xie, J. Cao, X. Hu, T. Kong, Z. Y uan, C. Wang, and P . Luo, “Transtrack: Multiple-object tracking with transformer,” arXiv preprint arXiv:2012.15460, 2020.

[44] T. Meinhardt, A. Kirillov, L. Leal-Taixe, and C. Feichtenhofer, “Trackformer: Multi-object tracking with transformers,” in CVPR, 2022, pp.8844–8854.

[45] F. Zeng, B. Dong, T. Wang, C. Chen, X. Zhang, and Y . Wei, “Motr: End-to-end multiple-object tracking with transformer,” arXiv preprint arXiv:2105.03247, 2021.

[46] A. Milan, L. Leal-Taixé, I. Reid, S. Roth, and K. Schindler, “Mot16: A benchmark for multi-object tracking,” arXiv preprint arXiv:1603.00831, 2016.

[47] P . Felzenszwalb, D. McAllester, and D. Ramanan, “A discriminatively trained, multiscale, deformable part model,” in CVPR. IEEE, 2008, pp. 1–8.

[48] F. Yang, W. Choi, and Y . Lin, “Exploit all the layers: Fast and accurate cnn object detector with scale dependent pooling and cascaded rejection classifiers,” in CVPR, 2016, pp. 2129–2137.

[49] J. Xu, Y . Cao, Z. Zhang, and H. Hu, “Spatial-temporal relation networks for multi-object tracking,” in ICCV, 2019, pp. 3988–3998.

[50] P . Chu and H. Ling, “Famnet: Joint learning of feature, affinity and multi-dimensional assignment for online multiple object tracking,” in ICCV, 2019, pp. 6172–6181.

[51] P . Bergmann, T. Meinhardt, and L. Leal-Taixe, “Tracking without bells and whistles,” in ICCV, 2019, pp. 941–951.

[52] L. Chen, H. Ai, Z. Zhuang, and C. Shang, “Real-time multiple people tracking with deeply learned candidate selection and person reidentification,” in ICME. IEEE, 2018, pp. 1–6.

[53] J. Zhu, H. Yang, N. Liu, M. Kim, W. Zhang, and M.-H. Yang, “Online multi-object tracking with dual matching attention networks,” in Proceedings of the ECCV (ECCV), 2018, pp. 366–382.

[54] G. Brasó and L. Leal-Taixé, “Learning a neural solver for multiple object tracking,” in CVPR, 2020, pp. 6247–6257.

[55] A. Hornakova, R. Henschel, B. Rosenhahn, and P . Swoboda, “Lifted disjoint paths with application in multiple object tracking,” in International Conference on Machine Learning. PMLR, 2020, pp. 4364–4375.

[56] Y . Zhou and O. Tuzel, “V oxelnet: End-to-end learning for point cloud based 3d object detection,” in CVPR, 2018, pp. 4490–4499.

[57] Y . Yan, Y . Mao, and B. Li, “Second: Sparsely embedded convolutional detection,” Sensors, vol. 18, no. 10, p. 3337, 2018.

[58] A. H. Lang, S. V ora, H. Caesar, L. Zhou, J. Yang, and O. Beijbom, “Pointpillars: Fast encoders for object detection from point clouds,” in CVPR, 2019, pp. 12 697–12 705.

[59] S. Shi, X. Wang, and H. Li, “Pointrcnn: 3d object proposal generation and detection from point cloud,” in CVPR, 2019, pp. 770–779.

[60] L. Du, X. Ye, X. Tan, E. Johns, B. Chen, E. Ding, X. Xue, and J. Feng, “Ago-net: Association-guided 3d point cloud object detection network,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

[61] J. Liu, Y . Chen, X. Ye, Z. Tian, X. Tan, and X. Qi, “Spatial pruned sparse convolution for efficient 3d object detection,” arXiv preprint arXiv:2209.14201, 2022.

[62] X. Chen, K. Kundu, Y . Zhu, A. G. Berneshawi, H. Ma, S. Fidler, and R. Urtasun, “3d object proposals for accurate object class detection,” Advances in neural information processing systems, vol. 28, 2015.

[63] X. Chen, K. Kundu, Z. Zhang, H. Ma, S. Fidler, and R. Urtasun, “Monocular 3d object detection for autonomous driving,” in CVPR, 2016, pp. 2147–2156.

[64] T. Wang, X. Zhu, J. Pang, and D. Lin, “Fcos3d: Fully convolutional one-stage monocular 3d object detection,” in ICCV, 2021, pp. 913–922.

[65] Z. Zou, X. Ye, L. Du, X. Cheng, X. Tan, L. Zhang, J. Feng, X. Xue, and E. Ding, “The devil is in the task: Exploiting reciprocal appearancelocalization features for monocular 3d object detection,” in ICCV, 2021, pp. 2713–2722.

[66] Y . Zhang, J. Lu, and J. Zhou, “Objects are different: Flexible monocular 3d object detection,” in CVPR, 2021, pp. 3289–3298.

[67] X. Ye, M. Shu, H. Li, Y . Shi, Y . Li, G. Wang, X. Tan, and E. Ding, “Rope3d: The roadside perception dataset for autonomous driving and monocular 3d object detection task,” in CVPR, 2022, pp. 21 341–21 350.

[68] C. Reading, A. Harakeh, J. Chae, and S. L. Waslander, “Categorical depth distribution network for monocular 3d object detection,” in CVPR, 2021, pp. 8555–8564.

[69] X. Ye, L. Du, Y . Shi, Y . Li, X. Tan, J. Feng, E. Ding, and S. Wen, “Monocular 3d object detection via feature domain adaptation,” in ECCV. Springer, 2020, pp. 17–34.

[70] Y . Wang, W.-L. Chao, D. Garg, B. Hariharan, M. Campbell, and K. Q.

Weinberger, “Pseudo-lidar from visual depth estimation: Bridging the gap in 3d object detection for autonomous driving,” in CVPR, 2019, pp.8445–8453.

[71] X. Weng and K. Kitani, “Monocular 3d object detection with pseudolidar point cloud,” in CVPRW, 2019, pp. 0–0.

[72] Y . Y ou, Y . Wang, W.-L. Chao, D. Garg, G. Pleiss, B. Hariharan, M. Campbell, and K. Q. Weinberger, “Pseudo-lidar++: Accurate depth for 3d object detection in autonomous driving,” arXiv preprint arXiv:1906.06310, 2019.

[73] J. M. U. Vianney, S. Aich, and B. Liu, “Refinedmpl: Refined monocular pseudolidar for 3d object detection in autonomous driving,” arXiv preprint arXiv:1911.09712, 2019.

[74] J. Philion and S. Fidler, “Lift, splat, shoot: Encoding images from arbitrary camera rigs by implicitly unprojecting to 3d,” in ECCV.Springer, 2020, pp. 194–210.

[75] Z. Li, W. Wang, H. Li, E. Xie, C. Sima, T. Lu, Q. Y u, and J. Dai, “Bevformer: Learning bird’s-eye-view representation from multi-camera images via spatiotemporal transformers,” arXiv preprint arXiv:2203.17270, 2022.

[76] Y . Wang, V . C. Guizilini, T. Zhang, Y . Wang, H. Zhao, and J. Solomon, “Detr3d: 3d object detection from multi-view images via 3d-to-2d queries,” in Conference on Robot Learning. PMLR, 2022, pp. 180–191.

[77] Y . Liu, T. Wang, X. Zhang, and J. Sun, “Petr: Position embedding transformation for multi-view 3d object detection,” arXiv preprint arXiv:2203.05625, 2022.

[78] Y . Li, Z. Ge, G. Y u, J. Yang, Z. Wang, Y . Shi, J. Sun, and Z. Li, “Bevdepth: Acquisition of reliable depth for multi-view 3d object detection,” arXiv preprint arXiv:2206.10092, 2022.

[79] K. Xiong, S. Gong, X. Ye, X. Tan, J. Wan, E. Ding, J. Wang, and X. Bai, “Cape: Camera view position embedding for multi-view 3d object detection,” arXiv preprint arXiv:2303.10209, 2023.

[80] N. Benbarka, J. Schröder, and A. Zell, “Score refinement for confidencebased 3d multi-object tracking,” in 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021, pp.8083–8090.

[81] Z. Pang, Z. Li, and N. Wang, “Simpletrack: Understanding and rethinking 3d multi-object tracking,” arXiv preprint arXiv:2111.09621, 2021.

[82] Q. Wang, Y . Chen, Z. Pang, N. Wang, and Z. Zhang, “Immortal tracker: Tracklet never dies,” arXiv preprint arXiv:2111.13672, 2021.

[83] A. Kundu, Y . Li, and J. M. Rehg, “3d-rcnn: Instance-level 3d object reconstruction via render-and-compare,” in CVPR, 2018, pp. 3559– 3568.

[84] H.-N. Hu, Y .-H. Yang, T. Fischer, T. Darrell, F. Y u, and M. Sun, “Monocular quasi-dense 3d object tracking,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

[85] T. Zhang, X. Chen, Y . Wang, Y . Wang, and H. Zhao, “Mutr3d: A multicamera tracking framework via 3d-to-2d queries,” in CVPR, 2022, pp.4537–4546.

[86] J. Yang, E. Y u, Z. Li, X. Li, and W. Tao, “Quality matters: Embracing quality clues for robust 3d multi-object tracking,” arXiv preprint arXiv:2208.10976, 2022.

[87] B. Shuai, A. Berneshawi, X. Li, D. Modolo, and J. Tighe, “Siammot: Siamese multi-object tracking,” in CVPR, 2021, pp. 12 372–12 382.

[88] Y . Zhang, C. Wang, X. Wang, W. Liu, and W. Zeng, “V oxeltrack: Multi-person 3d human pose estimation and tracking in the wild,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

[89] X. Zhou, T. Yin, V . Koltun, and P . Krähenbühl, “Global tracking transformers,” in CVPR, 2022, pp. 8771–8780.

[90] Z. Xu, W. Yang, W. Zhang, X. Tan, H. Huang, and L. Huang, “Segment as points for efficient and effective online multi-object tracking and segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 10, pp. 6424–6437, 2021.

[91] H. W. Kuhn, “The hungarian method for the assignment problem,” Naval research logistics quarterly, vol. 2, no. 1-2, pp. 83–97, 1955.

[92] A. V aswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” in Advances in neural information processing systems, 2017, pp. 5998–6008.

[93] Z. Zhao, Z. Wu, Y . Zhuang, B. Li, and J. Jia, “Tracking objects as pixel-wise distributions,” in ECCV. Springer, 2022, pp. 76–94.

[94] H.-K. Chiu, J. Li, R. Ambrus ¸, and J. Bohg, “Probabilistic 3d multimodal, multi-object tracking for autonomous driving,” in ICRA, 2021, pp. 14 227–14 233.

[95] X. Weng, Y . Wang, Y . Man, and K. M. Kitani, “Gnn3dmot: Graph neural network for 3d multi-object tracking with 2d-3d multi-feature learning,” in CVPR, 2020, pp. 6499–6508.

[96] N. Marinello, M. Proesmans, and L. V an Gool, “Triplettrack: 3d object tracking using triplet embeddings and lstm,” in CVPR, 2022, pp. 4500– 4510.

[97] Z. Ge, S. Liu, Z. Li, O. Y oshie, and J. Sun, “Ota: Optimal transport assignment for object detection,” in CVPR, 2021, pp. 303–312.

[98] H. Zhang, M. Cisse, Y . N. Dauphin, and D. Lopez-Paz, “mixup: Beyond empirical risk minimization,” arXiv preprint arXiv:1710.09412, 2017.

[99] H. Zhang, F. Li, S. Liu, L. Zhang, H. Su, J. Zhu, L. M. Ni, and H.-Y .Shum, “Dino: Detr with improved denoising anchor boxes for end-toend object detection,” arXiv preprint arXiv:2203.03605, 2022.

[100] Z. Liu, Y . Lin, Y . Cao, H. Hu, Y . Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” arXiv preprint arXiv:2103.14030, 2021.

[101] Y . Liu, J. Yan, F. Jia, S. Li, Q. Gao, T. Wang, X. Zhang, and J. Sun, “Petrv2: A unified framework for 3d perception from multi-camera images,” arXiv preprint arXiv:2206.01256, 2022.

[102] X. Bai, Z. Hu, X. Zhu, Q. Huang, Y . Chen, H. Fu, and C.-L. Tai, “Transfusion: Robust lidar-camera fusion for 3d object detection with transformers,” in CVPR, 2022, pp. 1090–1099.

[103] H. Rezatofighi, N. Tsoi, J. Gwak, A. Sadeghian, I. Reid, and S. Savarese, “Generalized intersection over union: A metric and a loss for bounding box regression,” in CVPR, 2019, pp. 658–666.

[104] S. Shao, Z. Zhao, B. Li, T. Xiao, G. Y u, X. Zhang, and J. Sun, “Crowdhuman: A benchmark for detecting human in a crowd,” arXiv preprint arXiv:1805.00123, 2018.

[105] S. Zhang, R. Benenson, and B. Schiele, “Citypersons: A diverse dataset for pedestrian detection,” in CVPR, 2017, pp. 3213–3221.

[106] A. Ess, B. Leibe, K. Schindler, and L. V an Gool, “A mobile vision system for robust multi-person tracking,” in CVPR. IEEE, 2008, pp.1–8.

[107] P . Dendorfer, H. Rezatofighi, A. Milan, J. Shi, D. Cremers, I. Reid, S. Roth, K. Schindler, and L. Leal-Taixé, “Mot20: A benchmark for multi object tracking in crowded scenes,” arXiv preprint arXiv:2003.09003, 2020.

[108] W. Lin, H. Liu, S. Liu, Y . Li, R. Qian, T. Wang, N. Xu, H. Xiong, G.-J. Qi, and N. Sebe, “Human in events: A large-scale benchmark for human-centric video analysis in complex events,” arXiv preprint arXiv:2005.04490, 2020.

[109] F. Y u, H. Chen, X. Wang, W. Xian, Y . Chen, F. Liu, V . Madhavan, and T. Darrell, “Bdd100k: A diverse driving dataset for heterogeneous multitask learning,” in CVPR, 2020, pp. 2636–2645.

[110] H. Caesar, V . Bankiti, A. H. Lang, S. V ora, V . E. Liong, Q. Xu, A. Krishnan, Y . Pan, G. Baldan, and O. Beijbom, “nuscenes: A multimodal dataset for autonomous driving,” in CVPR, 2020, pp. 11 621–11 631.

[111] K. Bernardin and R. Stiefelhagen, “Evaluating multiple object tracking performance: the clear mot metrics,” EURASIP Journal on Image and Video Processing, vol. 2008, pp. 1–10, 2008.

[112] E. Ristani, F. Solera, R. Zou, R. Cucchiara, and C. Tomasi, “Performance measures and a data set for multi-target, multi-camera tracking,” in ECCV. Springer, 2016, pp. 17–35.

[113] J. Luiten, A. Osep, P . Dendorfer, P . Torr, A. Geiger, L. Leal-Taixé, and B. Leibe, “Hota: A higher order metric for evaluating multi-object tracking,” International journal of computer vision, vol. 129, no. 2, pp.548–578, 2021.

[114] T.-Y . Lin, M. Maire, S. Belongie, J. Hays, P . Perona, D. Ramanan, P . Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in ECCV. Springer, 2014, pp. 740–755.

[115] P . Micikevicius, S. Narang, J. Alben, G. Diamos, E. Elsen, D. Garcia, B. Ginsburg, M. Houston, O. Kuchaiev, G. V enkatesh et al, “Mixed precision training,” arXiv preprint arXiv:1710.03740, 2017.

[116] Z. Wang, H. Zhao, Y .-L. Li, S. Wang, P . H. Torr, and L. Bertinetto, “Do different tracking tasks require different appearance models?” arXiv preprint arXiv:2107.02156, 2021.

[117] L. He, X. Liao, W. Liu, X. Liu, P . Cheng, and T. Mei, “Fastreid: A pytorch toolbox for general instance re-identification,” arXiv preprint arXiv:2006.02631, 2020.

[118] Y . Lee and J. Park, “Centermask: Real-time anchor-free instance segmentation,” in CVPR, 2020, pp. 13 906–13 915.

[119] D. Park, R. Ambrus, V . Guizilini, J. Li, and A. Gaidon, “Is pseudolidar needed for monocular 3d object detection?” in ICCV, 2021, pp.3142–3152.

[120] I. Loshchilov and F. Hutter, “Decoupled weight decay regularization,” arXiv preprint arXiv:1711.05101, 2017.

[121] B. Pang, Y . Li, Y . Zhang, M. Li, and C. Lu, “Tubetk: Adopting tubes to track multi-object in a one-step training model,” in CVPR, 2020, pp.6308–6318.

[122] Y . Xu, Y . Ban, G. Delorme, C. Gan, D. Rus, and X. AlamedaPineda, “Transcenter: Transformers with dense queries for multipleobject tracking,” arXiv preprint arXiv:2103.15145, 2021.

[123] F. Yang, X. Chang, S. Sakti, Y . Wu, and S. Nakamura, “Remot: A model-agnostic refinement for multiple object tracking,” Image and Vision Computing, vol. 106, p. 104091, 2021.

[124] E. Bochinski, V . Eiselein, and T. Sikora, “High-speed tracking-bydetection without using image information,” in A VSS. IEEE, 2017, pp. 1–6.

[125] M. Chaabane, P . Zhang, J. R. Beveridge, and S. O’Hara, “Deft: Detection embeddings for tracking,” arXiv preprint arXiv:2102.02267, 2021.

[126] P . Li and J. Jin, “Time3d: End-to-end joint monocular 3d object detection and tracking for autonomous driving,” in CVPR, 2022, pp.3885–3894.

[127] Y . Shi, J. Shen, Y . Sun, Y . Wang, J. Li, S. Sun, K. Jiang, and D. Yang, “Srcn3d: Sparse r-cnn 3d surround-view camera object detection and tracking for autonomous driving,” arXiv preprint arXiv:2206.14451, 2022.

[128] Y . Li, Y . Chen, X. Qi, Z. Li, J. Sun, and J. Jia, “Unifying voxel-based representation with transformer for 3d object detection,” arXiv preprint arXiv:2206.00630, 2022.

[129] J.-N. Zaech, A. Liniger, D. Dai, M. Danelljan, and L. V an Gool, “Learnable online graph representations for 3d multi-object tracking,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 5103–5110, 2022.

[130] A. Kim, G. Brasó, A. Oˇsep, and L. Leal-Taixé, “Polarmot: How far can geometric relations take us in 3d multi-object tracking?” in ECCV.Springer, 2022, pp. 41–58.

[131] F. Meyer, T. Kropfreiter, J. L. Williams, R. Lau, F. Hlawatsch, P . Braca, and M. Z. Win, “Message passing algorithms for scalable multitarget tracking,” Proceedings of the IEEE, vol. 106, no. 2, pp. 221–259, 2018.

[132] J. Liu, L. Bai, Y . Xia, T. Huang, B. Zhu, and Q.-L. Han, “Gnn-pmb: A simple but effective online 3d multi-object tracker without bells and whistles,” arXiv preprint arXiv:2206.10255, 2022.