[Big Data Foundations] Hadoop Environment Setup

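Table of Contents

- Preface

- Configure hosts

- Disable the firewall

- Configure passwordless SSH

- Download Hadoop

- Extract Hadoop to the target directory

- Add environment variables

- Edit the Hadoop configuration files

- core-site.xml

- hdfs-site.xml

- yarn-site.xml

- mapred-site.xml

- workers

- hadoop-env.sh

- Configure the other two servers the same way

- Initialize the NameNode

- Start HDFS

- Start YARN

- Start the history server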

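✨This is 第七人格's blog. I'm Xiao Qi, and you are very welcome here~✨

Series: [Big Data Foundations]

✈️In this post: Hadoop environment setup✈️

Full code repository for this post: none

Preface

This setup is built on CentOS 7.

Configure hosts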

vim /etc/hosts

On each of the three machines, edit the hosts file and add the following entries:

192.168.75.3 hadoop01

192.168.75.4 hadoop02

192.168.75.5 hadoop03
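If you would rather script this step than edit the file by hand, the sketch below appends an entry only when the hostname is not already listed. The function name `add_host_entry` and its optional file argument are my own illustrative choices, not part of the original setup; it writes to /etc/hosts by default, which requires root.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the
# hostname is already present on a non-comment line.
# Usage: add_host_entry IP HOSTNAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # Match the hostname as a whole whitespace-delimited word.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Calling `add_host_entry 192.168.75.3 hadoop01`, and likewise for hadoop02 and hadoop03, can be repeated safely because existing entries are skipped.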

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld.service

Configure passwordless SSH

ssh-keygen -t rsa

cd /root/.ssh/

cat id_rsa.pub >> authorized_keys

cat authorized_keys
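The commands above authorize the key only on the local machine. Because start-dfs.sh and start-yarn.sh reach the other nodes over SSH, the public key generally also has to be installed on those nodes. A minimal sketch using `ssh-copy-id`, assuming the root user and the hostnames from the hosts file; the function name `copy_key_to_nodes` and the `DRY_RUN` switch are hypothetical additions for illustration.

```shell
# Hypothetical helper: install the local public key on every host given
# as an argument. With DRY_RUN=1 it only prints the commands.
copy_key_to_nodes() {
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh-copy-id -i /root/.ssh/id_rsa.pub root@$host"
        else
            # Prompts once for the remote root password, then appends the key.
            ssh-copy-id -i /root/.ssh/id_rsa.pub "root@$host"
        fi
    done
}
```

Running `copy_key_to_nodes hadoop01 hadoop02 hadoop03` on each machine that launches start scripts (hadoop01 and hadoop02 in this post) should be enough; `ssh hadoop02` without a password prompt confirms it worked.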

Download Hadoop

wget --no-check-certificate https://repo.huaweicloud.com/apache/hadoop/common/hadoop-3.2.2/hadoop-3.2.2.tar.gz

Extract Hadoop to the target directory

I extract it to:

/usr/local/hadoop-space/
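The extraction command itself is not shown above, so here is one way it could look. This is a sketch under the assumption that the tarball sits in the current directory; `extract_to` is a hypothetical helper name.

```shell
# Hypothetical helper: unpack a gzip-compressed tarball into a target
# directory, creating the directory first if needed.
# Usage: extract_to ARCHIVE TARGET_DIR
extract_to() {
    archive=$1
    target=$2
    mkdir -p "$target" || return 1
    tar -xzf "$archive" -C "$target"
}
```

With `extract_to hadoop-3.2.2.tar.gz /usr/local/hadoop-space/`, the archive's top-level hadoop-3.2.2 folder ends up at /usr/local/hadoop-space/hadoop-3.2.2/, which is the path used for HADOOP_HOME in the next step.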

Add environment variables

vi ~/.bash_profile

Add the following, adjusting the paths to match your own installation:

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

export HADOOP_PID_DIR=/usr/local/hadoop-space/

export HADOOP_HOME=/usr/local/hadoop-space/hadoop-3.2.2/

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.362.b08-1.el7_9.x86_64/

PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$JAVA_HOME/bin:$HOME/bin

export PATH

source ~/.bash_profile
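After sourcing the profile, it is worth confirming that both variables point at real installations before moving on. The check below is a small sketch; `check_hadoop_env` is a name I made up, and it only tests that bin/java and bin/hadoop exist and are executable.

```shell
# Hypothetical helper: verify that JAVA_HOME and HADOOP_HOME are set and
# contain the expected launchers. Returns 0 only if both checks pass.
check_hadoop_env() {
    status=0
    if [ -z "${JAVA_HOME:-}" ] || [ ! -x "${JAVA_HOME%/}/bin/java" ]; then
        echo "JAVA_HOME does not contain bin/java: ${JAVA_HOME:-<unset>}"
        status=1
    fi
    if [ -z "${HADOOP_HOME:-}" ] || [ ! -x "${HADOOP_HOME%/}/bin/hadoop" ]; then
        echo "HADOOP_HOME does not contain bin/hadoop: ${HADOOP_HOME:-<unset>}"
        status=1
    fi
    return $status
}
```

If `check_hadoop_env` prints nothing, running `hadoop version` should then report the installed release.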

Edit the Hadoop configuration files

Go to the $HADOOP_HOME/etc/hadoop directory. In my case this is /usr/local/hadoop-space/hadoop-3.2.2/etc/hadoop/

cd /usr/local/hadoop-space/hadoop-3.2.2/etc/hadoop/

core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop01:8020</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop-space/hadoop-3.2.2/data</value>
  </property>
  <property>
    <name>hadoop.http.staticuser.user</name>
    <value>root</value>
  </property>
</configuration>

hdfs-site.xml

<configuration>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>hadoop01:9870</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>hadoop03:9868</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>

yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hadoop02</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log.server.url</name>
    <value>http://hadoop01:19888/jobhistory/logs</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
  </property>
</configuration>

mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hadoop01:19888</value>
  </property>
</configuration>
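To double-check what ended up in these files, a small lookup helper can print the value stored under a given property name. This is only a sketch: `get_property` is a name I made up, and it assumes well-formed XML in which each name and value element sits on a single line. On a working installation, `hdfs getconf -confKey fs.defaultFS` asks Hadoop itself for the effective value.

```shell
# Hypothetical helper: print the <value> that follows a given <name>
# in a Hadoop *-site.xml file. Prints nothing if the name is absent.
# Usage: get_property FILE PROPERTY_NAME
get_property() {
    awk -v key="$2" '
        # Remember that the wanted <name> element has been seen.
        index($0, "<name>" key "</name>") { found = 1 }
        # Print the text of the first <value> element after it, then stop.
        found && match($0, /<value>[^<]*<\/value>/) {
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$1"
}
```

For example, `get_property core-site.xml fs.defaultFS` run inside the configuration directory should echo the hdfs:// address configured there.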

workers

hadoop01

hadoop02

hadoop03

hadoop-env.sh

Set the Java environment variable:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.362.b08-1.el7_9.x86_64/

Configure the other two servers the same way
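Rather than repeating every edit by hand, the finished configuration directory can be copied to the other nodes. The sketch below only prints the `scp` commands so they can be reviewed first. It assumes Hadoop is already extracted to the same path on every node and that root can SSH to each host; `print_sync_commands` is a hypothetical helper name.

```shell
# Hypothetical helper: print one scp command per host that copies the
# configuration directory into the same parent directory on that host.
# Usage: print_sync_commands CONF_DIR HOST...
print_sync_commands() {
    src=${1%/}                 # drop a trailing slash, if any
    dest=$(dirname "$src")     # parent directory on the remote side
    shift
    for host in "$@"; do
        printf 'scp -r %s root@%s:%s/\n' "$src" "$host" "$dest"
    done
}
```

Run `print_sync_commands /usr/local/hadoop-space/hadoop-3.2.2/etc/hadoop/ hadoop02 hadoop03` to inspect the commands, and append `| sh` once they look right. The hosts file, environment variables, and SSH keys still need to be set up on each machine separately.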

Initialize the NameNode

On hadoop01, format the NameNode:

hdfs namenode -format

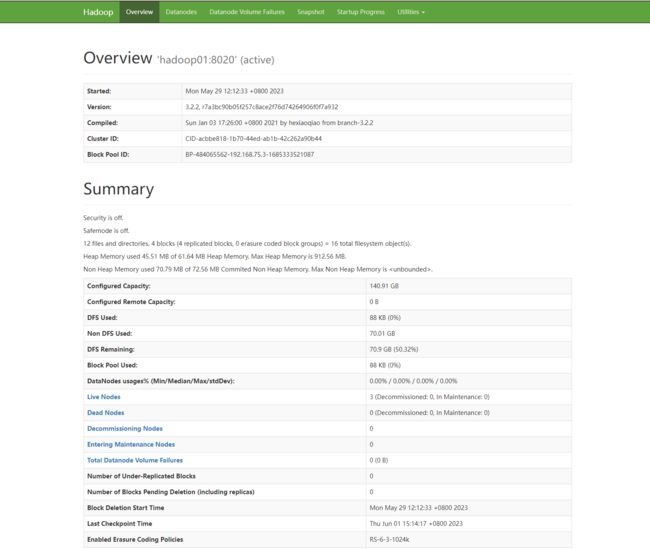

Start HDFS

On hadoop01:

cd /usr/local/hadoop-space/hadoop-3.2.2/sbin/

start-dfs.sh

You can now open http://hadoop01:9870 to reach the HDFS web UI.

Start YARN

Switch to the hadoop02 machine:

cd /usr/local/hadoop-space/hadoop-3.2.2/sbin/

start-yarn.sh

You can now open http://hadoop02:8088/ to reach the YARN web UI.

Start the history server

Switch to the hadoop01 server:

mapred --daemon start historyserver

You can now open http://hadoop01:19888/ to reach the job history web UI.
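With everything started, `jps` (shipped with the JDK) lists the Java processes on a node, which makes it easy to confirm the daemons are up. The helper below is a hypothetical sketch, not part of Hadoop: it reads `jps` output on standard input and prints any expected daemon that is missing. Judging from the configuration in this post, I would expect NameNode, DataNode, NodeManager and JobHistoryServer on hadoop01; ResourceManager, DataNode and NodeManager on hadoop02; and SecondaryNameNode, DataNode and NodeManager on hadoop03.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name (given as arguments) that does not appear in it.
missing_daemons() {
    listing=$(cat)
    for daemon in "$@"; do
        # jps prints "<pid> <MainClass>", so compare the second field exactly;
        # this keeps NameNode from matching SecondaryNameNode.
        if ! printf '%s\n' "$listing" |
            awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }'; then
            echo "$daemon"
        fi
    done
}
```

On hadoop01, for instance, `jps | missing_daemons NameNode DataNode NodeManager JobHistoryServer` prints nothing when all four are running.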
