Deploying a High-Availability Hadoop Cluster (Virtual Machine Demo)

Preface:

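Prepare three virtual machines named hd01, hd02 and hd03. (These hostnames are used throughout the configuration below; if you choose different names, update the relevant configuration files accordingly.)

On each machine, wget and vim should already be installed, the yum repository replaced, the hostname and a static IP address configured, and the firewall disabled.

Install and configure the JDK as well. (All of these steps were covered in earlier articles, along with scripts that complete them in one go.)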

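I. Preparation

Open an XShell session to each of the three VMs, then click XShell's button for sending input to all sessions, so that all three machines can be operated at the same time.

1. Passwordless SSH login (all machines)

Run the following in all sessions at once: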

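# Generate an SSH key pair with an empty passphrase

ssh-keygen -t rsa -P ''

# Copy the public key to hd01, hd02 and hd03

ssh-copy-id root@hd01

ssh-copy-id root@hd02

ssh-copy-id root@hd03 # press Enter, answer yes and enter the root password when prompted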
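To confirm the keys work before moving on, a small check can be run in each session. This is a sketch and not part of the original steps: `check_passwordless_ssh` is a hypothetical helper name, and the `SSH_CMD` override exists only so the function can be dry-run without real hosts.

```bash
# Hypothetical helper: confirm passwordless SSH from this machine to each host.
# BatchMode=yes makes ssh fail instead of prompting for a password, so a
# missing key shows up as FAIL rather than as a hanging prompt.
check_passwordless_ssh() {  # usage: check_passwordless_ssh host...
  local ssh_cmd=${SSH_CMD:-ssh}  # SSH_CMD is an assumed override for dry runs
  local failed=0 host
  for host in "$@"; do
    if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, `check_passwordless_ssh hd01 hd02 hd03` should print `OK` for all three hosts on every machine.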

2. Synchronize time on all servers (all machines)

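# Install chrony

yum -y install chrony

# Configure chrony

vim /etc/chrony.conf

Comment out the four default server lines (server 0.centos.pool.ntp.org iburst through server 3.centos.pool.ntp.org iburst), then add:

server ntp1.aliyun.com

server ntp2.aliyun.com

server ntp3.aliyun.com

# Start chrony

systemctl start chronyd

3. Install the psmisc package (all machines)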

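(psmisc is a package of Linux command-line tools. It provides fuser, which the sshfence fencing method uses during NameNode active/standby failover, so strictly speaking it only needs to be installed on the two NameNode hosts.)

yum install -y psmisc

II. Installing the ZooKeeper cluster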

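1. Configuration file

Work on hd01 only for now; the result is copied to the other machines later.

Upload the installation package to hd01 (the commands below assume it was uploaded to /opt).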

# Extract ZooKeeper

tar -zxf [file]

# Move and rename it (the directory name should preferably reflect the version)

mv zookeeper-3.4.5-cdh5.14.2 soft/zk345

# Copy and configure zoo.cfg

cd /opt/soft/zk345/conf

cp zoo_sample.cfg zoo.cfg

vim zoo.cfg

Configuration:

# Change the data directory:

dataDir=/opt/soft/zk345/data

# Append at the end:

server.1=hd01:2888:3888

server.2=hd02:2888:3888

server.3=hd03:2888:3888
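Since each host's myid has to match its own server.N line, the number can also be derived from zoo.cfg instead of being typed by hand. The sketch below uses a hypothetical helper, `write_myid`; it assumes `hostname` returns exactly the name written in zoo.cfg (hd01, hd02, hd03) and is meant to be run on each host after the zk345 directory has been copied there.

```bash
# Hypothetical helper: write this host's ZooKeeper myid by looking up its
# server.N line in zoo.cfg, and put it in the configured dataDir.
write_myid() {  # usage: write_myid path/to/zoo.cfg [hostname]
  local cfg=$1 host=${2:-$(hostname)} datadir id
  datadir=$(sed -n 's/^dataDir=//p' "$cfg")
  # Extract N from a line such as "server.N=<host>:2888:3888"
  id=$(sed -n "s/^server\.\([0-9]\{1,\}\)=$host:.*/\1/p" "$cfg")
  if [ -z "$datadir" ] || [ -z "$id" ]; then
    echo "write_myid: dataDir or server.N entry for $host not found in $cfg" >&2
    return 1
  fi
  mkdir -p "$datadir"
  echo "$id" > "$datadir/myid"
}
```

On hd02, for example, `write_myid /opt/soft/zk345/conf/zoo.cfg` would write 2 into /opt/soft/zk345/data/myid.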

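# In the data directory set above, create a myid file (the number is different on each host and must match the server.N entries above)

cd ..

mkdir data

echo "1" > data/myid

# Copy the directory to the other cluster VMs

scp -r /opt/soft/zk345/ root@hd02:/opt/soft/zk345

scp -r /opt/soft/zk345/ root@hd03:/opt/soft/zk345

# Remember to change the number in the myid file

vim /opt/soft/zk345/data/myid

Change it to 2 on hd02 and 3 on hd03.

2. Configure environment variables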

(all machines)

vim /etc/profile

Add the following lines:

#zookeeper

export ZOOKEEPER_HOME=/opt/soft/zk345

export PATH=$PATH:$ZOOKEEPER_HOME/bin

# Reload the environment variables

source /etc/profile

# Start ZooKeeper on every node

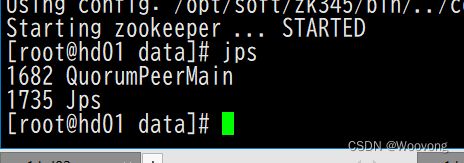

zkServer.sh startjps查看进程,若出现以下进程证明上述步骤正确
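Besides jps, the roles can be checked: in a healthy three-node ensemble, `zkServer.sh status` reports one node as leader and the other two as follower. The hypothetical helper below collects that over SSH, relying on the passwordless login set up earlier. It sources /etc/profile remotely because a non-interactive SSH command does not load it, so zkServer.sh would otherwise not be on the PATH.

```bash
# Hypothetical helper: print each node's ZooKeeper mode and require one leader.
check_zk_quorum() {  # usage: check_zk_quorum host...
  local ssh_cmd=${SSH_CMD:-ssh} leaders=0 host mode  # SSH_CMD: assumed dry-run override
  for host in "$@"; do
    # zkServer.sh status prints a line such as "Mode: follower"
    mode=$("$ssh_cmd" "root@$host" 'source /etc/profile; zkServer.sh status' 2>&1 |
           sed -n 's/^Mode: //p')
    echo "$host: ${mode:-unknown}"
    if [ "$mode" = leader ]; then
      leaders=$((leaders + 1))
    fi
  done
  # A healthy ensemble has exactly one leader
  [ "$leaders" -eq 1 ]
}
```

Running `check_zk_quorum hd01 hd02 hd03` from any node returns non-zero unless exactly one leader is found.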

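III. Installing the Hadoop cluster

1. Configure Hadoop on a single machine and create the directories

# Extract

tar -zxf hadoop-2.6.0-cdh5.14.2.tar.gz

# Move it into your installation directory

mv hadoop-2.6.0-cdh5.14.2 soft/hadoop260

# Create the directories used later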

mkdir -p /opt/soft/hadoop260/tmp

mkdir -p /opt/soft/hadoop260/dfs/journalnode_data

mkdir -p /opt/soft/hadoop260/dfs/edits

mkdir -p /opt/soft/hadoop260/dfs/datanode_data

mkdir -p /opt/soft/hadoop260/dfs/namenode_data

2. Modify the configuration files

①hadoop-env.sh

vim /opt/soft/hadoop260/etc/hadoop/hadoop-env.sh

# Change these two settings

export JAVA_HOME=/opt/soft/jdk180

export HADOOP_CONF_DIR=/opt/soft/hadoop260/etc/hadoop
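If you prefer not to edit hadoop-env.sh in vim, the two settings can be applied with sed. `set_export` is a hypothetical helper: it replaces an existing (possibly commented-out) `export NAME=` line or appends one, and it assumes the value contains no `|` or `&` characters, which sed would interpret specially.

```bash
# Hypothetical helper: set "export NAME=VALUE" in a shell env file such as
# hadoop-env.sh. Assumes VALUE contains no '|' or '&' (special to sed here).
set_export() {  # usage: set_export file NAME VALUE
  local file=$1 name=$2 value=$3
  if grep -q "^[#[:space:]]*export $name=" "$file"; then
    # Replace the existing line, uncommenting it if necessary
    sed -i "s|^[#[:space:]]*export $name=.*|export $name=$value|" "$file"
  else
    echo "export $name=$value" >> "$file"
  fi
}
```

For this cluster that would be `set_export /opt/soft/hadoop260/etc/hadoop/hadoop-env.sh JAVA_HOME /opt/soft/jdk180`, and the same call with `HADOOP_CONF_DIR /opt/soft/hadoop260/etc/hadoop`.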

②core-site.xml

vim /opt/soft/hadoop260/etc/hadoop/core-site.xml

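Add each of the following properties inside the configuration element (listed here as name = value):

fs.defaultFS = hdfs://hacluster

hadoop.tmp.dir = file:///opt/soft/hadoop260/tmp

io.file.buffer.size = 4096

ha.zookeeper.quorum = hd01:2181,hd02:2181,hd03:2181

hadoop.proxyuser.root.hosts = *

hadoop.proxyuser.root.groups = *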
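Because the XML tags are easy to mistype, the *-site.xml files can also be generated from name/value pairs. The sketch below uses a hypothetical helper, `write_hadoop_xml`, which overwrites the target file with a plain configuration element. It inserts values verbatim, so it assumes no value contains XML special characters such as `&` or `<`.

```bash
# Hypothetical helper: write a Hadoop *-site.xml from name/value pairs.
# Overwrites the file; values are not XML-escaped.
write_hadoop_xml() {  # usage: write_hadoop_xml file name value [name value]...
  local file=$1
  shift
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "write_hadoop_xml: expected name/value pairs" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ $# -gt 0 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Example with the core-site.xml values from this article:
# write_hadoop_xml /opt/soft/hadoop260/etc/hadoop/core-site.xml \
#   fs.defaultFS hdfs://hacluster \
#   hadoop.tmp.dir file:///opt/soft/hadoop260/tmp \
#   io.file.buffer.size 4096 \
#   ha.zookeeper.quorum hd01:2181,hd02:2181,hd03:2181 \
#   hadoop.proxyuser.root.hosts '*' \
#   hadoop.proxyuser.root.groups '*'
```

Quoting `'*'` matters: unquoted, the shell would expand it to the file names in the current directory.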

③hdfs-site.xml

vim /opt/soft/hadoop260/etc/hadoop/hdfs-site.xml

Properties (name = value):

dfs.block.size = 134217728

dfs.replication = 3

dfs.name.dir = file:///opt/soft/hadoop260/dfs/namenode_data

dfs.data.dir = file:///opt/soft/hadoop260/dfs/datanode_data

dfs.webhdfs.enabled = true

dfs.datanode.max.transfer.threads = 4096

dfs.nameservices = hacluster

dfs.ha.namenodes.hacluster = nn1,nn2

dfs.namenode.rpc-address.hacluster.nn1 = hd01:9000

dfs.namenode.servicerpc-address.hacluster.nn1 = hd01:53310

dfs.namenode.http-address.hacluster.nn1 = hd01:50070

dfs.namenode.rpc-address.hacluster.nn2 = hd02:9000

dfs.namenode.servicerpc-address.hacluster.nn2 = hd02:53310

dfs.namenode.http-address.hacluster.nn2 = hd02:50070

dfs.namenode.shared.edits.dir = qjournal://hd01:8485;hd02:8485;hd03:8485/hacluster

dfs.journalnode.edits.dir = /opt/soft/hadoop260/dfs/journalnode_data

dfs.namenode.edits.dir = /opt/soft/hadoop260/dfs/edits

dfs.ha.automatic-failover.enabled = true

dfs.client.failover.proxy.provider.hacluster = org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

dfs.ha.fencing.methods = sshfence

dfs.ha.fencing.ssh.private-key-files = /root/.ssh/id_rsa

dfs.permissions.enabled = false
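Hadoop does not report unknown property names; a misspelled key is simply never read. It is therefore worth checking that the HA keys are actually present. The hypothetical `missing_keys` helper lists the name entries in a config file with sed (assuming each name element sits on a single line, as in the files written above) and prints any expected key that is absent.

```bash
# Hypothetical helper: report expected property names that are missing
# from a Hadoop XML config file (one <name> element per line assumed).
missing_keys() {  # usage: missing_keys file key...
  local file=$1 names key rc=0
  shift
  names=$(sed -n 's:.*<name>\(.*\)</name>.*:\1:p' "$file")
  for key in "$@"; do
    if ! grep -qxF "$key" <<<"$names"; then
      echo "missing: $key"
      rc=1
    fi
  done
  return "$rc"
}

# Example for the HA keys in hdfs-site.xml:
# missing_keys /opt/soft/hadoop260/etc/hadoop/hdfs-site.xml \
#   dfs.nameservices dfs.ha.namenodes.hacluster \
#   dfs.namenode.rpc-address.hacluster.nn1 dfs.namenode.rpc-address.hacluster.nn2 \
#   dfs.namenode.shared.edits.dir dfs.ha.automatic-failover.enabled \
#   dfs.client.failover.proxy.provider.hacluster dfs.ha.fencing.methods
```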

④mapred-site.xml

This file has to be created from its template first:

cd /opt/soft/hadoop260/etc/hadoop

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

Properties (name = value):

mapreduce.framework.name = yarn

mapreduce.jobhistory.address = hd01:10020

mapreduce.jobhistory.webapp.address = hd01:19888

mapreduce.job.ubertask.enable = true

⑤yarn-site.xml

vim yarn-site.xml

Properties (name = value):

yarn.resourcemanager.ha.enabled = true

yarn.resourcemanager.cluster-id = hayarn

yarn.resourcemanager.ha.rm-ids = rm1,rm2

yarn.resourcemanager.hostname.rm1 = hd02

yarn.resourcemanager.hostname.rm2 = hd03

yarn.resourcemanager.zk-address = hd01:2181,hd02:2181,hd03:2181

yarn.resourcemanager.recovery.enabled = true

yarn.resourcemanager.store.class = org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore

yarn.resourcemanager.hostname = hd03

yarn.nodemanager.aux-services = mapreduce_shuffle

yarn.log-aggregation-enable = true

yarn.log-aggregation.retain-seconds = 604800

⑥Edit the slaves file

vim slaves

# Delete the original localhost entry

# and replace it with:

hd01

hd02

hd03

3. Copy to the other machines (run from /opt/soft on hd01)

scp -r hadoop260/ root@hd02:/opt/soft/

scp -r hadoop260/ root@hd03:/opt/soft/

(This is a good point to take a snapshot of all the VMs.)
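Before going further, it can help to confirm that hd02 and hd03 received exactly the same configuration as hd01. The hypothetical `same_config` helper hashes all *.xml files plus slaves in the config directory, both locally and over SSH, and compares the results.

```bash
# Hypothetical helper: compare a checksum of the config files on this machine
# with the copies on other hosts, to catch nodes that missed an edit.
same_config() {  # usage: same_config conf_dir host...
  local dir=$1 ssh_cmd=${SSH_CMD:-ssh} ref remote host rc=0  # SSH_CMD: assumed override
  shift
  # Hash all *.xml files plus slaves, concatenated in sorted glob order
  local cmd="cd '$dir' && cat ./*.xml slaves | md5sum"
  ref=$(bash -c "$cmd")
  for host in "$@"; do
    remote=$("$ssh_cmd" "root@$host" "$cmd")
    if [ "$remote" = "$ref" ]; then
      echo "$host: same"
    else
      echo "$host: DIFFERENT"
      rc=1
    fi
  done
  return "$rc"
}
```

On hd01 this would be `same_config /opt/soft/hadoop260/etc/hadoop hd02 hd03`.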

4. Configure environment variables

(all machines)

vim /etc/profile

#hadoop

export HADOOP_HOME=/opt/soft/hadoop260

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export HADOOP_INSTALL=$HADOOP_HOME

Reload the environment variables:

source /etc/profile

IV. Starting the Hadoop cluster

1. Start ZooKeeper (all machines)

zkServer.sh start

Check that a QuorumPeerMain process appears.

2. Start the JournalNodes (all machines)

hadoop-daemon.sh start journalnode

Check that a JournalNode process appears.
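Formatting the NameNode in the next step writes to the JournalNodes, so they should first be reachable on port 8485, the port used in dfs.namenode.shared.edits.dir. This sketch uses bash's /dev/tcp redirection together with `timeout`; `port_open` and `check_journalnodes` are hypothetical helper names.

```bash
# Hypothetical helper: TCP reachability check via bash's /dev/tcp
# redirection, so nc or telnet are not needed. Gives up after 3 seconds.
port_open() {  # usage: port_open host port
  timeout 3 bash -c ": </dev/tcp/$1/$2" 2>/dev/null
}

# Hypothetical helper: check the JournalNode RPC port (8485) on each host.
check_journalnodes() {  # usage: check_journalnodes host...
  local host rc=0
  for host in "$@"; do
    if port_open "$host" 8485; then
      echo "$host:8485 open"
    else
      echo "$host:8485 closed"
      rc=1
    fi
  done
  return "$rc"
}
```

`check_journalnodes hd01 hd02 hd03` should report all three ports as open before `hdfs namenode -format` is run.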

3. Format the NameNode on hd01 (hd01 only)

hdfs namenode -format

4. Copy the NameNode metadata from hd01 to the same location on hd02 (hd01 only)

scp -r /opt/soft/hadoop260/dfs/namenode_data/current/ root@hd02:/opt/soft/hadoop260/dfs/namenode_data

5. Format the failover controller (ZKFC) state in ZooKeeper on hd01 or hd02 (one machine only)

hdfs zkfc -formatZK

6. Start HDFS on hd01 (hd01 only)

start-dfs.sh

hd01: DFSZKFailoverController, DataNode, NameNode

hd02: DFSZKFailoverController, DataNode, NameNode

hd03: DataNode

If each machine shows the additional processes listed above, this step succeeded.
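Checking jps in every session works, but the same check can also be scripted from hd01. `expect_procs` is a hypothetical helper that runs jps over SSH and reports any expected process that is missing; the process lists in the example comments combine the ones above with QuorumPeerMain and JournalNode, which were started earlier.

```bash
# Hypothetical helper: run jps on a host over SSH and report expected
# Java processes that are not running.
expect_procs() {  # usage: expect_procs host process...
  local host=$1 ssh_cmd=${SSH_CMD:-ssh} running p missing=0  # SSH_CMD: assumed override
  shift
  # jps prints "<pid> <MainClass>"; keep only the class names
  running=$("$ssh_cmd" "root@$host" 'source /etc/profile; jps' | awk '{print $2}')
  for p in "$@"; do
    if ! grep -qx "$p" <<<"$running"; then
      echo "$host: missing $p"
      missing=1
    fi
  done
  return "$missing"
}

# Expected after start-dfs.sh:
# expect_procs hd01 QuorumPeerMain JournalNode NameNode DataNode DFSZKFailoverController
# expect_procs hd02 QuorumPeerMain JournalNode NameNode DataNode DFSZKFailoverController
# expect_procs hd03 QuorumPeerMain JournalNode DataNode
```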

7. Start YARN on hd03 (hd03 only)

start-yarn.sh

hd01: NodeManager

hd02: NodeManager

hd03: ResourceManager, NodeManager

If each machine shows the additional processes listed above, this step succeeded.
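To confirm that the NodeManagers have registered with the ResourceManager and not merely started, the output of `yarn node -list` (which lists the running nodes by default) can be checked. The hypothetical helper below parses the `Total Nodes:N` line that Hadoop 2.x prints at the top of that listing; if your version formats it differently, the sed pattern needs adjusting.

```bash
# Hypothetical helper: compare the number of NodeManagers reported by
# `yarn node -list` with the expected count.
check_nodemanagers() {  # usage: check_nodemanagers expected_count
  local expected=$1 total
  # Assumes the listing starts with a line of the form "Total Nodes:N"
  total=$(yarn node -list 2>/dev/null | sed -n 's/^Total Nodes:\([0-9]\{1,\}\).*/\1/p')
  total=${total:-0}
  echo "NodeManagers registered: $total (expected $expected)"
  [ "$total" -eq "$expected" ]
}
```

With three hosts in the slaves file, `check_nodemanagers 3` is the expected call.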

8. Start the job history server on hd01 (hd01 only)

mr-jobhistory-daemon.sh start historyserver

hd01 should now also show a JobHistoryServer process.

9. Start the ResourceManager on hd02 (hd02 only)

yarn-daemon.sh start resourcemanager

hd02 should now also show a ResourceManager process.

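Once all the processes check out, the cluster has started successfully. Verify the HA states as follows:

# On hd01, check the NameNode states

hdfs haadmin -getServiceState nn1 # active

hdfs haadmin -getServiceState nn2 # standby

# On hd03, check the ResourceManager states

yarn rmadmin -getServiceState rm1 # standby

yarn rmadmin -getServiceState rm2 # active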
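The four state queries can be wrapped so the check fails unless exactly one service in each pair is active. `ha_state` and `check_ha_pair` are hypothetical helpers that only call the `hdfs haadmin` and `yarn rmadmin` commands used above. Which NameNode or ResourceManager ends up active may differ from the comments, as long as there is exactly one.

```bash
# Hypothetical helper: query the HA state of one service ID.
ha_state() {  # usage: ha_state hdfs|yarn service_id
  case "$1" in
    hdfs) hdfs haadmin -getServiceState "$2" ;;
    yarn) yarn rmadmin -getServiceState "$2" ;;
    *) return 2 ;;
  esac 2>/dev/null
}

# Hypothetical helper: print each state and require exactly one "active".
check_ha_pair() {  # usage: check_ha_pair hdfs|yarn id1 id2
  local kind=$1 active=0 id state
  shift
  for id in "$@"; do
    state=$(ha_state "$kind" "$id")
    echo "$kind $id: ${state:-unreachable}"
    if [ "$state" = active ]; then
      active=$((active + 1))
    fi
  done
  # Exactly one active service per pair; the other should be standby
  [ "$active" -eq 1 ]
}
```

Run `check_ha_pair hdfs nn1 nn2` on hd01 and `check_ha_pair yarn rm1 rm2` on hd03.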