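- How Zhihu Makes Money: Monetizing Knowledge Through a Diversified Strategy

高省_飞智666600

Zhihu started as a question-and-answer knowledge-sharing platform and has grown into a general-purpose community with many content formats and a very large user base. As its user numbers grew and its features matured, Zhihu gradually worked out a diversified path to profitability. This article looks at the strategies Zhihu uses to make money. Advertising revenue: advertising is one of Zhihu's most traditional sources of income. Zhihu earns substantial revenue by showing ads while users browse questions, answers, or columns. These ads are usually matched to a user's interests and browsing history, which raises the ads' click-through rate and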

- 2019-06-02

胡五妹1964

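Wumei's diary: am I overthinking this? Sometime last winter, I no longer remember which day, I was browsing on my phone and heard that people who write articles on the platform were being plagiarized, with their work republished on other platforms. That made me look at my own few articles: they had little readership and my submissions had been rejected, so perhaps my writing just was not good enough. So I shifted my energy to answering questions on Toutiao's Wukong Q&A (今日头条悟空问答). Seeing so many questions, I sometimes felt I had something to say, so I wrote a good deal about things I had experienced or knew. When answering questions about poetry, I would also look things up on Baidu or consult《唐诗三百首》and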

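- Focusing on Basic-Research Breakthroughs: the "AI Security" DDPA Method Proposed by 北电数智 with Fudan University and Other Teams Is Accepted at ICML

CSDN资讯

AI, security, data elements, big data

Recently, a research effort led by Professor Dou Dejing (窦德景), chief scientist of 北电数智, together with teams from Fudan University and Auburn University in the United States, proposed DDPA (Dynamic Delayed Poisoning Attack), a new adversarial attack method that offers a new perspective and tool for security research in machine learning. The paper has been accepted at the International Conference on Machine Learning (ICML 2025). ICML is organized by the International Machine Learning Society (IMLS) and focuses on frontier areas of machine learning such as deep learning, reinforcement learning, and natural language processing; in machine learning and artificial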

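- 111. Adding a Way to Return to the Profile Page by Clicking the 岐黄慧问 Icon

The knowledge Q&A screen had no way to return to the profile page, so I added one. Clicking the icon calls the gotohome method, which uses the router to navigate to the profile page. On hover the icon is slightly enlarged, and clicking it takes you back to the original page.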

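- Daily Practice

夏摩山谷深处

Practice 1: [Work] 1. Communicating with your manager. When a task is assigned, make sure right away that you understand what it is. Do not pretend to understand out of embarrassment and then guess at what your manager meant; if you guess wrong, the time is wasted, the work is wasted, and you leave a bad impression. When asking your manager for input, prepare your options in advance, so that the manager answers multiple-choice questions rather than open-ended ones. 2. Working and learning. Learn proactively and take on tasks proactively; learn as much as you can. Keep two principles in mind: "令行禁止" (carry out orders, and stop when told to stop) and "法无禁止即可为" (whatever is not forbidden is allowed). The former means that when you have been assigned several tasks, your direct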

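- A First Look at LLMs

From Zero to One: Build Your Own Local Knowledge-Base Q&A Bot with Python and an LLM. Abstract: With the rise of large language models (LLMs), building an intelligent question-answering system has become easier than ever. This article explains step by step how to use Python with an open-source LLM and a vector database to build a Q&A bot over your local documents. You will learn the complete flow: environment setup, document loading, text splitting, vectorization, index construction, and finally the Q&A interaction. The article includes detailed flowchart descriptions, code-snippet outlines, and key caveats,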

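- Large Models: TRAE + Milvus MCP, Driving a Vector Database with Natural Language

不二人生

large models, milvus, database, trae, large models

Not long ago, after Cursor and Claude Desktop set off a wave of AI-assisted programming in overseas markets, the international edition of ByteDance's TRAE also moved to a paid model. Compared with those two products, TRAE not only integrates code completion, intelligent Q&A, and an Agent mode, but also offers Chinese-speaking developers a localized AI programming experience. At the same time, the Milvus MCP server has added support for SSE (Server-Sent Events). Compared with the traditional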

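- Spring Boot + LLM Intelligent Documentation Generation: An End-to-End Technical Plan Covering Code Annotation Conventions, OpenAPI Enhancement, Prompt Engineering, and Enterprise Rollout

夜雨hiyeyu.com

java, spring boot, spring, java, system architecture, backend, spring cloud, AI

Contents: 1. Deep-integration architecture design. 2. Code-level conventions (with 20+ annotation templates): 2.1 Fine-grained parameter descriptions; 2.2 Intelligent error-code generation. 3. OpenAPI enhancement strategy: 3.1 Extension field injection; 3.2 Multi-language support. 4. Enterprise prompt engineering library: 4.1 Base prompt template; 4.2 Intelligent Q&A prompt. 5. End-to-end intelligent documentation generation: 5.1 Dynamic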

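- AI Product Manager Interview Handbook, Day 42: Learning Methods and the Product Process

TGITCIC

AI PM interview questions from top tech companies, PM AI interviews, large-model interviews, AI PM interviews, large-model PM interviews, AI products, large-model products

Sample Q&A: how to learn product and AI knowledge. Q: Please describe how you learn about product management and AI, and which resources you have found most helpful. A: My approach has three dimensions: a layered knowledge structure, a practice-and-verification loop, and a resource-filtering mechanism. For acquiring knowledge I use a three-level "theory, case, tool" method: I build a foundation in AI with Artificial Intelligence: A Modern Approach, learn the engineering side from the official TensorFlow documentation, and use《启示录》and《俞军产品方法论》to understand product thinking. For practice I use a "projects feed back into learning" model; for example,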

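- Deep Learning vs. Machine Learning in Deep Semantic Analysis for NLP: Differences and Connections

In deep semantic analysis tasks in natural language processing (NLP), the differences and connections between deep learning and traditional machine learning show up mainly in the following areas. I. Core differences. Feature extraction. Machine learning: relies on hand-designed features (word frequencies, syntactic rules, TF-IDF, and so on) and needs domain experts to structure the text. For example, traditional sentiment analysis requires a manually defined sentiment lexicon, or key components extracted through part-of-speech tagging. Deep learning: learns multi-level features automatically through neural networks. For example, models such as BERT can capture word vectors, syntactic relations, and even discourse-level semantics from raw text, without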

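- Integrating Natural Language Processing into Agile Development

项目管理实战手册

project management, best practices, agile, process, NLP, easyui, ai

Integrating NLP into agile development: getting code and requirements to "speak plainly". Keywords: agile development, natural language processing (NLP), user story analysis, requirements automation, continuous integration optimization. Abstract: In agile development, the core goal of responding quickly to change is often slowed down by tedious text handling: requirements documents read like gibberish, user stories rely on guesswork, and sorting through defect reports eats time. NLP acts like an "intelligent interpreter" that lets the development team "converse fluently" with requirements documents. Using everyday analogies such as "building blocks" and a "translation machine", this article helps you understand how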

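- What Is the Difference Between Xiaohongshu and Zhihu? Which Is More Professional?

高省张导师

As a content platform, Xiaohongshu's ability to "种草" (plant the seed of a purchase) is plain for everyone to see. Zhihu is hardly a new platform, yet few people have paid attention to its seeding ability. In fact, as a content platform Zhihu has very high e-commerce value. So how should we choose between Xiaohongshu and Zhihu? Which platform is better for seeding? Zhihu: Zhihu has a more complete mechanism for filtering questions and answers, which ensures that its content has at least some influence on consumers. For content creators, the only thing to consider is whether the quality of their content is sufficiently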

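- ChatGPT and AIGC: Short Questions, Rambling Answers

MatrixOnEarth

**Personal views only.** [Q1] ChatGPT has been hugely popular lately, passing 100 million monthly active users in two months. In terms of product form, we know that both Microsoft's and Google's search engines will embed it. How should we view its user stickiness? Will that many people really keep using it, or is it just a passing craze? [A1] First, the industry has long defined the ultimate product form of a search engine as an information question-answering assistant. The current "yellow pages" form of information retrieval is, in my view, what emerged while technology could not yet deliver that ultimate product form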

- Calling a Hugging Face Question Answering Model from Python

墨如夜色

python, easyui, programming languages, Python

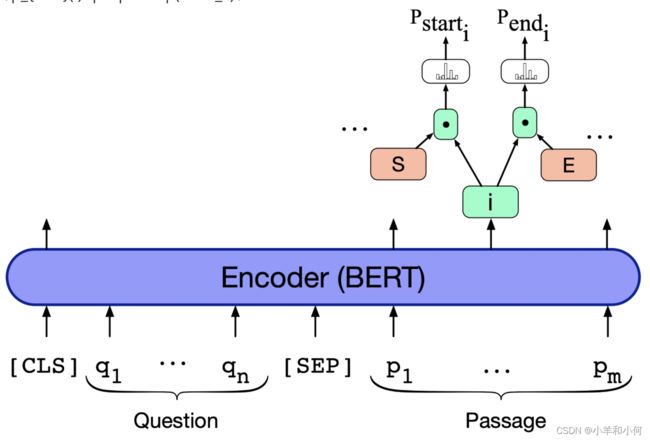

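In natural language processing, a question-answering system is an intelligent system that answers questions posed by users. Hugging Face maintains well-known open-source libraries that provide many powerful NLP tools and models. Among them, its Question Answering models can help us build a Q&A system that extracts answers from a given text. This article explains how to use Python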

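- Techniques for Applying Knowledge Graphs to Text Classification in AI

AI天才研究院

AI大模型企业级应用开发实战, AI, knowledge graph, classification, ai

Keywords: knowledge graph, text classification, graph neural networks, entity and relation extraction, deep learning, natural language processing, feature fusion. Abstract: This article examines techniques for applying knowledge graphs to text classification. Starting from the basic concepts of knowledge graphs, it analyzes in detail how to incorporate structured knowledge into a traditional text classification pipeline, introduces recent graph neural network methods, and walks through building a knowledge-enhanced text classification system using a practical case. It pays particular attention to strategies for fusing knowledge representation learning with text features, and to different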

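- Fan Q&A ✺ Answers

惋糖不想更新

There is no special meaning; I just thought the name 惋糖 sounded nice, so I picked it.《好师傅》. First question: no! As for the second, I did think about it, but I am not very familiar with MC yet, because I started out playing 迷你. Back then I did not know about the whole plagiarism issue; I only found out after two years of playing (actually I quit for over a year, so I really only played for close to a year). I could not be bothered to get used to MC, though lately I have suddenly become a bit interested in it, but, well, you know. Those who know, know. My goal is to pass a thousand followers, which is pretty hard; that is the goal for now. My biggest goal is to become a 超凡大师. Now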

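- Pad Token: Technical Principles and Implementation Guide

Takoony

AI

Contents: Overview; Theoretical foundations: first-principles analysis; Implementation mechanisms; Engineering best practices; Performance optimization strategies; Common problems and solutions; Technology trends; Appendix. 1. Overview. 1.1 Purpose. This document explains the technical principles, implementation mechanisms, and engineering applications of the pad token in deep learning, giving algorithm engineers theoretical guidance and a practical reference. 1.2 Scope: NLP model development; batch-processing optimization for sequence data; deep learning system architecture design; high-performance computing resource management. 1.3 Core question. Research question: why do deep learning models need to bring variable-length sequences to a uniform fixed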

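- Multilingual Text Classification in AI Applications in Practice

AI原生应用开发

AI, classification, data mining, ai

Keywords: multilingual text classification, natural language processing, machine learning, deep learning, BERT, transfer learning, cross-lingual models. Abstract: This article examines multilingual text classification in practice in AI. Starting from the basic concepts, it works through the core principles, technical architecture, and implementation methods, and uses a real case to show how to build an efficient multilingual text classification system. It covers everything from traditional machine learning methods to state-of-the-art deep learning techniques, with particular attention to applying cross-lingual transfer learning in real business scenarios. Background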

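- Generative AI with PyTorch (18): Recurrent Neural Networks Explained and Implemented

盼小辉丶

pytorch, rnn, NLP

Contents: 0. Preface. 1. The challenges of text generation. 2. Recurrent neural networks: 2.1 Text data; 2.2 How recurrent neural networks work. 3. Long short-term memory networks. 3. NLP basics: 3.1 Tokenization; 3.2 Word embeddings; 3.3 Applications of word embeddings in NLP. Summary. Series links. 0. Preface. We have already learned how to generate content such as digits and images. Starting with this section, we focus mainly on text generation. Human language is extremely complex and full of nuance; it involves not only understanding grammar and vocabulary but also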

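- Does the 三生原理 Promote the Unification of Eastern and Western Views of Mathematics?

葫三生

三生学派, algorithms

AI-assisted writing. Q&A 1: By building a cross-cultural mathematical model in which dynamic generation is compatible with formal logic, the 三生原理 shows potential for unifying Eastern and Western views of mathematics, but its theoretical maturity still has to get past the following key points. I. Paths of fusion at the methodological level. Coordinating generative logic with axiomatic systems: the principle's prime-generating formula, p = 3(2n+1) + 2(2n+m+1), takes the "yin and yang elements" (2 and 3) as generators and actively constructs primes through linked parameters (m ∈ {0, 1, 2, 3, 4}), complementing the passive sieve methods of traditional number theory. This "dynamic construction + formal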

- One-Minute Fun English Q&A 95

GBmelody

Which is not a tree: pine, oak, maple, ivy? 译句:松树,橡树,枫树或常春藤,哪个不是一棵树?(Answer at the end of the article.) pine /paɪn/ n. 松树,松木 [Examples]: • We put in 20 rows of pine trees. 我们种植了20排松树。 • That tree is actually a fir, not a pine. 那棵树实际上是冷杉,不是松树。 oak /ok/ n. 栎树,橡树,栎木,橡木 [Examples]: •

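- A Guide to LLM Applications: Real-Time Web Browsing

AGI大模型与大数据研究院

AI大模型应用开发实战, computational science, neural computing, deep learning, neural networks, big data, AI, large language models, AI, AGI, LLM, Java, Python, architecture design, Agent, RPA

Author: 禅与计算机程序设计艺术. 1. Background. 1.1 The rise of large language models: 1.1.1 The development of natural language processing; 1.1.2 The Transformer breakthrough; 1.1.3 Advantages of pre-trained language models. 1.2 Pain points of web browsing: 1.2.1 Information overload and difficult retrieval; 1.2.2 Content understanding and knowledge extraction; 1.2.3 The need for personalization and intelligence. 1.3 Combining LLMs with web browsing: 1.3.1 Intelligent Q&A and dialogue systems; 1.3.2 Knowledge graphs and semantic search; 1.3.3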

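- Deep Learning with Python in Practice: LSTM and GRU for Sequence Prediction

AI智能应用

Python入门实战, computational science, neural computing, deep learning, neural networks, big data, AI, large language models, AI, AGI, LLM, Java, Python, architecture design, Agent, RPA

Author: 禅与计算机程序设计艺术 / Zen and the Art of Computer Programming. 1. Background. 1.1 Origin of the problem. Sequence prediction is an important research direction in machine learning, spanning time-series analysis, natural language processing, speech recognition, and other fields. Sequence data is time-dependent: each element in the sequence is influenced by the elements before it. Traditional machine learning algorithms struggle to capture this temporal dependence, whereas deep learning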

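- AI Programming in Practice: Cursor Pitfalls and Effective Prompt Design

孟柯coding

AI, machine learning, AIGC

1. Introduction. In an era of rapid AI progress, knowing how to use AI tools to work more efficiently has become an essential skill. Whether you use AI coding assistants or build your own knowledge-base Q&A assistant with Coze or Dify, AI can help you get more done with less effort. That said, AI-generated content sometimes contains "hallucinations", so never trust the output blindly; verify key information yourself before using it. Using real problems I ran into while developing with Cursor, this article shares how I approached and solved them, and also provides, for developing the user module, the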

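- Advanced: Designing and Implementing an Intelligent Customer Service System in Python

A guide to building an intelligent customer service system. System overview: an intelligent customer service system is an important application of AI. It uses natural language processing (NLP) and machine learning to handle user queries automatically, significantly improving the efficiency and responsiveness of customer service. Python is a common first choice for building such systems because of its rich ecosystem (libraries such as NLTK, spaCy, and Transformers), cross-platform compatibility, and ease of integration. System architecture: the core of the system consists of two main functional modules. 1. The API integration module connects to various external services,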

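- I. Fate: Winning Your Inner Game, Q&A (2)

老板阿修罗

Body and mind become one in an instant; preparation is what counts. Trusting your body matters a great deal, but you still have to do the day-to-day preparation. A small assignment was set: encourage competition, yet also give play to your narcissism. Monday and Tuesday were consistent: see your narcissism and put it down, because only by seeing it and letting it go can you progress and develop. Wednesday encouraged competition again: competition is the unfolding of a person's nature, and only through competing can you discover yourself. Breaking narcissism is hard, but success only comes from letting it go, and the struggle often lasts a lifetime. The reason I have not achieved much is the narcissism I find so hard to break. At the crucial moment of a final,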

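- Computer Vision Product Recommendation, Personalized Recommendation: Which Has Better Prospects in AI, Computer Vision, NLP, or Personalized Recommender Systems?...

A direct answer to this question would inevitably be quite subjective, so instead I will first introduce the current state of research and applications in these fields (without going into the underlying principles), and you can weigh which one suits you better. I. Computer vision is the use of computers and related equipment to simulate biological vision. Its main task is to process captured images or video to obtain three-dimensional information about the scene, just as humans and many other creatures do every day [1]. Current computer vision in AI mainly studies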

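- Intel CEO Admits Trailing Nvidia in AI; Edge Computing Is Key to Recovery

weishi122

AI, edge computing, AI technology, chips, graphql, fintech

Reportedly, Intel's CEO has addressed employees worldwide. Lip-Bu Tan appears to have offered candid observations and clear goals. All of this suggests that Intel will focus on streamlining its business and move into AI, though not by chasing Nvidia directly but through so-called edge AI. Intel's (relatively) new CEO has clearly acknowledged the severe challenges facing the company, but Lip-Bu Tan seems to have a recovery plan, and it sounds fairly pragmatic. The Oregonian reported on a recording of a Q&A session with Tan (spotted by Tom's Hardware); the recording reportedly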

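- Reading Notes and Excerpts from Wang Yangming's Dialogues,《传习录》[Reading Notes 18]

爱玲姐说说

1. In learning, effort has its shallow and deep stages. At the start, if you do not earnestly set your mind on loving good and hating evil, how can you do good and remove evil? This earnest setting of the mind is what making the intention sincere (诚意) means. But if you do not understand that the original substance of the mind contains nothing at all, and you keep deliberately fixing on loving good and hating evil, you add this extra bit of intention, and the mind is no longer broad and impartial (廓然大公). What the Book of Documents calls "making no display of likes or dislikes" is the original substance. That is why it is said that "when one is swayed by anger or fondness, the mind cannot be correct." Rectifying the mind (正心) is simply, within the effort of making the intention sincere, personally realizing the substance of one's own mind, keeping it always like a clear mirror and a level balance; this is the equilibrium before the feelings are aroused (未发之中). [Keyword notes] ① 着实用意: truly and earnestly ② 着意: to fixate on ③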

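- [Operating Systems, Day 7] A Program's "Double": Understanding What a Process Is in One Article

吴师兄大模型

operating systems, operating systems, computer organization, process, python, deep learning, large models, AI

LangChain series index: 01 - Getting Started with LangChain: A Complete Guide from Model Calls to Prompt Templates and Output Parsing; 02 - The LangChain Memory Module: Four Memory Types and Their Use Cases; 03 - Mastering LangChain: A Practical Guide from Building Core Chains to Dynamic Task Routing; 04 - LangChain: From Document Loading to an Efficient Q&A System, End to End; 05 - LangChain: Three Efficient Methods for Evaluating Q&A Systems in Depth (example generation,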

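- Things Developers Care About

圣子足道

ios, games, programming, apple, payments

To add IAP to my app I have to register my own product identifiers. What is a product identifier?

A product identifier is a string that identifies each item you sell inside your app. The App Store uses product identifiers to look up product information. An identifier may contain only upper- and lowercase letters (A-Z, a-z), digits (0-9), underscores (_), and periods (.). You can arrange these characters in any order, but we recommend that when you create an identifier you use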
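The character rule above can be checked mechanically. The following is a minimal sketch, assuming the allowed set is exactly letters, digits, underscore, and period; the class name `ProductIdentifierCheck` and the sample identifiers are made up for illustration and are not part of any Apple API.

```java
import java.util.regex.Pattern;

class ProductIdentifierCheck {
    // Allowed characters as described in the text: A-Z, a-z, 0-9, underscore, period.
    private static final Pattern ALLOWED = Pattern.compile("[A-Za-z0-9_.]+");

    // Returns true when the identifier is non-empty and uses only allowed characters.
    static boolean isValid(String id) {
        return id != null && ALLOWED.matcher(id).matches();
    }

    public static void main(String[] args) {
        // Both identifiers are hypothetical examples.
        System.out.println(isValid("com.example.game.coins_100")); // true
        System.out.println(isValid("coins-100"));                  // false: hyphen is not allowed
    }
}
```

This only validates the character set locally; whether an identifier is actually registered is something only the store can answer.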

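- Load Balancers: Pros and Cons of Nginx and F5 Compared

bijian1013

nginx, F5

In a network carrying heavy traffic, a single device often cannot handle the load, so the traffic has to be split across several devices. A load balancer is the forwarder that distributes traffic across those devices.

Many different load-balancing techniques exist to meet different application needs, such as software/hardware load balancing, local/global load balancing, higher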
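To make the idea of "distributing traffic across several devices" concrete, here is a minimal sketch of round-robin selection, one of the simplest distribution strategies. It is an illustration only and does not reflect how Nginx or F5 are implemented; the class name `RoundRobinBalancer` and the backend addresses are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

class RoundRobinBalancer {
    private final List<String> backends;
    // Monotonic counter; AtomicInteger keeps next() safe to call from several threads.
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinBalancer(List<String> backends) {
        if (backends.isEmpty()) {
            throw new IllegalArgumentException("at least one backend is required");
        }
        this.backends = new ArrayList<>(backends); // defensive copy
    }

    // Picks backends in order, wrapping around; floorMod stays non-negative
    // even after the counter overflows.
    String next() {
        int index = Math.floorMod(counter.getAndIncrement(), backends.size());
        return backends.get(index);
    }

    public static void main(String[] args) {
        // Hypothetical backend addresses.
        RoundRobinBalancer lb = new RoundRobinBalancer(
                Arrays.asList("10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"));
        for (int i = 0; i < 4; i++) {
            System.out.println(lb.next());
        }
    }
}
```

Real balancers typically add health checks and weighting on top of a selection rule like this.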

- LeetCode[Math] - #9 Palindrome Number

Cwind

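java, Algorithm, solutions, LeetCode, Math

Original problem: #9 Palindrome Number

Requirement:

Determine whether an integer is a palindrome, without using extra storage space

Difficulty: Easy

Analysis:

The ban on extra space should be read as disallowing O(n) memory; O(1) memory for intermediate results is acceptable. So the idea is to reverse the integer and compare it with the original number. Note that the reversed value can overflow the int range, so it needs a wider type or an overflow check.

Note: I found no definitive statement on whether negative numbers can be palindromes, for example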
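The reverse-and-compare idea can be sketched as follows. This is one possible implementation, not necessarily the original author's: it assumes negative numbers are not palindromes (the note above leaves that open), and it uses a `long` accumulator to sidestep overflow. The class name is illustrative.

```java
class PalindromeNumber {
    // O(1) extra space: only a few scalar variables are used.
    static boolean isPalindrome(int x) {
        if (x < 0) {
            return false; // assumption: a leading minus sign rules out a palindrome
        }
        long reversed = 0; // long, because reversing e.g. 2147483647 overflows int
        int rest = x;
        while (rest > 0) {
            reversed = reversed * 10 + rest % 10; // append the lowest digit
            rest /= 10;                           // drop the lowest digit
        }
        return reversed == x;
    }

    public static void main(String[] args) {
        System.out.println(isPalindrome(12321)); // true
        System.out.println(isPalindrome(123));   // false
    }
}
```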

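- A Basic Drawing Board Implementation

15700786134

drawing board

To implement the basic features of a drawing board, in addition to the components and methods used in the QQ login window, you also need to add a mouse listener and implement the listener interface.

First, display a JFrame window:

public class DrameFrame extends JFrame { // display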

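- The Linux ps Command

被触发

linux

The Linux ps command is short for Process Status. It lists the processes currently running on the system. What it shows is a snapshot of the processes at the moment the command runs; to see process information updated dynamically, use the top command.

To monitor and control processes you first need to know their current state, that is, to view the current processes, and ps is the most basic and also a very powerful command for doing so. With it you can determine which processes are running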

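- Android Music Player: "Next Track" Skips Several Songs in a Row

肆无忌惮_

android

While writing an Android music player recently I ran into a problem. When MediaPlayer finishes playback, it calls back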

player.setOnCompletionListener(new OnCompletionListener() {

@Override

public void onCompletion(MediaPlayer mp) {

mp.reset();

Log.i("H

- An Example of Exporting a txt File in Java

知了ing

java, servlet

The code is simple, just one servlet:

package com.eastcom.servlet;

import java.io.BufferedOutputStream;

import java.io.IOException;

import java.net.URLEncoder;

import java.sql.Connection;

import java.sql.Resu

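- Trying Out the Scala Stack: Speeding Up Third-Party Dependency Downloads

矮蛋蛋

scala, sbt

Original article:

http://segmentfault.com/a/1190000002894524

sbt download speeds are painfully slow, so some configuration tuning is needed

Download the Typesafe offline bundle and save it as a local ivy repository

wget http://downloads.typesafe.com/typesafe-activator/1.3.4/typesafe-activator-1.3.4.zip

Extract r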

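- Installing phantomjs (Linux, Including Environment Variable Setup) and casperjs

alleni123

linux, spider

1. First, download the phantomjs archive from the official site http://phantomjs.org/ and extract it to the /root/phantomjs folder.

2. Install dependencies

sudo yum install fontconfig freetype libfreetype.so.6 libfontconfig.so.1 libstdc++.so.6

3. Configure environment variables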

vi /etc/profil

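- Java IO: FileInputStream and FileOutputStream, Wrapped Byte-Stream Output

百合不是茶

Java core concepts, Java IO, byte streams

In any programming language, storing data is fundamental; a language that could not store data could hardly exist. Java is one of the most popular object-oriented languages today, and it has its own approach to storing data: byte streams and character streams

1. A byte stream is made up of bytes, and a character stream is made up of characters. Byte streams extend InputStream and OutputStream, while character streams extend Reader and Writer. These are the two most basic kinds of stream in Java

Class FileInputStream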
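As a small illustration of byte streams, the sketch below copies a file with `FileInputStream` and `FileOutputStream` through a fixed-size buffer. The class and method names are illustrative, and the file paths in `main` are placeholders supplied on the command line.

```java
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;

class ByteStreamCopy {
    // Copies the file at 'from' to 'to' and returns the number of bytes copied.
    static long copy(String from, String to) throws IOException {
        // try-with-resources closes both streams even if an exception is thrown.
        try (FileInputStream in = new FileInputStream(from);
             FileOutputStream out = new FileOutputStream(to)) {
            byte[] buffer = new byte[4096];
            long total = 0;
            int n;
            // read() returns the number of bytes read, or -1 at end of file.
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n); // write only the bytes actually read
                total += n;
            }
            return total;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 2) {
            System.out.println(copy(args[0], args[1]) + " bytes copied");
        }
    }
}
```

Because the data is handled as raw bytes, this works for any file type; text that needs character decoding would go through a Reader/Writer instead.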

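- Basic Spring Examples (Dependency Injection and Inversion of Control)

bijian1013

spring

Prerequisite: download the Spring Framework from http://www.springsource.org/download and add spring.jar, log4j-1.2.15.jar, and commons-logging.jar to the project. 1. The weapon interface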

package com.bijian.spring.base3;

public interface Weapon {

void kil

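- The Ten Skills HR Values Most

bijian1013

self-improvement, skills, HR, growth

Which skills a person masters depends on their interests, abilities, and intelligence, as well as on the resources at their disposal and the career goals they set; people with solid skills have more job opportunities. With the economic outlook uncertain, it is especially important to master skills that help your career. Here are the ten skills employers value most. 1. Problem solving. Every day, in life and at work, we have to solve complex problems. Those who can identify problems, solve them, and quickly make effective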

- [Thrift 1] Building and Installing Thrift

bit1129

thrift

What is Thrift

The Apache Thrift software framework, for scalable cross-language services development, combines a software stack with a code generation engine to build services that work efficiently and s

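- [Avro 3] Reading and Writing Avro Files with Hadoop MapReduce

bit1129

mapreduce

Avro was developed under the lead of Doug Cutting (a truly legendary figure). From the start it was built to round out data processing in the Hadoop ecosystem (Avro serves the scenarios where Hadoop MapReduce needs to serialize and deserialize data), so integrating Avro into Hadoop MapReduce came naturally.

This example is a simple Hadoop MapReduce job that reads an Avro-format source file, computes counts, and then writes the results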

- Custom 500, 502, 503, and 504 Pages in nginx

ronin47

nginx, error display

server {

listen 80;

error_page 500 /500.html;

error_page 502 /502.html;

error_page 503 /503.html;

error_page 504 /504.html;

location /test { return 502; }

}

The configuration is simple, and

- java-1. Converting a Binary Search Tree to a Doubly Linked List

bylijinnan

binary search tree

import java.util.ArrayList;

import java.util.List;

public class BSTreeToLinkedList {

/*

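Convert a binary search tree into a sorted doubly linked list

Problem:

Given a binary search tree, convert it into a sorted doubly linked list.

No new nodes may be created; only the pointers may be adjusted.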

10

/ \

6 14

/ \

- Studying the Netty Source: HTTP Tunnel

bylijinnan

java, netty

Netty's description of the HTTP tunnel:

http://docs.jboss.org/netty/3.2/api/org/jboss/netty/channel/socket/http/package-summary.html#package_description

The description is rather brief

A complete example is here:

https://github.com/bylijinnan

- The Difference Between JSONUtil.serialize(map) and JSON.toJSONString(map)

coder_xpf

jquery, json, map, val()

A map returned from a database query has one field that is empty

Printed with System.out.println(), JSONUtil.serialize(map) gives: {"one":"1","two":"nul

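- A Summary of Hibernate Caching

cuishikuan

open source, ssh, javaweb, hibernate, caching, the three major frameworks

I. Why use the Hibernate cache?

Hibernate is a persistence-layer framework that accesses the physical database frequently.

The cache exists to reduce how often the application hits the physical data source, and thereby to improve the application's runtime performance.

The data in the cache is a copy of the data in the physical data source. At runtime the application reads and writes the cache, and at specific moments or events the cache and the physical data source are synchronized.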
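The read/write-then-synchronize behaviour described above can be sketched generically. The code below is not Hibernate's API or implementation; it is a minimal illustration in which a `Map` stands in for the physical data source, and all names (`WriteBackCache`, `flush`, `storeReads`) are hypothetical.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

class WriteBackCache {
    private final Map<String, String> store;                   // stand-in for the physical database
    private final Map<String, String> cache = new HashMap<>(); // in-memory copy of store data
    private final Set<String> dirty = new HashSet<>();         // keys changed since the last sync
    int storeReads = 0;                                        // counts trips to the "database"

    WriteBackCache(Map<String, String> store) {
        this.store = store;
    }

    // Reads go to the cache first; the store is only hit on a cache miss.
    String get(String key) {
        if (!cache.containsKey(key)) {
            storeReads++;
            cache.put(key, store.get(key));
        }
        return cache.get(key);
    }

    // Writes change the cached copy only and are remembered as dirty.
    void put(String key, String value) {
        cache.put(key, value);
        dirty.add(key);
    }

    // The "specific moment" at which cache and data source are synchronized.
    void flush() {
        for (String key : dirty) {
            store.put(key, cache.get(key));
        }
        dirty.clear();
    }

    public static void main(String[] args) {
        Map<String, String> db = new HashMap<>();
        db.put("user:1", "Alice");
        WriteBackCache c = new WriteBackCache(db);
        c.get("user:1");
        c.get("user:1");                  // served from the cache
        System.out.println(c.storeReads); // 1
    }
}
```

The second `get` never touches the store, which is the access-frequency reduction the section describes; the price is that the store is stale until `flush()` runs.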

II. How does the Hibernate cache work?

Hibernate's caches fall into two broad categories: Hib

- CentOs6

dalan_123

centos

First run su - to switch to root. 1. Install the dependency modules such as GCC, GCC-C++, and OpenSSL: yum -y install make gcc gcc-c++ kernel-devel m4 ncurses-devel openssl-devel 2. Then install the ncurses module: yum -y install ncurses-devel 3. Download Erang

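- 10 jQuery Plugins for Auto-Loading Data When the Scrollbar Reaches the Bottom of the Page

dcj3sjt126com

JavaScript

Infinite scrolling with automatic paging is arguably one of the great techniques of the Web 2.0 era. When browsing, you only need to pull the scrollbar to the bottom of the page and the next page of results appears automatically, replacing the long-standing convention of clicking "next page".

The pioneer of infinite scrolling was the microblogging forerunner Twitter; later Bing image search, Google image search, Google Reader, 箱包批发网, and others copied the technique, so that by scrolling the browser's scrollbar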

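- Removing the ImageButton Border and Making a Button or ImageButton Background Transparent

dcj3sjt126com

imagebutton

After loading an image into an ImageButton, many people find that the white border around the image hurts the look. There are two ways to fix this

One is to set the ImageButton's background to the desired image, e.g. android:background="@drawable/XXX"

The second is to make the ImageButton's background transparent, which is the more common approach

In XML: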

<ImageBut

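- JSP: c:forEach

eksliang

jsp, forearch

Source: http://www.cnblogs.com/draem0507/archive/2012/09/24/2699745.html

The <c:forEach> tag is used for general-purpose iteration over data. It has the following attributes:

| Attribute | Description | Required | Default |
| --- | --- | --- | --- |
| items | The items to iterate over | No | None |
| begin | Start condition | No | 0 |
| end | End condition | No | Last item in the collection |
| step | Step size | No | 1 |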

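- Actively Connecting to a Bluetooth Headset on Android

gqdy365

android

An Android app can automatically scan for Bluetooth devices, pair with them, and establish a data channel. Bluetooth devices come in different types; this article only discusses how to connect to a Bluetooth headset.

It can be broken into roughly three steps:

I. Scan for Bluetooth devices:

1. Register for and listen to the broadcasts: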

BluetoothAdapter.ACTION_DISCOVERY_STARTED

BluetoothDevice.ACTION_FOUND

BluetoothAdapter.ACTION_DIS

- Android Learning Track, Part 4: org.json.JSONException: No value for

hyz301

json

org.json.JSONException: No value for items

This is a very common error encountered when parsing JSON

06-21 12:19:08.714 2098-2127/com.jikexueyuan.secret I/System.out﹕ Result:{"status":1,"page":1,&

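- Practical Roundup: the "Learn Programming from Scratch" Series

justjavac

programming

Programmers love reinventing the wheel, so here is a roundup of wheels.

"Let's Write a Compiler from Scratch" series (知乎专栏)

"Writing a Simple Operating System from Scratch" (伯乐在线)

"Writing a JavaScript Framework from Scratch" (图灵社区)

"Writing a jQuery Framework from Scratch" (蓝色理想)

"nodejs from Scratch" article series (粉丝日志)

Writing an online game from scratch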

- jquery-autocomplete User Guide

macroli

jquery, Ajax, scripting

Learning jquery-autocomplete

I. Prerequisites

Official site: http://bassistance.de/jquery-plugins/jquery-plugin-autocomplete/

Current version: 1.1

Required jQuery version: 1.2.6

II. Usage

<script src="./jquery-1.3.2.js" type="text/ja

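- Detailed Configuration for Connecting to a Remote Oracle Database with Tools Such as PLSQL-Developer or Navicat, and Changing the Database Encoding

超声波

oracle, plsql

Once Oracle is installed on the server, the next step is to connect to the server-side Oracle database remotely from a local machine. The usual client tools for this are PLSQL-Developer or Navicat. At first I hit all sorts of errors: TNS:no listener; TNS:lost connection; TNS:target hosts... It took a full day before PLSQL-Developer, Navicat, and these other client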

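- Data Warehouse Data Models: Extreme Storage with History Zipper Tables (历史拉链表)

superlxw1234

extreme storage, data warehouse, data model, zipper table, history table

When designing data models for a data warehouse, you often run into requirements like these:

1. The data volume is fairly large;

2. Some fields in the table get updated, such as a user's address, a product's description, or an order's status;

3. You need to view a historical snapshot as of a point in time or over a period, for example the status of an order at some past moment, or how many times a user's record was updated over some past period;

4. The proportion and frequency of change are not large, for example, out of a total of 10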

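- 10. Spring MVC 4.1 Highlights: Global Exception Handling

wiselyman

spring mvc

10.1 Global exception handling

Use the @ControllerAdvice annotation to implement global exception handling;

Use the attributes of @ControllerAdvice to narrow the scope it applies to

10.2 Demo

Demo controller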

package com.wisely.web;

import org.springframework.stereotype.Controller;

import org.spring