基于pytorch并且使用resnet18 的fer2013人脸识别

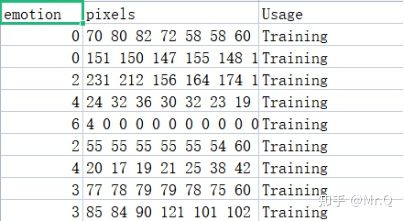

Fer2013人脸表情数据集由35886张人脸表情图片组成,其中,测试图(Training)28708张,公共验证图(PublicTest)和私有验证图(PrivateTest)各3589张,每张图片是由大小固定为48×48的灰度图像组成,共有7种表情,分别对应于数字标签0-6,具体表情对应的标签和中英文如下:0 anger 生气; 1 disgust 厌恶; 2 fear 恐惧; 3 happy 开心; 4 sad 伤心;5 surprised 惊讶; 6 normal 中性。数据集并没有直接给出图片,而是将表情、图片数据、用途的数据保存到csv文件中

我是使用jupyter notebook 对新手友好 每行程序调试方便

fer2013数据集预览:

fer2013数据集可视化:

#引入库

import csv

import os

from __future__ import print_function, division

from PIL import Image

import numpy as np

import h5py

import torch.utils.data as data

import argparse

import torch.optim as optim

import torch

import torch.nn as nn

from torch.autograd import Variable

import torch.nn.functional as F

import matplotlib.pyplot as plt

import os

from torchvision import datasets, transforms

import time

from torch.utils.data import DataLoader, Dataset

#使用显卡

torch.cuda.is_available()

#显示显卡类型

torch.cuda.get_device_name(0)

数据预处理

Training_x = []

Training_y = []

PublicTest_x = []

PublicTest_y = []

PrivateTest_x = []

PrivateTest_y = []

file = r'D:\fer20131\fer2013.csv' #文件路径

datapath = os.path.join('data', 'data.h5') #连接两个或更多的路径名组件

if not os.path.exists(os.path.dirname(datapath)): #os.path.dirname去掉文件名,返回目录

os.makedirs(os.path.dirname(datapath))

with open(file, 'r') as csvin:

data = csv.reader(csvin)

for row in data:

if row[-1] == 'Training':

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel)) #int() 函数用于将一个字符串或数字转换为整型

I = np.asarray(temp_list)

Training_y.append(int(row[0])) #在列表末尾添加新的对象list.append(erd)

Training_x.append(I.tolist()) #转化为List集合

if row[-1] == "PublicTest" :

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PublicTest_y.append(int(row[0]))

PublicTest_x.append(I.tolist())

if row[-1] == 'PrivateTest':

temp_list = []

for pixel in row[1].split( ):

temp_list.append(int(pixel))

I = np.asarray(temp_list)

PrivateTest_y.append(int(row[0]))

PrivateTest_x.append(I.tolist())

print(np.shape(Training_x))

print(np.shape(PublicTest_x))

print(np.shape(PrivateTest_x))

datafile = h5py.File(datapath, 'w')

datafile.create_dataset("Training_pixel", dtype = 'uint8', data=Training_x)

datafile.create_dataset("Training_label", dtype = 'int64', data=Training_y)

datafile.create_dataset("PublicTest_pixel", dtype = 'uint8', data=PublicTest_x)

datafile.create_dataset("PublicTest_label", dtype = 'int64', data=PublicTest_y)

datafile.create_dataset("PrivateTest_pixel", dtype = 'uint8', data=PrivateTest_x)

datafile.create_dataset("PrivateTest_label", dtype = 'int64', data=PrivateTest_y)

datafile.close()

print("Save data finish!!!")

创建FER2013数据包

from __future__ import print_function

from PIL import Image

import numpy as np

import h5py

import torch.utils.data as data

class FER2013(data.Dataset):

"""`FER2013 Dataset.

Args:

train (bool, optional): If True, creates dataset from training set, otherwise

creates from test set.

transform (callable, optional): A function/transform that takes in an PIL image

and returns a transformed version. E.g, ``transforms.RandomCrop``

"""

#def __init__(self, split='Training', transform=None):

def __init__(self, split, transform=None):

self.transform = transform

self.split = split # training set or test set

self.data = h5py.File('./data/data.h5', 'r', driver='core')

# now load the picked numpy arrays

if self.split == 'Training':

self.train_data = self.data['Training_pixel']

self.train_labels = self.data['Training_label']

self.train_data = np.asarray(self.train_data)

self.train_data = self.train_data.reshape((28709, 48, 48))

self.train_data = np.array(self.train_data)

elif self.split == 'PublicTest':

self.PublicTest_data = self.data['PublicTest_pixel']

self.PublicTest_labels = self.data['PublicTest_label']

self.PublicTest_data = np.asarray(self.PublicTest_data)

self.PublicTest_data = self.PublicTest_data.reshape((3589, 48, 48))

self.PublicTest_data = np.array(self.PublicTest_data)

else :

self.PrivateTest_data = self.data['PrivateTest_pixel']

self.PrivateTest_labels = self.data['PrivateTest_label']

self.PrivateTest_data = np.asarray(self.PrivateTest_data)

self.PrivateTest_data = self.PrivateTest_data.reshape((3589, 48, 48))

self.PrivateTest_data = np.array(self.PrivateTest_data)

def __getitem__(self, index):

"""

Args:

index (int): Index

Returns:

tuple: (image, target) where target is index of the target class.

"""

if self.split == 'Training':

img, target = self.train_data[index], self.train_labels[index]

elif self.split == 'PublicTest':

img, target = self.PublicTest_data[index], self.PublicTest_labels[index]

else:

img, target = self.PrivateTest_data[index], self.PrivateTest_labels[index]

# doing this so that it is consistent with all other datasets

# to return a PIL Image

img = img[:, :, np.newaxis] # np.newaxis的作用是增加一个维度

img = np.concatenate((img, img, img), axis=2) #完成多个数组的拼接

img = Image.fromarray(img)

if self.transform is not None:

img = self.transform(img)

return img, target

def __len__(self):

if self.split == 'Training':

return len(self.train_data)

elif self.split == 'PublicTest':

return len(self.PublicTest_data)

else :

return len(self.PrivateTest_data)

print("finish!!!")

主函数中程序数据预处理及加载

#resnet的数据

print('==> Preparing data..')

transform_train = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize(

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]

)])

transform_test = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize(

mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]

)])

trainset = FER2013(split = 'Training', transform=transform_train)

trainloader = DataLoader(trainset, batch_size=1 , shuffle=True)#, num_workers=1

PublicTestset = FER2013(split = 'PublicTest', transform=transform_test)

PublicTestloader = DataLoader(PublicTestset, batch_size=1 , shuffle=False)#, num_workers=1)

PrivateTestset = FER2013(split = 'PrivateTest', transform=transform_test)

PrivateTestloader = DataLoader(PrivateTestset, batch_size=1 , shuffle=False)#, num_workers=1)

print("finish!!!")

可以查看数据形状

print(len(trainset))

print(len(trainloader))

for batch_datas, batch_labels in trainloader:

print(batch_datas.size(),batch_labels.size())

加载损失函数及优化器

optimizer = torch.optim.Adam(model_2 .parameters(), lr=0.001)

loss_func = nn.CrossEntropyLoss()

加载resnet18并且使用GPU

model_2 = torchvision.models.resnet18(pretrained=True).eval().cuda()

打印resnet 形状

print(model_2)

定义准确率函数

def accuracy(predictions, labels):

pred = torch.max(predictions.data, 1)[1]

rights = pred.eq(labels.data.view_as(pred)).sum()

return rights, len(labels)

测试检验及打印

epoch = 2

train_acc = 0

for epoch in range(epoch):

train_rights = []

for batch_idx, (data, target) in enumerate(trainloader):

model_2.train()

data, target = data.cuda(), target.cuda()

data = data.type(torch.float32)

target = target.type(torch.float32)

data, target = Variable(data, requires_grad=True), Variable(target, requires_grad=True) # 把数据转换成Variable

# print(data)

# print(target)

optimizer.zero_grad() # 优化器梯度初始化为零

#with torch.no_grad():

output = model_2(data) # 把数据输入网络并得到输出,即进行前向传播

target = target.long()

loss = loss_func(output, target) # 交叉熵损失函数

loss.backward() # 反向传播梯度

optimizer.step() # 结束一次前传+反传之后,更新参数

right = accuracy(output, target)

train_rights.append(right)

if batch_idx % 100 ==0

model_2.eval()

val_rights = []

for data, target in PrivateTestloader:

data, target = data.cuda(), target.cuda()

data = data.type(torch.float32)

target = target.type(torch.float32)

data, target = Variable(data,requires_grad=True), Variable(target,requires_grad=True) # 计算前要把变量变成Variable形式,因为这样子才有梯度

output = model_2(data)

right = accuracy(output, target)

#准确率计算

train_r = (sum([tup[0] for tup in train_rights]),sum([tup[1] for tup in train_rights]))

val_r = (sum([tup[0] for tup in val_rights]),sum([tup[1] for tup in val_rights]))

print('当前epoch:{} [{}/{} ({:.0f}%)]\t损失: {:.6f}\t训练集准确率:{:.2f}%\t测试集正确率: {:.2f}%'.format(

epoch, batch_idx * 1,len(train_loader.dataset),

100.* batch_idx /len(train_loader),

loss.data,

100.* train_r[0].numpy()/train_r[1]

100.* val[0].numpy()/val[1]

))