Doris-1.2.3 详细安装教程&doris-1.2.3性能测试教程

这是一个详细的安装和测试教程,包含了二进制包的版本选择和下载,FE、BE节点的部署,ssb数据集性能测试和tpch数据集性能测试。都有详细的教程和截图、步骤讲解

目录

- 一、二进制包下载

-

- 1、下载

- 2、选择下载包

-

- 2.1 BE下载

- 2.2 FE下载

- 2.3 安装 Java UDF 函数

- 二、安装部署

-

- 1、文件归一

- 2、添加环境变量

- 3、修改可打开文件数(每个节点)

- 添加

-

- 4、部署FE节点

-

- 1.1 解压文件

- 1.2 创建FE元数据存储的目录

- 1.3 修改FE的配置文件

- 1.4 启动FE

- 5、配置BE节点

-

- 5.1 拷贝依赖

- 5.2 分发BE

- 5.3 创建 BE 数据存放目录(每个节点)

- 5.4 修改 BE 的配置文件(每个节点)

- 6、在FE中添加BE节点

-

- 6.1 安装MySQL Client

- 6.2 使用 MySQL Client 连接 FE

- 6.3 添加 BE

- 6.4 查看 BE 状态

- 7、启动BE节点

- 8、doris登录

- 三、性能测试

-

- ssb数据集测试

- 1、下载Doris源码

- 2、编译安装 SSB 数据生成工具

- 3、生成 SSB 测试集

- 4、建表

-

- 4.1 准备doris-cluster.conf文件

- 4.2执行以下脚步生成创建SSB表

- 5、导入数据

- 6、检查导入数据

-

-

- **7、SSB 标准测试 SQL**

-

- 8、测试结构表

- tpc-h数据集测试

- 1、数据准备

-

- 1.1 载安装 TPC-H 数据生成工具

- 1.2 生成 TPC-H 测试集

- 2 建表

-

-

- 2.1 准备 doris-cluster.conf 文件

- 2.2 执行以下脚本生成创建 TPC-H 表

-

- 3 导入数据

- 4 数据验证

- 5 单个 SQL 执行

-

- 5.1 执行语句

- 5.2 执行结果

一、二进制包下载

可以下载源码自己编译,这里我使用官网已经编译好的二进制包

1、下载

下载链接:https://doris.apache.org/zh-CN/download

注意:

自己选择自己需要的版本即可

2、选择下载包

1)看Linux的服务器CPU是否支持avx2指令集

cat /proc/cpuinfo

经过查看我所安装的服务器是支持avx2指令集,这里就下载avx2指令集

经过查看我所安装的服务器是支持avx2指令集,这里就下载avx2指令集

如果不支持avx2指令集,则下载另外一个包

2.1 BE下载

如果不能本地上传服务器,可以直接在服务器上下载

BE(Backend):负责物理数据的存储和计算;依据 FE 生成的物理计划,分布式地执行查询。 数据的可靠性由 BE 保证,BE 会对整个数据存储多副本或者是三副本。副本数可根据 需求动态调整。

wget https://archive.apache.org/dist/doris/1.2/1.2.3-rc02/apache-doris-be-1.2.3-bin-x86_64.tar.xz

2.2 FE下载

说明:

FE(Frontend):

存储、维护集群元数据;负责接收、解析查询请求,规划查询计划, 调度查询执行,返回查询结果。

主要有三个角色:

(1)Leader 和 Follower:主要是用来达到元数据的高可用,保证单节点宕机的情况下, 元数据能够实时地在线恢复,而不影响整个服务。

(2)Observer:用来扩展查询节点,同时起到元数据备份的作用。如果在发现集群压力 非常大的情况下,需要去扩展整个查询的能力,那么可以加 observer 的节点。observer 不 参与任何的写入,只参与读取。

wget https://archive.apache.org/dist/doris/1.2/1.2.3-rc02/apache-doris-fe-1.2.3-bin-x86_64.tar.xz

2.3 安装 Java UDF 函数

说明:

因为从 1.2 版本开始支持 Java UDF 函数,需要从官网下载 Java UDF 函数的 JAR 包放到 BE 的 lib 目录下,否则可能会启动失败。

wget https://archive.apache.org/dist/doris/1.2/1.2.3-rc02/apache-doris-dependencies-1.2.3-bin-x86_64.tar.xz

二、安装部署

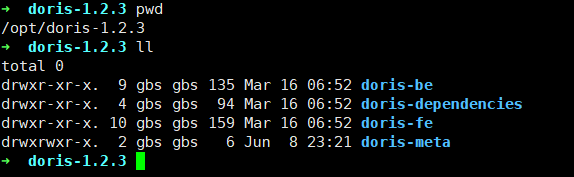

1、文件归一

将FE、BE、依赖放到同一个文件内,方便安装和后期管理,当然你也可以不用这样做

#创建doris目录

mkdir doris-1.2.3

#移动BE到doris目录

mv apache-doris-be-1.2.3-bin-x86_64 doris-1.2.3

#修改BE的名字为doris-be

mv apache-doris-be-1.2.3-bin-x86_64 doris-be

#移动FE到doris目录

mv apache-doris-fe-1.2.3-bin-x86_64 doris-1.2.3

#修改FE的名字为doris-fe

mv apache-doris-fe-1.2.3-bin-x86_64 doris-fe

#解压依赖到指定目录并改名

tar -xvf apache-doris-dependencies-1.2.3-bin-x86_64.tar.xz -C doris-1.2.3

mv apache-doris-dependencies-1.2.3-bin-x86_64 doris-dependencies

2、添加环境变量

#打开环境变量

sudo vim ~/.zshrc

#添加的内容

export DORIS_HOME=/opt/doris-1.2.3

export PATH=$PATH:$DORIS_HOME/doris-be/bin:$DORIS_HOME/doris-fe/bin

#使之生效

source ~/.zshrc

3、修改可打开文件数(每个节点)

sudo vim /etc/security/limits.conf

* soft nofile 65535

* hard nofile 65535

* soft nproc 65535

* hard nproc 65535

重启永久生效,也可以用 ulimit -n 65535 临时生效。

打开文件sysctl.conf

sudo vim /etc/sysctl.conf

添加

fs.file-max = 6553560

vm.max_map_count=2000000

重启生效

reboot -h now

4、部署FE节点

1.1 解压文件

sudo tar -xvf apache-doris-fe-1.2.3-bin-x86_64.tar.xz

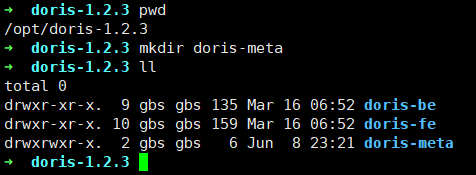

1.2 创建FE元数据存储的目录

mkdir doris-meta

1.3 修改FE的配置文件

pwd

/opt/doris-1.2.3/doris-fe/conf

vim fe.conf

#配置文件中指定元数据路径:

meta_dir = ${DORIS_HOME}/doris-meta

#配置fe日志路径

LOG_DIR = ${DORIS_HOME}/fe-log

#修改绑定 ip(每台机器修改成自己的 ip)

priority_networks = 10.10.1.7/24

1.4 启动FE

bin/start_fe.sh --daemon

验证是否启动成功

在服务器端口输入

http://10.10.1.7:8060/api/bootstrap

显示如下内容即成功

5、配置BE节点

5.1 拷贝依赖

将依赖包中的java-udf-jar-with-dependencies.jar依赖拷贝到BE的lib目录下

scp doris-dependencies/java-udf-jar-with-dependencies.jar doris-be/lib

5.2 分发BE

pwd

/opt/doris-1.2.3/doris-be

#我是在/opt/doris-1.2.3/doris-be这个目录下分发的哈

scp -r ./* node2:/opt/doris-1.2.3/doris-be

scp -r ./* node3:/opt/doris-1.2.3/doris-be

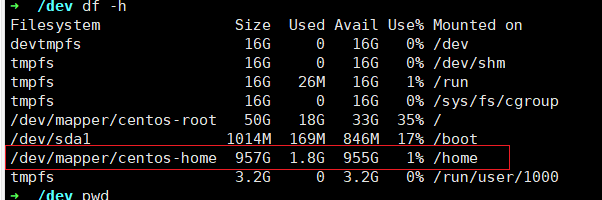

5.3 创建 BE 数据存放目录(每个节点)

创建完记得看一下那个磁盘容量大,然后创建在对应目录下

这里我创建在home目录下面

#node1

mkdir -p /home/data/doris-1.2.3/doris-storage1

mkdir -p /home/data/doris-1.2.3/doris-storage2

mkdir -p /home/data/doris-1.2.3/doris-storage3

#node2

mkdir -p /home/data/doris-1.2.3/doris-storage2

mkdir -p /home/data/doris-1.2.3/doris-storage2

mkdir -p /home/data/doris-1.2.3/doris-storage3

#node3

mkdir -p /home/data/doris-1.2.3/doris-storage3

mkdir -p /home/data/doris-1.2.3/doris-storage2

mkdir -p /home/data/doris-1.2.3/doris-storage3

5.4 修改 BE 的配置文件(每个节点)

vim $DORIS_HOME/doris-be/conf/be.conf

#增加如下内容

#配置文件指定数据存放路径

storage_root_path=/home/data/doris-1.2.3/doris-storage1;/home/data/doris-1.2.3/doris-storage2;/home/data/doris-1.2.3/doris-storage3

#修改绑定ip(每台机器都要修改成自己的ip)

priority_networks=10.10.1.7/24

6、在FE中添加BE节点

BE 节点需要先在 FE 中添加,才可加入集群。可以使用 mysql-client 连接到 FE

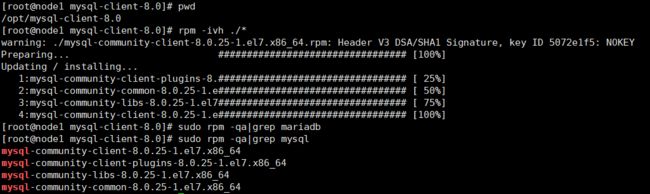

6.1 安装MySQL Client

1)创建目录

mkdir /opt/mysql-client-8.0

2)下载四个需要的MySQL组件

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-common-8.0.25-1.el7.x86_64.rpm

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-client-plugins-8.0.25-1.el7.x86_64.rpm

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-libs-8.0.25-1.el7.x86_64.rpm

wget https://cdn.mysql.com/archives/mysql-8.0/mysql-community-client-8.0.25-1.el7.x86_64.rpm

3)检查当前系统是否安装过MySQL

sudo rpm -qa|grep mariadb

#如果存在,先卸载

sudo rpm -e --nodeps mariadb mariadb-libs mariadb-server

4)安装刚刚下载的MySQL

rpm -ivh /opt/mysql-client-8.0/*

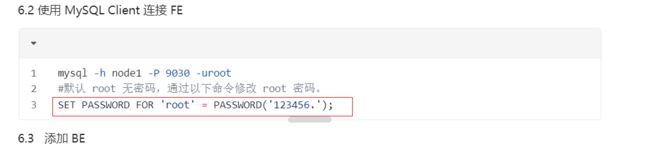

6.2 使用 MySQL Client 连接 FE

mysql -h node1 -P 9030 -uroot

#默认 root 无密码,通过以下命令修改 root 密码。

SET PASSWORD FOR 'root' = PASSWORD('123456.');

6.3 添加 BE

# 添加 BE

ALTER SYSTEM ADD BACKEND "node1:9050";

ALTER SYSTEM ADD BACKEND "node2:9050";

ALTER SYSTEM ADD BACKEND "node3:9050";

6.4 查看 BE 状态

SHOW PROC '/backends';

7、启动BE节点

记得每个节点都要启动

/opt/doris-1.2.3/doris-be/bin/start_be.sh --daemon

查看BE状态

注意:刚启动这里可能还没有更新,等待一会儿在看就会有的,或者是取be的启动日志:出现图中的日志,则表示启动成功

查看be状态截图

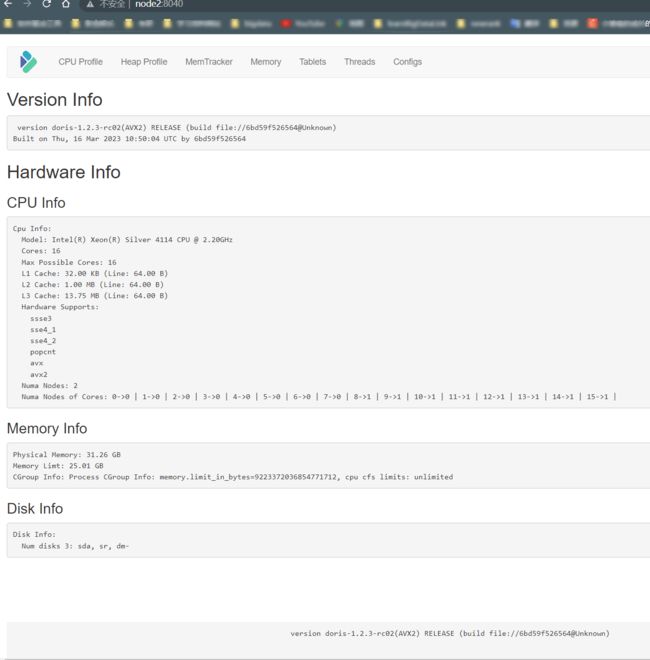

或者是访问web出现一下页面也是安装成功

http://be_host:webserver_port/api/health

webserver_port BE安装节点conf/be.conf中配置的端口

我这里的地址是:node2:8040

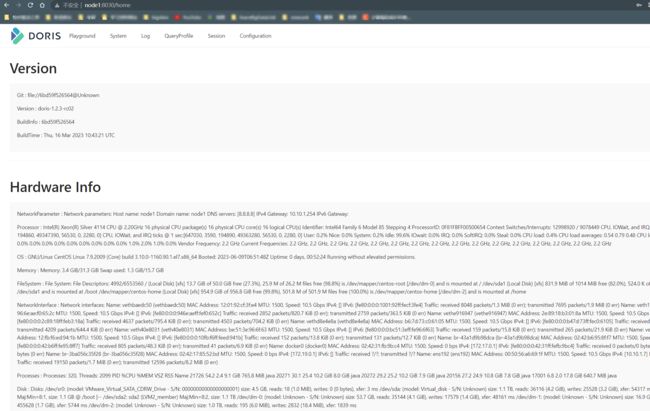

8、doris登录

http://fe_host:fe_http_port

我的地址是:http://node1:8030/

登录密码是前面设置的密码

登录后的界面

我安装是参考的链接:

https://blog.csdn.net/paicMis/article/details/130178291

https://zhuanlan.zhihu.com/p/621354583?utm_id=0

还有就是尚硅谷的doris教程

三、性能测试

ssb数据集测试

1、下载Doris源码

下载该源码是因为数据准备需要的脚步都存放在 Apache Doris 代码库:ssb-tools

1.1 下载源码

wget https://codeload.github.com/apache/doris/zip/refs/heads/master

1.2 解压源码

unzip master

解压后会得到doris-master目录

2、编译安装 SSB 数据生成工具

执行以下脚本下载并编译 ssb-dbgen 工具。

sh build-ssb-dbgen.sh

安装成功后,将在 ssb-dbgen/ 目录下生成 dbgen 二进制文件。

编译成功

ssb-dbgen/ 目录下生成 dbgen 二进制文件

注意

该编译需要gcc组件,如果服务器没有,记得下载

sudo yum install gcc

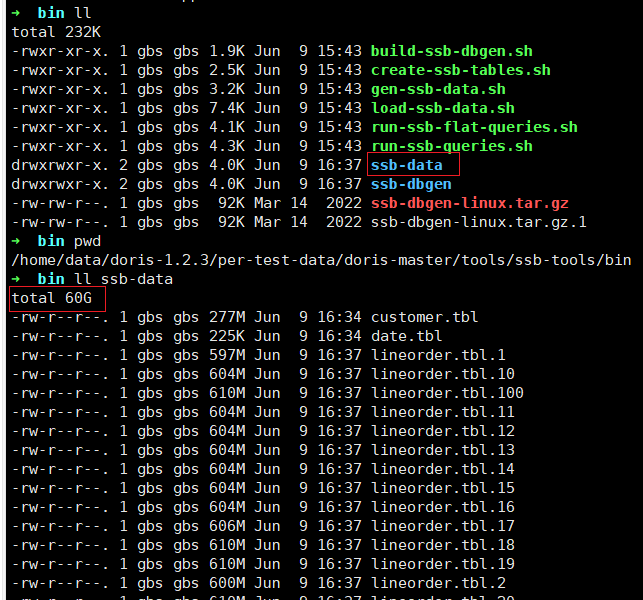

3、生成 SSB 测试集

执行以下脚本生成 SSB 数据集:

sh gen-ssb-data.sh -s 100 -c 100

注1:通过 sh gen-ssb-data.sh -h 查看脚本帮助。

注2:数据会以 .tbl 为后缀生成在 ssb-data/ 目录下。文件总大小约60GB。生成时间可能在数分钟到1小时不等。

注3:-s 100 表示测试集大小系数为 100,-c 100 表示并发100个线程生成lineorder 表的数据。-c 参数也决定了最终 lineorder 表的文件数量。参数越大,文件数越多,每个文件越小。

4、建表

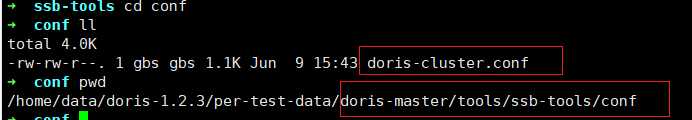

4.1 准备doris-cluster.conf文件

在调用导入脚本前,需要将FE的ip端口等信息写在doris-cluster.conf文件中

文件内容包括FE的ip,HTTP端口,用户名,密码已经待导入数据库DB名称

# Any of FE host

export FE_HOST='10.10.1.7'

# http_port in fe.conf

export FE_HTTP_PORT=8030

# query_port in fe.conf

export FE_QUERY_PORT=9030

# Doris username

export USER='root'

# Doris password

export PASSWORD='1xxxxxx.'

# The database where SSB tables located

export DB='ssb'

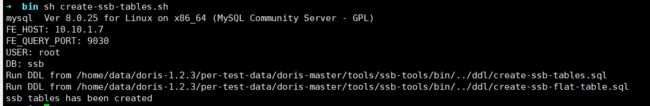

4.2执行以下脚步生成创建SSB表

sh create-ssb-tables.sh

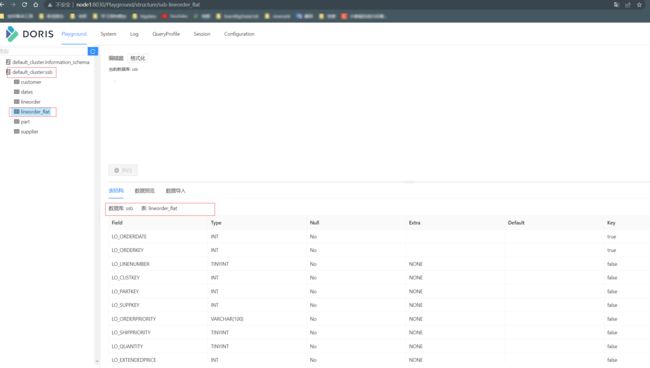

或者复制 create-ssb-tables.sql 和 create-ssb-flat-table.sql 中的建表语句,在 MySQL 客户端中执行。

下面是 lineorder_flat 表建表语句。在上面的 create-ssb-flat-table.sh 脚本中创建 lineorder_flat 表,并进行了默认分桶数(48个桶)。您可以删除该表,根据您的集群规模节点配置对这个分桶数进行调整,这样可以获取到更好的一个测试效果。

CREATETABLElineorder_flat(

LO_ORDERDATEdateNOTNULLCOMMENT"“,

LO_ORDERKEYint(11)NOTNULLCOMMENT”“,

LO_LINENUMBERtinyint(4)NOTNULLCOMMENT”“,

LO_CUSTKEYint(11)NOTNULLCOMMENT”“,

LO_PARTKEYint(11)NOTNULLCOMMENT”“,

LO_SUPPKEYint(11)NOTNULLCOMMENT”“,

LO_ORDERPRIORITYvarchar(100)NOTNULLCOMMENT”“,

LO_SHIPPRIORITYtinyint(4)NOTNULLCOMMENT”“,

LO_QUANTITYtinyint(4)NOTNULLCOMMENT”“,

LO_EXTENDEDPRICEint(11)NOTNULLCOMMENT”“,

LO_ORDTOTALPRICEint(11)NOTNULLCOMMENT”“,

LO_DISCOUNTtinyint(4)NOTNULLCOMMENT”“,

LO_REVENUEint(11)NOTNULLCOMMENT”“,

LO_SUPPLYCOSTint(11)NOTNULLCOMMENT”“,

LO_TAXtinyint(4)NOTNULLCOMMENT”“,

LO_COMMITDATEdateNOTNULLCOMMENT”“,

LO_SHIPMODEvarchar(100)NOTNULLCOMMENT”“,

C_NAMEvarchar(100)NOTNULLCOMMENT”“,

C_ADDRESSvarchar(100)NOTNULLCOMMENT”“,

C_CITYvarchar(100)NOTNULLCOMMENT”“,

C_NATIONvarchar(100)NOTNULLCOMMENT”“,

C_REGIONvarchar(100)NOTNULLCOMMENT”“,

C_PHONEvarchar(100)NOTNULLCOMMENT”“,

C_MKTSEGMENTvarchar(100)NOTNULLCOMMENT”“,

S_NAMEvarchar(100)NOTNULLCOMMENT”“,

S_ADDRESSvarchar(100)NOTNULLCOMMENT”“,

S_CITYvarchar(100)NOTNULLCOMMENT”“,

S_NATIONvarchar(100)NOTNULLCOMMENT”“,

S_REGIONvarchar(100)NOTNULLCOMMENT”“,

S_PHONEvarchar(100)NOTNULLCOMMENT”“,

P_NAMEvarchar(100)NOTNULLCOMMENT”“,

P_MFGRvarchar(100)NOTNULLCOMMENT”“,

P_CATEGORYvarchar(100)NOTNULLCOMMENT”“,

P_BRANDvarchar(100)NOTNULLCOMMENT”“,

P_COLORvarchar(100)NOTNULLCOMMENT”“,

P_TYPEvarchar(100)NOTNULLCOMMENT”“,

P_SIZEtinyint(4)NOTNULLCOMMENT”“,

P_CONTAINERvarchar(100)NOTNULLCOMMENT”"

)ENGINE=OLAP

DUPLICATEKEY(LO_ORDERDATE,LO_ORDERKEY)

COMMENT"OLAP"

PARTITIONBY RANGE(LO_ORDERDATE)

(PARTITION p1 VALUES[(‘0000-01-01’),(‘1993-01-01’)),

PARTITION p2 VALUES[(‘1993-01-01’),(‘1994-01-01’)),

PARTITION p3 VALUES[(‘1994-01-01’),(‘1995-01-01’)),

PARTITION p4 VALUES[(‘1995-01-01’),(‘1996-01-01’)),

PARTITION p5 VALUES[(‘1996-01-01’),(‘1997-01-01’)),

PARTITION p6 VALUES[(‘1997-01-01’),(‘1998-01-01’)),

PARTITION p7 VALUES[(‘1998-01-01’),(‘1999-01-01’)))

DISTRIBUTEDBYHASH(LO_ORDERKEY) BUCKETS 48

PROPERTIES (

“replication_num”=“1”,

“colocate_with”=“groupxx1”,

“in_memory”=“false”,

“storage_format”=“DEFAULT”

);

5、导入数据

我们使用以下命令完成 SSB 测试集所有数据导入及 SSB FLAT 宽表数据合成并导入到表里。

sh bin/load-ssb-data.sh -c 10

-c 10 表示启动 10 个并发线程导入(默认为 5)。在单 BE 节点情况下,由 sh gen-ssb-data.sh -s 100 -c 100 生成的 lineorder 数据,同时会在最后生成ssb-flat表的数据,如果开启更多线程,可以加快导入速度,但会增加额外的内存开销。

注:

- 为获得更快的导入速度,你可以在 be.conf 中添加 flush_thread_num_per_store=10 后重启BE。该配置表示每个数据目录的写盘线程数,默认为6。较大的数据可以提升写数据吞吐,但可能会增加 IO Util。(参考值:1块机械磁盘,在默认为2的情况下,导入过程中的 IO Util 约为12%,设置为5时,IO Util 约为26%。如果是 SSD 盘,则几乎为 0)。

- flat 表数据采用 'INSERT INTO … SELECT … ’ 的方式导入。

运行成功的日志如下

#只复制了部分

➜ ssb-tools sh bin/load-ssb-data.sh -c 10

Parallelism: 10

curl 7.29.0 (x86_64-redhat-linux-gnu) libcurl/7.29.0 NSS/3.53.1 zlib/1.2.7 libidn/1.28 libssh2/1.8.0

Protocols: dict file ftp ftps gopher http https imap imaps ldap ldaps pop3 pop3s rtsp scp sftp smtp smtps telnet tftp

Features: AsynchDNS GSS-Negotiate IDN IPv6 Largefile NTLM NTLM_WB SSL libz unix-sockets

FE_HOST: 10.10.1.7

FE_HTTP_PORT: 8030

USER: root

DB: ssb

Start time: Fri Jun 9 16:56:20 CST 2023

==========Start to load data into ssb tables==========

Loading data for table: part

{

"TxnId": 64,

"Label": "d5143f1e-13cd-4875-9d93-becdb37df6fa",

"TwoPhaseCommit": "false",

"Status": "Success",

"Message": "OK",

"NumberTotalRows": 1400000,

"NumberLoadedRows": 1400000,

"NumberFilteredRows": 0,

"NumberUnselectedRows": 0,

"LoadBytes": 121042413,

"LoadTimeMs": 2700,

"BeginTxnTimeMs": 158,

"StreamLoadPutTimeMs": 558,

"ReadDataTimeMs": 1041,

"WriteDataTimeMs": 1766,

"CommitAndPublishTimeMs": 214

}

Loading data for table: date

{

"TxnId": 65,

"Label": "6cdc86b6-828a-48ac-8ae8-2aef1d611bce",

"TwoPhaseCommit": "false",

"Status": "Success",

"Message": "OK",

"NumberTotalRows": 2556,

"NumberLoadedRows": 2556,

"NumberFilteredRows": 0,

"NumberUnselectedRows": 0,

"LoadBytes": 229965,

"LoadTimeMs": 97,

"BeginTxnTimeMs": 0,

"StreamLoadPutTimeMs": 4,

"ReadDataTimeMs": 0,

"WriteDataTimeMs": 72,

"CommitAndPublishTimeMs": 17

}

Loading data for table: supplier

{

"TxnId": 66,

"Label": "819e2ee0-59a3-49c9-94d0-dac265e6df3f",

"TwoPhaseCommit": "false",

"Status": "Success",

"Message": "OK",

"NumberTotalRows": 200000,

"NumberLoadedRows": 200000,

"NumberFilteredRows": 0,

"NumberUnselectedRows": 0,

"LoadBytes": 17062852,

"LoadTimeMs": 319,

"BeginTxnTimeMs": 1,

"StreamLoadPutTimeMs": 3,

"ReadDataTimeMs": 61,

"WriteDataTimeMs": 293,

"CommitAndPublishTimeMs": 19

}

Loading data for table: customer

{

"TxnId": 67,

"Label": "f3c48ce1-fd8c-4540-af5f-8340461ecb48",

"TwoPhaseCommit": "false",

"Status": "Success",

"Message": "OK",

"NumberTotalRows": 3000000,

"NumberLoadedRows": 3000000,

"NumberFilteredRows": 0,

"NumberUnselectedRows": 0,

"LoadBytes": 289529327,

"LoadTimeMs": 3033,

"BeginTxnTimeMs": 0,

"StreamLoadPutTimeMs": 5,

"ReadDataTimeMs": 1801,

"WriteDataTimeMs": 3002,

"CommitAndPublishTimeMs": 24

}

6、检查导入数据

select count(*) from part;

select count(*) from customer;

select count(*) from supplier;

select count(*) from dates;

select count(*) from lineorder;

select count(*) from lineorder_flat;

| Table | Rows | 备注 |

|---|---|---|

| lineorder_flat | 0(0) | 内存不够 |

| lineorder | 5亿(594038733) | 60 GB |

| customer | 300万(3000000) | 277 MB |

| part | 140万(1400000) | 116 MB |

| supplier | 20万(200000) | 17 MB |

| dates | 2556 | 228 KB |

7、SSB 标准测试 SQL

--Q1.1

SELECT SUM(lo_extendedprice * lo_discount) AS REVENUE

FROM lineorder, dates

WHERE

lo_orderdate = d_datekey

AND d_year = 1993

AND lo_discount BETWEEN 1 AND 3

AND lo_quantity < 25;

--Q1.2

SELECT SUM(lo_extendedprice * lo_discount) AS REVENUE

FROM lineorder, dates

WHERE

lo_orderdate = d_datekey

AND d_yearmonth = 'Jan1994'

AND lo_discount BETWEEN 4 AND 6

AND lo_quantity BETWEEN 26 AND 35;

--Q1.3

SELECT

SUM(lo_extendedprice * lo_discount) AS REVENUE

FROM lineorder, dates

WHERE

lo_orderdate = d_datekey

AND d_weeknuminyear = 6

AND d_year = 1994

AND lo_discount BETWEEN 5 AND 7

AND lo_quantity BETWEEN 26 AND 35;

--Q2.1

SELECT SUM(lo_revenue), d_year, p_brand

FROM lineorder, dates, part, supplier

WHERE

lo_orderdate = d_datekey

AND lo_partkey = p_partkey

AND lo_suppkey = s_suppkey

AND p_category = 'MFGR#12'

AND s_region = 'AMERICA'

GROUP BY d_year, p_brand

ORDER BY p_brand;

--Q2.2

SELECT SUM(lo_revenue), d_year, p_brand

FROM lineorder, dates, part, supplier

WHERE

lo_orderdate = d_datekey

AND lo_partkey = p_partkey

AND lo_suppkey = s_suppkey

AND p_brand BETWEEN 'MFGR#2221' AND 'MFGR#2228'

AND s_region = 'ASIA'

GROUP BY d_year, p_brand

ORDER BY d_year, p_brand;

--Q2.3

SELECT SUM(lo_revenue), d_year, p_brand

FROM lineorder, dates, part, supplier

WHERE

lo_orderdate = d_datekey

AND lo_partkey = p_partkey

AND lo_suppkey = s_suppkey

AND p_brand = 'MFGR#2239'

AND s_region = 'EUROPE'

GROUP BY d_year, p_brand

ORDER BY d_year, p_brand;

--Q3.1

SELECT

c_nation,

s_nation,

d_year,

SUM(lo_revenue) AS REVENUE

FROM customer, lineorder, supplier, dates

WHERE

lo_custkey = c_custkey

AND lo_suppkey = s_suppkey

AND lo_orderdate = d_datekey

AND c_region = 'ASIA'

AND s_region = 'ASIA'

AND d_year >= 1992

AND d_year <= 1997

GROUP BY c_nation, s_nation, d_year

ORDER BY d_year ASC, REVENUE DESC;

--Q3.2

SELECT

c_city,

s_city,

d_year,

SUM(lo_revenue) AS REVENUE

FROM customer, lineorder, supplier, dates

WHERE

lo_custkey = c_custkey

AND lo_suppkey = s_suppkey

AND lo_orderdate = d_datekey

AND c_nation = 'UNITED STATES'

AND s_nation = 'UNITED STATES'

AND d_year >= 1992

AND d_year <= 1997

GROUP BY c_city, s_city, d_year

ORDER BY d_year ASC, REVENUE DESC;

--Q3.3

SELECT

c_city,

s_city,

d_year,

SUM(lo_revenue) AS REVENUE

FROM customer, lineorder, supplier, dates

WHERE

lo_custkey = c_custkey

AND lo_suppkey = s_suppkey

AND lo_orderdate = d_datekey

AND (

c_city = 'UNITED KI1'

OR c_city = 'UNITED KI5'

)

AND (

s_city = 'UNITED KI1'

OR s_city = 'UNITED KI5'

)

AND d_year >= 1992

AND d_year <= 1997

GROUP BY c_city, s_city, d_year

ORDER BY d_year ASC, REVENUE DESC;

--Q3.4

SELECT

c_city,

s_city,

d_year,

SUM(lo_revenue) AS REVENUE

FROM customer, lineorder, supplier, dates

WHERE

lo_custkey = c_custkey

AND lo_suppkey = s_suppkey

AND lo_orderdate = d_datekey

AND (

c_city = 'UNITED KI1'

OR c_city = 'UNITED KI5'

)

AND (

s_city = 'UNITED KI1'

OR s_city = 'UNITED KI5'

)

AND d_yearmonth = 'Dec1997'

GROUP BY c_city, s_city, d_year

ORDER BY d_year ASC, REVENUE DESC;

--Q4.1

SELECT /*+SET_VAR(parallel_fragment_exec_instance_num=4, enable_vectorized_engine=true, batch_size=4096, enable_cost_based_join_reorder=true, enable_projection=true) */

d_year,

c_nation,

SUM(lo_revenue - lo_supplycost) AS PROFIT

FROM dates, customer, supplier, part, lineorder

WHERE

lo_custkey = c_custkey

AND lo_suppkey = s_suppkey

AND lo_partkey = p_partkey

AND lo_orderdate = d_datekey

AND c_region = 'AMERICA'

AND s_region = 'AMERICA'

AND (

p_mfgr = 'MFGR#1'

OR p_mfgr = 'MFGR#2'

)

GROUP BY d_year, c_nation

ORDER BY d_year, c_nation;

--Q4.2

SELECT /*+SET_VAR(parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, enable_cost_based_join_reorder=true, enable_projection=true) */

d_year,

s_nation,

p_category,

SUM(lo_revenue - lo_supplycost) AS PROFIT

FROM dates, customer, supplier, part, lineorder

WHERE

lo_custkey = c_custkey

AND lo_suppkey = s_suppkey

AND lo_partkey = p_partkey

AND lo_orderdate = d_datekey

AND c_region = 'AMERICA'

AND s_region = 'AMERICA'

AND (

d_year = 1997

OR d_year = 1998

)

AND (

p_mfgr = 'MFGR#1'

OR p_mfgr = 'MFGR#2'

)

GROUP BY d_year, s_nation, p_category

ORDER BY d_year, s_nation, p_category;

--Q4.3

SELECT /*+SET_VAR(parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, enable_cost_based_join_reorder=true, enable_projection=true) */

d_year,

s_city,

p_brand,

SUM(lo_revenue - lo_supplycost) AS PROFIT

FROM dates, customer, supplier, part, lineorder

WHERE

lo_custkey = c_custkey

AND lo_suppkey = s_suppkey

AND lo_partkey = p_partkey

AND lo_orderdate = d_datekey

AND s_nation = 'UNITED STATES'

AND (

d_year = 1997

OR d_year = 1998

)

AND p_category = 'MFGR#14'

GROUP BY d_year, s_city, p_brand

ORDER BY d_year, s_city, p_brand;

8、测试结构表

| 测试语句 | 执行最高耗时(ms) | 执行最低耗时(ms) | 备注 |

|---|---|---|---|

| Q1.1 | 1312 | 203 | |

| Q1.2 | 1404 | 158 | |

| Q1.3 | 224 | 158 | |

| Q2.1 | 10097 | 1179 | |

| Q2.2 | 1239 | 1162 | |

| Q2.3 | 1228 | 1098 | |

| Q3.1 | 3258 | 2738 | |

| Q3.2 | 1111 | 1075 | |

| Q3.3 | 1254 | 987 | |

| Q3.4 | 213 | 167 | |

| Q4.1 | 2467 | 2128 | |

| Q4.2 | 841 | 751 | |

| Q4.3 | 980 | 875 |

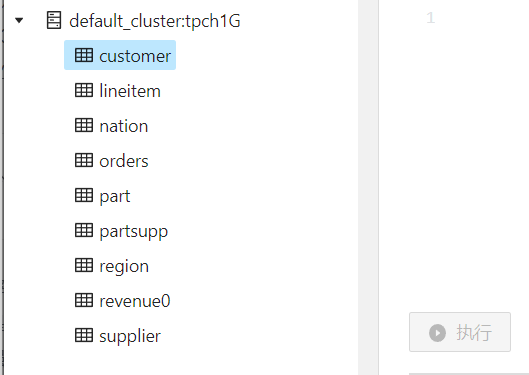

tpc-h数据集测试

1、数据准备

1.1 载安装 TPC-H 数据生成工具

执行以下脚本下载并编译 tpch-tools 工具。

sh build-tpch-dbgen.sh

安装成功后,将在 TPC-H_Tools_v3.0.0/ 目录下生成 dbgen 二进制文件。

1.2 生成 TPC-H 测试集

执行以下脚本生成 TPC-H 数据集:

sh gen-tpch-data.sh

注1:通过 sh gen-tpch-data.sh -h 查看脚本帮助。

注2:数据会以 .tbl 为后缀生成在 tpch-data/ 目录下。文件总大小约100GB。生成时间可能在数分钟到1小时不等。

注3:默认生成 100G 的标准测试数据集

2 建表

2.1 准备 doris-cluster.conf 文件

在调用导入脚本前,需要将 FE 的 ip 端口等信息写在 doris-cluster.conf 文件中。

文件位置在 ${DORIS_HOME}/tools/tpch-tools/conf/ 目录下。

文件内容包括 FE 的 ip,HTTP 端口,用户名,密码以及待导入数据的 DB 名称:

# Any of FE host

export FE_HOST='127.0.0.1'

# http_port in fe.conf

export FE_HTTP_PORT=8030

# query_port in fe.conf

export FE_QUERY_PORT=9030

# Doris username

export USER='root'

# Doris password

export PASSWORD=''

# The database where TPC-H tables located

export DB='tpch1'

2.2 执行以下脚本生成创建 TPC-H 表

sh create-tpch-tables.sh

或者复制 create-tpch-tables.sql 中的建表语句,在 Doris 中执行。

3 导入数据

通过下面的命令执行数据导入:

sh ./load-tpch-data.sh

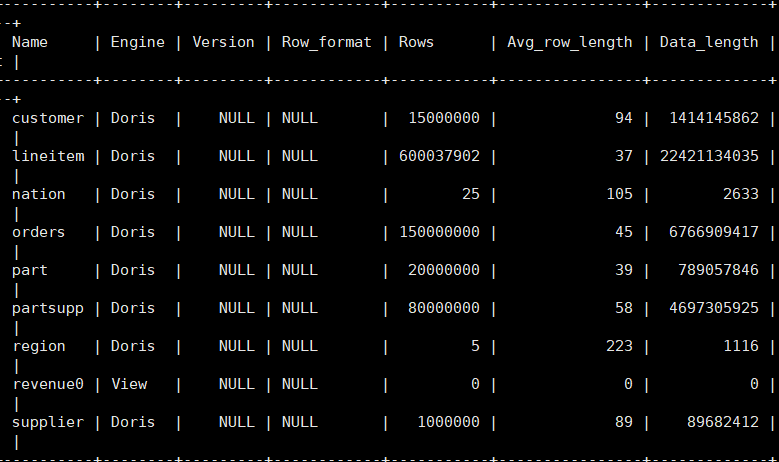

4 数据验证

| TPC-H表名 | 行数 | 导入后大小 | 备注 |

|---|---|---|---|

| REGION | 5 | 400KB | 区域表 |

| NATION | 25 | 7.714 KB | 国家表 |

| SUPPLIER | 100万 | 85.528 MB | 供应商表 |

| PART | 2000万 | 752.330 MB | 零部件表 |

| PARTSUPP | 8000万 | 4.375 GB | 零部件供应表 |

| CUSTOMER | 1500万 | 1.317 GB | 客户表 |

| ORDERS | 1.5亿 | 6.301 GB | 订单表 |

| LINEITEM | 6亿 | 20.882 GB | 订单明细表 |

5 单个 SQL 执行

5.1 执行语句

下面是测试时使用的 SQL 语句,你也可以从代码库里获取最新的 SQL 。最新测试查询语句地址:TPC-H 测试查询语句

--Q1

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=8, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=false, enable_projection=false) */

l_returnflag,

l_linestatus,

sum(l_quantity) as sum_qty,

sum(l_extendedprice) as sum_base_price,

sum(l_extendedprice * (1 - l_discount)) as sum_disc_price,

sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) as sum_charge,

avg(l_quantity) as avg_qty,

avg(l_extendedprice) as avg_price,

avg(l_discount) as avg_disc,

count(*) as count_order

from

lineitem

where

l_shipdate <= date '1998-12-01' - interval '90' day

group by

l_returnflag,

l_linestatus

order by

l_returnflag,

l_linestatus;

--Q2

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=1, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=false, enable_projection=true) */

s_acctbal,

s_name,

n_name,

p_partkey,

p_mfgr,

s_address,

s_phone,

s_comment

from

partsupp join

(

select

ps_partkey as a_partkey,

min(ps_supplycost) as a_min

from

partsupp,

part,

supplier,

nation,

region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE'

and p_size = 15

and p_type like '%BRASS'

group by a_partkey

) A on ps_partkey = a_partkey and ps_supplycost=a_min ,

part,

supplier,

nation,

region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and p_size = 15

and p_type like '%BRASS'

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE'

order by

s_acctbal desc,

n_name,

s_name,

p_partkey

limit 100;

--Q3

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=8, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=false, enable_projection=true, runtime_filter_wait_time_ms=10000) */

l_orderkey,

sum(l_extendedprice * (1 - l_discount)) as revenue,

o_orderdate,

o_shippriority

from

(

select l_orderkey, l_extendedprice, l_discount, o_orderdate, o_shippriority, o_custkey from

lineitem join orders

where l_orderkey = o_orderkey

and o_orderdate < date '1995-03-15'

and l_shipdate > date '1995-03-15'

) t1 join customer c

on c.c_custkey = t1.o_custkey

where c_mktsegment = 'BUILDING'

group by

l_orderkey,

o_orderdate,

o_shippriority

order by

revenue desc,

o_orderdate

limit 10;

--Q4

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=4, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=false, enable_projection=true) */

o_orderpriority,

count(*) as order_count

from

(

select

*

from

lineitem

where l_commitdate < l_receiptdate

) t1

right semi join orders

on t1.l_orderkey = o_orderkey

where

o_orderdate >= date '1993-07-01'

and o_orderdate < date '1993-07-01' + interval '3' month

group by

o_orderpriority

order by

o_orderpriority;

--Q5

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=8, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=false, enable_projection=true) */

n_name,

sum(l_extendedprice * (1 - l_discount)) as revenue

from

customer,

orders,

lineitem,

supplier,

nation,

region

where

c_custkey = o_custkey

and l_orderkey = o_orderkey

and l_suppkey = s_suppkey

and c_nationkey = s_nationkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'ASIA'

and o_orderdate >= date '1994-01-01'

and o_orderdate < date '1994-01-01' + interval '1' year

group by

n_name

order by

revenue desc;

--Q6

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=1, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=false, enable_projection=true) */

sum(l_extendedprice * l_discount) as revenue

from

lineitem

where

l_shipdate >= date '1994-01-01'

and l_shipdate < date '1994-01-01' + interval '1' year

and l_discount between .06 - 0.01 and .06 + 0.01

and l_quantity < 24;

--Q7

select /*+SET_VAR(exec_mem_limit=458589934592, parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=false, enable_projection=true) */

supp_nation,

cust_nation,

l_year,

sum(volume) as revenue

from

(

select

n1.n_name as supp_nation,

n2.n_name as cust_nation,

extract(year from l_shipdate) as l_year,

l_extendedprice * (1 - l_discount) as volume

from

supplier,

lineitem,

orders,

customer,

nation n1,

nation n2

where

s_suppkey = l_suppkey

and o_orderkey = l_orderkey

and c_custkey = o_custkey

and s_nationkey = n1.n_nationkey

and c_nationkey = n2.n_nationkey

and (

(n1.n_name = 'FRANCE' and n2.n_name = 'GERMANY')

or (n1.n_name = 'GERMANY' and n2.n_name = 'FRANCE')

)

and l_shipdate between date '1995-01-01' and date '1996-12-31'

) as shipping

group by

supp_nation,

cust_nation,

l_year

order by

supp_nation,

cust_nation,

l_year;

--Q8

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=8, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=false, enable_projection=true) */

o_year,

sum(case

when nation = 'BRAZIL' then volume

else 0

end) / sum(volume) as mkt_share

from

(

select

extract(year from o_orderdate) as o_year,

l_extendedprice * (1 - l_discount) as volume,

n2.n_name as nation

from

lineitem,

orders,

customer,

supplier,

part,

nation n1,

nation n2,

region

where

p_partkey = l_partkey

and s_suppkey = l_suppkey

and l_orderkey = o_orderkey

and o_custkey = c_custkey

and c_nationkey = n1.n_nationkey

and n1.n_regionkey = r_regionkey

and r_name = 'AMERICA'

and s_nationkey = n2.n_nationkey

and o_orderdate between date '1995-01-01' and date '1996-12-31'

and p_type = 'ECONOMY ANODIZED STEEL'

) as all_nations

group by

o_year

order by

o_year;

--Q9

select/*+SET_VAR(exec_mem_limit=37179869184, parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=false, enable_projection=true, enable_remove_no_conjuncts_runtime_filter_policy=true, runtime_filter_wait_time_ms=100000) */

nation,

o_year,

sum(amount) as sum_profit

from

(

select

n_name as nation,

extract(year from o_orderdate) as o_year,

l_extendedprice * (1 - l_discount) - ps_supplycost * l_quantity as amount

from

lineitem join orders on o_orderkey = l_orderkey

join[shuffle] part on p_partkey = l_partkey

join[shuffle] partsupp on ps_partkey = l_partkey

join[shuffle] supplier on s_suppkey = l_suppkey

join[broadcast] nation on s_nationkey = n_nationkey

where

ps_suppkey = l_suppkey and

p_name like '%green%'

) as profit

group by

nation,

o_year

order by

nation,

o_year desc;

--Q10

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=4, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=false, enable_projection=true) */

c_custkey,

c_name,

sum(t1.l_extendedprice * (1 - t1.l_discount)) as revenue,

c_acctbal,

n_name,

c_address,

c_phone,

c_comment

from

customer,

(

select o_custkey,l_extendedprice,l_discount from lineitem, orders

where l_orderkey = o_orderkey

and o_orderdate >= date '1993-10-01'

and o_orderdate < date '1993-10-01' + interval '3' month

and l_returnflag = 'R'

) t1,

nation

where

c_custkey = t1.o_custkey

and c_nationkey = n_nationkey

group by

c_custkey,

c_name,

c_acctbal,

c_phone,

n_name,

c_address,

c_comment

order by

revenue desc

limit 20;

--Q11

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=true, enable_projection=true) */

ps_partkey,

sum(ps_supplycost * ps_availqty) as value

from

partsupp,

(

select s_suppkey

from supplier, nation

where s_nationkey = n_nationkey and n_name = 'GERMANY'

) B

where

ps_suppkey = B.s_suppkey

group by

ps_partkey having

sum(ps_supplycost * ps_availqty) > (

select

sum(ps_supplycost * ps_availqty) * 0.000002

from

partsupp,

(select s_suppkey

from supplier, nation

where s_nationkey = n_nationkey and n_name = 'GERMANY'

) A

where

ps_suppkey = A.s_suppkey

)

order by

value desc;

--Q12

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=true, enable_projection=true) */

l_shipmode,

sum(case

when o_orderpriority = '1-URGENT'

or o_orderpriority = '2-HIGH'

then 1

else 0

end) as high_line_count,

sum(case

when o_orderpriority <> '1-URGENT'

and o_orderpriority <> '2-HIGH'

then 1

else 0

end) as low_line_count

from

orders,

lineitem

where

o_orderkey = l_orderkey

and l_shipmode in ('MAIL', 'SHIP')

and l_commitdate < l_receiptdate

and l_shipdate < l_commitdate

and l_receiptdate >= date '1994-01-01'

and l_receiptdate < date '1994-01-01' + interval '1' year

group by

l_shipmode

order by

l_shipmode;

--Q13

select /*+SET_VAR(exec_mem_limit=45899345920, parallel_fragment_exec_instance_num=16, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=true, enable_projection=true) */

c_count,

count(*) as custdist

from

(

select

c_custkey,

count(o_orderkey) as c_count

from

orders right outer join customer on

c_custkey = o_custkey

and o_comment not like '%special%requests%'

group by

c_custkey

) as c_orders

group by

c_count

order by

custdist desc,

c_count desc;

--Q14

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=8, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=true, enable_projection=true, runtime_filter_mode=OFF) */

100.00 * sum(case

when p_type like 'PROMO%'

then l_extendedprice * (1 - l_discount)

else 0

end) / sum(l_extendedprice * (1 - l_discount)) as promo_revenue

from

part,

lineitem

where

l_partkey = p_partkey

and l_shipdate >= date '1995-09-01'

and l_shipdate < date '1995-09-01' + interval '1' month;

--Q15

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=8, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=true, enable_projection=true) */

s_suppkey,

s_name,

s_address,

s_phone,

total_revenue

from

supplier,

revenue0

where

s_suppkey = supplier_no

and total_revenue = (

select

max(total_revenue)

from

revenue0

)

order by

s_suppkey;

--Q16

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=8, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=true, enable_projection=true) */

p_brand,

p_type,

p_size,

count(distinct ps_suppkey) as supplier_cnt

from

partsupp,

part

where

p_partkey = ps_partkey

and p_brand <> 'Brand#45'

and p_type not like 'MEDIUM POLISHED%'

and p_size in (49, 14, 23, 45, 19, 3, 36, 9)

and ps_suppkey not in (

select

s_suppkey

from

supplier

where

s_comment like '%Customer%Complaints%'

)

group by

p_brand,

p_type,

p_size

order by

supplier_cnt desc,

p_brand,

p_type,

p_size;

--Q17

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=1, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=true, enable_projection=true) */

sum(l_extendedprice) / 7.0 as avg_yearly

from

lineitem join [broadcast]

part p1 on p1.p_partkey = l_partkey

where

p1.p_brand = 'Brand#23'

and p1.p_container = 'MED BOX'

and l_quantity < (

select

0.2 * avg(l_quantity)

from

lineitem join [broadcast]

part p2 on p2.p_partkey = l_partkey

where

l_partkey = p1.p_partkey

and p2.p_brand = 'Brand#23'

and p2.p_container = 'MED BOX'

);

--Q18

select /*+SET_VAR(exec_mem_limit=45899345920, parallel_fragment_exec_instance_num=4, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=true, enable_projection=true) */

c_name,

c_custkey,

t3.o_orderkey,

t3.o_orderdate,

t3.o_totalprice,

sum(t3.l_quantity)

from

customer join

(

select * from

lineitem join

(

select * from

orders left semi join

(

select

l_orderkey

from

lineitem

group by

l_orderkey having sum(l_quantity) > 300

) t1

on o_orderkey = t1.l_orderkey

) t2

on t2.o_orderkey = l_orderkey

) t3

on c_custkey = t3.o_custkey

group by

c_name,

c_custkey,

t3.o_orderkey,

t3.o_orderdate,

t3.o_totalprice

order by

t3.o_totalprice desc,

t3.o_orderdate

limit 100;

--Q19

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=false, enable_cost_based_join_reorder=false, enable_projection=true) */

sum(l_extendedprice* (1 - l_discount)) as revenue

from

lineitem,

part

where

(

p_partkey = l_partkey

and p_brand = 'Brand#12'

and p_container in ('SM CASE', 'SM BOX', 'SM PACK', 'SM PKG')

and l_quantity >= 1 and l_quantity <= 1 + 10

and p_size between 1 and 5

and l_shipmode in ('AIR', 'AIR REG')

and l_shipinstruct = 'DELIVER IN PERSON'

)

or

(

p_partkey = l_partkey

and p_brand = 'Brand#23'

and p_container in ('MED BAG', 'MED BOX', 'MED PKG', 'MED PACK')

and l_quantity >= 10 and l_quantity <= 10 + 10

and p_size between 1 and 10

and l_shipmode in ('AIR', 'AIR REG')

and l_shipinstruct = 'DELIVER IN PERSON'

)

or

(

p_partkey = l_partkey

and p_brand = 'Brand#34'

and p_container in ('LG CASE', 'LG BOX', 'LG PACK', 'LG PKG')

and l_quantity >= 20 and l_quantity <= 20 + 10

and p_size between 1 and 15

and l_shipmode in ('AIR', 'AIR REG')

and l_shipinstruct = 'DELIVER IN PERSON'

);

--Q20

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=2, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=true, enable_projection=true, runtime_bloom_filter_size=551943) */

s_name, s_address from

supplier left semi join

(

select * from

(

select l_partkey,l_suppkey, 0.5 * sum(l_quantity) as l_q

from lineitem

where l_shipdate >= date '1994-01-01'

and l_shipdate < date '1994-01-01' + interval '1' year

group by l_partkey,l_suppkey

) t2 join

(

select ps_partkey, ps_suppkey, ps_availqty

from partsupp left semi join part

on ps_partkey = p_partkey and p_name like 'forest%'

) t1

on t2.l_partkey = t1.ps_partkey and t2.l_suppkey = t1.ps_suppkey

and t1.ps_availqty > t2.l_q

) t3

on s_suppkey = t3.ps_suppkey

join nation

where s_nationkey = n_nationkey

and n_name = 'CANADA'

order by s_name;

--Q21

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=4, enable_vectorized_engine=true, batch_size=4096, disable_join_reorder=true, enable_cost_based_join_reorder=true, enable_projection=true) */

s_name, count(*) as numwait

from

lineitem l2 right semi join

(

select * from

lineitem l3 right anti join

(

select * from

orders join lineitem l1 on l1.l_orderkey = o_orderkey and o_orderstatus = 'F'

join

(

select * from

supplier join nation

where s_nationkey = n_nationkey

and n_name = 'SAUDI ARABIA'

) t1

where t1.s_suppkey = l1.l_suppkey and l1.l_receiptdate > l1.l_commitdate

) t2

on l3.l_orderkey = t2.l_orderkey and l3.l_suppkey <> t2.l_suppkey and l3.l_receiptdate > l3.l_commitdate

) t3

on l2.l_orderkey = t3.l_orderkey and l2.l_suppkey <> t3.l_suppkey

group by

t3.s_name

order by

numwait desc,

t3.s_name

limit 100;

--Q22

with tmp as (select

avg(c_acctbal) as av

from

customer

where

c_acctbal > 0.00

and substring(c_phone, 1, 2) in

('13', '31', '23', '29', '30', '18', '17'))

select /*+SET_VAR(exec_mem_limit=8589934592, parallel_fragment_exec_instance_num=4,runtime_bloom_filter_size=4194304) */

cntrycode,

count(*) as numcust,

sum(c_acctbal) as totacctbal

from

(

select

substring(c_phone, 1, 2) as cntrycode,

c_acctbal

from

orders right anti join customer c on o_custkey = c.c_custkey join tmp on c.c_acctbal > tmp.av

where

substring(c_phone, 1, 2) in

('13', '31', '23', '29', '30', '18', '17')

) as custsale

group by

cntrycode

order by

cntrycode;

5.2 执行结果

| 测试语句 | 执行最高耗时(ms) | 执行最低耗时(ms) | 备注 |

|---|---|---|---|

| Q1 | 17244 | 8532 | |

| Q2 | 4474 | 755 | |

| Q3 | 6533 | 1905 | |

| Q4 | 3425 | 1935 | |

| Q5 | 4415 | 4020 | |

| Q6 | 501 | 468 | |

| Q7 | 1900 | 1750 | |

| Q8 | 3539 | 2833 | |

| Q9 | |||

| Q10 | 6012 | 4774 | |

| Q11 | 771 | 642 | |

| Q12 | 6611 | 5436 | |

| Q13 | 8069 | 7451 | |

| Q14 | 603 | 466 | |

| Q15 | 1179 | 981 | |

| Q16 | 2041 | 925 | |

| Q17 | 3471 | 2405 | |

| Q18 | 12202 | 9592 | |

| Q19 | 8979 | 2202 | |

| Q20 | 7665 | 1866 | |

| Q21 | 8968 | 7808 | |

| Q22 | 1340 | 1290 |