[Deep Learning] YOLOv8 Training Walkthrough and Hands-On Tutorial: SOTA Object Detection, Keypoint Regression

Contents

- Available resources

- Installation

- Model training (detection)

- Model prediction

- Model export

Available resources

https://github.com/ultralytics/ultralytics

Official tutorial: https://docs.ultralytics.com/modes/train/

Installation

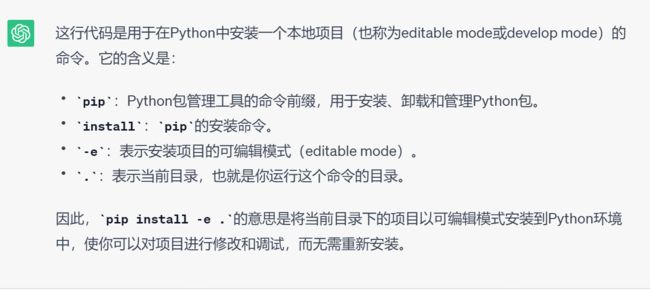

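I recommend cloning the repository and installing it with the command below, because that lets you modify the source code. If you do not need to change the source, a plain pip install ultralytics works as well.

pip install -e .

After this editable install you can modify the YOLOv8 source directly, and the changes take effect immediately without reinstalling. The figure below explains the command: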

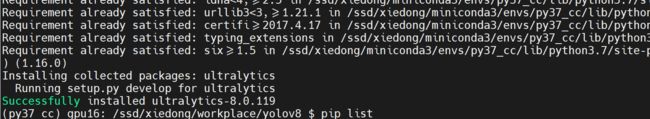

After a successful install, the package shows up in the output of pip list.
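Besides pip list, the install can be checked from Python. The following is a minimal sketch using only the standard library; the helper name `describe_install` is made up for this example. For an editable install, the reported location should point into the cloned repository rather than into site-packages.

```python
from importlib import metadata, util


def describe_install(package: str) -> dict:
    """Report whether `package` is importable, its version, and where it loads from."""
    spec = util.find_spec(package)
    if spec is None:
        return {"installed": False, "version": None, "location": None}
    try:
        version = metadata.version(package)
    except metadata.PackageNotFoundError:
        version = None  # importable, but no distribution metadata was found
    return {"installed": True, "version": version, "location": spec.origin}


if __name__ == "__main__":
    # Prints installed=False if ultralytics is missing instead of raising.
    print(describe_install("ultralytics"))
```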

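Model training (detection)

You can create a separate project that uses the installed ultralytics package. The source you modify then stays in its own project (the cloned repository), apart from your training scripts.

Download a demo dataset: https://ultralytics.com/assets/coco128.zip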
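The download and unpacking can also be scripted. This is a small sketch with the standard library only; the function names and the target directory in the usage note are placeholders chosen for this example, not part of ultralytics.

```python
import urllib.request
import zipfile
from pathlib import Path

DATASET_URL = "https://ultralytics.com/assets/coco128.zip"  # URL given in the text above


def download(url: str, dest: Path) -> Path:
    """Download `url` to `dest`, skipping the request if the file already exists."""
    if not dest.exists():
        dest.parent.mkdir(parents=True, exist_ok=True)
        urllib.request.urlretrieve(url, str(dest))
    return dest


def extract(zip_path: Path, out_dir: Path) -> list:
    """Unpack the archive into `out_dir` and return the names of its members."""
    out_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(out_dir)
        return archive.namelist()
```

A call such as `extract(download(DATASET_URL, Path("data/coco128.zip")), Path("data"))` would fetch the archive once and unpack it under a hypothetical `data/` directory.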

import os

# Select the physical GPU before anything initializes CUDA
os.environ["CUDA_VISIBLE_DEVICES"] = "2"

from ultralytics import YOLO

# Build the model from its YAML definition and transfer the pretrained weights
model = YOLO('yolov8n.yaml').load('yolov8n.pt')

# Train the model
model.train(data='/ssd/xiedong/workplace/yolov8_script/coco128.yaml', epochs=100, imgsz=640)
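A note on CUDA_VISIBLE_DEVICES: the process only sees the GPUs listed in the variable, and they are renumbered from 0. That is why the training log further down reports CUDA:0 even though physical GPU 2 was selected. The sketch below illustrates the renumbering for plain integer ids. It is a simplification (the real variable also accepts device UUIDs), and `visible_device_map` is a name invented for this example.

```python
def visible_device_map(cuda_visible_devices: str) -> dict:
    """Map the indices a process sees (0, 1, ...) to the physical ids listed in the variable."""
    physical_ids = [item.strip() for item in cuda_visible_devices.split(",") if item.strip()]
    return {logical: physical for logical, physical in enumerate(physical_ids)}


print(visible_device_map("2"))      # {0: '2'}
print(visible_device_map("2,0,3"))  # {0: '2', 1: '0', 2: '3'}
```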

The coco128.yaml file ships with the YOLOv8 source. Copy it into your project and change the dataset path to an absolute path.

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: /ssd/xiedong/workplace/yolov8_script/coco128 # dataset root dir
train: images/train2017 # train images (relative to 'path') 128 images
val: images/train2017 # val images (relative to 'path') 128 images
test: # test images (optional)

# Classes
names:
  0: person
  1: bicycle
  2: car
  3: motorcycle
  4: airplane
  5: bus
  6: train
  7: truck
  8: boat
  9: traffic light
  10: fire hydrant
  11: stop sign
  12: parking meter
  13: bench
  14: bird
  15: cat
  16: dog
  17: horse
  18: sheep
  19: cow
  20: elephant
  21: bear
  22: zebra
  23: giraffe
  24: backpack
  25: umbrella
  26: handbag
  27: tie
  28: suitcase
  29: frisbee
  30: skis
  31: snowboard
  32: sports ball
  33: kite
  34: baseball bat
  35: baseball glove
  36: skateboard
  37: surfboard
  38: tennis racket
  39: bottle
  40: wine glass
  41: cup
  42: fork
  43: knife
  44: spoon
  45: bowl
  46: banana
  47: apple
  48: sandwich
  49: orange
  50: broccoli
  51: carrot
  52: hot dog
  53: pizza
  54: donut
  55: cake
  56: chair
  57: couch
  58: potted plant
  59: bed
  60: dining table
  61: toilet
  62: tv
  63: laptop
  64: mouse
  65: remote
  66: keyboard
  67: cell phone
  68: microwave
  69: oven
  70: toaster
  71: sink
  72: refrigerator
  73: book
  74: clock
  75: vase
  76: scissors
  77: teddy bear
  78: hair drier
  79: toothbrush

# Download script/URL (optional)
download: https://ultralytics.com/assets/coco128.zip
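Changing the path entry to an absolute path can be done by hand, or with a few lines of Python. The sketch below edits the text directly with a regular expression, so comments and the rest of the file stay untouched. `set_dataset_root` is a hypothetical helper, and it assumes the dataset path itself contains no `#` character.

```python
import re
from pathlib import Path

# "path:" at the start of a line and its value, stopping before any trailing comment
_PATH_ENTRY = re.compile(r"^path:[ \t]*[^#\n]*?(?=[ \t]*(?:#.*)?$)", re.MULTILINE)


def set_dataset_root(yaml_text: str, root: str) -> str:
    """Return `yaml_text` with its top-level `path:` entry set to the absolute form of `root`."""
    absolute = Path(root).expanduser().resolve()
    # A function is used as the replacement so backslashes in Windows paths are kept literally.
    new_text, count = _PATH_ENTRY.subn(lambda _match: f"path: {absolute}", yaml_text, count=1)
    if count == 0:
        raise ValueError("no top-level 'path:' entry found")
    return new_text
```

Reading the copied coco128.yaml, passing its text through this function, and writing the result back gives a file that works regardless of the directory the training script is started from.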

Training then starts successfully, with output like this:

Transferred 355/355 items from pretrained weights

Ultralytics YOLOv8.0.119 Python-3.7.16 torch-1.12.1+cu116 CUDA:0 (NVIDIA A100-PCIE-40GB, 40390MiB)

WARNING ⚠️ Upgrade to torch>=2.0.0 for deterministic training.

yolo/engine/trainer: task=detect, mode=train, model=yolov8n.yaml, data=/ssd/xiedong/workplace/yolov8_script/coco128.yaml, epochs=100, patience=50, batch=16, imgsz=640, save=True, save_period=-1, cache=False, device=None, workers=8, project=None, name=None, exist_ok=False, pretrained=True, optimizer=auto, verbose=True, seed=0, deterministic=True, single_cls=False, rect=False, cos_lr=False, close_mosaic=0, resume=False, amp=True, fraction=1.0, profile=False, overlap_mask=True, mask_ratio=4, dropout=0.0, val=True, split=val, save_json=False, save_hybrid=False, conf=None, iou=0.7, max_det=300, half=False, dnn=False, plots=True, source=None, show=False, save_txt=False, save_conf=False, save_crop=False, show_labels=True, show_conf=True, vid_stride=1, line_width=None, visualize=False, augment=False, agnostic_nms=False, classes=None, retina_masks=False, boxes=True, format=torchscript, keras=False, optimize=False, int8=False, dynamic=False, simplify=False, opset=None, workspace=4, nms=False, lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=7.5, cls=0.5, dfl=1.5, pose=12.0, kobj=1.0, label_smoothing=0.0, nbs=64, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0, cfg=None, v5loader=False, tracker=botsort.yaml, save_dir=runs/detect/train5

from n params module arguments

0 -1 1 464 ultralytics.nn.modules.conv.Conv [3, 16, 3, 2]

1 -1 1 4672 ultralytics.nn.modules.conv.Conv [16, 32, 3, 2]

2 -1 1 7360 ultralytics.nn.modules.block.C2f [32, 32, 1, True]

3 -1 1 18560 ultralytics.nn.modules.conv.Conv [32, 64, 3, 2]

4 -1 2 49664 ultralytics.nn.modules.block.C2f [64, 64, 2, True]

5 -1 1 73984 ultralytics.nn.modules.conv.Conv [64, 128, 3, 2]

6 -1 2 197632 ultralytics.nn.modules.block.C2f [128, 128, 2, True]

7 -1 1 295424 ultralytics.nn.modules.conv.Conv [128, 256, 3, 2]

8 -1 1 460288 ultralytics.nn.modules.block.C2f [256, 256, 1, True]

9 -1 1 164608 ultralytics.nn.modules.block.SPPF [256, 256, 5]

10 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

11 [-1, 6] 1 0 ultralytics.nn.modules.conv.Concat [1]

12 -1 1 148224 ultralytics.nn.modules.block.C2f [384, 128, 1]

13 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

14 [-1, 4] 1 0 ultralytics.nn.modules.conv.Concat [1]

15 -1 1 37248 ultralytics.nn.modules.block.C2f [192, 64, 1]

16 -1 1 36992 ultralytics.nn.modules.conv.Conv [64, 64, 3, 2]

17 [-1, 12] 1 0 ultralytics.nn.modules.conv.Concat [1]

18 -1 1 123648 ultralytics.nn.modules.block.C2f [192, 128, 1]

19 -1 1 147712 ultralytics.nn.modules.conv.Conv [128, 128, 3, 2]

20 [-1, 9] 1 0 ultralytics.nn.modules.conv.Concat [1]

21 -1 1 493056 ultralytics.nn.modules.block.C2f [384, 256, 1]

22 [15, 18, 21] 1 897664 ultralytics.nn.modules.head.Detect [80, [64, 128, 256]]

YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs

Transferred 355/355 items from pretrained weights

TensorBoard: Start with 'tensorboard --logdir runs/detect/train5', view at http://localhost:6006/

AMP: running Automatic Mixed Precision (AMP) checks with YOLOv8n...

AMP: checks passed ✅

train: Scanning /ssd/xiedong/workplace/yolov8_script/coco128/labels/train2017...

train: New cache created: /ssd/xiedong/workplace/yolov8_script/coco128/labels/train2017.cache

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

val: Scanning /ssd/xiedong/workplace/yolov8_script/coco128/labels/train2017.cach

Plotting labels to runs/detect/train5/labels.jpg...

optimizer: AdamW(lr=0.000119, momentum=0.9) with parameter groups 57 weight(decay=0.0), 64 weight(decay=0.0005), 63 bias(decay=0.0)

Image sizes 640 train, 640 val

Using 8 dataloader workers

Logging results to runs/detect/train5

Starting training for 100 epochs...

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

1/100 2.55G 1.179 1.595 1.254 127 640: 1

Class Images Instances Box(P R mAP50 m

all 128 929 0.641 0.534 0.612 0.454

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

2/100 2.54G 1.249 1.534 1.247 209 640: 1

Class Images Instances Box(P R mAP50 m

all 128 929 0.693 0.532 0.632 0.469
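The params column in the layer table of the log can be checked by hand for the Conv rows. The sketch below assumes that an ultralytics Conv block is a bias-free convolution followed by batch normalization, and that the logged arguments are [input channels, output channels, kernel size, stride]. The stride does not affect the parameter count.

```python
def conv_params(c_in: int, c_out: int, kernel: int) -> int:
    """Parameters of a bias-free k x k convolution followed by batch normalization."""
    conv_weights = c_in * c_out * kernel * kernel
    batchnorm = 2 * c_out  # one learnable scale and one shift per output channel
    return conv_weights + batchnorm


# (layer index, [c_in, c_out, kernel, stride], params) copied from the log above
rows = [
    (0, [3, 16, 3, 2], 464),
    (1, [16, 32, 3, 2], 4672),
    (3, [32, 64, 3, 2], 18560),
    (5, [64, 128, 3, 2], 73984),
    (7, [128, 256, 3, 2], 295424),
]
for index, (c_in, c_out, kernel, _stride), logged in rows:
    assert conv_params(c_in, c_out, kernel) == logged, index
```

All five rows match, for example 3 × 16 × 3 × 3 + 2 × 16 = 464 for layer 0.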

Training arguments you can adjust:

| Key | Value | Description |
|---|---|---|
| model | None | path to model file, e.g. yolov8n.pt, yolov8n.yaml |
| data | None | path to data file, e.g. coco128.yaml |
| epochs | 100 | number of epochs to train for |
| patience | 50 | epochs to wait for no observable improvement for early stopping of training |
| batch | 16 | number of images per batch (-1 for AutoBatch) |
| imgsz | 640 | size of input images as integer or w,h |
| save | True | save train checkpoints and predict results |
| save_period | -1 | save checkpoint every x epochs (disabled if < 1) |
| cache | False | True/ram, disk or False. Use cache for data loading |
| device | None | device to run on, e.g. cuda device=0 or device=0,1,2,3 or device=cpu |
| workers | 8 | number of worker threads for data loading (per RANK if DDP) |
| project | None | project name |
| name | None | experiment name |
| exist_ok | False | whether to overwrite existing experiment |
| pretrained | False | whether to use a pretrained model |
| optimizer | 'auto' | optimizer to use, choices=[SGD, Adam, Adamax, AdamW, NAdam, RAdam, RMSProp, auto] |
| verbose | False | whether to print verbose output |
| seed | 0 | random seed for reproducibility |
| deterministic | True | whether to enable deterministic mode |
| single_cls | False | train multi-class data as single-class |
| rect | False | rectangular training with each batch collated for minimum padding |
| cos_lr | False | use cosine learning rate scheduler |
| close_mosaic | 0 | (int) disable mosaic augmentation for final epochs |
| resume | False | resume training from last checkpoint |
| amp | True | Automatic Mixed Precision (AMP) training, choices=[True, False] |
| fraction | 1.0 | dataset fraction to train on (default is 1.0, all images in train set) |
| profile | False | profile ONNX and TensorRT speeds during training for loggers |
| lr0 | 0.01 | initial learning rate (e.g. SGD=1E-2, Adam=1E-3) |
| lrf | 0.01 | final learning rate (lr0 * lrf) |
| momentum | 0.937 | SGD momentum/Adam beta1 |
| weight_decay | 0.0005 | optimizer weight decay 5e-4 |
| warmup_epochs | 3.0 | warmup epochs (fractions ok) |
| warmup_momentum | 0.8 | warmup initial momentum |
| warmup_bias_lr | 0.1 | warmup initial bias lr |
| box | 7.5 | box loss gain |
| cls | 0.5 | cls loss gain (scale with pixels) |
| dfl | 1.5 | dfl loss gain |
| pose | 12.0 | pose loss gain (pose-only) |
| kobj | 1.0 | keypoint obj loss gain (pose-only) |
| label_smoothing | 0.0 | label smoothing (fraction) |
| nbs | 64 | nominal batch size |
| overlap_mask | True | masks should overlap during training (segment train only) |
| mask_ratio | 4 | mask downsample ratio (segment train only) |
| dropout | 0.0 | use dropout regularization (classify train only) |
| val | True | validate/test during training |
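To make lr0, lrf and cos_lr more concrete, here is a sketch of the per-epoch learning rate multiplier. It follows my reading of the ultralytics trainer: a linear decay from 1.0 to lrf by default, and a cosine decay when cos_lr is enabled. Treat both formulas as assumptions and check them against the version you have installed. The second function reflects what optimizer='auto' appears to do for short runs, namely pick AdamW with a learning rate derived from the number of classes instead of lr0. For the 80 COCO classes it reproduces the lr=0.000119 shown in the training log.

```python
import math


def lr_factor(epoch: int, epochs: int, lrf: float, cos_lr: bool = False) -> float:
    """Multiplier applied to the initial learning rate at `epoch` (0-based)."""
    if cos_lr:
        # cosine decay from 1.0 down to lrf
        return ((1 - math.cos(epoch * math.pi / epochs)) / 2) * (lrf - 1.0) + 1.0
    # linear decay from 1.0 down to lrf
    return (1 - epoch / epochs) * (1.0 - lrf) + lrf


def auto_adamw_lr(num_classes: int) -> float:
    """Learning rate that optimizer='auto' appears to use when it selects AdamW (assumption)."""
    return round(0.002 * 5 / (4 + num_classes), 6)


lr0, lrf, epochs = 0.01, 0.01, 100
for epoch in (0, 50, 100):
    linear = lr0 * lr_factor(epoch, epochs, lrf)
    cosine = lr0 * lr_factor(epoch, epochs, lrf, cos_lr=True)
    print(epoch, linear, cosine)

print(auto_adamw_lr(80))  # 0.000119
```

With the defaults, the schedule ends at lr0 * lrf = 0.0001, which is the "final learning rate" the table describes.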

Model prediction

https://docs.ultralytics.com/modes/predict/

import os

# Select the physical GPU before anything initializes CUDA
os.environ["CUDA_VISIBLE_DEVICES"] = "2"

import cv2
from ultralytics import YOLO

# cv2.imread returns BGR arrays, the channel order ultralytics expects for numpy inputs,
# so the images are passed on without converting them to RGB
img = cv2.imread("img.png")
img_1 = cv2.imread("img_1.png")

model = YOLO('yolov8n.yaml').load('yolov8n.pt')  # build from YAML and transfer weights

inputs = [img, img_1]  # list of numpy arrays
results = model(inputs)  # list of Results objects

for image, result in zip(inputs, results):
    boxes = result.boxes  # Boxes object for bbox outputs
    masks = result.masks  # Masks object for segmentation outputs (None for a detection model)
    probs = result.probs  # class probabilities for classification outputs (None for a detection model)
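The Boxes object exposes the detections in several layouts, among them corner coordinates (xyxy) and center plus size (xywh), together with a confidence and a class index per box. The sketch below shows the relationship between the two layouts and a simple confidence filter on plain Python data. The detections are invented for illustration, not output from the model above.

```python
def xyxy_to_xywh(box):
    """Convert (x1, y1, x2, y2) corners to (center_x, center_y, width, height)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)


def keep_confident(detections, threshold=0.5):
    """Keep detections whose confidence is at least `threshold`."""
    return [d for d in detections if d["conf"] >= threshold]


# Hypothetical detections, shaped like rows read from result.boxes.xyxy, .conf and .cls
detections = [
    {"xyxy": (10.0, 20.0, 110.0, 220.0), "conf": 0.91, "cls": 0},
    {"xyxy": (50.0, 60.0, 70.0, 90.0), "conf": 0.32, "cls": 16},
]
for det in keep_confident(detections):
    print(det["cls"], xyxy_to_xywh(det["xyxy"]))  # 0 (60.0, 120.0, 100.0, 200.0)
```

With real results, `result.boxes.xyxy.tolist()`, `result.boxes.conf.tolist()` and `result.boxes.cls.tolist()` give the same kind of rows, and the class index maps to a name through the names section of the dataset YAML (0 is person, 16 is dog).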

Model export

https://docs.ultralytics.com/modes/export/#arguments

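import os

# Select the physical GPU before anything initializes CUDA
os.environ["CUDA_VISIBLE_DEVICES"] = "2"

from ultralytics import YOLO

# Build the model from its YAML definition and transfer the pretrained weights
model = YOLO('yolov8n.yaml').load('yolov8n.pt')

# Export the model to ONNX
model.export(format='onnx')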

The export succeeds:

from n params module arguments

0 -1 1 464 ultralytics.nn.modules.conv.Conv [3, 16, 3, 2]

1 -1 1 4672 ultralytics.nn.modules.conv.Conv [16, 32, 3, 2]

2 -1 1 7360 ultralytics.nn.modules.block.C2f [32, 32, 1, True]

3 -1 1 18560 ultralytics.nn.modules.conv.Conv [32, 64, 3, 2]

4 -1 2 49664 ultralytics.nn.modules.block.C2f [64, 64, 2, True]

5 -1 1 73984 ultralytics.nn.modules.conv.Conv [64, 128, 3, 2]

6 -1 2 197632 ultralytics.nn.modules.block.C2f [128, 128, 2, True]

7 -1 1 295424 ultralytics.nn.modules.conv.Conv [128, 256, 3, 2]

8 -1 1 460288 ultralytics.nn.modules.block.C2f [256, 256, 1, True]

9 -1 1 164608 ultralytics.nn.modules.block.SPPF [256, 256, 5]

10 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

11 [-1, 6] 1 0 ultralytics.nn.modules.conv.Concat [1]

12 -1 1 148224 ultralytics.nn.modules.block.C2f [384, 128, 1]

13 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

14 [-1, 4] 1 0 ultralytics.nn.modules.conv.Concat [1]

15 -1 1 37248 ultralytics.nn.modules.block.C2f [192, 64, 1]

16 -1 1 36992 ultralytics.nn.modules.conv.Conv [64, 64, 3, 2]

17 [-1, 12] 1 0 ultralytics.nn.modules.conv.Concat [1]

18 -1 1 123648 ultralytics.nn.modules.block.C2f [192, 128, 1]

19 -1 1 147712 ultralytics.nn.modules.conv.Conv [128, 128, 3, 2]

20 [-1, 9] 1 0 ultralytics.nn.modules.conv.Concat [1]

21 -1 1 493056 ultralytics.nn.modules.block.C2f [384, 256, 1]

22 [15, 18, 21] 1 897664 ultralytics.nn.modules.head.Detect [80, [64, 128, 256]]

YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs

Transferred 355/355 items from pretrained weights

Ultralytics YOLOv8.0.119 Python-3.7.16 torch-1.12.1+cu116 CPU

YOLOv8n summary (fused): 168 layers, 3151904 parameters, 0 gradients, 8.7 GFLOPs

PyTorch: starting from yolov8n.yaml with input shape (1, 3, 640, 640) BCHW and output shape(s) (1, 84, 8400) (0.0 MB)

ONNX: starting export with onnx 1.14.0 opset 10...

ONNX: export success ✅ 10.7s, saved as yolov8n.onnx (12.2 MB)

Export complete (28.4s)

Results saved to /ssd/xiedong/workplace/yolov8_script

Predict: yolo predict task=detect model=yolov8n.onnx imgsz=640

Validate: yolo val task=detect model=yolov8n.onnx imgsz=640 data=None

Visualize: https://netron.app
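The output shape (1, 84, 8400) reported in the export log can be derived with a little arithmetic. The 84 channels are 4 box coordinates plus one score for each of the 80 classes. The 8400 predictions are the grid cells of the three detection scales added together. The strides 8, 16 and 32 used below are an assumption about the three Detect inputs, and the function name is made up for this sketch.

```python
def detect_output_shape(imgsz: int, num_classes: int, strides=(8, 16, 32), batch: int = 1):
    """Shape (batch, channels, predictions) of the raw detection output."""
    channels = 4 + num_classes  # box coordinates + one score per class
    predictions = sum((imgsz // s) ** 2 for s in strides)  # one prediction per grid cell
    return (batch, channels, predictions)


print(detect_output_shape(640, 80))  # (1, 84, 8400)
```

For a 640 × 640 input this is 80² + 40² + 20² = 6400 + 1600 + 400 = 8400 predictions, which is what any post-processing of the ONNX output has to iterate over.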