Python爬虫初探——天涯

天涯论坛爬取全部博文,保存为word文档

-

- 一切工作从目录开始

- 每个文章的URL得到了,终于要开始爬文章和图片

-

- 图片下载出了一些大问题

- 调试阶段

一切工作从目录开始

[注:全部过程先看了一遍 https://www.jianshu.com/p/81a5da4fa161 简书,理清思路]

首先我们要从博主的主页面入手,将显示的博文一篇一篇爬取下来。主页面如下

(网页url: http://www.tianya.cn/6090416/bbs )

爬取目录文章的URL是我们的目标。重点在于我们如何从前一篇文章的url得到后一篇文章的URL,这样就可以完全实现全自动的爬取了。然后把爬取的文章url放在txt文档之后调用即可。

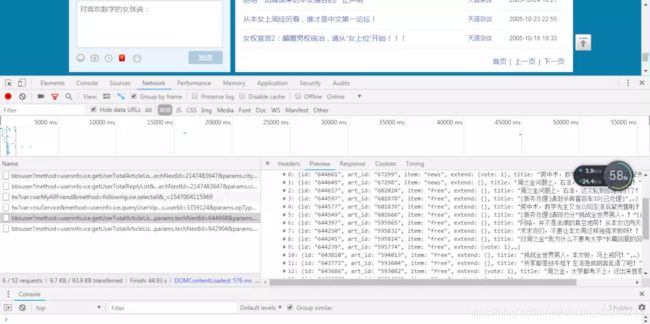

首先我遵从人类进化起源于懒惰这一至理名言,希望利用八爪鱼可视化爬虫软件完成第一步骤,遂卒。。。因为从调试中我们可以看到是无法直接读取目录信息的,是简书所说接口难寻,也就是,因为这部分是异步加载,需要我们去得到目录页的真实URL。F12打开network找到bbsuser后的hearder,Request URL: http://www.tianya.cn/api/bbsuser?method=userinfo.ice.getUserTotalArticleList¶ms.userId=6090416¶ms.pageSize=20¶ms.bMore=true¶ms.kindId=-1¶ms.publicNextId=2147483647¶ms.techNextId=2147483647¶ms.cityNextId=2147483647

这才是我们所需要的URL。

分析前后两篇文章的URL可以发现前后的URL是有联系的,即前一个的"public_next_id"字段就是后面一篇URL的params.publicNextId、params.techNextId、params.cityNextId的内容。于是可以正则表达提取合并从而得到全部文章的URL。代码如下:

# -*- coding: utf-8 -*-

"""

Created on Mon Jun 3 12:28:32 2019

@author: Administrator

"""

from pyspider.libs.base_handler import *

import urllib.request

import re

filepath ='D:\Ayu\\website.txt'

filehandle = open(filepath,'w')

art_list ='http://www.tianya.cn/api/bbsuser?method=userinfo.ice.getUserTotalArticleList¶ms.userId=6090416¶ms.pageSize=20¶ms.bMore=true¶ms.kindId=-1¶ms.publicNextId=2147483647¶ms.techNextId=2147483647¶ms.cityNextId=2147483647'

link = urllib.request.urlopen(art_list)

html = link.read().decode('utf-8')

#print (html)

get_id = re.compile(r'"art_id":"(.*?)","item"') #[\S\s]匹配任意字符

art_id = re.findall(get_id,html)

#print(len(art_id))

get_type = re.compile(r'"item":"(.*?)","extend"') #[\S\s]匹配任意字符

item_type = re.findall(get_type,html)

#print(item_type)

i=1

l=1

while l:

if len(art_id) !=0:

str1=""

for index in range(0,len(art_id)):

str1= 'http://bbs.tianya.cn/post-'+ item_type[index] +'-'+art_id[index]+'-1.shtml'

filehandle.write(str1+'\n')

print(str1)

get_nextid = re.compile(r'"public_next_id":"(.*?)","tech_next_id"')

next_id = re.findall(get_nextid,html)

#print(next_id)

next_list=""

next_list ='http://www.tianya.cn/api/bbsuser?method=userinfo.ice.getUserTotalArticleList¶ms.userId=6090416¶ms.pageSize=20¶ms.bMore=true¶ms.kindId=-1¶ms.publicNextId='+next_id[0]+'¶ms.techNextId='+next_id[0]+'¶ms.cityNextId='+next_id[0]

#print (art_list)

link = urllib.request.urlopen(next_list)

html = link.read().decode('utf-8')

get_id = re.compile(r'"art_id":"(.*?)","item"') #[\S\s]匹配任意字符

art_id = re.findall(get_id,html)

#print(len(art_id))

get_type = re.compile(r'"item":"(.*?)","extend"') #[\S\s]匹配任意字符

item_type = re.findall(get_type,html)

#print(item_type)

str1 = '============================'+'第'+ str(i) +' 页'+'=============================='

print(str1)

i=i+1

else:

l=0

每个文章的URL得到了,终于要开始爬文章和图片

人类的本质是复读机————某至理名言

此部分框架用的是csdn上这位大哥的 https://blog.csdn.net/koanzhongxue/article/details/45709861 ,其次需要我们按照自己情况来修改的地方(不得不说虽然re正则表达式 是最基础最灵活的爬虫工具,但能够灵活运用还是需要火候,比如我在爬取的时候就因为没有匹配好前后值,导致总是爬到某一篇文章就断掉,因为那篇文章的结构和其他的不一样。相比下来beautiful soup是真的好用,上手快且不需要处理太多异常情况)

其中我们使用了Python的doc库函数,re正则化,urlib.request Python3访问url函数,image读取函数,beautifulsoup和time函数推迟调用线程的运行

# -*- coding: utf-8 -*-

"""

Spyder Editor

This is a temporary script file.

"""

import urllib.request

import re

import _locale

from bs4 import BeautifulSoup

import os

import time

import requests

from docx import Document

from docx.shared import Inches

from PIL import Image

_locale._getdefaultlocale = (lambda *args: ['zh_CN', 'utf8'])

inde=1

for line in open("D:\\Ayu\\files\\readWebsite0.txt","r",encoding='utf-8'):

link = urllib.request.urlopen(line)

html = link.read().decode('utf-8')

#print (html)

gettitle = re.compile(r'(.*?) ')

title = re.findall(gettitle,html)

print(title)

#获取文章标题

if os.path.exists(title[0]+'.doc'): #为了防止900多篇文章若出错 不要再从头开始了

print("该文章已存在")

continue

else:

getmaxlength = re.compile(r'(\d*)\s*下页')

#用正则获取最大页数信息

if getmaxlength.search(html):

maxlength = getmaxlength.search(html).group(1)

print(maxlength)

else:

maxlength=1

#*************************************************************************

#正则匹配 除所有的帖子内容

gettext = re.compile(r'\s*([\S\s]*?)\s*') #[\S\s]匹配任意字符

gettext1 = re.compile(r'(.*?)')

origin_soup=BeautifulSoup(html,'lxml')

content= origin_soup.find(class_="bbs-content clearfix")

getpagemsg = re.compile(r'\s*(.*?).*\s*时间:(.*?)')

getnextpagelink = re.compile(r'下页')

#遍历每一页,获取发帖作者,时间,内容,并打印

content_image= origin_soup.findAll('img',)

#print(content)

#print(content_image)

#指定文件路径

path = os.getcwd()

new_path = os.path.join(path, 'pictures')

if not os.path.isdir(new_path):

os.mkdir(new_path)

new_path += '/'

#print(new_path)

#下载图片

image_couter=1

#requests.DEFAULT_RETRIES = 5

for img in content_image:

#s = requests.session()

#s.keep_alive = False

img_url=img.get('original')

#print (img_url)

if img_url is not None:

try:

url_content = requests.get(img_url+line)# 可以

except:

print(u'HTTP请求失败!正在准备重发...')

time.sleep(2)

continue

url_content.encoding = url_content.apparent_encoding

img_name = '%s.jpg' % image_couter

with open(os.path.join(img_name), 'wb') as f:

f.write(url_content.content)

f.close()

#time.sleep(3)

image_couter += 1

print('下载图片完成')

############################写入#############################

#filepath ='D:\\Ayu\\files\\'+title[0]+'.doc' #utf-8编码,需要转为gbk .decode('utf-8').encode('gbk')

#

#filehandle = open(filepath,'a+')

##打印文章标题

#filehandle.write(title[0] +'\n')

doc = Document()

doc.add_paragraph(title[0] +'\n')

str1 = ""

for pageno in range(1,int(maxlength)+1):

i = 0

str1 = '============================'+'第 '+ str(pageno)+' 页'+'=============================='

print (str1)

doc.add_paragraph(str1 +'\n')

#获取每条发言的信息头,包含作者,时间

#print(type(html))

pagemsg = re.findall(getpagemsg,html)

if pageno is 1:

#获取第一个帖子正文

# html中的 ,在转换成文档后,变成\t\n\r

if content is None:

gettext1 = ''

else:

gettext1 = content.text.replace('\t','').replace('\r','').replace('\n','')

#因为第一条内容由text1获取,text获取剩下的,所以text用i-1索引

#获取帖子正文

text = re.findall(gettext,html)

for ones in pagemsg:

if pageno > 1:

if ones is pagemsg[0]:

continue #若不是第一页,跳过第一个日期

if 'host' in ones[0]:

str1= '楼主:'+ ones[1] +' 时间:' + ones[2]

doc.add_paragraph(str1 +'\n')

else:

str1= ones[0] + ones[1] + ' 时间:' + ones[2]

doc.add_paragraph(str1+'\n')

if pageno is 1: #第一页特殊处理

if i is 0:

str1= gettext1.split('\u3000\u3000')

img_index=1

for j in range(1,len(str1)):

#print(str1[j])

if len(str1[j]) == 0:

images = '%s.jpg'%img_index

#print('此处插入图片')

img_index = img_index+1

try:

doc.add_picture(images, width=Inches(4)) # 添加图, 设置宽度

os.remove(images)#删除保存在本地的图片

except Exception:

continue

else:

doc.add_paragraph(str1[j])

#print('此处插入段落')

else:

str1 = text[i-1].replace('

','\n').replace('title="点击图片查看幻灯模式"','\n')

str0 = str1.split()

#print(str0)

for m in range(0,len(str0)):

if str0[m].startswith('','\n').replace('title="点击图片查看幻灯模式"','\n')

str2 = str1.split()

#print(str2)

for k in range(0,len(str2)):

if str2[k].startswith('>pageno:'+str(pageno)+' line:'+str(i))

print ('>>'+text[i-1])

print (e)

i = i +1

if pageno < int(maxlength):

#获取帖子的下一页链接,读取页面内容

nextpagelink = 'http://bbs.tianya.cn'+getnextpagelink.search(html).group(1)

#print(type(html))

link = urllib.request.urlopen(nextpagelink)

html = link.read().decode('utf-8')

doc.save(title[0]+'.doc')

图片下载出了一些大问题

https://blog.csdn.net/weixin_40420401/article/details/82384049>python 3.x 爬虫基础—http headers详解(转)

https://blog.csdn.net/hu77700021/article/details/79837569 爬虫反爬-关于headers(UA、referer、cookies)的一些有趣反爬

https://blog.csdn.net/qq_33733970/article/details/77876761 爬虫之突破天涯防盗链

我要单独拿出来这段代码的想法就是,真的被这问题搞了好几天!若用src或者original直接得到的图片不是正在加载滚动,就是天涯社区的马赛克,URL显示的是403。直到看到上面最后一篇文章的解决方法。其实自己对前端的知识也不了解,参考网址仅记录在此日后学习查看。

#指定文件路径 下载图片

path = os.getcwd()

new_path = os.path.join(path, 'pictures')

if not os.path.isdir(new_path):

os.mkdir(new_path)

new_path += '/'

#print(new_path)

image_couter=1

for img in content_image:

img_url=img.get('original')

#print (img_url)

if img_url is not None:

url_content = requests.get(img_url+line)

url_content.encoding = url_content.apparent_encoding

img_name = '%s.jpg' % image_couter

with open(os.path.join(img_name), 'wb') as f:

f.write(url_content.content)

f.close()

#time.sleep(3)

image_couter += 1

print('下载图片完成')

调试阶段

利用单网页.py排查错误,意思就是每当大代码循环被终止的时候,把出错的网页URL放在下面代码的line中再运行并且运行成功后再去大代码跑循环。其实内容和主代码一致,只是这里line是固定的,主代码是循环读取的。

# -*- coding: utf-8 -*-

"""

Created on Thu Jun 6 16:52:50 2019

@author: sybil_yan

"""

import urllib.request

import re

import _locale

from bs4 import BeautifulSoup

import os

import time

import requests

from docx import Document

from docx.shared import Inches

from PIL import Image

_locale._getdefaultlocale = (lambda *args: ['zh_CN', 'utf8'])

line='http://bbs.tianya.cn/post-develop-102356-1.shtml'

link = urllib.request.urlopen(line)

html = link.read().decode('utf-8')

#print (html)

gettitle = re.compile(r'(.*?) ')

title = re.findall(gettitle,html)

#print(title)

#获取文章标题

getmaxlength = re.compile(r'(\d*)\s*下页')

#用正则获取最大页数信息

if getmaxlength.search(html):

maxlength = getmaxlength.search(html).group(1)

print(maxlength)

else:

maxlength=1

#*************************************************************************

#正则匹配 除所有的帖子内容

gettext = re.compile(r'\s*([\S\s]*?)\s*') #[\S\s]匹配任意字符

gettext1 = re.compile(r'(.*?)')

origin_soup=BeautifulSoup(html,'lxml')

content= origin_soup.find(class_="bbs-content clearfix")

content_image= origin_soup.findAll('img',)

#print(content)

#print(content_image)

#指定文件路径

path = os.getcwd()

new_path = os.path.join(path, 'pictures')

if not os.path.isdir(new_path):

os.mkdir(new_path)

new_path += '/'

#print(new_path)

#下载图片

image_couter=1

#requests.DEFAULT_RETRIES = 5

for img in content_image:

#s = requests.session()

#s.keep_alive = False

img_url=img.get('original')

print (img_url)

if img_url is not None:

try:

url_content = requests.get(img_url+line)# 可以

except:

print(u'HTTP请求失败!正在准备重发...')

time.sleep(2)

continue

url_content.encoding = url_content.apparent_encoding

img_name = '%s.jpg' % image_couter

with open(os.path.join(img_name), 'wb') as f:

f.write(url_content.content)

f.close()

#time.sleep(3)

image_couter += 1

print('下载图片完成')

#print(type(content))

#

#if content is None:

# gettext1 = ''

#else:

# gettext1 = content.text.replace('\t','').replace('\r','').replace('\n','')

#

# #print (content.text)

#

# gettext1 = gettext1.split('\u3000\u3000')

#print(len(gettext1[1]))

getpagemsg = re.compile(r'\s*(.*?).*\s*时间:(.*?)')

getnextpagelink = re.compile(r'下页')

#遍历每一页,获取发帖作者,时间,内容,并打印

############################写入#############################

#filepath ='D:\\Ayu\\files\\'+title[0]+'.doc' #utf-8编码,需要转为gbk .decode('utf-8').encode('gbk')

#

#filehandle = open(filepath,'a+')

##打印文章标题

#filehandle.write(title[0] +'\n')

doc = Document()

doc.add_paragraph(title[0] +'\n')

str1 = ""

for pageno in range(1,int(maxlength)+1):

i = 0

str1 = '============================'+'第 '+ str(pageno)+' 页'+'=============================='

print (str1)

doc.add_paragraph(str1 +'\n')

#获取每条发言的信息头,包含作者,时间

#print(type(html))

pagemsg = re.findall(getpagemsg,html)

if pageno is 1:

#获取第一个帖子正文

# html中的 ,在转换成文档后,变成\t\n\r

if content is None:

gettext1 = ''

else:

gettext1 = content.text.replace('\t','').replace('\r','').replace('\n','')

#因为第一条内容由text1获取,text获取剩下的,所以text用i-1索引

#获取帖子正文

text = re.findall(gettext,html)

for ones in pagemsg:

if pageno > 1:

if ones is pagemsg[0]:

continue #若不是第一页,跳过第一个日期

if 'host' in ones[0]:

str1= '楼主:'+ ones[1] +' 时间:' + ones[2]

doc.add_paragraph(str1 +'\n')

else:

str1= ones[0] + ones[1] + ' 时间:' + ones[2]

doc.add_paragraph(str1+'\n')

if pageno is 1: #第一页特殊处理

if i is 0:

str1= gettext1.split('\u3000\u3000')

img_index=1

for j in range(1,len(str1)):

#print(str1[j])

if len(str1[j]) == 0:

images = '%s.jpg'%img_index

print('此处插入图片')

img_index = img_index+1

try:

doc.add_picture(images, width=Inches(4)) # 添加图, 设置宽度

os.remove(images)#删除保存在本地的图片

except Exception:

continue

else:

doc.add_paragraph(str1[j])

print('此处插入段落')

else:

str1 = text[i-1].replace('

','\n').replace('title="点击图片查看幻灯模式"','\n')

str0 = str1.split()

print(str0)

for m in range(0,len(str0)):

if str0[m].startswith('','\n').replace('title="点击图片查看幻灯模式"','\n')

str2 = str1.split()

print(str2)

for k in range(0,len(str2)):

if str2[k].startswith('>pageno:'+str(pageno)+' line:'+str(i))

print ('>>'+text[i-1])

print (e)

i = i +1

if pageno < int(maxlength):

#获取帖子的下一页链接,读取页面内容

nextpagelink = 'http://bbs.tianya.cn'+getnextpagelink.search(html).group(1)

#print(type(html))

link = urllib.request.urlopen(nextpagelink)

html = link.read().decode('utf-8')

doc.save(title[0]+'.doc')

问题一:

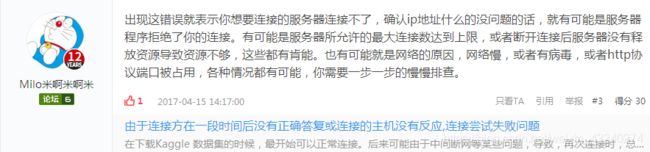

解决方法参考 https://blog.csdn.net/jingsiyu6588/article/details/88653946 。实际情况中隔了五分钟直接重运行就可以了,可能是天涯的网站监督不是那么严格。

问题二:

http://bbs.tianya.cn/post-develop-1385610-1.shtml (ConnectionError:HTTPConnectionPool(host=‘img7.laibafile.cn.youjz.org’, port=80):Max retries exceeded with url: /1.jpghttp://bbs.tianya.cn/post-develop-1385610-1.shtml%0A (Caused by NewConnectionError (’: Failed to establish a new

connection: [Errno 11004] getaddrinfo failed’))

第一次参考解决方法https://www.cnblogs.com/caicaihong/p/7495435.html 解决Max retries exceeded with url的问题,但我用了一次就不好用了(内心OS:难道真的要逼我学cookie和header么)。第二天我排查发现问题出在图片URL获取上,那么我参考了简书一位小哥的形式,当找不到地址时不断重播,发现是可行的OMG!代码如下:

try:

url_content = requests.get(img_url+line)

except:

print(u'HTTP请求失败!正在准备重发...')

time.sleep(2)

continue

未解决问题html记录:

-

http://bbs.tianya.cn/post-develop-2056252-1.shtml

http://bbs.tianya.cn/post-free-3229755-1.shtml

…

(ValueError : All strings must be XML compatible: Unicode or ASCII, no NULL bytes or control characters,未查到原因 空白页处置。如有网友知道问题出在哪希望告知在下,不胜感激)

-

http://bbs.tianya.cn/post-worldlook-595711-1.shtml (HTTPError: Bad Gateway HTTPError: Gateway Time-out)

后来发现所有含有worldlook和sock的地址都被封了无法访问(即网页进去都无法访问)。所以我们需要把所有不含此字节的挑出来:

# -*- coding: utf-8 -*-

"""

Created on Sat Jun 15 10:47:15 2019

@author: Administrator

"""

import _locale

from bs4 import BeautifulSoup

from docx import Document

from docx.shared import Inches

from PIL import Image

filepath0 ='errorWebsite.txt'

filehandle0 = open(filepath0,'w')

filepath1 ='readWebsite.txt'

filehandle1 = open(filepath1,'w')

for line in open("D:\\Ayu\\website0.txt","r",encoding='utf-8'):

if 'worldlook' in line or 'stocks' in line:

filehandle0.write(line)

else:

filehandle1.write(line)

撒花花 --<-<-<@