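Experiment 3: Getting Familiar with Common HBase Operations

Experiment environment:

(1) Operating system: Linux (Ubuntu 16.04 or Ubuntu 18.04 recommended).

(2) Hadoop version: 3.1.3.

(3) HBase version: 2.2.2.

(4) JDK version: 1.8.

(5) Java IDE: Eclipse.

Experiment content and results:

(1) Given the following relational database tables and data (see Tables 14-3 to 14-5), convert them into tables suitable for HBase storage and insert the data.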
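As a rough illustration of what this conversion involves, the sketch below flattens one relational row into HBase-style cells: the primary key becomes the row key, and every other column becomes a qualifier inside a column family. It is plain Java and does not touch HBase. The class and method names, the family name `info`, the columns `S_No` and `S_Age`, and the sample values are all assumptions made for illustration; the real columns are those of Tables 14-3 to 14-5.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class RelationalRowToCells {

    // Flattens one relational row into lines of the form
    // "rowKey | family:qualifier = value". The key column is used as the
    // row key; every other column becomes a qualifier under `family`.
    public static List<String> toCells(Map<String, String> row, String keyColumn, String family) {
        String rowKey = row.get(keyColumn);
        if (rowKey == null) {
            throw new IllegalArgumentException("missing key column: " + keyColumn);
        }
        List<String> cells = new ArrayList<>();
        for (Map.Entry<String, String> e : row.entrySet()) {
            if (e.getKey().equals(keyColumn)) {
                continue; // the key is stored as the row key, not as a cell
            }
            cells.add(rowKey + " | " + family + ":" + e.getKey() + " = " + e.getValue());
        }
        return cells;
    }

    public static void main(String[] args) {
        // Hypothetical row, not taken from the textbook tables.
        Map<String, String> student = new LinkedHashMap<>();
        student.put("S_No", "0001");
        student.put("S_Name", "Alice");
        student.put("S_Age", "20");
        for (String cell : toCells(student, "S_No", "info")) {
            System.out.println(cell);
        }
    }
}
```

Each printed line corresponds to one `put` in the HBase Shell: a table name, a row key, a `family:qualifier` column, and a value.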


(1) Student table

The HBase Shell command for creating the table is as follows:

Third row of data:

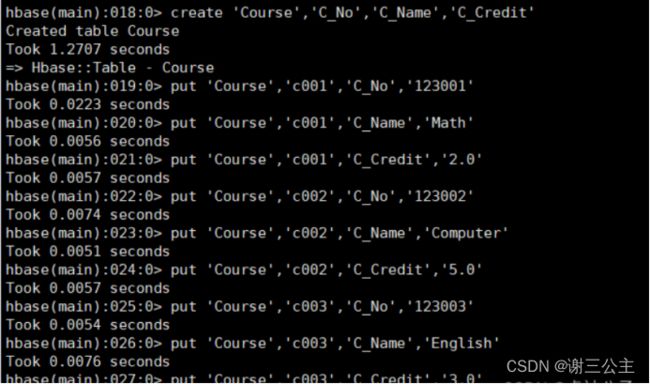

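(2) Course table

The HBase Shell command for creating the table is as follows:

(3) Course selection table

The HBase Shell command for creating the table is as follows:

(2) Implement the following functions programmatically.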

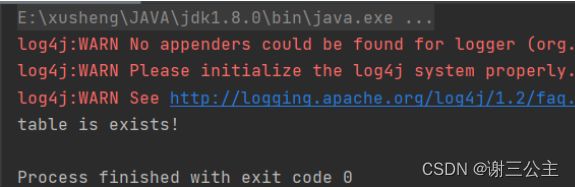

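①createTable(String tableName, String[] fields).

Creates a table. The parameter tableName is the name of the table, and the string array fields holds the field names of a record. If a table named tableName already exists in HBase, the existing table must be deleted first and the new table created afterwards.

Java code: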

package com.xusheng.HBase.shiyan31;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class CreateTable {

public static Configuration configuration;

public static Connection connection;

public static Admin admin;

public static void createTable(String tableName,String[] fields) throws IOException {

init();

TableName tablename = TableName.valueOf(tableName);

if(admin.tableExists(tablename)){

System.out.println("table already exists, deleting it first");

admin.disableTable(tablename);

admin.deleteTable(tablename); // delete the existing table

}

TableDescriptorBuilder tableDescriptor = TableDescriptorBuilder.newBuilder(tablename);

for(String str : fields){

// each field becomes one column family

tableDescriptor.setColumnFamily(ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes(str)).build());

}

// create the table once, after all column families have been added

admin.createTable(tableDescriptor.build());

close();

}

// open the connection

public static void init() {

configuration = HBaseConfiguration.create();

//configuration.set("hbase.rootdir", "hdfs://hadoop102:8020/HBase");

configuration.set("hbase.zookeeper.quorum","hadoop102,hadoop103,hadoop104");

try {

connection = ConnectionFactory.createConnection(configuration);

admin = connection.getAdmin();

} catch (IOException e) {

e.printStackTrace();

}

}

// close the connection

public static void close() {

try {

if (admin != null) {

admin.close();

}

if (null != connection) {

connection.close();

}

} catch (IOException e) {

e.printStackTrace();

}

}

public static void main(String[] args) {

String[] fields = {"Score"};

try {

createTable("person", fields);

} catch (IOException e) {

e.printStackTrace();

}

}

}

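②addRecord(String tableName, String row, String[] fields, String[] values).

Adds the data in values to the cells of table tableName identified by row row (represented by S_Name) and the string array fields. If an element of fields refers to a column qualifier under a column family, it is written as "columnFamily:column". For example, to add scores to the three columns Math, Computer, and English at once, fields is {"Score:Math", "Score:Computer", "Score:English"} and values holds the scores for these three courses.

Java code: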

package com.xusheng.HBase.shiyan31;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import java.io.IOException;

public class addRecord {

public static Configuration configuration;

public static Connection connection;

public static Admin admin;

public static void addRecord(String tableName, String row, String[] fields, String[] values) throws IOException {

init();

Table table = connection.getTable(TableName.valueOf(tableName));

for (int i = 0; i != fields.length; i++) {

Put put = new Put(row.getBytes());

String[] cols = fields[i].split(":");

put.addColumn(cols[0].getBytes(), cols[1].getBytes(), values[i].getBytes());

table.put(put);

}

table.close();

close();

}

public static void init() {

configuration = HBaseConfiguration.create();

//configuration.set("hbase.rootdir", "hdfs://hadoop102:8020/HBase");

configuration.set("hbase.zookeeper.quorum","hadoop102,hadoop103,hadoop104");

try {

connection = ConnectionFactory.createConnection(configuration);

admin = connection.getAdmin();

} catch (IOException e) {

e.printStackTrace();

}

}

public static void close() {

try {

if (admin != null) {

admin.close();

}

if (null != connection) {

connection.close();

}

} catch (IOException e) {

e.printStackTrace();

}

}

public static void main(String[] args) {

String[] fields = {"Score:Math", "Score:Computer Science", "Score:English"};

String[] values = {"99", "80", "100"};

try {

addRecord("tableName", "Score", fields, values);

} catch (IOException e) {

e.printStackTrace();

}

}

}
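The "columnFamily:column" convention used by fields can be isolated into a small helper. The listing above calls split(":") and reads cols[1] directly, which assumes every element contains a qualifier. The following is a minimal sketch in plain Java, independent of HBase, that also tolerates a bare family name and stray spaces. The class name ColumnSpec and the method parse are hypothetical, not part of the HBase API.

```java
import java.util.Arrays;

public class ColumnSpec {

    // Splits "family:qualifier" into {family, qualifier}.
    // A bare family name yields an empty qualifier.
    public static String[] parse(String field) {
        String trimmed = field.trim();
        int idx = trimmed.indexOf(':');
        if (idx < 0) {
            return new String[] {trimmed, ""};
        }
        String family = trimmed.substring(0, idx).trim();
        String qualifier = trimmed.substring(idx + 1).trim();
        return new String[] {family, qualifier};
    }

    public static void main(String[] args) {
        String[] fields = {"Score:Math", "Score: Computer", "Score"};
        for (String f : fields) {
            System.out.println(Arrays.toString(parse(f)));
        }
    }
}
```

The two returned strings could then be converted to bytes and handed to Put.addColumn in place of cols[0] and cols[1].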

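③scanColumn(String tableName, String column).

Browses the data of a given column in table tableName; if a row has no data for that column, null is returned. When the parameter column is a column family name with several column qualifiers under it, the data of the column for every qualifier must be listed; when column is a specific column name (for example "Score:Math"), only the data of that column needs to be listed.

Java code: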

package com.xusheng.HBase.shiyan31;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.Cell;

import org.apache.hadoop.hbase.CellUtil;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class scanColumn {

public static Configuration configuration;

public static Connection connection;

public static Admin admin;

public static void scanColumn(String tableName, String column) throws IOException {

init();

Table table = connection.getTable(TableName.valueOf(tableName));

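Scan scan = new Scan();

// "family:qualifier" selects a single column; a bare name selects the whole column family

String[] cols = column.split(":");

if (cols.length > 1) {

scan.addColumn(Bytes.toBytes(cols[0]), Bytes.toBytes(cols[1]));

} else {

scan.addFamily(Bytes.toBytes(cols[0]));

}

ResultScanner scanner = table.getScanner(scan);

for (Result result = scanner.next(); result != null; result = scanner.next()) {

showCell(result);

}

scanner.close();

table.close();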

close();

}

public static void showCell(Result result) {

Cell[] cells = result.rawCells();

for (Cell cell : cells) {

System.out.println("RowName:" + new String(CellUtil.cloneRow(cell)) + " ");

System.out.println("Timestamp:" + cell.getTimestamp() + " ");

System.out.println("column Family:" + new String(CellUtil.cloneFamily(cell)) + " ");

System.out.println("column Name:" + new String(CellUtil.cloneQualifier(cell)) + " ");

System.out.println("value:" + new String(CellUtil.cloneValue(cell)) + " ");

}

}

public static void init() {

configuration = HBaseConfiguration.create();

//configuration.set("hbase.rootdir", "hdfs://hadoop102:8020/HBase");

configuration.set("hbase.zookeeper.quorum","hadoop102,hadoop103,hadoop104");

try {

connection = ConnectionFactory.createConnection(configuration);

admin = connection.getAdmin();

} catch (IOException e) {

e.printStackTrace();

}

}

// close the connection

public static void close() {

try {

if (admin != null) {

admin.close();

}

if (null != connection) {

connection.close();

}

} catch (IOException e) {

e.printStackTrace();

}

}

public static void main(String[] args) {

try {

scanColumn("tableName", "Score");

} catch (IOException e) {

e.printStackTrace();

}

}

}
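The two cases in the scanColumn requirement (a whole column family versus one specific column, with null for a missing cell) can be checked without a cluster. The sketch below models a table as nested sorted maps in plain Java; it illustrates the required output only and is not how HBase stores or scans data. The class name ScanColumnSketch and the sample rows are assumptions. Note that a real Scan simply omits rows that have no matching cell, so printing null for them is something the client code has to add.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ScanColumnSketch {

    // table: rowKey -> ("family:qualifier" -> value), sorted by key.
    public static List<String> scanColumn(Map<String, Map<String, String>> table, String column) {
        List<String> out = new ArrayList<>();
        boolean specificColumn = column.contains(":");
        for (Map.Entry<String, Map<String, String>> row : table.entrySet()) {
            if (specificColumn) {
                // One line per row; a missing cell prints as null.
                out.add(row.getKey() + " " + column + "=" + row.getValue().get(column));
            } else {
                // Column family: list every qualifier stored under it.
                boolean found = false;
                for (Map.Entry<String, String> cell : row.getValue().entrySet()) {
                    if (cell.getKey().startsWith(column + ":")) {
                        out.add(row.getKey() + " " + cell.getKey() + "=" + cell.getValue());
                        found = true;
                    }
                }
                if (!found) {
                    out.add(row.getKey() + " " + column + "=null");
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical sample rows.
        Map<String, Map<String, String>> table = new TreeMap<>();
        Map<String, String> alice = new TreeMap<>();
        alice.put("Score:Math", "90");
        alice.put("Score:English", "80");
        table.put("Alice", alice);
        Map<String, String> bob = new TreeMap<>();
        bob.put("Score:English", "70");
        table.put("Bob", bob);

        scanColumn(table, "Score").forEach(System.out::println);
        scanColumn(table, "Score:Math").forEach(System.out::println);
    }
}
```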

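④modifyData(String tableName, String row, String column).

Modifies the data in the cell of table tableName specified by row row (which can be the student name S_Name) and column column. The implementation below takes the new value as a fourth parameter, val.

Java code: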

package com.xusheng.HBase.shiyan31;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.Cell;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import java.io.IOException;

public class modifyData {

public static long ts;

public static Configuration configuration;

public static Connection connection;

public static Admin admin;

public static void modifyData(String tableName, String row, String column, String val) throws IOException {

// column must be given as "family:qualifier", for example "Score:Math"

String[] cols = column.split(":");

if (cols.length != 2) {

throw new IllegalArgumentException("column must be family:qualifier, got: " + column);

}

init();

Table table = connection.getTable(TableName.valueOf(tableName));

// writing to an existing cell stores a new version, which later reads return

Put put = new Put(row.getBytes());

put.addColumn(cols[0].getBytes(), cols[1].getBytes(), val.getBytes());

table.put(put);

table.close();

close();

}

public static void init() {

configuration = HBaseConfiguration.create();

//configuration.set("hbase.rootdir", "hdfs://hadoop102:8020/HBase");

configuration.set("hbase.zookeeper.quorum","hadoop102,hadoop103,hadoop104");

try {

connection = ConnectionFactory.createConnection(configuration);

admin = connection.getAdmin();

} catch (IOException e) {

e.printStackTrace();

}

}

public static void close() {

try {

if (admin != null) {

admin.close();

}

if (null != connection) {

connection.close();

}

} catch (IOException e) {

e.printStackTrace();

}

}

public static void main(String[] args) {

try {

// row key "Score" matches the row written by the addRecord example

modifyData("tableName", "Score", "Score:Math", "100");

} catch (IOException e) {

e.printStackTrace();

}

}

}
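Modifying a cell in HBase is itself a write: a Put with a newer timestamp becomes the value that reads return, while a Put at an existing timestamp replaces that version. The sketch below models a single cell as a timestamp-to-value map in plain Java to show both cases. It is a simplification, not HBase's implementation: it keeps every version, whereas a real column family retains only as many versions as it is configured to keep. The class name VersionedCellSketch and the sample values are assumptions.

```java
import java.util.TreeMap;

public class VersionedCellSketch {

    // timestamp -> value; the entry with the highest timestamp is the current value.
    private final TreeMap<Long, String> versions = new TreeMap<>();

    // Stores a value at the given timestamp, replacing any value already stored there.
    public void put(long timestamp, String value) {
        versions.put(timestamp, value);
    }

    // Returns the value with the highest timestamp, or null if the cell is empty.
    public String latest() {
        return versions.isEmpty() ? null : versions.lastEntry().getValue();
    }

    public int versionCount() {
        return versions.size();
    }

    public static void main(String[] args) {
        VersionedCellSketch math = new VersionedCellSketch();
        math.put(1L, "99");   // initial write
        math.put(2L, "100");  // modification with a newer timestamp
        System.out.println(math.latest() + " (" + math.versionCount() + " versions)");
        math.put(2L, "95");   // write at an existing timestamp replaces that version
        System.out.println(math.latest() + " (" + math.versionCount() + " versions)");
    }
}
```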

⑤deleteRow(String tableName, String row).

Deletes the record of the row specified by row from table tableName.

Java code:

package com.xusheng.HBase.shiyan31;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.Cell;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class deleteRow {

public static long ts;

public static Configuration configuration;

public static Connection connection;

public static Admin admin;

public static void deleteRow(String tableName, String row) throws IOException {

init();

Table table = connection.getTable(TableName.valueOf(tableName));

Delete delete=new Delete(row.getBytes());

table.delete(delete);

table.close();

close();

}

public static void init() {

configuration = HBaseConfiguration.create();

//configuration.set("hbase.rootdir", "hdfs://hadoop102:8020/HBase");

configuration.set("hbase.zookeeper.quorum","hadoop102,hadoop103,hadoop104");

try {

connection = ConnectionFactory.createConnection(configuration);

admin = connection.getAdmin();

} catch (IOException e) {

e.printStackTrace();

}

}

public static void close() {

try {

if (admin != null) {

admin.close();

}

if (null != connection) {

connection.close();

}

} catch (IOException e) {

e.printStackTrace();

}

}

public static void main(String[] args) {

try {

deleteRow("tableName", "Score");

} catch (IOException e) {

e.printStackTrace();

}

}

}