Doris Issues

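1. Error when starting BE

Raise the system limit on open file handles. Do not remove the leading * on each line; it applies the limit to all users.

sudo vim /etc/security/limits.conf

* soft nofile 65536
* hard nofile 65536
* soft nproc 131072
* hard nproc 131072

The new limits only take effect after a restart!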

2. 多列分区

创建表:

CREATE TABLE IF NOT EXISTS test_db.example_range_tbl2

(

`date` DATE NOT NULL COMMENT "数据灌入日期时间",

`id` int COMMENT "id"

)

ENGINE=OLAP

AGGREGATE KEY(`date`,`id`)

PARTITION BY RANGE(`date`, `id`)

(

PARTITION `p201701_1000` VALUES LESS THAN ("2017-02-01", "1000"),

PARTITION `p201702_2000` VALUES LESS THAN ("2017-03-01", "2000"),

PARTITION `p201703_all` VALUES LESS THAN ("2017-04-01")

)

DISTRIBUTED BY HASH(`id`) BUCKETS 16

PROPERTIES

(

"replication_num" = "1"

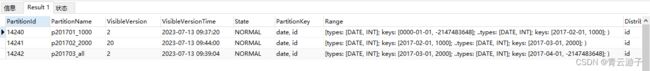

);查看分区:

show partitions from test_db.example_range_tbl2;插入数据:

insert into test_db.example_range_tbl2 values ('2017-02-01', 2001);Doris 支持指定多列作为分区列

- Range partitioning

  PARTITION BY RANGE(`date`, `id`)
  (
      PARTITION `p201701_1000` VALUES LESS THAN ("2017-02-01", "1000"),
      PARTITION `p201702_2000` VALUES LESS THAN ("2017-03-01", "2000"),
      PARTITION `p201703_all` VALUES LESS THAN ("2017-04-01")
  )

  This specifies `date` (DATE) and `id` (INT) as the partition columns. The example above produces the following partitions:

  p201701_1000: [(MIN_VALUE, MIN_VALUE), ("2017-02-01", "1000"))
  p201702_2000: [("2017-02-01", "1000"), ("2017-03-01", "2000"))
  p201703_all:  [("2017-03-01", "2000"), ("2017-04-01", MIN_VALUE))

  Note that the last partition only specifies a value for the `date` column, so the `id` bound is filled with `MIN_VALUE` by default. When a row is inserted, its partition column values are compared in order: the first column decides, and only when it sits exactly on a boundary does the second column decide. For example:

  Data             --> Partition
  2017-01-01, 200  --> p201701_1000
  2017-01-01, 2000 --> p201701_1000
  2017-02-01, 100  --> p201701_1000
  2017-02-01, 2000 --> p201702_2000
  2017-02-15, 5000 --> p201702_2000
  2017-03-01, 2000 --> p201703_all
  2017-03-10, 1    --> p201703_all
  2017-04-01, 1000 --> cannot be imported
  2017-05-01, 1000 --> cannot be imported

- List partitioning

  PARTITION BY LIST(`id`, `city`)
  (
      PARTITION `p1_city` VALUES IN (("1", "Beijing"), ("1", "Shanghai")),
      PARTITION `p2_city` VALUES IN (("2", "Beijing"), ("2", "Shanghai")),
      PARTITION `p3_city` VALUES IN (("3", "Beijing"), ("3", "Shanghai"))
  )

  This specifies `id` (INT) and `city` (VARCHAR) as the partition columns. The resulting partitions are:

  p1_city: [("1", "Beijing"), ("1", "Shanghai")]
  p2_city: [("2", "Beijing"), ("2", "Shanghai")]
  p3_city: [("3", "Beijing"), ("3", "Shanghai")]

  When a row is inserted, its partition column values are compared in order to find the matching partition. For example:

  Data        ---> Partition
  1, Beijing  ---> p1_city
  1, Shanghai ---> p1_city
  2, Shanghai ---> p2_city
  3, Beijing  ---> p3_city
  1, Tianjin  ---> cannot be imported
  4, Beijing  ---> cannot be imported

Doris official documentation:

https://doris.apache.org/docs/dev/data-table/data-partition
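The routing rules in this section (tuples compared column by column for range partitions, exact tuple match for list partitions) can be sketched in plain Java. This is only an illustration of the comparison logic, not how Doris implements it. The class `PartitionRoutingSketch`, the methods `routeRange` and `routeList`, and the use of `LocalDate.MIN` and `Integer.MIN_VALUE` as stand-ins for `MIN_VALUE` are all assumptions made for this sketch.

```java
import java.time.LocalDate;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class PartitionRoutingSketch {

    /** A (date, id) partition key. Tuples compare column by column, left to right. */
    static final class Key implements Comparable<Key> {
        final LocalDate date;
        final int id;

        Key(LocalDate date, int id) {
            this.date = date;
            this.id = id;
        }

        @Override
        public int compareTo(Key other) {
            int byDate = date.compareTo(other.date);
            // The second column only decides when the first column ties.
            return byDate != 0 ? byDate : Integer.compare(id, other.id);
        }
    }

    /** A range partition covering the half-open interval [lower, upper). */
    static final class RangePartition {
        final String name;
        final Key lower;
        final Key upper;

        RangePartition(String name, Key lower, Key upper) {
            this.name = name;
            this.lower = lower;
            this.upper = upper;
        }

        boolean contains(Key key) {
            return key.compareTo(lower) >= 0 && key.compareTo(upper) < 0;
        }
    }

    // Stand-ins for MIN_VALUE (assumptions of this sketch, not Doris internals).
    static final LocalDate MIN_DATE = LocalDate.MIN;
    static final int MIN_ID = Integer.MIN_VALUE;

    static Key key(String date, int id) {
        return new Key(LocalDate.parse(date), id);
    }

    // The three range partitions from the example table.
    static final List<RangePartition> RANGE_PARTITIONS = Arrays.asList(
            new RangePartition("p201701_1000", new Key(MIN_DATE, MIN_ID), key("2017-02-01", 1000)),
            new RangePartition("p201702_2000", key("2017-02-01", 1000), key("2017-03-01", 2000)),
            new RangePartition("p201703_all", key("2017-03-01", 2000), key("2017-04-01", MIN_ID)));

    // The list partitions from the example, keyed by the exact (id, city) tuple.
    static final Map<String, String> LIST_PARTITIONS = new HashMap<>();
    static {
        for (int id = 1; id <= 3; id++) {
            for (String city : Arrays.asList("Beijing", "Shanghai")) {
                LIST_PARTITIONS.put(id + "|" + city, "p" + id + "_city");
            }
        }
    }

    /** Returns the matching range partition, or null if the row cannot be imported. */
    static String routeRange(String date, int id) {
        Key k = key(date, id);
        for (RangePartition p : RANGE_PARTITIONS) {
            if (p.contains(k)) {
                return p.name;
            }
        }
        return null;
    }

    /** Returns the matching list partition, or null if the row cannot be imported. */
    static String routeList(int id, String city) {
        return LIST_PARTITIONS.get(id + "|" + city);
    }

    public static void main(String[] args) {
        System.out.println(routeRange("2017-02-01", 100));  // first column on the boundary, id decides
        System.out.println(routeRange("2017-02-01", 2001)); // the row inserted earlier in this section
        System.out.println(routeRange("2017-04-01", 1000)); // beyond the last upper bound
        System.out.println(routeList(1, "Tianjin"));        // tuple not listed in any partition
    }
}
```

Under these assumptions, the row inserted earlier, ('2017-02-01', 2001), lands in p201702_2000: its date ties with the upper bound of p201701_1000, so the `id` column decides, and 2001 is not less than 1000.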

3. Flink cannot write data to Doris

Solution: enable checkpointing.

package com.atguigu.gmall.realtime.app.dws;

import com.atguigu.gmall.realtime.app.func.KeywordUDTF;
import com.atguigu.gmall.realtime.utils.KafkaUtil;
import org.apache.flink.api.common.restartstrategy.RestartStrategies;
import org.apache.flink.api.common.time.Time;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.Table;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

/**
 * Created by 黄凯 on 2023/7/13 11:00
 *
 * @author 黄凯
 * Always believe that good things will happen.
 *
 * Traffic domain: aggregated search keyword statistics.
 * Processes that must be running:
 * zk, kafka, flume, DwdTrafficBaseLogSplit, DwsTrafficSourceKeywordPageViewWindow
 */

public class DwsTrafficSourceKeywordPageViewWindow {

    public static void main(String[] args) {
        // TODO 1. Set up the basic environment
        // 1.1 Create the stream execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        // 1.2 Set the parallelism
        env.setParallelism(4);
        // 1.3 Create the table environment
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);
        // 1.4 Register the custom function with the table environment
        tableEnv.createTemporarySystemFunction("ik_analyze", KeywordUDTF.class);

        // TODO 2. Checkpoint settings. Enabling checkpointing is the fix for this issue.
        env.enableCheckpointing(5000L);
        env.setRestartStrategy(RestartStrategies.failureRateRestart(3, Time.days(30), Time.seconds(3)));

        // TODO 3. Read the page log into a dynamic table; declare the watermark strategy and the event-time field
        String topic = "dwd_traffic_page_log";
        String groupId = "dws_traffic_keyword_group";
        /*
         * Sample record:
         * >>>>:4> {"common":{"ar":"28","uid":"1728","os":"Android 13.0","ch":"xiaomi","is_new":"0","md":"realme Neo2","mid":"mid_162",
         * "vc":"v2.1.134","ba":"realme","sid":"ce0398d2-26cd-47aa-a761-92710d959deb"},
         * "page":{"page_id":"payment","item":"476920","during_time":10429,"item_type":"order_id","last_page_id":"trade"},"ts":1654647013000}
         */
        // The map columns need explicit key and value types, otherwise the DDL does not parse.
        tableEnv.executeSql("create table page_log(\n" +
                "    common map<string, string>,\n" +
                "    page map<string, string>,\n" +
                "    ts bigint,\n" +
                "    row_time as TO_TIMESTAMP(FROM_UNIXTIME(ts/1000)),\n" +
                "    WATERMARK FOR row_time AS row_time\n" +
                ") " + KafkaUtil.getKafkaDDL(topic, groupId));
        // tableEnv.executeSql("select * from page_log").print();

        // TODO 4. Filter out search events
        Table searchTable = tableEnv.sqlQuery("select\n" +
                "    page['item'] fullword,\n" +
                "    row_time\n" +
                " from page_log\n" +
                "where page['last_page_id'] = 'search'\n" +
                "and page['item_type'] = 'keyword'\n" +
                "and page['item'] is not null");
        tableEnv.createTemporaryView("search_table", searchTable);
        // tableEnv.executeSql("select * from search_table").print();

        // TODO 5. Split the search text into keywords with the custom function and join them back to the source rows
        Table splitTable = tableEnv.sqlQuery("select keyword,\n" +
                "    row_time\n" +
                "from search_table,\n" +
                "    LATERAL TABLE(ik_analyze(fullword)) t(keyword)");
        tableEnv.createTemporaryView("split_table", splitTable);
        // tableEnv.executeSql("select * from split_table").print();

        // TODO 6. Group, window, and aggregate
        /*
         * Sample output:
         * +----+---------------------+---------------------+---------+----------+---------------+
         * | op | stt                 | edt                 | keyword | cur_date | keyword_count |
         * +----+---------------------+---------------------+---------+----------+---------------+
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | 前端    | 20220608 |             2 |
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | python  | 20220608 |             1 |
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | 大      | 20220608 |             6 |
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | java    | 20220608 |             1 |
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | 数据    | 20220608 |             6 |
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | hadoop  | 20220608 |             4 |
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | 多线程  | 20220608 |             2 |
         * | +I | 2022-06-08 11:51:40 | 2022-06-08 11:51:50 | 数据库  | 20220608 |             1 |
         */
        Table result = tableEnv.sqlQuery("select\n" +
                "    DATE_FORMAT(TUMBLE_START(row_time, INTERVAL '10' SECOND),'yyyy-MM-dd HH:mm:ss') stt,\n" +
                "    DATE_FORMAT(TUMBLE_END(row_time, INTERVAL '10' SECOND),'yyyy-MM-dd HH:mm:ss') edt,\n" +
                "    keyword,\n" +
                "    date_format(TUMBLE_START(row_time, INTERVAL '10' SECOND), 'yyyyMMdd') cur_date,\n" +
                "    count(*) keyword_count\n" +
                " from split_table\n" +
                "group by TUMBLE(row_time,INTERVAL '10' SECOND ),keyword");
        tableEnv.createTemporaryView("res_table", result);
        // tableEnv.executeSql("select * from res_table").print();

        // TODO 7. Write the aggregated result to Doris
        tableEnv.executeSql("CREATE table doris_t( " +
                " stt string, " +
                " edt string, " +
                " keyword string, " +
                " cur_date string, " +
                " keyword_count bigint " +
                ")WITH (" +
                " 'connector' = 'doris', " +
                " 'fenodes' = 'hadoop102:7030', " +
                " 'table.identifier' = 'gmall.dws_traffic_source_keyword_page_view_window', " +
                " 'username' = 'root', " +
                " 'password' = 'aaaaaa', " +
                " 'sink.properties.format' = 'json', " +
                " 'sink.properties.read_json_by_line' = 'true', " +
                " 'sink.buffer-count' = '4', " +
                " 'sink.buffer-size' = '4086'," +
                " 'sink.enable-2pc' = 'false' " + // two-phase commit can be turned off during testing for convenience
                ") ");
        tableEnv.executeSql("insert into doris_t select * from res_table");
    }
}

Official documentation notes

Result